The convergence of artificial intelligence and three-dimensional spatial audio processing is changing how we perceive and interact with digital audio environments. Combining AI-driven algorithms with spatial audio techniques creates new opportunities for virtual reality, gaming, live entertainment, and professional audio production, where sound becomes a fully three-dimensional, dynamically responsive medium that adapts to user behavior and environmental conditions.

The integration of machine learning algorithms with spatial audio processing represents more than just technical advancement; it embodies a fundamental shift toward creating audio environments that understand context, anticipate user needs, and deliver personalized auditory experiences that were previously impossible to achieve through traditional audio processing methods.

The Foundation of AI-Powered Spatial Audio

The development of AI-enhanced spatial audio systems builds upon decades of research in psychoacoustics, digital signal processing, and machine learning algorithms to create sophisticated audio environments that can accurately simulate three-dimensional sound fields with remarkable precision and adaptability. Traditional spatial audio techniques relied on static algorithms and predetermined parameters to create the illusion of three-dimensional sound placement, but AI-powered systems introduce dynamic adaptation capabilities that continuously optimize audio processing based on real-time analysis of listener behavior, environmental acoustics, and content characteristics.

Modern AI spatial audio systems employ complex neural networks trained on vast datasets of acoustic measurements, head-related transfer functions, and perceptual audio data to understand how sound propagates through three-dimensional spaces and how human auditory perception interprets spatial audio cues. These machine learning models can predict optimal audio processing parameters for any given scenario, automatically adjusting reverb characteristics, distance attenuation, directional filtering, and binaural processing to create convincing three-dimensional audio experiences that adapt seamlessly to changing conditions and user preferences.
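Of the parameters listed above, distance attenuation is the simplest to show concretely. The following is a minimal sketch of the classic inverse-distance law, which a learned system would presumably refine rather than replace. The function names and the 1 m reference distance are assumptions made for illustration and are not taken from any particular engine.

```python
import math

def distance_gain(distance_m, ref_distance_m=1.0):
    """Linear gain under the inverse-distance law.

    Distances closer than the reference are clamped so the gain
    never exceeds 1.0 (an assumption of this sketch).
    """
    d = max(distance_m, ref_distance_m)
    return ref_distance_m / d

def gain_to_db(gain):
    """Convert a linear amplitude gain to decibels."""
    return 20.0 * math.log10(gain)

if __name__ == "__main__":
    # Print the attenuation at a few source distances.
    for d in (1.0, 2.0, 4.0, 8.0):
        print(f"{d:4.1f} m -> {gain_to_db(distance_gain(d)):6.2f} dB")
```

Under this law each doubling of distance lowers the level by about 6 dB, which is the free-field behavior that a fixed, non-adaptive renderer would apply.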

The sophistication of these AI systems extends beyond simple parameter optimization to intelligent content analysis: machine learning algorithms can analyze audio in real time to identify speech, music, environmental sounds, and other elements, then apply appropriate spatial processing to each component to maximize the realism of the three-dimensional presentation.

Revolutionary Applications in Virtual Reality

Virtual reality environments have emerged as the most compelling showcase for AI-powered spatial audio technology, where the combination of visual immersion and intelligent three-dimensional sound processing creates unprecedented levels of presence and engagement for users across entertainment, education, training, and therapeutic applications. AI-driven spatial audio systems in VR environments can track user head movements, analyze visual content, and predict user attention patterns to dynamically optimize audio processing for maximum immersion and comfort.

The integration of AI into VR audio processing enables features such as intelligent occlusion modeling, where the system automatically calculates how virtual objects should affect sound transmission, dynamic room acoustic simulation that adapts to virtual environment changes, and predictive audio rendering that pre-processes spatial audio based on anticipated user movements and interactions.
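To make the occlusion idea concrete, here is a purely geometric baseline, not an AI model: it tests whether the straight path from source to listener passes through a spherical obstacle and, if so, returns a reduced gain and a lower low-pass cutoff. The sphere representation, the function names, and the numeric values (0.4 gain, 1200 Hz cutoff) are hypothetical placeholders chosen for illustration.

```python
def segment_hits_sphere(p0, p1, center, radius):
    """Return True if the straight path p0 -> p1 passes through the sphere."""
    d = [b - a for a, b in zip(p0, p1)]          # segment direction
    f = [c - a for a, c in zip(p0, center)]      # p0 -> sphere center
    seg_len_sq = sum(x * x for x in d)
    if seg_len_sq == 0.0:
        t = 0.0
    else:
        # Parameter of the point on the segment closest to the center.
        t = sum(x * y for x, y in zip(d, f)) / seg_len_sq
        t = max(0.0, min(1.0, t))
    closest = [a + t * x for a, x in zip(p0, d)]
    dist_sq = sum((c - q) ** 2 for c, q in zip(center, closest))
    return dist_sq <= radius * radius

def occlusion_params(source, listener, occluders,
                     occluded_gain=0.4, occluded_cutoff_hz=1200.0):
    """Return (gain, low-pass cutoff in Hz) for one source.

    occluders is a list of (center, radius) spheres. The occluded
    values are illustrative defaults, not measured data.
    """
    for center, radius in occluders:
        if segment_hits_sphere(source, listener, center, radius):
            return occluded_gain, occluded_cutoff_hz
    return 1.0, 20000.0

if __name__ == "__main__":
    wall = ((5.0, 0.0, 0.0), 1.0)
    print(occlusion_params((0.0, 0.0, 0.0), (10.0, 0.0, 0.0), [wall]))
```

A learned occlusion model would likely replace the fixed gain and cutoff with values predicted from geometry and material data, while keeping a visibility test of this kind as an input.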

Advanced AI algorithms can analyze the geometric properties of virtual environments, material characteristics of surfaces, and atmospheric conditions to generate realistic acoustic models that respond appropriately to user actions and environmental changes. This level of sophistication allows virtual reality experiences to achieve audio realism that closely matches real-world acoustic behavior, significantly enhancing the sense of presence and immersion that users experience during VR sessions.

Transforming Gaming Audio Experiences

The gaming industry has rapidly embraced AI-powered spatial audio as a way to deepen immersion, where intelligent three-dimensional sound processing can give players a tactical edge, strengthen narrative immersion, and make multiplayer sessions more engaging. Modern AI gaming audio systems can analyze gameplay situations, player behavior patterns, and game state information to dynamically optimize spatial audio presentation for both gameplay clarity and emotional impact.

Intelligent spatial audio systems in gaming environments can automatically prioritize important audio cues based on gameplay context, ensuring that critical sounds such as approaching enemies, environmental hazards, or important dialogue remain clearly audible while maintaining realistic three-dimensional positioning. Machine learning algorithms can learn from player behavior to understand which audio elements are most important in different gaming scenarios, automatically adjusting spatial processing parameters to enhance the detectability and localization accuracy of these crucial audio cues.
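The prioritization described above can be sketched with fixed priority scores standing in for what a learned model would predict. In this simplified version, the highest-priority cues keep full gain and everything else is ducked. The cue names, the `priority` field, and the default parameter values are all assumptions made for illustration.

```python
def mix_gains(cues, max_full_voices=2, duck_gain=0.3):
    """Map each cue name to a gain based on its priority rank.

    cues: list of dicts with "name" and "priority" keys.
    The top max_full_voices cues keep gain 1.0; the rest are ducked.
    """
    ranked = sorted(cues, key=lambda c: c["priority"], reverse=True)
    full = {c["name"] for c in ranked[:max_full_voices]}
    return {c["name"]: (1.0 if c["name"] in full else duck_gain) for c in cues}

if __name__ == "__main__":
    cues = [
        {"name": "footsteps_enemy", "priority": 0.9},
        {"name": "dialogue", "priority": 0.8},
        {"name": "ambient_wind", "priority": 0.2},
        {"name": "music", "priority": 0.4},
    ]
    for name, gain in mix_gains(cues).items():
        print(f"{name:16s} gain={gain}")
```

Only the gain changes here; each cue's spatial position is left untouched, which matches the goal of keeping critical sounds audible without breaking three-dimensional positioning.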

The adaptive nature of AI-powered gaming audio extends to dynamic soundtrack management, where intelligent systems can seamlessly blend musical elements with environmental audio and sound effects, adjusting spatial positioning, reverb characteristics, and frequency response to maintain optimal balance between atmospheric immersion and gameplay functionality. These systems can also implement intelligent audio scaling that automatically adjusts the complexity and processing intensity of spatial audio effects based on system performance requirements, ensuring consistent audio quality across different hardware configurations.

Advancing Live Entertainment and Broadcasting

Live entertainment venues and broadcasting applications have discovered remarkable opportunities in AI-enhanced spatial audio technology for creating immersive audience experiences that extend far beyond traditional stereo or surround sound presentations. Intelligent spatial audio systems can analyze live performance content, venue acoustics, and audience distribution to optimize three-dimensional audio presentation for maximum impact and engagement across diverse listening environments and delivery platforms.

AI-powered spatial audio processing in live entertainment can automatically adapt to different venue characteristics, crowd noise levels, and performance dynamics to maintain optimal audio clarity and spatial positioning throughout events. Machine learning algorithms can predict optimal microphone placement, analyze performer movement patterns, and adjust spatial processing parameters in real time to ensure that three-dimensional audio effects remain convincing and effective regardless of changing performance conditions.

Broadcasting applications benefit from AI spatial audio through intelligent format conversion systems that can automatically adapt three-dimensional audio content for different delivery platforms and playback systems, ensuring that spatial audio effects translate effectively whether the content is being consumed through high-end headphones, soundbars, or mobile device speakers. These adaptive systems can analyze the characteristics of target playback systems and automatically optimize spatial processing parameters to maximize the effectiveness of three-dimensional audio presentation within the constraints of each delivery platform.
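As one small example of adapting positioned content to a constrained playback system, a source can be folded down to plain two-channel stereo with constant-power panning. This is a conventional fallback, not an AI technique. The linear azimuth-to-pan mapping and the function names are assumptions of this sketch, and sources behind the listener simply clamp to the nearest side.

```python
import math

def azimuth_to_pan(azimuth_deg):
    """Map frontal azimuth (-90 = left, +90 = right) to a pan value in [-1, 1]."""
    return max(-1.0, min(1.0, azimuth_deg / 90.0))

def constant_power_pan(pan):
    """Return (left_gain, right_gain) for pan in [-1, 1].

    Uses the sine/cosine pan law, so left^2 + right^2 stays equal to 1
    and perceived loudness remains roughly constant across positions.
    """
    pan = max(-1.0, min(1.0, pan))
    angle = (pan + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)

if __name__ == "__main__":
    for az in (-90, -45, 0, 45, 90):
        left, right = constant_power_pan(azimuth_to_pan(az))
        print(f"azimuth {az:4d} deg -> L={left:.3f} R={right:.3f}")
```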

Professional Audio Production Revolution

Professional audio production workflows have been fundamentally transformed by the introduction of AI-powered spatial audio tools that automate complex processing tasks, enhance creative possibilities, and streamline the production of immersive audio content for various media formats. AI-driven spatial audio production systems can analyze multitrack recordings, identify optimal spatial positioning for individual elements, and automatically generate convincing three-dimensional audio mixes that would previously require extensive manual adjustment and expertise.

Machine learning algorithms trained on expert mixing techniques can suggest optimal spatial placement for instruments, vocals, and effects based on musical genre, arrangement complexity, and intended listening environment, significantly reducing the time and expertise required to create professional-quality spatial audio productions.

Intelligent audio production systems can also provide automated quality assurance by analyzing spatial audio mixes for common issues such as phase problems, localization conflicts, and frequency masking, suggesting corrections that maintain the artistic intent while ensuring technical compatibility across different playback systems. These AI-powered tools enable smaller production teams to create sophisticated spatial audio content that previously required specialized expertise and extensive manual processing.
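One of the checks mentioned above, detecting phase problems, has a simple non-AI baseline: the normalized correlation between the left and right channels. Values near +1 indicate in-phase content, while values near -1 suggest the channels will cancel when summed to mono. The function name and the synthetic test signal are assumptions made for this sketch.

```python
import math

def phase_correlation(left, right):
    """Normalized inter-channel correlation in the range [-1, 1].

    Returns 0.0 if either channel is silent.
    """
    num = sum(l * r for l, r in zip(left, right))
    den = math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))
    return num / den if den > 0.0 else 0.0

if __name__ == "__main__":
    # 10 ms of a 440 Hz sine at 48 kHz as a stand-in for real audio.
    tone = [math.sin(2.0 * math.pi * 440.0 * i / 48000.0) for i in range(480)]
    inverted = [-s for s in tone]
    print("in phase:     ", round(phase_correlation(tone, tone), 3))
    print("polarity flip:", round(phase_correlation(tone, inverted), 3))
```

An automated quality check could flag any passage where this value stays strongly negative, leaving the decision about whether to correct it to the mixing engineer.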

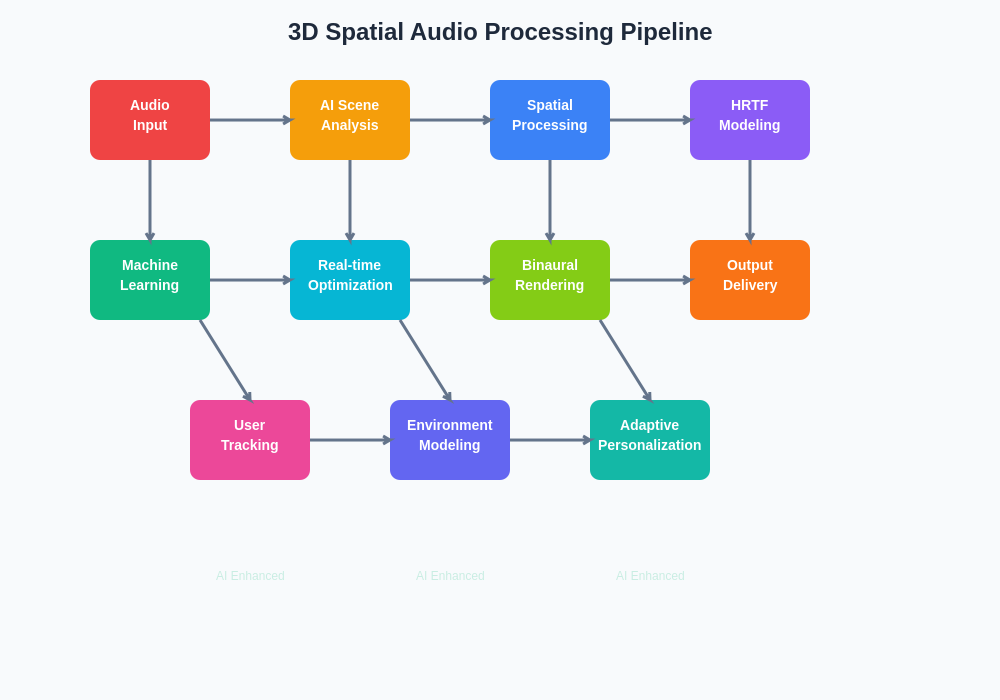

The modern AI-enhanced spatial audio processing pipeline represents a sophisticated integration of multiple machine learning systems working in concert to analyze, process, and optimize three-dimensional audio content. This comprehensive approach ensures that every aspect of spatial audio processing benefits from intelligent optimization and adaptive enhancement.

Machine Learning Models for Audio Spatialization

The development of effective machine learning models for audio spatialization requires sophisticated understanding of both acoustic principles and perceptual psychology, combining extensive datasets of measured acoustic responses with advanced neural network architectures capable of modeling the complex relationships between audio signals and three-dimensional spatial perception. Contemporary AI spatial audio systems employ multiple specialized neural networks, each optimized for specific aspects of spatial audio processing such as head-related transfer function modeling, room impulse response prediction, and perceptual optimization.

Convolutional neural networks have proven particularly effective for analyzing the spectral characteristics of spatial audio signals, while recurrent neural networks excel at modeling the temporal dynamics of three-dimensional audio perception and adaptation. Transformer architectures are increasingly being employed for their ability to model long-range dependencies in spatial audio processing, enabling AI systems to maintain consistent spatial audio characteristics across extended listening sessions and complex audio content.

The training of these machine learning models requires carefully curated datasets that include measurements from diverse populations, various acoustic environments, and different types of audio content to ensure that AI spatial audio systems can provide effective processing across the full range of real-world applications and user preferences. Advanced training techniques such as adversarial learning and reinforcement learning are being employed to create more robust and adaptable spatial audio processing systems that can handle novel scenarios and edge cases effectively.

Real-Time Processing and Computational Optimization

The implementation of AI-powered spatial audio processing in real-time applications presents significant computational challenges that require innovative approaches to algorithm optimization, hardware acceleration, and intelligent resource management. Modern spatial audio AI systems must balance processing quality with computational efficiency to deliver convincing three-dimensional audio experiences within the strict latency requirements of interactive applications such as gaming, virtual reality, and live performance.
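The latency constraint can be made concrete with simple arithmetic: an audio callback must finish before the next buffer is due, and the buffer period is the frame count divided by the sample rate. The sketch below computes that period and checks whether a given model inference time fits inside it. The 0.7 safety factor and the function names are assumptions chosen for illustration.

```python
def buffer_period_ms(frames, sample_rate_hz):
    """Time covered by one audio buffer, in milliseconds."""
    return 1000.0 * frames / sample_rate_hz

def fits_realtime(inference_ms, frames, sample_rate_hz, safety=0.7):
    """True if inference fits within a safety fraction of the buffer period."""
    return inference_ms <= safety * buffer_period_ms(frames, sample_rate_hz)

if __name__ == "__main__":
    for frames in (128, 256, 512):
        period = buffer_period_ms(frames, 48000)
        ok = fits_realtime(3.0, frames, 48000)
        print(f"{frames} frames @ 48 kHz: {period:.2f} ms, 3 ms model fits: {ok}")
```

For example, a 256-frame buffer at 48 kHz covers about 5.33 ms, so a model that needs 3 ms per buffer fits under the assumed safety margin, while the same model would not fit a 128-frame buffer.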

Advanced optimization techniques such as neural network quantization, pruning, and knowledge distillation are being employed to reduce the computational requirements of AI spatial audio processing while maintaining perceptual quality. GPU acceleration and specialized audio processing hardware enable parallel processing of multiple spatial audio channels and complex convolution operations required for realistic three-dimensional audio rendering.
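To illustrate the quantization technique named above, here is a minimal sketch of symmetric per-tensor int8 quantization applied to a plain list of weights. Real frameworks add calibration, per-channel scales, and quantized kernels; this shows only the core idea of mapping floats to 8-bit integers with a single scale factor. The function names are assumptions of this sketch.

```python
def quantize_int8(weights):
    """Quantize floats to integers in [-127, 127] with one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0.0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from quantized integers."""
    return [v * scale for v in q]

if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0, 0.8]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print("quantized:", q)
    print("worst-case error:", worst)
```

The rounding error per weight is bounded by half the scale, which is why quantization tends to preserve quality when the weight distribution has no extreme outliers.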

Intelligent resource management systems can dynamically adjust the complexity and quality of AI spatial audio processing based on available computational resources, system load, and application requirements, ensuring that spatial audio effects remain active and effective even under challenging performance conditions. These adaptive systems can seamlessly transition between different quality levels and processing algorithms to maintain optimal balance between audio quality and system performance.
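A simple form of this resource management is to pick the most expensive processing tier that still fits the available time budget. The sketch below does exactly that. The tier names, their per-buffer costs, and the headroom factor are hypothetical values; a production system would measure costs at run time and would probably add hysteresis to avoid audible switching.

```python
# (tier name, estimated cost in ms per buffer); illustrative numbers only.
TIERS = [("high", 4.0), ("medium", 2.0), ("low", 0.8)]

def pick_tier(budget_ms, headroom=0.8, tiers=TIERS):
    """Return the first (most expensive) tier whose cost fits the budget.

    headroom reserves part of the budget for the rest of the audio
    callback. Falls back to the cheapest tier if nothing fits.
    """
    for name, cost_ms in tiers:
        if cost_ms <= budget_ms * headroom:
            return name
    return tiers[-1][0]

if __name__ == "__main__":
    for budget in (5.33, 3.0, 0.5):
        print(f"budget {budget:.2f} ms -> {pick_tier(budget)}")
```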

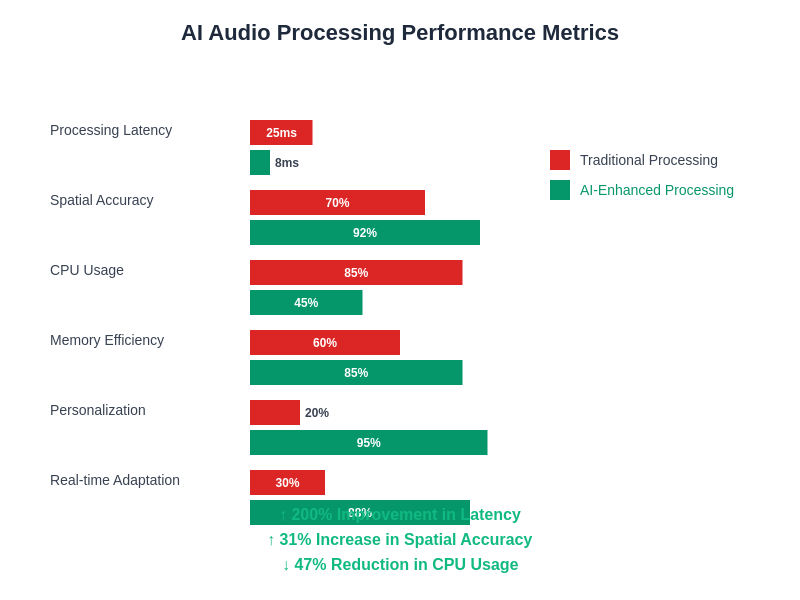

The performance characteristics of AI-enhanced spatial audio systems demonstrate significant improvements across multiple dimensions compared to traditional processing approaches. These metrics highlight the tangible benefits that machine learning brings to spatial audio processing efficiency and quality.

Personalization and Adaptive Audio Systems

One of the most promising aspects of AI-powered spatial audio technology lies in its ability to provide personalized audio experiences that adapt to individual user characteristics, preferences, and listening environments. Machine learning algorithms can analyze user behavior patterns, physiological responses, and stated preferences to customize spatial audio processing parameters for optimal perceptual effectiveness and user satisfaction.

Adaptive personalization systems can learn from user interactions, head movement patterns, and feedback to continuously refine spatial audio processing for each individual user, creating increasingly effective and comfortable three-dimensional audio experiences over time. These systems can account for individual differences in hearing sensitivity, head and ear anatomy, and perceptual preferences that significantly affect the effectiveness of spatial audio processing.
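Head size is one anatomical difference that is easy to quantify. Under the classical Woodworth spherical-head approximation, the interaural time difference (ITD) for a distant source at azimuth θ is (a / c)(θ + sin θ), where a is the head radius and c is the speed of sound. The sketch below evaluates that formula; the default radius of 0.0875 m and the function name are assumptions, and the model ignores ear shape and frequency dependence.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate value in air at room temperature

def woodworth_itd(azimuth_deg, head_radius_m=0.0875):
    """Interaural time difference in seconds for a frontal azimuth.

    Valid for azimuths between -90 and +90 degrees; positive values
    mean the sound reaches the right ear first.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be within [-90, 90] degrees")
    theta = math.radians(azimuth_deg)
    return (head_radius_m / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))

if __name__ == "__main__":
    for radius in (0.080, 0.0875, 0.095):
        itd_us = woodworth_itd(90.0, radius) * 1e6
        print(f"head radius {radius * 100:.2f} cm -> ITD at 90 deg: {itd_us:.0f} us")
```

Even this coarse model shows why a single generic profile cannot suit everyone: a larger head radius yields a proportionally larger ITD, so a system tuned for an average head will misplace lateral sources for listeners far from that average.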

Advanced personalization systems can also adapt to different listening contexts and environments, automatically adjusting spatial audio processing based on factors such as ambient noise levels, acoustic characteristics of the listening space, and the specific playback system being used. This contextual adaptation ensures that personalized spatial audio experiences remain effective across diverse real-world listening scenarios.

Integration with Emerging Audio Technologies

The future of AI-powered spatial audio processing lies in its integration with emerging technologies such as augmented reality, brain-computer interfaces, and advanced haptic feedback systems that can create multi-sensory immersive experiences extending far beyond traditional audio presentation. These integrated systems promise to revolutionize how humans interact with digital audio content and virtual environments.

Augmented reality applications benefit from AI spatial audio through intelligent blending of virtual and real-world audio sources, where machine learning algorithms can analyze environmental acoustics and seamlessly integrate virtual audio elements that appear to originate from specific locations in the real world. Brain-computer interface integration could enable direct neural feedback for optimizing spatial audio processing based on measured brain responses to different spatial audio presentations.

Haptic feedback integration with AI spatial audio systems can create cross-modal experiences where three-dimensional audio positioning is reinforced through tactile sensations, providing additional perceptual cues that enhance spatial audio effectiveness and immersion. These multi-modal approaches represent the next frontier in immersive audio technology.

Technical Challenges and Future Developments

The continued advancement of AI-powered spatial audio processing faces several significant technical challenges that require ongoing research and development efforts. Computational complexity remains a primary concern, as the most effective spatial audio processing algorithms require substantial processing power that may not be available in all target applications and devices.

Standardization and compatibility across different platforms and devices present additional challenges, as the effectiveness of spatial audio processing can vary significantly depending on playback hardware characteristics and implementation details. The development of universal standards and adaptive processing algorithms that can optimize performance across diverse hardware configurations remains an active area of research and development.

The integration of AI spatial audio processing with existing audio production workflows and content distribution systems requires careful consideration of backward compatibility, quality preservation, and format standardization to ensure widespread adoption and effective implementation across the audio industry.

The technological roadmap for AI-enhanced spatial audio processing reveals exciting developments across multiple domains, from real-time personalization to multi-sensory integration. These emerging capabilities promise to transform how we experience and interact with three-dimensional audio content.

Industry Impact and Market Evolution

The widespread adoption of AI-powered spatial audio technology is driving significant changes across multiple industries, from entertainment and gaming to education, healthcare, and professional audio production. Market demand for immersive audio experiences continues to grow as consumers become more aware of the benefits and possibilities offered by three-dimensional audio processing.

The democratization of spatial audio production through AI-powered tools is enabling smaller content creators and production teams to create sophisticated immersive audio experiences that were previously accessible only to well-funded studios with specialized expertise. This democratization is driving innovation and creativity across the audio industry while expanding the market for spatial audio content and applications.

Investment in AI spatial audio research and development continues to increase as major technology companies recognize the strategic importance of immersive audio experiences for future computing platforms and entertainment systems. This investment is accelerating the pace of innovation and enabling more rapid deployment of advanced spatial audio technologies across consumer and professional applications.

The future of AI-powered spatial audio processing promises continued innovation and expansion into new application domains, with the potential to fundamentally transform how humans experience and interact with digital audio content across all aspects of modern life. As these technologies mature and become more accessible, we can expect to see increasingly sophisticated and effective three-dimensional audio experiences that blur the boundaries between virtual and real-world acoustic environments.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of AI technologies and their applications in spatial audio processing. Readers should conduct their own research and consider their specific requirements when implementing AI-powered spatial audio systems. The effectiveness of spatial audio processing may vary depending on individual hearing characteristics, hardware configurations, and specific application requirements.