The rapid proliferation of artificial intelligence systems across critical infrastructure, financial services, healthcare, and autonomous systems has created unprecedented security challenges that demand immediate attention from security professionals and AI developers alike. Adversarial attacks represent one of the most sophisticated and potentially devastating threats to machine learning systems. They exploit fundamental vulnerabilities in how AI models process and interpret data in two main ways: manipulating inputs at inference time to cause misclassification, or poisoning training data to corrupt what a model learns.

Understanding and defending against these attacks requires a comprehensive approach that combines deep knowledge of machine learning architectures with advanced cybersecurity principles and defensive strategies. The stakes are particularly high given that compromised AI systems can affect everything from medical diagnoses and financial transactions to autonomous vehicle navigation and national security applications.

Understanding the Fundamentals of Adversarial Attacks

Adversarial attacks exploit the mathematical foundations of machine learning models by introducing carefully crafted perturbations to input data. These perturbations are often imperceptible to humans but cause AI systems to make incorrect predictions or classifications. The attacks leverage the high dimensionality of machine learning input spaces. A tiny change to each of thousands of input features can add up to a large change in the model’s output, which pushes the input across a decision boundary learned during training.
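
A worked example makes the high-dimensional argument concrete. It uses a linear score $w^\top x$, the simplest case in which the effect appears. The dimension and weight magnitudes below are illustrative assumptions, not measurements from a real model.

```latex
% Perturbation bounded in the L-infinity norm, aligned with the weights
\eta = \epsilon \,\mathrm{sign}(w), \qquad \|\eta\|_\infty = \epsilon
% Change in the linear score caused by the perturbation
w^\top (x + \eta) - w^\top x = \epsilon \sum_{i=1}^{n} |w_i| = \epsilon \|w\|_1
% Illustrative numbers: n = 1000 features, mean |w_i| = 0.1, epsilon = 0.01
\epsilon \|w\|_1 = 0.01 \times (1000 \times 0.1) = 1.0
```

Each feature moves by at most 0.01, yet the score shifts by 1.0. The shift grows linearly with the number of features $n$.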

The sophistication of modern adversarial attacks extends far beyond simple data manipulation. They incorporate techniques such as gradient-based optimization and evolutionary algorithms, and they exploit transferability: adversarial examples crafted against one model often also fool other models trained for the same task. Transferability makes adversarial attacks particularly dangerous in real-world scenarios where attackers lack direct access to a target model. An attacker can craft attacks against a substitute model with a similar architecture or training data and then use them against the target.
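
The transfer setting can be sketched with two logistic-regression models, one standing in for the attacker's substitute and one for the target. This is a minimal illustration under stated assumptions. Both models, their weights, and the helper names (`fgsm_linear`, `predict`) are hypothetical, and transfer between real deep networks is far less reliable than in this toy case.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def predict(w, b, x):
    """Class 1 if the linear score w.x + b is positive, else class 0."""
    return int(sum(wi * xi for wi, xi in zip(w, x)) + b > 0)


def fgsm_linear(w, b, x, y, eps):
    """One gradient-sign step against a logistic-regression model, using the
    closed-form input gradient of binary cross-entropy: (p - y) * w."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


# Hypothetical models: the attacker can differentiate the substitute,
# but only observes the target's predictions.
substitute_w, substitute_b = [1.0, 0.8, 1.2], 0.0
target_w, target_b = [0.9, 1.1, 1.0], -0.1

x, y = [0.3, 0.2, 0.25], 1
x_adv = fgsm_linear(substitute_w, substitute_b, x, y, eps=0.4)  # crafted on the substitute only
print(predict(target_w, target_b, x), predict(target_w, target_b, x_adv))
```

The two hypothetical models agree on the sign of every weight, which is why the perturbation transfers here. With dissimilar models it may not.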

Formally, crafting an adversarial example is an optimization problem. The attacker looks for a perturbation that maximizes model error under two constraints: the perturbation stays small, typically bounded in an Lp norm, and the modified input stays valid for its application domain (for example, pixel values within [0, 1]). White-box attacks solve this problem using the target model’s gradients. Black-box attacks approximate a solution through queries to the model or through substitute models trained on similar data.
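
The two standard ways of writing this optimization problem are sketched below. Here $f_\theta$ is the model, $\mathcal{L}$ its training loss, $x$ the clean input with label $y$, and $\delta$ the perturbation; this notation is chosen for the sketch. The last line follows the penalty formulation used by the Carlini-Wagner attack, with $Z$ the logits, $t$ the target class, $c$ a weighting constant, and $\kappa$ a confidence margin.

```latex
% (1) Norm-bounded attack (FGSM, PGD): maximize the loss within a budget epsilon
\max_{\delta} \; \mathcal{L}\big(f_\theta(x + \delta), y\big)
\quad \text{s.t.} \quad \|\delta\|_p \le \epsilon, \;\; x + \delta \in [0, 1]^n

% (2) Minimal-perturbation attack: the smallest change that alters the output
\min_{\delta} \; \|\delta\|_p
\quad \text{s.t.} \quad \arg\max_i f_\theta(x + \delta)_i \ne y, \;\; x + \delta \in [0, 1]^n

% Carlini-Wagner relaxation of (2) for a target class t, with constant c > 0
\min_{\delta} \; \|\delta\|_2^2 + c \cdot \max\Big(\max_{i \ne t} Z(x + \delta)_i - Z(x + \delta)_t, \; -\kappa\Big)
```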

Classification and Taxonomy of Attack Vectors

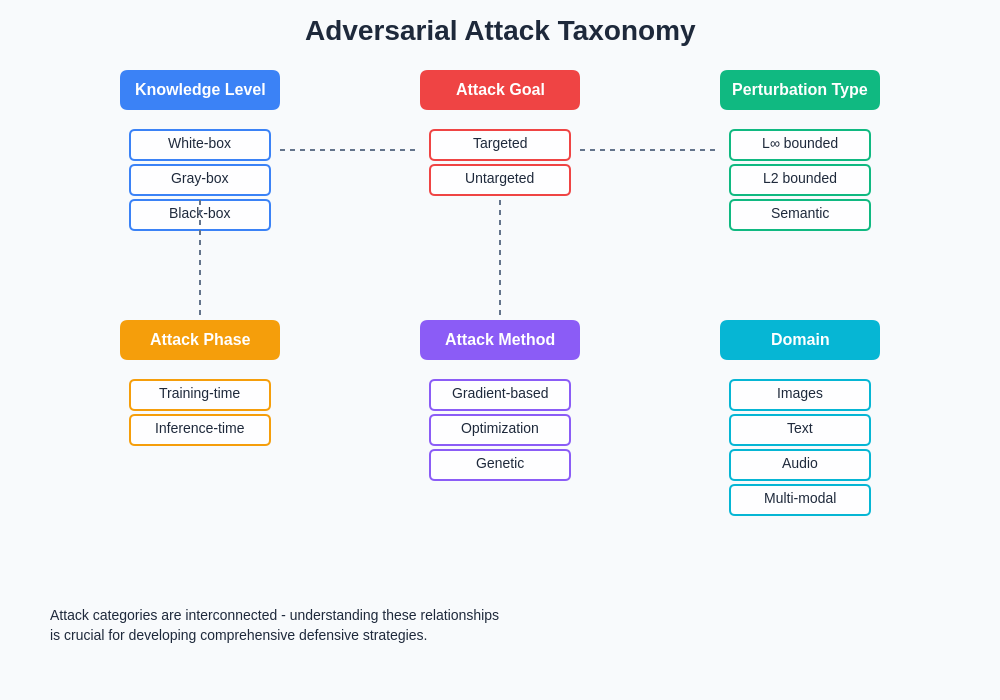

Adversarial attacks can be systematically categorized across multiple dimensions that help security professionals understand their potential impact and develop appropriate countermeasures. White-box attacks assume full knowledge of the target model, including its architecture and parameters (and sometimes its training data). This lets attackers compute exact gradients and craft highly effective perturbations using techniques such as the Fast Gradient Sign Method, Projected Gradient Descent, and the Carlini-Wagner attack.

Black-box attacks operate under more realistic constraints. Attackers have limited or no knowledge of the target model’s internals and rely instead on one of three approaches: query-based optimization, transferring adversarial examples crafted against substitute models, or estimating gradients from the model’s outputs. Query-based attacks typically need many more model evaluations, and therefore more time and compute, than white-box attacks. They nonetheless reflect the most common real-world scenario, in which adversaries can reach a model only through its outputs.
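
A query-only attack can be sketched as a greedy coordinate search in the spirit of SimBA (Simple Black-box Attack). The sketch assumes the attacker sees only the probability the model assigns to the true class. The stand-in target model, its weights, and the function names are hypothetical.

```python
import math
import random


def make_blackbox(w, b):
    """Hypothetical deployed model: the attacker can only call query(x), which
    returns the probability assigned to the true class (class 1 here)."""
    def query(x):
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        return 1.0 / (1.0 + math.exp(-z))
    return query


def simba_style_attack(query, x, eps, max_queries=500, seed=0):
    """Visit coordinates in random order, try +eps and then -eps, and keep a
    step only if the true-class probability drops. Each coordinate moves at
    most once, so ||x_adv - x||_inf <= eps."""
    rng = random.Random(seed)
    x_adv = list(x)
    best = query(x_adv)
    queries = 1
    order = list(range(len(x)))
    rng.shuffle(order)
    for i in order:
        for step in (eps, -eps):
            if queries >= max_queries:
                return x_adv, best, queries
            candidate = list(x_adv)
            candidate[i] += step
            p = query(candidate)
            queries += 1
            if p < best:            # keep the step only if it helps the attacker
                x_adv, best = candidate, p
                break
    return x_adv, best, queries


query = make_blackbox([0.5, -0.3, 0.8, 0.1], 0.2)
x = [0.6, 0.4, 0.5, 0.2]
x_adv, p_adv, used = simba_style_attack(query, x, eps=0.3)
print(query(x), p_adv, used)
```

The search never sees weights or gradients, only `query` outputs. The query budget is therefore the attacker's main cost.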

Targeted attacks aim to cause misclassification toward specific predetermined classes, while untargeted attacks simply seek to cause any incorrect classification regardless of the specific output. Targeted attacks are generally more challenging to execute but can be devastating in scenarios where attackers want to manipulate AI systems to produce specific outcomes, such as causing facial recognition systems to misidentify individuals or autonomous vehicles to misclassify traffic signs.
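
In the one-step gradient-sign form of the Fast Gradient Sign Method, the two differ only in the direction of the step. An untargeted attack climbs the loss of the true label $y$, while a targeted attack descends the loss of the attacker's chosen label $t$. Iterative attacks repeat the same step with a projection after each one.

```latex
x'_{\text{untargeted}} = x + \epsilon \,\mathrm{sign}\big(\nabla_x \mathcal{L}(f_\theta(x), y)\big)
x'_{\text{targeted}}   = x - \epsilon \,\mathrm{sign}\big(\nabla_x \mathcal{L}(f_\theta(x), t)\big)
```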

The temporal dimension of attacks distinguishes between evasion attacks that occur during inference time and poisoning attacks that compromise the training process itself. Data poisoning represents a particularly insidious threat vector where attackers inject malicious samples into training datasets, causing models to learn backdoors or develop systematic biases that can be exploited during deployment.
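
A backdoor-style poisoning step can be sketched in a few lines, which is useful for red-team tests of a data pipeline. The function name, the trigger (fixed values at fixed feature positions), and the poisoning rate are illustrative assumptions. Real triggers are usually small image patches or token patterns.

```python
import random


def poison_with_trigger(dataset, target_label, rate, trigger_idx=(0, 1),
                        trigger_value=1.0, seed=0):
    """Backdoor-style poisoning sketch for red-team tests.

    dataset: list of (features, label) pairs, features being lists of floats.
    A fraction `rate` of samples gets a fixed trigger (trigger_value at the
    positions in trigger_idx) and is relabelled as target_label. Returns the
    poisoned copy and the indices that were modified."""
    rng = random.Random(seed)
    n_poison = int(round(rate * len(dataset)))
    victims = set(rng.sample(range(len(dataset)), n_poison))
    poisoned = []
    for i, (x, y) in enumerate(dataset):
        if i in victims:
            x = list(x)  # copy so the clean dataset is left untouched
            for j in trigger_idx:
                x[j] = trigger_value
            y = target_label
        poisoned.append((x, y))
    return poisoned, victims


clean = [([0.0, 0.0, 0.5, 0.5], i % 2) for i in range(20)]
poisoned, victims = poison_with_trigger(clean, target_label=7, rate=0.1)
print(sorted(victims))
```

A model trained on `poisoned` may learn to predict label 7 whenever the trigger is present while behaving normally otherwise. This is why accuracy on clean held-out data alone does not reveal a backdoor.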

Technical Deep Dive into Attack Methodologies

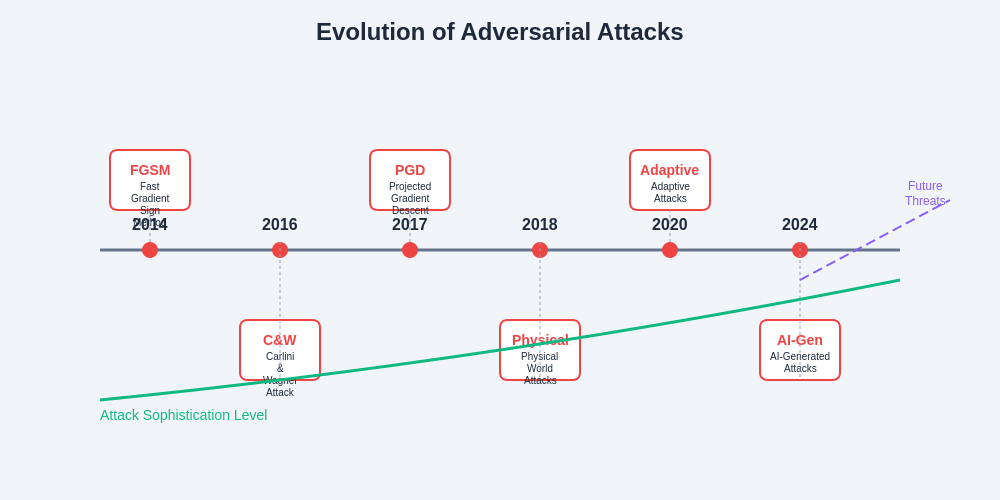

Many gradient-based attacks build on the observation, popularized alongside the Fast Gradient Sign Method (FGSM), that deep neural networks behave in a largely linear way in high-dimensional input spaces. FGSM is one of the most fundamental attack techniques. It computes the gradient of the loss function with respect to the input, takes its sign, scales it by a small epsilon value that controls perturbation magnitude, and adds the result to the original input.
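
For a logistic-regression model with binary cross-entropy loss, the input gradient has a closed form, $\nabla_x \mathcal{L} = (\sigma(w^\top x + b) - y)\,w$. This means the Fast Gradient Sign Method (FGSM) can be written without an autodiff library. This is a minimal sketch under that assumption, and the weights are hypothetical. For deep models the gradient would come from a framework's automatic differentiation instead.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps, lo=0.0, hi=1.0):
    """x_adv = clip(x + eps * sign(grad_x L), lo, hi) for logistic regression,
    where grad_x L = (sigmoid(w.x + b) - y) * w."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    grad = [(p - y) * wi for wi in w]
    return [min(hi, max(lo, xi + eps * sign(gi))) for xi, gi in zip(x, grad)]


w, b = [2.0, -1.0, 1.5], -1.0   # hypothetical trained weights
x, y = [0.6, 0.3, 0.5], 1       # clean input, scored as class 1
x_adv = fgsm(w, b, x, y, eps=0.2)

clean_score = sum(wi * xi for wi, xi in zip(w, x)) + b
adv_score = sum(wi * xi for wi, xi in zip(w, x_adv)) + b
print(round(clean_score, 2), round(adv_score, 2))
```

In this example the score drops by exactly $\epsilon \|w\|_1 = 0.2 \times 4.5 = 0.9$, from 0.65 to -0.25, which flips the prediction.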

More sophisticated iterative methods such as Projected Gradient Descent (PGD) extend this approach by taking several smaller gradient-sign steps. After each step, PGD projects the result back onto the allowed perturbation set: the epsilon-ball around the original input, intersected with the valid input range. Under the same perturbation budget, PGD typically achieves higher attack success rates than the single-step Fast Gradient Sign Method. The Carlini-Wagner attack instead treats adversarial example generation as an optimization problem. It minimizes the size of the perturbation plus a weighted penalty term that reaches its minimum once the input is classified as the attacker intends.
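
The PGD loop can be sketched on the same kind of logistic-regression model. Each iteration takes a step of size $\alpha$ in the gradient-sign direction, then projects back onto the $\epsilon$-ball around the clean input and onto the valid range $[0, 1]$. This is a simplified sketch with hypothetical weights and step sizes, and it omits the random start that PGD implementations commonly use. On a linear model PGD ends at the same point as a single gradient-sign step because the gradient sign never changes. Its advantage shows up on non-linear networks.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def pgd_linf(w, b, x, y, eps, alpha, steps, lo=0.0, hi=1.0):
    """L-infinity PGD against logistic regression (no random start)."""
    x_adv = list(x)
    for _ in range(steps):
        p = sigmoid(sum(wi * xi for wi, xi in zip(w, x_adv)) + b)
        grad = [(p - y) * wi for wi in w]
        stepped = [xa + alpha * sign(g) for xa, g in zip(x_adv, grad)]
        # Projection: clip into [x - eps, x + eps], then into [lo, hi].
        x_adv = [min(hi, max(lo, min(x0 + eps, max(x0 - eps, s))))
                 for x0, s in zip(x, stepped)]
    return x_adv


w, b = [2.0, -1.0, 1.5], -1.0   # hypothetical trained weights
x, y = [0.6, 0.3, 0.5], 1
x_adv = pgd_linf(w, b, x, y, eps=0.2, alpha=0.05, steps=10)
print(x_adv)
```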

Advanced attack methodologies incorporate techniques from other domains such as evolutionary computation, reinforcement learning, and generative modeling to create more robust and transferable adversarial examples. Genetic algorithm-based approaches evolve populations of adversarial candidates over multiple generations, while generative adversarial networks can be repurposed to learn the distribution of effective adversarial perturbations for specific target models or datasets.
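
A genetic-algorithm attack needs only the model's output scores. The sketch below evolves perturbations inside an $L_\infty$ ball using elitist selection, uniform crossover, and Gaussian mutation. The target function, population size, and mutation scale are hypothetical choices, and published genetic-algorithm attacks differ in their operators and fitness functions.

```python
import math
import random


def true_class_prob(x):
    """Hypothetical black-box target: returns only P(class 1 | x)."""
    w, b = [1.5, -2.0, 1.0, 0.5], 0.2
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def genetic_attack(x, eps, pop_size=20, generations=30, mutation=0.3, seed=0):
    """Evolve perturbations with ||delta||_inf <= eps that lower P(true class)."""
    rng = random.Random(seed)
    n = len(x)

    def clip(delta):
        return [max(-eps, min(eps, d)) for d in delta]

    def fitness(delta):
        # Higher fitness means a lower probability for the true class.
        return -true_class_prob([xi + di for xi, di in zip(x, delta)])

    # Seed with the zero perturbation so the result is never worse than x itself.
    population = [[0.0] * n] + [
        [rng.uniform(-eps, eps) for _ in range(n)] for _ in range(pop_size - 1)
    ]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        elite = population[: max(2, pop_size // 4)]   # selection with elitism
        children = []
        while len(elite) + len(children) < pop_size:
            mom, dad = rng.sample(elite, 2)
            child = [m if rng.random() < 0.5 else d for m, d in zip(mom, dad)]  # crossover
            child = [c + rng.gauss(0.0, mutation * eps) for c in child]         # mutation
            children.append(clip(child))
        population = elite + children
    best = max(population, key=fitness)
    return [xi + di for xi, di in zip(x, best)]


x = [0.4, 0.3, 0.5, 0.6]
x_adv = genetic_attack(x, eps=0.1)
print(true_class_prob(x), true_class_prob(x_adv))
```

Keeping the elite unchanged between generations means the best candidate can only improve. Including the zero perturbation guarantees the result is never worse than the clean input.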

The complexity of modern attack vectors requires systematic understanding of their relationships, effectiveness patterns, and computational requirements to develop comprehensive defensive strategies that can address multiple threat scenarios simultaneously.

Real-World Impact and Case Studies

The practical implications of adversarial attacks extend beyond academic research environments, with demonstrations in physical-world settings across multiple application domains. In autonomous driving, researchers have shown that adversarial stickers or patches on stop signs can cause road-sign classifiers to misread them. Such a failure could be catastrophic in real-world driving, where split-second decisions determine passenger and pedestrian safety.

Medical imaging systems represent another critical vulnerability area where adversarial attacks could cause misdiagnosis of cancerous tumors, cardiac abnormalities, or other life-threatening conditions. The high-stakes nature of medical decision-making amplifies the potential consequences of successful attacks, as incorrect diagnoses could lead to inappropriate treatments, delayed interventions, or unnecessary procedures with significant patient impact.

Financial services face similar exposure in fraud detection systems, credit scoring algorithms, and algorithmic trading platforms. Attackers may attempt to manipulate risk assessments, bypass security controls, or influence market behavior through coordinated adversarial inputs. Because financial systems are interconnected, a successful attack could have cascading effects across multiple institutions and markets.

Biometric authentication systems including fingerprint scanners, facial recognition platforms, and voice verification systems have shown susceptibility to adversarial attacks that can enable unauthorized access to secure facilities, financial accounts, and personal devices. The physical nature of biometric attacks often requires sophisticated techniques such as 3D printing, audio synthesis, or specialized materials to create physical adversarial examples that can fool sensors and algorithms.

Defensive Strategies and Countermeasures

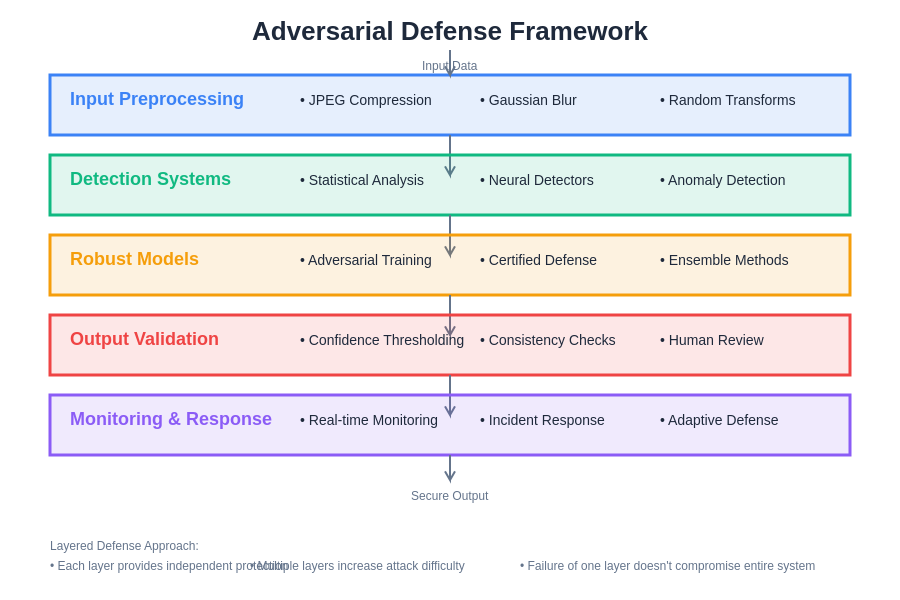

Defending against adversarial attacks requires a multi-layered approach that combines preventive measures, detection mechanisms, and response capabilities to create robust security architectures capable of withstanding sophisticated attacks. Adversarial training is one of the most effective defensive techniques. During training, adversarial examples are generated against the current model and included in each training batch. This improves robustness mainly against the attack types and perturbation budgets used during training, at the cost of extra compute and often some accuracy on clean inputs.
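
Adversarial training can be sketched on a linear logistic model. For this model class the gradient-sign step produces the exact worst-case $L_\infty$ perturbation, so each update trains on the hardest example within the budget. This is a toy illustration: the data, learning rate, and epoch count are hypothetical. Deep networks typically use multi-step PGD examples instead.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_example(w, b, x, y, eps):
    """Worst-case L-infinity perturbation for a linear logistic model."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_train(data, eps, lr=0.1, epochs=200):
    """SGD on binary cross-entropy where every update uses an adversarial
    example crafted against the current weights instead of the clean input."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm_example(w, b, x, y, eps)
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x_adv)) + b)
            w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x_adv)]
            b -= lr * (p - y)
    return w, b


# Hypothetical toy data: two well-separated clusters with labels 1 and 0.
data = [([2.0, 2.0], 1), ([-2.0, -2.0], 0), ([3.0, 2.0], 1),
        ([-3.0, -2.0], 0), ([2.0, 3.0], 1), ([-2.0, -3.0], 0)]
w, b = adversarial_train(data, eps=0.5)
clean_acc = sum((sum(wi * xi for wi, xi in zip(w, x)) + b > 0) == bool(y)
                for x, y in data) / len(data)
print(w, b, clean_acc)
```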

Defensive distillation trains a second network on the softened probability outputs that a first network produces at a high softmax temperature, then deploys the second network at a temperature of 1. The aim is to shrink the gradients an attacker can observe, making gradient-based perturbations harder to find while maintaining classification accuracy on legitimate inputs. However, Carlini and Wagner showed that their attack defeats defensive distillation, so the technique should not be relied on as a standalone defense.
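
The temperature mechanism behind distillation is a softmax applied to the logits divided by $T$. The sketch below only shows how raising $T$ softens the output distribution. The logits are made up, and the full pipeline (teacher training, student training on soft labels, deployment at $T = 1$) is not shown.

```python
import math


def softmax_with_temperature(logits, T=1.0):
    """Softmax of logits / T; subtracting the max keeps exp() from overflowing."""
    m = max(logits)
    exps = [math.exp((z - m) / T) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [8.0, 2.0, 1.0]  # hypothetical logits for a 3-class model
sharp = softmax_with_temperature(logits, T=1.0)   # close to one-hot
soft = softmax_with_temperature(logits, T=20.0)   # much flatter
print([round(p, 3) for p in sharp], [round(p, 3) for p in soft])
```

Raising $T$ keeps the class ranking but spreads probability mass across classes. These spread-out probabilities are the soft labels the second network learns from.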

Input preprocessing and transformation methods try to neutralize adversarial perturbations before classification. Techniques include JPEG compression, spatial smoothing, bit-depth reduction, and random transformations that disrupt carefully crafted perturbations while preserving the semantic content of legitimate inputs. These steps must be carefully calibrated to avoid degrading model performance on clean inputs. They are also vulnerable to adaptive attacks: an attacker who knows the transformation can often approximate its gradient or optimize through it. Preprocessing is therefore best treated as one layer of defense rather than a complete one.
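
Two of these transformations, bit-depth reduction and median (spatial) smoothing, are simple enough to sketch on a grayscale image stored as nested lists of values in $[0, 1]$. The function names and the 3x3 window are illustrative choices. In practice these would run on arrays through an image-processing library.

```python
def reduce_bit_depth(image, bits):
    """Quantize pixel values in [0, 1] to 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    return [[round(p * levels) / levels for p in row] for row in image]


def median_filter_3x3(image):
    """Replace each pixel with the median of its 3x3 neighbourhood. At the
    borders only the pixels that exist are used; for even-sized windows the
    upper of the two middle values is taken."""
    h, w = len(image), len(image[0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = sorted(image[r][c]
                            for r in range(max(0, i - 1), min(h, i + 2))
                            for c in range(max(0, j - 1), min(w, j + 2)))
            row.append(window[len(window) // 2])
        out.append(row)
    return out


# A single-pixel spike, the kind of small local change smoothing removes.
spike = [[0.2, 0.2, 0.2], [0.2, 0.9, 0.2], [0.2, 0.2, 0.2]]
print(median_filter_3x3(spike))
print(reduce_bit_depth([[0.49, 0.51]], bits=1))
```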

Ensemble defense strategies leverage multiple diverse models to create voting mechanisms that are more resilient to attacks targeting individual models. The diversity can come from different architectures, training procedures, or data augmentation techniques, which makes it harder for attackers to craft adversarial examples that fool all ensemble members simultaneously. Because adversarial examples often transfer between models, however, ensembles raise the cost of an attack rather than preventing it.
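
The voting step can be sketched with the standard library. The function name and the 0.75 agreement threshold are hypothetical. Treating low agreement as a signal for review is one design choice among several.

```python
from collections import Counter


def ensemble_vote(predictions, min_agreement=0.75):
    """Majority vote over per-model labels.

    Returns (label, agreement, needs_review). Ties go to the label seen first.
    A perturbation that fools only some members shows up as low agreement,
    so such inputs are flagged for review instead of being trusted."""
    label, votes = Counter(predictions).most_common(1)[0]
    agreement = votes / len(predictions)
    return label, agreement, agreement < min_agreement


print(ensemble_vote(["stop", "stop", "stop", "yield"]))
print(ensemble_vote(["stop", "yield", "speed_limit", "stop"]))
```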

Detection-based approaches focus on identifying adversarial inputs before they reach the primary classification model through statistical analysis, auxiliary classifier networks, or anomaly detection algorithms trained to recognize the distinctive characteristics of adversarial perturbations. These detection systems can trigger alternative processing pipelines, human review processes, or system alerts when potential attacks are identified.

Advanced Detection and Monitoring Systems

Implementing effective adversarial attack detection requires sophisticated monitoring systems capable of analyzing input patterns, model behavior, and system performance metrics in real-time to identify potential security breaches or ongoing attacks. Statistical detection methods analyze the distribution properties of input data to identify samples that deviate significantly from expected patterns, leveraging techniques such as kernel density estimation, one-class support vector machines, and autoencoder-based reconstruction error analysis.
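
Whatever statistic a detector uses, its alarm threshold is usually calibrated on clean validation data to hit a chosen false-positive rate. The sketch below assumes autoencoder reconstruction error as the score. The autoencoder itself, the 5% target rate, the example scores, and the function names are hypothetical.

```python
import math


def reconstruction_error(x, x_reconstructed):
    """Mean squared error between an input and its autoencoder reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_reconstructed)) / len(x)


def calibrate_threshold(clean_scores, target_fpr=0.05):
    """Return the empirical (1 - target_fpr) quantile of clean scores, so that
    about target_fpr of clean inputs score strictly above it."""
    ranked = sorted(clean_scores)
    k = math.ceil((1.0 - target_fpr) * len(ranked)) - 1
    return ranked[min(max(k, 0), len(ranked) - 1)]


def is_suspicious(score, threshold):
    return score > threshold


clean_scores = [i / 100 for i in range(1, 101)]  # hypothetical validation scores
threshold = calibrate_threshold(clean_scores, target_fpr=0.05)
flagged = sum(is_suspicious(s, threshold) for s in clean_scores)
print(threshold, flagged)
```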

Behavioral analysis approaches monitor model confidence scores, prediction consistency, and proximity to decision boundaries to identify inputs that cause unusual model behavior characteristic of adversarial manipulation. These systems can track metrics such as prediction entropy, the gap between the top-ranked class probabilities, and gradient magnitudes. From these they establish baseline behavioral profiles and detect deviations that suggest adversarial interference.
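
Two of these metrics, prediction entropy and the top-2 probability margin, can be computed directly from a model's output probabilities. The thresholds passed to `behaviour_flags` are hypothetical. In practice they would come from baselines measured on clean traffic.

```python
import math


def prediction_entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def top2_margin(probs):
    """Gap between the highest and second-highest class probabilities."""
    first, second = sorted(probs, reverse=True)[:2]
    return first - second


def behaviour_flags(probs, max_entropy, min_margin):
    """Flag predictions that are unusually uncertain relative to a baseline."""
    return {
        "high_entropy": prediction_entropy(probs) > max_entropy,
        "small_margin": top2_margin(probs) < min_margin,
    }


print(behaviour_flags([0.4, 0.38, 0.22], max_entropy=0.9, min_margin=0.1))
print(behaviour_flags([0.97, 0.02, 0.01], max_entropy=0.9, min_margin=0.1))
```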

Neural network-based detection systems employ specialized architectures trained specifically to distinguish clean inputs from adversarial ones. They can reach high accuracy on the attack types they were trained on while keeping false positive rates low on legitimate data. However, detectors of this kind have been bypassed by adaptive attacks that target the detector and the classifier together, so they work best as one layer among several. Because they add an extra model evaluation per input, their latency cost should be measured before they are deployed as a real-time filter in an existing AI pipeline.

A comprehensive defense framework integrates multiple detection and mitigation strategies to provide layered protection against diverse attack vectors while maintaining system usability and performance standards.

Multi-modal detection systems combine information from multiple data sources and sensor types to create more robust detection capabilities that are resistant to coordinated attacks across different input channels. These systems are particularly valuable in applications such as autonomous vehicles or security systems where attackers might attempt to manipulate multiple sensors simultaneously to bypass individual detection mechanisms.

Implementation Guidelines and Best Practices

Developing robust defenses against adversarial attacks requires careful consideration of implementation details, performance trade-offs, and operational requirements that ensure security measures integrate effectively with existing systems and workflows. Security-by-design principles should guide the development process from initial architecture decisions through deployment and maintenance phases, incorporating threat modeling, risk assessment, and security testing throughout the development lifecycle.

Model hardening techniques include regularization methods, activation function selection, and architecture modifications intended to reduce vulnerability to adversarial perturbations, though they often cost some accuracy on legitimate inputs. Spectral normalization and gradient penalty methods limit how quickly a model’s output can change with its input, which tends to improve robustness. Certified defense approaches, such as randomized smoothing, go further. They provide mathematical guarantees that a prediction cannot change within a specified perturbation bound.
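
For randomized smoothing (Cohen, Rosenfeld and Kolter), the certificate reduces to a short formula: an $L_2$ radius $R = \sigma\,\Phi^{-1}(\underline{p_A})$. Here $\underline{p_A}$ is a lower confidence bound on the probability that the base classifier returns the top class under Gaussian noise of standard deviation $\sigma$. This sketch substitutes a Hoeffding bound for the Clopper-Pearson bound used in the original paper. It also assumes the top class was selected from a separate batch of noisy samples, as in the original procedure. The vote counts are hypothetical.

```python
import math
from statistics import NormalDist


def certified_l2_radius(top_class_count, n_samples, sigma, alpha=0.001):
    """Certified L2 radius for a randomized-smoothing classifier.

    top_class_count: how many of n_samples Gaussian-noised copies of the input
    (noise std sigma) the base classifier assigned to the predicted class.
    Returns sigma * Phi^-1(p_lower), or None (abstain) if p_lower <= 1/2."""
    p_hat = top_class_count / n_samples
    # One-sided Hoeffding lower bound, valid with probability >= 1 - alpha.
    p_lower = p_hat - math.sqrt(math.log(1.0 / alpha) / (2.0 * n_samples))
    if p_lower <= 0.5:
        return None
    return sigma * NormalDist().inv_cdf(p_lower)


print(certified_l2_radius(990, 1000, sigma=0.25))
print(certified_l2_radius(500, 1000, sigma=0.25))
```

Larger noise levels and more samples permit larger radii. Heavier noise, however, usually lowers the base classifier's clean accuracy, which is the trade-off this defense manages.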

Continuous monitoring and adaptive defense systems enable real-time response to emerging threats through automated retraining, dynamic ensemble composition, and adaptive preprocessing parameters that can be tuned based on observed attack patterns and system performance metrics. These systems require robust logging, alerting, and incident response procedures to ensure security teams can quickly identify and respond to successful attacks or system compromises.
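
One simple form of such alerting tracks how often an upstream detector flags inputs over a sliding window. The class name, window size, and alert rate below are hypothetical defaults that would need tuning against real traffic.

```python
from collections import deque


class FlagRateMonitor:
    """Alert when the fraction of flagged inputs in the last `window`
    requests exceeds `alert_rate`. No alert fires until the window is full."""

    def __init__(self, window=100, alert_rate=0.2):
        self.events = deque(maxlen=window)
        self.alert_rate = alert_rate

    def record(self, flagged: bool) -> bool:
        self.events.append(bool(flagged))
        rate = sum(self.events) / len(self.events)
        return len(self.events) == self.events.maxlen and rate > self.alert_rate


monitor = FlagRateMonitor(window=10, alert_rate=0.2)
alerts = [monitor.record(f) for f in [False] * 9 + [True] * 3]
print(alerts)
```

Requiring a full window avoids alerts from a handful of early requests, at the cost of a short blind period after start-up.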

Testing and validation procedures for adversarial robustness should include several components: attack simulations using state-of-the-art methods, adaptive attacks designed specifically against the deployed defense, red team exercises conducted by independent security experts, and ongoing monitoring of model performance under adversarial conditions. No test suite can anticipate every future attack. Results should therefore be reported as robustness under the specific threat models and perturbation budgets that were evaluated.
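
A robustness report usually plots accuracy under attack across several perturbation budgets. For a linear classifier $\mathrm{sign}(w^\top x + b)$ this can be computed exactly, because the worst $L_\infty$ perturbation of size $\epsilon$ moves the score by $\epsilon \|w\|_1$ toward the boundary. The model and data here are hypothetical. Deep networks have no such closed form and need strong attacks such as PGD instead.

```python
def robust_accuracy(w, b, data, eps):
    """Exact L-infinity robust accuracy of sign(w.x + b) on labels in {-1, +1}:
    a sample counts only if its signed margin exceeds eps * ||w||_1."""
    l1 = sum(abs(wi) for wi in w)
    correct = 0
    for x, y in data:
        margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
        correct += margin > eps * l1
    return correct / len(data)


w, b = [1.0, -0.5], 0.0   # hypothetical model
data = [([1.0, 0.0], 1), ([0.5, 0.5], 1), ([-1.0, 0.2], -1), ([0.2, 0.0], -1)]
curve = [(eps, robust_accuracy(w, b, data, eps)) for eps in (0.0, 0.1, 0.3)]
print(curve)
```

At $\epsilon = 0$ the function reduces to clean accuracy. The curve can only stay flat or fall as the budget grows, which makes it a useful sanity check for attack implementations.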

Documentation and training requirements for development and operations teams include detailed security protocols, incident response procedures, and regular training updates to ensure personnel understand current threat landscapes and defensive capabilities. The rapidly evolving nature of adversarial attacks necessitates continuous education and skill development to maintain effective security postures.

Emerging Threats and Future Considerations

The adversarial attack landscape continues to evolve rapidly as researchers develop new attack methodologies and attackers adapt existing techniques to overcome defensive measures. Adaptive attacks represent a particularly concerning trend where adversaries specifically design attacks to bypass known defense mechanisms by incorporating knowledge of defensive strategies into their attack algorithms.

Physical adversarial attacks are becoming increasingly sophisticated, with researchers demonstrating successful attacks using 3D-printed objects, specially designed clothing patterns, and environmental modifications that can fool computer vision systems in real-world conditions. These attacks are particularly concerning for applications such as autonomous vehicles, surveillance systems, and access control mechanisms that rely on physical sensor inputs.

Multi-modal attacks targeting systems that process multiple types of input data simultaneously represent an emerging threat vector where adversaries coordinate attacks across different data modalities to increase attack success rates and bypass single-modality detection systems. These attacks require sophisticated coordination but can be highly effective against complex AI systems.

The historical progression and projected future development of adversarial attack capabilities demonstrate the need for proactive defense strategies and continuous security research investment.

Quantum computing is a more speculative factor. It may eventually enable new classes of attacks based on quantum algorithms, and it may also yield new defensive capabilities, but its concrete effect on adversarial robustness has not yet been established. Organizations should nonetheless begin considering its long-term implications as part of their AI security strategies.

Industry Standards and Compliance Frameworks

Developing comprehensive security standards for AI systems requires collaboration between industry experts, academic researchers, government agencies, and international standards organizations to establish baseline security requirements and testing methodologies. Current efforts include the development of adversarial robustness benchmarks, security certification processes, and regulatory frameworks that address AI system security across different application domains.

Compliance requirements for AI systems operating in regulated industries such as healthcare, finance, and transportation increasingly include provisions for adversarial robustness testing, security documentation, and incident response capabilities. Organizations must ensure their AI systems meet these evolving regulatory requirements while maintaining competitive performance and operational efficiency.

Risk assessment frameworks specific to AI systems help organizations evaluate their exposure to adversarial attacks and prioritize security investments based on threat likelihood, potential impact, and available defensive options. These frameworks must account for the unique characteristics of AI systems including model complexity, data sensitivity, and operational criticality.

Certification and audit processes for AI security provide independent validation of security measures and help organizations demonstrate compliance with industry standards and regulatory requirements. These processes typically include technical testing, documentation review, and operational assessment to ensure comprehensive security coverage.

Strategic Implementation and Organizational Readiness

Successfully implementing adversarial attack defenses requires organizational commitment, resource allocation, and cultural changes that prioritize security throughout the AI development and deployment lifecycle. Leadership support and cross-functional collaboration between security teams, data scientists, and operations personnel are essential for developing effective security strategies that balance protection with performance and usability requirements.

Investment in security infrastructure includes specialized hardware for adversarial training, monitoring systems for real-time threat detection, and backup systems for incident response and recovery scenarios. Organizations must also invest in personnel training, security tools, and ongoing research to maintain effective defenses against evolving threats.

Incident response planning for AI security breaches requires specialized procedures that account for the unique characteristics of adversarial attacks including potential for subtle manipulation, delayed detection, and complex forensic analysis requirements. Response teams must be trained to quickly identify attack vectors, assess system compromise, and implement appropriate containment and recovery measures.

Long-term security strategy development should include regular threat assessment updates, technology roadmap alignment, and strategic partnerships with security vendors, research institutions, and industry collaboratives to ensure access to latest defensive technologies and threat intelligence. Organizations must balance immediate security needs with long-term strategic planning to maintain effective protection in rapidly evolving threat environments.

The future of AI security depends on proactive investment in research, development, and implementation of robust defensive measures that can adapt to emerging threats while maintaining the innovative potential and practical benefits that make artificial intelligence systems valuable for organizations and society. Success requires sustained commitment to security excellence and continuous improvement in defensive capabilities.

Disclaimer

This article is for educational and informational purposes only and does not constitute professional security advice. The information presented is based on current understanding of adversarial attacks and defensive techniques in machine learning systems. Readers should conduct thorough security assessments and consult with qualified cybersecurity professionals before implementing AI systems in production environments. The effectiveness of defensive measures may vary depending on specific use cases, threat models, and implementation details. Organizations should develop comprehensive security strategies tailored to their specific requirements and risk profiles.