The preservation of audio history has entered a new era through the application of artificial intelligence to audio restoration. Where traditional restoration required many hours of manual work and specialized expertise, AI-powered systems can now produce strong results far more quickly. This shift is more than a technological advance: it puts capabilities once limited to professional studios within reach of individuals and organizations worldwide, making it possible to rescue valuable recordings from the damage of time and the limitations of the equipment they were made on.

The intersection of artificial intelligence with audio engineering has created possibilities that were unimaginable just a few years ago, opening new frontiers in both professional audio production and personal audio preservation projects.

Understanding the Evolution of Audio Restoration

The journey of audio restoration has progressed from purely analog techniques to sophisticated digital processing methods, and now to AI-driven approaches that can intelligently analyze and enhance audio content with minimal human intervention. Traditional restoration techniques relied heavily on manual equalization, careful noise reduction, and painstaking artifact removal that required deep technical knowledge and extensive time investment. The introduction of artificial intelligence has fundamentally changed this paradigm by enabling systems to learn from vast datasets of audio examples, developing an understanding of what constitutes clean, high-quality audio versus damaged or degraded content.

Modern AI restoration systems use deep learning models trained on large collections of audio, which allows them to recognize patterns of degradation and apply appropriate corrections automatically. These systems can separate the wanted content from many forms of noise, distortion, and artifacts with high accuracy. Because the models learn which elements to preserve, enhance, or remove, they can make nuanced decisions that, for many common problems, match or exceed careful manual restoration while requiring far less time and expertise.

The Science Behind AI Audio Enhancement

The foundation of AI audio restoration lies in advanced signal processing techniques combined with machine learning models that have been specifically designed to understand audio characteristics. Neural networks used in audio restoration are typically trained on paired datasets containing both degraded and clean versions of the same audio content, allowing the AI to learn the mapping between problematic audio and its restored counterpart. This training process enables the system to generalize its learning to new, previously unseen audio content, applying similar restoration principles to achieve consistent, high-quality results.
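As a deliberately tiny illustration of learning a mapping from paired data, the Python sketch below fits a 3-tap linear FIR filter to a synthetic degraded/clean pair by gradient descent on mean squared error. It is not a neural network, and the signal, tap count, learning rate and function names (`make_pair`, `train_fir`) are assumptions chosen for illustration. Real restoration models are much larger and nonlinear, but the training loop (predict, compare with the clean target, adjust the parameters) has the same shape.

```python
import math
import random

def make_pair(n, noise=0.3, seed=0):
    """Hypothetical paired example: a clean sine and a noisy copy of it."""
    rng = random.Random(seed)
    clean = [math.sin(2 * math.pi * 5 * t / n) for t in range(n)]
    degraded = [c + rng.gauss(0.0, noise) for c in clean]
    return degraded, clean

def apply_fir(taps, x):
    """Causal FIR filter: y[t] = sum_k taps[k] * x[t - k], zero before t = 0."""
    return [sum(w * x[t - k] for k, w in enumerate(taps) if t - k >= 0)
            for t in range(len(x))]

def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)

def train_fir(degraded, clean, n_taps=3, lr=0.05, epochs=200):
    """Gradient descent on the MSE between the filtered input and the clean target."""
    taps = [0.0] * n_taps
    n = len(degraded)
    for _ in range(epochs):
        err = [y - c for y, c in zip(apply_fir(taps, degraded), clean)]
        # d(MSE)/d(taps[k]) = 2/n * sum_t err[t] * x[t - k]
        grads = [2.0 / n * sum(err[t] * degraded[t - k] for t in range(k, n))
                 for k in range(n_taps)]
        taps = [w - lr * g for w, g in zip(taps, grads)]
    return taps
```

On this toy pair the learned taps behave like a short smoothing filter, so `mse(apply_fir(taps, degraded), clean)` ends up well below `mse(degraded, clean)`.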

Many AI restoration systems combine several neural network architectures, each addressing a different aspect of audio degradation. Convolutional neural networks are well suited to finding patterns in spectrograms and other time-frequency representations, while recurrent neural networks are effective at modeling temporal dependencies in audio signals. Combining the two lets a system tackle problems that involve both frequency-specific damage and degradation that evolves over time.
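The spectrogram a convolutional network looks at is simply a grid of short-time spectra. The sketch below computes a magnitude spectrogram with a Hann window and a naive DFT; the frame length, hop size and helper names are illustrative assumptions, and a real pipeline would use an FFT library instead of the O(N²) transform shown here.

```python
import cmath
import math

def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]

def dft(frame):
    """Naive O(N^2) DFT; adequate for illustration, use an FFT in practice."""
    n = len(frame)
    return [sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def magnitude_spectrogram(signal, frame_len=64, hop=32):
    """Return one list per frame holding |X[k]| for bins 0..frame_len // 2."""
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        chunk = [s * w for s, w in zip(signal[start:start + frame_len], window)]
        spectrum = dft(chunk)
        frames.append([abs(x) for x in spectrum[:frame_len // 2 + 1]])
    return frames
```

With a 1 kHz tone sampled at 8 kHz and 64-sample frames, every frame peaks at bin 8 (1000 × 64 / 8000); stacking the frames side by side gives the time-frequency "image" a convolutional model operates on.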


Transforming Vinyl and Analog Recordings

The restoration of vinyl records and analog tape recordings represents one of the most compelling applications of AI audio technology. These historical recording media are particularly susceptible to various forms of degradation including surface noise, clicks, pops, wow and flutter, and frequency response irregularities that accumulate over decades of storage and playback. Traditional approaches to cleaning these recordings required extensive manual editing and careful application of noise reduction techniques that often compromised the original audio quality in the process of removing unwanted artifacts.

AI-powered restoration systems have revolutionized this process by developing sophisticated algorithms that can distinguish between musical content and surface noise with remarkable precision. These systems analyze the spectral characteristics of the audio content, identifying patterns that correspond to musical instruments, vocals, and ambient recording spaces while simultaneously recognizing the distinct signatures of vinyl surface noise, tape hiss, and mechanical artifacts. The AI can then selectively remove or reduce these unwanted elements while preserving the essential musical information, often revealing details in vintage recordings that were previously masked by decades of accumulated degradation.

The restoration of analog tape recordings presents unique challenges that AI systems have proven particularly adept at addressing. Magnetic tape degradation can result in frequency response variations, dropouts, print-through effects, and various forms of distortion that require sophisticated analysis to correct effectively. Modern AI restoration tools can identify these specific types of degradation and apply targeted corrections that restore the original frequency balance and dynamic characteristics of the recording while eliminating artifacts that detract from the listening experience.
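Tape dropouts usually show up as brief, sudden drops in level. A simple classical heuristic (not an AI model) for flagging them compares each frame's RMS level with the median frame level; the frame length, the -20 dB threshold and the function names below are assumptions for illustration.

```python
import math

def frame_rms(x, frame_len):
    """RMS level of consecutive, non-overlapping frames."""
    return [math.sqrt(sum(s * s for s in x[i:i + frame_len]) / frame_len)
            for i in range(0, len(x) - frame_len + 1, frame_len)]

def find_dropouts(x, frame_len=100, drop_db=-20.0):
    """Return the start sample of every frame whose RMS falls more than
    |drop_db| dB below the median frame RMS."""
    rms = frame_rms(x, frame_len)
    if not rms:
        return []
    median = sorted(rms)[len(rms) // 2]
    threshold = median * 10 ** (drop_db / 20)
    return [i * frame_len for i, r in enumerate(rms) if r < threshold]
```

Comparing against the median rather than the mean keeps the reference level stable even when several frames are damaged.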

Advanced Noise Reduction and Artifact Removal

The removal of unwanted noise and artifacts from audio recordings has been dramatically enhanced through the application of machine learning techniques that can adaptively identify and eliminate various forms of audio contamination. Traditional noise reduction methods often relied on static filtering approaches that could inadvertently affect desired audio content, resulting in a trade-off between noise reduction effectiveness and audio quality preservation. AI-powered systems overcome this limitation by employing dynamic analysis techniques that continuously evaluate the audio content and make real-time decisions about what constitutes noise versus music.
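For contrast with the adaptive approach described here, the following is a minimal sketch of conventional spectral gating: a noise profile is averaged from noise-only frames, and each bin of a new frame is kept only if it rises clearly above that profile. The threshold, the attenuation floor, single-frame processing and all function names are assumptions; practical tools work on overlapping windowed frames with overlap-add, and AI systems effectively replace the fixed threshold with a learned, time-varying mask.

```python
import cmath
import math
import random

def dft(x):
    """Naive DFT (use an FFT library in practice)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(spectrum):
    """Naive inverse DFT, returning the real part for real-valued signals."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
                for k in range(n)).real / n for t in range(n)]

def noise_profile(noise_frames):
    """Average magnitude per bin over frames known to contain only noise."""
    spectra = [dft(f) for f in noise_frames]
    return [sum(abs(s[k]) for s in spectra) / len(spectra)
            for k in range(len(spectra[0]))]

def spectral_gate(frame, profile, threshold=2.0, floor=0.1):
    """Keep bins above threshold * noise estimate; scale the rest by `floor`."""
    spectrum = dft(frame)
    gated = [x if abs(x) > threshold * p else x * floor
             for x, p in zip(spectrum, profile)]
    return idft(gated)

def demo(seed=1, n=64):
    """Gate a noisy tone; return squared error against the tone before and after."""
    rng = random.Random(seed)
    profile = noise_profile([[rng.gauss(0.0, 0.1) for _ in range(n)]
                             for _ in range(20)])
    tone = [math.sin(2 * math.pi * 8 * t / n) for t in range(n)]
    noise = [rng.gauss(0.0, 0.1) for _ in range(n)]
    gated = spectral_gate([a + b for a, b in zip(tone, noise)], profile)
    before = sum(b * b for b in noise)
    after = sum((g - a) ** 2 for g, a in zip(gated, tone))
    return before, after
```

Because the profile is fixed, this gate cannot tell a quiet reverb tail from noise of similar level, which is exactly the limitation the adaptive methods aim to overcome.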

The sophistication of modern AI noise reduction extends to handling complex scenarios where noise and signal content occupy similar frequency ranges or exhibit temporal overlap. These systems can distinguish between background noise, room tone, equipment hum, and other unwanted elements while preserving subtle musical details such as reverb tails, ambient textures, and low-level instrumental passages that might be compromised by traditional noise reduction approaches.
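Equipment hum is one of the easier cases because its frequencies are known in advance: the mains frequency (50 Hz in most of Europe, 60 Hz in North America) and its harmonics. A classical hum remover can be sketched as a cascade of narrow biquad notch filters using the coefficient formulas from the RBJ Audio EQ Cookbook; the Q value, the number of harmonics and the helper names are assumptions.

```python
import math

def notch_coeffs(f0, fs, q=30.0):
    """Biquad notch coefficients (RBJ Audio EQ Cookbook), normalised by a0."""
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    a0 = 1 + alpha
    b = [1 / a0, -2 * cos_w0 / a0, 1 / a0]
    a = [1.0, -2 * cos_w0 / a0, (1 - alpha) / a0]
    return b, a

def biquad(x, b, a):
    """Direct Form I biquad filter."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for s in x:
        y = b[0] * s + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, s
        y2, y1 = y1, y
        out.append(y)
    return out

def remove_hum(x, fs, mains_hz=50.0, harmonics=3, q=30.0):
    """Cascade notches at the mains frequency and its first few harmonics."""
    for h in range(1, harmonics + 1):
        b, a = notch_coeffs(mains_hz * h, fs, q)
        x = biquad(x, b, a)
    return x
```

A high Q keeps each notch only a few hertz wide, so nearby musical content is barely touched; the trade-off is a longer settling time after the filter starts.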

Artifact removal capabilities of AI systems encompass a wide range of digital and analog distortions including clicks, pops, dropouts, digital clipping, and compression artifacts. The AI analyzes the surrounding audio context to intelligently reconstruct damaged portions of the signal, often producing seamless repairs that are virtually undetectable in the final restored audio. This reconstruction process involves sophisticated interpolation techniques that consider both spectral and temporal characteristics of the audio content to generate replacement audio that maintains musical and acoustic coherence with the surrounding material.
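A much simpler, non-AI version of the detect-then-reconstruct idea flags samples that deviate sharply from the midpoint of their neighbours, then bridges each flagged run by linear interpolation. The threshold multiplier, the one-sample margin and the function names are illustrative assumptions; AI tools replace the straight-line bridge with a learned, context-aware reconstruction.

```python
def detect_clicks(x, k=8.0):
    """Flag samples whose deviation from the midpoint of their neighbours
    exceeds k times the median deviation across the signal."""
    dev = ([0.0]
           + [abs(x[t] - 0.5 * (x[t - 1] + x[t + 1])) for t in range(1, len(x) - 1)]
           + [0.0])
    typical = sorted(dev)[len(dev) // 2] or 1e-12  # guard against pure silence
    return [t for t, d in enumerate(dev) if d > k * typical]

def repair(x, flagged, margin=1):
    """Replace flagged samples (plus a small margin) by linear interpolation
    between the nearest unflagged neighbours."""
    bad = set()
    for t in flagged:
        bad.update(range(max(0, t - margin), min(len(x), t + margin + 1)))
    y = list(x)
    t = 0
    while t < len(y):
        if t not in bad:
            t += 1
            continue
        start = t - 1  # last good sample before the gap (-1 if none)
        while t < len(y) and t in bad:
            t += 1
        end = t  # first good sample after the gap (len(y) if none)
        if start >= 0:
            left = y[start]
        else:
            left = y[end] if end < len(y) else 0.0
        right = y[end] if end < len(y) else left
        for i in range(start + 1, end):
            y[i] = left + (i - start) / (end - start) * (right - left)
    return y
```

Linear interpolation is only convincing for gaps of a few samples; longer dropouts need model-based or learned reconstruction.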

Frequency Response Correction and Tonal Balance

The restoration of proper frequency response and tonal balance in vintage recordings represents a critical aspect of AI-powered audio enhancement that requires deep understanding of both technical and musical considerations. Historical recording equipment, storage media, and playback systems each introduced their own frequency response characteristics that could significantly alter the original tonal balance of musical performances. AI restoration systems can analyze these frequency response irregularities and apply sophisticated correction algorithms that restore the intended tonal balance while preserving the essential character and authenticity of the original recording.

Machine learning algorithms trained on extensive databases of frequency response measurements from various historical recording and playback equipment can identify the specific characteristics of different systems and apply appropriate inverse filtering to neutralize their effects. This process requires careful consideration of the musical content to ensure that frequency response corrections enhance rather than compromise the artistic intent of the original recording. The AI must distinguish between intentional tonal characteristics that are part of the musical arrangement and unintended frequency response artifacts that detract from the overall audio quality.
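Inverse filtering divides out a known response, but a plain 1/H blows up wherever that response is close to zero. A common safeguard, sketched here under the assumption that the degrading system's complex response H[k] has already been measured or estimated for each frequency bin, is a Tikhonov-regularised inverse. The `eps` value and the function names are hypothetical.

```python
import math

def regularized_inverse(response, eps=1e-2):
    """Tikhonov-regularised inverse C[k] = conj(H[k]) / (|H[k]|^2 + eps).

    Where |H| is large, C is close to 1/H; where |H| is tiny, the boost
    stays bounded instead of exploding."""
    return [h.conjugate() / (abs(h) ** 2 + eps) for h in response]

def max_boost(eps):
    """Largest possible |C[k]|, reached when |H[k]| == sqrt(eps)."""
    return 1 / (2 * math.sqrt(eps))
```

A larger `eps` trades accuracy of the correction for a lower ceiling on boost, which helps avoid amplifying noise in bands the original equipment barely passed.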

The correction of frequency response irregularities extends beyond simple equalization to encompass complex interactions between different frequency bands and the temporal characteristics of the audio content. AI systems can identify and correct issues such as resonant peaks, frequency-dependent distortion, and non-linear frequency response effects that cannot be addressed through conventional equalization techniques. These advanced correction capabilities enable the restoration of recordings that might otherwise be considered beyond salvage using traditional methods.
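Locating resonant peaks can be sketched as a search for spectral bins that are local maxima and stand well above the surrounding spectrum. This heuristic, with its assumed neighbourhood width and 6 dB threshold, is illustrative only; a flagged bin could then be tamed with a narrow peaking cut.

```python
import math

def find_resonances(mag, half_width=8, threshold_db=6.0):
    """Return bins that are local maxima and exceed the median level of the
    surrounding +/- half_width bins by more than threshold_db."""
    db = [20 * math.log10(max(m, 1e-12)) for m in mag]
    peaks = []
    for k in range(1, len(db) - 1):
        if not (db[k] > db[k - 1] and db[k] >= db[k + 1]):
            continue
        lo, hi = max(0, k - half_width), min(len(db), k + half_width + 1)
        neighbours = sorted(db[lo:k] + db[k + 1:hi])
        local_median = neighbours[len(neighbours) // 2]
        if db[k] - local_median > threshold_db:
            peaks.append(k)
    return peaks
```

Using a local median rather than a global average lets the detector follow the overall tilt of the spectrum, so a gradual high-frequency roll-off is not mistaken for a resonance.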

Vocal Enhancement and Speech Clarity

The enhancement of vocal content and speech clarity in restored audio represents a specialized application of AI technology that requires sophisticated understanding of human vocal characteristics and speech patterns. Voice recordings from historical sources often suffer from multiple forms of degradation that can significantly impact intelligibility and emotional expression. AI systems designed for vocal enhancement employ specialized algorithms that have been trained specifically on human speech and vocal music, enabling them to identify and enhance the unique characteristics of vocal content while addressing various forms of degradation.


Speech clarity enhancement involves sophisticated analysis of formant structures, fundamental frequency patterns, and temporal characteristics that define human speech. AI systems can identify and correct issues such as frequency masking, dynamic range compression, and spectral distortion that compromise speech intelligibility. The restoration process considers linguistic patterns and phonetic structures to ensure that enhanced speech maintains natural characteristics while achieving improved clarity and comprehension.
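Fundamental-frequency patterns are typically tracked frame by frame. The sketch below is a classical autocorrelation pitch estimator rather than a learned model; the assumed 60 to 400 Hz search range and the function name are illustrative choices.

```python
def estimate_f0(frame, fs, fmin=60.0, fmax=400.0):
    """Estimate the fundamental frequency of a voiced frame by picking the lag
    with the highest autocorrelation inside the allowed pitch range.
    Returns None if no positive correlation is found (e.g. silence)."""
    n = len(frame)
    mean = sum(frame) / n
    x = [s - mean for s in frame]
    min_lag = int(fs / fmax)
    max_lag = min(int(fs / fmin), n - 1)
    best_lag, best_r = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        r = sum(x[t] * x[t + lag] for t in range(n - lag))
        if r > best_r:
            best_lag, best_r = lag, r
    return fs / best_lag if best_lag else None
```

Restricting the lag range matters: without it, multiples of the true period (octave errors) compete with the correct lag.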

Vocal music restoration presents additional challenges related to preserving artistic expression and emotional content while addressing technical deficiencies in the original recording. AI systems must balance the restoration of technical audio quality with the preservation of performance nuances, vocal timbre, and expressive characteristics that define the artistic value of the recording. This requires sophisticated analysis of musical phrasing, vibrato patterns, and harmonic content that distinguish vocal music from speech and require specialized processing approaches.

Stereo Image Reconstruction and Spatial Enhancement

The restoration and enhancement of stereo imaging in vintage recordings has been revolutionized through AI techniques that can analyze and reconstruct spatial information from various source formats. Many historical recordings were created using stereo techniques that differ significantly from modern standards, or may have suffered from spatial degradation due to storage and playback issues. AI systems can analyze the spatial characteristics of recordings and apply sophisticated processing to enhance stereo separation, improve localization accuracy, and create more immersive listening experiences while maintaining the authenticity of the original performance.

The reconstruction of stereo imaging involves complex analysis of phase relationships, amplitude differences, and frequency-dependent spatial cues that define the perceived location and movement of sound sources within the stereo field. AI algorithms can identify and correct phase anomalies, stereo imbalance, and spatial distortions that compromise the three-dimensional presentation of the musical content. These corrections require careful consideration of the original recording techniques and artistic intentions to ensure that spatial enhancements complement rather than overwhelm the musical presentation.
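Two of the cues mentioned here, amplitude differences and phase relationships, can be summarised with a couple of basic measurements: inter-channel correlation (a value near -1 suggests one channel has inverted polarity) and left/right level balance. This is a minimal diagnostic sketch with hypothetical function names, not a complete spatial analysis.

```python
import math

def stereo_correlation(left, right):
    """Pearson correlation between channels: near +1 is close to mono,
    near 0 is very wide or unrelated, near -1 suggests inverted polarity."""
    n = len(left)
    ml, mr = sum(left) / n, sum(right) / n
    cov = sum((l - ml) * (r - mr) for l, r in zip(left, right))
    vl = sum((l - ml) ** 2 for l in left)
    vr = sum((r - mr) ** 2 for r in right)
    if vl == 0 or vr == 0:
        return 0.0
    return cov / math.sqrt(vl * vr)

def balance_db(left, right):
    """Level of left relative to right in dB (positive means the image leans left)."""
    return 10 * math.log10(sum(l * l for l in left) / sum(r * r for r in right))
```

A strongly negative correlation is a cue to check for a polarity flip before attempting any subtler spatial correction.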

Spatial enhancement techniques can also give mono recordings a sense of width. Because a mono recording contains no inter-channel information, this is synthesis rather than recovery: algorithms analyze the acoustic character of the recorded environment and generate a pseudo-stereo image intended to enhance the listening experience without introducing audible artifacts. Doing this convincingly requires training on diverse recording environments and acoustic spaces, so that the models learn how different spaces shape recorded sound.
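One classic, non-AI way to give a mono source some width is a pair of complementary comb filters: a delayed copy is added to one channel and subtracted from the other. The 441-sample delay (10 ms at 44.1 kHz), the 0.5 depth and the function name are assumed values for illustration.

```python
def pseudo_stereo(mono, delay=441, depth=0.5):
    """Complementary comb-filter pseudo-stereo.

    left[n]  = x[n] + depth * x[n - delay]
    right[n] = x[n] - depth * x[n - delay]
    The two combs' peaks and notches interleave, which the ear hears as width."""
    left, right = [], []
    for n, s in enumerate(mono):
        delayed = mono[n - delay] if n >= delay else 0.0
        left.append(s + depth * delayed)
        right.append(s - depth * delayed)
    return left, right
```

The appeal of this design is mono compatibility: averaging the two channels returns the original signal exactly, so listeners on mono systems hear no comb-filter coloration.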

Real-Time Processing and Hardware Integration

The development of real-time AI audio restoration capabilities has opened new possibilities for live performance enhancement and broadcast applications where immediate processing is essential. Real-time AI systems must balance restoration quality with processing latency requirements, employing optimized algorithms and specialized hardware to achieve professional-grade results within the constraints of live audio applications. These systems represent significant engineering challenges that require innovative approaches to algorithm design and hardware implementation.

Modern real-time AI restoration systems utilize specialized signal processing hardware including graphics processing units and dedicated AI acceleration chips to achieve the computational performance required for sophisticated restoration algorithms. The optimization of these systems involves careful consideration of algorithmic complexity, memory bandwidth requirements, and processing latency to ensure that restoration quality is not compromised by real-time constraints.
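The latency constraint mentioned here comes down to simple arithmetic: the I/O buffer plus any look-ahead the algorithm needs, divided by the sample rate, and a per-block compute time that must stay below the block's playback duration. The function names and the numbers in the note below are assumptions, not figures from any particular product.

```python
def latency_ms(buffer_samples, lookahead_samples, sample_rate):
    """Algorithmic latency in milliseconds: the I/O buffer plus the future
    context the model needs before it can emit output. Ignores compute time
    and converter delays."""
    return 1000.0 * (buffer_samples + lookahead_samples) / sample_rate

def meets_realtime(compute_ms_per_block, buffer_samples, sample_rate):
    """Each block must be processed faster than it takes to play back."""
    return compute_ms_per_block < 1000.0 * buffer_samples / sample_rate
```

For example, a 256-sample buffer at 48 kHz lasts about 5.3 ms, so processing must finish within that window, and a model that needs 1024 samples of look-ahead pushes total algorithmic latency to roughly 26.7 ms.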

The integration of AI restoration capabilities into existing audio production workflows requires sophisticated software design that provides intuitive control interfaces while maintaining the flexibility and precision required for professional applications. These systems must accommodate diverse input formats, processing requirements, and output specifications while providing real-time feedback and adjustment capabilities that enable operators to fine-tune restoration parameters for optimal results.

Machine Learning Model Training and Customization

The effectiveness of AI audio restoration systems depends heavily on the quality and diversity of training data used to develop the underlying machine learning models. The creation of comprehensive training datasets requires careful curation of audio content that represents the full range of degradation types and restoration challenges that the system will encounter in practical applications. This process involves collecting high-quality reference recordings, systematically introducing various forms of degradation, and creating paired datasets that enable supervised learning approaches.
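The step of systematically introducing degradation can be sketched as a function that takes a clean recording and returns a (degraded, clean) pair. The degradations chosen here (white noise at a random SNR between 5 and 30 dB, plus sparse random clicks), all parameter values and the function names are assumptions for illustration; realistic pipelines model many more degradation types.

```python
import math
import random

def add_noise_at_snr(clean, snr_db, rng):
    """Add white Gaussian noise scaled so the result has the requested SNR (dB)."""
    p_signal = sum(s * s for s in clean) / len(clean)
    sigma = math.sqrt(p_signal / 10 ** (snr_db / 10))
    return [s + rng.gauss(0.0, sigma) for s in clean]

def add_clicks(x, rate, amplitude, rng):
    """Add impulsive clicks; `rate` is the probability of a click per sample."""
    y = list(x)
    for t in range(len(y)):
        if rng.random() < rate:
            y[t] += amplitude * rng.choice((-1.0, 1.0))
    return y

def make_training_pair(clean, rng, snr_range=(5.0, 30.0), click_rate=1e-3):
    """Return a (degraded input, clean target) pair for supervised training."""
    snr_db = rng.uniform(*snr_range)
    degraded = add_noise_at_snr(clean, snr_db, rng)
    degraded = add_clicks(degraded, click_rate, amplitude=0.8, rng=rng)
    return degraded, list(clean)

# Usage: one pair from a 1-second, 440 Hz test tone at 16 kHz.
rng = random.Random(42)
tone = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(16000)]
degraded, target = make_training_pair(tone, rng)
```

Seeding the random generator makes a dataset reproducible, and randomising the SNR per example exposes the model to a range of damage severities.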

The training process for audio restoration models involves sophisticated techniques for data augmentation, regularization, and optimization that ensure robust performance across diverse audio content and degradation scenarios. The development of effective training methodologies requires deep understanding of both machine learning principles and audio engineering concepts to create models that generalize well to new audio content while maintaining high restoration quality.

Customization of AI restoration models for specific applications or audio content types can significantly improve restoration quality for specialized use cases. This customization process involves fine-tuning pre-trained models using domain-specific training data, adjusting algorithm parameters for particular types of degradation, and optimizing processing chains for specific restoration objectives. The ability to customize AI restoration systems enables users to achieve optimal results for their particular restoration requirements while leveraging the broader capabilities of general-purpose restoration algorithms.

Quality Assessment and Validation Methods

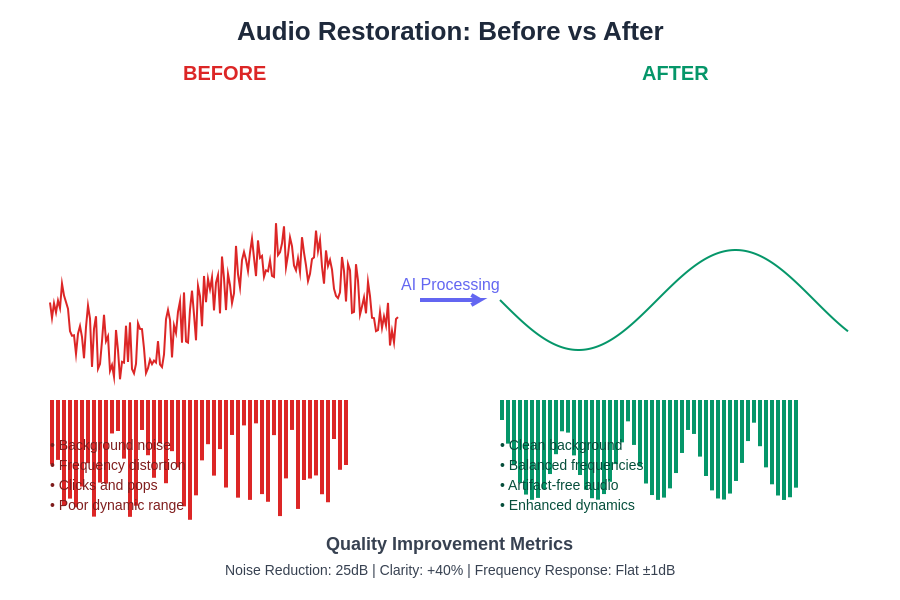

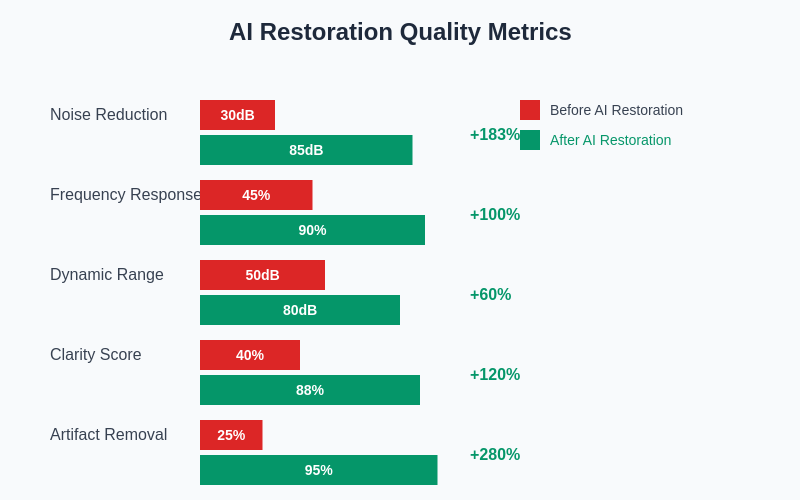

The evaluation of AI audio restoration quality requires sophisticated measurement techniques that can assess both technical improvements and subjective audio quality factors. Traditional audio quality metrics may not adequately capture the nuanced improvements achieved by AI restoration systems, necessitating the development of specialized evaluation methodologies that consider both objective measurements and perceptual quality factors.

Objective quality assessment involves detailed analysis of frequency response characteristics, noise reduction effectiveness, dynamic range improvement, and distortion reduction achieved through the restoration process. These measurements provide quantitative evidence of restoration effectiveness while enabling systematic comparison of different restoration approaches and optimization of algorithm parameters for specific applications.
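One widely used objective measure for enhancement and separation tasks is the scale-invariant signal-to-distortion ratio (SI-SDR), which requires a clean reference. The minimal sketch below also reports the improvement of a restored signal over the degraded input; the function names are hypothetical, and the formula assumes the residual is not exactly zero.

```python
import math

def si_sdr(estimate, reference):
    """Scale-invariant signal-to-distortion ratio in dB (higher is better)."""
    n = len(reference)
    mr, me = sum(reference) / n, sum(estimate) / n
    ref = [r - mr for r in reference]
    est = [e - me for e in estimate]
    # Project the estimate onto the reference so overall gain does not matter.
    alpha = sum(e * r for e, r in zip(est, ref)) / sum(r * r for r in ref)
    target = [alpha * r for r in ref]
    residual = [e - t for e, t in zip(est, target)]
    return 10 * math.log10(sum(t * t for t in target) / sum(d * d for d in residual))

def si_sdr_improvement(restored, degraded, reference):
    """How many dB the restoration moved the signal towards the reference."""
    return si_sdr(restored, reference) - si_sdr(degraded, reference)
```

Scale invariance means a restored file that is simply louder or quieter than the reference is not penalised, which matches how listeners judge quality more closely than a raw sample-by-sample error does.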

Subjective quality evaluation requires carefully designed listening tests that assess the perceptual impact of restoration processing on audio quality, musical expression, and overall listening experience. These evaluations must consider factors such as listening environment, audio reproduction equipment, and listener expertise to ensure that quality assessments accurately reflect the practical benefits of AI restoration processing.

Industry Applications and Professional Workflows

The integration of AI audio restoration into professional audio production workflows has transformed how recording studios, broadcast facilities, and content creation organizations approach audio enhancement and restoration projects. The efficiency and quality improvements offered by AI systems have enabled organizations to undertake restoration projects that would have been economically unfeasible using traditional methods, expanding the scope of audio preservation and enhancement activities across diverse industries.

Broadcasting organizations have particularly benefited from AI restoration capabilities for enhancing archived content, improving live audio quality, and preparing historical material for modern distribution formats. The ability to process large volumes of audio content with consistent, high-quality results has enabled broadcasters to revitalize extensive archives while meeting contemporary audio quality standards for digital distribution platforms.

The music industry has embraced AI restoration for remastering classic recordings, preparing vintage content for high-resolution audio formats, and enhancing the quality of live performance recordings. These applications require careful balance between restoration quality and preservation of artistic authenticity to ensure that enhanced recordings maintain their historical and cultural significance while meeting modern technical standards.

Future Developments and Emerging Technologies

The future of AI audio restoration promises even more sophisticated capabilities through continued advances in machine learning algorithms, computational hardware, and audio processing techniques. Emerging technologies such as generative adversarial networks and transformer-based architectures offer new possibilities for audio enhancement and reconstruction that could further revolutionize the field of audio restoration.

The development of more sophisticated training methodologies and larger, more diverse datasets will enable AI systems to handle increasingly complex restoration challenges while maintaining high quality standards. These advances will likely expand the scope of AI restoration to encompass more specialized applications and enable restoration of audio content that is currently considered beyond salvage using existing techniques.

The integration of AI restoration with other audio technologies such as spatial audio processing, immersive audio formats, and interactive audio systems will create new opportunities for innovative audio experiences that combine historical content with cutting-edge presentation technologies. These developments will require continued collaboration between AI researchers, audio engineers, and content creators to ensure that technological capabilities align with artistic and practical requirements.

The democratization of AI audio restoration through cloud-based services, mobile applications, and consumer-friendly software tools will make professional-grade restoration capabilities accessible to a broader audience of audio enthusiasts, historians, and content creators. This accessibility will likely accelerate the preservation of audio heritage while enabling new forms of creative expression that build upon restored historical content.

Disclaimer

This article is for informational purposes only and does not constitute professional advice regarding audio restoration or AI technologies. The effectiveness of AI audio restoration systems may vary depending on the specific characteristics of source material, degradation types, and processing requirements. Readers should evaluate their specific needs and consult with audio professionals when undertaking critical restoration projects. The field of AI audio restoration continues to evolve rapidly, and new developments may supersede current techniques and capabilities.