Artificial intelligence has changed how computational performance is evaluated, and MLPerf, the benchmark suite maintained by the MLCommons consortium, has become the most widely used standard for measuring AI hardware across diverse workloads and deployment scenarios. The suite covers everything from large data center training clusters to edge inference devices, and its results inform hardware procurement decisions, research directions, and technology development strategies across the AI ecosystem.

Benchmark results continue to reshape our understanding of computational efficiency and performance optimization in machine learning applications. Standardized metrics make fair comparison possible between radically different hardware architectures, from traditional graphics processing units to specialized neural processing units and other purpose-built accelerators.

The MLPerf Benchmark Revolution

MLPerf represents a paradigm shift in how the technology industry approaches AI performance measurement, moving beyond simplistic floating-point operations metrics to comprehensive evaluation of real-world machine learning workloads that reflect actual deployment scenarios. The benchmark suite encompasses both training and inference categories, each designed to capture different aspects of AI system performance that matter most to practitioners, researchers, and enterprise users seeking optimal solutions for their specific requirements.

MLPerf standardizes evaluation methodology while still accommodating the range of hardware architectures, software frameworks, and optimization techniques found in modern AI systems. Unlike traditional benchmarks that focus on peak theoretical performance, it measures outcomes that translate directly to application performance and operational cost: the time needed to train a model to a target quality, inference throughput and latency at a required accuracy, and, for submissions that include power measurements, energy consumption.

The benchmark’s evolution has been driven by collaboration between leading technology companies, research institutions, and standards organizations, ensuring that evaluation criteria remain relevant as AI workloads continue to evolve and new use cases emerge. This collaborative approach has resulted in benchmark suites that accurately reflect the computational demands of contemporary AI applications while providing forward-looking insights into future performance requirements.

Training Benchmarks and Data Center Performance

MLPerf Training benchmarks focus on evaluating the performance of AI systems during the computationally intensive model training phase, where hardware must process massive datasets while updating billions or trillions of parameters through iterative optimization processes. These benchmarks encompass diverse domains including computer vision, natural language processing, recommendation systems, and reinforcement learning, each presenting unique computational challenges that stress different aspects of hardware architecture and software optimization.
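MLPerf Training scores a submission by the wall-clock time it takes to reach a target quality, not by raw operations per second. The sketch below illustrates that idea only; the log format, the function name `time_to_train`, and the numbers are hypothetical and do not reflect the official logging tools.

```python
from typing import Optional, Sequence, Tuple


def time_to_train(
    eval_log: Sequence[Tuple[float, float]], target_quality: float
) -> Optional[float]:
    """Return the elapsed seconds at the first evaluation that meets the target.

    eval_log holds (elapsed_seconds, quality) pairs in chronological order.
    Returns None when the run never reaches the target quality.
    """
    for elapsed_seconds, quality in eval_log:
        if quality >= target_quality:
            return elapsed_seconds
    return None


# Hypothetical run: validation accuracy measured at four evaluation points.
run = [(600.0, 0.61), (1200.0, 0.70), (1800.0, 0.76), (2400.0, 0.78)]
print(time_to_train(run, target_quality=0.75))  # first point at or above 0.75
```

Under this metric, a system that processes more samples per second but needs more epochs to converge can still lose to a slower system that converges sooner.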

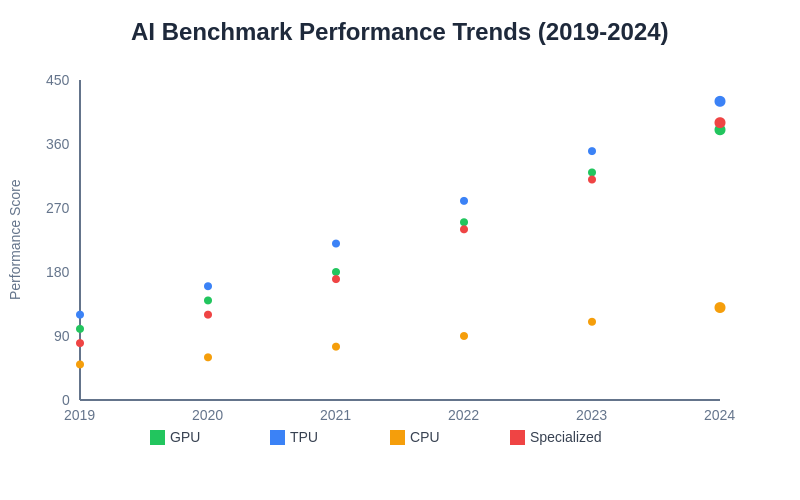

The training benchmark results have consistently demonstrated the superiority of specialized AI accelerators over traditional computing hardware, with modern tensor processing units and graphics processing units delivering order-of-magnitude improvements in training throughput compared to general-purpose processors. These performance advantages stem from architectural optimizations specifically designed for the matrix multiplication operations, memory access patterns, and numerical precision requirements that dominate machine learning training workloads.

Recent MLPerf Training results have highlighted the importance of system-level optimization beyond individual accelerator performance, with the highest-performing submissions leveraging advanced interconnect technologies, optimized software stacks, and sophisticated distributed training strategies. The benchmark results reveal that achieving peak performance requires careful coordination between hardware design, software implementation, and algorithmic optimization, emphasizing the holistic nature of AI system design.
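One way to quantify how much system-level factors matter is to compare the measured speedup from adding accelerators against the ideal linear speedup. The following is a minimal sketch under that assumption; the function name and the timings are invented for illustration and are not taken from any published result.

```python
def scaling_efficiency(
    base_time_s: float,
    base_accelerators: int,
    scaled_time_s: float,
    scaled_accelerators: int,
) -> float:
    """Fraction of ideal linear speedup actually achieved (1.0 is perfect)."""
    measured_speedup = base_time_s / scaled_time_s
    ideal_speedup = scaled_accelerators / base_accelerators
    return measured_speedup / ideal_speedup


# Hypothetical: 8 accelerators train in 3200 s, 64 accelerators in 500 s.
efficiency = scaling_efficiency(3200.0, 8, 500.0, 64)
print(f"scaling efficiency: {efficiency:.0%}")
```

Efficiency below 100% in a calculation like this usually points at communication overhead, input pipeline stalls, or batch-size effects on convergence rather than at the accelerators themselves.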

The relationship between benchmark performance and real-world application success depends on numerous factors that extend beyond raw computational throughput to include development productivity, deployment flexibility, and operational efficiency.

Inference Performance and Edge Computing

MLPerf Inference benchmarks address the critical performance characteristics required for deploying trained models in production environments, where latency, throughput, and energy efficiency often matter more than raw training speed. These benchmarks evaluate hardware performance across diverse deployment scenarios, from high-throughput data center inference serving to latency-sensitive edge computing applications where models must process data within strict real-time constraints.
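Latency and throughput answer different questions, which is why inference results are reported per scenario (MLPerf Inference defines SingleStream, MultiStream, Server, and Offline). The sketch below shows how a tail-latency percentile and a throughput figure could be derived from per-query timings. It uses a simple nearest-rank percentile; the sample latencies and helper names are made up, and this is not how the official load generator computes results.

```python
import math
from typing import Sequence


def percentile_latency(latencies_ms: Sequence[float], percentile: float) -> float:
    """Nearest-rank percentile of the observed per-query latencies."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies_ms)
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    return ordered[rank - 1]


def throughput_qps(num_queries: int, wall_seconds: float) -> float:
    """Queries completed per second of wall-clock time."""
    return num_queries / wall_seconds


# Hypothetical per-query latencies in milliseconds, with one slow outlier.
latencies = [12.0, 15.0, 11.0, 40.0, 13.0, 14.0, 12.5, 13.5, 16.0, 12.2]
print("p50:", percentile_latency(latencies, 50))
print("p90:", percentile_latency(latencies, 90))
print("qps:", throughput_qps(len(latencies), wall_seconds=0.2))
```

The outlier barely moves the median but dominates the highest percentiles, which is why latency-bound scenarios are judged on tail latency rather than on the average.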

The inference benchmark results have revealed significant performance variations between different hardware architectures when optimized for production deployment scenarios. Specialized inference accelerators often demonstrate superior performance per watt compared to training-optimized hardware, reflecting architectural trade-offs that prioritize different aspects of computational efficiency based on deployment requirements and operational constraints.

The emergence of quantization techniques, model compression strategies, and specialized inference optimization frameworks has dramatically improved the performance landscape for edge deployment scenarios. MLPerf Inference results demonstrate how software optimization can unlock substantial performance improvements from existing hardware, enabling deployment of sophisticated AI models on resource-constrained devices that would have been impossible with earlier optimization techniques.
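To make the quantization idea concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is one simple scheme among many, chosen for illustration; production toolchains typically add calibration, per-channel scales, or other refinements. The weight values are invented.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the int8 codes."""
    return [q * scale for q in quantized]


# Hypothetical weights.
weights = [0.5, -1.27, 0.0, 1.27]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, worst_error)
```

Each weight now occupies one byte instead of four, at the cost of a rounding error bounded by half the scale. Benchmark accuracy targets exist to keep that trade-off honest: a quantized submission still has to meet the required quality.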

The benchmark results also highlight the growing importance of batch size optimization, precision selection, and workload scheduling in achieving optimal inference performance. These factors can dramatically impact both throughput and latency characteristics, with optimal configurations varying significantly based on specific deployment requirements and hardware capabilities.
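The batch-size trade-off can be illustrated with a toy cost model in which each batch pays a fixed overhead plus a per-sample cost. The linear model, the parameter values, and the function names below are assumptions made for illustration; real hardware rarely behaves this cleanly.

```python
from typing import Iterable, Optional


def batch_latency_ms(batch_size: int, overhead_ms: float, per_sample_ms: float) -> float:
    """Assumed linear cost model: fixed launch overhead plus per-sample work."""
    return overhead_ms + per_sample_ms * batch_size


def best_batch_size(
    candidates: Iterable[int],
    overhead_ms: float,
    per_sample_ms: float,
    latency_budget_ms: float,
) -> Optional[int]:
    """Pick the candidate with the highest throughput that fits the latency budget."""
    feasible = [
        b for b in candidates
        if batch_latency_ms(b, overhead_ms, per_sample_ms) <= latency_budget_ms
    ]
    if not feasible:
        return None
    return max(feasible, key=lambda b: b / batch_latency_ms(b, overhead_ms, per_sample_ms))


# Hypothetical device: 4 ms overhead per batch, 0.5 ms per sample, 15 ms budget.
choice = best_batch_size([1, 2, 4, 8, 16, 32, 64], 4.0, 0.5, 15.0)
print("chosen batch size:", choice)
```

In this model larger batches always raise throughput because the overhead is amortized, so the latency budget is what caps the batch size. A throughput-only setting would push the batch size as high as memory allows.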

Hardware Architecture Comparison

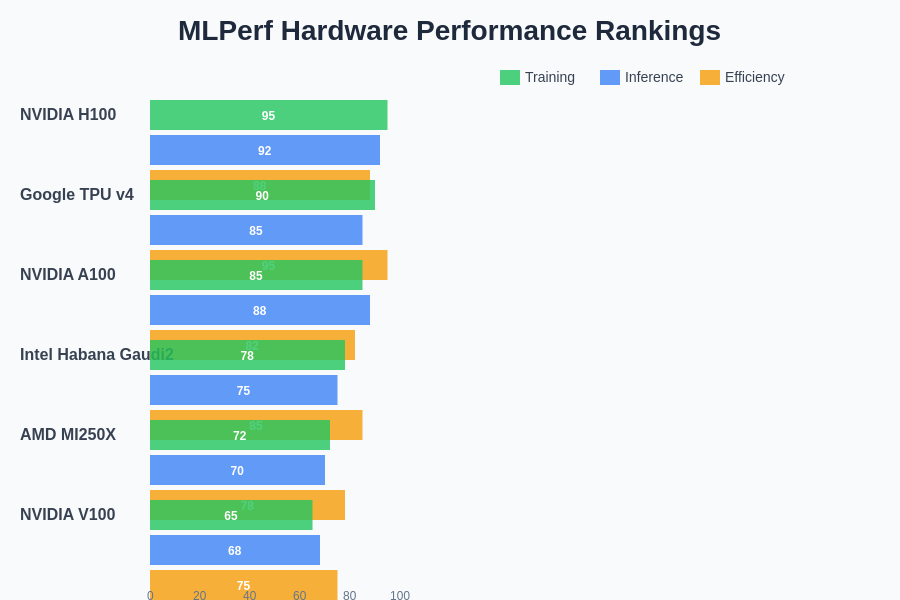

The comprehensive nature of MLPerf benchmarks enables detailed comparison between fundamentally different hardware architectures, revealing the strengths and limitations of various approaches to AI acceleration. Graphics processing units continue to demonstrate strong performance across diverse workloads, benefiting from mature software ecosystems, broad framework support, and extensive optimization efforts from both hardware vendors and the broader development community.

Tensor processing units and other specialized AI accelerators have shown exceptional performance in specific benchmark categories, particularly those that align closely with their architectural optimizations. These specialized processors often achieve superior performance per watt ratios compared to more general-purpose alternatives, though they may sacrifice flexibility and broad applicability in exchange for peak performance in targeted workloads.

Central processing units, while generally outperformed by specialized accelerators in raw throughput metrics, continue to play important roles in AI workloads that require complex control flow, irregular memory access patterns, or integration with traditional computing tasks. The benchmark results demonstrate that optimal AI system design often involves heterogeneous computing approaches that leverage the strengths of multiple processor types working in coordination.

The results also reveal the critical importance of memory subsystem design in AI accelerator performance, with memory bandwidth, capacity, and access patterns often determining overall system performance more than computational throughput capabilities. This insight has driven significant innovation in high-bandwidth memory technologies, on-chip memory hierarchies, and data movement optimization strategies.
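A common way to reason about when memory bandwidth, rather than compute, limits performance is the roofline model: attainable throughput is the smaller of peak compute and memory bandwidth multiplied by arithmetic intensity (operations per byte moved). The sketch below applies that formula to an imaginary accelerator; the hardware figures and workload intensities are assumptions, not measurements.

```python
def attainable_flops(
    peak_flops: float, mem_bandwidth_bytes_per_s: float, flops_per_byte: float
) -> float:
    """Roofline bound: limited by compute or by memory traffic, whichever is lower."""
    return min(peak_flops, mem_bandwidth_bytes_per_s * flops_per_byte)


# Hypothetical accelerator: 100 TFLOP/s peak compute, 2 TB/s memory bandwidth.
PEAK_FLOPS = 100e12
BANDWIDTH = 2e12

# Hypothetical workloads with assumed arithmetic intensities (FLOP per byte).
workloads = [("embedding lookup", 0.5), ("large matrix multiply", 200.0)]
for name, intensity in workloads:
    bound = attainable_flops(PEAK_FLOPS, BANDWIDTH, intensity)
    kind = "memory-bound" if bound < PEAK_FLOPS else "compute-bound"
    print(f"{name}: {bound / 1e12:.1f} TFLOP/s ({kind})")
```

For this imaginary device the crossover sits at 50 FLOP per byte. Workloads below that intensity gain nothing from more compute units and speed up only with more bandwidth or better data reuse.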

The performance landscape revealed by MLPerf benchmarks demonstrates clear differentiation between hardware categories, with specialized AI accelerators consistently outperforming general-purpose processors across most workloads, while revealing important nuances in performance characteristics that inform optimal hardware selection strategies.

Energy Efficiency and Sustainability Metrics

Energy efficiency has emerged as a critical evaluation criterion in MLPerf benchmarks, reflecting growing awareness of the environmental impact and operational costs associated with large-scale AI deployment. The benchmark results reveal significant variations in performance per watt across different hardware architectures, with some specialized processors achieving dramatic efficiency improvements compared to traditional computing platforms.
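Performance per watt is equivalent to work done per joule of energy. A minimal sketch of that arithmetic follows, assuming power is sampled at a fixed interval during the run. The readings and the function name are hypothetical, and this is not the official power measurement procedure.

```python
from typing import Sequence


def samples_per_joule(
    samples_processed: int, power_readings_w: Sequence[float], interval_s: float
) -> float:
    """Work per unit energy, with energy estimated as sum(power) * sampling interval."""
    energy_joules = sum(power_readings_w) * interval_s
    return samples_processed / energy_joules


# Hypothetical 4-second run: 60,000 samples processed, power sampled once per second.
efficiency = samples_per_joule(60_000, [290.0, 300.0, 310.0, 300.0], interval_s=1.0)
print(f"{efficiency:.1f} samples per joule")
```

Comparing systems on this figure rather than on throughput alone can reverse a ranking: a slower device that draws far less power may complete more work per joule.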

The energy efficiency metrics provided by MLPerf have become particularly important for edge computing applications where battery life, thermal constraints, and power delivery limitations directly impact system viability. These constraints have driven innovation in low-power AI accelerator designs, advanced power management techniques, and algorithmic optimizations that reduce computational requirements without sacrificing model accuracy.

Data center applications also benefit significantly from improved energy efficiency, where power consumption directly impacts operational costs and environmental sustainability. The benchmark results have guided procurement decisions toward more efficient hardware platforms while highlighting the importance of system-level optimization in achieving optimal performance per watt ratios.

The sustainability implications of AI hardware performance extend beyond immediate energy consumption to include manufacturing impacts, lifecycle considerations, and the broader environmental footprint of AI infrastructure. MLPerf results provide crucial data for making informed decisions about hardware selection that balance performance requirements with environmental responsibility.

The rapid pace of innovation in AI hardware requires continuous monitoring of benchmark results and performance trends to make optimal technology choices.

Software Framework Optimization

The MLPerf benchmark results demonstrate that hardware performance alone does not determine system capabilities, with software framework optimization playing an equally critical role in achieving peak performance. Different machine learning frameworks exhibit varying performance characteristics on identical hardware platforms, reflecting differences in optimization strategies, memory management approaches, and computational graph execution methodologies.

Framework-specific optimizations have become increasingly sophisticated, with advanced techniques such as automatic mixed precision, dynamic batching, kernel fusion, and memory pool optimization delivering substantial performance improvements across diverse hardware platforms. These optimizations often require deep understanding of both hardware architecture and algorithmic requirements to achieve optimal results.

The benchmark results have also highlighted the importance of compiler optimization in AI workload performance, with advanced compilation techniques enabling significant performance improvements through automated optimization of computational graphs, memory access patterns, and instruction scheduling. These compiler optimizations often bridge the gap between theoretical hardware capabilities and practical application performance.

The evolution of software optimization techniques continues to unlock additional performance from existing hardware platforms, demonstrating the ongoing importance of software development in maximizing AI system capabilities. This trend suggests that performance improvements will continue through software advancement even as hardware development faces increasing physical constraints.

Benchmark Methodology and Standards

The rigorous methodology underlying MLPerf benchmarks ensures fair comparison between different hardware and software configurations while maintaining relevance to real-world applications. The benchmark specifications define precise requirements for model architectures, dataset preprocessing, accuracy targets, and performance measurement methodologies that eliminate common sources of variation that could skew comparative results.

The standardization of benchmark methodology has been crucial for establishing trust in performance comparisons across the industry, enabling informed decision-making based on objective performance data rather than marketing claims or incomplete evaluations. This standardization process requires careful balance between prescriptive requirements that ensure fair comparison and flexibility that accommodates legitimate optimization approaches.

The continuous evolution of benchmark methodology reflects the rapid advancement of AI techniques and the emergence of new application domains that require specialized evaluation criteria. Regular updates to benchmark specifications keep the suite relevant while preserving enough continuity between rounds to support performance trend analysis over time.

The transparency of benchmark methodology and the requirement for detailed submission documentation have fostered a culture of reproducible performance evaluation that benefits the entire AI research and development community. This transparency enables independent verification of results and facilitates knowledge sharing about optimization techniques and best practices.

The historical progression of MLPerf benchmark results reveals consistent performance improvements across hardware categories, driven by both architectural innovation and software optimization advances that continue to push the boundaries of AI system capabilities.

Industry Impact and Adoption Patterns

MLPerf benchmark results have significantly influenced hardware procurement decisions across the technology industry, with organizations using performance data to guide investment in AI infrastructure that aligns with their specific workload requirements and performance objectives. The standardized nature of benchmark results enables confident comparison between different vendor solutions and architectural approaches.

The impact of benchmark results extends beyond immediate purchasing decisions to influence hardware development roadmaps, software optimization priorities, and research directions throughout the AI ecosystem. Vendors actively optimize their platforms for MLPerf performance, driving innovation that benefits the broader community of AI practitioners and researchers.

Academic research has also been influenced by MLPerf benchmarks, with researchers using benchmark results to validate novel optimization techniques, evaluate hardware design proposals, and establish baseline performance expectations for new algorithmic approaches. This integration of benchmarking into research methodology has accelerated the pace of innovation in AI system design.

The adoption of MLPerf as an industry standard has facilitated more informed technical discussions between vendors, customers, and researchers, establishing a common vocabulary and measurement framework that improves communication and collaboration across the AI community.

Emerging Benchmark Categories

The continued evolution of AI applications has driven the development of new benchmark categories that address emerging workload characteristics and deployment scenarios not adequately covered by existing benchmarks. These new categories reflect the growing diversity of AI applications and the specialized requirements of different domains and use cases.

Federated learning is one such area. Distributed training scenarios in which data privacy, network constraints, and heterogeneous hardware configurations shape the workload create optimization challenges that centralized training benchmarks do not capture, so benchmarks for this domain need to evaluate system performance under realistic federated conditions.

Large language model benchmarks have emerged to address the specific computational requirements of transformer-based models that dominate natural language processing applications. These benchmarks capture the unique memory access patterns, attention computation requirements, and scaling characteristics that differentiate language models from other AI workloads.

Edge AI benchmarks continue to evolve to address the growing diversity of edge deployment scenarios, including automotive applications, industrial automation, and mobile devices, each with distinct performance requirements and operational constraints that require specialized evaluation criteria.

Future Directions and Technological Trends

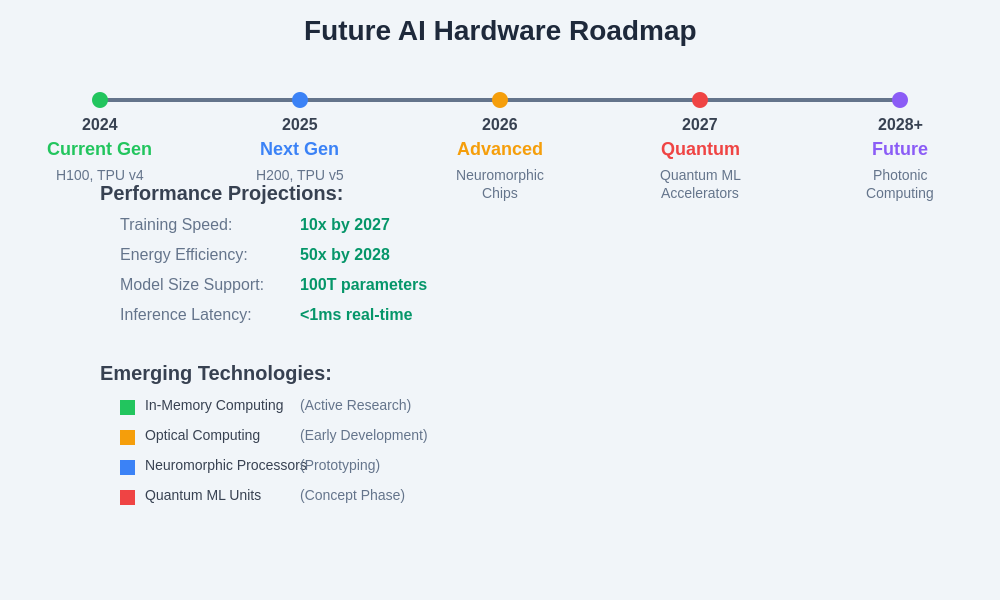

The future evolution of AI benchmarking will likely address emerging computational paradigms such as neuromorphic computing, quantum machine learning, and novel memory technologies that promise to reshape the performance landscape for AI applications. These new technologies require fundamentally different evaluation methodologies that may not be adequately captured by current benchmark frameworks.

The integration of accuracy and robustness metrics into performance evaluation represents an important trend toward more comprehensive system evaluation that considers not just computational efficiency but also model reliability, security, and deployment viability. This holistic approach to benchmark design reflects the maturation of AI deployment practices and the growing importance of production readiness.

Automated benchmark generation and evaluation techniques may enable more comprehensive and frequent performance assessment as the complexity and diversity of AI workloads continue to expand. These automated approaches could enable continuous performance monitoring and optimization that keeps pace with rapid technological advancement.

The development of domain-specific benchmarks that address the unique requirements of specialized applications such as scientific computing, autonomous systems, and real-time control systems will likely become increasingly important as AI adoption expands into new industries and use cases.

The anticipated trajectory of AI hardware development suggests continued performance improvements across multiple dimensions, with emerging technologies promising to deliver new capabilities while addressing current limitations in energy efficiency, memory capacity, and computational flexibility.

Practical Implications for System Design

MLPerf benchmark results provide crucial guidance for practical system design decisions that balance performance requirements, cost constraints, and operational considerations in real-world AI deployments. The benchmark data enables informed trade-off analysis between different hardware options based on objective performance measurements rather than theoretical specifications or marketing claims.

The results demonstrate the importance of considering total system performance rather than focusing exclusively on individual component capabilities, as optimal AI system design often requires careful coordination between processors, memory subsystems, storage infrastructure, and network connectivity. This systems-level perspective is essential for achieving peak performance in production environments.

Power and thermal management considerations revealed by benchmark results have become increasingly important factors in system design, particularly for high-density deployments where cooling costs and power delivery constraints can significantly impact total cost of ownership and operational viability.

The benchmark insights also highlight the critical importance of software optimization and framework selection in achieving optimal performance, suggesting that successful AI deployment requires expertise in both hardware selection and software configuration to realize the full potential of modern AI accelerator platforms.

Conclusion and Industry Outlook

The MLPerf benchmark suite has established itself as an indispensable tool for evaluating AI hardware performance and guiding technology adoption decisions across the industry. The comprehensive nature of these benchmarks provides crucial insights that inform hardware development, software optimization, and deployment strategies that maximize the effectiveness of AI system investments.

The continued evolution of benchmark methodology and the expansion into new application domains ensure that MLPerf will remain relevant as AI technology continues to advance and new use cases emerge. The collaborative development model that underlies MLPerf has proven effective at maintaining benchmark relevance while fostering innovation throughout the AI ecosystem.

The impact of standardized benchmarking extends far beyond immediate performance comparison to encompass improved research reproducibility, enhanced technology transfer between academic and industry settings, and more informed strategic planning for AI infrastructure investments. These broader benefits suggest that the influence of MLPerf will continue to grow as AI adoption expands across industries and applications.

The future success of AI deployment will increasingly depend on the ability to make informed decisions based on objective performance data, making standardized benchmarks like MLPerf essential tools for navigating the complex and rapidly evolving landscape of AI hardware and software technologies.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on publicly available MLPerf benchmark results and industry analysis. Readers should conduct their own research and consider their specific requirements when making hardware procurement or system design decisions. Benchmark results may vary based on specific configurations, software versions, and testing conditions. The effectiveness of different hardware platforms may vary depending on specific use cases, deployment requirements, and optimization efforts.