Software development is at a point where artificial intelligence is changing how teams approach code review and quality assurance. Traditional code review remains essential for maintaining software integrity, but it is constrained by human limits: reviewers struggle to process very large codebases, spot subtle vulnerabilities, and apply consistent standards across diverse teams. AI-powered code review tools address these limits by automating the detection of bugs, security vulnerabilities, performance issues, and coding standard violations, often faster and more consistently than manual review alone.

Integrating machine learning into code review has changed how development teams approach quality control. Teams can hold code to a higher standard of reliability while spending less time and fewer resources on comprehensive analysis and review.

The Foundation of AI-Driven Code Analysis

AI code review tools combine several technologies: natural language processing, pattern recognition, and deep neural networks trained on large repositories of source code. These systems model code semantics, recognize patterns that indicate potential issues, and apply context learned from millions of lines of code across many programming languages and development frameworks.

Traditional static analysis tools rely on predefined rules and patterns. AI-powered code review systems instead learn their detection behavior from data, so they can be updated as new coding patterns, newly discovered vulnerability types, and evolving best practices emerge. This lets them flag subtle issues that human reviewers might miss, particularly in complex codebases where intricate interdependencies and edge cases allow bugs and security vulnerabilities to stay hidden.

The foundation of these systems rests on sophisticated machine learning models that have been trained on extensive datasets comprising both high-quality code examples and documented instances of bugs, security flaws, and performance issues. This comprehensive training enables AI code review tools to develop nuanced understanding of what constitutes good code architecture, proper error handling, secure coding practices, and optimal performance patterns across different programming paradigms and application domains.

Comprehensive Bug Detection and Prevention

Bug detection in modern AI code review tools goes well beyond syntax checking and basic rule validation. These tools analyze code logic, data flow, memory management, and algorithmic complexity. They are well suited to catching subtle logical errors that do not show up during development but can cause serious problems in production under specific conditions or edge cases that human reviewers may not anticipate or test.

AI-powered tools are particularly useful for detecting memory-related bugs, race conditions, null pointer dereferences, buffer overflows, and other low-level issues that traditionally take deep expertise and careful manual analysis to find. They analyze code execution paths, variable lifecycles, and resource allocation patterns to predict likely failure scenarios and highlight code that resembles patterns known to cause problems in production.
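
To make the idea of flagging a risky pattern concrete, here is a minimal sketch of a check for one kind of null dereference. It is a hand-written rule built on Python's standard `ast` module, not a learned model, and it stands in for the far richer analysis described above. The function name `find_possible_none_derefs` and the sample snippet are assumptions made for illustration.

```python
import ast


def find_possible_none_derefs(source: str) -> list[int]:
    """Return line numbers where the result of `.get(key)` is dereferenced directly.

    `.get()` without a default may return None, so `d.get("k").upper()` can
    fail at runtime. This is a deliberately narrow, illustrative rule.
    """
    hits = set()
    for node in ast.walk(ast.parse(source)):
        # Match the shape: <expr>.get(<one argument>).<attribute>
        if (
            isinstance(node, ast.Attribute)
            and isinstance(node.value, ast.Call)
            and isinstance(node.value.func, ast.Attribute)
            and node.value.func.attr == "get"
            and len(node.value.args) < 2  # no explicit default supplied
        ):
            hits.add(node.lineno)
    return sorted(hits)


# Hypothetical code under review (line 1 of the string is blank).
SAMPLE = '''
config = {"name": "svc"}
a = config.get("name").upper()
b = config.get("region", "us").upper()
c = (config.get("zone") or "").upper()
'''

print(find_possible_none_derefs(SAMPLE))  # prints [3]
```

Only the first call is reported: the second supplies a default and the third guards the value with `or`. A production tool would also need to follow values across assignments and function boundaries, which this sketch does not attempt.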

Because these tools can recognize patterns that have historically correlated with bugs, development teams can address likely issues before they surface in deployed applications. This proactive approach complements reactive debugging and can reduce maintenance costs and improve overall software reliability.

Advanced Security Vulnerability Scanning

Security vulnerability detection has become one of the most critical applications of AI-powered code review tools, particularly as cyber threats continue to evolve in sophistication and frequency. These systems leverage extensive knowledge bases of known vulnerabilities, attack patterns, and security best practices to identify potential security flaws that could expose applications to various forms of cyber attacks including injection attacks, cross-site scripting, authentication bypasses, and data exposure incidents.

The security analysis capabilities of AI code review tools extend beyond simple pattern matching to include sophisticated understanding of data flow analysis, privilege escalation paths, input validation weaknesses, and cryptographic implementation flaws. These systems can trace the flow of potentially malicious data through complex application architectures, identifying points where insufficient sanitization or validation could create security vulnerabilities that attackers might exploit.
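
The data flow tracing described above can be sketched as a very small taint tracker. This is an illustrative simplification, not how any particular product works: it only follows top-level assignments in order, and the source, sanitizer, and sink names (`input`, `sanitize`, `execute`) are assumptions chosen for the example.

```python
import ast

SOURCES = {"input"}        # assumed: calls that return untrusted data
SANITIZERS = {"sanitize"}  # hypothetical sanitizer function name
SINKS = {"execute"}        # assumed: call names treated as sensitive sinks


def _call_name(call: ast.Call) -> str:
    """Return the bare function or method name of a call, or '' if unknown."""
    func = call.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute):
        return func.attr
    return ""


def _is_tainted(expr: ast.AST, tainted: set[str]) -> bool:
    """True if the expression is built from untrusted data."""
    if isinstance(expr, ast.Call):
        name = _call_name(expr)
        if name in SANITIZERS:
            return False
        if name in SOURCES:
            return True
    if isinstance(expr, ast.Name):
        return expr.id in tainted
    return any(_is_tainted(child, tainted) for child in ast.iter_child_nodes(expr))


def find_tainted_sinks(source: str) -> list[int]:
    """Return line numbers where tainted data reaches a sink call."""
    tainted: set[str] = set()
    findings = []
    for stmt in ast.parse(source).body:  # top-level statements, in order
        for node in ast.walk(stmt):
            if isinstance(node, ast.Call) and _call_name(node) in SINKS:
                if any(_is_tainted(arg, tainted) for arg in node.args):
                    findings.append(node.lineno)
        # Propagate (or clear) taint through simple assignments.
        if isinstance(stmt, ast.Assign):
            value_tainted = _is_tainted(stmt.value, tainted)
            for target in stmt.targets:
                if isinstance(target, ast.Name):
                    if value_tainted:
                        tainted.add(target.id)
                    else:
                        tainted.discard(target.id)
    return findings


# Hypothetical code under review (line 1 of the string is blank).
SAMPLE = '''
user = input()
query = "SELECT * FROM t WHERE name = '" + user + "'"
db.execute(query)
safe = sanitize(user)
db.execute("SELECT * FROM t WHERE name = ?", safe)
'''

print(find_tainted_sinks(SAMPLE))  # prints [4]
```

The string concatenation on line 3 carries the taint from `user` into `query`, so the call on line 4 is reported. The sanitized value on line 6 is not. Real analyzers track taint through branches, loops, function calls, and object fields, none of which this sketch covers.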

Many AI security scanning tools draw on threat intelligence feeds and regularly updated vulnerability databases, so they can detect newly disclosed security issues and emerging attack vectors soon after the security community learns of them. These updates help code review stay effective against evolving threats and newly identified vulnerability classes.

The integration of security-focused AI analysis into the development workflow enables teams to address security concerns during the coding phase rather than discovering them during dedicated security testing or, worse, after deployment to production environments. This shift-left approach to security significantly reduces the cost and complexity of addressing security vulnerabilities while improving overall application security posture.

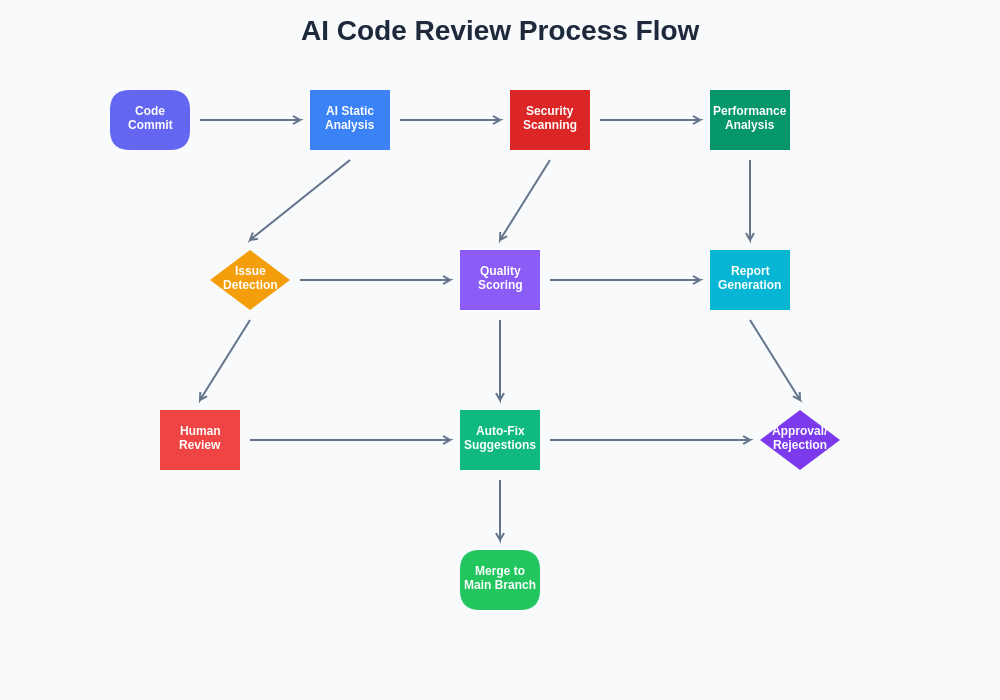

The comprehensive AI code review workflow demonstrates the systematic approach to automated quality assurance, incorporating multiple analysis stages from initial code commits through final approval and deployment. This structured process ensures thorough evaluation of code quality, security, and performance characteristics while maintaining efficient development velocity.

Performance Optimization and Efficiency Analysis

AI code review tools excel at identifying performance bottlenecks, inefficient algorithms, and resource utilization issues that could impact application performance under various load conditions. These systems analyze algorithmic complexity, database query patterns, memory allocation strategies, and computational efficiency to identify code segments that might cause performance degradation in production environments.

The performance analysis capabilities extend to identifying anti-patterns, inefficient data structures, unnecessary computational overhead, and suboptimal resource management practices that could impact application scalability and user experience. AI tools can suggest alternative implementations, recommend more efficient algorithms, and highlight opportunities for performance optimization that might not be immediately apparent to human reviewers.
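
As a rough illustration of complexity analysis, the sketch below uses loop nesting depth as a crude proxy for algorithmic cost and flags functions that nest loops at or beyond a threshold. The threshold, the function names, and the sample code are assumptions. Nesting depth is only a heuristic, since it ignores comprehensions, recursion, and the actual size of the data.

```python
import ast


def max_loop_depth(node: ast.AST, depth: int = 0) -> int:
    """Return the deepest level of nested for/while loops under `node`."""
    deepest = depth
    for child in ast.iter_child_nodes(node):
        is_loop = isinstance(child, (ast.For, ast.While))
        deepest = max(deepest, max_loop_depth(child, depth + 1 if is_loop else depth))
    return deepest


def flag_deep_loops(source: str, threshold: int = 2) -> dict[str, int]:
    """Map function names to loop depth when the depth reaches `threshold`."""
    flagged = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.FunctionDef):
            depth = max_loop_depth(node)
            if depth >= threshold:
                flagged[node.name] = depth
    return flagged


# Hypothetical code under review: a quadratic check and a linear alternative.
SAMPLE = '''
def has_duplicates(items):
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False

def has_duplicates_fast(items):
    return len(set(items)) != len(items)
'''

print(flag_deep_loops(SAMPLE))  # prints {'has_duplicates': 2}
```

The second function shows the kind of alternative a reviewer or tool might suggest: for hashable items, a set-based comparison replaces the pairwise scan.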

These systems demonstrate particular strength in analyzing complex performance scenarios involving concurrent processing, distributed systems, and high-throughput applications where performance issues might only manifest under specific load conditions or in particular deployment environments. The ability to predict performance characteristics based on code analysis enables development teams to address potential scalability issues before they impact end users.

Code Quality and Standards Enforcement

Maintaining consistent code quality and adherence to established coding standards across large development teams presents significant challenges that AI code review tools address through automated analysis and enforcement capabilities. These systems can learn and apply organizational coding standards, style guidelines, and best practices consistently across all code submissions, ensuring uniformity and maintainability regardless of individual developer preferences or experience levels.

AI-powered quality analysis extends beyond superficial formatting and naming conventions to include deeper aspects of code quality such as architectural consistency, design pattern implementation, error handling completeness, and documentation adequacy. These tools can identify deviations from established architectural principles and suggest improvements that align with organizational standards and industry best practices.
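
A small example of automated standards enforcement, assuming an organization requires snake_case function names and a docstring on every function. Both rules and the name `check_standards` are illustrative assumptions. A learned system would infer such conventions from the codebase rather than hard-code them.

```python
import ast
import re

# Assumed convention: optional leading underscores, then lowercase snake_case.
SNAKE_CASE = re.compile(r"^_*[a-z][a-z0-9_]*$")


def check_standards(source: str) -> list[tuple[int, str]]:
    """Return (line, message) pairs for naming and documentation violations."""
    issues = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if not SNAKE_CASE.match(node.name):
                issues.append((node.lineno, f"function '{node.name}' is not snake_case"))
            if ast.get_docstring(node) is None:
                issues.append((node.lineno, f"function '{node.name}' has no docstring"))
    return sorted(issues)


# Hypothetical code under review (line 1 of the string is blank).
SAMPLE = '''
def loadConfig(path):
    """Load configuration from path."""
    return path

def parse_args(argv):
    return argv
'''

for line, message in check_standards(SAMPLE):
    print(f"line {line}: {message}")
```

Run on the sample, the check reports the camelCase name on line 2 and the missing docstring on line 6.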

The learning capabilities of AI code review systems enable them to adapt to organizational-specific quality requirements and evolve their enforcement mechanisms based on team feedback and changing requirements. This adaptability ensures that quality standards remain relevant and practical while maintaining the flexibility to accommodate legitimate exceptions and special circumstances.

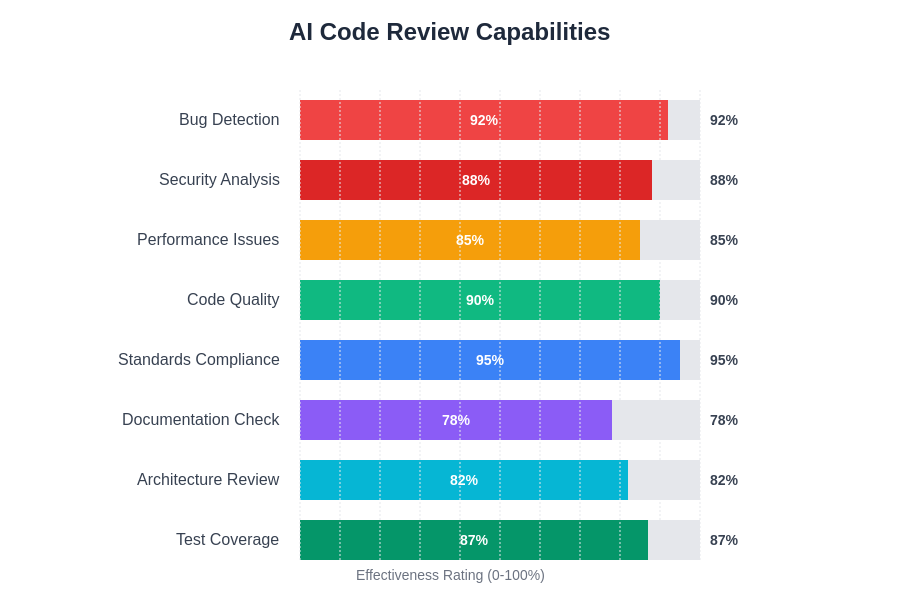

The effectiveness ratings across different AI code review capabilities demonstrate the technology’s strength in various quality assurance dimensions. Standards compliance and bug detection show particularly high effectiveness rates, while emerging areas like architecture review and documentation checking continue to improve as AI models become more sophisticated.

Integration with Development Workflows

The effectiveness of AI code review tools depends significantly on their seamless integration with existing development workflows, version control systems, and continuous integration pipelines. Modern AI review platforms provide sophisticated integration capabilities that enable automated analysis to occur at multiple points in the development lifecycle, from initial code commits through final deployment preparation.

These integration capabilities include real-time analysis during development, automated review of pull requests, continuous monitoring of code repositories, and integration with popular development environments and collaboration platforms. The seamless workflow integration ensures that quality assurance becomes an integral part of the development process rather than a separate, potentially disruptive activity that developers might circumvent or minimize.

The automated nature of AI code review tools enables them to provide immediate feedback on code quality, security, and performance issues, allowing developers to address problems while the context remains fresh and the cost of fixes remains minimal. This immediate feedback loop significantly improves the overall effectiveness of quality assurance processes while reducing the time between issue identification and resolution.
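
One common way to wire this feedback into a pipeline is a gate step that turns findings into review comments and a pass or fail exit code. The sketch below assumes a simple `Finding` record and a four-level severity scale. Both are hypothetical and do not reflect the output format of any specific tool.

```python
from dataclasses import dataclass

# Assumed severity scale, lowest to highest.
SEVERITY_ORDER = {"info": 0, "warning": 1, "error": 2, "critical": 3}


@dataclass(frozen=True)
class Finding:
    path: str
    line: int
    severity: str
    message: str


def gate(findings: list[Finding], fail_at: str = "error") -> tuple[int, list[str]]:
    """Return (exit_code, comments). Exit code 1 blocks the merge."""
    threshold = SEVERITY_ORDER[fail_at]
    blocking = [f for f in findings if SEVERITY_ORDER[f.severity] >= threshold]
    comments = [f"{f.path}:{f.line}: [{f.severity}] {f.message}" for f in findings]
    return (1 if blocking else 0), comments


# Hypothetical findings from an analysis run.
FINDINGS = [
    Finding("app/db.py", 42, "error", "possible SQL injection"),
    Finding("app/util.py", 7, "info", "unused import"),
]

exit_code, comments = gate(FINDINGS)
print(exit_code)  # prints 1
for comment in comments:
    print(comment)
```

In a CI job, the exit code would typically be passed to `sys.exit` so the pipeline fails on blocking findings, while the comments are posted back to the pull request. Making the `fail_at` level configurable lets teams start in advisory mode and tighten the gate later.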

Advanced integration features include customizable notification systems, detailed reporting capabilities, and integration with project management tools that enable teams to track quality metrics, monitor improvement trends, and ensure that identified issues receive appropriate attention and resolution within established timeframes.

Machine Learning Evolution and Adaptation

The continuous learning capabilities of AI code review tools represent one of their most significant advantages over traditional static analysis solutions. These systems continuously refine their detection algorithms based on feedback from development teams, analysis of false positives and negatives, and exposure to new code patterns and development practices across diverse projects and organizations.

The evolutionary nature of machine learning-based code review systems enables them to improve their accuracy and relevance over time, reducing false positive rates while increasing their ability to detect subtle and complex issues that might escape initial detection algorithms. This continuous improvement process ensures that the tools remain effective as development practices evolve and new types of issues emerge.
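
The feedback loop can be approximated with plain counting: record whether reviewers accepted or dismissed each finding, compute per-rule precision, and mute rules that are mostly noise. This is a simplified stand-in for model retraining, and the rule names, thresholds, and feedback data are made up for illustration.

```python
from collections import Counter


def rule_precision(feedback: list[tuple[str, bool]]) -> dict[str, tuple[float, int]]:
    """Map each rule to (precision, sample_count) from reviewer feedback.

    Each feedback item is (rule_name, was_real_issue).
    """
    accepted: Counter = Counter()
    total: Counter = Counter()
    for rule, was_real_issue in feedback:
        total[rule] += 1
        accepted[rule] += int(was_real_issue)
    return {rule: (accepted[rule] / total[rule], total[rule]) for rule in total}


def rules_to_mute(
    feedback: list[tuple[str, bool]],
    min_precision: float = 0.5,
    min_samples: int = 3,
) -> list[str]:
    """Return rules with enough feedback whose precision is below the bar."""
    return sorted(
        rule
        for rule, (precision, count) in rule_precision(feedback).items()
        if count >= min_samples and precision < min_precision
    )


# Hypothetical reviewer feedback: True means the finding was a real issue.
FEEDBACK = [
    ("unused-var", True), ("unused-var", True), ("unused-var", False),
    ("magic-number", False), ("magic-number", False),
    ("magic-number", True), ("magic-number", False),
    ("sql-injection", True),
]

print(rules_to_mute(FEEDBACK))  # prints ['magic-number']
```

The `min_samples` guard keeps a rule from being muted on the basis of one or two dismissals, which matters for rare but serious findings such as the single `sql-injection` report here.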

Organizations implementing AI code review tools benefit from this evolutionary capability through improved detection accuracy, reduced manual review burden, and enhanced alignment between automated analysis results and actual quality concerns identified by human reviewers. The learning systems adapt to organizational-specific patterns and preferences, becoming more effective and relevant to particular development contexts over time.

Collaborative Human-AI Review Processes

The most effective implementations of AI code review tools recognize that artificial intelligence serves as an enhancement to human expertise rather than a replacement for experienced code reviewers. These collaborative approaches leverage the strengths of both AI analysis and human judgment to create comprehensive review processes that combine automated detection capabilities with contextual understanding and creative problem-solving skills that remain uniquely human.

Collaborative review workflows typically involve AI tools performing initial analysis and flagging potential issues, followed by human reviewers focusing their attention on the most critical findings and providing contextual evaluation of recommended changes. This approach maximizes the efficiency of human review time while ensuring that complex architectural decisions and nuanced quality considerations receive appropriate human attention.
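
The triage step in this workflow might look like the following sketch, which ranks findings by severity weighted by model confidence and routes only the top few to a human reviewer. The scoring formula, the weights, and the review budget are assumptions for illustration. Teams would tune them to their own risk tolerance.

```python
# Assumed weights: higher means more severe.
SEVERITY_WEIGHT = {"info": 1, "warning": 2, "error": 3, "critical": 4}


def triage(findings: list[dict], budget: int = 2) -> tuple[list[str], list[str]]:
    """Split finding ids into (needs_human_review, deferred).

    Findings are ranked by severity weight multiplied by model confidence,
    and the `budget` highest-scoring ones go to a human reviewer.
    """
    ranked = sorted(
        findings,
        key=lambda f: SEVERITY_WEIGHT[f["severity"]] * f["confidence"],
        reverse=True,
    )
    ids = [f["id"] for f in ranked]
    return ids[:budget], ids[budget:]


# Hypothetical findings with model confidence scores.
FINDINGS = [
    {"id": "F1", "severity": "critical", "confidence": 0.6},
    {"id": "F2", "severity": "warning", "confidence": 0.9},
    {"id": "F3", "severity": "error", "confidence": 0.9},
    {"id": "F4", "severity": "info", "confidence": 0.99},
]

needs_review, deferred = triage(FINDINGS)
print(needs_review)  # prints ['F3', 'F1']
print(deferred)      # prints ['F2', 'F4']
```

Note that the high-confidence error outranks the lower-confidence critical finding under this formula. Whether that is the right trade-off is exactly the kind of judgment call that stays with the human reviewers.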

The integration of AI analysis with human review processes creates opportunities for knowledge transfer and skill development, as reviewers can learn from AI-identified patterns and incorporate automated insights into their understanding of code quality principles and best practices. This educational aspect of collaborative review enhances the overall capability of development teams while maintaining the critical human elements of code review.

Enterprise-Scale Implementation Considerations

Implementing AI code review tools at enterprise scale requires careful consideration of organizational culture, existing development processes, tool integration requirements, and change management strategies that ensure successful adoption and maximum benefit realization. Large organizations must address challenges related to diverse technology stacks, varying skill levels among development teams, and integration with established quality assurance processes and compliance requirements.

Enterprise implementations typically involve phased rollout strategies that enable gradual adoption, feedback collection, and process refinement before full-scale deployment across all development teams and projects. These approaches allow organizations to identify and address implementation challenges while building confidence and expertise in AI-powered quality assurance methodologies.

The scalability considerations for enterprise AI code review implementations include computational resource requirements, integration complexity with existing development infrastructure, and the need for customization to address organization-specific quality standards and compliance requirements. Successful enterprise implementations often require dedicated teams to manage tool configuration, integration maintenance, and ongoing optimization of analysis algorithms.

Measuring Impact and Return on Investment

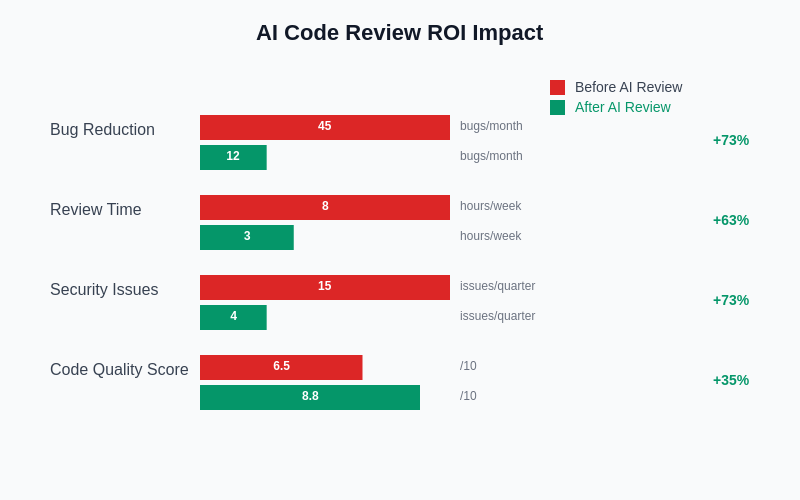

Organizations implementing AI code review tools must establish comprehensive metrics and measurement frameworks to evaluate the effectiveness and return on investment of automated quality assurance initiatives. These measurements typically encompass multiple dimensions including bug detection rates, security vulnerability identification, development velocity improvements, and overall code quality trends over time.

Key performance indicators for AI code review implementations include reduction in production bugs, decreased security incident frequency, improved code maintainability scores, and enhanced developer productivity metrics. Long-term measurements focus on trends in code quality, reduction in technical debt accumulation, and improvements in overall software reliability and performance characteristics.
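
These indicators are usually reported as a percentage change against a baseline period. A minimal sketch follows, with metric names and numbers that are entirely made up for illustration and are not results from any real deployment. It assumes every metric is one where lower is better.

```python
def percent_reduction(before: float, after: float) -> float:
    """Return the percentage reduction from `before` to `after`."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) / before * 100.0


def summarize(baseline: dict[str, float], current: dict[str, float]) -> dict[str, float]:
    """Percentage reduction for every metric present in both periods."""
    return {
        name: round(percent_reduction(baseline[name], current[name]), 1)
        for name in baseline
        if name in current
    }


# Illustrative numbers only: one quarter before adoption, one quarter after.
BASELINE = {"production_bugs": 40, "review_hours": 120, "security_incidents": 5}
CURRENT = {"production_bugs": 30, "review_hours": 90, "security_incidents": 4}

print(summarize(BASELINE, CURRENT))
# prints {'production_bugs': 25.0, 'review_hours': 25.0, 'security_incidents': 20.0}
```

A before and after comparison like this does not isolate the effect of the tool from other changes in the same period, so it is best read alongside longer-term trends.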

The economic impact of AI code review tools extends beyond direct quality improvements to include reduced debugging time, decreased security remediation costs, improved development team efficiency, and enhanced customer satisfaction resulting from higher quality software releases. These broader economic benefits often justify the investment in AI-powered quality assurance tools even when direct quality improvements alone might not provide sufficient return on investment.

The quantified return on investment demonstrates substantial improvements across critical development metrics following AI code review implementation. Bug reduction, review time efficiency, security issue prevention, and overall code quality scores show significant positive trends that translate directly into cost savings and improved development productivity.

Future Directions and Emerging Capabilities

The evolution of AI code review tools continues to accelerate with advances in machine learning techniques, natural language processing capabilities, and integration with emerging development methodologies including DevOps practices, cloud-native architectures, and microservices implementations. Future developments promise even more sophisticated analysis capabilities, improved accuracy in complex scenarios, and enhanced integration with comprehensive development lifecycle management platforms.

Emerging capabilities include predictive quality analysis that can forecast potential quality issues based on development patterns, intelligent test case generation that creates comprehensive test suites based on code analysis, and automated refactoring suggestions that improve code architecture and maintainability. These advanced features represent the next generation of AI-powered development assistance that extends beyond simple issue detection to proactive quality improvement and optimization.

The integration of AI code review tools with broader artificial intelligence ecosystems promises to create comprehensive development assistance platforms that support every aspect of software creation, from initial design through deployment and maintenance. These integrated platforms will leverage shared knowledge bases, collaborative learning algorithms, and unified development workflows to provide seamless, intelligent support throughout the entire software development lifecycle.

The future landscape of AI-powered quality assurance will likely include increased personalization of review processes, enhanced collaboration between multiple AI systems, and deeper integration with project management and business process workflows that align technical quality improvements with organizational objectives and customer requirements.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of AI technologies and their applications in software development quality assurance. Readers should conduct their own research and consider their specific requirements when implementing AI-powered code review tools. The effectiveness of AI code review systems may vary depending on specific use cases, organizational contexts, and implementation approaches.