The exponential growth of artificial intelligence workloads has created unprecedented thermal challenges for data centers and high-performance computing facilities worldwide. As GPU clusters become increasingly dense and powerful, traditional cooling methods are being pushed to their absolute limits, necessitating innovative thermal management solutions that can handle the extreme heat generation of modern AI accelerators. The choice between liquid and air cooling systems has become a critical decision that directly impacts performance, reliability, operational costs, and the overall sustainability of AI infrastructure deployments.

The thermal management revolution in AI hardware represents a fundamental shift from conventional data center cooling approaches toward specialized solutions designed specifically for the unique demands of machine learning and deep learning workloads.

The Thermal Challenge of Modern AI Computing

Contemporary GPU architectures designed for AI workloads generate thermal loads far beyond those of traditional computing applications, with flagship accelerators consuming between 300 and 700 watts per device under full computational load. When these processors are densely packed into server configurations optimized for AI training and inference, the resulting thermal density can challenge even sophisticated cooling infrastructure. The problem is compounded by the continuous operation of AI workloads: sustained high-performance computing requires consistent thermal management, without the periodic idle periods that allow components to recover thermally in traditional computing environments.

The thermal profile of AI workloads differs significantly from that of conventional server applications because of sustained high utilization, massively parallel execution, and the computational intensity of neural network operations. Training large transformer models, including large language models, keeps GPU cores near maximum utilization for extended periods, which demands cooling precise enough to hold optimal operating temperatures without forcing the hardware to give up peak performance.

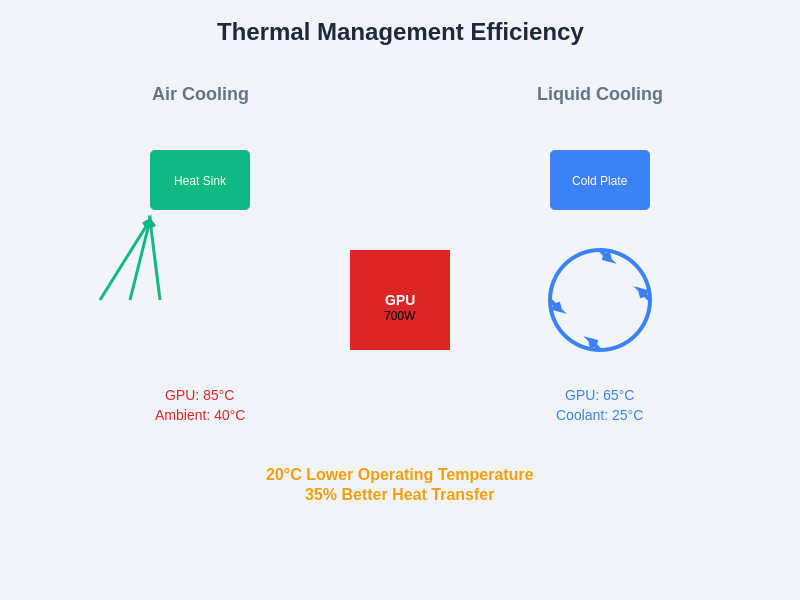

The differences between the two approaches are clearest when air and liquid cooling handle identical GPU thermal loads: liquid cooling transfers heat more effectively, keeping component temperatures significantly lower while sustaining peak performance.

Understanding Air Cooling Fundamentals

Air cooling systems have long served as the backbone of data center thermal management, relying on forced convection to carry heat from computing components to the surrounding environment through carefully managed airflow. Traditional implementations use high-velocity fans to drive air across heat sinks, which are coupled to the chips through thermal interface materials; the moving air carries thermal energy away from critical components and into the data center environment, where HVAC systems remove it.

The fundamental advantage of air cooling lies in its simplicity, reliability, and extensive ecosystem of proven components and maintenance procedures. Air cooling systems require minimal specialized infrastructure beyond standard data center power and environmental controls, making them accessible for organizations with existing facilities and established operational procedures. The technology relies on well-understood physics and benefits from decades of refinement in fan design, heat sink optimization, and airflow management.

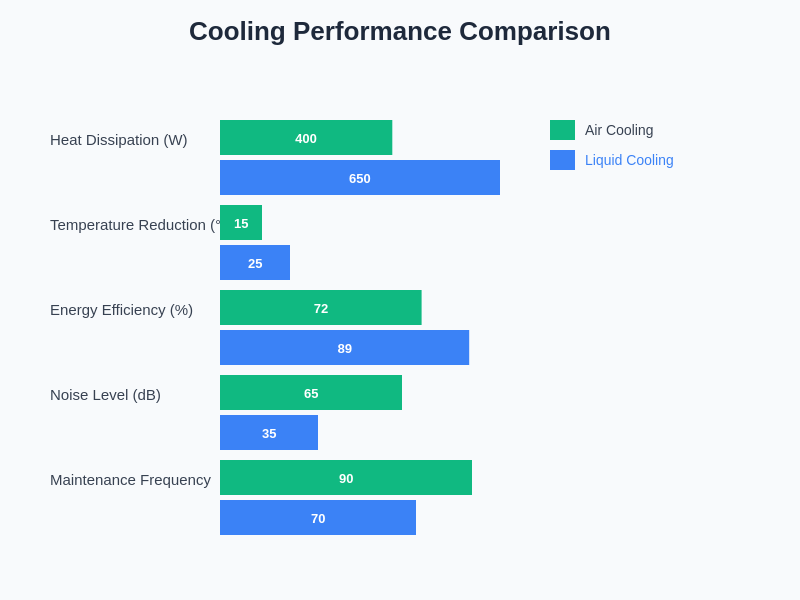

However, the limitations of air cooling become increasingly apparent as thermal loads intensify. Air has a far lower volumetric heat capacity than liquid coolants, so it must move in much larger volumes and at higher velocities to carry away the same amount of heat. Moving that much air increases the energy consumed by cooling infrastructure and can create acoustic problems from high-velocity fans.
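The physics behind this limitation can be made concrete with the steady-state energy balance Q = ρ·c_p·V̇·ΔT, which gives the volumetric flow V̇ needed to carry a heat load Q for a given coolant temperature rise ΔT. The minimal Python sketch below compares air and water for a single 700 W accelerator; the fluid properties are approximate room-temperature values, and the 10 K temperature rise is an assumption chosen for illustration.

```python
"""Volumetric coolant flow needed to carry away a steady heat load.

Energy balance: Q = rho * c_p * V_dot * delta_T, solved for V_dot.
Fluid properties are approximate room-temperature values (assumptions).
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class Fluid:
    name: str
    density_kg_m3: float         # rho
    specific_heat_j_kg_k: float  # c_p

    @property
    def volumetric_heat_capacity(self) -> float:
        """Heat absorbed per cubic metre per kelvin of temperature rise, J/(m^3*K)."""
        return self.density_kg_m3 * self.specific_heat_j_kg_k


AIR = Fluid("air", density_kg_m3=1.2, specific_heat_j_kg_k=1005.0)
WATER = Fluid("water", density_kg_m3=997.0, specific_heat_j_kg_k=4186.0)

M3_S_TO_CFM = 2118.88     # cubic feet per minute in one m^3/s
M3_S_TO_L_MIN = 60_000.0  # litres per minute in one m^3/s


def required_flow_m3_s(heat_w: float, fluid: Fluid, delta_t_k: float) -> float:
    """Flow rate (m^3/s) that absorbs heat_w watts while warming by delta_t_k."""
    if heat_w < 0 or delta_t_k <= 0:
        raise ValueError("heat_w must be >= 0 and delta_t_k must be > 0")
    return heat_w / (fluid.volumetric_heat_capacity * delta_t_k)


if __name__ == "__main__":
    gpu_heat_w = 700.0  # upper end of the per-device range cited earlier
    delta_t_k = 10.0    # assumed coolant temperature rise across the device
    air = required_flow_m3_s(gpu_heat_w, AIR, delta_t_k)
    water = required_flow_m3_s(gpu_heat_w, WATER, delta_t_k)
    print(f"air:   {air * M3_S_TO_CFM:.0f} CFM")
    print(f"water: {water * M3_S_TO_L_MIN:.2f} L/min")
    print(f"air needs ~{air / water:.0f}x the volume of water")
```

With these inputs the sketch reports roughly 123 CFM of air against about 1 L/min of water; the volume ratio of roughly 3,460 is simply the ratio of the two fluids' volumetric heat capacities.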

The integration of AI-driven thermal management systems represents the next evolution in cooling optimization, where machine learning algorithms can predict thermal loads and automatically adjust cooling parameters for maximum efficiency.

Exploring Liquid Cooling Technologies

Liquid cooling represents a paradigmatic shift in thermal management philosophy, utilizing the superior thermal properties of liquids to achieve dramatically improved heat transfer efficiency compared to air-based systems. The fundamental advantage of liquid cooling stems from the significantly higher thermal capacity and thermal conductivity of liquids compared to air, enabling more effective heat extraction from high-performance computing components while requiring substantially less energy for circulation and heat transport.

Modern liquid cooling implementations encompass several distinct approaches: direct-to-chip systems that mount liquid-cooled cold plates on the GPU package, immersion systems that submerge entire computing components in specialized dielectric fluids, and hybrid systems that use liquid cooling for primary heat removal and air cooling for supplementary thermal management. Each approach offers distinct advantages and trade-offs that must be evaluated against specific deployment requirements, performance objectives, and operational constraints.

Direct-to-chip liquid cooling systems provide the most targeted thermal management by establishing direct thermal interfaces between high-performance processors and liquid cooling circuits. These systems typically utilize cold plates or micro-channel heat exchangers that are mounted directly onto GPU surfaces, creating highly efficient thermal pathways that can remove heat at the source before it can spread to surrounding components or contribute to ambient temperature increases within server enclosures.
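One way to see why a cold plate helps is to model the path from die to coolant as thermal resistances in series, so that junction temperature equals the inlet temperature plus power times the total resistance. The sketch below is a simplified model under that assumption: every resistance value and both inlet temperatures are hypothetical placeholders rather than measurements of any product, and it ignores the coolant's own temperature rise and heat-spreading effects.

```python
"""Series thermal-resistance model of a cooled GPU package.

T_junction = T_reference + P * (R_1 + R_2 + ...), where T_reference is the
inlet air or coolant temperature. All resistance values are placeholders.
"""
from typing import Sequence


def junction_temp_c(power_w: float, reference_temp_c: float,
                    resistances_k_per_w: Sequence[float]) -> float:
    """Steady-state junction temperature for heat flowing through resistances in series."""
    if any(r < 0 for r in resistances_k_per_w):
        raise ValueError("thermal resistances must be non-negative")
    return reference_temp_c + power_w * sum(resistances_k_per_w)


# Hypothetical values in K/W; real numbers come from datasheets and measurement.
R_JUNCTION_TO_CASE = 0.02
R_TIM = 0.01           # thermal interface material between package and cooler
R_AIR_HEATSINK = 0.06  # finned heat sink to inlet air
R_COLD_PLATE = 0.03    # cold plate to inlet coolant

AIR_PATH = (R_JUNCTION_TO_CASE, R_TIM, R_AIR_HEATSINK)
LIQUID_PATH = (R_JUNCTION_TO_CASE, R_TIM, R_COLD_PLATE)

if __name__ == "__main__":
    power_w = 700.0
    print(f"air-cooled: {junction_temp_c(power_w, 25.0, AIR_PATH):.1f} C")
    print(f"cold plate: {junction_temp_c(power_w, 30.0, LIQUID_PATH):.1f} C")
```

Because the cold plate sits in the series path, lowering its resistance lowers the junction temperature even when, as assumed here, the coolant enters warmer than the air.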

Immersion cooling represents the most aggressive approach to liquid cooling, completely surrounding computing components with specialized dielectric fluids that provide comprehensive thermal management for entire server assemblies. This approach eliminates the need for traditional air cooling infrastructure within servers while providing uniform thermal management for all components regardless of their individual thermal characteristics or power consumption profiles.

Performance Analysis and Thermal Efficiency

The performance differential between liquid and air cooling systems becomes particularly pronounced when managing the extreme thermal loads generated by modern AI accelerators operating under sustained computational workloads. Liquid cooling systems consistently demonstrate superior thermal performance through their ability to maintain lower component temperatures while supporting higher sustained performance levels, enabling AI workloads to operate at peak computational capacity without thermal throttling or performance degradation.

Comparative measurements suggest that liquid cooling can keep GPU junction temperatures approximately fifteen to twenty-five degrees Celsius lower than equivalent air cooling under identical computational loads. This differential translates directly into performance, because modern GPU architectures use dynamic thermal management that automatically lowers clock speeds as thermal limits approach, preventing component damage and preserving operational reliability.
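The link between temperature and performance can be illustrated with a toy throttling curve. The sketch below assumes a hypothetical piecewise-linear policy (full clock below a threshold, then a linear reduction toward a floor at a hard limit); actual GPU boost and throttling behavior is vendor-specific, and the default clock, threshold, limit, and floor values are invented for illustration.

```python
"""Toy model of thermally driven clock throttling.

Between throttle_start_c and limit_c the clock falls linearly from base_mhz to
min_fraction * base_mhz. Real GPU boost and throttling algorithms are vendor
specific and more complex; every parameter here is hypothetical.
"""


def throttled_clock_mhz(temp_c: float, base_mhz: float = 1800.0,
                        throttle_start_c: float = 83.0, limit_c: float = 95.0,
                        min_fraction: float = 0.5) -> float:
    if not (limit_c > throttle_start_c and 0.0 < min_fraction <= 1.0):
        raise ValueError("need limit_c > throttle_start_c and 0 < min_fraction <= 1")
    if temp_c <= throttle_start_c:
        return base_mhz                 # below the threshold: full clock
    if temp_c >= limit_c:
        return base_mhz * min_fraction  # at or above the limit: clock floor
    # Linear interpolation between full clock and the floor.
    fraction_into_band = (temp_c - throttle_start_c) / (limit_c - throttle_start_c)
    return base_mhz * (1.0 - fraction_into_band * (1.0 - min_fraction))


if __name__ == "__main__":
    for temp in (72.0, 88.0, 96.0):
        print(f"{temp:.0f} C -> {throttled_clock_mhz(temp):.0f} MHz")
```

Under these placeholder settings, 72 °C leaves the clock untouched while 88 °C drops it to 1,425 MHz, which is the mechanism by which a lower operating temperature turns into sustained throughput.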

The sustained performance advantages of liquid cooling become even more significant during extended AI training operations, where thermal accumulation effects can cause air-cooled systems to experience gradually degrading performance as ambient temperatures rise within server enclosures. Liquid cooling systems maintain consistent thermal performance throughout extended operational periods, enabling sustained peak performance that directly translates into reduced training times and improved computational efficiency for AI workloads.
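The gradual drift described above can be sketched with a single-node (lumped-capacitance) thermal model in which the air inlet warms slowly as the enclosure heats up while the liquid loop's supply temperature stays fixed. The Python sketch below integrates C·dT/dt = P − (T − T_in(t))/R with forward Euler; the resistances (0.09 K/W for air, 0.06 K/W for liquid), the 2,000 J/K heat capacity, and both inlet profiles are hypothetical, and a real enclosure would need a multi-node model.

```python
"""Lumped (single-node) thermal model with a drifting inlet temperature.

Integrates C * dT/dt = P - (T - T_in(t)) / R with forward Euler. R, C and
both inlet profiles are hypothetical values chosen for illustration.
"""
from typing import Callable, List


def simulate_temperature(power_w: float, r_k_per_w: float, c_j_per_k: float,
                         inlet_c: Callable[[float], float], duration_s: float,
                         dt_s: float = 1.0) -> List[float]:
    """Return the node temperature at every step, starting at the inlet temperature."""
    tau_s = r_k_per_w * c_j_per_k  # thermal time constant
    if dt_s >= tau_s:
        raise ValueError("dt_s must be well below the time constant R*C")
    temp = inlet_c(0.0)
    history = [temp]
    for step in range(int(duration_s / dt_s)):
        t = step * dt_s
        heat_out_w = (temp - inlet_c(t)) / r_k_per_w  # heat leaving via the cooling path
        temp += dt_s * (power_w - heat_out_w) / c_j_per_k
        history.append(temp)
    return history


def air_inlet_c(t_s: float) -> float:
    """Air inlet warms 0.5 K every 10 minutes as the enclosure heats up."""
    return 25.0 + 0.5 * t_s / 600.0


def liquid_inlet_c(t_s: float) -> float:
    """Coolant supply held constant by the distribution unit."""
    return 30.0


if __name__ == "__main__":
    two_hours_s = 2 * 3600.0
    air = simulate_temperature(700.0, 0.09, 2000.0, air_inlet_c, two_hours_s)
    liquid = simulate_temperature(700.0, 0.06, 2000.0, liquid_inlet_c, two_hours_s)
    for minute in (10, 60, 120):
        i = minute * 60  # one sample per second because dt_s = 1
        print(f"{minute:>3} min  air {air[i]:.1f} C  liquid {liquid[i]:.1f} C")
```

Under these assumptions the liquid-cooled node settles near 72 °C and stays there, while the air-cooled node keeps climbing with its inlet, ending around 94 °C after two hours.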

Across the critical comparison metrics of heat dissipation capacity, temperature reduction, energy efficiency, and noise, liquid cooling shows substantial advantages that translate into operational benefits and better overall system performance. Maintenance requirements are the exception: liquid systems add leak detection and specialized servicing that air cooling does not need.

Infrastructure Requirements and Implementation

The infrastructure requirements for liquid and air cooling systems differ substantially in terms of complexity, specialized equipment, and facility modifications necessary for successful implementation. Air cooling systems benefit from minimal infrastructure requirements beyond standard data center power distribution and environmental controls, making them readily compatible with existing facilities and operational procedures. The primary infrastructure considerations for air cooling involve ensuring adequate airflow pathways, proper hot aisle and cold aisle configurations, and sufficient HVAC capacity to manage the thermal loads transferred from computing equipment to the data center environment.

Liquid cooling implementations require significantly more specialized infrastructure, including dedicated cooling distribution units, liquid circulation pumps, heat exchangers, and monitoring systems designed to manage the additional complexity of liquid cooling circuits. The infrastructure requirements vary considerably based on the specific liquid cooling approach selected, with direct-to-chip systems requiring relatively modest modifications to existing server infrastructure, while immersion cooling systems necessitate complete redesign of server enclosures and rack-level cooling distribution systems.

The implementation complexity of liquid cooling systems extends beyond the immediate cooling infrastructure to encompass specialized maintenance procedures, leak detection systems, and emergency response protocols designed to address potential liquid cooling system failures. These requirements demand additional staff training, specialized maintenance equipment, and enhanced monitoring capabilities that add operational complexity compared to traditional air cooling systems.


Economic Analysis and Total Cost of Ownership

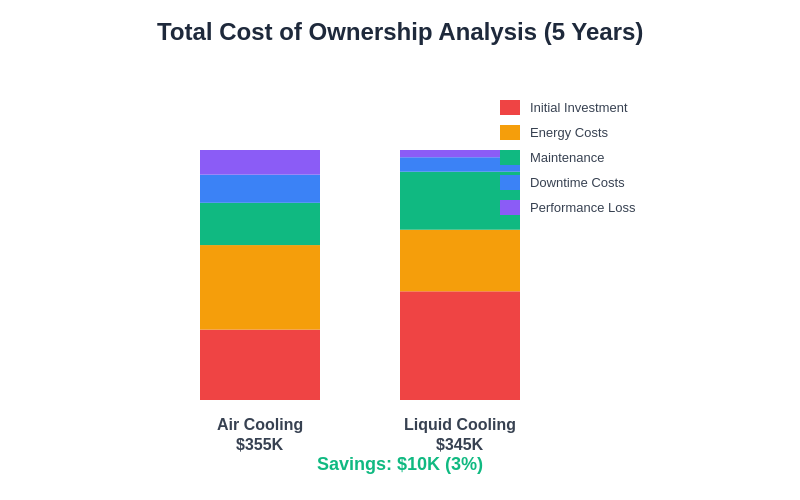

The economic comparison between liquid and air cooling systems encompasses multiple cost factors that extend far beyond initial equipment procurement to include operational expenses, maintenance requirements, energy consumption patterns, and long-term reliability considerations. While liquid cooling systems typically require higher upfront capital investment due to their specialized components and installation requirements, the total cost of ownership analysis reveals a more complex economic landscape where operational efficiency gains and performance improvements can provide substantial long-term value.

Capital cost comparisons typically put liquid cooling at approximately thirty to fifty percent more upfront investment than an equivalent air cooling implementation. This premium reflects the specialized components, installation complexity, and infrastructure modifications that liquid cooling requires, and it must be weighed against the operational and performance benefits the system delivers over its lifespan.

Operational cost analysis reveals significant advantages for liquid cooling systems through reduced energy consumption for thermal management and improved computational efficiency that translates into faster completion times for AI workloads. Liquid cooling systems typically consume twenty to thirty percent less energy for thermal management compared to equivalent air cooling systems while enabling sustained peak performance that reduces the total computational time required for AI training and inference operations.

The reliability and maintenance cost considerations present a more nuanced comparison: air cooling systems benefit from simpler maintenance procedures and widely available replacement components, while liquid cooling systems offer potentially longer operational lifespans and less wear on critical computing components thanks to better thermal management. The economic value of longer component life and reduced thermal stress can offset some or all of the higher initial investment and specialized maintenance requirements of liquid cooling.

The comprehensive five-year total cost of ownership analysis reveals the complex economic dynamics between initial investment requirements and long-term operational benefits. While liquid cooling systems require higher upfront capital expenditure, the operational efficiency gains and reduced energy consumption can provide significant long-term economic advantages for large-scale AI deployments.
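The trade-off between higher capital cost and lower operating cost can be explored with a simple cost model. The sketch below is a minimal illustration, not the analysis behind this article's figures: it assumes cooling energy is a fixed fraction of IT energy, supports an optional discount rate, and uses hypothetical capex, overhead, maintenance, load, and price figures (chosen so that the liquid premium falls inside the thirty to fifty percent range and the cooling-energy saving inside the twenty to thirty percent range cited above). It also ignores throughput gains from avoided throttling, which would favor liquid cooling further.

```python
"""Simple multi-year total cost of ownership (TCO) comparison for cooling.

TCO = capex + sum over years of discounted (cooling energy cost + maintenance).
Cooling energy is modelled as a fixed fraction of IT energy. Every input is a
hypothetical placeholder; throughput gains from avoided throttling are ignored.
"""
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass(frozen=True)
class CoolingOption:
    name: str
    capex_usd: float
    cooling_overhead: float  # cooling energy / IT energy (0.3 means 30%)
    annual_maintenance_usd: float


def annual_opex_usd(opt: CoolingOption, it_load_kw: float, usd_per_kwh: float) -> float:
    cooling_kwh = it_load_kw * opt.cooling_overhead * HOURS_PER_YEAR
    return cooling_kwh * usd_per_kwh + opt.annual_maintenance_usd


def tco_usd(opt: CoolingOption, it_load_kw: float, usd_per_kwh: float,
            years: int = 5, discount_rate: float = 0.0) -> float:
    opex = annual_opex_usd(opt, it_load_kw, usd_per_kwh)
    discounted = sum(opex / (1.0 + discount_rate) ** y for y in range(1, years + 1))
    return opt.capex_usd + discounted


def breakeven_years(base: CoolingOption, alt: CoolingOption,
                    it_load_kw: float, usd_per_kwh: float) -> float:
    """Undiscounted years until alt's lower opex repays its extra capex."""
    savings = (annual_opex_usd(base, it_load_kw, usd_per_kwh)
               - annual_opex_usd(alt, it_load_kw, usd_per_kwh))
    extra_capex = alt.capex_usd - base.capex_usd
    if extra_capex <= 0:
        return 0.0
    if savings <= 0:
        return float("inf")
    return extra_capex / savings


AIR = CoolingOption("air", 1_000_000, 0.40, 50_000)
LIQUID = CoolingOption("liquid", 1_400_000, 0.30, 70_000)

if __name__ == "__main__":
    it_kw, price = 1_000.0, 0.10
    for opt in (AIR, LIQUID):
        print(f"{opt.name:>6}: 5-year TCO ${tco_usd(opt, it_kw, price):,.0f}")
    print(f"break-even: {breakeven_years(AIR, LIQUID, it_kw, price):.1f} years")
```

With these placeholders the liquid option costs about $3.06M over five years against about $3.00M for air, breaking even after roughly 5.9 years; doubling the IT load to 2 MW shortens break-even to about 2.6 years, which illustrates why the economic case for liquid cooling strengthens with scale.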

Environmental Impact and Sustainability

The environmental implications of cooling system selection extend beyond immediate energy consumption to encompass broader sustainability considerations including carbon footprint reduction, resource utilization efficiency, and alignment with corporate environmental responsibility objectives. As organizations increasingly prioritize sustainable technology implementations, the environmental performance of cooling systems has become a critical decision factor that influences both technology selection and long-term strategic planning for AI infrastructure deployments.

Energy efficiency analysis shows clear environmental advantages for liquid cooling, which uses less total energy to deliver equivalent cooling performance. Lower energy use translates into lower carbon emissions, and the absolute reduction is greatest in facilities drawing power from carbon-intensive grids; where a facility already runs on renewable energy, the benefit appears mainly as reduced energy demand and cost.
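Because avoided emissions scale with the carbon intensity of the electricity that is not consumed, the same energy saving is worth very different amounts of CO2 on different grids. The sketch below assumes a hypothetical 100 kW reduction in cooling power sustained all year and two illustrative grid intensities; neither figure comes from the original analysis.

```python
"""Avoided CO2 from a fixed energy saving on grids of different carbon intensity.

The saving and both grid intensities are hypothetical round numbers.
"""


def avoided_tonnes_co2(energy_saved_kwh: float, grid_kg_co2_per_kwh: float) -> float:
    """Tonnes of CO2 not emitted because energy_saved_kwh was never drawn from the grid."""
    if energy_saved_kwh < 0 or grid_kg_co2_per_kwh < 0:
        raise ValueError("inputs must be non-negative")
    return energy_saved_kwh * grid_kg_co2_per_kwh / 1000.0


if __name__ == "__main__":
    saved_kwh = 100.0 * 8760  # 100 kW of cooling power avoided, all year
    for label, intensity in (("low-carbon grid", 0.05), ("carbon-intensive grid", 0.70)):
        print(f"{label:>22}: {avoided_tonnes_co2(saved_kwh, intensity):,.1f} t CO2/year")
```

It reports about 43.8 t of CO2 avoided per year on the low-carbon grid and about 613.2 t on the carbon-intensive one.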

The resource utilization efficiency of liquid cooling systems extends beyond energy consumption to include more effective utilization of computing resources through sustained peak performance capabilities. By enabling AI workloads to operate at optimal performance levels without thermal constraints, liquid cooling systems maximize the computational output per unit of energy consumed, improving the overall environmental efficiency of AI computing operations.

Water usage considerations present important sustainability factors for liquid cooling implementations, particularly in regions where water resources are constrained or where water usage carries significant environmental costs. Modern liquid cooling systems can be designed with closed-loop architectures that minimize water consumption while providing superior thermal performance, addressing sustainability concerns while maintaining operational efficiency advantages.

Scalability and Future Considerations

The scalability characteristics of cooling systems become increasingly critical as AI infrastructure deployments expand to meet growing computational demands and support larger, more complex machine learning models. The ability of cooling systems to scale efficiently with expanding GPU clusters while maintaining thermal performance and operational reliability determines the long-term viability of infrastructure investments and influences strategic planning for future expansion requirements.

Air cooling systems demonstrate excellent scalability characteristics within their thermal capacity limits, offering straightforward expansion procedures that leverage existing infrastructure and operational expertise. The modular nature of air cooling systems enables incremental expansion that can be implemented without major infrastructure modifications or operational disruptions. However, the fundamental thermal capacity limitations of air cooling systems create scaling constraints that become increasingly problematic as GPU densities and thermal loads continue to increase with each generation of AI accelerators.

Liquid cooling systems offer superior scalability for high-density AI deployments through their ability to manage higher thermal loads per unit of infrastructure investment. The superior thermal capacity of liquid cooling enables more aggressive GPU clustering strategies that maximize computational density while maintaining optimal operating temperatures. This scalability advantage becomes particularly important for large-scale AI training operations where computational density directly impacts both performance and economic efficiency.
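The density argument can be made concrete by asking how many GPUs fit in a rack before its cooling budget runs out. The sketch below assumes hypothetical per-rack budgets of 20 kW for air and 80 kW for liquid, 700 W per GPU plus 200 W of supporting hardware, and 64 physical GPU slots per rack; none of these figures comes from the original analysis.

```python
"""How a per-rack cooling budget caps GPU density and drives rack count.

All budgets, power figures and slot counts are hypothetical placeholders.
"""
import math


def gpus_per_rack(rack_cooling_kw: float, gpu_power_w: float,
                  overhead_per_gpu_w: float, max_slots: int) -> int:
    """GPUs one rack can host before hitting its cooling budget or its slot count."""
    per_gpu_w = gpu_power_w + overhead_per_gpu_w  # GPU plus its share of CPUs, NICs, fans
    if per_gpu_w <= 0:
        raise ValueError("per-GPU power must be positive")
    return min(max_slots, int(rack_cooling_kw * 1000.0 // per_gpu_w))


def racks_needed(total_gpus: int, gpus_in_each_rack: int) -> int:
    if gpus_in_each_rack <= 0:
        raise ValueError("rack cannot host any GPUs under this budget")
    return math.ceil(total_gpus / gpus_in_each_rack)


if __name__ == "__main__":
    cluster_gpus = 1024
    for label, budget_kw in (("air", 20.0), ("liquid", 80.0)):
        density = gpus_per_rack(budget_kw, 700.0, 200.0, max_slots=64)
        print(f"{label:>6}: {density} GPUs/rack -> {racks_needed(cluster_gpus, density)} racks")
```

With these inputs a 1,024-GPU cluster needs 47 air-cooled racks of 22 GPUs each but only 16 liquid-cooled racks, where the slot count rather than cooling becomes the limit.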

The future trajectory of AI hardware development suggests continued increases in computational power and thermal generation that will further favor liquid cooling solutions. As AI accelerators incorporate more advanced process technologies and architectural optimizations that increase computational density, the thermal challenges will intensify beyond the practical limits of air cooling systems, making liquid cooling implementations increasingly necessary for cutting-edge AI infrastructure deployments.

Implementation Strategies and Best Practices

Successful implementation of advanced cooling systems requires comprehensive planning that addresses technical requirements, operational considerations, and long-term strategic objectives while ensuring compatibility with existing infrastructure and organizational capabilities. The implementation strategy must carefully balance performance objectives with practical constraints including budget limitations, facility capabilities, and operational expertise requirements.

The evaluation process for cooling system selection should begin with detailed thermal analysis of specific AI workloads and GPU configurations to establish precise cooling requirements and performance objectives. This analysis must consider not only peak thermal loads but also sustained operational patterns, computational duty cycles, and future expansion plans that will influence long-term cooling requirements. The thermal analysis provides the foundation for objective comparison of cooling system alternatives and ensures that selected solutions will meet both current and anticipated future requirements.
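A first pass at this thermal analysis is simply adding up the heat a rack will dissipate. The sketch below sizes for sustained peak load and reports a duty-cycle-weighted average separately, since capacity must cover the peak while energy estimates follow the average; the server configuration, the 0.9 GPU duty cycle, and the 20 kW air-cooling budget are hypothetical placeholders.

```python
"""First-pass thermal requirement for a planned GPU rack.

Sizes cooling for the sustained peak and reports the duty-cycle-weighted
average separately (useful for energy estimates, not capacity). Power figures,
server counts, and the air-cooling budget are hypothetical placeholders.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerConfig:
    gpus: int
    gpu_power_w: float    # sustained draw per GPU under full load
    other_power_w: float  # CPUs, memory, NICs, fans: treated as constant


def rack_heat_kw(server: ServerConfig, servers_per_rack: int,
                 gpu_duty_cycle: float = 1.0) -> float:
    """Heat load in kW; gpu_duty_cycle scales only the GPU portion."""
    if not 0.0 <= gpu_duty_cycle <= 1.0:
        raise ValueError("gpu_duty_cycle must be between 0 and 1")
    per_server_w = server.gpus * server.gpu_power_w * gpu_duty_cycle + server.other_power_w
    return servers_per_rack * per_server_w / 1000.0


if __name__ == "__main__":
    server = ServerConfig(gpus=8, gpu_power_w=700.0, other_power_w=1500.0)
    peak_kw = rack_heat_kw(server, servers_per_rack=4)
    average_kw = rack_heat_kw(server, servers_per_rack=4, gpu_duty_cycle=0.9)
    air_budget_kw = 20.0  # placeholder; the real limit depends on the facility
    print(f"peak {peak_kw:.1f} kW, average {average_kw:.1f} kW")
    print("within air budget" if peak_kw <= air_budget_kw else "exceeds air budget")
```

Here the rack dissipates 28.4 kW at peak (about 26.2 kW on average), exceeding the assumed air budget.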

Risk assessment and mitigation planning represent critical aspects of cooling system implementation, particularly for liquid cooling systems where potential failure modes differ significantly from traditional air cooling failures. Comprehensive risk assessment must evaluate potential failure scenarios, establish appropriate backup systems and emergency procedures, and ensure that staff training and maintenance capabilities align with the selected cooling system requirements.

The integration of monitoring and control systems enables proactive thermal management and early identification of potential issues that could impact cooling performance or system reliability. Advanced monitoring capabilities provide real-time visibility into cooling system performance, enable predictive maintenance scheduling, and support optimization efforts that maximize cooling efficiency while minimizing operational costs and environmental impact.
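As an illustration of such monitoring, the sketch below keeps a short rolling window of coolant loop readings, raises alerts when the averaged return temperature or flow crosses a limit, and computes the heat the loop is actually removing from flow and temperature rise. The reading fields, thresholds, and window size are hypothetical; a production system would also track pressure, leak sensors, and pump status.

```python
"""Rolling-window alerts for a liquid cooling loop.

Averages the last few sensor readings to smooth noise, then checks return
temperature and flow against limits. Sensor fields and thresholds are
hypothetical placeholders.
"""
from collections import deque
from dataclasses import dataclass
from statistics import fmean
from typing import Deque, List

WATER_VOLUMETRIC_HEAT_CAPACITY = 997.0 * 4186.0  # J/(m^3*K), approximate


@dataclass(frozen=True)
class LoopReading:
    supply_c: float    # coolant temperature entering the rack
    return_c: float    # coolant temperature leaving the rack
    flow_l_min: float  # volumetric flow rate


def heat_removed_kw(r: LoopReading) -> float:
    """Heat carried off by the loop: Q = rho * c_p * V_dot * (T_return - T_supply)."""
    flow_m3_s = r.flow_l_min / 60_000.0
    return WATER_VOLUMETRIC_HEAT_CAPACITY * flow_m3_s * (r.return_c - r.supply_c) / 1000.0


class CoolantLoopMonitor:
    def __init__(self, window: int = 5, max_return_c: float = 45.0,
                 min_flow_l_min: float = 20.0) -> None:
        self._readings: Deque[LoopReading] = deque(maxlen=window)
        self.max_return_c = max_return_c
        self.min_flow_l_min = min_flow_l_min

    def update(self, reading: LoopReading) -> List[str]:
        """Record a reading and return any alerts for the current window."""
        self._readings.append(reading)
        alerts: List[str] = []
        if fmean(r.return_c for r in self._readings) > self.max_return_c:
            alerts.append("return temperature above limit")
        if fmean(r.flow_l_min for r in self._readings) < self.min_flow_l_min:
            alerts.append("coolant flow low: check pumps and leak sensors")
        return alerts


if __name__ == "__main__":
    monitor = CoolantLoopMonitor(window=3)
    readings = (
        LoopReading(supply_c=30.0, return_c=40.0, flow_l_min=30.0),
        LoopReading(supply_c=30.0, return_c=41.0, flow_l_min=12.0),
        LoopReading(supply_c=30.0, return_c=43.0, flow_l_min=8.0),
    )
    for reading in readings:
        print(f"{heat_removed_kw(reading):.1f} kW removed", monitor.update(reading))
```

The window smooths sensor noise at the cost of detection latency: in the example, the flow alert fires only on the third reading, once the average falls below 20 L/min. Smaller windows react faster but alert more readily on noise.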

Conclusion and Strategic Recommendations

The selection between liquid and air cooling systems for AI GPU clusters represents a strategic decision that requires careful evaluation of performance requirements, economic considerations, and long-term infrastructure objectives. While air cooling systems continue to provide viable solutions for moderate thermal loads and organizations with established air cooling expertise, the superior thermal performance and efficiency advantages of liquid cooling systems make them increasingly attractive for high-performance AI deployments where sustained peak performance is critical.

The economic analysis supports liquid cooling for organizations running large-scale AI workloads, where operational efficiency gains and performance improvements justify the higher initial investment and specialized operational requirements. The total cost of ownership advantages of liquid cooling grow with the scale of AI operations and are most pronounced where sustained high-performance computing is essential for competitive advantage.

Environmental and sustainability considerations further support liquid cooling adoption for organizations committed to reducing their environmental impact while maximizing computational efficiency. The superior energy efficiency and resource utilization characteristics of liquid cooling systems align with sustainability objectives while providing the thermal performance necessary to support demanding AI workloads.

The future trajectory of AI hardware development and the increasing thermal challenges of next-generation accelerators suggest that liquid cooling adoption will become increasingly necessary for organizations seeking to deploy cutting-edge AI infrastructure. Early adoption of liquid cooling technologies provides valuable experience and infrastructure development that positions organizations for successful expansion as AI computational requirements continue to evolve and intensify.

Disclaimer

This article is for informational purposes only and does not constitute professional engineering or technical advice. The views expressed are based on current understanding of cooling technologies and their applications in AI infrastructure deployments. Readers should conduct thorough evaluation of their specific requirements and consult with qualified engineers and technical specialists when implementing cooling system modifications. Cooling system performance may vary significantly based on specific hardware configurations, facility conditions, and operational requirements.