The entertainment and media industry is being reshaped by AI-powered dubbing, which combines automatic translation with voice synthesis. The technology is changing how content creators approach global distribution: audio and video can now be localized into multiple languages quickly while keeping natural speech patterns and emotional delivery, qualities that previously required professional voice actors and lengthy production schedules.

Advances in machine learning, natural language processing, and audio synthesis have converged to give content creators new ways to reach international audiences, lowering the barriers of language, cost, and production complexity that have historically limited global media expansion.

The Evolution of Voice Technology and Translation

AI-powered dubbing draws on decades of research in several interconnected fields of artificial intelligence and audio engineering. Traditional dubbing required professional translators, voice actors, directors, and audio engineers, and for feature-length content the cost often exceeded hundreds of thousands of dollars. Projects typically took several months from initial translation to final audio mix, delaying global releases and making multilingual versions economically unviable for many smaller production companies.

Neural machine translation was the first major breakthrough in automating the dubbing pipeline, converting source-language scripts into target languages in seconds and with far better fluency and contextual accuracy than earlier statistical systems. Built on transformer architectures and trained on large multilingual datasets, these models can often preserve not only literal meaning but also idiomatic expressions and emotional tone, which are crucial for maintaining the artistic integrity of the original across languages and cultures.

Technological Foundations of AI Dubbing Systems

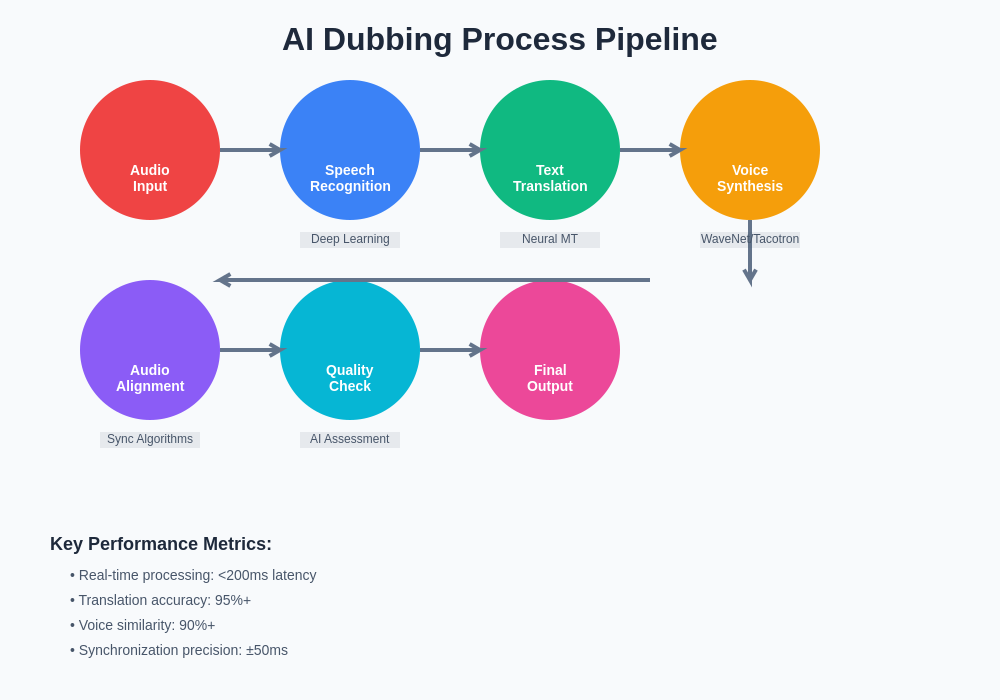

Modern AI dubbing platforms chain several technologies together to produce multilingual audio that, for many kinds of content, approaches the quality of human-performed dubbing. The first stage is speech recognition, which transcribes the dialogue in the source audio track even when it is mixed with background music, sound effects, and multiple speakers. These models are trained on diverse audio data so they can handle the range of accents, speaking styles, and recording conditions found in professional media production.
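Before transcription, many pipelines first locate the stretches of audio that contain speech. The Python sketch below shows the simplest version of that idea, an energy-based voice activity detector; the frame size and threshold are illustrative assumptions, not values from any particular product. It also shows why noisy mixes are hard: anything loud enough, including music or explosions, crosses a fixed energy threshold, which is one reason production systems rely on trained models instead.

```python
import math
from typing import List, Sequence


def frame_energies(samples: Sequence[float], frame_size: int) -> List[float]:
    """RMS energy of each complete, non-overlapping frame (a trailing partial frame is dropped)."""
    energies = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        energies.append(math.sqrt(sum(s * s for s in frame) / frame_size))
    return energies


def detect_speech(samples: Sequence[float], frame_size: int = 160,
                  threshold: float = 0.1) -> List[bool]:
    """Naive energy-based voice activity detection.

    Marks a frame as "speech" when its RMS energy exceeds a fixed threshold.
    frame_size=160 is 10 ms at 16 kHz; both defaults are illustrative only.
    """
    return [energy > threshold for energy in frame_energies(samples, frame_size)]


if __name__ == "__main__":
    sample_rate = 16_000
    silence = [0.0] * 160
    # A 220 Hz tone stands in for a voiced sound.
    tone = [0.5 * math.sin(2 * math.pi * 220 * n / sample_rate) for n in range(160)]
    hiss = [0.01] * 160  # low-level background noise
    print(detect_speech(silence + tone + hiss))  # [False, True, False]
```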

The translation stage uses neural machine translation models that go beyond word-for-word conversion: they use context to keep terminology consistent across dialogue sequences and adapt register to each character and situation. Models fine-tuned on entertainment content handle dialogue-specific challenges better, such as keeping a character's voice consistent, preserving humor and wordplay, and adapting cultural references for the target audience without losing narrative coherence or emotional impact.
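One common way to enforce this kind of consistency is a glossary check: character names and invented terms get an agreed target-language rendering, and any segment whose translation omits it is flagged. The sketch below assumes a simple dictionary glossary (the names in it are made up) and plain case-insensitive substring matching; real tools also handle inflection and word boundaries, which this deliberately ignores.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Segment:
    source: str       # source-language line
    translation: str  # machine-translated line


def glossary_violations(segments: List[Segment],
                        glossary: Dict[str, str]) -> List[Tuple[int, str, str]]:
    """Return (segment index, source term, expected target term) for every
    segment where a glossary term occurs in the source but its agreed
    rendering is missing from the translation.

    Matching is a case-insensitive substring test, so inflected forms or
    partial-word matches are not handled.
    """
    problems = []
    for index, segment in enumerate(segments):
        source = segment.source.lower()
        target = segment.translation.lower()
        for term, expected in glossary.items():
            if term.lower() in source and expected.lower() not in target:
                problems.append((index, term, expected))
    return problems


# Hypothetical glossary and dialogue.
glossary = {"Captain Reyes": "Capitana Reyes", "the Ember": "la Brasa"}
segments = [
    Segment("Captain Reyes, the Ember is gone.", "Capitana Reyes, la Brasa ha desaparecido."),
    Segment("Where is the Ember?", "¿Dónde está el Ascua?"),
]
print(glossary_violations(segments, glossary))  # [(1, 'the Ember', 'la Brasa')]
```

Here the second line renders "the Ember" differently from the agreed term, so it would be queued for a translator to check.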

The dubbing pipeline illustrated above combines these technologies in sequence to transform source audio into multilingual output, with quality-control checks at each stage of the workflow.
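As a rough structural sketch (not any vendor's actual architecture), the pipeline can be modeled as a list of stages that each take and return a list of time-stamped segments. The three stage functions below are hypothetical stand-ins that use lookup tables in place of real speech recognition, translation, and voice synthesis models, and the final mixing stage is omitted.

```python
from dataclasses import dataclass, replace
from typing import Callable, List


@dataclass(frozen=True)
class DubSegment:
    start: float            # seconds into the source track
    end: float
    speaker: str
    text: str = ""          # source transcript
    translation: str = ""   # target-language text
    audio: bytes = b""      # synthesized speech (placeholder bytes here)


Stage = Callable[[List[DubSegment]], List[DubSegment]]


def run_pipeline(segments: List[DubSegment], stages: List[Stage]) -> List[DubSegment]:
    """Feed the output of each stage into the next, in order."""
    for stage in stages:
        segments = stage(segments)
    return segments


# Hypothetical stand-ins for real models.
def transcribe(segments: List[DubSegment]) -> List[DubSegment]:
    transcripts = {0.0: "Hello there.", 1.5: "Good to see you."}  # keyed by start time
    return [replace(s, text=transcripts[s.start]) for s in segments]


def translate(segments: List[DubSegment]) -> List[DubSegment]:
    table = {"Hello there.": "Hola.", "Good to see you.": "Me alegra verte."}
    return [replace(s, translation=table[s.text]) for s in segments]


def synthesize(segments: List[DubSegment]) -> List[DubSegment]:
    # A real system would return audio rendered in the cloned voice of `speaker`.
    return [replace(s, audio=s.translation.encode("utf-8")) for s in segments]


source = [DubSegment(0.0, 1.2, "A"), DubSegment(1.5, 3.0, "B")]
dubbed = run_pipeline(source, [transcribe, translate, synthesize])
print([s.translation for s in dubbed])  # ['Hola.', 'Me alegra verte.']
```

Keeping segments immutable and stages interchangeable makes it straightforward to insert a quality-check or human-review stage anywhere in the chain.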

Voice synthesis is arguably the most technically challenging part of AI dubbing: it must generate natural-sounding speech that matches not only the translated text but also the emotional expression, timing, and vocal characteristics of the original performance.

Voice Cloning and Synthesis Technologies

The most striking capability of current AI dubbing systems is creating synthetic voices that retain the vocal characteristics and expressiveness of the original performers while speaking a different language. Voice cloning uses neural networks trained on audio samples of a speaker to capture the patterns that define that speaker’s vocal identity: pitch variation, speech rhythm, accent, and emotional inflection.

These models can reproduce not only the fundamental frequency of a source voice but also its harmonic structure, breathing patterns, and the other fine details that make a vocal performance sound authentic. Current systems can keep a synthetic voice consistent across extended dialogue sequences while varying emotional state, speaking speed, and dramatic intensity, without losing the qualities that make the voice distinctive and recognizable.
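Many commercial text-to-speech engines accept SSML (the W3C Speech Synthesis Markup Language), whose prosody element gives coarse control over rate, pitch, and volume. The sketch below maps emotion labels to prosody settings; the mapping and its values are illustrative assumptions, support for individual attributes varies by engine, and modern expressive models mostly learn emotional delivery from data rather than from markup like this.

```python
import xml.etree.ElementTree as ET

# Hypothetical emotion -> prosody mapping; values follow SSML 1.1 syntax
# (percentages for rate, relative percentages for pitch, dB offsets for volume).
PROSODY_BY_EMOTION = {
    "neutral": {"rate": "100%"},
    "excited": {"rate": "115%", "pitch": "+10%", "volume": "+3dB"},
    "sad": {"rate": "85%", "pitch": "-10%", "volume": "-3dB"},
}


def to_ssml(text: str, emotion: str = "neutral", lang: str = "es-ES") -> str:
    """Wrap a translated line in an SSML document with emotion-dependent prosody."""
    speak = ET.Element("speak", {
        "version": "1.1",
        "xmlns": "http://www.w3.org/2001/10/synthesis",
        "xml:lang": lang,
    })
    prosody = ET.SubElement(speak, "prosody", PROSODY_BY_EMOTION[emotion])
    prosody.text = text  # ElementTree escapes special characters such as & and <
    return ET.tostring(speak, encoding="unicode")


print(to_ssml("No puedo creerlo.", emotion="sad"))
```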

Voice synthesis models are trained on thousands of hours of speech to learn the mapping from linguistic content (text or phonemes) to acoustic output, typically spectrogram frames that a vocoder then converts into a waveform. This lets them generate natural, emotionally appropriate speech for new text. Some systems can also match the acoustic characteristics of different recording environments, so that dubbed dialogue keeps consistent audio quality and spatial character throughout a production.

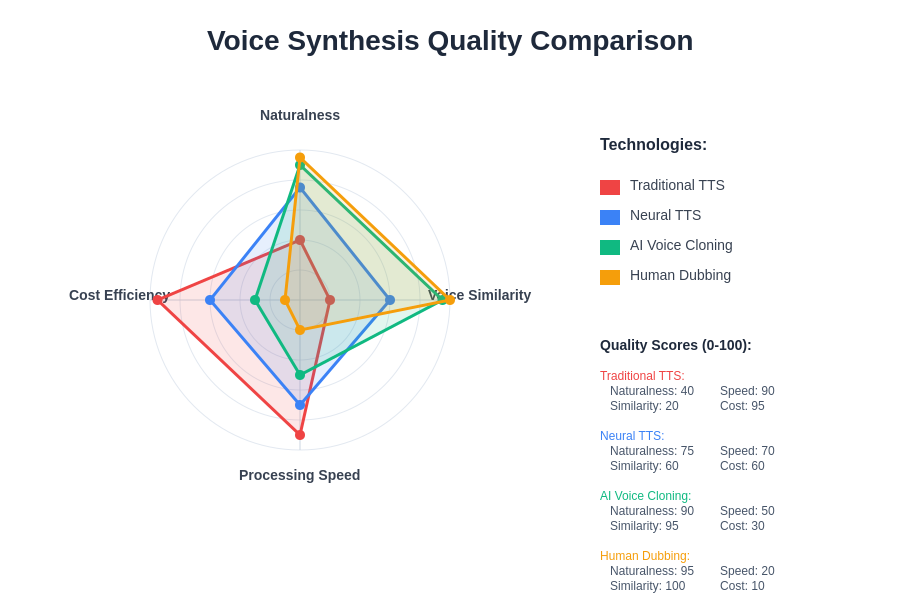

The comparison of voice synthesis technologies in the figure above shows AI voice cloning approaching human-level scores on both naturalness and voice-similarity metrics, while offering substantial advantages in processing speed and cost over traditional dubbing methods.
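Voice-similarity metrics of this kind are commonly computed as the cosine similarity between speaker embeddings, fixed-length vectors that a speaker-verification model produces for the original and the cloned voice. The sketch below shows only the comparison step; the three-dimensional vectors are made-up toy values (real embeddings have hundreds of dimensions), and naturalness is typically rated separately by human listeners.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embeddings: 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / norm


# Toy embeddings (illustrative values, not from a real model).
original_voice = [0.20, 0.90, 0.40]
cloned_voice = [0.25, 0.85, 0.45]
different_speaker = [0.90, -0.10, 0.30]

print(round(cosine_similarity(original_voice, cloned_voice), 3))
print(round(cosine_similarity(original_voice, different_speaker), 3))
```

A good clone should score close to 1.0 against the original, and clearly higher than an unrelated speaker does.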

Real-Time Processing and Synchronization

One of the most technically demanding aspects of AI dubbing is synchronizing translated audio with the existing picture, particularly lip movements and facial expressions. Traditional dubbing often required adapting the script to match mouth movements, sometimes changing dialogue in ways that affected narrative coherence or character development. AI dubbing systems address this with timing analysis that automatically adjusts speech rate and pause length so the translated line fits the on-screen timing, ideally without compromising translation accuracy or emotional delivery.
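A simple form of this timing adjustment is to compute how much the synthesized line must be sped up or slowed down to fit the original line's time slot. In the sketch below, the acceptable range of 0.9x to 1.2x is a hypothetical threshold chosen for illustration; lines that fall outside it are clamped and flagged so a translator can shorten or lengthen the text instead of distorting the voice.

```python
from dataclasses import dataclass


@dataclass
class FitResult:
    rate: float           # playback speed factor; >1.0 means speak faster
    needs_rewrite: bool   # True if the ideal rate fell outside the allowed range


def fit_to_slot(synth_seconds: float, slot_seconds: float,
                min_rate: float = 0.9, max_rate: float = 1.2) -> FitResult:
    """Speed factor that makes `synth_seconds` of speech fill `slot_seconds`.

    The ideal factor is synth_seconds / slot_seconds. It is clamped to
    [min_rate, max_rate] (hypothetical limits) because large speed changes
    sound unnatural; clamped lines are flagged for script adaptation.
    """
    if slot_seconds <= 0 or synth_seconds <= 0:
        raise ValueError("durations must be positive")
    ideal = synth_seconds / slot_seconds
    rate = min(max(ideal, min_rate), max_rate)
    return FitResult(rate=rate, needs_rewrite=rate != ideal)


print(fit_to_slot(2.2, 2.0))  # slightly long line: speed up by about 10%
print(fit_to_slot(3.0, 2.0))  # far too long: clamped to 1.2 and flagged
```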

Real-time processing has enabled live dubbing for broadcast content, streaming media, and interactive applications. These systems process incoming audio in short chunks and pipeline the stages (speech recognition, translation, voice synthesis, and audio mixing), so that each chunk moves on as soon as it is ready; this keeps latency low enough for the multilingual output to follow the natural flow and timing of the original.
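The key idea behind low-latency live dubbing is that each chunk flows through every stage as soon as it is available, instead of waiting for the whole input. The Python generator sketch below demonstrates that ordering with hypothetical stand-in stages (a lookup-table translator and a fake synthesizer, with chunks given as already-transcribed words). Note that generators interleave stages on a single thread; production systems additionally run the stages concurrently on separate threads, processes, or devices.

```python
from typing import Callable, Iterable, Iterator, List


def source(chunks: Iterable[str], log: List[str]) -> Iterator[str]:
    """Yield incoming chunks one at a time, recording when each arrives."""
    for chunk in chunks:
        log.append(f"read {chunk}")
        yield chunk


def stage(name: str, stream: Iterator[str], fn: Callable[[str], str],
          log: List[str]) -> Iterator[str]:
    """Apply `fn` to each chunk as soon as the upstream stage produces it."""
    for item in stream:
        result = fn(item)
        log.append(f"{name} {result}")
        yield result


log: List[str] = []
table = {"hello": "hola", "friend": "amigo"}  # stand-in for a translation model
stream = source(["hello", "friend"], log)
stream = stage("translate", stream, lambda text: table.get(text, text), log)
stream = stage("synthesize", stream, lambda text: f"<{text}.wav>", log)
for audio in stream:
    log.append(f"play {audio}")

print(log)
```

The log shows the first chunk being played before the second chunk is even read, which is what bounds latency by the chunk size rather than by the length of the program.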

Synchronization algorithms also analyze visual cues in the video to tune the timing and pacing of synthetic speech, so that translated dialogue looks natural and believable on screen. More advanced systems adjust vocal emphasis and intonation to match facial expressions and body language, creating a more cohesive viewing experience that preserves the original artistic intent across language versions.

Quality Assurance and Human Oversight

Despite these capabilities, maintaining high quality requires quality assurance that combines automated analysis with human oversight and creative input. AI systems excel at consistency and efficiency but can struggle with subtle cultural references, complex wordplay, or highly emotional scenes that call for nuanced interpretation and creative adaptation. Professional dubbing workflows therefore increasingly use a hybrid approach: AI handles the initial processing, and human experts get tools to refine and enhance the result.

Quality assurance systems use multiple metrics to assess translation accuracy, vocal fidelity, synchronization precision, and overall listening quality. Automated checks can catch problems such as unnatural pronunciation, timing inconsistencies, or translation errors that could confuse or distract viewers. Human reviewers can then concentrate on the flagged segments, making more efficient use of creative resources without lowering quality standards.
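The automated flagging step can be as simple as a set of rule-based checks run over every segment, with anything flagged routed to a human reviewer. The checks and thresholds below (a 10% duration overrun, 20 characters per second of speech) are hypothetical examples rather than an industry standard. Flags mark lines for attention, not automatic correction, because some flagged lines, such as an "OK." that is identical in both languages, are actually fine.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class QASegment:
    source: str
    translation: str
    slot_seconds: float   # time available on screen for the line
    synth_seconds: float  # duration of the synthesized line


def qa_flags(seg: QASegment, max_overrun: float = 0.10,
             max_chars_per_sec: float = 20.0) -> List[str]:
    """Return human-readable reasons this segment needs review (empty if none)."""
    flags = []
    translation = seg.translation.strip()
    if not translation:
        flags.append("empty translation")
    elif translation == seg.source.strip():
        flags.append("possibly untranslated")
    if seg.synth_seconds > seg.slot_seconds * (1 + max_overrun):
        flags.append("audio overruns slot")
    if seg.synth_seconds > 0 and len(translation) / seg.synth_seconds > max_chars_per_sec:
        flags.append("speech rate too fast")
    return flags


def review_queue(segments: List[QASegment]) -> List[Tuple[int, List[str]]]:
    """Indices and reasons for every segment a human should check."""
    queue = []
    for index, seg in enumerate(segments):
        flags = qa_flags(seg)
        if flags:
            queue.append((index, flags))
    return queue


segments = [
    QASegment("Run!", "¡Corre!", slot_seconds=0.8, synth_seconds=0.7),
    QASegment("We have to leave now.", "Tenemos que irnos ahora mismo, ya.",
              slot_seconds=1.5, synth_seconds=2.1),
    QASegment("OK.", "OK.", slot_seconds=0.5, synth_seconds=0.4),
]
print(review_queue(segments))
# [(1, ['audio overruns slot']), (2, ['possibly untranslated'])]
```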

This iterative refinement lets AI dubbing systems improve continuously through feedback loops that combine automated performance metrics with human creative judgment, producing increasingly nuanced multilingual content that meets professional production standards.

Applications Across Entertainment Industries

The impact of AI dubbing extends across the entertainment industry and beyond, from Hollywood blockbusters to independent streaming content, educational materials, and corporate communications. Major streaming platforms have begun adopting AI dubbing to localize their catalogs for international markets more quickly, making simultaneous global releases feasible where the time and cost of traditional dubbing had ruled them out.

The animation industry has benefited in particular, because animated content usually allows more flexibility in audio synchronization than live action. Animated series and films can use AI dubbing to produce language versions during production rather than as a post-production step, so cultural adaptation can be considered from the earliest stages of creative development.

Documentary and educational producers have found AI dubbing valuable for reaching global audiences with factual and instructional material, which benefits from a consistent voice across languages. The technology enables rapid localization of time-sensitive content, such as news documentaries, scientific presentations, and training materials, while preserving accuracy and a professional presentation.

Gaming and Interactive Media Integration

The gaming industry has embraced AI dubbing to improve immersion and accessibility in global markets, letting developers offer full multilingual voice acting for complex narrative games without the enormous cost of recording every language with professional voice actors. Large narrative games can contain hundreds of hours of dialogue, which makes traditional dubbing economically prohibitive for many developers, particularly those targeting smaller or emerging markets.

AI dubbing also lets game developers deliver multilingual content that adapts to player preferences and regional settings in real time, providing personalized language experiences that improve engagement and accessibility. Advanced implementations can generate character-specific vocal patterns that stay consistent across long game narratives while adapting to different languages and cultural contexts.
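Serving dubbed dialogue according to a player's regional settings usually needs a fallback rule for lines that have not been localized into every regional variant. The sketch below is loosely modeled on the truncation-based "Lookup" scheme of RFC 4647 (try "pt-BR", then "pt", then a default); the asset table, line IDs, and file names are hypothetical.

```python
from typing import Dict, List, Tuple


def locale_chain(locale: str, default: str = "en") -> List[str]:
    """Progressively truncated locale tags, then the default.

    'pt-BR' -> ['pt-BR', 'pt', 'en']; 'zh-Hant-TW' -> ['zh-Hant-TW', 'zh-Hant', 'zh', 'en'].
    """
    parts = locale.split("-")
    chain = ["-".join(parts[:i]) for i in range(len(parts), 0, -1)]
    if default not in chain:
        chain.append(default)
    return chain


def resolve_voice_line(line_id: str, locale: str,
                       available: Dict[str, Dict[str, str]],
                       default: str = "en") -> Tuple[str, str]:
    """Return (locale used, asset path) for the best available recording."""
    for candidate in locale_chain(locale, default):
        asset = available.get(candidate, {}).get(line_id)
        if asset is not None:
            return candidate, asset
    raise KeyError(f"no recording of {line_id!r} for {locale!r} or its fallbacks")


# Hypothetical asset table: locale -> {line id -> file}.
available = {
    "en": {"greet_01": "en/greet_01.ogg", "quest_07": "en/quest_07.ogg"},
    "pt": {"greet_01": "pt/greet_01.ogg"},
}
print(resolve_voice_line("greet_01", "pt-BR", available))  # ('pt', 'pt/greet_01.ogg')
print(resolve_voice_line("quest_07", "pt-BR", available))  # ('en', 'en/quest_07.ogg')
```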

Interactive applications such as virtual reality experiences and educational simulations have leveraged AI dubbing to create immersive multilingual environments where users can experience content in their preferred language without breaking immersion or requiring separate development tracks for different linguistic markets. These applications demonstrate the potential for AI dubbing to enable more inclusive and accessible interactive experiences that can reach diverse global audiences.

Challenges and Technological Limitations

Despite remarkable advances in AI dubbing technology, several significant challenges remain in achieving perfect multilingual content adaptation that fully matches the quality and nuance of human-performed dubbing. Cultural context and humor translation represent particularly complex challenges, as jokes, cultural references, and idiomatic expressions often require creative adaptation rather than literal translation to maintain their intended impact and meaning in different cultural contexts.

Emotional authenticity remains another area where AI systems continue to evolve, as capturing the subtle vocal nuances that convey complex emotional states requires sophisticated understanding of both linguistic and paralinguistic communication patterns. While AI systems can generate technically accurate speech with appropriate emotional markers, achieving the spontaneous and authentic emotional expression that characterizes exceptional human voice acting remains an ongoing area of research and development.

Audio quality remains a technical limitation as well: background noise, overlapping dialogue, and dense soundscapes in the source material make it harder to isolate and transcribe speech accurately. Achieving consistent quality across diverse content types and production environments requires ongoing refinement of processing algorithms and training methods.

Economic Impact and Industry Transformation

The economic implications of AI dubbing technology extend far beyond simple cost reduction, fundamentally altering the economics of global content distribution and enabling new business models that were previously impossible due to traditional dubbing constraints. Content creators can now consider global distribution as a primary strategy rather than a secondary consideration, potentially accessing international revenue streams that justify larger production investments and more ambitious creative projects.

Smaller content creators and independent producers have particularly benefited from access to professional-quality dubbing, which lets them compete in global markets previously dominated by major studios with substantial dubbing budgets. This wider access can increase the diversity of international content and the representation of different cultural perspectives in global media markets.

The technology has also enabled new approaches to content localization that go beyond simple language translation to include cultural adaptation, regional humor modification, and audience-specific content optimization. These capabilities enable content creators to develop more nuanced and effective international marketing strategies that can maximize audience engagement and commercial success across diverse global markets.

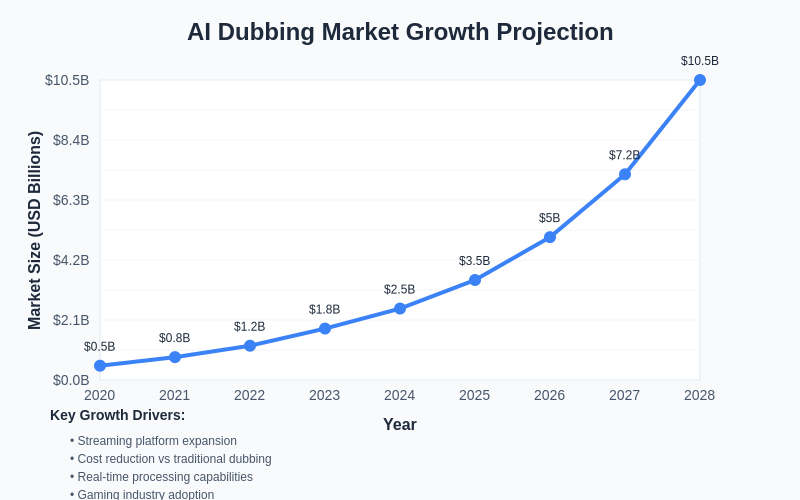

The projected growth of the AI dubbing market shown above reflects accelerating adoption of automated localization across the entertainment, gaming, and corporate communication sectors, and growing acceptance of automated dubbing as a viable alternative to traditional production methods.

Future Developments and Emerging Trends

The future evolution of AI dubbing technology promises even more sophisticated capabilities that will further blur the lines between synthetic and human-performed audio content. Emerging research in few-shot voice learning suggests that future systems may be able to create high-quality voice clones from minimal audio samples, enabling dubbing of historical content or posthumous voice recreation for archival purposes.

Integration with advanced emotion recognition and synthesis technologies will likely enable AI dubbing systems to better capture and reproduce subtle emotional nuances that are crucial for dramatic and artistic content. These developments may eventually enable AI systems to not only translate and synthesize speech but also adapt emotional expression to better resonate with different cultural contexts and audience expectations.

Real-time collaborative dubbing platforms are emerging that enable distributed teams of human creative professionals to work with AI systems to produce high-quality multilingual content more efficiently than traditional workflows. These platforms represent the evolution toward hybrid human-AI creative processes that leverage the strengths of both artificial intelligence and human creativity to achieve superior results.

The continued advancement of AI dubbing technology will likely lead to new forms of interactive and personalized media experiences where content can be dynamically adapted to individual viewer preferences, including language, cultural context, and even personal vocal preferences. These developments suggest a future where media consumption becomes increasingly personalized and accessible across all linguistic and cultural barriers.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of AI dubbing technologies and their applications in media production. Readers should conduct their own research and consider their specific requirements when implementing AI dubbing solutions. The effectiveness and quality of AI dubbing systems may vary depending on content type, source audio quality, target languages, and specific use case requirements.