The proliferation of artificial intelligence applications at the network edge has created an unprecedented demand for compact, efficient, and powerful computing platforms capable of executing complex machine learning models in resource-constrained environments. This paradigm shift has positioned two prominent contenders at the forefront of edge AI computing: the ubiquitous Raspberry Pi ecosystem and NVIDIA’s specialized Jetson platform. Understanding the nuanced differences between these platforms becomes crucial for developers, researchers, and organizations seeking to implement AI solutions that balance performance requirements with practical constraints such as power consumption, cost considerations, and deployment complexity.

The evolution of edge computing represents a fundamental transformation in how AI systems are architected, moving computational intelligence closer to data sources and end-users while reducing latency and dependency on cloud infrastructure.

The Foundation of Edge AI Computing

Edge computing has emerged as a critical paradigm in the artificial intelligence landscape, driven by the need to process data locally rather than relying on distant cloud servers. This approach offers significant advantages in terms of reduced latency, enhanced privacy, improved reliability, and decreased bandwidth requirements. The implementation of AI models at the edge requires specialized hardware platforms that can efficiently execute neural network computations while operating within strict power and thermal constraints.

The Raspberry Pi platform, originally designed as an educational tool, has evolved into a versatile computing solution that democratizes access to programmable hardware. Its widespread adoption stems from its affordability, extensive community support, and general-purpose nature that accommodates a broad range of applications beyond AI processing. The platform’s ARM-based architecture provides sufficient computational capabilities for many edge computing scenarios while maintaining extremely low power consumption and cost-effectiveness.

NVIDIA’s Jetson platform represents a purpose-built approach to edge AI computing, incorporating dedicated GPU acceleration specifically optimized for machine learning workloads. This specialized hardware architecture enables significantly higher computational throughput for AI applications, particularly those involving computer vision, natural language processing, and complex neural network inference. The platform’s design philosophy prioritizes AI performance while maintaining the compact form factor required for edge deployment scenarios.

Architectural Differences and Design Philosophy

The fundamental architectural distinctions between Raspberry Pi and NVIDIA Jetson platforms reflect their different design philosophies and target applications. Raspberry Pi devices utilize ARM Cortex processors combined with basic GPU capabilities primarily intended for general computing tasks and light multimedia processing. This architecture excels in scenarios requiring moderate computational capabilities while prioritizing energy efficiency and cost optimization.

The latest Raspberry Pi models incorporate ARM Cortex-A76 cores running at frequencies up to 2.4 GHz, coupled with VideoCore VII GPU units that provide basic graphics acceleration and limited parallel processing capabilities for AI workloads. The platform’s unified memory architecture shares system RAM between CPU and GPU components, creating potential bottlenecks for memory-intensive AI applications but maintaining simplicity and cost-effectiveness.

NVIDIA Jetson platforms incorporate a fundamentally different architectural approach, featuring dedicated GPU cores specifically designed for parallel processing tasks common in machine learning applications. The Jetson AGX Orin, for example, includes an ARM Cortex-A78AE CPU cluster paired with an NVIDIA Ampere GPU containing 2048 CUDA cores and 64 Tensor cores optimized for AI inference operations. This specialized hardware configuration enables substantially higher computational throughput for AI workloads while maintaining relatively compact form factors suitable for edge deployment.

The Jetson platform’s memory subsystem typically features high-bandwidth LPDDR5 RAM (LPDDR4-class memory on older modules). As on the Raspberry Pi, this memory is physically shared between CPU and GPU rather than dedicated to the GPU, but the much wider memory interface enables efficient data transfer and processing for large neural network models. This architectural design prioritizes AI performance over general-purpose computing flexibility, resulting in superior capabilities for machine learning applications at the cost of increased power consumption and platform complexity.

Performance Analysis Across AI Workloads

Computer vision applications represent one of the most demanding categories of edge AI workloads, requiring substantial computational resources for real-time image processing and object detection tasks. Raspberry Pi platforms demonstrate adequate performance for basic computer vision applications such as simple object detection using lightweight models like MobileNet or YOLO nano variants. The platform can achieve inference rates of approximately 1-3 frames per second for standard definition video processing using optimized models, making it suitable for non-real-time surveillance applications or basic automation tasks.

NVIDIA Jetson platforms exhibit dramatically superior performance for computer vision workloads, capable of processing high-definition video streams at 30+ frames per second while executing complex neural networks such as ResNet, EfficientNet, or transformer-based vision models. The dedicated Tensor cores enable mixed-precision inference that accelerates model execution while maintaining accuracy, resulting in performance improvements of 10-50 times compared to Raspberry Pi platforms for equivalent AI tasks.
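
Frame-rate figures like these depend heavily on the model, input resolution, and runtime, so it is worth measuring them on the target board. The following is a minimal timing sketch, assuming the inference step can be wrapped in a plain Python callable. `dummy_infer` is a hypothetical stand-in for a real model call, and the warm-up and run counts are arbitrary defaults.

```python
import time
from statistics import median


def measure_fps(infer, frame, warmup=5, runs=50):
    """Return (median latency in seconds, frames per second) for one callable."""
    for _ in range(warmup):
        infer(frame)  # warm caches and any lazy initialisation
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(frame)
        latencies.append(time.perf_counter() - start)
    med = median(latencies)
    fps = 1.0 / med if med > 0 else float("inf")
    return med, fps


def dummy_infer(frame):
    # Hypothetical placeholder workload standing in for a real model call.
    return sum(x * x for x in frame)


latency, fps = measure_fps(dummy_infer, list(range(10_000)))
print(f"median latency: {latency * 1e3:.2f} ms, about {fps:.1f} FPS")
```

The median is used rather than the mean so that occasional scheduling hiccups or thermal throttling events do not dominate the result.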

The quantitative performance differences between Raspberry Pi and NVIDIA Jetson platforms are substantial across all key metrics relevant to AI edge computing applications. These measurements demonstrate the trade-offs between cost-effectiveness and computational capabilities that define platform selection decisions.

Natural language processing applications present different performance characteristics and requirements compared to computer vision workloads. Raspberry Pi platforms can handle basic NLP tasks such as keyword detection, simple text classification, or lightweight language models with limited vocabulary and context windows. The platform’s memory constraints typically limit the complexity of language models that can be effectively deployed, restricting applications to relatively simple linguistic tasks.

Jetson platforms accommodate significantly more sophisticated NLP applications, including large language model inference, complex text generation, and multi-modal AI applications that combine text and image processing. On higher-end modules, the larger memory capacity and GPU throughput make it possible to deploy transformer models with billions of parameters, usually in reduced precision, supporting applications such as conversational AI, content generation, and advanced text analysis that would be impractical on Raspberry Pi hardware.
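
A rough way to see why memory capacity limits language model size is to estimate the weight footprint from the parameter count and numeric precision. The sketch below counts weights only and ignores activations, the key-value cache, and runtime overhead, so real requirements are higher. The 30% reserve for the operating system and the 8 GiB and 64 GiB board sizes are illustrative assumptions.

```python
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gib(num_params, precision):
    """Memory needed for the weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[precision] / 2**30


def fits(num_params, precision, ram_gib, reserve_fraction=0.3):
    """True if the weights fit after reserving part of RAM for everything else."""
    usable = ram_gib * (1.0 - reserve_fraction)
    return weight_footprint_gib(num_params, precision) <= usable


params = 7_000_000_000  # a 7-billion-parameter model, chosen for illustration
for precision in ("fp16", "int8", "int4"):
    size = weight_footprint_gib(params, precision)
    print(f"{precision}: {size:.2f} GiB, "
          f"fits in 8 GiB: {fits(params, precision, 8)}, "
          f"fits in 64 GiB: {fits(params, precision, 64)}")
```

Under these assumptions a 7-billion-parameter model needs about 13 GiB in FP16, which rules out an 8 GiB board before compute speed is even considered.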

Power Consumption and Thermal Management

Power efficiency represents a critical consideration for edge computing deployments, particularly in battery-powered applications or environments with limited electrical infrastructure. Raspberry Pi platforms excel in power efficiency, typically consuming 2-8 watts under normal operating conditions depending on the specific model and workload characteristics. This extremely low power consumption enables deployment in solar-powered installations, battery-operated devices, and other energy-constrained environments where operational costs and environmental impact must be minimized.

The thermal characteristics of Raspberry Pi platforms generally require minimal active cooling, with passive heat sinks sufficient for most applications. This simplicity reduces system complexity, improves reliability, and enables deployment in harsh environmental conditions where active cooling systems might fail or require maintenance. The platform’s low thermal output also facilitates integration into compact enclosures and embedded systems without significant thermal design considerations.

NVIDIA Jetson platforms consume significantly more power, typically ranging from roughly 5 to 60 watts depending on the specific model, power mode, and computational workload. The Jetson AGX Orin can consume up to 60 watts under maximum AI processing loads, while more compact models draw far less: the original Jetson Nano offers 5 and 10 watt power modes, and the Jetson Orin Nano is specified for roughly 7 to 15 watts. This increased power consumption reflects the substantially higher computational capabilities but may limit deployment scenarios where power availability is constrained.
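
For battery-powered deployments, these power figures translate directly into runtime. The following back-of-the-envelope sketch assumes a constant average draw and a fixed converter efficiency of 85%. Both are simplifying assumptions, and the 100 Wh battery is a hypothetical example.

```python
def runtime_hours(battery_wh, avg_power_w, converter_efficiency=0.85):
    """Estimated runtime in hours for a given battery capacity and average draw."""
    if avg_power_w <= 0:
        raise ValueError("avg_power_w must be positive")
    return battery_wh * converter_efficiency / avg_power_w


battery_wh = 100.0  # hypothetical battery pack
for label, watts in (("Raspberry Pi at 5 W", 5.0),
                     ("Jetson at 15 W", 15.0),
                     ("Jetson at 60 W", 60.0)):
    print(f"{label}: {runtime_hours(battery_wh, watts):.1f} h")
```

Real deployments would also need to account for duty cycling, battery ageing, and temperature effects, none of which are modelled here.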

Thermal management for Jetson platforms typically requires active cooling solutions, particularly for higher-performance models operating under sustained AI workloads. The platforms incorporate thermal throttling mechanisms that reduce performance when temperature limits are exceeded, ensuring system stability but potentially impacting AI inference performance in thermally constrained environments. Proper thermal design becomes crucial for maintaining consistent performance in production deployments.
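
On Linux-based boards, temperatures are usually exposed through the kernel's thermal sysfs interface in millidegrees Celsius, which allows an application to reduce its workload before hardware throttling begins. The sketch below assumes that zone 0 is the relevant sensor, although the zone numbering differs between boards. The 75 °C soft limit is a hypothetical value, not a vendor specification.

```python
from pathlib import Path


def parse_millidegrees(raw):
    """Convert a sysfs reading such as '48312\n' to degrees Celsius."""
    return int(raw.strip()) / 1000.0


def read_zone_celsius(zone=0, root="/sys/class/thermal"):
    """Read one thermal zone; return None if it is missing or unreadable."""
    path = Path(root) / f"thermal_zone{zone}" / "temp"
    try:
        return parse_millidegrees(path.read_text())
    except (OSError, ValueError):
        return None


def should_back_off(temp_c, soft_limit_c=75.0):
    """True when the application should lower its frame rate or batch size."""
    return temp_c is not None and temp_c >= soft_limit_c


temp = read_zone_celsius()
print("temperature:", temp, "back off:", should_back_off(temp))
```

Polling this value alongside inference latency makes it easier to tell whether a performance drop in the field is caused by throttling or by the workload itself.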

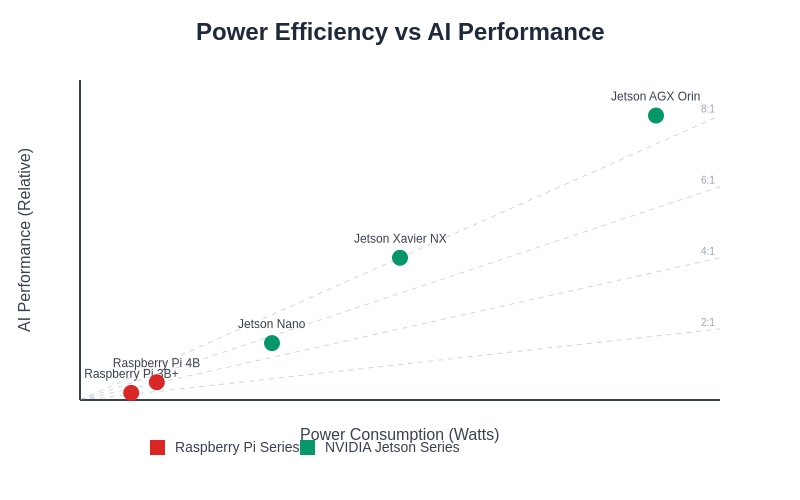

The relationship between power consumption and AI performance reveals distinct efficiency characteristics for each platform category. While Raspberry Pi devices excel in absolute power draw, NVIDIA Jetson platforms deliver superior performance-per-watt ratios for AI-intensive applications.

Development Environment and Software Ecosystem

The software development experience differs substantially between Raspberry Pi and NVIDIA Jetson platforms, reflecting their distinct target audiences and application domains. Raspberry Pi platforms benefit from an extensive open-source ecosystem built around standard Linux distributions, particularly Raspberry Pi OS and Ubuntu variants optimized for ARM architecture. This ecosystem provides broad compatibility with general-purpose development tools, programming languages, and software libraries that facilitate rapid prototyping and application development.

AI development on Raspberry Pi typically utilizes lightweight frameworks such as TensorFlow Lite, ONNX Runtime, or OpenVINO that provide optimized inference capabilities for ARM processors. These frameworks support model quantization, pruning, and other optimization techniques that enable deployment of moderately complex AI models within the platform’s computational and memory constraints. The development process often involves training models on more powerful hardware and then deploying optimized versions to Raspberry Pi devices for inference.
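
To make the idea of quantization concrete, here is a minimal pure-Python sketch of symmetric per-tensor INT8 quantization. It illustrates the general technique only and is not how any particular framework implements it. Production toolchains add calibration data, per-channel scales, and optimized integer kernels.

```python
def quantize_symmetric_int8(values):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the quantized integers."""
    return [x * scale for x in q]


weights = [-1.0, -0.5, 0.0, 0.5, 1.0]
q, scale = quantize_symmetric_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("quantized:", q)
print("largest round-trip error:", max_error)
```

Each weight shrinks from four bytes (FP32) to one, and the round-trip error per value is bounded by half the scale, which is the accuracy cost that quantized deployment trades for a smaller, faster model.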

NVIDIA Jetson platforms provide a more specialized development environment optimized for AI applications through the JetPack SDK, which includes CUDA, cuDNN, TensorRT, and other NVIDIA-specific libraries that maximize GPU utilization for machine learning workloads. This software stack enables deployment of full-precision neural networks without extensive optimization, supporting more complex AI applications with minimal performance compromises compared to cloud-based inference.

The Jetson development environment includes specialized tools for AI model optimization, performance profiling, and deployment automation that streamline the process of moving AI applications from development to production. TensorRT provides automated model optimization that can improve inference performance by 2-10 times compared to unoptimized implementations, while accepting models exported from popular machine learning frameworks such as TensorFlow and PyTorch, typically via the ONNX interchange format.

Cost Analysis and Economic Considerations

Economic factors play a crucial role in platform selection for edge computing deployments, particularly for applications requiring large-scale device deployments or cost-sensitive market segments. Raspberry Pi platforms offer exceptional value for basic AI edge computing applications, with device costs ranging from $15-100 depending on the specific model and configuration. This affordability enables deployment scenarios such as distributed sensor networks, educational AI projects, and cost-sensitive IoT applications that require minimal AI processing capabilities.

The total cost of ownership for Raspberry Pi deployments includes additional components such as storage media, power supplies, and enclosures, but these accessories remain relatively inexpensive and widely available from multiple suppliers. The platform’s standardized interfaces and broad ecosystem support reduce integration costs and enable rapid scaling of deployments across diverse application scenarios.

NVIDIA Jetson platforms command significantly higher initial costs, ranging from $100-2000 depending on the specific model and performance capabilities. The Jetson AGX Orin developer kit costs approximately $2000, while more accessible models like the Jetson Nano start around $100. These higher costs reflect the specialized AI hardware and associated development tools but may limit adoption for price-sensitive applications or large-scale deployments where per-unit costs become prohibitive.

The economic equation for Jetson platforms often justifies higher initial costs through superior AI performance that enables more sophisticated applications, reduced cloud computing expenses through local processing, and faster development cycles due to comprehensive software tooling. Organizations implementing complex AI applications may find that Jetson platforms provide better long-term value despite higher upfront investments.
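
The trade-off between upfront hardware cost and avoided cloud spending can be framed as a simple break-even calculation. In the sketch below, the $2000 device price and 60 W draw come from the figures quoted in this article, while the $150 monthly cloud bill and the $0.15 per kWh electricity price are hypothetical inputs chosen only for illustration.

```python
def electricity_cost_per_month(avg_power_w, usd_per_kwh, hours=730):
    """Monthly electricity cost for a device running continuously."""
    return avg_power_w / 1000.0 * hours * usd_per_kwh


def breakeven_months(device_cost_usd, monthly_cloud_cost_usd,
                     monthly_edge_opex_usd=0.0):
    """Months until avoided cloud cost repays the device, or None if never."""
    saving = monthly_cloud_cost_usd - monthly_edge_opex_usd
    if saving <= 0:
        return None
    return device_cost_usd / saving


opex = electricity_cost_per_month(avg_power_w=60, usd_per_kwh=0.15)
months = breakeven_months(device_cost_usd=2000,
                          monthly_cloud_cost_usd=150,
                          monthly_edge_opex_usd=opex)
print(f"electricity: ${opex:.2f} per month, break-even after {months:.1f} months")
```

A fuller total-cost model would also include enclosures, cooling, maintenance, and engineering time, which this sketch leaves out.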

Real-World Implementation Scenarios

Industrial automation applications represent a significant opportunity for edge AI computing, where platforms must balance performance requirements with reliability, cost, and environmental constraints. Raspberry Pi platforms excel in scenarios such as simple quality control systems, basic predictive maintenance, and environmental monitoring applications that require modest AI capabilities combined with robust connectivity and sensor integration. The platform’s GPIO capabilities and extensive hardware interface options facilitate integration with industrial control systems and legacy equipment.

Smart city infrastructure presents diverse requirements that may favor different platforms depending on specific application needs. Traffic monitoring systems that perform basic vehicle detection and counting can run effectively on Raspberry Pi platforms with appropriate camera interfaces and optimized computer vision models. More sophisticated applications such as real-time traffic flow optimization, comprehensive surveillance systems, or multi-modal transportation analysis typically require the enhanced processing capabilities provided by Jetson platforms.

Healthcare and medical device applications impose stringent requirements for accuracy, reliability, and regulatory compliance that influence platform selection decisions. Basic vital sign monitoring, simple diagnostic applications, or patient tracking systems may operate effectively on Raspberry Pi platforms with appropriate medical-grade accessories and certification. Advanced applications such as real-time medical imaging analysis, complex diagnostic AI, or surgical assistance systems generally require the computational capabilities and precision provided by NVIDIA Jetson platforms.

Agricultural technology applications demonstrate the diverse requirements and trade-offs inherent in edge AI platform selection. Simple crop monitoring systems, basic pest detection, or environmental sensing applications can effectively utilize Raspberry Pi platforms deployed in solar-powered field installations. More sophisticated applications such as precision agriculture, advanced plant disease detection, or automated harvesting systems typically require the enhanced AI capabilities provided by Jetson platforms despite higher power consumption and cost considerations.

Performance Benchmarking and Quantitative Analysis

Systematic performance evaluation across standardized benchmarks provides objective insights into the relative capabilities of Raspberry Pi and NVIDIA Jetson platforms for AI edge computing applications. Image classification benchmarks using standard datasets such as ImageNet demonstrate the substantial performance differences between platforms, with Raspberry Pi 4 achieving approximately 2-5 inferences per second for ResNet-50 models, while NVIDIA Jetson AGX Orin can process 100-300 inferences per second depending on optimization settings and precision modes.

Object detection benchmarks using COCO dataset evaluations reveal even more pronounced performance differences, as these applications require processing entire images rather than pre-cropped samples. Raspberry Pi platforms typically achieve 0.1-1 frames per second for YOLO-based object detection on standard definition video, while Jetson platforms can process high-definition video streams at 30+ frames per second using equivalent or more sophisticated detection models.

Memory bandwidth limitations significantly impact AI performance for both platforms, but the constraints manifest differently due to architectural differences. Both platforms share system memory between CPU and GPU components. On Raspberry Pi, the comparatively narrow memory interface creates bottlenecks for memory-intensive AI operations. Jetson modules pair the shared memory with much higher bandwidth and, on larger modules, more capacity, which relieves many memory-related performance bottlenecks while supporting larger and more complex AI models.

Power efficiency metrics demonstrate the trade-offs between computational performance and energy consumption for different deployment scenarios. Raspberry Pi platforms achieve approximately 0.1-0.5 inferences per second per watt for typical AI workloads, while NVIDIA Jetson platforms deliver 1-5 inferences per second per watt depending on the specific model and optimization settings. These efficiency differences reflect the specialized AI hardware in Jetson platforms that provides superior performance per watt for machine learning applications despite higher absolute power consumption.
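
Because a watt is one joule per second, dividing throughput (inferences per second) by power draw (watts) gives inferences per joule, and the reciprocal gives the energy cost of a single inference. The sketch below applies this to illustrative operating points picked from within the ranges quoted in this article. The specific pairings of throughput and power are assumptions, not measurements.

```python
def inferences_per_joule(throughput_ips, power_w):
    """Efficiency: inferences per second divided by watts."""
    return throughput_ips / power_w


def joules_per_inference(throughput_ips, power_w):
    """Energy cost of one inference."""
    return power_w / throughput_ips


# Assumed operating points within the article's quoted ranges.
platforms = {
    "Raspberry Pi (3 inf/s at 6 W)": (3, 6),
    "Jetson AGX Orin (200 inf/s at 60 W)": (200, 60),
}
for name, (ips, watts) in platforms.items():
    print(f"{name}: {inferences_per_joule(ips, watts):.2f} inf/J, "
          f"{joules_per_inference(ips, watts):.2f} J per inference")
```

Framing efficiency as energy per inference is useful for battery sizing, since it multiplies directly with the expected number of inferences per day.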

Future Technology Trends and Platform Evolution

The evolution of edge AI computing platforms continues to accelerate, driven by advancing semiconductor technologies, improved AI model architectures, and growing demand for local processing capabilities. Raspberry Pi development focuses on maintaining affordability and accessibility while incrementally improving performance through more efficient ARM processors, enhanced GPU capabilities, and optimized software stacks that better support AI workloads within existing power and cost constraints.

NVIDIA’s Jetson roadmap emphasizes continued AI performance improvements through next-generation GPU architectures, advanced AI accelerators, and enhanced software optimization tools. Future Jetson platforms will likely incorporate more specialized AI hardware such as vision processing units, dedicated neural processing elements, and advanced memory technologies that further improve AI inference performance while maintaining or reducing power consumption.

The convergence of AI hardware and software optimization techniques promises to narrow the performance gap between general-purpose and specialized platforms for certain classes of AI applications. Advanced model compression techniques, neural architecture search, and automated optimization tools may enable more sophisticated AI applications on resource-constrained platforms like Raspberry Pi while maximizing the capabilities of high-performance platforms like NVIDIA Jetson.

Emerging AI model architectures such as efficient transformers, sparse neural networks, and neuromorphic computing approaches may fundamentally alter the performance characteristics and requirements for edge AI computing platforms. These developments could shift the optimal balance between general-purpose flexibility and specialized AI acceleration, influencing future platform selection decisions and development strategies.

Integration Challenges and Solution Strategies

Deploying AI edge computing solutions involves numerous technical challenges beyond simple platform performance comparisons, including connectivity requirements, data management, model deployment automation, and system maintenance considerations. Raspberry Pi platforms benefit from extensive community knowledge and standardized deployment practices that simplify integration with existing systems and infrastructure.

The diverse connectivity options available for Raspberry Pi platforms, including Ethernet, Wi-Fi, Bluetooth, and various serial interfaces, facilitate integration with legacy systems and diverse networking environments. This connectivity flexibility proves particularly valuable for retrofitting AI capabilities into existing installations where network infrastructure may be limited or non-standard.

NVIDIA Jetson platforms provide more sophisticated deployment and management tools through the NVIDIA Fleet Command platform, which enables remote device management, over-the-air updates, and centralized monitoring for large-scale edge computing deployments. These enterprise-focused tools address the complexity challenges associated with managing high-performance edge AI systems but require additional infrastructure and expertise compared to simpler Raspberry Pi deployments.

Model deployment and optimization represent ongoing challenges that require different approaches for each platform type. Raspberry Pi deployments typically benefit from aggressive model optimization, quantization, and pruning techniques that reduce computational requirements at the cost of some accuracy degradation. Jetson platforms can accommodate larger, more accurate models but still benefit from optimization techniques that improve inference speed and reduce power consumption.
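
As an illustration of the pruning step mentioned above, here is a minimal sketch of unstructured magnitude pruning on a flat list of weights. It shows the basic idea only. Real toolchains prune per layer, usually fine-tune afterwards to recover accuracy, and need sparse-aware kernels before the zeros translate into speed.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitudes."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])      # indices of the k smallest magnitudes
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2]
print(magnitude_prune(weights, sparsity=0.5))
```

With a sparsity of 0.5, the three smallest-magnitude weights (0.01, -0.05, and 0.2) are zeroed while the larger ones are kept unchanged.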

Conclusion and Platform Selection Guidelines

The choice between Raspberry Pi and NVIDIA Jetson platforms for AI edge computing applications depends on a complex interplay of performance requirements, cost constraints, power limitations, and development complexity considerations. Raspberry Pi platforms excel in scenarios prioritizing cost-effectiveness, power efficiency, and deployment simplicity, making them ideal for applications such as basic IoT sensing, simple computer vision tasks, and educational AI projects where modest performance requirements can be satisfied within tight budget constraints.

NVIDIA Jetson platforms provide superior AI performance that enables sophisticated applications such as real-time computer vision, complex natural language processing, and multi-modal AI systems that require substantial computational capabilities. The higher cost and power consumption of Jetson platforms are justified for applications where AI performance directly impacts functionality, user experience, or business value generation.

The optimal platform selection strategy often involves careful analysis of specific application requirements, deployment constraints, and long-term scalability considerations. Organizations should evaluate not only current AI processing needs but also anticipated future requirements, model complexity evolution, and performance scaling demands that may influence platform suitability over the deployment lifecycle.
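
One way to make such an analysis repeatable is to encode the requirements and screen them against rough platform envelopes. The sketch below is a deliberately simple first-pass filter. Its thresholds are illustrative assumptions loosely based on the ranges quoted in this article, and the names `Requirements` and `suggest_platform` are hypothetical.

```python
from dataclasses import dataclass

# Illustrative thresholds, loosely based on ranges quoted in this article.
PI_MAX_VISION_FPS = 3.0       # lightweight models on standard-definition video
PI_MIN_POWER_W = 2.0
PI_MIN_COST_USD = 15.0
JETSON_MIN_POWER_W = 10.0     # assumed floor for sustained AI load
JETSON_MIN_COST_USD = 100.0


@dataclass
class Requirements:
    target_fps: float
    power_budget_w: float
    unit_budget_usd: float


def suggest_platform(req):
    """Return a coarse first-pass suggestion, not a final decision."""
    pi_feasible = (req.target_fps <= PI_MAX_VISION_FPS
                   and req.power_budget_w >= PI_MIN_POWER_W
                   and req.unit_budget_usd >= PI_MIN_COST_USD)
    jetson_feasible = (req.power_budget_w >= JETSON_MIN_POWER_W
                       and req.unit_budget_usd >= JETSON_MIN_COST_USD)
    if pi_feasible:
        return "raspberry_pi"  # cheapest option that meets the target
    if jetson_feasible:
        return "jetson"
    return "revisit_requirements"


print(suggest_platform(Requirements(target_fps=2, power_budget_w=5, unit_budget_usd=60)))
print(suggest_platform(Requirements(target_fps=30, power_budget_w=40, unit_budget_usd=800)))
```

Any real selection should replace these thresholds with measurements from the actual model and workload on candidate hardware.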

Different application domains exhibit varying suitability profiles for each platform type, with clear patterns emerging based on computational requirements, cost sensitivity, and deployment constraints. This analysis helps guide platform selection decisions based on specific use case characteristics.

The continuing evolution of both platform ecosystems suggests that future selection decisions will benefit from even more capable hardware options, improved software optimization tools, and enhanced development environments that simplify the deployment of sophisticated AI applications at the network edge. Success in edge AI computing ultimately depends on matching platform capabilities to application requirements while balancing the multiple constraints and trade-offs inherent in distributed AI system design.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The performance comparisons and analysis presented are based on publicly available specifications and benchmarks, which may vary depending on specific configurations, software versions, and testing conditions. Readers should conduct their own evaluations and testing to determine the most appropriate platform for their specific use cases and requirements. The rapidly evolving nature of AI hardware and software may impact the relative performance and capabilities of these platforms over time.