The rapid proliferation of artificial intelligence technologies has brought unprecedented computational capabilities to countless industries, revolutionizing everything from healthcare diagnostics to autonomous transportation systems. However, this technological renaissance comes with a significant environmental cost that demands urgent attention from technologists, policymakers, and organizations worldwide. The energy consumption of modern AI systems has reached staggering proportions: training a single large language model can require as much electricity as hundreds of homes use in a year.

The intersection of artificial intelligence advancement and environmental responsibility represents one of the most critical challenges facing the technology sector as we strive to balance innovation with planetary stewardship.

The urgency of addressing AI’s environmental impact extends far beyond simple energy consumption metrics. As AI systems become increasingly sophisticated and ubiquitous, their cumulative carbon footprint threatens to undermine global climate initiatives unless decisive action is taken to implement sustainable computing practices. This environmental challenge presents both obstacles and opportunities for the AI community to pioneer green technologies that demonstrate how cutting-edge innovation can coexist harmoniously with environmental conservation.

Understanding AI’s Energy Consumption Landscape

The computational demands of modern artificial intelligence systems represent a fundamental shift in how we approach large-scale computing infrastructure and energy utilization. Training state-of-the-art AI models requires massive parallel processing capabilities that consume extraordinary amounts of electricity, often running continuously for weeks or months across thousands of specialized graphics processing units. This intensive computational workload translates directly into substantial carbon emissions, particularly when powered by electricity grids that still rely heavily on fossil fuel sources.

The energy consumption profile of AI systems varies dramatically depending on the specific application, model architecture, and deployment strategy employed. Large language models like GPT-4 and similar transformer-based architectures require particularly intensive training processes that are estimated to consume gigawatt-hours of electricity, comparable to the annual energy consumption of hundreds of average households. These figures become even more concerning when considering the iterative nature of AI development, where multiple training runs and extensive hyperparameter optimization experiments multiply the total energy requirements many times over.
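The arithmetic behind such estimates can be sketched in a few lines. The snippet below is a rough illustration rather than a measurement method: the accelerator count, per-device power draw, run length, PUE (power usage effectiveness) of 1.2, and the 10,000 kWh per household per year are all assumed placeholder values, and the function names are hypothetical.

```python
def training_energy_kwh(num_gpus, gpu_power_watts, hours, pue=1.2):
    """Estimate facility-level energy for one training run.

    IT energy is devices x average power x time; multiplying by PUE
    adds cooling and other data center overhead (assumed value).
    """
    it_energy_kwh = num_gpus * gpu_power_watts * hours / 1000.0
    return it_energy_kwh * pue


def household_equivalents(energy_kwh, household_kwh_per_year=10_000):
    """Convert energy into household-years, using an assumed annual figure."""
    return energy_kwh / household_kwh_per_year


# Hypothetical run: 1,000 accelerators at 400 W average draw for 30 days.
single_run_kwh = training_energy_kwh(num_gpus=1000, gpu_power_watts=400, hours=30 * 24)

# Repeating comparable runs multiplies the total by the number of runs.
campaign_kwh = 10 * single_run_kwh

print(f"single run: {single_run_kwh:,.0f} kWh")
print(f"ten runs:   {campaign_kwh:,.0f} kWh, "
      f"about {household_equivalents(campaign_kwh):,.0f} household-years")
```

Swapping in measured power draw and a facility's reported PUE would make the estimate far more reliable than these placeholders.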

Beyond the initial training phase, the operational deployment of AI systems presents ongoing energy challenges that affect millions of user interactions daily. Every query processed by AI-powered search engines, recommendation systems, or conversational interfaces requires computational resources that translate into measurable energy consumption. The cumulative effect of billions of AI-powered interactions occurring globally creates a substantial and growing contribution to overall technology sector energy consumption.

The Carbon Footprint Challenge in AI Development

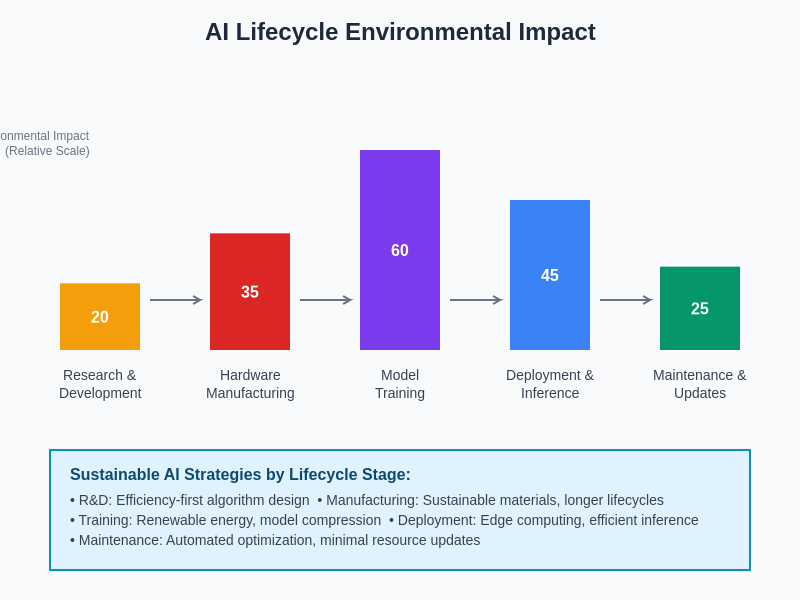

The carbon footprint implications of artificial intelligence development extend far beyond immediate energy consumption to encompass the entire lifecycle of AI systems, from initial research and development through deployment and eventual decommissioning. The manufacturing and deployment of specialized AI hardware, including graphics processing units, tensor processing units, and custom AI accelerators, requires significant energy-intensive manufacturing processes that contribute substantially to the overall environmental impact of AI technologies.

The challenge of accurately measuring and reporting AI-related carbon emissions remains complex due to the distributed nature of AI development, varying electricity grid compositions across different geographic regions, and the difficulty of attributing shared infrastructure usage to specific AI workloads.

The temporal aspects of AI carbon footprint present additional complexity, as the environmental impact of training a model occurs upfront while the benefits are distributed across potentially millions of inference operations over extended periods. This temporal disconnect makes it challenging to perform accurate cost-benefit analyses of AI environmental impact, particularly when considering models that may be used for years after their initial training period.

Contemporary research indicates that the carbon footprint of training large AI models can range from hundreds to thousands of tons of carbon dioxide equivalent, depending on the model size, training duration, and geographic location of the computing infrastructure. These emissions levels are comparable to those produced by manufacturing and operating hundreds of passenger vehicles for entire years, highlighting the substantial environmental stakes involved in AI development decisions.

Green Computing Principles for AI Systems

The implementation of green computing principles in AI development requires a fundamental reconsideration of how we approach system design, algorithm optimization, and infrastructure utilization. Energy-efficient AI design begins with architectural decisions that prioritize computational efficiency without sacrificing performance capabilities, including the development of sparse neural networks, quantized models, and innovative attention mechanisms that reduce computational complexity while maintaining accuracy.

Model compression techniques have emerged as crucial strategies for reducing the environmental impact of AI systems by decreasing both training and inference energy requirements. These approaches include network pruning, knowledge distillation, and weight quantization methods that can dramatically reduce model size and computational requirements while preserving most of the original model’s performance characteristics. The successful implementation of these techniques often results in models that are not only more environmentally friendly but also more practical for deployment in resource-constrained environments.
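Of the techniques named above, weight quantization is the simplest to show in isolation. The following is a minimal sketch of symmetric per-tensor quantization to the int8 range, written in plain Python for clarity; production frameworks use more elaborate schemes (per-channel scales, calibration), and the function names here are illustrative only.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] with one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # The scale maps the largest magnitude onto 127; fall back to 1.0 for all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0, 0.731]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# Each weight now needs 1 byte instead of 4 (float32), at the cost of a
# rounding error of at most half a quantization step per weight.
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, f"max error {worst_error:.5f}, step {scale:.5f}")
```

The trade-off is visible directly: storage drops fourfold relative to float32, while the reconstruction error stays bounded by half the step size.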

The optimization of training procedures represents another critical avenue for improving AI energy efficiency, encompassing strategies such as progressive training, transfer learning, and efficient hyperparameter optimization techniques. Progressive training approaches gradually increase model complexity during the training process, allowing for early termination when sufficient performance is achieved, while transfer learning leverages pre-trained models to reduce the computational requirements for new applications significantly.
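The early-termination idea can be illustrated with a generic early-stopping rule on validation loss. This is a common, simple stand-in rather than a full progressive-training scheme: the `patience` and `min_delta` parameters are assumptions for the sketch, and the loss values are made up.

```python
def early_stop_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (epochs_run, best_loss) for a stream of validation losses.

    Training stops once the loss has failed to improve by more than
    min_delta for `patience` consecutive epochs.
    """
    best = float("inf")
    stale = 0
    epoch = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                break  # further epochs would spend energy for no gain
    return epoch, best


# Made-up loss curve that plateaus after the third epoch.
losses = [1.0, 0.8, 0.7, 0.71, 0.72, 0.70, 0.69, 0.69, 0.69, 0.69]
epochs_run, best_loss = early_stop_epoch(losses, patience=3)
print(f"stopped after {epochs_run} of {len(losses)} epochs, best loss {best_loss}")
```

In this toy curve the run ends after six of ten scheduled epochs, so the remaining epochs and their energy are never spent.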

Hardware efficiency considerations play an equally important role in green AI computing, with specialized processors designed specifically for AI workloads offering substantially improved performance-per-watt ratios compared to traditional computing hardware. Modern AI accelerators, including Google’s Tensor Processing Units, NVIDIA’s specialized AI chips, and emerging neuromorphic computing platforms, demonstrate how purpose-built hardware can dramatically reduce energy consumption for AI workloads.

Sustainable AI Infrastructure and Data Centers

The environmental impact of AI systems is intrinsically linked to the sustainability practices of the data centers and cloud computing infrastructure that power these technologies. Modern AI workloads require substantial computing resources that are typically provided by large-scale data centers, making the energy efficiency and renewable energy utilization of these facilities critical factors in determining the overall environmental impact of AI applications.

Leading technology companies have made substantial investments in renewable energy infrastructure to power their data centers, with some achieving carbon neutrality or even carbon negativity for their computing operations. These initiatives include direct investments in solar and wind power generation, power purchase agreements with renewable energy providers, and innovative cooling technologies that reduce the energy overhead associated with maintaining optimal operating temperatures for computing equipment.

The geographic distribution of AI computing infrastructure significantly influences its environmental impact, as different regions have vastly different electricity grid compositions and renewable energy availability. Training AI models in regions with high renewable energy penetration can reduce carbon emissions by an order of magnitude or more compared to regions heavily dependent on fossil fuel-generated electricity. This geographic consideration has led some organizations to strategically locate their AI training operations in areas with abundant clean energy resources.
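To make the regional effect concrete, operational emissions can be approximated as energy multiplied by grid carbon intensity. The sketch below compares the same hypothetical workload across three made-up regions; the region names and intensity values are illustrative placeholders, not data for any real grid.

```python
# Hypothetical grid carbon intensities in grams of CO2e per kWh (placeholders).
GRID_INTENSITY_G_PER_KWH = {
    "hydro_heavy_region": 30,
    "mixed_grid_region": 350,
    "coal_heavy_region": 800,
}


def emissions_tonnes(energy_kwh, intensity_g_per_kwh):
    """Operational emissions in tonnes CO2e: energy x grid carbon intensity."""
    return energy_kwh * intensity_g_per_kwh / 1_000_000


def rank_regions(energy_kwh, intensities):
    """Return (region, tonnes) pairs sorted from cleanest to dirtiest."""
    results = [(region, emissions_tonnes(energy_kwh, g)) for region, g in intensities.items()]
    return sorted(results, key=lambda pair: pair[1])


# The same assumed 1 GWh training run placed in each region.
for region, tonnes in rank_regions(1_000_000, GRID_INTENSITY_G_PER_KWH):
    print(f"{region:20s} {tonnes:8.1f} t CO2e")
```

With these placeholder values the dirtiest grid produces roughly 27 times the emissions of the cleanest one for identical work, which is the kind of gap that motivates siting decisions.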

Advanced cooling technologies and data center design innovations continue to improve the energy efficiency of AI infrastructure, with techniques such as liquid cooling, free air cooling, and waste heat recovery systems reducing the energy overhead associated with maintaining optimal operating conditions for AI hardware. These efficiency improvements compound the environmental benefits achieved through renewable energy adoption and more efficient computing hardware.

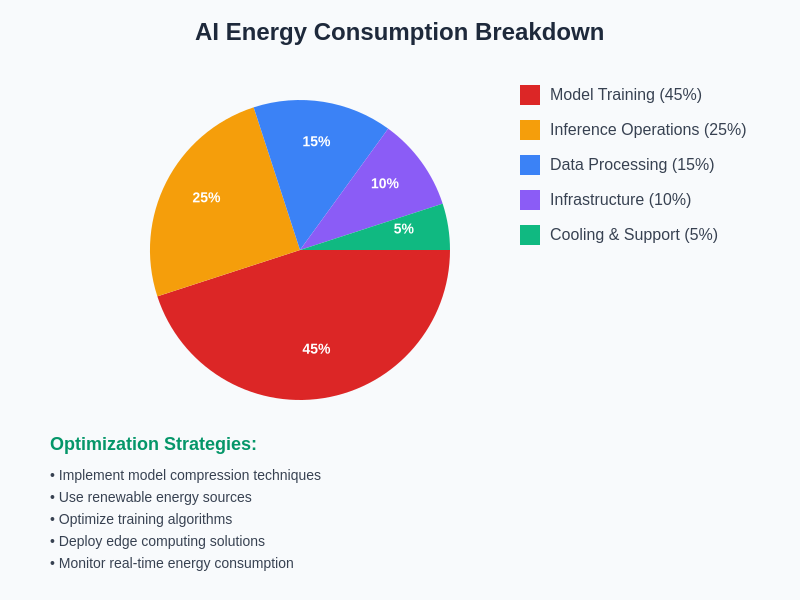

The breakdown of AI energy consumption shows training large models as the most significant single contributor to AI-related energy usage, followed by ongoing inference operations and supporting infrastructure, although for widely deployed models the cumulative energy of inference can rival or exceed the one-time training cost. Understanding these consumption patterns lets teams target optimization efforts where they yield the largest reduction in environmental impact.

Measuring and Monitoring AI Environmental Impact

Accurate measurement of AI environmental impact requires sophisticated methodologies that account for the complex, distributed nature of modern AI development and deployment processes. Traditional carbon footprint calculation methods often fall short when applied to AI systems due to the difficulty of attributing shared infrastructure usage, the temporal distribution of environmental costs, and the varying energy intensity of different computational operations.
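One simple way to handle the attribution problem is to split a shared cluster's metered energy across jobs in proportion to the accelerator-hours each consumed. The sketch below assumes that convention, which is only one of several possible allocation rules; the job names and numbers are hypothetical.

```python
def attribute_energy(total_energy_kwh, gpu_hours_by_job):
    """Split metered cluster energy across jobs proportionally to GPU-hours.

    Proportional allocation is an assumed convention; it ignores
    differences in per-job power draw and idle capacity.
    """
    total_hours = sum(gpu_hours_by_job.values())
    if total_hours == 0:
        return {job: 0.0 for job in gpu_hours_by_job}
    return {
        job: total_energy_kwh * hours / total_hours
        for job, hours in gpu_hours_by_job.items()
    }


# Hypothetical month: 1,000 kWh metered, shared by three workloads.
usage = {"llm_finetune": 300, "vision_training": 100, "batch_inference": 100}
for job, kwh in attribute_energy(1000, usage).items():
    print(f"{job:16s} {kwh:7.1f} kWh")
```

A finer-grained scheme would weight each job by its measured power draw rather than by hours alone, at the cost of needing per-device telemetry.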

Emerging standards and frameworks for AI carbon footprint measurement are beginning to address these challenges by providing standardized methodologies for calculating and reporting AI-related emissions. These frameworks typically consider factors such as hardware utilization rates, electricity grid carbon intensity, cooling and infrastructure overhead, and the full lifecycle impact of specialized AI hardware manufacturing and disposal.

Real-time monitoring systems are increasingly being integrated into AI development workflows, providing developers with immediate feedback on the energy consumption and carbon footprint implications of their design decisions.

The development of carbon-aware computing systems represents an emerging approach to automatically optimizing AI workloads based on real-time electricity grid composition and renewable energy availability. These systems can automatically delay or migrate computational workloads to times and locations where cleaner energy sources are available, potentially reducing carbon emissions without requiring significant changes to existing AI development practices.
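The time-shifting half of this idea reduces to a small search problem: given an hourly forecast of grid carbon intensity, find the start time that minimizes average intensity over the job's duration. The sketch below assumes such a forecast is available as a plain list; the forecast values and the function name are hypothetical.

```python
def best_start_hour(forecast_g_per_kwh, duration_hours):
    """Return (start_index, mean_intensity) of the cleanest contiguous window."""
    n = len(forecast_g_per_kwh)
    if duration_hours <= 0 or duration_hours > n:
        raise ValueError("duration must be between 1 and the forecast length")

    # Sliding-window sum: update in O(1) per step instead of re-summing.
    window = sum(forecast_g_per_kwh[:duration_hours])
    best_sum, best_start = window, 0
    for start in range(1, n - duration_hours + 1):
        window += forecast_g_per_kwh[start + duration_hours - 1]
        window -= forecast_g_per_kwh[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start, best_sum / duration_hours


# Made-up hourly forecast (gCO2e/kWh) with a midday dip from solar output.
forecast = [500, 450, 300, 200, 250, 400, 550, 600]
start, mean_intensity = best_start_hour(forecast, duration_hours=3)
print(f"start at hour {start}, mean intensity {mean_intensity:.0f} g/kWh")
```

For this forecast the job would be deferred to hour 2, where the three-hour average is 250 g/kWh instead of about 417 g/kWh for an immediate start.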

Energy-Efficient Algorithm Design and Optimization

The pursuit of energy-efficient AI algorithms requires a fundamental shift in how we evaluate and optimize machine learning models, moving beyond traditional metrics focused solely on accuracy to incorporate energy consumption and computational efficiency as primary design constraints. This paradigm shift has led to the development of innovative algorithmic approaches that achieve comparable performance to traditional methods while requiring substantially fewer computational resources.

Neural architecture search techniques specifically designed to optimize for energy efficiency have emerged as powerful tools for automatically discovering model architectures that balance performance and environmental impact. These automated design systems can explore vast architectural search spaces to identify configurations that achieve desired performance levels while minimizing energy consumption, often discovering novel architectural patterns that human designers might not consider.

The implementation of dynamic neural networks that can adapt their computational requirements based on input complexity represents another promising avenue for improving AI energy efficiency. These adaptive systems can allocate computational resources more efficiently by using simpler processing pathways for straightforward inputs while reserving complex computations for challenging cases that require the full model capacity.
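A two-stage cascade is one of the simplest forms of this adaptive behavior: a cheap model answers when it is confident, and the expensive model runs only otherwise. The sketch below uses toy stand-in functions in place of real models, and the confidence threshold of 0.9 is an arbitrary assumption.

```python
def cascade_predict(x, cheap_model, full_model, threshold=0.9):
    """Use the cheap model when confident, otherwise fall back to the full model.

    Both models are callables returning (label, confidence).
    Returns (label, which_path) so callers can track how often each path runs.
    """
    label, confidence = cheap_model(x)
    if confidence >= threshold:
        return label, "cheap"
    label, _ = full_model(x)
    return label, "full"


# Toy stand-ins: the cheap model is only confident about short inputs.
def toy_cheap_model(text):
    return ("short", 0.95) if len(text) < 5 else ("unknown", 0.40)


def toy_full_model(text):
    return ("long", 0.99)


inputs = ["hi", "ok", "a much longer input", "yes"]
paths = [cascade_predict(x, toy_cheap_model, toy_full_model)[1] for x in inputs]
print(paths.count("cheap"), "of", len(inputs), "inputs avoided the full model")
```

The energy saving depends entirely on how often the cheap path suffices, which is why tracking the path taken is worth the extra return value.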

Federated learning approaches offer additional opportunities for energy-efficient AI by distributing computational workloads across multiple devices and reducing the need for centralized training on energy-intensive cloud infrastructure. This distributed approach can leverage the collective computational power of edge devices while minimizing data transmission requirements and reducing reliance on large-scale data center infrastructure, though the net energy effect depends on how efficient the participating devices are and how much communication each training round requires.

Renewable Energy Integration in AI Computing

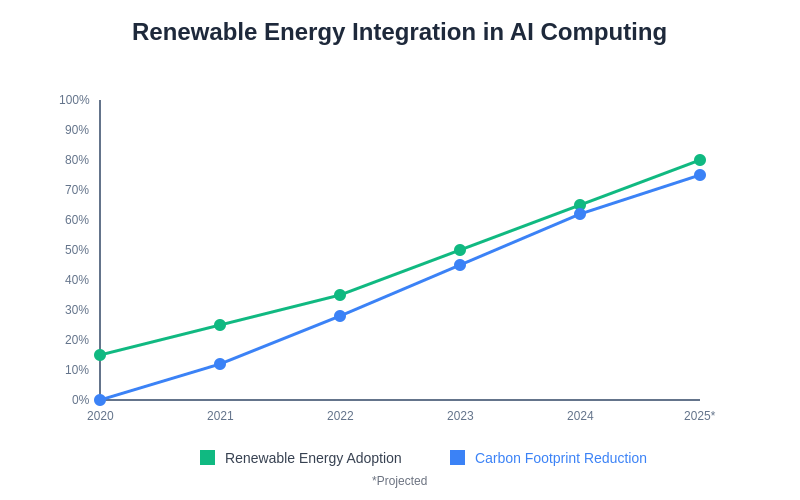

The integration of renewable energy sources into AI computing infrastructure represents one of the most impactful strategies for reducing the environmental footprint of artificial intelligence systems. Leading technology companies have demonstrated that large-scale AI operations can be powered entirely by renewable energy through strategic investments in solar, wind, and other clean energy technologies, providing a roadmap for industry-wide adoption of sustainable energy practices.

The intermittent nature of renewable energy sources presents unique challenges for AI workloads that traditionally require consistent, high-intensity computational resources over extended periods. Innovative approaches to managing this intermittency include the development of AI training algorithms that can pause and resume efficiently, energy storage systems that can buffer renewable energy for consistent availability, and workload scheduling systems that align computational demands with renewable energy availability patterns.
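Pause-and-resume training comes down to checkpointing state whenever clean energy is unavailable and reloading it later. The following sketch simulates that loop with a JSON checkpoint file; the `clean_energy_available` callback, the toy "loss" update, and the file layout are all assumptions made for illustration.

```python
import json
import os


def train(total_steps, checkpoint_path, clean_energy_available):
    """Run or resume training; return (state, finished).

    clean_energy_available(step) is a hypothetical callback that reports
    whether the next step may run. When it returns False, progress is
    saved to checkpoint_path and the function returns early.
    """
    state = {"step": 0, "loss": 1.0}
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            state = json.load(f)  # resume from the last saved step

    while state["step"] < total_steps:
        if not clean_energy_available(state["step"]):
            with open(checkpoint_path, "w") as f:
                json.dump(state, f)
            return state, False  # paused; call train() again later
        state["step"] += 1
        state["loss"] *= 0.99  # stand-in for one real optimization step

    return state, True
```

A first call such as `train(10, "ckpt.json", lambda step: step < 5)` would pause at step 5, and a later call with a callback that returns `True` would pick up from the saved step rather than starting over.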

Corporate renewable energy procurement strategies have evolved to include direct investment in renewable energy projects, long-term power purchase agreements with clean energy providers, and participation in renewable energy certificate markets. These approaches enable organizations to offset their AI-related energy consumption with equivalent renewable energy generation, even when direct renewable energy supply is not immediately available for their computing infrastructure.

The co-location of AI computing facilities with renewable energy generation sites represents an emerging trend that can maximize the environmental benefits of clean energy integration while minimizing transmission losses and grid infrastructure requirements. These integrated facilities can achieve exceptional energy efficiency by directly utilizing renewable energy output without the losses associated with long-distance power transmission and grid conversion processes.

The transformation of AI computing infrastructure through renewable energy integration demonstrates how technology companies can achieve both environmental sustainability goals and operational efficiency improvements through strategic clean energy investments and innovative infrastructure design.

Edge Computing and Distributed AI for Efficiency

Edge computing architectures offer substantial opportunities for improving AI energy efficiency by processing data closer to its source, reducing the energy requirements associated with data transmission and centralized cloud processing. This distributed approach to AI computing can significantly reduce the overall energy footprint of AI applications while also improving response times and reducing dependence on energy-intensive data center infrastructure.

The deployment of AI capabilities directly on user devices, including smartphones, tablets, and Internet of Things devices, enables intelligent processing without requiring constant communication with remote servers. This edge-based approach can reduce energy consumption by eliminating the need for data transmission over networks and reducing the computational load on centralized AI systems, though it also requires careful optimization of AI models for resource-constrained environments.

Collaborative edge computing systems that enable multiple devices to share AI computational workloads represent an innovative approach to distributed AI that can leverage the collective processing power of device networks while minimizing individual device energy consumption. These systems can dynamically distribute computational tasks based on device availability, battery status, and processing capabilities to optimize overall system efficiency.
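A greedy scheduler gives a feel for how such task distribution might work. The sketch below skips devices below a battery threshold and assigns each task to whichever remaining device would finish it soonest; the device fields (`battery`, `speed`), the threshold, and the heuristic itself are assumptions, not a description of any particular system.

```python
def assign_tasks(task_costs, devices, min_battery=0.2):
    """Greedily assign tasks to eligible devices by earliest finish time.

    task_costs: list of work units per task.
    devices: {name: {"battery": 0..1, "speed": work units per second}}.
    Returns {device_name: [task indices]} for eligible devices only.
    """
    eligible = {n: d for n, d in devices.items() if d["battery"] >= min_battery}
    if not eligible:
        raise ValueError("no device has enough battery")

    busy_until = {name: 0.0 for name in eligible}
    assignment = {name: [] for name in eligible}

    # Place the largest tasks first so small ones can fill the gaps.
    for index, cost in sorted(enumerate(task_costs), key=lambda t: -t[1]):
        name = min(eligible, key=lambda n: busy_until[n] + cost / eligible[n]["speed"])
        busy_until[name] += cost / eligible[name]["speed"]
        assignment[name].append(index)
    return assignment


devices = {
    "phone": {"battery": 0.8, "speed": 2.0},
    "tablet": {"battery": 0.5, "speed": 1.0},
    "sensor": {"battery": 0.1, "speed": 1.0},  # below threshold, left idle
}
print(assign_tasks([4, 2, 2], devices))
```

A real system would also have to account for the energy cost of moving data between devices, which this sketch ignores.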

The development of specialized edge AI hardware designed specifically for energy-efficient inference operations has enabled the deployment of sophisticated AI capabilities in battery-powered and resource-constrained environments. These specialized processors, including neural processing units and AI accelerators optimized for edge deployment, can deliver impressive AI performance while consuming minimal power compared to traditional computing hardware.

Lifecycle Assessment and Circular Economy in AI

A comprehensive understanding of AI environmental impact requires consideration of the entire lifecycle of AI systems, from initial research and development through hardware manufacturing, deployment, operation, and eventual disposal or recycling. This lifecycle perspective reveals environmental impact contributors that may not be immediately apparent when focusing solely on operational energy consumption, including embedded carbon in specialized hardware and the environmental costs of electronic waste disposal.

The manufacturing phase of AI hardware represents a substantial environmental impact that is often overlooked in discussions of AI sustainability. The production of graphics processing units, specialized AI accelerators, and other computing hardware requires energy-intensive manufacturing processes, rare earth materials, and complex supply chains that contribute significantly to the overall environmental footprint of AI systems.

Strategies for extending the useful life of AI hardware through efficient utilization, hardware sharing, and multi-generational deployment can substantially improve the environmental efficiency of AI systems by amortizing manufacturing-related environmental impacts across longer operational periods and multiple use cases. These approaches include the development of hardware-agnostic AI software that can efficiently utilize diverse computing resources and the implementation of hardware sharing platforms that maximize utilization rates.
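The amortization argument is easy to check numerically: embodied carbon per useful hour falls as either service life or utilization rises. The figures below, including the 1,500 kg embodied footprint, are hypothetical placeholders chosen only to show the shape of the relationship.

```python
HOURS_PER_YEAR = 365 * 24


def embodied_kg_per_useful_hour(embodied_kg, lifetime_years, utilization):
    """Spread manufacturing emissions over the hours a device does useful work."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    if lifetime_years <= 0:
        raise ValueError("lifetime_years must be positive")
    useful_hours = lifetime_years * HOURS_PER_YEAR * utilization
    return embodied_kg / useful_hours


# Hypothetical accelerator with 1,500 kg CO2e of manufacturing emissions.
short_idle = embodied_kg_per_useful_hour(1500, lifetime_years=3, utilization=0.5)
long_busy = embodied_kg_per_useful_hour(1500, lifetime_years=5, utilization=0.8)
print(f"3 years at 50%: {short_idle:.3f} kg/h")
print(f"5 years at 80%: {long_busy:.3f} kg/h")
print(f"reduction: {1 - long_busy / short_idle:.0%}")
```

Under these assumptions, extending service life and raising utilization together cut the embodied share per useful hour by more than half.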

The emerging concept of circular economy principles applied to AI infrastructure emphasizes the importance of designing systems for longevity, repairability, and recyclability. This approach includes the development of modular AI hardware that can be upgraded incrementally rather than replaced entirely, the implementation of hardware leasing models that incentivize efficient utilization, and the establishment of comprehensive recycling programs for end-of-life AI equipment.

The comprehensive lifecycle assessment of AI systems reveals that while operational energy consumption represents the largest single environmental impact category, the cumulative effects of hardware manufacturing, transportation, and disposal create substantial additional environmental considerations that must be addressed through holistic sustainability strategies.

Policy and Regulatory Frameworks for Sustainable AI

The development of effective policy and regulatory frameworks for sustainable AI requires careful consideration of the complex interplay between technological innovation, environmental protection, and economic competitiveness. Governments worldwide are beginning to recognize the environmental implications of AI development and are exploring regulatory approaches that can incentivize sustainable practices without stifling technological advancement or economic growth.

Carbon pricing mechanisms and emissions trading systems provide market-based incentives for reducing AI-related greenhouse gas emissions by making carbon-intensive AI development practices more expensive while rewarding organizations that implement energy-efficient and renewable-powered AI systems. These economic instruments can drive innovation in sustainable AI technologies by creating clear financial incentives for environmental responsibility.

Mandatory environmental impact reporting requirements for large AI systems could provide transparency and accountability for AI-related environmental impacts while enabling better informed decision-making by organizations and policymakers. These reporting frameworks would need to address the technical challenges of accurately measuring AI environmental impact while providing standardized metrics that enable meaningful comparisons across different AI systems and applications.

International cooperation on AI sustainability standards and best practices can help ensure that environmental considerations are integrated into AI development practices globally, preventing the displacement of environmental impacts to regions with less stringent environmental regulations. This cooperation might include the development of international standards for AI environmental impact measurement, mutual recognition of sustainability certifications, and collaborative research programs focused on sustainable AI technologies.

Industry Initiatives and Corporate Responsibility

Leading technology companies have launched ambitious initiatives to address the environmental impact of their AI operations, setting industry precedents for corporate responsibility in AI sustainability. These initiatives often include commitments to carbon neutrality or carbon negativity for AI operations, substantial investments in renewable energy infrastructure, and the development of more energy-efficient AI technologies and practices.

Industry consortiums and collaborative initiatives are emerging to address AI sustainability challenges that require coordinated action across multiple organizations and stakeholders. These collaborative efforts include the development of shared best practices for energy-efficient AI development, joint investments in sustainable AI research, and the establishment of industry-wide standards for measuring and reporting AI environmental impact.

Corporate sustainability reporting increasingly includes specific metrics related to AI environmental impact, reflecting growing stakeholder interest in the environmental implications of AI investments and operations. These reporting practices help create transparency and accountability for AI-related environmental impacts while providing investors and other stakeholders with information needed to make informed decisions about AI investments.

The integration of environmental considerations into AI product development processes is becoming a standard practice among leading technology companies, with sustainability assessments being conducted alongside traditional performance and cost evaluations. This integration ensures that environmental impact is considered as a primary design constraint rather than an afterthought in AI development processes.

Future Directions and Emerging Technologies

The future of sustainable AI computing is being shaped by emerging technologies and research directions that promise to dramatically reduce the environmental impact of artificial intelligence systems while maintaining or improving their capabilities. These innovations span multiple domains, from novel computing paradigms to advanced materials science and quantum computing applications.

Neuromorphic computing represents one of the most promising approaches to energy-efficient AI, with brain-inspired computing architectures that can potentially reduce energy consumption by orders of magnitude compared to traditional digital computing systems. These systems mimic the energy-efficient processing patterns of biological neural networks and offer the potential for AI systems that consume power levels comparable to biological brains rather than traditional computers.

Quantum computing applications to machine learning and AI could fundamentally transform the energy requirements of certain AI workloads by leveraging quantum mechanical principles to solve specific problem classes more efficiently than classical computers. Quantum computing technology is still in its early stages, and while research suggests that quantum algorithms could offer large, in some cases exponential, speedups for specific problem classes, a practical advantage for mainstream AI workloads has yet to be demonstrated.

Advanced materials research is contributing to AI sustainability through the development of more energy-efficient computing hardware, including superconducting processors that operate at near-zero electrical resistance and novel semiconductor materials that can improve the energy efficiency of AI accelerators. These materials innovations could enable the development of AI hardware that delivers substantially improved performance per unit of energy consumed.

The convergence of AI and renewable energy technologies is creating opportunities for intelligent energy systems that can optimize renewable energy generation, storage, and distribution while using AI-powered demand forecasting and load balancing to minimize energy waste. These integrated systems demonstrate how AI can contribute to overall energy system efficiency rather than simply consuming energy for computational purposes.

Practical Implementation Strategies

Organizations seeking to implement sustainable AI practices can benefit from a systematic approach that addresses multiple aspects of AI development and deployment, from initial project planning through ongoing operations and maintenance. This comprehensive approach requires coordination across technical teams, procurement departments, facilities management, and executive leadership to ensure that sustainability considerations are integrated throughout the organization’s AI initiatives.

The establishment of internal carbon accounting systems specifically designed for AI workloads enables organizations to track and manage their AI-related environmental impact with the same rigor applied to other business metrics. These systems should include real-time monitoring of energy consumption, automated calculation of carbon footprint based on grid composition and renewable energy utilization, and reporting capabilities that enable informed decision-making about AI project priorities and resource allocation.

Technical implementation strategies for sustainable AI include the adoption of energy-efficient model architectures, the implementation of automated hyperparameter optimization systems that consider energy consumption alongside performance metrics, and the deployment of carbon-aware scheduling systems that automatically optimize AI workloads based on renewable energy availability and grid carbon intensity.
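Energy-aware hyperparameter selection can be as simple as changing the selection rule: instead of taking the single most accurate trial, take the least energy-hungry trial among those within a small tolerance of the best accuracy. The sketch below assumes each trial has already been run and logged with its accuracy and measured energy; the trial data and the 0.01 tolerance are made up.

```python
def pick_config(trials, accuracy_tolerance=0.01):
    """Choose the lowest-energy trial whose accuracy is close to the best.

    trials: list of dicts with "name", "accuracy", and "energy_kwh" keys.
    """
    if not trials:
        raise ValueError("no trials to choose from")
    best_accuracy = max(t["accuracy"] for t in trials)
    good_enough = [t for t in trials if t["accuracy"] >= best_accuracy - accuracy_tolerance]
    return min(good_enough, key=lambda t: t["energy_kwh"])


# Hypothetical results from three completed trials.
trials = [
    {"name": "large", "accuracy": 0.920, "energy_kwh": 120},
    {"name": "medium", "accuracy": 0.915, "energy_kwh": 40},
    {"name": "small", "accuracy": 0.880, "energy_kwh": 10},
]
choice = pick_config(trials)
print(choice["name"], choice["accuracy"], choice["energy_kwh"])
```

Here the medium configuration wins: it gives up half a percentage point of accuracy relative to the best trial while using a third of the energy.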

Organizational policies and procedures can support sustainable AI practices by establishing clear guidelines for AI project evaluation that include environmental impact assessment, requiring justification for energy-intensive AI applications, and incentivizing the development of energy-efficient AI solutions through recognition programs and resource allocation decisions.

The environmental implications of artificial intelligence represent both significant challenges and unprecedented opportunities for the technology industry to demonstrate leadership in sustainable innovation. As AI systems become increasingly powerful and ubiquitous, the decisions made today regarding AI sustainability will have lasting impacts on global environmental outcomes and the long-term viability of AI technologies as tools for addressing humanity’s greatest challenges.

The path forward requires continued innovation in energy-efficient AI algorithms, substantial investments in renewable energy infrastructure, and the development of comprehensive policy frameworks that support sustainable AI development while enabling continued technological advancement. Through coordinated action across industry, government, and research institutions, it is possible to realize the transformative potential of artificial intelligence while minimizing its environmental impact and contributing to global climate goals.

The success of sustainable AI initiatives will ultimately depend on the recognition that environmental responsibility and technological excellence are not competing priorities but complementary objectives that can drive innovation, reduce costs, and create competitive advantages for organizations that embrace comprehensive sustainability strategies. The future of AI lies not in choosing between environmental protection and technological advancement, but in demonstrating how these goals can be achieved simultaneously through intelligent design, strategic resource allocation, and unwavering commitment to sustainable practices.

Disclaimer

This article is for informational purposes only and does not constitute professional environmental or technical advice. The views expressed are based on current understanding of AI technologies and their environmental implications. Readers should conduct their own research and consult with appropriate experts when implementing AI sustainability strategies. Environmental impact assessments may vary significantly depending on specific technologies, deployment scenarios, and local conditions.