The rapid advancement of artificial intelligence technology has brought unprecedented opportunities for innovation and societal transformation, yet it has simultaneously created complex ethical challenges that demand careful consideration and structured governance. AI Ethics Boards have emerged as essential institutions within organizations and governments worldwide, serving as guardians of responsible AI development and implementation. These specialized committees represent a critical response to the growing recognition that artificial intelligence systems, while powerful tools for progress, must be developed and deployed with careful attention to their potential impacts on individuals, communities, and society as a whole.

The establishment of AI Ethics Boards reflects a fundamental shift in how technology companies, research institutions, and government agencies approach the development of AI systems, moving beyond purely technical considerations to encompass broader questions of fairness, transparency, accountability, and social responsibility.

The emergence of AI Ethics Boards represents more than just a procedural response to regulatory pressure or public scrutiny. These bodies embody a commitment to proactive ethical stewardship that recognizes the profound influence artificial intelligence systems can have on human lives and societal structures. As AI technologies become increasingly integrated into critical decision-making processes across healthcare, finance, criminal justice, employment, and education, the need for systematic ethical oversight has become not just advisable but essential for maintaining public trust and ensuring beneficial outcomes for all stakeholders.

The Foundation of AI Ethics Governance

AI Ethics Boards serve as the cornerstone of responsible AI governance frameworks, providing structured oversight and guidance for the development, deployment, and ongoing management of artificial intelligence systems. These interdisciplinary committees bring together diverse expertise from fields including computer science, philosophy, law, social sciences, and domain-specific areas to ensure comprehensive evaluation of AI systems from multiple perspectives. The fundamental purpose of these boards extends beyond simple compliance checking to encompass proactive identification of potential ethical issues, development of organizational ethical standards, and creation of processes that embed ethical considerations throughout the AI development lifecycle.

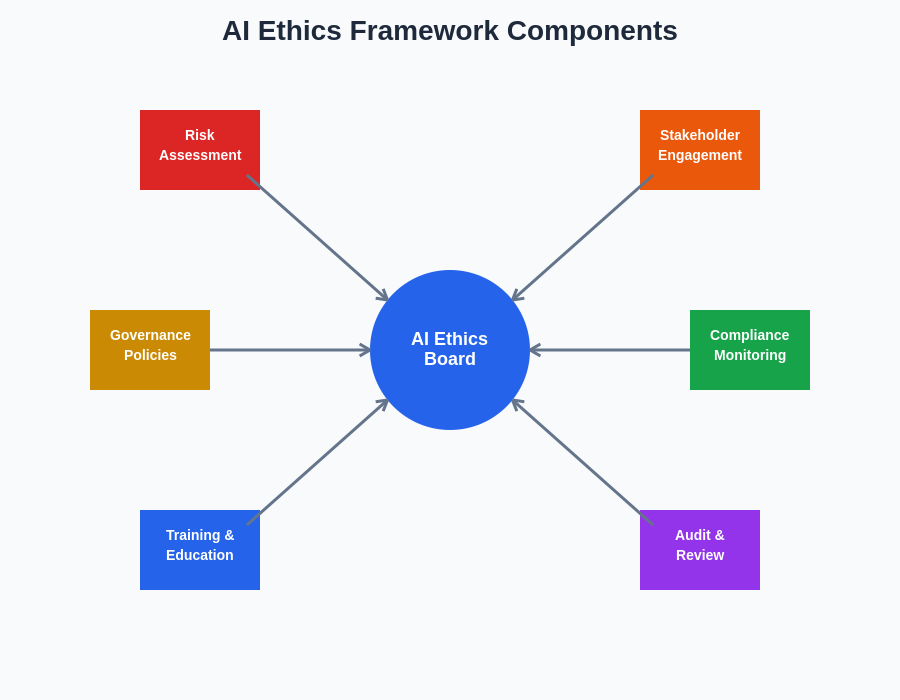

The governance framework established by AI Ethics Boards typically encompasses several key components, including ethical principles and guidelines, risk assessment methodologies, review processes for AI projects, incident response procedures, and continuous monitoring mechanisms. These frameworks are designed to be both comprehensive enough to address the full spectrum of ethical considerations and flexible enough to adapt to rapidly evolving AI technologies and emerging ethical challenges. The most effective AI Ethics Boards operate with clear mandates, adequate resources, and meaningful authority to influence AI development decisions within their organizations.

The establishment of robust governance structures requires careful consideration of the board’s composition, scope of authority, decision-making processes, and relationship with other organizational functions. Successful AI Ethics Boards must strike a delicate balance between providing meaningful oversight and enabling innovation, ensuring that ethical considerations enhance rather than hinder the development of beneficial AI technologies. This balance requires ongoing dialogue between technical teams, ethics experts, and organizational leadership to create governance structures that are both practically implementable and ethically sound.

Composition and Structure of Effective Ethics Boards

The effectiveness of an AI Ethics Board depends heavily on the diversity and expertise of its members, who must collectively possess the knowledge and perspective necessary to address the multifaceted nature of AI ethics challenges. Successful boards typically include technical experts who understand the capabilities and limitations of AI systems, ethicists and philosophers who can provide theoretical grounding for ethical decision-making, legal professionals who understand regulatory requirements and liability issues, social scientists who can assess societal impacts, and domain experts from areas where AI systems will be deployed.

The inclusion of diverse perspectives ensures that ethics boards can identify potential issues that might be overlooked by homogeneous groups and develop solutions that account for the varied ways AI systems might impact different communities and stakeholders.

Beyond professional expertise, effective AI Ethics Boards must also consider demographic diversity to ensure that the perspectives of different communities are represented in ethical deliberations. This includes consideration of gender, ethnicity, age, socioeconomic background, and geographic representation, as well as inclusion of voices from communities that may be disproportionately affected by AI systems. The goal is to create a board composition that reflects the diversity of stakeholders who will be impacted by AI technologies and can therefore provide comprehensive assessment of ethical implications.

The structural organization of AI Ethics Boards varies depending on organizational context, but most effective boards establish clear roles and responsibilities, regular meeting schedules, defined decision-making processes, and mechanisms for escalating complex or controversial issues. Many boards operate with subcommittees focused on specific areas such as algorithmic bias, privacy protection, or sector-specific applications, allowing for more detailed examination of specialized ethical issues while maintaining overall coherence in the board’s approach to AI governance.

Core Principles and Frameworks for AI Ethics

AI Ethics Boards operate according to foundational principles that guide their decision-making and provide consistency in their approach to evaluating AI systems and practices. While specific principles may vary between organizations, most ethics boards embrace core concepts including fairness and non-discrimination, transparency and explainability, accountability and responsibility, privacy and data protection, human autonomy and dignity, and beneficence and non-maleficence. These principles serve as both aspirational goals and practical criteria for evaluating AI systems throughout their development and deployment lifecycle.

The principle of fairness and non-discrimination requires AI systems to treat all individuals and groups equitably, avoiding bias that could result in unfair advantages or disadvantages based on protected characteristics or other morally irrelevant factors. This principle encompasses both individual fairness, ensuring that similar individuals are treated similarly, and group fairness, ensuring that different demographic groups experience equitable outcomes from AI systems. Implementing this principle requires careful attention to data collection practices, algorithm design, and ongoing monitoring of system performance across different populations.
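To make the idea of group fairness concrete, the following is a minimal sketch of one possible check: comparing the rate of favorable decisions across demographic groups, often called demographic parity. It is only one of several competing fairness definitions, and the function names, group labels, and sample data are hypothetical, chosen purely for illustration.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of favorable decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Return the difference between the highest and lowest group selection rate."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions (1 = favorable, 0 = unfavorable) and group labels.
decisions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates, gap)
```

A gap near zero suggests similar favorable-decision rates across groups under this particular definition. What size of gap is acceptable, and whether this metric is the right one for a given system, remains a judgment for the board and the technical team.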

Transparency and explainability principles demand that AI systems operate in ways that can be understood and scrutinized by relevant stakeholders, including users, affected parties, and regulatory authorities. This principle recognizes that trust in AI systems depends on the ability of stakeholders to understand how these systems make decisions and to verify that they operate according to stated principles and requirements. The implementation of transparency requirements must balance the need for understanding with considerations of intellectual property protection, competitive advantage, and security concerns.

The comprehensive framework for AI ethics encompasses multiple interconnected components that work together to ensure responsible AI development and deployment. Each component plays a crucial role in creating a robust governance structure that addresses the complex ethical challenges inherent in artificial intelligence systems.

Risk Assessment and Management Processes

Effective AI Ethics Boards implement systematic risk assessment processes that identify, evaluate, and mitigate potential ethical risks associated with AI systems throughout their lifecycle. These processes begin during the earliest stages of AI system design and continue through development, testing, deployment, and ongoing operation. The risk assessment framework typically encompasses multiple categories of potential harm, including individual harm such as discrimination or privacy violations, societal harm such as job displacement or social manipulation, and systemic harm such as erosion of democratic institutions or concentration of power.

The risk assessment process involves both qualitative and quantitative evaluation methods, drawing on established risk management frameworks while adapting them to address the unique characteristics of AI systems. Qualitative assessment focuses on identifying potential ethical issues through stakeholder consultation, scenario analysis, and expert judgment, while quantitative assessment utilizes metrics and measurement tools to evaluate the likelihood and magnitude of potential harms. The combination of these approaches provides a comprehensive understanding of ethical risks that can inform decision-making about AI system development and deployment.
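As an illustration of the quantitative side of this process, the sketch below scores each risk as likelihood multiplied by magnitude and ranks the results. The 1-to-5 scales, the review threshold of 12, and the example risks are all assumptions made for illustration, not values drawn from any particular framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EthicalRisk:
    name: str
    category: str    # e.g. "individual", "societal", or "systemic"
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    magnitude: int   # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        """Simple risk score: likelihood times magnitude."""
        return self.likelihood * self.magnitude


def rank_risks(risks):
    """Return risks sorted from highest to lowest score."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


def needs_board_review(risk, threshold=12):
    """Flag risks whose score meets or exceeds the (assumed) review threshold."""
    return risk.score >= threshold


# Hypothetical risk register for a single AI system.
register = [
    EthicalRisk("privacy leak", "individual", likelihood=2, magnitude=5),
    EthicalRisk("biased screening", "individual", likelihood=4, magnitude=4),
    EthicalRisk("workforce displacement", "societal", likelihood=3, magnitude=3),
]

ranked = rank_risks(register)
flagged = [risk.name for risk in ranked if needs_board_review(risk)]
print([(risk.name, risk.score) for risk in ranked], flagged)
```

A score like this is a triage aid rather than a verdict. The qualitative inputs described above, such as stakeholder consultation and expert judgment, would typically inform both the ratings and the final decision.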

Risk mitigation strategies developed by AI Ethics Boards typically include technical measures such as algorithmic adjustments to reduce bias, procedural measures such as enhanced testing and validation processes, and governance measures such as ongoing monitoring and audit requirements. The selection and implementation of appropriate mitigation strategies require careful consideration of their effectiveness, feasibility, and potential unintended consequences. Successful risk management also requires mechanisms for updating and refining mitigation strategies as new information becomes available and as AI systems evolve over time.

Stakeholder Engagement and Public Participation

AI Ethics Boards increasingly recognize the importance of engaging with diverse stakeholders and facilitating public participation in AI governance processes. This engagement extends beyond traditional organizational boundaries to include affected communities, civil society organizations, academic researchers, and members of the general public who may be impacted by AI systems. Stakeholder engagement serves multiple purposes, including gathering diverse perspectives on ethical issues, building public trust in AI governance processes, and ensuring that governance decisions reflect the values and priorities of affected communities.

Effective stakeholder engagement requires proactive outreach to identify and include voices that might not otherwise be represented in AI governance processes, particularly those from marginalized or vulnerable communities that may be disproportionately affected by AI systems.

Public participation mechanisms employed by AI Ethics Boards include public consultations, citizen panels, community workshops, online surveys, and formal comment periods on proposed policies or guidelines. These mechanisms must be designed to be accessible and inclusive, accounting for differences in technical knowledge, language, cultural background, and available time and resources. The challenge lies in creating meaningful opportunities for participation while managing the practical constraints of decision-making timelines and resource limitations.

The integration of stakeholder input into AI governance processes requires careful consideration of how diverse perspectives and concerns can be synthesized into actionable guidance for AI development and deployment. This process often involves trade-offs between competing values or interests, requiring ethics boards to develop transparent methods for weighing different considerations and explaining their decision-making rationale to stakeholders and the broader public.

Implementation Challenges and Solutions

The implementation of AI Ethics Board recommendations and governance frameworks faces numerous challenges that require creative and adaptive solutions. Technical challenges arise from the complexity of AI systems and the difficulty of translating ethical principles into specific technical requirements or constraints. Many ethical concepts, such as fairness or transparency, do not have universally agreed-upon technical definitions, requiring ethics boards to work closely with technical teams to develop practically implementable approaches that align with ethical principles.

Organizational challenges stem from the need to integrate ethical considerations into existing business processes, development workflows, and decision-making structures. This integration often requires changes to organizational culture, resource allocation, performance metrics, and accountability mechanisms. Resistance to these changes may arise from concerns about increased costs, slower development timelines, or reduced flexibility in system design. Successful implementation requires strong leadership support, clear communication about the benefits of ethical AI practices, and demonstration of how ethical considerations can enhance rather than hinder business objectives.

Resource constraints represent another significant implementation challenge, as effective AI governance requires substantial investments in personnel, tools, processes, and ongoing monitoring activities. Organizations must balance the costs of comprehensive ethical oversight against other priorities while ensuring that resource limitations do not compromise the effectiveness of governance mechanisms. Solutions include leveraging automated tools for routine monitoring tasks, developing shared resources and expertise across organizations, and focusing resources on the highest-risk AI applications.

The rapidly evolving nature of AI technology creates additional implementation challenges, as governance frameworks must adapt to new capabilities, applications, and ethical challenges that emerge as the field advances. This requires ethics boards to maintain awareness of technological developments, engage with research communities, and develop flexible governance approaches that can evolve with changing circumstances while maintaining consistency in fundamental ethical principles.

Measuring Success and Impact

The effectiveness of AI Ethics Boards and their governance frameworks must be evaluated through comprehensive measurement and assessment processes that capture both immediate outcomes and longer-term impacts. Success metrics for AI ethics governance encompass multiple dimensions, including process metrics that evaluate the functioning of governance mechanisms, outcome metrics that assess the ethical performance of AI systems, and impact metrics that measure broader effects on stakeholders and society.

Process metrics focus on the operational effectiveness of ethics boards and governance frameworks, including measures such as the frequency and quality of ethics reviews, stakeholder engagement levels, compliance with established procedures, and timeliness of decision-making. These metrics help identify areas where governance processes may need refinement or additional resources and provide accountability for the functioning of ethics oversight mechanisms.
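One way a process metric such as timeliness of decision-making might be computed is sketched below. The review dates and the 30-day target are hypothetical, and the helper names are illustrative only.

```python
from datetime import date
from statistics import median

# Hypothetical ethics reviews as (date submitted, date decided) pairs.
reviews = [
    (date(2024, 1, 8), date(2024, 1, 19)),
    (date(2024, 2, 1), date(2024, 2, 29)),
    (date(2024, 3, 4), date(2024, 3, 15)),
    (date(2024, 4, 2), date(2024, 5, 14)),
]


def turnaround_days(reviews):
    """Return the number of days each review took from submission to decision."""
    return [(decided - submitted).days for submitted, decided in reviews]


def on_time_share(reviews, target_days=30):
    """Return the fraction of reviews decided within the (assumed) target."""
    days = turnaround_days(reviews)
    return sum(1 for d in days if d <= target_days) / len(days)


days = turnaround_days(reviews)
median_days = median(days)
share = on_time_share(reviews)
print(days, median_days, share)
```

Figures like these capture only speed. They would need to be read alongside measures of review quality and stakeholder engagement to give a fair picture of how the board is functioning.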

Outcome metrics evaluate the direct results of AI ethics governance on the systems and practices being overseen. These measures include assessments of algorithmic bias reduction, privacy protection effectiveness, transparency improvements, and incident prevention or response. The development of appropriate outcome metrics often requires collaboration between ethics boards and technical teams to identify measurable indicators that meaningfully reflect ethical principles and values.

Tracking AI ethics performance benefits from a dashboard that covers multiple dimensions, from process effectiveness to stakeholder satisfaction and societal impact. Covering all of these dimensions helps verify that governance efforts are producing real improvements in AI system ethics and accountability.

Impact metrics assess the broader effects of AI ethics governance on stakeholders, organizations, and society. These measures are often more challenging to quantify but are essential for understanding the ultimate value of ethics oversight efforts. Impact metrics may include measures of public trust in AI systems, equity in AI system outcomes across different populations, organizational reputation and stakeholder confidence, and contribution to broader societal goals such as justice, equality, and human flourishing.

International Cooperation and Standards Development

The global nature of AI development and deployment necessitates international cooperation in AI ethics governance, as ethical challenges transcend national boundaries and require coordinated responses. AI Ethics Boards increasingly participate in international networks and collaborative initiatives that facilitate sharing of best practices, development of common standards, and coordination of governance approaches across different jurisdictions and sectors. These collaborative efforts help ensure that AI ethics governance keeps pace with the global development and deployment of AI technologies.

International standards development represents a critical area of cooperation, as common standards can facilitate interoperability, reduce compliance complexity for multinational organizations, and ensure consistent ethical protections for individuals regardless of their location. Organizations such as the International Organization for Standardization, the Institute of Electrical and Electronics Engineers, and various professional associations are developing technical standards for AI systems that incorporate ethical requirements and guidelines.

Regulatory harmonization efforts seek to align different national and regional approaches to AI governance while respecting cultural differences and local priorities. This work involves sharing information about regulatory approaches, identifying areas of common concern, and developing mechanisms for mutual recognition of compliance with ethical standards. The challenge lies in balancing the benefits of harmonization with respect for sovereignty and cultural differences in ethical values and priorities.

Cross-border collaboration also addresses specific ethical challenges that arise from the global deployment of AI systems, such as data transfers across jurisdictions with different privacy requirements, coordination of responses to AI-related incidents that affect multiple countries, and prevention of regulatory arbitrage that could undermine ethical protections.

Sector-Specific Applications and Considerations

Different sectors face unique ethical challenges in AI deployment, requiring AI Ethics Boards to develop specialized expertise and tailored governance approaches for various application domains. Healthcare AI systems raise distinctive issues related to patient safety, medical decision-making, privacy of sensitive health information, and equity in access to medical AI technologies. Financial services AI applications involve concerns about discriminatory lending practices, systemic risk, consumer protection, and market manipulation. Criminal justice AI systems present challenges related to due process, presumption of innocence, proportionality of punishment, and the potential for algorithmic bias to perpetuate or exacerbate existing inequalities in the justice system.

Educational AI applications require consideration of student privacy, academic integrity, equity in educational opportunities, and the appropriate role of AI in educational assessment and decision-making. Autonomous vehicle systems raise questions about liability in accidents, trade-offs between different types of safety risks, and public acceptance of AI-driven transportation systems. Each of these sectors requires ethics boards to develop specialized knowledge and adapt general ethical principles to the specific context and requirements of the domain.

The development of sector-specific guidance involves collaboration between AI Ethics Boards and domain experts, regulatory authorities, professional associations, and affected communities. This collaborative approach ensures that ethical guidance is both technically feasible and practically relevant to the challenges faced by organizations operating in specific sectors. The resulting guidance must balance general ethical principles with sector-specific requirements and constraints while maintaining consistency in fundamental ethical commitments.

Future Directions and Emerging Challenges

The field of AI ethics governance continues to evolve as new technologies emerge, societal understanding of AI impacts deepens, and governance frameworks mature through practical experience. Emerging AI technologies such as large language models and generative AI systems, along with the prospect of artificial general intelligence, present new ethical challenges that will require continued adaptation of governance approaches. These technologies raise questions about intellectual property, authenticity, misinformation, and the appropriate boundaries of AI capabilities that were not previously central to AI ethics discussions.

The increasing sophistication and autonomy of AI systems will likely require evolution in how ethics boards conceptualize responsibility and accountability for AI decisions and actions. As AI systems become capable of more complex and independent decision-making, traditional models of human oversight and control may need to be supplemented or replaced with new approaches to ensuring ethical behavior. This evolution will require careful consideration of how to maintain human agency and dignity while leveraging the capabilities of advanced AI systems.

The democratization of AI development through increasingly accessible tools and platforms creates new governance challenges as AI capabilities spread beyond traditional technology companies and research institutions. AI Ethics Boards will need to develop approaches that can provide ethical guidance and oversight for a much broader and more diverse community of AI developers, including small organizations, individual developers, and non-technical users who may lack specialized knowledge of AI ethics principles and practices.

Climate change and environmental sustainability are emerging as important considerations for AI ethics governance, as the energy consumption and carbon footprint of large-scale AI systems become increasingly significant. Ethics boards will need to integrate environmental considerations into their governance frameworks and develop approaches that balance the benefits of AI technologies against their environmental costs.

Building Organizational Culture for Ethical AI

The success of AI Ethics Boards ultimately depends on their ability to foster organizational cultures that prioritize ethical considerations in AI development and deployment. This cultural transformation requires moving beyond compliance-oriented approaches to create environments where ethical reasoning is integrated into daily decision-making processes and where all team members feel empowered and responsible for identifying and addressing ethical issues. Building such cultures requires sustained commitment from organizational leadership, comprehensive training and education programs, and structural changes that align incentives with ethical objectives.

Leadership commitment to AI ethics must be demonstrated through resource allocation, public statements, and decision-making that consistently prioritizes ethical considerations even when they conflict with short-term business interests. This commitment must be authentic and sustained, as organizational cultures are shaped more by observed behaviors than by stated policies or values. Leaders must model ethical decision-making and create environments where ethical concerns can be raised without fear of retaliation or career consequences.

Training and education programs play a crucial role in building organizational capacity for ethical AI development. These programs must address both technical aspects of implementing ethical principles in AI systems and broader questions about the societal impacts of AI technologies. Effective training programs are tailored to different roles and responsibilities within organizations, provide practical tools and frameworks for ethical decision-making, and are regularly updated to reflect evolving understanding of AI ethics challenges and best practices.

The integration of ethical considerations into organizational systems and processes ensures that ethics becomes a routine part of AI development rather than an afterthought or external constraint. This integration includes incorporating ethical review into project approval processes, including ethical metrics in performance evaluations, and creating feedback mechanisms that allow continuous learning and improvement in ethical practices.

Conclusion and Path Forward

AI Ethics Boards represent a critical component of the infrastructure needed to ensure that artificial intelligence technologies are developed and deployed in ways that benefit humanity while minimizing potential harms. The experiences of early ethics boards provide valuable lessons about effective governance structures, stakeholder engagement methods, and implementation strategies that can inform the continued evolution of AI ethics governance. As AI technologies continue to advance and their societal impacts become more pronounced, the role of ethics boards in providing oversight, guidance, and accountability will only become more important.

The path forward for AI ethics governance requires continued learning, adaptation, and collaboration across organizations, sectors, and national boundaries. This evolution must be informed by ongoing research into the effectiveness of different governance approaches, regular engagement with affected communities and stakeholders, and careful attention to emerging ethical challenges as they arise. The ultimate goal is to create governance frameworks that enable the beneficial development of AI technologies while protecting human values, rights, and dignity.

The success of AI Ethics Boards in fulfilling this mission will depend on their ability to remain relevant and effective as AI technologies evolve, to maintain public trust through transparent and accountable governance processes, and to demonstrate that ethical AI development is not only morally necessary but also practically achievable and economically viable. The continued development of AI ethics governance represents one of the most important challenges of our time, requiring sustained commitment, creativity, and collaboration from all stakeholders in the AI ecosystem.

Disclaimer

This article is for informational purposes only and does not constitute legal, professional, or policy advice. The views expressed are based on current understanding of AI ethics and governance practices. Readers should consult with qualified professionals and conduct their own research when developing AI ethics policies or governance frameworks. The effectiveness of AI ethics governance approaches may vary depending on organizational context, regulatory environment, and specific AI applications.