The rapid advancement of artificial intelligence has brought unprecedented capabilities to solve complex problems across industries, yet this progress has introduced a fundamental challenge that threatens the very foundation of AI adoption: the black box problem. As machine learning models become increasingly sophisticated and powerful, their decision-making processes have simultaneously become more opaque and difficult to understand, creating a critical need for explainable artificial intelligence that can bridge the gap between computational complexity and human comprehension.

The imperative for AI explainability extends far beyond academic curiosity, touching every aspect of how we deploy, regulate, and trust artificial intelligence in mission-critical applications where understanding the reasoning behind decisions can mean the difference between life and death, justice and discrimination, or success and catastrophic failure.

The Black Box Dilemma in Modern AI

The term “black box” in artificial intelligence refers to models whose internal workings are so complex or opaque that even their creators cannot easily explain how specific inputs lead to particular outputs. This phenomenon has become increasingly prevalent with the rise of deep learning networks, ensemble methods, and other sophisticated machine learning techniques that achieve remarkable performance at the cost of interpretability. The black box nature of these systems creates fundamental challenges for deployment in high-stakes environments where decision transparency is not merely desirable but absolutely essential.

The complexity of modern AI systems stems from their ability to identify and utilize patterns in data that are far too intricate for human perception and analysis. While this capability enables breakthrough performance in areas such as image recognition, natural language processing, and predictive analytics, it simultaneously creates a barrier to understanding that can undermine trust, accountability, and regulatory compliance. Organizations find themselves in the paradoxical position of deploying highly effective systems whose decision-making processes they cannot adequately explain to stakeholders, regulators, or even themselves.

The consequences of this opacity extend beyond mere inconvenience, potentially leading to biased decisions, regulatory violations, and loss of public trust in AI systems. Healthcare providers struggle to explain AI-driven diagnostic recommendations to patients, financial institutions cannot adequately justify AI-based loan decisions to regulators, and autonomous vehicle manufacturers face scrutiny over decisions made by algorithms they cannot fully interpret. This fundamental tension between performance and explainability has catalyzed an entire field of research dedicated to making artificial intelligence more transparent and accountable.

Foundations of Explainable Artificial Intelligence

Explainable Artificial Intelligence, commonly referred to as XAI, represents a systematic approach to developing AI systems that can provide clear, understandable explanations for their decisions and behaviors. This field encompasses a broad range of techniques, methodologies, and principles designed to make AI systems more transparent without necessarily sacrificing their performance or effectiveness. The foundation of XAI rests on the premise that artificial intelligence systems should be designed with inherent interpretability or equipped with mechanisms that can generate meaningful explanations for their outputs.

The development of explainable AI requires a fundamental shift in how we approach machine learning model design, moving from a purely performance-focused mindset to one that balances accuracy with interpretability and transparency.

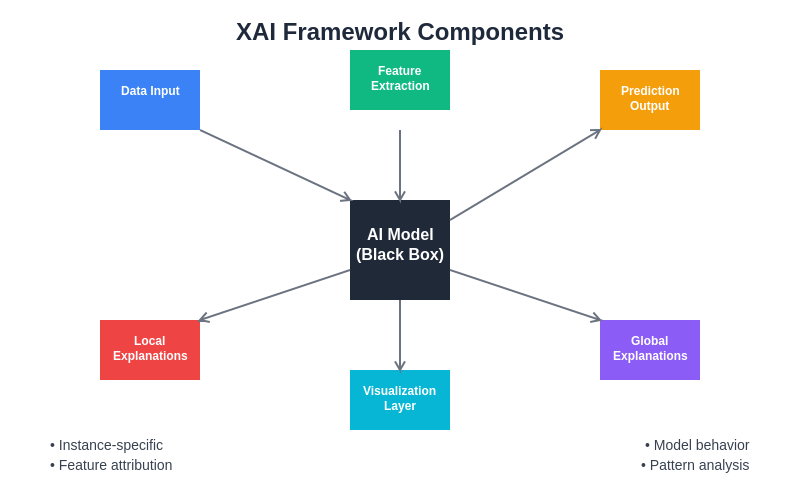

The theoretical framework of XAI distinguishes between several types of explainability, including global explanations that describe overall model behavior, local explanations that clarify specific individual predictions, and counterfactual explanations that illustrate how different inputs would lead to different outcomes. Each type of explanation serves different stakeholder needs and use cases, requiring sophisticated techniques and tools to generate meaningful insights that bridge the gap between complex mathematical operations and human understanding.
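To make the idea of a counterfactual explanation concrete, the sketch below asks how far a single input would have to move before a decision flips. It is a minimal illustration under stated assumptions: the linear scoring model, its weights, the approval threshold, and the feature names are all hypothetical, and the brute-force search over one feature stands in for the constrained optimization that practical counterfactual methods use.

```python
# Hypothetical linear credit model; weights and feature names are illustrative only.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.5}
BIAS = -1.0
THRESHOLD = 0.0  # a score at or above this counts as "approve"


def score(applicant):
    """Linear score of the hypothetical model."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def approve(applicant):
    return score(applicant) >= THRESHOLD


def counterfactual(applicant, feature, step, max_steps=1000):
    """Change one feature in increments of `step` until the decision flips.

    Returns the modified applicant, or None if no flip happens within max_steps.
    """
    original_decision = approve(applicant)
    candidate = dict(applicant)
    for _ in range(max_steps):
        candidate[feature] += step
        if approve(candidate) != original_decision:
            return candidate
    return None


applicant = {"income_k": 40, "debt_ratio": 0.5, "late_payments": 2}
flipped = counterfactual(applicant, "income_k", step=1)
print(approve(applicant), flipped)
```

For this rejected applicant the search reports the smallest whole-number income (in thousands) at which the hypothetical model approves, which reads naturally as "you would have been approved with an income of X". A real system would also restrict changes to features a person can act on and to plausible values.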

The comprehensive framework for explainable AI encompasses multiple interconnected components that work together to transform opaque model outputs into meaningful insights. This systematic approach ensures that explanations are generated consistently and can be effectively communicated to diverse stakeholders with varying technical backgrounds.

Techniques for Model Interpretation

The landscape of AI interpretation techniques spans a diverse array of approaches, each designed to address specific aspects of the explainability challenge. Feature importance analysis represents one of the most fundamental approaches, providing insights into which input variables have the greatest influence on model predictions. This technique can reveal patterns in how models weigh different factors, helping stakeholders understand the relative significance of various data elements in the decision-making process.
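One common, model-agnostic way to measure feature importance is permutation importance: shuffle one feature's values across the dataset, which breaks its link to the target, and measure how much the model's error grows. The sketch below implements that idea in plain Python; the toy model and synthetic data are hypothetical, and scikit-learn ships a tested implementation as `sklearn.inspection.permutation_importance`.

```python
import random


def permutation_importance(predict, rows, targets, n_repeats=20, seed=0):
    """Mean increase in mean squared error when each feature column is shuffled.

    `rows` is a list of equal-length tuples; `predict` maps one tuple to a number.
    """
    rng = random.Random(seed)

    def mse(data):
        return sum((predict(r) - t) ** 2 for r, t in zip(data, targets)) / len(targets)

    baseline = mse(rows)
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the target
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mse(shuffled) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


data_rng = random.Random(42)
rows = [tuple(data_rng.uniform(0.0, 1.0) for _ in range(3)) for _ in range(200)]


def model(row):
    # Feature 0 matters most, feature 1 a little, feature 2 not at all.
    return 3.0 * row[0] + 0.5 * row[1] + 0.0 * row[2]


targets = [model(r) for r in rows]
importances = permutation_importance(model, rows, targets)
print(importances)
```

The unused feature scores exactly zero because shuffling it never changes a prediction, while the feature with the largest weight causes the largest increase in error.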

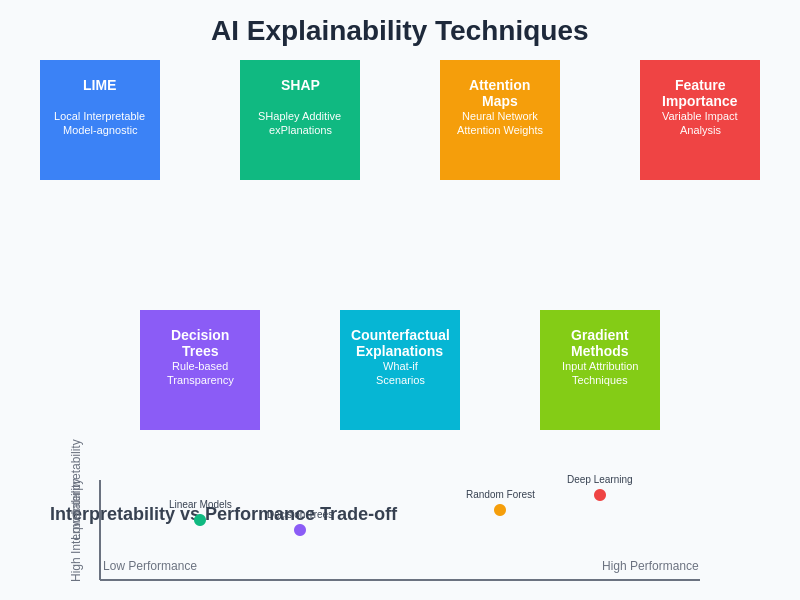

LIME, which stands for Local Interpretable Model-agnostic Explanations, offers a powerful methodology for explaining individual predictions by approximating the behavior of complex models with simpler, more interpretable models in the local vicinity of specific instances. This approach enables practitioners to understand why a particular decision was made for a specific case, providing granular insights that can be crucial for applications requiring detailed justification of individual outcomes.
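The core of this local approximation can be sketched in a few lines: sample points around the instance, weight them by proximity, and fit a weighted linear model whose slopes serve as the local explanation. This is a simplified, hypothetical illustration rather than the `lime` package's algorithm: it perturbs continuous features with Gaussian noise, uses an exponential kernel of our own choosing, and fits plain weighted least squares, whereas LIME itself works on interpretable feature representations and typically fits a regularized model limited to a few features.

```python
import math
import random


def solve(matrix, vector):
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(vector)
    aug = [list(row) + [vector[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    solution = [0.0] * n
    for r in range(n - 1, -1, -1):
        known = sum(aug[r][c] * solution[c] for c in range(r + 1, n))
        solution[r] = (aug[r][n] - known) / aug[r][r]
    return solution


def local_surrogate(predict, instance, n_samples=500, scale=0.5, kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear model to `predict` around `instance`.

    Returns (intercept, slopes); each slope is that feature's local effect.
    """
    rng = random.Random(seed)
    d = len(instance)
    # Accumulate the weighted normal equations (X^T W X) beta = X^T W y.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, scale) for _ in range(d)]
        point = [x + o for x, o in zip(instance, offsets)]
        weight = math.exp(-sum(o * o for o in offsets) / kernel_width ** 2)
        features = [1.0] + offsets  # leading 1.0 gives the intercept term
        y = predict(point)
        for i in range(d + 1):
            xtwy[i] += weight * features[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * features[i] * features[j]
    beta = solve(xtwx, xtwy)
    return beta[0], beta[1:]


def black_box(p):
    # Hypothetical nonlinear model: its gradient at (1, 2) is (2, 3).
    return p[0] ** 2 + 3.0 * p[1]


intercept, slopes = local_surrogate(black_box, [1.0, 2.0])
print(slopes)
```

The fitted slopes should land close to the model's local gradient at the chosen instance, even though the model as a whole is not linear; that local fidelity is the property LIME relies on.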

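SHAP, or SHapley Additive exPlanations, provides a unified framework for explaining model outputs based on Shapley values from cooperative game theory. Each feature is credited with its average marginal contribution to a prediction across all possible combinations of the other features, and the resulting attributions satisfy desirable properties such as efficiency, symmetry, and additivity; efficiency, for instance, guarantees that the attributions add up to the difference between the prediction and a baseline prediction. The SHAP framework has become particularly valuable because the same per-prediction values serve as local explanations and, aggregated across a dataset, as global ones, while its theoretical foundations give attributions a consistent meaning across different types of models and applications.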
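For a model with only a few inputs, Shapley values can be computed exactly by enumerating every coalition of features, which makes the efficiency property easy to check. The sketch below assumes one common convention, replacing "absent" features with values from a single baseline input; the model and numbers are hypothetical. The `shap` library does not enumerate coalitions like this: it relies on sampling-based or model-specific algorithms, because the number of coalitions doubles with every added feature.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(instance)

    def value(coalition):
        point = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(point)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                phi += weight * (value(coalition | {i}) - value(coalition))
        phis.append(phi)
    return phis


def model(x):
    # Hypothetical model with an interaction between features 0 and 1; feature 2 is unused.
    return 2.0 * x[0] + x[0] * x[1]


instance, baseline = [1.0, 3.0, 5.0], [0.0, 0.0, 0.0]
phis = shapley_values(model, instance, baseline)
print(phis)
```

Up to floating-point rounding, the attributions are 3.5, 1.5 and 0: feature 0 receives its own effect plus half of the interaction, feature 1 the other half, and the unused feature nothing. Their sum, 5.0, equals `model(instance) - model(baseline)`, which is exactly the efficiency property.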

Attention mechanisms, particularly prevalent in natural language processing and computer vision applications, offer another avenue for explainability by highlighting which parts of the input data the model focuses on when making decisions. These mechanisms provide intuitive visualizations that can help users understand the model’s reasoning process, particularly in applications involving text analysis, image recognition, and sequential data processing, although researchers continue to debate how faithfully attention weights reflect the factors that actually drive a prediction, so they are best treated as one signal among several.
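The weights such visualizations display are typically computed as a softmax over query-key similarity scores. The sketch below computes scaled dot-product attention weights for one query over a handful of tokens; the tokens and two-dimensional vectors are invented for illustration, whereas a real model learns these vectors and has many layers and heads, each producing its own weights.

```python
import math


def attention_weights(query, keys):
    """Scaled dot-product attention weights: softmax(q . k / sqrt(d)) over all keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    top = max(scores)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


tokens = ["the", "loan", "was", "denied"]
keys = [[0.1, 0.0], [0.9, 0.2], [0.0, 0.1], [1.0, 1.0]]  # hypothetical key vectors
query = [1.0, 1.0]  # hypothetical query vector

weights = attention_weights(query, keys)
for token, w in sorted(zip(tokens, weights), key=lambda tw: tw[1], reverse=True):
    print(f"{token:>8}: {w:.3f}")
```

The token whose key vector is most similar to the query receives the largest share of the weight, and the weights always sum to one, which is what makes them convenient to draw as a heat map over the input.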

The diverse landscape of explainability techniques offers different strengths and capabilities, with each method addressing specific aspects of the interpretability challenge. Understanding the trade-offs between interpretability and performance helps organizations select the most appropriate techniques for their specific use cases and requirements.

Interpretable Model Architectures

The pursuit of explainable AI has led to the development of inherently interpretable model architectures that prioritize transparency from the ground up rather than attempting to explain opaque systems after the fact. Decision trees represent one of the most intuitive examples of interpretable models, providing clear pathways from input features to final predictions through a series of easily understood decision rules. While individual decision trees may have limited capacity for complex pattern recognition, ensemble methods such as random forests combine many trees to achieve better performance, but at a real cost in transparency: a forest of hundreds of trees cannot be read the way a single tree can, so it is usually explained with aggregate tools such as feature importance rather than inspected directly.
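The "clear pathway" a single decision tree offers can be reported directly as the list of tests an input passed on its way to a leaf. The tree below is a hypothetical, hand-written example rather than a trained model, stored as nested dictionaries.

```python
# A hypothetical, hand-written tree; internal nodes test "feature <= threshold".
TREE = {
    "feature": "debt_ratio", "threshold": 0.4,
    "left": {
        "feature": "late_payments", "threshold": 1,
        "left": {"leaf": "approve"},
        "right": {"leaf": "review"},
    },
    "right": {"leaf": "decline"},
}


def explain_path(node, example):
    """Walk from the root to a leaf, recording each test the example satisfied."""
    rules = []
    while "leaf" not in node:
        name, threshold = node["feature"], node["threshold"]
        if example[name] <= threshold:
            rules.append(f"{name} <= {threshold}")
            node = node["left"]
        else:
            rules.append(f"{name} > {threshold}")
            node = node["right"]
    return node["leaf"], rules


prediction, path = explain_path(TREE, {"debt_ratio": 0.3, "late_payments": 2})
print(prediction, "because", " and ".join(path))
```

The explanation is the model itself: the printed conditions are exactly the rules that produced the prediction, with no approximation involved.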

Linear models, including logistic regression and linear regression, offer another class of inherently interpretable algorithms where the relationship between inputs and outputs can be expressed through simple mathematical equations. The sign of each coefficient shows the direction of a feature’s influence, and its size shows the change in the output (for logistic regression, in the log-odds) per unit of that feature, so magnitudes can be compared directly only when features are on comparable scales, for example after standardization. Within those limits, these models provide straightforward explanations that can be easily communicated to non-technical stakeholders.
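For logistic regression, each coefficient is a change in the log-odds of the predicted class per one-unit increase in a feature, so exponentiating it gives an odds ratio that is often easier to communicate. The coefficients below are hypothetical, not fitted to real data.

```python
import math

# Hypothetical fitted coefficients, in log-odds per unit of each feature.
COEFFICIENTS = {"income_k": 0.03, "debt_ratio": -2.5}
INTERCEPT = -0.5


def predict_proba(x):
    """Probability of the positive class under the logistic model."""
    z = INTERCEPT + sum(COEFFICIENTS[name] * x[name] for name in COEFFICIENTS)
    return 1.0 / (1.0 + math.exp(-z))


def odds(p):
    return p / (1.0 - p)


def odds_ratios():
    """exp(coefficient): factor by which the odds change per one-unit increase."""
    return {name: math.exp(c) for name, c in COEFFICIENTS.items()}


applicant = {"income_k": 50.0, "debt_ratio": 0.4}
print(odds_ratios())  # income_k above 1 (raises the odds), debt_ratio below 1 (lowers them)
```

Raising `income_k` by one unit multiplies the odds by exp(0.03), about 1.03, regardless of the starting point, which is what makes a coefficient a stable, global statement about the model.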

Rule-based systems represent another approach to interpretable AI, utilizing explicit logical rules that can be directly examined and understood by human experts. These systems excel in domains where expert knowledge can be codified into clear decision rules, offering complete transparency in their reasoning process while maintaining the flexibility to incorporate domain-specific insights and constraints.
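In a rule-based system the explanation is simply the rule that fired. The sketch below uses first-match semantics over an ordered list of hypothetical credit rules and returns the decision together with the name of the rule responsible; production rule engines add conflict resolution, priorities, and auditing that this omits.

```python
from typing import Callable, NamedTuple


class Rule(NamedTuple):
    name: str
    condition: Callable[[dict], bool]
    outcome: str


# Hypothetical rules, checked in order; the first one whose condition holds decides.
RULES = [
    Rule("R1: bankruptcy in the last two years", lambda a: a["recent_bankruptcy"], "decline"),
    Rule("R2: debt ratio above 0.5", lambda a: a["debt_ratio"] > 0.5, "decline"),
    Rule("R3: income of at least 30k and no late payments",
         lambda a: a["income_k"] >= 30 and a["late_payments"] == 0, "approve"),
]
FALLBACK = ("manual review", "R0: no rule matched")


def decide(applicant):
    """Return (outcome, explanation), where the explanation names the rule that fired."""
    for rule in RULES:
        if rule.condition(applicant):
            return rule.outcome, rule.name
    return FALLBACK


print(decide({"recent_bankruptcy": False, "debt_ratio": 0.3, "income_k": 45, "late_payments": 0}))
```

Because every outcome is tied to one named rule, a domain expert can audit or amend the logic directly, which is the transparency the paragraph above describes.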

Visualization and Communication Strategies

Effective communication of AI explanations requires sophisticated visualization techniques that can transform complex mathematical relationships into intuitive visual representations accessible to diverse audiences. Feature importance plots provide straightforward visualizations of which variables matter most in model decisions, using bar charts, heat maps, and other graphical representations to convey relative significance in an easily digestible format.
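The essence of a feature importance bar chart can be shown without a plotting library: the sketch below renders importances as horizontal text bars, sorted and scaled to the largest value. The feature names and scores are hypothetical; in practice a charting library would draw the bar charts and heat maps mentioned here.

```python
def text_bar_chart(importances, width=30):
    """Render feature importances as text bars, largest first, scaled to `width` characters."""
    largest = max(importances.values())
    lines = []
    for name, value in sorted(importances.items(), key=lambda item: item[1], reverse=True):
        length = round(width * value / largest) if largest > 0 else 0
        lines.append(f"{name:<15}{'#' * length} {value:.2f}")
    return "\n".join(lines)


# Hypothetical importance scores, e.g. from a permutation-importance run.
chart = text_bar_chart({"income_k": 0.21, "debt_ratio": 0.42, "late_payments": 0.07})
print(chart)
```

Sorting by value and scaling every bar to the largest one are the two choices that make such a chart readable at a glance.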

Partial dependence plots offer insights into how individual features influence model predictions across their entire range of values, enabling stakeholders to understand non-linear relationships and interaction effects that might not be apparent from simple feature importance measures. These visualizations help identify thresholds, optimal ranges, and unexpected patterns in how models respond to different input values.
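A partial dependence curve can be computed by fixing one feature at each value of a grid for every row in a dataset and averaging the model's predictions. The sketch below does this for a hypothetical risk model with a built-in threshold at age 60, which the resulting curve makes visible; the model, rows, and grid are illustrative.

```python
def partial_dependence(predict, rows, feature, grid):
    """Average prediction over `rows` with `feature` overwritten by each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = dict(row)
            modified[feature] = value
            total += predict(modified)
        curve.append(total / len(rows))
    return curve


def risk_model(r):
    # Hypothetical model: a step at age 60 plus a linear BMI term.
    return (1.0 if r["age"] > 60 else 0.0) + 0.1 * r["bmi"]


rows = [{"age": 35, "bmi": 20}, {"age": 50, "bmi": 25}, {"age": 72, "bmi": 30}]
grid = [40, 50, 60, 70]
curve = partial_dependence(risk_model, rows, "age", grid)
print(list(zip(grid, curve)))
```

The curve stays flat until the grid passes 60 and then jumps by one unit, exposing the threshold that a single feature importance number would hide.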

Interactive dashboards and exploration tools have emerged as powerful platforms for enabling stakeholders to investigate model behavior through dynamic visualizations and what-if analysis capabilities. These tools allow users to manipulate input parameters and observe corresponding changes in predictions and explanations, fostering deeper understanding through hands-on exploration and experimentation.

The development of effective communication strategies requires careful consideration of audience needs, technical expertise levels, and the specific context in which explanations will be used.

Industry Applications and Case Studies

The healthcare industry has emerged as a critical domain for AI explainability, where the ability to understand and justify AI-driven decisions can directly impact patient outcomes and clinical acceptance. Medical diagnostic systems that can explain their reasoning help physicians understand the rationale behind AI recommendations, enabling more informed clinical decision-making and building trust between healthcare providers and AI tools. Explainable AI in radiology, for example, can highlight specific regions in medical images that contribute to diagnostic conclusions, helping radiologists validate AI findings and identify potential areas for further investigation.

Financial services represent another sector where explainability is not just valuable but often legally required. Credit scoring models must provide clear explanations for lending decisions to comply with fair lending regulations and enable applicants to understand factors affecting their creditworthiness. Fraud detection systems benefit from explainable AI by helping analysts understand why certain transactions are flagged as suspicious, enabling more effective investigation and reducing false positives that can negatively impact customer experience.
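Explanations for adverse credit decisions are often expressed as a short list of "reason codes". One simple way to derive them from a linear scoring model, sketched below with hypothetical weights and a hypothetical reference applicant, is to compute each feature's contribution relative to the reference and report the features that pull the score down the most. Lenders use their own, often regulator-reviewed, methods; this is only an illustration of the idea.

```python
# Hypothetical linear scoring model and reference profile (not from any real scorecard).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.5, "years_employed": 0.1}
REFERENCE = {"income_k": 60, "debt_ratio": 0.3, "late_payments": 0, "years_employed": 5}


def reason_codes(applicant, top_k=2):
    """Features whose contribution, relative to REFERENCE, lowers the score the most."""
    contributions = {
        name: weight * (applicant[name] - REFERENCE[name]) for name, weight in WEIGHTS.items()
    }
    negatives = sorted(
        (name for name, c in contributions.items() if c < 0),
        key=lambda name: contributions[name],
    )
    return negatives[:top_k]


applicant = {"income_k": 45, "debt_ratio": 0.6, "late_payments": 2, "years_employed": 6}
print(reason_codes(applicant))
```

Features that help the applicant (here, years employed) never appear as reasons, and the list is ordered by how much each feature cost the applicant relative to the reference profile.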

Autonomous vehicle development relies heavily on explainable AI to build public trust and regulatory acceptance. Understanding why an autonomous vehicle makes specific driving decisions becomes crucial for safety validation, accident investigation, and continuous improvement of driving algorithms. Explainable AI enables engineers to identify decision patterns that might lead to unsafe behaviors and provides insights necessary for regulatory approval and public acceptance of autonomous vehicle technology.

Criminal justice applications of AI, including risk assessment tools used in sentencing and parole decisions, require exceptional levels of explainability to ensure fairness and accountability in legal proceedings. The ability to explain how AI systems assess recidivism risk or recommend sentencing guidelines is essential for maintaining justice system integrity and ensuring that algorithmic decisions can be properly scrutinized and challenged when necessary.

Regulatory Landscape and Compliance

The regulatory environment surrounding AI explainability has evolved rapidly as governments and international organizations recognize the need for transparency and accountability in artificial intelligence systems. The European Union’s General Data Protection Regulation (GDPR) restricts solely automated decisions that produce legal or similarly significant effects on individuals and requires that people be given meaningful information about the logic involved; these provisions are widely read as a right to explanation, although the exact scope of that right is still debated. The regulation has established important precedents for AI explainability requirements and influenced similar initiatives worldwide.

The EU AI Act, formally adopted in 2024, takes a comprehensive, risk-based approach to AI regulation and sets specific requirements for high-risk AI systems, including transparency toward those who deploy them and effective human oversight. For organizations within its scope, explainable AI techniques are therefore becoming not optional enhancements but part of meeting fundamental compliance requirements for certain types of AI applications.

Financial regulatory bodies have begun implementing specific guidelines for AI explainability in banking and insurance applications, requiring institutions to demonstrate that they can adequately explain AI-driven decisions affecting customers. These regulations often mandate the ability to provide detailed explanations for adverse decisions and require ongoing monitoring of AI system behavior to ensure continued compliance with fairness and transparency standards.

Healthcare regulators are developing frameworks for AI explainability in medical devices and diagnostic systems, recognizing that the unique safety requirements of healthcare applications demand exceptional levels of transparency and interpretability. These emerging regulations will likely require medical AI systems to provide clinically meaningful explanations that support rather than replace physician judgment.

Technical Challenges and Limitations

Despite significant advances in explainable AI techniques, fundamental technical challenges continue to limit the effectiveness and adoption of AI explanation methods. The accuracy-interpretability trade-off remains a persistent challenge, as many highly accurate models achieve their performance through complexity that inherently resists explanation. Organizations must carefully balance the need for accurate predictions against the requirement for transparency, often accepting reduced performance to achieve necessary levels of explainability.

Explanation consistency represents another significant challenge, as different explanation techniques applied to the same model can sometimes produce conflicting insights about feature importance or decision rationale. This inconsistency can undermine confidence in explanations and create confusion among stakeholders who expect coherent and reliable insights from AI explanation tools.

The scalability of explanation techniques poses practical challenges for large-scale AI deployments, as many current methods require significant computational resources to generate explanations for individual predictions. This limitation can make real-time explanation generation impractical for high-volume applications, forcing organizations to choose between comprehensive explainability and operational efficiency.
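To put the cost in perspective for one method: computing Shapley values exactly requires evaluating the model on every subset of the $M$ input features, that is, $2^M$ coalitions for each prediction being explained. Assuming, purely for illustration, 1 ms per model evaluation:

```latex
\begin{aligned}
M = 10:&\quad 2^{10} = 1{,}024 \text{ evaluations} \approx 1\ \text{s}\\
M = 20:&\quad 2^{20} = 1{,}048{,}576 \text{ evaluations} \approx 17.5\ \text{min}\\
M = 30:&\quad 2^{30} = 1{,}073{,}741{,}824 \text{ evaluations} \approx 12.4\ \text{days}
\end{aligned}
```

This exponential growth is why practical Shapley-based tooling relies on sampling approximations or on algorithms specialized to particular model families rather than exact enumeration.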

Adversarial explanations represent an emerging concern, as research has demonstrated that explanation techniques can sometimes be manipulated to provide misleading insights while maintaining model performance. This vulnerability raises questions about the reliability of AI explanations and highlights the need for robust explanation methods that resist manipulation and provide consistently accurate insights.

Future Directions and Emerging Trends

The future of AI explainability is being shaped by several research directions and emerging technological trends that aim to address current limitations while opening new possibilities for transparent artificial intelligence. Causal AI is one of the most significant of these, moving beyond correlation-based explanations toward insights into the causal relationships that drive model predictions. This shift could yield more meaningful and actionable explanations that support better decision-making and policy development.

Neuro-symbolic AI combines the pattern recognition capabilities of deep learning with the interpretability of symbolic reasoning systems, creating hybrid approaches that aim to achieve high performance while maintaining transparency. These systems could help bridge the gap between the effectiveness of modern AI and the explainability requirements of critical applications.

Automated explanation generation represents another frontier in explainable AI research, with systems being developed that can automatically generate natural language explanations for AI decisions. These capabilities could democratize access to AI explanations by making them accessible to users without technical expertise in machine learning or statistics.

The integration of domain expertise into explanation systems promises to create more contextually relevant and actionable insights. By incorporating knowledge from subject matter experts, explanation systems can provide insights that are not only technically accurate but also practically meaningful within specific application domains.

Quantum computing is a longer-term and more speculative direction for explainable AI. Researchers have begun asking whether quantum algorithms could make some interpretation computations feasible that are intractable on classical hardware; if so, this might ease the scalability challenges that limit current techniques, but whether such benefits materialize remains an open question.

Building Trust Through Transparency

The ultimate goal of AI explainability extends beyond technical transparency to encompass the broader objective of building trust between humans and artificial intelligence systems. Trust in AI requires not only the ability to understand how systems make decisions but also confidence that these systems behave consistently, fairly, and reliably across different contexts and populations. Explainable AI serves as a foundation for this trust by providing mechanisms for validation, accountability, and continuous improvement of AI systems.

The relationship between explainability and trust is complex and multifaceted, involving technical, psychological, and social dimensions that must be carefully considered in the design and deployment of AI systems. Effective explanations must be tailored to different audiences, with technical explanations for data scientists and engineers complemented by intuitive explanations for end users and decision-makers who rely on AI outputs.

The democratization of AI understanding through improved explainability has the potential to reduce the digital divide and enable broader participation in AI-driven innovation. By making AI systems more accessible and understandable, explainable AI can help ensure that the benefits of artificial intelligence are more equitably distributed and that diverse perspectives can contribute to the development and validation of AI systems.

Educational initiatives focused on AI literacy and explainability will play crucial roles in preparing society for the continued integration of artificial intelligence into daily life. These programs must balance technical education with practical understanding of AI capabilities and limitations, helping individuals and organizations make informed decisions about AI adoption and use.

The evolution of AI explainability represents not just a technical challenge but a fundamental aspect of the responsible development and deployment of artificial intelligence. As AI systems become more prevalent and influential in society, the ability to understand, explain, and trust these systems becomes essential for realizing the full potential of artificial intelligence while mitigating its risks and ensuring its benefits are broadly shared.

The journey toward truly explainable AI requires continued collaboration between researchers, practitioners, policymakers, and society at large. This collaborative effort must address not only the technical challenges of making AI systems more transparent but also the broader questions of how explanation and understanding can support human agency, democratic governance, and social justice in an increasingly AI-driven world.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of AI explainability techniques and their applications. Readers should conduct their own research and consider their specific requirements when implementing explainable AI solutions. The effectiveness and appropriateness of different explainability techniques may vary depending on specific use cases, regulatory requirements, and organizational needs.