The proliferation of artificial intelligence and machine learning systems across enterprise environments has introduced unprecedented security challenges that demand specialized incident response strategies. As organizations increasingly depend on AI-driven decision-making processes, the potential impact of security breaches in machine learning systems extends far beyond traditional cybersecurity concerns, encompassing data integrity, model reliability, and algorithmic fairness. The complexity of AI systems requires a sophisticated approach to incident response that addresses both conventional security threats and unique vulnerabilities specific to machine learning architectures.

The intersection of cybersecurity and artificial intelligence creates a dynamic threat environment where traditional security approaches must be augmented with AI-specific incident response methodologies that account for the unique characteristics of machine learning systems and their operational requirements.

Understanding AI System Vulnerabilities

Machine learning systems present a multifaceted attack surface that encompasses traditional cybersecurity vulnerabilities alongside novel threats specific to artificial intelligence architectures. Unlike conventional software systems, AI models are susceptible to adversarial attacks that can manipulate decision-making processes without traditional system compromise indicators. These attacks can include data poisoning during training phases, model inversion techniques that extract sensitive information from trained models, and adversarial examples designed to cause misclassification or inappropriate responses.

The distributed nature of modern AI systems, often spanning cloud environments, edge devices, and hybrid architectures, creates additional complexity in vulnerability management and incident detection. Training data repositories, model serving infrastructure, and inference endpoints each represent potential entry points for malicious actors seeking to compromise AI system integrity. Furthermore, the opacity of many machine learning models, particularly deep learning systems, can obscure the presence of security incidents and complicate forensic analysis when breaches occur.

Supply chain vulnerabilities represent another critical concern in AI security, as organizations frequently rely on pre-trained models, open-source frameworks, and third-party datasets that may contain hidden backdoors or malicious modifications. The interconnected nature of AI development ecosystems means that a compromise in one component can cascade through multiple systems and organizations, requiring incident response teams to consider both direct and indirect impacts of security breaches.

Establishing AI-Specific Incident Detection

Traditional security monitoring approaches must be enhanced with AI-specific detection capabilities that can identify anomalous behavior patterns indicative of attacks against machine learning systems. Model performance degradation, unusual prediction patterns, and unexpected changes in output distributions can serve as early warning indicators of potential security incidents. Implementing continuous monitoring of model accuracy, fairness metrics, and prediction confidence levels enables security teams to detect subtle attacks that might evade conventional security controls.
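
As an illustration of monitoring output distributions, the following minimal sketch compares a baseline window of predictions against a recent window using the population stability index (PSI). The function names, the class labels, and the 0.25 alert threshold are assumptions made for this example rather than values prescribed by any particular tool.

```python
import math
from collections import Counter
from typing import Sequence


def class_distribution(predictions: Sequence[str], classes: Sequence[str],
                       eps: float = 1e-6) -> list:
    """Return smoothed class frequencies so that no bin is exactly zero."""
    if not predictions:
        raise ValueError("predictions must not be empty")
    counts = Counter(predictions)
    raw = [counts[c] / len(predictions) + eps for c in classes]
    total = sum(raw)
    return [r / total for r in raw]


def population_stability_index(baseline: Sequence[float],
                               current: Sequence[float]) -> float:
    """PSI = sum over bins of (current - baseline) * ln(current / baseline)."""
    return sum((c - b) * math.log(c / b) for b, c in zip(baseline, current))


# Hypothetical alert threshold; tune it against historical, known-benign drift.
PSI_ALERT_THRESHOLD = 0.25

classes = ["approve", "deny"]
baseline = class_distribution(["approve"] * 90 + ["deny"] * 10, classes)
current = class_distribution(["approve"] * 50 + ["deny"] * 50, classes)
psi = population_stability_index(baseline, current)
if psi > PSI_ALERT_THRESHOLD:
    print(f"ALERT: output distribution shifted (PSI={psi:.3f})")
```

A PSI alert only says that the output distribution moved. Whether the cause is an attack, a data pipeline fault, or ordinary drift still has to be established by investigation.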

AI-powered security tools can complement conventional monitoring in complex AI environments where traditional approaches may prove insufficient, using behavioral analysis and pattern recognition to help identify sophisticated threats targeting machine learning systems.

Anomaly detection systems specifically designed for AI environments must account for the inherent variability in machine learning model behavior while maintaining sensitivity to malicious activities. This requires establishing baseline performance metrics across different operational conditions and implementing adaptive thresholds that can distinguish between legitimate model drift and potential security incidents. Log analysis of AI system components, including training pipelines, model registries, and inference services, provides crucial visibility into potential compromise indicators.
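
One possible way to implement an adaptive threshold is an exponentially weighted baseline of a metric such as accuracy, flagging values that fall more than k standard deviations from it. The sketch below is illustrative only: the class name, the smoothing factor, the warm-up length, and the choice not to learn from flagged values are all assumptions.

```python
import math


class AdaptiveThreshold:
    """Exponentially weighted mean and variance of a metric, with a k-sigma alert band."""

    def __init__(self, alpha: float = 0.1, k: float = 3.0, warmup: int = 10):
        self.alpha = alpha    # smoothing factor: higher adapts faster to drift
        self.k = k            # width of the alert band in standard deviations
        self.warmup = warmup  # observations required before alerts are raised
        self.mean = None
        self.var = 0.0
        self.n = 0

    def update(self, value: float) -> bool:
        """Return True if value is anomalous relative to the current baseline."""
        if self.mean is None:
            self.mean, self.n = value, 1
            return False
        std = math.sqrt(self.var)
        anomalous = self.n >= self.warmup and abs(value - self.mean) > self.k * std
        if not anomalous:
            # Only non-anomalous values update the baseline, so a suspected
            # attack cannot gradually pull the baseline toward itself.
            diff = value - self.mean
            incr = self.alpha * diff
            self.mean += incr
            self.var = (1 - self.alpha) * (self.var + diff * incr)
            self.n += 1
        return anomalous


monitor = AdaptiveThreshold()
history = [0.92, 0.93, 0.91] * 4              # illustrative hourly accuracy values
flags = [monitor.update(v) for v in history]  # baseline learning, no alerts expected
sudden_drop = monitor.update(0.60)            # far outside the learned band
```

Because the baseline keeps moving with accepted values, slow legitimate drift is absorbed while abrupt changes are flagged. A very slow attack could still hide inside that drift, which is why this kind of check is usually paired with fixed lower bounds.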

Data integrity monitoring represents a critical component of AI incident detection, as training data manipulation can have long-lasting effects on model behavior even after the initial compromise is resolved. Implementing cryptographic verification of training datasets, monitoring for unexpected data quality changes, and tracking provenance of model inputs helps security teams identify potential data poisoning attacks before they can significantly impact model performance.
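
Cryptographic verification of a training dataset can be as simple as recording a SHA-256 digest for every file and re-checking those digests before each training run. The sketch below assumes the dataset is a directory of files; the function names and the shape of the report are hypothetical.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> Dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): sha256_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_manifest(root: Path, manifest: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare the current directory contents against a trusted manifest."""
    current = build_manifest(root)
    return {
        "modified": sorted(k for k in manifest if k in current and current[k] != manifest[k]),
        "missing": sorted(k for k in manifest if k not in current),
        "unexpected": sorted(k for k in current if k not in manifest),
    }
```

The manifest is only as trustworthy as its storage: it should be kept, and ideally signed, somewhere the training pipeline cannot write to. Otherwise an attacker who can alter the data can also regenerate the digests.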

Incident Classification and Severity Assessment

AI security incidents require specialized classification frameworks that account for the unique characteristics and potential impacts of attacks against machine learning systems. Traditional incident severity metrics must be augmented with AI-specific considerations such as model accuracy degradation, fairness impact, and potential for algorithmic bias introduction. The classification system should distinguish between incidents affecting training phases, model deployment, and inference operations, as each category requires different response strategies and recovery procedures.

High-severity incidents in AI systems may include complete model compromise, large-scale data poisoning attacks, or adversarial manipulations that could lead to discriminatory outcomes or safety-critical failures. Medium-severity incidents might involve partial data corruption, minor accuracy degradation, or exposure of model architecture information. Low-severity incidents could include unsuccessful adversarial attacks, minor data quality issues, or non-critical information disclosure.
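
The tiers above can be encoded as a small lookup so that triage is consistent across responders. This is a sketch under stated assumptions: the incident identifiers are hypothetical labels for the categories named in the text, and the rule that escalates an incident by one level for sensitive applications is an illustrative policy, not an established standard.

```python
from enum import IntEnum
from typing import Iterable


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical identifiers for the incident categories described above.
SEVERITY_BY_INCIDENT = {
    "model_compromise": Severity.HIGH,
    "large_scale_data_poisoning": Severity.HIGH,
    "harmful_adversarial_manipulation": Severity.HIGH,
    "partial_data_corruption": Severity.MEDIUM,
    "minor_accuracy_degradation": Severity.MEDIUM,
    "architecture_disclosure": Severity.MEDIUM,
    "failed_adversarial_attack": Severity.LOW,
    "minor_data_quality_issue": Severity.LOW,
    "noncritical_disclosure": Severity.LOW,
}


def assess_severity(incidents: Iterable[str],
                    sensitive_application: bool = False) -> Severity:
    """Return the worst severity among the observed incident categories."""
    worst = max(SEVERITY_BY_INCIDENT[name] for name in incidents)
    if sensitive_application and worst < Severity.HIGH:
        # Assumed policy: escalate one level for healthcare, financial,
        # or safety-critical deployments.
        worst = Severity(worst + 1)
    return worst


print(assess_severity(["minor_data_quality_issue", "architecture_disclosure"]).name)
```

Taking the maximum over all observed categories keeps the rule conservative: an incident is never rated lower because it also includes minor findings.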

The assessment process must consider both immediate technical impacts and broader organizational consequences, including regulatory compliance implications, reputation damage, and potential legal liability arising from compromised AI decision-making processes. Special attention must be paid to incidents affecting AI systems used in sensitive applications such as healthcare diagnostics, financial services, or safety-critical control systems where the consequences of compromise extend beyond traditional cybersecurity concerns.

Containment Strategies for ML Systems

Effective containment of AI security incidents requires rapid isolation of compromised components while maintaining operational continuity of critical business processes. The distributed nature of modern AI systems demands containment strategies that can quickly segment affected infrastructure while preserving the integrity of uncompromised model components. This may involve isolating specific model versions, redirecting inference traffic to backup systems, or temporarily reverting to previous model checkpoints while investigation and remediation activities proceed.

Model rollback capabilities represent a crucial containment mechanism that allows organizations to quickly revert to known-good model states when compromise is suspected. Implementing automated rollback triggers based on performance thresholds or anomaly detection alerts enables rapid response to emerging threats without requiring manual intervention. However, rollback procedures must be carefully designed to avoid disrupting legitimate model updates or introducing additional vulnerabilities through hasty restoration of previous states.
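
A rollback trigger of this kind might look like the following sketch. To reduce the risk of a hasty rollback on a single noisy measurement, it requires several consecutive threshold breaches, and it only rolls back to a version that has been marked as verified. The class, the version list format, and the thresholds are assumptions for illustration; a real deployment would read versions from its model registry.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class RollbackTrigger:
    min_accuracy: float
    consecutive_breaches: int = 3
    _streak: int = field(default=0, init=False)

    def observe(self, accuracy: float) -> bool:
        """Return True once accuracy has stayed below the floor long enough."""
        self._streak = self._streak + 1 if accuracy < self.min_accuracy else 0
        return self._streak >= self.consecutive_breaches


def select_rollback_target(versions: List[Tuple[str, bool]],
                           active: str) -> Optional[str]:
    """Pick the most recent verified version older than the active one.

    versions is ordered oldest to newest as (version_id, verified_good) pairs.
    """
    ids = [version_id for version_id, _ in versions]
    idx = ids.index(active)
    for version_id, verified in reversed(versions[:idx]):
        if verified:
            return version_id
    return None


trigger = RollbackTrigger(min_accuracy=0.90)
registry = [("v1", True), ("v2", False), ("v3", True)]  # hypothetical registry
for acc in [0.85, 0.95, 0.85, 0.84, 0.83]:
    if trigger.observe(acc):
        print("rolling back to", select_rollback_target(registry, active="v3"))
```

Skipping unverified versions matters here because the checkpoint immediately before the active one may itself predate detection of the compromise without predating the compromise.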

Network segmentation and access control enforcement become particularly important during AI incident containment, as compromised models may attempt to exfiltrate training data or inject malicious predictions into downstream systems. Implementing dynamic policy enforcement that can quickly restrict model access to sensitive data sources or limit inference request volumes helps prevent lateral movement and reduces potential damage from ongoing attacks.
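
Limiting inference request volume during containment is commonly done with a token bucket. The following is a minimal single-process sketch; the class name and parameters are assumptions, and a production system would normally enforce this at a gateway or load balancer rather than in application code.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow short bursts up to `burst` requests, refilled at `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, burst: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate_per_sec
        self.capacity = float(burst)
        self.tokens = float(burst)
        self.clock = clock          # injectable so the limiter can be tested
        self.last = clock()

    def allow(self) -> bool:
        """Consume one token if available; otherwise reject the request."""
        now = self.clock()
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# During containment, a suspect client could be restricted to a low rate.
limiter = TokenBucket(rate_per_sec=1.0, burst=2)
decisions = [limiter.allow() for _ in range(3)]  # third call is likely rejected
```

Throttling also slows model extraction and inversion attempts, since those attacks typically depend on issuing large numbers of queries.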

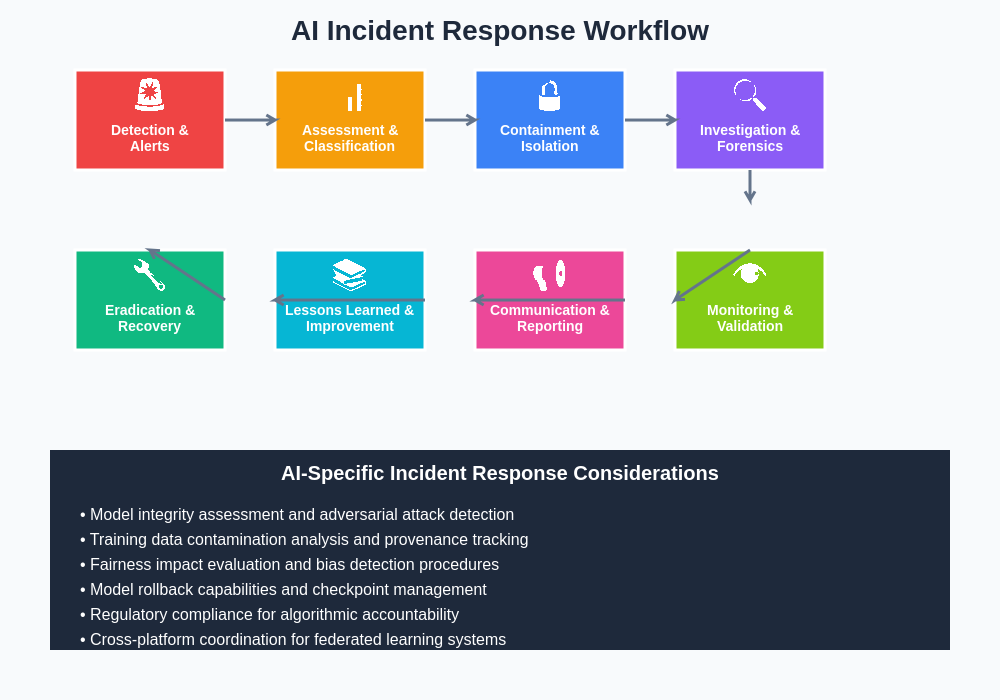

The incident response workflow for AI systems requires specialized procedures that address both traditional cybersecurity concerns and unique machine learning security challenges. This comprehensive approach ensures systematic handling of incidents while maintaining the integrity and availability of critical AI services throughout the response process.

Forensic Analysis of AI Breaches

Digital forensics in AI environments presents unique challenges that require specialized tools and methodologies for effective investigation of security incidents. Traditional forensic techniques must be adapted to handle the complexity of machine learning systems, including analysis of training data, model weights, and inference logs that may contain evidence of malicious activities. The high-dimensional nature of AI model parameters and the volume of training data involved in machine learning systems necessitate advanced analytical approaches for identifying signs of compromise.

Model forensics involves examining changes in model behavior, weight distributions, and prediction patterns that may indicate malicious manipulation or adversarial training. Comparing compromised models against known-good baselines can reveal subtle modifications that might escape traditional detection methods. Advanced techniques such as model diff analysis, gradient inspection, and activation pattern examination help forensic analysts understand the scope and impact of model compromise.
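
A basic form of model diff analysis compares each layer's weights against a known-good baseline and reports the relative size of the change. The sketch below represents weights as plain lists of floats keyed by layer name, which is a simplifying assumption; with a real framework the same comparison would be run over its tensor objects.

```python
import math
from typing import Dict, List


def layer_diff(baseline: Dict[str, List[float]],
               suspect: Dict[str, List[float]],
               rel_tol: float = 1e-6) -> Dict[str, float]:
    """Return layers whose relative L2 change exceeds rel_tol.

    Layers that were added, removed, or resized are reported as infinity.
    """
    report = {}
    for name in sorted(set(baseline) | set(suspect)):
        if name not in baseline or name not in suspect:
            report[name] = math.inf
            continue
        a, b = baseline[name], suspect[name]
        if len(a) != len(b):
            report[name] = math.inf
            continue
        diff = math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
        norm = math.sqrt(sum(x * x for x in a))
        if norm > 0:
            rel = diff / norm
        else:
            rel = 0.0 if diff == 0 else math.inf
        if rel > rel_tol:
            report[name] = rel
    return report


known_good = {"fc1": [1.0, 2.0], "fc2": [3.0, 4.0]}
suspect = {"fc1": [1.0, 2.0], "fc2": [3.0, 4.5]}
print(layer_diff(known_good, suspect))  # only fc2 should be reported
```

A weight diff localizes where a model changed but not why. Legitimate fine-tuning also changes weights, so the report is most useful when compared against the change log of authorized training runs.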

Data provenance tracking becomes crucial in AI forensics, as investigators must trace the source and lineage of training data to identify potential points of compromise. This requires comprehensive logging of data collection, preprocessing, and augmentation activities throughout the machine learning pipeline. Cryptographic signatures and blockchain-based provenance systems can provide tamper-evident records of data handling that support forensic investigations.
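
A lightweight alternative to a full blockchain is a hash-chained log, in which each provenance record commits to the hash of the record before it. The sketch below uses hypothetical field names and an in-memory list. It makes tampering evident only if the latest hash is also stored or signed somewhere an attacker cannot modify.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash: str, event: Dict[str, Any]) -> str:
    payload = json.dumps(event, sort_keys=True)  # canonical serialization
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_record(log: List[Dict[str, Any]], event: Dict[str, Any]) -> None:
    """Append an event that commits to the hash of the previous record."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev, "hash": _digest(prev, event)})


def verify_chain(log: List[Dict[str, Any]]) -> int:
    """Return the index of the first invalid record, or -1 if the chain is intact."""
    prev = GENESIS
    for i, record in enumerate(log):
        if record["prev"] != prev or record["hash"] != _digest(prev, record["event"]):
            return i
        prev = record["hash"]
    return -1


log: List[Dict[str, Any]] = []
append_record(log, {"step": "collect", "source": "vendor-feed"})
append_record(log, {"step": "preprocess", "rows_dropped": 12})
append_record(log, {"step": "augment", "method": "noise"})
print(verify_chain(log))
```

Editing any earlier record changes its hash and breaks every later link, so an investigator can identify the earliest point at which the recorded history stops being trustworthy.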

The rapidly evolving field of AI security requires continuous learning and adaptation of forensic techniques to address emerging threats and attack vectors.

Recovery and Model Restoration

Recovery from AI security incidents involves complex procedures for restoring model integrity while ensuring that malicious modifications or backdoors are completely eliminated. The recovery process must address both immediate operational concerns and long-term security implications of the compromise. This typically involves comprehensive model retraining using verified clean datasets, implementation of enhanced security controls, and thorough validation of restored model behavior across diverse operational scenarios.

Model retraining represents the most thorough approach to recovery but requires significant computational resources and time to complete. Organizations must balance the thoroughness of retraining against operational requirements for AI system availability. Incremental retraining approaches can provide faster recovery times while maintaining security by focusing on critical model components or recently compromised data segments.

Validation of recovered models requires extensive testing to ensure that malicious modifications have been eliminated and that model performance meets operational requirements. This includes accuracy testing across diverse datasets, fairness evaluation to detect potential bias introduction, and adversarial testing to verify resilience against known attack vectors. The validation process must also verify that security controls and monitoring capabilities are functioning correctly to prevent similar incidents in the future.
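
These checks can be combined into a release gate that a recovered model must pass before it returns to production. The sketch below is illustrative: demographic parity gap stands in for whichever fairness metric applies, binary predictions are assumed, and all three thresholds are hypothetical values that each organization would set for itself.

```python
from typing import List, Sequence


def accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of predictions that match the labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def demographic_parity_gap(predictions: Sequence[int],
                           groups: Sequence[str]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def validation_failures(clean_acc: float, adversarial_acc: float,
                        parity_gap: float,
                        min_clean_acc: float = 0.90,
                        min_adversarial_acc: float = 0.70,
                        max_parity_gap: float = 0.10) -> List[str]:
    """Return the list of failed checks; an empty list means the gate passes."""
    failures = []
    if clean_acc < min_clean_acc:
        failures.append("clean accuracy below threshold")
    if adversarial_acc < min_adversarial_acc:
        failures.append("adversarial accuracy below threshold")
    if parity_gap > max_parity_gap:
        failures.append("fairness gap above threshold")
    return failures


gap = demographic_parity_gap([1, 0, 1, 0], ["a", "a", "b", "b"])
print(validation_failures(clean_acc=0.95, adversarial_acc=0.80, parity_gap=gap))
```

Returning the full list of failures, rather than stopping at the first one, gives responders a complete picture of what still separates the restored model from a releasable state.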

Backup and checkpoint management strategies become critical during recovery operations, as organizations need access to multiple clean model versions and training datasets to support restoration activities. Implementing secure, immutable backup systems that maintain cryptographic integrity of stored models and data helps ensure the availability of trustworthy recovery resources when incidents occur.

Regulatory Compliance and Reporting

AI security incidents often trigger regulatory reporting requirements that extend beyond traditional cybersecurity compliance frameworks. Organizations operating in regulated industries must navigate complex requirements related to algorithmic accountability, fairness reporting, and impact assessment documentation. The incident response process must incorporate procedures for documenting AI-specific impacts such as discriminatory outcomes, privacy violations, or safety-critical failures that may require immediate regulatory notification.

Privacy laws such as GDPR and CCPA, together with emerging AI governance regulations, impose specific requirements for incident documentation, impact assessment, and remediation reporting. The response process must capture detailed information about data subjects potentially affected by compromised AI systems, including assessment of privacy impacts and potential discriminatory effects. This documentation supports both regulatory compliance and organizational learning from security incidents.

Industry-specific regulations in healthcare, finance, and critical infrastructure sectors impose additional requirements for AI incident reporting and response. Organizations must maintain detailed records of incident timeline, technical details of compromise, and remediation actions taken to demonstrate compliance with sector-specific security requirements and safety standards.

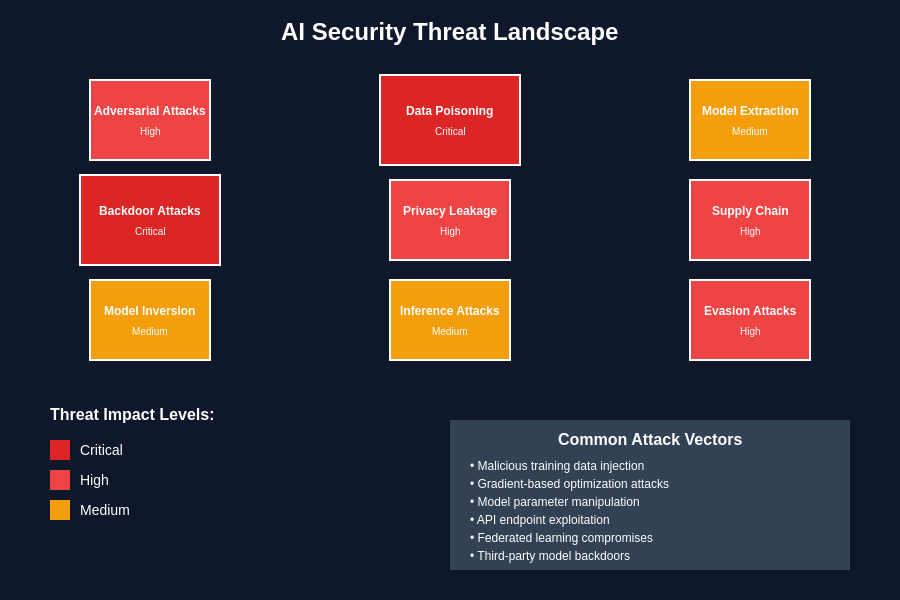

The diverse threat landscape facing AI systems requires comprehensive understanding of different attack vectors and their potential impacts on model integrity, data privacy, and organizational operations. This taxonomy helps incident response teams quickly classify threats and implement appropriate countermeasures.

Stakeholder Communication and Coordination

Effective communication during AI security incidents requires coordination among diverse stakeholders including technical teams, business leadership, legal counsel, and regulatory authorities. The complexity of AI systems and their business impacts demands clear communication protocols that can effectively convey technical details to non-technical stakeholders while providing actionable information for decision-making processes.

Internal communication procedures must account for the cross-functional nature of AI systems that often span multiple business units and technical domains. Data science teams, IT security, legal compliance, and business operations all play crucial roles in incident response and must be effectively coordinated throughout the response process. Regular status updates and clear escalation procedures help maintain organizational alignment during high-stress incident situations.

External communication with customers, partners, and regulatory authorities requires careful consideration of disclosure requirements, competitive implications, and reputation management concerns. The communication strategy must balance transparency requirements with security considerations, avoiding disclosure of technical details that could enable similar attacks while providing sufficient information to support stakeholder decision-making and regulatory compliance.

Public communication about AI security incidents must address growing societal concerns about algorithmic bias, privacy protection, and AI system reliability. Organizations must be prepared to explain both the technical nature of incidents and the broader implications for affected communities or user populations.

Continuous Improvement and Lessons Learned

Post-incident analysis in AI environments must capture lessons learned specific to machine learning security while identifying opportunities for improving both technical controls and organizational processes. The analysis should examine the effectiveness of AI-specific detection mechanisms, the adequacy of containment procedures, and the completeness of recovery processes. This information feeds into continuous improvement of incident response capabilities and enhancement of AI security controls.

Root cause analysis for AI incidents requires deep technical investigation of model architecture, training processes, and operational procedures that may have contributed to the security breach. This analysis helps identify systemic vulnerabilities that extend beyond the immediate incident and informs broader security architecture improvements. The findings should be incorporated into security design reviews for future AI systems and updates to existing security controls.

Training and awareness programs must be updated based on incident lessons learned to ensure that technical teams, management, and other stakeholders understand emerging AI security threats and appropriate response procedures. Regular tabletop exercises and simulation drills help validate incident response procedures and identify areas for improvement before real incidents occur.

Knowledge sharing within the AI security community helps advance collective understanding of emerging threats and effective countermeasures. Organizations should consider participating in industry forums, threat intelligence sharing, and collaborative research initiatives that advance the state of AI security practice while protecting sensitive organizational information.

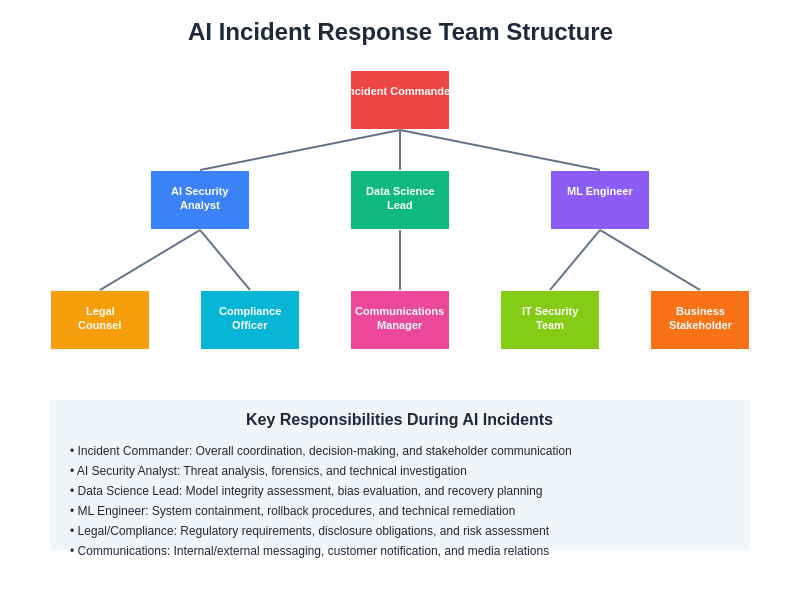

The organizational structure for AI incident response requires specialized roles and expertise that span traditional cybersecurity, data science, and AI ethics domains. This interdisciplinary approach ensures comprehensive coverage of technical, legal, and ethical considerations throughout the incident response process.

Future Considerations and Emerging Challenges

The rapidly evolving landscape of artificial intelligence presents ongoing challenges for incident response teams as new attack vectors emerge and AI systems become increasingly sophisticated. Quantum computing developments may eventually break the public-key cryptography that many current protections rely on, such as signatures on models and datasets, requiring migration to quantum-resistant algorithms and corresponding changes to AI security architectures and incident response procedures. Organizations must maintain awareness of these emerging threats and adapt their security postures accordingly.

Federated learning and edge AI deployments introduce new complexities for incident detection and response as AI capabilities become more distributed across diverse environments. Traditional centralized monitoring and response approaches may prove inadequate for detecting and containing incidents in highly distributed AI systems. New approaches to decentralized security monitoring and coordinated response across federated environments will be essential.

The integration of AI systems into critical infrastructure and safety-critical applications raises the stakes for effective incident response as the potential consequences of compromise extend beyond traditional cybersecurity concerns to public safety and national security implications. This evolution requires enhanced coordination between private sector incident response teams and government agencies responsible for critical infrastructure protection.

Regulatory frameworks for AI governance continue to evolve, potentially imposing new requirements for incident response capabilities, reporting procedures, and organizational accountability. Organizations must maintain flexibility in their incident response programs to adapt to changing regulatory requirements while maintaining effective security postures.

The democratization of AI capabilities through accessible platforms and tools creates new challenges for incident response as the number of potential targets and attack vectors continues to expand. Organizations must develop scalable approaches to AI security that can protect increasingly diverse and distributed AI deployments while maintaining operational efficiency and cost-effectiveness.

Disclaimer

This article is for informational and educational purposes only and does not constitute professional cybersecurity or legal advice. The information presented reflects current understanding of AI security challenges and incident response best practices, but the rapidly evolving nature of artificial intelligence and cybersecurity requires continuous adaptation of security strategies. Organizations should consult with qualified cybersecurity professionals, legal counsel, and regulatory experts when developing AI incident response capabilities. The effectiveness of security measures may vary depending on specific organizational contexts, regulatory requirements, and threat environments.