The rapid expansion of artificial intelligence and machine learning research has created an unprecedented volume of scholarly publications, making systematic literature reviews essential for understanding the current state of knowledge, identifying research gaps, and establishing evidence-based foundations for future investigations. Systematic literature reviews in machine learning represent a critical methodology for synthesizing vast amounts of research data, evaluating the effectiveness of different approaches, and providing comprehensive overviews of specific domains within the artificial intelligence landscape.

The systematic approach to literature review has become increasingly sophisticated, incorporating advanced analytical techniques and computational tools that enable researchers to process and synthesize information at scales previously impossible with traditional manual review methods.

Foundations of Systematic Literature Review in AI Research

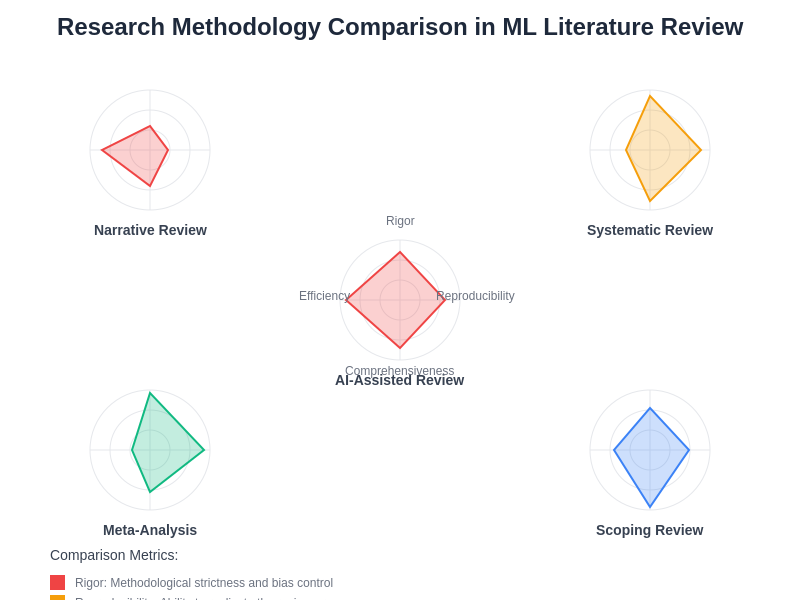

Systematic literature reviews in artificial intelligence and machine learning differ significantly from traditional narrative reviews in their rigorous methodology, comprehensive scope, and reproducible processes. These reviews follow structured protocols that ensure objectivity, minimize bias, and provide transparent documentation of the review process. The systematic approach is particularly crucial in machine learning research, where the rapid pace of innovation and the interdisciplinary nature of the field can make it challenging to maintain awareness of all relevant developments.

The foundation of effective systematic literature review lies in establishing clear research questions, defining precise inclusion and exclusion criteria, and implementing comprehensive search strategies that capture the breadth of relevant literature. This methodological rigor ensures that the resulting review provides reliable insights into the current state of knowledge and identifies areas where further research is needed. The systematic approach also enables other researchers to replicate and extend the review, contributing to the cumulative nature of scientific knowledge.

Methodological Framework for AI Literature Reviews

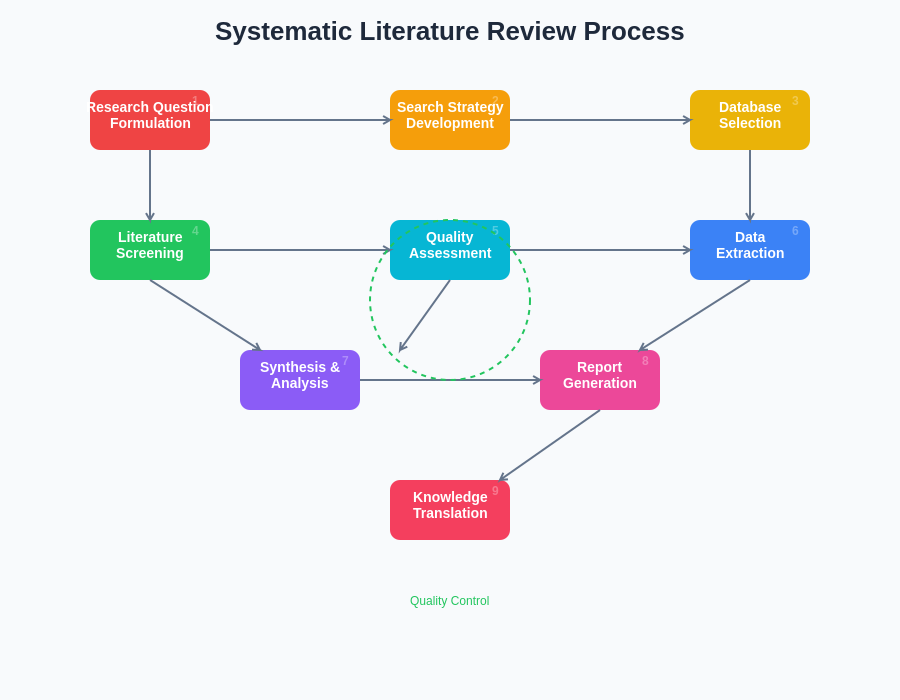

The methodological framework for conducting systematic literature reviews in artificial intelligence encompasses several distinct phases, each requiring careful attention to detail and adherence to established protocols. The initial phase involves formulating research questions using frameworks such as PICO (Population, Intervention, Comparison, Outcome) or SPIDER (Sample, Phenomenon of Interest, Design, Evaluation, Research type). Both frameworks originated in health research (PICO in clinical medicine, SPIDER in qualitative and mixed-methods research), so their elements usually need to be reinterpreted for machine learning questions.
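One way to make such an adaptation concrete is to record a question as its four PICO elements and render it as a single sentence. The sketch below assumes an illustrative mapping, not a standard one: Population as the task or data domain, Intervention as the method, Comparison as the baselines, and Outcome as the evaluation metric. `PicoQuestion` and the example values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PicoQuestion:
    """A PICO-style research question with assumed, ML-oriented meanings."""

    population: str    # assumed here: the task or data domain under study
    intervention: str  # assumed here: the method or model family evaluated
    comparison: str    # assumed here: the baselines it is compared against
    outcome: str       # assumed here: the evaluation metric(s) of interest

    def as_question(self) -> str:
        """Render the four elements as one answerable question."""
        return (
            f"In {self.population}, what is the effect of using {self.intervention}, "
            f"compared with {self.comparison}, on {self.outcome}?"
        )


question = PicoQuestion(
    population="clinical text classification",
    intervention="transformer-based models",
    comparison="classical bag-of-words classifiers",
    outcome="macro-averaged F1",
)
print(question.as_question())
```

Writing the elements out separately makes it easier to see which ones will drive the search terms and which will drive the inclusion criteria.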

Search strategy development represents a critical component of the methodological framework, requiring expertise in both the subject domain and information retrieval techniques. Effective search strategies in machine learning research must account for the rapidly evolving terminology in the field, the interdisciplinary nature of much AI research, and the diverse publication venues that contribute to the literature. The search strategy should encompass not only traditional academic databases but also preprint servers, conference proceedings, and specialized AI publication venues that may not be indexed in conventional databases.

The integration of AI tools in the literature review process itself represents an emerging area of methodological innovation, where machine learning techniques are being applied to improve the efficiency and comprehensiveness of systematic reviews.

Database Selection and Search Strategy Development

The selection of appropriate databases for systematic literature reviews in machine learning requires careful consideration of the diverse publication landscape within the artificial intelligence research community. Traditional academic databases such as PubMed, Scopus, and Web of Science provide comprehensive coverage of established journals but may have limited representation of the rapidly evolving AI literature, much of which appears first in conference proceedings or as preprints. Sources such as IEEE Xplore, the ACM Digital Library, and the arXiv preprint server have therefore become essential for capturing the full scope of machine learning research.
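Because the same paper often appears in several of these sources (for example, as an arXiv preprint and again as an indexed conference paper), search results are usually deduplicated before screening. The sketch below shows one minimal, assumed approach: a record counts as a duplicate if its DOI or its normalized title matches a record already kept. The record format, the function names, and the example records (including the placeholder DOIs) are hypothetical. Exact title matching misses retitled papers, so real workflows often add fuzzy matching and manual checks.

```python
import re
import unicodedata


def normalize_title(title: str) -> str:
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", title)
    no_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    cleaned = re.sub(r"[^a-z0-9 ]", " ", no_accents.lower())
    return " ".join(cleaned.split())


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record seen for each DOI or normalized title."""
    seen_dois: set[str] = set()
    seen_titles: set[str] = set()
    unique = []
    for rec in records:
        doi = (rec.get("doi") or "").strip().lower()
        title = normalize_title(rec.get("title", ""))
        if (doi and doi in seen_dois) or (title and title in seen_titles):
            continue  # duplicate of a record already kept
        if doi:
            seen_dois.add(doi)
        if title:
            seen_titles.add(title)
        unique.append(rec)
    return unique


# Hypothetical search results; the DOIs are placeholders, not real papers.
records = [
    {"source": "IEEE Xplore", "title": "A Survey of Example Methods", "doi": "10.1234/example.001"},
    {"source": "Scopus", "title": "A survey of example methods.", "doi": "10.1234/EXAMPLE.001"},
    {"source": "arXiv", "title": "A Survey of Example Methods", "doi": None},
    {"source": "arXiv", "title": "An Unrelated Preprint", "doi": None},
]
kept = deduplicate(records)
print([r["source"] for r in kept])
```

Which copy survives depends on input order. Reviewers often prefer to keep the peer-reviewed version over the preprint, which this sketch does not enforce.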

The development of effective search strategies requires balancing sensitivity (the proportion of relevant studies that the search retrieves) against precision (the proportion of retrieved records that are relevant), so that coverage is comprehensive while result sets stay manageable. Search terms must account for the evolving terminology in machine learning, including synonyms, alternative spellings, and emerging concepts that may not yet be standardized in controlled vocabularies. Boolean operators, proximity searches, and field-specific searches should be employed strategically to optimize retrieval while minimizing irrelevant results. Iteratively refining the search strategy based on preliminary results and expert feedback helps ensure that the final search captures the intended scope of literature.
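A common way to structure such a search is to list synonyms for each concept, join the synonyms with OR, and join the concepts with AND. The helper below is a hypothetical sketch that produces a generic query string. Field tags, truncation, and proximity syntax differ between databases, so its output would still need translating into each database's own syntax. The concept lists are illustrative.

```python
def build_boolean_query(concepts: list[list[str]]) -> str:
    """OR the synonyms within each concept, then AND the concepts together."""
    blocks = []
    for synonyms in concepts:
        # Quote multi-word or hyphenated terms so they are searched as phrases.
        terms = [f'"{t}"' if (" " in t or "-" in t) else t for t in synonyms]
        blocks.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(blocks)


query = build_boolean_query([
    ["machine learning", "deep learning", "neural network"],
    ["systematic review", "meta-analysis", "survey*"],  # survey* = assumed truncation
])
print(query)
```

Keeping the concept lists as data makes it easy to document every synonym added during iterative refinement, which supports the reproducibility goals discussed above.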

Screening and Selection Processes

The screening and selection process represents one of the most time-intensive phases of systematic literature review in machine learning, requiring careful evaluation of potentially thousands of publications against predefined inclusion and exclusion criteria. The two-stage screening process, involving initial title and abstract screening followed by full-text evaluation, helps manage the volume of literature while ensuring that all potentially relevant studies receive appropriate consideration.

Inclusion and exclusion criteria must be precisely defined and consistently applied throughout the screening process. These criteria should address various dimensions including study design, population characteristics, intervention types, outcome measures, and publication quality. In machine learning research, additional considerations may include the availability of implementation details, dataset characteristics, evaluation metrics, and experimental methodology. The use of multiple reviewers and inter-rater reliability assessments helps ensure consistent application of selection criteria and reduces the risk of bias in study selection.
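Cohen's kappa is a widely used inter-rater reliability measure for two reviewers. It corrects the observed agreement p_o for the agreement p_e expected by chance, giving kappa = (p_o - p_e) / (1 - p_e). The sketch below computes it from scratch for clarity. In practice, `sklearn.metrics.cohen_kappa_score` computes the same statistic. The screening decisions shown are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Chance-corrected agreement between two reviewers on the same records."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both reviewers must label the same non-empty set of records")
    n = len(rater_a)
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected if each reviewer labelled independently at
    # their own observed rates.
    p_expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / n**2
    if p_expected == 1.0:
        return float("nan")  # both used one identical label throughout; kappa is undefined
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical title/abstract decisions on the same ten records.
reviewer_1 = ["include", "include", "exclude", "exclude", "include",
              "exclude", "exclude", "include", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude", "include",
              "exclude", "include", "include", "exclude", "exclude"]
kappa = cohens_kappa(reviewer_1, reviewer_2)
print(round(kappa, 3))  # 0.583
```

Here the reviewers agree on 8 of 10 records (0.80), but chance agreement is already 0.52, so kappa is only about 0.58. This gap is why raw percent agreement tends to overstate consistency.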

The systematic literature review process follows a structured workflow that ensures comprehensive coverage and rigorous evaluation of the available evidence. This methodological approach provides transparency and reproducibility while managing the complexity of synthesizing large volumes of research across diverse machine learning domains.

Data Extraction and Quality Assessment

Data extraction in systematic literature reviews of machine learning research requires careful attention to the technical details that characterize AI studies, including algorithmic approaches, dataset specifications, evaluation methodologies, and performance metrics. Standardized data extraction forms should be developed to capture relevant information consistently across all included studies, while remaining flexible enough to accommodate the diverse methodological approaches found in machine learning research.
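A standardized form can be as simple as one typed record per study. The fields below are assumptions drawn from the items just listed (algorithmic approach, datasets, evaluation metric, performance). `ExtractionRecord` and its field names are hypothetical, and the free-text `notes` field is one way to keep the form flexible for unusual study designs.

```python
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class ExtractionRecord:
    """One study's entry in a hypothetical standardized extraction form."""

    study_id: str
    algorithm: str
    datasets: list[str]
    evaluation_metric: str
    reported_score: Optional[float] = None  # None = not reported
    baselines: list[str] = field(default_factory=list)
    code_available: Optional[bool] = None   # None = not stated in the paper
    notes: str = ""  # free text for approaches the fixed fields do not cover

    def missing_fields(self) -> list[str]:
        """Names of fields the study left unreported, for follow-up."""
        return [name for name, value in asdict(self).items() if value is None]


record = ExtractionRecord(
    study_id="S012",
    algorithm="gradient-boosted trees",
    datasets=["dataset A"],
    evaluation_metric="AUROC",
    reported_score=0.87,
)
print(record.missing_fields())  # ['code_available']
```

Distinguishing "not reported" (`None`) from an empty value makes reporting gaps visible, which feeds directly into quality assessment.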

Quality assessment of machine learning studies presents unique challenges that differ from traditional clinical or social science research. Quality criteria must address aspects such as experimental design rigor, dataset appropriateness, baseline comparisons, statistical significance testing, and reproducibility considerations. The development of quality assessment frameworks specifically tailored to machine learning research has become an active area of methodological research, with various checklists and assessment tools being proposed to standardize quality evaluation across different AI domains.
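As an illustration only, the checklist below turns the criteria just named into items answered yes, partial, no, or unclear. The item wording and point values are assumptions, not a published instrument. Many methodologists caution that a single summary score can hide important differences between studies, so per-item judgments are usually reported as well.

```python
# Hypothetical checklist items based on the criteria named above.
QUALITY_ITEMS = (
    "experimental design clearly described",
    "datasets appropriate for the question",
    "compared against reasonable baselines",
    "statistical significance tested",
    "code or data available for reproduction",
)
POINTS = {"yes": 1.0, "partial": 0.5, "no": 0.0, "unclear": 0.0}


def quality_score(answers: dict[str, str]) -> float:
    """Fraction of the maximum score; unanswered items count as 'unclear'."""
    total = 0.0
    for item in QUALITY_ITEMS:
        answer = answers.get(item, "unclear")
        if answer not in POINTS:
            raise ValueError(f"unknown answer {answer!r} for item {item!r}")
        total += POINTS[answer]
    return total / len(QUALITY_ITEMS)


answers = {
    "experimental design clearly described": "yes",
    "datasets appropriate for the question": "yes",
    "compared against reasonable baselines": "partial",
    "statistical significance tested": "no",
}
print(quality_score(answers))  # 0.5
```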

Synthesis and Analysis Techniques

The synthesis of findings from systematic literature reviews in machine learning often requires sophisticated analytical techniques that can accommodate the heterogeneity of study designs, outcome measures, and reporting standards found across AI research. Traditional meta-analytic techniques may be applicable when studies report comparable outcomes using similar metrics, but many machine learning reviews require narrative synthesis approaches that can integrate findings across diverse methodological contexts.

Quantitative synthesis techniques, when applicable, should account for the unique characteristics of machine learning research, including the potential for dataset-dependent performance variations, the influence of hyperparameter selection on outcomes, and the challenges of comparing results across different evaluation frameworks. Statistical heterogeneity assessment becomes particularly important in machine learning meta-analyses, where variations in experimental conditions can significantly influence reported performance metrics.
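When studies report comparable effect estimates with variances, heterogeneity is commonly quantified with Cochran's Q and the I² statistic, I² = max(0, (Q - df) / Q), where df is the number of studies minus one. The sketch below uses fixed-effect inverse-variance weights. The function name and inputs are hypothetical. With substantial heterogeneity, a random-effects model (for example DerSimonian-Laird) is usually preferred, and many ML papers do not report variances at all, which is one reason quantitative synthesis is often infeasible.

```python
import math


def fixed_effect_with_heterogeneity(effects, variances):
    """Inverse-variance pooled estimate, its SE, Cochran's Q and I^2."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need matching effects and variances for at least two studies")
    weights = [1.0 / v for v in variances]
    total_w = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_w
    se_pooled = math.sqrt(1.0 / total_w)
    # Q: weighted squared deviations of each study from the pooled estimate.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of total variation attributed to between-study heterogeneity.
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, se_pooled, q, i2


# Hypothetical accuracy gains over a shared baseline, with their variances.
pooled, se, q, i2 = fixed_effect_with_heterogeneity(
    effects=[0.02, 0.05, 0.08], variances=[0.0001, 0.0001, 0.0001]
)
print(f"pooled={pooled:.3f} se={se:.4f} Q={q:.1f} I2={i2:.0%}")
```

In this toy example, Q is about 18 on 2 degrees of freedom and I² is about 89%. Most of the spread between the three studies therefore reflects genuine differences in conditions rather than sampling error, which is exactly the situation the paragraph above warns about.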

The integration of advanced AI tools in the synthesis phase can help identify patterns and relationships across large bodies of literature that might not be apparent through manual analysis alone.

Technological Tools and Automation

The application of technological tools and automation in systematic literature reviews of AI research represents a natural evolution of the field, where machine learning techniques are being applied to improve the efficiency and accuracy of the review process itself. Reference management software, screening tools, and data extraction platforms have become essential components of modern systematic review workflows, enabling researchers to manage large volumes of literature more effectively.

Automated screening tools using machine learning algorithms can assist in the initial stages of literature review by identifying potentially relevant studies based on title and abstract content. These tools can significantly reduce the manual workload while maintaining high sensitivity for relevant studies. However, the application of automation in systematic reviews requires careful validation to ensure that automated processes do not introduce bias or miss important studies that might be captured through manual screening processes.
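One way to carry out that validation is to run the tool on records that humans have already screened and count the relevant studies it would have discarded. The sketch below reports recall and work saved over sampling (WSS). WSS is a metric often used in screening-automation studies and is commonly reported at a fixed 95% recall. The function, threshold, and data are hypothetical.

```python
def screening_validation(labels, scores, threshold):
    """Recall and work saved over sampling (WSS) for a screening tool.

    labels: 1 if human reviewers judged the record relevant, else 0.
    scores: the tool's relevance score per record; score >= threshold means
            the record is passed on for manual screening.
    """
    tp = fp = tn = fn = 0
    for label, score in zip(labels, scores):
        kept = score >= threshold
        if kept and label:
            tp += 1
        elif kept:
            fp += 1
        elif label:
            fn += 1  # a relevant study the tool would have discarded
        else:
            tn += 1
    recall = tp / (tp + fn) if tp + fn else 0.0
    # WSS: share of records reviewers no longer read, minus the share that
    # random sampling would also skip while reaching the same recall.
    wss = (tn + fn) / len(labels) - (1.0 - recall)
    return recall, wss


# Hypothetical validation set: 20 records, 4 judged relevant by humans.
labels = [1, 1, 1, 1] + [0] * 16
scores = [0.9, 0.8, 0.7, 0.2] + [0.6] + [0.1] * 15
recall, wss = screening_validation(labels, scores, threshold=0.5)
print(f"recall={recall:.2f} WSS={wss:.2f}")
```

In this toy case the tool would spare reviewers most of the reading but miss one of four relevant studies. A recall of 0.75 would typically be unacceptable for a systematic review, and the threshold would need lowering until recall reaches the target.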

Specialized Considerations for Machine Learning Domains

Different domains within machine learning research present unique challenges and considerations for systematic literature review. Reviews of natural language processing research, for example, may need to account for the languages covered, the linguistic characteristics of datasets, and the difficulties of cross-lingual evaluation. Reviews of computer vision research may instead focus on image dataset properties, annotation quality, and task complexity, all of which affect how comparable studies are.

Deep learning research presents particular challenges for systematic review due to the rapid evolution of architectures, the influence of implementation details on performance, and the computational requirements that may limit study replication. Reviews of deep learning literature must carefully consider factors such as network architecture variations, training procedures, optimization algorithms, and hardware specifications that can significantly influence reported results.

Reporting Standards and Guidelines

The reporting of systematic literature reviews in machine learning research should follow established guidelines while adapting to the specific characteristics of AI research. The PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) framework provides a foundation for systematic review reporting, but modifications may be necessary to address the unique aspects of machine learning literature synthesis.
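PRISMA-style reporting accounts for every record from search to inclusion and presents these counts as a flow diagram. The sketch below derives the main counts in that diagram from stage-level exclusions and checks that they are internally consistent. The class name and all numbers are hypothetical. The full PRISMA 2020 diagram also includes additional boxes, for example for studies identified through other methods.

```python
from dataclasses import dataclass


@dataclass
class PrismaFlow:
    """Counts behind a simplified PRISMA 2020-style flow diagram."""

    identified: dict[str, int]          # records found, per database or source
    duplicates_removed: int             # removed before screening
    excluded_at_screening: int          # excluded on title and abstract
    reports_not_retrieved: int          # full texts that could not be obtained
    excluded_full_text: dict[str, int]  # full-text exclusions, by reason

    def summary(self) -> dict[str, int]:
        """Derive each box of the flow and check that no stage goes negative."""
        identified = sum(self.identified.values())
        screened = identified - self.duplicates_removed
        sought = screened - self.excluded_at_screening
        assessed = sought - self.reports_not_retrieved
        included = assessed - sum(self.excluded_full_text.values())
        if min(screened, sought, assessed, included) < 0:
            raise ValueError("more records excluded than were available at some stage")
        return {
            "identified": identified,
            "screened": screened,
            "sought_for_retrieval": sought,
            "assessed_for_eligibility": assessed,
            "included": included,
        }


# Hypothetical counts for illustration.
flow = PrismaFlow(
    identified={"Scopus": 420, "IEEE Xplore": 310, "arXiv": 270},
    duplicates_removed=180,
    excluded_at_screening=690,
    reports_not_retrieved=5,
    excluded_full_text={"no quantitative evaluation": 40, "out of scope": 38},
)
print(flow.summary())
```

Recording full-text exclusions by reason, rather than as a single total, matches what the diagram asks reviewers to report.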

Transparency in reporting becomes particularly important in machine learning systematic reviews due to the technical complexity of the reviewed studies and the potential for subtle methodological differences to significantly influence conclusions. Detailed reporting of search strategies, selection criteria, data extraction procedures, and synthesis methods enables other researchers to evaluate the review’s credibility and potentially replicate or extend the findings.

Comparing alternative approaches to systematic literature review in machine learning highlights their relative strengths and limitations. Understanding these methodological trade-offs is essential for selecting appropriate review strategies and interpreting review findings within the broader context of AI research.

Challenges in AI Literature Review

Systematic literature reviews in artificial intelligence face several unique challenges that distinguish them from reviews in more established scientific domains. The rapid pace of innovation in machine learning means that the literature landscape changes quickly, with new techniques, datasets, and evaluation metrics being introduced frequently. This dynamic environment requires reviewers to carefully consider the temporal scope of their reviews and the potential for recent developments to significantly alter the conclusions drawn from the literature.

The interdisciplinary nature of AI research presents additional challenges, as relevant studies may be published across diverse venues spanning computer science, statistics, cognitive science, neuroscience, and domain-specific application areas. This distribution requires comprehensive search strategies that extend beyond traditional computer science publication venues while maintaining focus on the specific research questions being addressed.

Quality and Bias Assessment in ML Research

Quality assessment in machine learning research requires evaluation frameworks that address the unique characteristics of computational research, including reproducibility concerns, dataset bias considerations, and evaluation methodology appropriateness. Traditional quality assessment criteria developed for clinical or social science research may not adequately capture the methodological rigor relevant to machine learning studies.

Bias assessment in AI research must consider various sources of potential bias, including dataset selection bias, algorithmic bias, evaluation metric bias, and publication bias. The prevalence of positive results in machine learning publications raises concerns about publication bias, where negative or null results may be underrepresented in the literature. Systematic reviewers must develop strategies to identify and account for these various forms of bias in their synthesis of the literature.
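For quantitative syntheses, a common check for small-study effects is Egger's regression test. It regresses each study's standardized effect (the effect divided by its standard error) on its precision (one over the standard error), and an intercept far from zero indicates funnel-plot asymmetry. The sketch below computes only the intercept. The function name and data are hypothetical. A complete test also needs the intercept's standard error and a significance test. Asymmetry can have causes other than publication bias, and such tests are generally not recommended with fewer than about ten studies.

```python
def egger_intercept(effects, std_errors):
    """Intercept of Egger's regression of effect/SE on 1/SE (ordinary least squares).

    A value far from zero suggests funnel-plot asymmetry. This sketch omits
    the intercept's standard error and the accompanying t-test.
    """
    if len(effects) != len(std_errors) or len(effects) < 3:
        raise ValueError("need matching effects and standard errors for at least three studies")
    x = [1.0 / se for se in std_errors]                  # precision
    y = [e / se for e, se in zip(effects, std_errors)]   # standardized effect
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must differ across studies")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x


# Hypothetical studies: the least precise ones report the largest gains.
effects = [0.10, 0.20, 0.30, 0.50]
std_errors = [0.05, 0.10, 0.15, 0.25]
print(round(egger_intercept(effects, std_errors), 3))
```

In this constructed example every study has the same standardized effect (2), so the slope is zero and the intercept is about 2. That is the pattern expected when small, imprecise studies report inflated gains. If all studies reported the same effect regardless of precision, the intercept would be near zero.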

Emerging Trends and Future Directions

The field of systematic literature review in artificial intelligence continues to evolve, with emerging trends reflecting both advances in review methodology and changes in the AI research landscape. The increasing availability of preprint servers and rapid publication venues requires reviewers to develop strategies for incorporating rapidly evolving literature while maintaining quality standards.

The application of artificial intelligence techniques to the systematic review process itself represents a promising area of methodological development. Machine learning algorithms are being developed to assist with various phases of the review process, from automated study identification and screening to data extraction and synthesis. These developments have the potential to significantly improve the efficiency and comprehensiveness of systematic reviews while maintaining methodological rigor.

Impact Assessment and Knowledge Translation

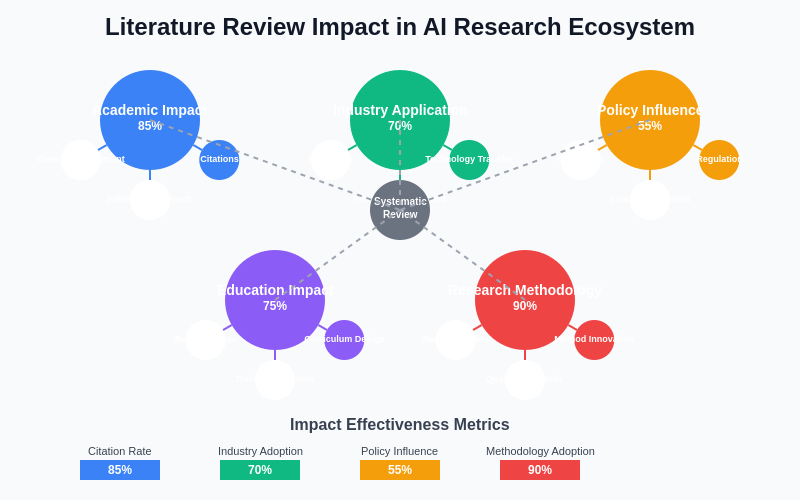

The impact of systematic literature reviews in machine learning extends beyond academic knowledge synthesis to influence practical applications, policy decisions, and future research directions. Effective knowledge translation requires careful consideration of the target audience and the appropriate presentation of findings to maximize their utility for decision-making processes.

Impact assessment of systematic reviews in AI research should consider both academic metrics such as citations and practical applications such as influence on industry standards, regulatory decisions, or clinical practice guidelines. The development of frameworks for assessing the real-world impact of AI literature reviews represents an important area for future methodological research.

Systematic reviews therefore influence the broader artificial intelligence research ecosystem through multiple pathways, from academic citation to industry standards and regulatory decisions. Understanding these impact mechanisms is essential for maximizing the value and use of systematic review findings in both academic and practical contexts.

Collaborative and International Approaches

The global nature of artificial intelligence research necessitates collaborative and international approaches to systematic literature review that can capture the full diversity of research perspectives and methodological approaches. International collaboration in systematic reviews can help address language barriers, cultural differences in research practices, and variations in publication patterns across different research communities.

Collaborative review platforms and shared methodological resources are emerging to support large-scale systematic reviews that require expertise across multiple domains and geographic regions. These collaborative approaches can improve the comprehensiveness and quality of reviews while distributing the substantial workload involved in systematic literature synthesis.

Training and Capacity Building

The specialized skills required for conducting high-quality systematic literature reviews in machine learning necessitate targeted training and capacity building initiatives. Traditional systematic review training programs may not adequately address the unique challenges and technical requirements of AI literature synthesis, creating a need for specialized educational resources and training programs.

Capacity building in AI systematic review methodology should address two kinds of expertise: technical skills in search strategy development, data extraction, and synthesis techniques; and domain-specific knowledge about machine learning research practices, evaluation methodologies, and quality assessment criteria. The development of standardized training programs and certification processes could help ensure consistent quality standards across systematic reviews in the field.

Integration with Research Infrastructure

The integration of systematic literature review processes with broader research infrastructure represents an important consideration for maximizing the efficiency and impact of review activities. This integration includes connections with research databases, computational resources, collaboration platforms, and knowledge management systems that support the research enterprise.

The development of specialized infrastructure for AI systematic reviews, including databases optimized for machine learning literature, automated analysis tools, and collaborative platforms designed for technical literature synthesis, could significantly improve the efficiency and quality of review processes. Investment in such infrastructure represents a strategic priority for supporting evidence-based decision-making in artificial intelligence research and applications.

Disclaimer

This article is for informational and educational purposes only and does not constitute professional research advice. The methodologies and approaches described should be adapted to specific research contexts and requirements. Readers should consult with experienced systematic review methodologists and domain experts when planning and conducting literature reviews in artificial intelligence and machine learning. The effectiveness of different review approaches may vary depending on the specific research questions, available resources, and intended applications of the review findings.