Modern applications face rising demands for performance, scalability, and reliability, particularly as artificial intelligence workloads become more complex and resource-intensive. Traditional load testing approaches, while effective for conventional applications, often fall short when dealing with the dynamic, unpredictable nature of AI-powered systems and the user interactions they enable. This has given rise to a new generation of load testing tools and methodologies, some of which use artificial intelligence themselves, that aim to produce more realistic, adaptive, and comprehensive performance assessments.

The integration of AI into load testing represents a fundamental shift from static, predefined test scenarios to dynamic, intelligent simulation that adapts in real-time to application behavior and performance characteristics.

The Evolution of Load Testing in the AI Era

Traditional load testing methodologies were designed for relatively predictable application behaviors where user journeys followed well-defined patterns and system responses remained consistent across similar requests. However, AI-powered applications introduce unprecedented complexity through machine learning inference, dynamic content generation, and personalized user experiences that vary dramatically based on context, user history, and real-time data processing. This complexity necessitates sophisticated testing approaches that can simulate the unpredictable nature of AI workloads while providing meaningful insights into system performance under realistic conditions.

The emergence of tools like Artillery and K6 has transformed the load testing landscape by introducing programmable, scriptable testing frameworks that can adapt to complex scenarios and provide detailed performance analytics. These tools represent a significant advancement over traditional load testing solutions by offering developers and performance engineers the flexibility to create sophisticated test scenarios that accurately reflect real-world usage patterns and system behaviors.

Artillery: Advanced Load Testing for Modern Applications

Artillery has established itself as a powerful, developer-friendly load testing toolkit that excels in creating complex, realistic test scenarios for modern web applications and APIs. The platform’s strength lies in its ability to handle sophisticated testing workflows that include authentication, session management, dynamic data handling, and multi-step user journeys that closely mirror real user behavior. Artillery’s architecture is particularly well-suited for testing AI-powered applications because of its flexibility in handling variable response times, dynamic content, and complex API interactions that are characteristic of machine learning systems.

The tool’s configuration-driven approach allows teams to define complex load testing scenarios using YAML configuration files that can be version-controlled, shared across teams, and integrated into continuous integration pipelines. This approach democratizes load testing by making it accessible to developers who may not have specialized performance testing expertise while still providing the sophisticated features required for comprehensive performance assessment. Artillery’s ability to simulate realistic user behavior patterns, including think times, session persistence, and multi-phase testing scenarios, makes it particularly effective for testing AI applications where user interactions often involve complex, multi-step processes.

Artillery’s reporting and analytics capabilities provide detailed insights into system performance characteristics, including response time distributions, error rates, throughput metrics, and custom performance indicators that can be tailored to specific application requirements. The platform’s real-time monitoring capabilities enable teams to observe system behavior during testing and make immediate adjustments to test parameters or system configurations based on observed performance patterns.

The combination of human expertise and AI-powered insights creates a comprehensive approach to performance testing that addresses both technical requirements and business objectives.

K6: Scriptable Performance Testing for Complex Scenarios

K6 represents a modern approach to load testing that prioritizes developer experience, scriptability, and integration with contemporary development workflows. The K6 engine itself is written in Go, while test scripts are written in JavaScript, so developers can create sophisticated test scenarios using familiar programming constructs while leveraging the platform’s performance testing engine. This approach is particularly valuable for AI applications where test scenarios often require complex logic, dynamic data generation, and response validation that goes beyond simple HTTP status code checking.

The platform’s architecture is designed to handle high-performance load generation while maintaining precision in timing measurements and resource utilization tracking. K6’s ability to simulate thousands of virtual users while maintaining accurate performance metrics makes it ideal for testing AI systems that may exhibit significant performance variations based on model complexity, data processing requirements, and concurrent user loads. The tool’s modular design allows teams to extend functionality through custom modules and integrations, enabling specialized testing approaches for unique AI application requirements.

K6’s cloud integration capabilities provide scalable load generation that can simulate realistic global user distributions and network conditions. This feature is particularly important for AI applications that serve global user bases and must maintain consistent performance across diverse geographical locations and network conditions. The platform’s ability to generate load from multiple geographic regions simultaneously enables comprehensive testing of content delivery networks, edge computing deployments, and globally distributed AI services.

The tool’s comprehensive metrics collection and analysis capabilities provide deep insights into application performance characteristics, including custom metrics that can be tailored to specific AI application requirements such as model inference times, data processing latencies, and resource utilization patterns. K6’s integration with popular monitoring and observability platforms enables seamless incorporation of load testing insights into broader system monitoring and alerting frameworks.

Advanced Performance Simulation Techniques

Modern AI applications require sophisticated performance simulation approaches that go beyond traditional load testing methodologies. These applications often exhibit non-linear performance characteristics where system behavior under load can vary dramatically based on factors such as model complexity, input data characteristics, cache hit rates, and dynamic resource allocation. Advanced performance simulation techniques address these challenges by incorporating intelligent workload generation, adaptive testing strategies, and comprehensive system behavior modeling.

Intelligent workload generation involves creating test scenarios that accurately reflect the complex, often unpredictable nature of real user interactions with AI systems. This includes simulating varying input data complexity, dynamic user preferences, personalization algorithms, and the cascading effects of machine learning model updates on system performance. Advanced simulation frameworks can generate synthetic but realistic datasets that exercise AI models across their full operational range while maintaining the privacy and security constraints that are critical in production environments.
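As a rough illustration of varying input complexity, the sketch below draws synthetic prompt sizes from a log-normal distribution, so that most requests are short and a few are very long. The function name, the distribution parameters, and the payload shape are assumptions made for this example and are not part of Artillery or K6. The placeholder vocabulary stands in for real text, so no production data is involved.

```python
import math
import random


def synthetic_prompts(count, seed=42, median_tokens=60, sigma=0.8, max_tokens=2000):
    """Generate synthetic request payloads with a long-tailed size distribution."""
    rng = random.Random(seed)  # seeded so a test run can be reproduced
    vocabulary = ["alpha", "beta", "gamma", "delta", "epsilon"]  # placeholder words only
    payloads = []
    for i in range(count):
        # For lognormvariate(mu, sigma), exp(mu) is the median of the distribution.
        size = int(rng.lognormvariate(math.log(median_tokens), sigma))
        size = max(1, min(size, max_tokens))  # clamp to a sane range
        text = " ".join(rng.choice(vocabulary) for _ in range(size))
        payloads.append({"id": i, "tokens": size, "prompt": text})
    return payloads
```

The generated payloads could be written to a CSV or JSON file and fed to whichever load tool is in use. Fixing the seed keeps two runs comparable.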

Adaptive testing strategies leverage real-time performance feedback to automatically adjust test parameters, user behavior patterns, and load characteristics based on observed system responses. This approach enables more comprehensive exploration of system performance boundaries while avoiding the limitations of static test scenarios that may not adequately stress all system components. Advanced simulation platforms can automatically identify performance bottlenecks, scale testing intensity to explore system limits, and generate detailed reports on system behavior under various load conditions.
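One simple form of adaptive testing is a step-up search: raise the request rate in steps and stop as soon as the observed latency exceeds a budget. The sketch below assumes a caller-supplied `measure_p95` function that runs a short test at a given rate and returns the p95 latency in milliseconds. That function, the default rates, and the 500 ms budget are all hypothetical. `fake_system` is a stand-in used only to make the example runnable.

```python
def find_load_limit(measure_p95, start_rate=10, step=10, max_rate=200, p95_budget_ms=500.0):
    """Raise the request rate step by step until the p95 latency budget is exceeded.

    measure_p95: function taking a rate (requests/s) and returning p95 latency in ms.
    Returns (highest rate that stayed within budget, or None; list of (rate, p95) pairs).
    """
    history = []
    last_good = None
    rate = start_rate
    while rate <= max_rate:
        p95 = measure_p95(rate)
        history.append((rate, p95))
        if p95 > p95_budget_ms:
            break  # stop escalating once the budget is breached
        last_good = rate
        rate += step
    return last_good, history


def fake_system(rate):
    """Stand-in for a real test run: latency grows quadratically with load."""
    return 100.0 + 0.02 * rate * rate


limit, history = find_load_limit(fake_system)
print(f"highest rate within budget: {limit} req/s after {len(history)} steps")
```

A real implementation would replace `fake_system` with a call that launches a short Artillery or K6 run and parses its summary output.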

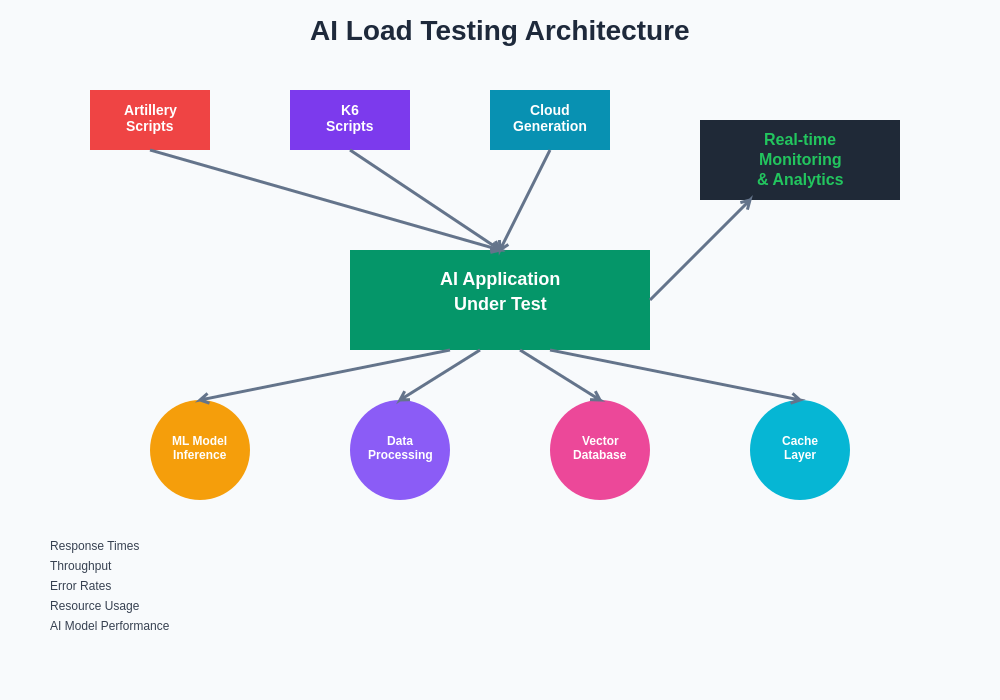

The comprehensive architecture for AI load testing encompasses multiple load generation sources, sophisticated monitoring systems, and specialized components that handle the unique characteristics of AI workloads. This integrated approach ensures that all aspects of AI application performance are thoroughly validated under realistic conditions.

The integration of artificial intelligence into load testing tools themselves represents a significant advancement in performance simulation capabilities. AI-powered testing platforms can analyze historical performance data to generate more realistic test scenarios, predict system behavior under various load conditions, and automatically optimize test configurations for maximum coverage and efficiency.

The combination of multiple AI tools creates a comprehensive testing ecosystem that addresses every aspect of performance validation and system optimization.

Implementing AI-Specific Load Testing Strategies

AI applications present unique testing challenges that require specialized approaches and considerations beyond traditional web application testing. Machine learning models introduce variability in processing times based on input complexity, model architecture, and available computational resources. This variability necessitates testing strategies that account for worst-case scenarios, resource contention, and the dynamic nature of AI workloads. Effective AI load testing strategies must consider model warm-up times, cache optimization, batch processing efficiency, and the impact of concurrent requests on model inference performance.

Implementing realistic AI load testing requires an understanding of the specific characteristics of machine learning workloads, including the relationship between input data characteristics and processing requirements, the impact of model updates on system performance, and the dependencies between different system components. Successful testing strategies incorporate these factors through test scenario design that exercises the full range of expected system behaviors while maintaining realistic user interaction patterns.

Monitoring and observability during AI load testing require specialized metrics and analysis approaches that go beyond traditional web application performance indicators. Key metrics for AI applications include model inference latency, resource utilization patterns, queue depths for batch processing, cache hit rates, and the performance impact of model updates or retraining operations. Comprehensive monitoring strategies must also account for the cascading effects of performance issues in AI systems, where bottlenecks in one component can have far-reaching impacts on overall system performance.
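To make these metrics concrete, the sketch below aggregates per-request samples into a small summary. The `Sample` fields and the summary keys are assumptions for illustration. A real system would take these values from its own telemetry rather than from a hand-built list.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    inference_ms: float  # time spent in model inference for this request
    cache_hit: bool      # whether the response came from a cache
    queue_depth: int     # batch queue depth observed when the request arrived
    ok: bool             # whether the request succeeded


def summarize(samples):
    """Reduce per-request samples to the AI-specific metrics discussed above."""
    n = len(samples)
    if n == 0:
        raise ValueError("no samples to summarize")
    return {
        "requests": n,
        "error_rate": sum(not s.ok for s in samples) / n,
        "cache_hit_rate": sum(s.cache_hit for s in samples) / n,
        "mean_inference_ms": sum(s.inference_ms for s in samples) / n,
        "max_queue_depth": max(s.queue_depth for s in samples),
    }
```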

Artillery Configuration and Best Practices

Effective Artillery implementation for AI applications requires careful consideration of configuration parameters, test scenario design, and performance metric collection strategies. Artillery’s flexible configuration system enables teams to create sophisticated test scenarios that accurately simulate the complex interaction patterns typical of AI-powered applications. Best practices for Artillery configuration include proper session management, realistic think times, dynamic data generation, and comprehensive error handling that accounts for the variable nature of AI system responses.
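The sketch below shows what such a configuration might contain: a warm-up phase, a ramp phase, a captured session id, and a think time between steps. Artillery scripts are normally written in YAML. Here the same structure is built as a Python dictionary and printed as JSON, which should be checked against the Artillery documentation for the version in use. The target URL, endpoint paths, and payload fields are hypothetical.

```python
import json


def build_artillery_script(target, warmup_s=60, ramp_s=300, peak_rate=20):
    """Return an Artillery-style test script as a plain dict."""
    return {
        "config": {
            "target": target,
            "phases": [
                # Low, steady arrival rate so caches and connections warm up.
                {"name": "warm-up", "duration": warmup_s, "arrivalRate": 1},
                # Ramp new virtual users per second from 1 up to peak_rate.
                {"name": "ramp", "duration": ramp_s, "arrivalRate": 1, "rampTo": peak_rate},
            ],
        },
        "scenarios": [
            {
                "name": "chat session",
                "flow": [
                    # Hypothetical endpoint: start a session and capture its id.
                    {"post": {"url": "/sessions",
                              "json": {"user": "load-test"},
                              "capture": {"json": "$.id", "as": "sessionId"}}},
                    {"think": 3},  # pause 3 seconds, like a user reading a reply
                    # Reuse the captured id so the second step stays in the same session.
                    {"post": {"url": "/sessions/{{ sessionId }}/messages",
                              "json": {"prompt": "Summarize this ticket"}}},
                ],
            }
        ],
    }


script = build_artillery_script("https://staging.example.com")
print(json.dumps(script, indent=2))
```

Generating the script from code is a design choice rather than a requirement. It helps when phases or payloads need to be derived from other data, while a hand-written YAML file is usually easier to review.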

Artillery’s ability to handle complex authentication flows and session persistence makes it particularly well-suited for testing AI applications that require user authentication, personalization, or state management across multiple interactions. The platform’s support for custom functions and plugins enables teams to extend functionality for specialized AI testing requirements such as dynamic input generation, response validation, and custom performance metric collection.

Getting accurate results from Artillery-based AI testing depends on how load is generated, how resources are allocated to the load generators, and how tests are executed. In practice this means tuning virtual user behavior patterns, handling test data efficiently, and configuring monitoring and logging so that detailed system behavior is captured during the run.

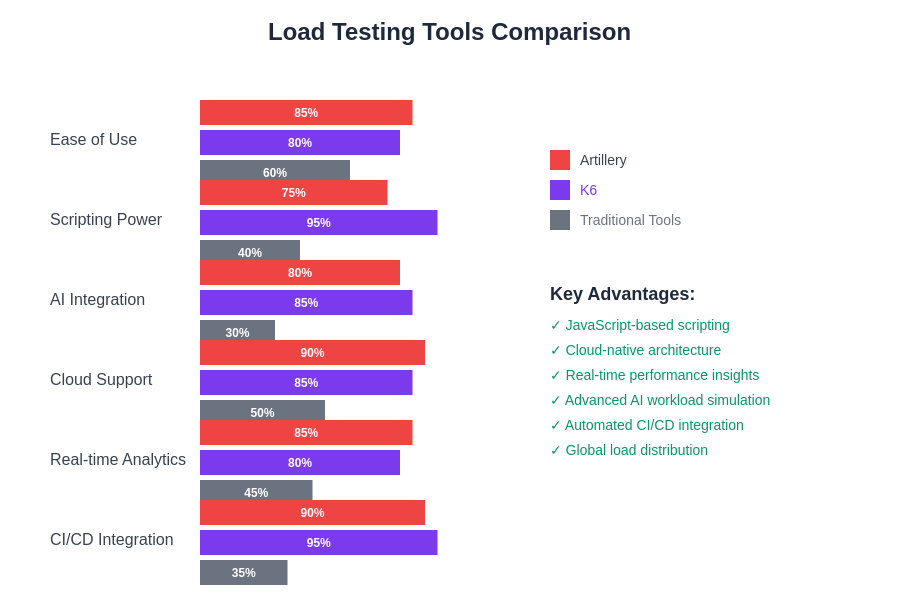

The comparison of modern load testing tools shown above covers AI integration capabilities, scripting flexibility, and cloud-native features. In it, Artillery and K6 rate well on the criteria that matter most for AI application testing, particularly real-time analytics and continuous integration support.

The integration of Artillery into continuous integration and deployment pipelines enables automated performance validation that ensures AI applications maintain acceptable performance characteristics throughout the development lifecycle. This integration requires careful configuration of test thresholds, automated reporting, and failure handling strategies that provide meaningful feedback to development teams while maintaining the efficiency of automated deployment processes.

K6 Scripting for AI Application Testing

K6’s JavaScript-based scripting environment provides powerful capabilities for creating sophisticated AI application test scenarios that go beyond simple HTTP request generation. Effective K6 scripting for AI applications involves implementing dynamic data generation, complex response validation, and sophisticated user behavior simulation that accurately reflects real-world usage patterns. The platform’s scripting capabilities enable teams to create test scenarios that adapt to application responses, handle variable processing times, and validate complex AI system outputs.

Advanced K6 scripting techniques for AI applications include implementing intelligent retry mechanisms for variable response times, creating dynamic input datasets that exercise AI models across their full operational range, and developing custom validation functions that can assess the quality and correctness of AI-generated outputs. These techniques are essential for comprehensive testing of AI systems where simple response time and status code validation may not provide sufficient insight into system behavior and output quality.
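The two ideas in this paragraph, output validation and retries, are sketched below in Python rather than as a K6 script, so the logic can be read and tested on its own. The expected response shape (a JSON object with a `text` field), the length limits, and the function names are assumptions for illustration.

```python
import json
import time


def validate_completion(body, min_chars=1, max_chars=4000):
    """Return a list of problems found in a response body (an empty list means it passed)."""
    try:
        data = json.loads(body)
    except json.JSONDecodeError:
        return ["body is not valid JSON"]
    text = data.get("text") if isinstance(data, dict) else None
    if not isinstance(text, str):
        return ["missing string field 'text'"]
    if not (min_chars <= len(text.strip()) <= max_chars):
        return ["text length outside expected range"]
    return []


def call_with_retry(send, attempts=3, base_delay_s=0.5, sleep=time.sleep):
    """Call send() until its body validates, doubling the delay after each failure."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        body = send()
        problems = validate_completion(body)
        if not problems:
            return body, attempt + 1  # also report how many tries were needed
        if attempt < attempts - 1:
            sleep(base_delay_s * (2 ** attempt))
    raise RuntimeError(f"gave up after {attempts} attempts: {problems}")
```

Retries can hide latency problems, so it is worth recording the number of attempts as its own metric instead of reporting only the final successful response.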

K6’s modular architecture enables integration with external systems and services that are often critical components of AI application ecosystems. This includes integration with data storage systems, machine learning model serving platforms, and external APIs that provide data or services to AI applications. Effective testing strategies leverage these integration capabilities to create comprehensive test scenarios that exercise the full system architecture rather than isolated components.

Getting accurate, reliable measurements from K6-based AI testing depends on script efficiency, the resources available to the load generator, and how tests are executed. In practice this means handling test data efficiently, keeping script execution patterns lean, and configuring monitoring and metrics collection so that detailed performance information is captured during the run.

Cloud-Based Load Testing and Scalability

Modern AI applications often require global scale and must maintain consistent performance across diverse geographical locations, network conditions, and user demographics. Cloud-based load testing platforms provide the scalability and geographic distribution necessary for comprehensive performance validation of globally deployed AI systems. These platforms enable teams to simulate realistic global user distributions while maintaining precise control over test parameters and performance measurements.
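Simulating a global user distribution starts with deciding how many virtual users each region should generate. The sketch below splits a total across regions in proportion to traffic weights, using the largest-remainder method so the parts always sum to the total. The region names and weights are hypothetical. How the resulting numbers are passed to a cloud load testing platform depends on that platform.

```python
def allocate_users(total_users, region_weights):
    """Split total_users across regions in proportion to their weights."""
    weight_sum = sum(region_weights.values())
    if weight_sum <= 0:
        raise ValueError("weights must sum to a positive number")
    exact = {r: total_users * w / weight_sum for r, w in region_weights.items()}
    allocation = {r: int(v) for r, v in exact.items()}  # floor of each share
    leftover = total_users - sum(allocation.values())
    # Hand the remaining users to the regions with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda r: exact[r] - allocation[r], reverse=True)
    for region in by_remainder[:leftover]:
        allocation[region] += 1
    return allocation


print(allocate_users(1000, {"us-east": 5, "eu-west": 3, "ap-south": 2}))
```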

The implementation of cloud-based load testing for AI applications requires careful consideration of data privacy, security, and compliance requirements that may restrict where testing can be conducted and what data can be used in test scenarios. Effective cloud testing strategies address these constraints through sophisticated data handling, encryption, and geographic restrictions while maintaining the comprehensiveness and accuracy of performance assessments.

Cloud-based testing platforms provide access to diverse infrastructure configurations and network conditions that enable comprehensive validation of AI application performance across different deployment scenarios. This includes testing performance on various hardware configurations, different geographic regions, and diverse network conditions that accurately reflect real-world deployment environments.

The integration of cloud-based load testing into continuous integration and deployment pipelines enables automated performance validation at scale while maintaining the efficiency and reliability of automated deployment processes. This integration requires sophisticated orchestration, monitoring, and reporting capabilities that provide meaningful insights into system performance while minimizing the complexity and overhead of automated testing processes.

Performance Monitoring and Analysis

Comprehensive performance monitoring and analysis are critical components of effective AI load testing strategies. AI applications generate complex performance data that requires sophisticated analysis techniques to identify bottlenecks, optimize system configurations, and validate performance requirements. Effective monitoring strategies for AI applications go beyond traditional web application metrics to include specialized indicators such as model inference times, resource utilization patterns, and the performance impact of dynamic system behaviors.

Advanced analysis techniques for AI performance data include statistical analysis of variable response times, trend analysis for identifying performance degradation patterns, and correlation analysis for understanding the relationships between different system components and performance characteristics. These techniques enable teams to gain deep insights into system behavior and make informed decisions about optimization strategies and capacity planning.
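The three techniques named above can each be sketched in a few lines. The example uses nearest-rank percentiles, a least-squares slope over sample order as a crude trend indicator, and the Pearson coefficient for correlation. These are common textbook choices and not necessarily what a given tool reports. The function names are illustrative.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def slope(ys):
    """Least-squares slope of ys against sample index; positive means values drift upward."""
    n = len(ys)
    mean_x = (n - 1) / 2
    mean_y = sum(ys) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(ys))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def pearson(xs, ys):
    """Pearson correlation between two equally long series, e.g. queue depth and latency."""
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)
```

Percentiles matter more than averages here because a single slow inference, such as a cold model load, can dominate the tail while leaving the median almost unchanged.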

The implementation of real-time performance monitoring during load testing enables immediate identification of performance issues and dynamic adjustment of testing parameters based on observed system behavior. This capability is particularly important for AI applications where performance characteristics may change dynamically based on system load, data characteristics, or model behavior.

Automated performance analysis and reporting capabilities enable teams to quickly identify and prioritize performance issues while maintaining comprehensive documentation of system behavior across different testing scenarios. These capabilities are essential for maintaining performance standards throughout the development lifecycle and ensuring that AI applications meet their performance requirements under various operational conditions.

Integration with CI/CD Pipelines

The integration of AI load testing into continuous integration and continuous deployment pipelines represents a critical advancement in ensuring that AI applications maintain acceptable performance characteristics throughout their development lifecycle. This integration requires sophisticated orchestration of testing activities, automated analysis of performance results, and intelligent decision-making processes that can determine when applications are ready for deployment based on performance criteria.

Effective CI/CD integration for AI load testing involves implementing automated test execution, intelligent test result analysis, and sophisticated failure handling that accounts for the variable nature of AI system performance. This includes implementing adaptive test thresholds that account for the inherent variability in AI system behavior while maintaining strict performance standards that ensure acceptable user experiences.
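One possible reading of an adaptive threshold is a limit derived from recent baseline runs, bounded by a hard cap, instead of a single fixed number. The sketch below sets the limit at the baseline mean plus a multiple of the standard deviation. The three-sigma default, the 2000 ms cap, and the function names are assumptions and would need tuning for a real pipeline.

```python
import statistics


def adaptive_threshold(baseline_p95s, sigmas=3.0, hard_cap_ms=2000.0):
    """Mean of recent baseline p95 values plus sigmas * their standard deviation, never above the cap."""
    mean = statistics.mean(baseline_p95s)
    spread = statistics.stdev(baseline_p95s) if len(baseline_p95s) > 1 else 0.0
    return min(mean + sigmas * spread, hard_cap_ms)


def gate(current_p95, baseline_p95s, **kwargs):
    """Compare the current run against the adaptive limit and report the outcome."""
    limit = adaptive_threshold(baseline_p95s, **kwargs)
    return {"passed": current_p95 <= limit, "limit_ms": limit, "observed_ms": current_p95}


result = gate(450.0, [400.0, 420.0, 410.0, 430.0, 390.0])
print(result)
```

In a pipeline, the calling script would typically exit with a non-zero status when `passed` is false so the build fails. The hard cap keeps a noisy baseline from loosening the limit indefinitely.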

The automation of performance validation through CI/CD integration enables teams to maintain high performance standards while accelerating development and deployment processes. This requires a careful balance between comprehensive testing coverage and pipeline efficiency, often achieved through intelligent test selection, parallel test execution, and optimized resource allocation strategies.

Advanced CI/CD integration techniques for AI applications include implementing conditional testing strategies that adapt test coverage based on code changes, automated performance regression detection, and intelligent deployment decisions that consider both functional correctness and performance characteristics. These techniques enable teams to maintain high quality standards while maximizing development velocity and minimizing deployment risks.
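Conditional testing can be as simple as mapping changed file paths to the load test suites they should trigger. The sketch below is a minimal version of that idea. The path patterns, suite names, and the fallback suite are hypothetical and would be replaced by a project's own layout.

```python
from fnmatch import fnmatch

# Hypothetical mapping from path patterns to load test suites; first match wins.
SUITE_RULES = [
    ("services/inference/*", "inference-load"),
    ("services/gateway/*", "api-smoke"),
    ("models/*", "inference-load"),
    ("docs/*", None),  # documentation changes need no load test
]


def select_suites(changed_files, rules=SUITE_RULES, default="api-smoke"):
    """Return the sorted set of suites to run for a list of changed paths."""
    suites = set()
    for path in changed_files:
        for pattern, suite in rules:
            if fnmatch(path, pattern):
                if suite is not None:
                    suites.add(suite)
                break
        else:
            suites.add(default)  # unknown paths fall back to the cheap default suite
    return sorted(suites)


print(select_suites(["services/inference/server.py", "docs/changelog.md"]))
```

Falling back to a cheap default suite for unrecognized paths is a deliberate choice: it trades a little pipeline time for protection against rules that have gone stale.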

Future Directions in AI Load Testing

The future of AI load testing is being shaped by advances in artificial intelligence, machine learning, and automated testing technologies that promise to further revolutionize how teams approach performance validation for complex AI systems. Emerging trends include the use of AI to automatically generate realistic test scenarios, intelligent analysis of performance data to predict system behavior, and automated optimization of testing strategies based on application characteristics and performance requirements.

Advanced AI-powered testing platforms are beginning to incorporate machine learning algorithms that can learn from historical performance data to generate more effective test scenarios, predict system behavior under various load conditions, and automatically optimize testing configurations for maximum effectiveness and efficiency. These capabilities represent a significant advancement in testing automation that promises to reduce the complexity and overhead of comprehensive performance validation while improving the accuracy and reliability of testing results.

The integration of AI load testing with broader system observability and monitoring frameworks enables comprehensive understanding of system behavior across the entire application lifecycle. This integration provides valuable insights into the relationship between development practices, deployment strategies, and system performance that can inform both immediate optimization decisions and long-term architectural planning.

The continued evolution of AI applications and infrastructure technologies will require ongoing advancement in load testing methodologies and tools to ensure that performance validation keeps pace with the increasing complexity and sophistication of AI systems. This includes developing testing approaches for emerging AI technologies, integrating with new infrastructure paradigms, and addressing the evolving security and privacy requirements of AI applications.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of AI load testing technologies and their applications in software development. Readers should conduct their own research and consider their specific requirements when implementing AI load testing tools and strategies. The effectiveness of load testing approaches may vary depending on specific use cases, application architectures, and performance requirements. Always ensure that load testing activities comply with relevant terms of service, privacy regulations, and ethical guidelines for your specific deployment environment.