The rapid adoption of artificial intelligence and machine learning across industries has created a need for governance frameworks that ensure model reliability, compliance, and accountability throughout the model lifecycle. AI model governance encompasses version control, deployment management, performance monitoring, and regulatory compliance, and it forms the backbone of responsible AI development and deployment in enterprise environments.

The complexity of modern AI systems demands robust governance structures that can adapt to rapidly evolving technological landscapes while maintaining strict oversight and control over model behavior, performance, and ethical implications.

The Foundation of AI Model Governance

Effective AI model governance begins with establishing comprehensive frameworks that address the entire machine learning lifecycle, from initial data collection and model development through deployment, monitoring, and eventual retirement. This holistic approach ensures that every aspect of the AI system is subject to appropriate oversight, documentation, and control mechanisms that facilitate accountability, reproducibility, and continuous improvement.

The foundation of robust AI governance rests on several key pillars including data lineage tracking, model versioning, experiment management, deployment orchestration, and performance monitoring. These interconnected components work together to create a transparent and auditable system that enables organizations to maintain control over their AI assets while facilitating innovation and rapid iteration. The implementation of proper governance frameworks not only mitigates risks associated with AI deployment but also enhances the overall quality and reliability of machine learning systems.

Modern AI governance frameworks must accommodate the unique challenges posed by machine learning systems, including their probabilistic nature, dependency on training data quality, susceptibility to model drift, and potential for unintended bias. Traditional software behaves largely as its code specifies, whereas the behavior of an AI model emerges from its training process. Even when a deployed model's parameters stay fixed, its effective performance can degrade over time as the underlying data distributions shift away from what it saw during training, and any retraining changes its behavior again.

Version Control Strategies for Machine Learning Models

Traditional software version control systems, while foundational to AI model governance, require significant adaptation and extension to effectively manage the complexities inherent in machine learning workflows. Machine learning version control encompasses not only code versioning but also data versioning, model artifact management, experiment tracking, and configuration management, creating a multidimensional versioning challenge that demands specialized tools and methodologies.

The implementation of effective version control for machine learning involves managing multiple interconnected components including training datasets, feature engineering pipelines, model architectures, hyperparameter configurations, training scripts, evaluation metrics, and deployment configurations. Each of these components can evolve independently, creating complex dependency relationships that must be carefully tracked and managed to ensure reproducibility and maintain system integrity.

The integration of AI-powered governance tools enables organizations to automate many aspects of model management while maintaining human oversight and control over critical decisions.

Data versioning presents unique challenges in machine learning environments where datasets can be extremely large, frequently updated, and subject to complex transformations and preprocessing steps. Effective data versioning strategies must balance storage efficiency with the need for complete auditability and reproducibility, often employing techniques such as delta compression, content-addressable storage, and metadata-driven versioning to manage the scale and complexity of machine learning datasets.
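As a rough illustration of content-addressable, metadata-driven versioning, the sketch below hashes every file in a dataset directory and derives a single version ID from the resulting manifest. The function names (`file_digest`, `dataset_manifest`, `dataset_version`) and the 16-character ID length are assumptions made for this example, not part of any particular tool.

```python
import hashlib
import json
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def dataset_manifest(root: Path) -> dict:
    """Map each file's path (relative to root) to its content hash."""
    return {
        p.relative_to(root).as_posix(): file_digest(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def dataset_version(manifest: dict) -> str:
    """Derive one version ID from the manifest (hypothetical 16-char ID)."""
    canonical = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:16]
```

Because the ID depends only on file paths and contents, two copies of the same dataset resolve to the same version, and any changed byte produces a new one. Storing the manifest rather than the data itself keeps the version record small even when the dataset is large.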

Model artifact versioning requires careful consideration of the various components that constitute a complete machine learning model, including trained weights, architecture definitions, preprocessing parameters, post-processing logic, and associated metadata. Modern model versioning systems must support branching and merging operations that account for the unique characteristics of machine learning artifacts, including the ability to compare model performance across versions and manage complex deployment scenarios.

Implementing Model Lifecycle Management

Comprehensive model lifecycle management encompasses the systematic approach to managing machine learning models from conception through retirement, ensuring that each stage of the model’s existence is properly governed, documented, and controlled. This lifecycle approach provides organizations with the visibility and control necessary to maintain high-quality AI systems while meeting regulatory requirements and business objectives.

The model development phase requires robust experiment tracking and management capabilities that capture all relevant information about model training runs, including hyperparameters, training data versions, code versions, environmental configurations, and resulting performance metrics. This comprehensive tracking enables teams to reproduce successful experiments, understand the factors that contribute to model performance, and make informed decisions about model selection and deployment.
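One way to picture the tracking described above is a small record that ties a training run to its inputs. The `RunRecord` class and its field names below are hypothetical; real experiment trackers capture far more, but the idea of fingerprinting the inputs separately from the results carries over.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class RunRecord:
    """Hypothetical record of one training run and what produced it."""
    code_version: str       # e.g. a commit hash
    data_version: str       # e.g. a dataset version ID
    hyperparameters: dict
    environment: dict       # e.g. library versions
    metrics: dict = field(default_factory=dict)

    def fingerprint(self) -> str:
        """Hash the inputs only, so reruns of the same setup share an ID."""
        inputs = asdict(self)
        inputs.pop("metrics")
        blob = json.dumps(inputs, sort_keys=True).encode("utf-8")
        return hashlib.sha256(blob).hexdigest()[:12]
```

Two runs with the same fingerprint but noticeably different metrics point to nondeterminism in training, which is itself useful information when deciding whether a result is reproducible.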

Model validation and testing represent critical phases in the lifecycle that require specialized governance approaches tailored to the unique characteristics of machine learning systems. Unlike traditional software testing, ML model validation must account for statistical performance across diverse data distributions, fairness and bias considerations, robustness to adversarial inputs, and behavioral consistency across different operating environments.

The deployment phase introduces additional governance requirements including canary deployments, A/B testing frameworks, rollback mechanisms, and real-time monitoring systems that can detect model degradation or anomalous behavior. Effective deployment governance ensures that models are released safely and can be quickly reverted if issues are detected, minimizing the impact of potential problems on production systems.
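A canary rollout ultimately comes down to a decision rule comparing the new model against the current one. The sketch below is a deliberately simple version of such a rule; the `ArmStats` type, the 500-request minimum, and the 10% relative tolerance are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ArmStats:
    """Request and error counts for one deployment arm."""
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(
    baseline: ArmStats,
    canary: ArmStats,
    min_requests: int = 500,
    max_relative_increase: float = 0.10,
) -> str:
    """Return 'continue', 'promote', or 'rollback' for the canary."""
    # Not enough traffic yet to judge the canary either way.
    if canary.requests < min_requests:
        return "continue"
    # Allow the canary's error rate to exceed the baseline by a small margin.
    allowed = baseline.error_rate * (1 + max_relative_increase)
    return "promote" if canary.error_rate <= allowed else "rollback"
```

A production rule would normally add a statistical test and model-quality metrics beyond error rate, and it would need an absolute tolerance for the case where the baseline error rate is zero.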

Data Lineage and Provenance Tracking

Data lineage tracking forms a cornerstone of effective AI governance by providing complete visibility into the origins, transformations, and usage patterns of data throughout the machine learning pipeline. This comprehensive tracking enables organizations to understand how data flows through their systems, identify potential sources of bias or quality issues, and ensure compliance with data protection regulations and ethical guidelines.

Effective data provenance systems capture detailed information about data sources, collection methodologies, preprocessing steps, feature engineering transformations, and quality validation procedures. This metadata provides the foundation for understanding model behavior, debugging performance issues, and ensuring that models are trained on appropriate and representative data that aligns with intended use cases.

The implementation of robust data lineage tracking requires sophisticated tools and processes that can automatically capture metadata at each stage of the data pipeline while providing intuitive interfaces for exploring and analyzing data relationships. Modern data lineage systems often employ graph-based representations that visualize complex data dependencies and enable impact analysis when changes are made to upstream data sources or processing logic.
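The graph-based view of lineage can be sketched in a few lines: nodes are datasets, features, or models, edges point from a source to whatever is derived from it, and impact analysis is a traversal of everything downstream. The `LineageGraph` class and the node names in the usage example are hypothetical.

```python
from collections import defaultdict, deque


class LineageGraph:
    """Directed graph where an edge means 'derived is built from source'."""

    def __init__(self):
        self._downstream = defaultdict(set)

    def add_edge(self, source: str, derived: str) -> None:
        self._downstream[source].add(derived)

    def impacted_by(self, node: str) -> set:
        """Return every artifact reachable downstream of node (breadth-first)."""
        seen = set()
        queue = deque([node])
        while queue:
            current = queue.popleft()
            for child in self._downstream.get(current, ()):
                if child not in seen:
                    seen.add(child)
                    queue.append(child)
        return seen
```

For example, after registering `raw_events -> sessions -> churn_features -> churn_model`, calling `impacted_by("raw_events")` lists the three downstream artifacts that would need review if the raw source changed.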

The continuous evolution of data governance requirements demands ongoing research and adaptation of tracking methodologies to address emerging challenges and opportunities.

Model Performance Monitoring and Drift Detection

Continuous monitoring of model performance in production environments represents a critical aspect of AI governance that ensures deployed models continue to meet performance expectations and business requirements over time. Machine learning models are subject to various forms of degradation including data drift, concept drift, and performance decay that can significantly impact their effectiveness and reliability.

Data drift detection involves monitoring the statistical properties of input data to identify when the distribution of incoming data differs significantly from the training data distribution. This monitoring enables early detection of scenarios where model performance may be compromised due to changes in the underlying data patterns, environmental conditions, or user behavior that affect the relevance of the training data.
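One common way to compare a production feature distribution against its training distribution is the Population Stability Index (PSI). The sketch below is a minimal standard-library version, assuming a single numeric feature and equal-width bins derived from the training sample; the function name, bin count, and smoothing constant are choices made for this example.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a training and a production sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back if all training values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the training range go into the first or last bin.
            counts[min(max(idx, 0), bins - 1)] += 1
        total = len(values)
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / total, eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

Identical samples give a PSI of zero, and larger values indicate a larger shift. Cut-offs such as 0.1 and 0.25 are often quoted as rules of thumb, but appropriate thresholds depend on the feature and the application.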

Concept drift monitoring focuses on detecting changes in the relationship between input features and target outcomes, which can occur even when input data distributions remain stable. This type of monitoring is particularly challenging because it often requires access to ground truth labels that may not be immediately available in production environments, necessitating the use of proxy metrics and delayed validation approaches.

Performance monitoring systems must be designed to operate efficiently at scale while providing timely alerts when significant degradation is detected. These systems typically employ statistical tests, machine learning-based anomaly detection, and threshold-based alerting to identify potential issues while minimizing false positives that could overwhelm operations teams with unnecessary alerts.
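A simple way to reduce false positives in threshold-based alerting is to require several consecutive breaches before raising an alert. The `DegradationAlert` class below is a hypothetical sketch of that idea, assuming a metric where higher is better.

```python
class DegradationAlert:
    """Fire only after `patience` consecutive readings below the threshold."""

    def __init__(self, threshold: float, patience: int = 3):
        self.threshold = threshold
        self.patience = patience
        self._breaches = 0

    def observe(self, metric_value: float) -> bool:
        """Record one reading; return True when an alert should fire."""
        if metric_value < self.threshold:
            self._breaches += 1
        else:
            # A healthy reading resets the streak.
            self._breaches = 0
        return self._breaches >= self.patience
```

With a threshold of 0.8 and a patience of 3, a single dip to 0.7 is ignored, while three dips in a row trigger the alert. The trade-off is detection delay: a larger patience value means fewer spurious alerts but a slower response to real degradation.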

Compliance and Regulatory Frameworks

The regulatory landscape surrounding artificial intelligence continues to evolve rapidly, with new requirements emerging at local, national, and international levels that impact how organizations must govern their AI systems. Effective AI governance frameworks must be designed to accommodate current regulatory requirements while maintaining flexibility to adapt to future regulatory changes and emerging compliance standards.

Current regulatory frameworks such as GDPR, CCPA, and emerging AI-specific regulations impose various requirements related to data usage, algorithmic transparency, bias prevention, and user rights that directly impact AI model governance practices. Organizations must implement governance systems that can demonstrate compliance with these requirements through comprehensive documentation, audit trails, and reporting capabilities.

The implementation of compliance-ready governance frameworks requires careful consideration of data retention policies, user consent management, algorithmic auditing procedures, and bias testing methodologies. These systems must provide the documentation and evidence necessary to demonstrate compliance during regulatory audits while supporting ongoing compliance monitoring and reporting activities.

Future regulatory developments are likely to impose additional requirements related to algorithmic accountability, explainability, and fairness that will further expand the scope of AI governance frameworks. Organizations that implement robust governance practices today will be better positioned to adapt to these evolving requirements while maintaining operational efficiency and competitive advantage.

Model Explainability and Interpretability

The growing emphasis on algorithmic transparency and accountability has elevated model explainability and interpretability as essential components of comprehensive AI governance frameworks. Organizations must implement systems and processes that can provide appropriate levels of explanation for model decisions, particularly in high-stakes applications where AI decisions significantly impact individuals or business outcomes.

Model interpretability encompasses both global explanations that describe overall model behavior and local explanations that provide insights into specific predictions or decisions. Effective governance frameworks must support both types of explanations while ensuring that explanation systems are themselves reliable, accurate, and appropriately validated for their intended use cases.
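Permutation importance is one widely used model-agnostic technique for global explanations: shuffle one feature at a time and measure how much accuracy drops. The sketch below is a minimal standard-library version, assuming rows are plain Python lists and `predict` is any callable that maps a row to a label; it is an illustration, not a substitute for a tested library implementation.

```python
import random


def permutation_importance(predict, rows, labels, n_features, seed=0):
    """Return the accuracy drop caused by shuffling each feature column."""
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(n_features):
        # Shuffle column j across rows, leaving all other columns untouched.
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(shuffled))
    return importances
```

A feature the model never uses gets an importance of zero, since shuffling it cannot change any prediction. Local explanations, which account for a single prediction, need different techniques and are not covered by this sketch.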

The implementation of explainability systems requires careful consideration of the trade-offs between model performance, explanation quality, and computational efficiency. Different stakeholder groups may require different types and levels of explanation, necessitating flexible explanation systems that can adapt to diverse user needs and regulatory requirements.

Governance frameworks must also address the validation and testing of explanation systems to ensure that provided explanations are accurate, consistent, and helpful for their intended purposes. This validation process is particularly challenging because explanation quality is often subjective and context-dependent, requiring sophisticated evaluation methodologies and ongoing monitoring.

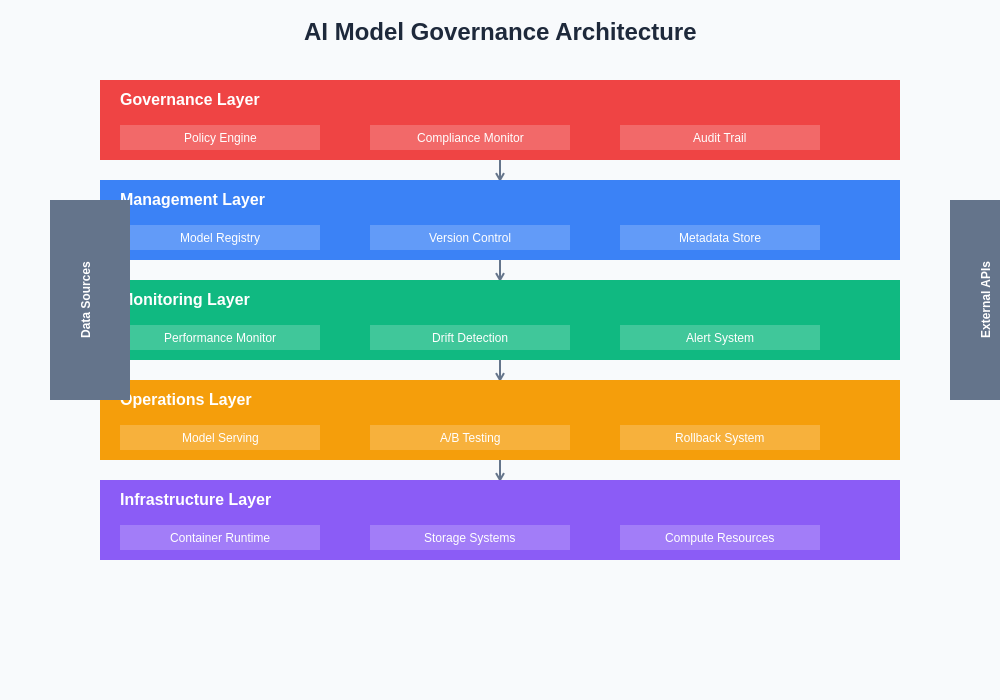

The architectural foundation of comprehensive AI governance systems integrates multiple interconnected components that work together to provide complete oversight and control over machine learning systems throughout their lifecycle. This systematic approach ensures that all aspects of model development, deployment, and monitoring are subject to appropriate governance controls.

Security and Access Control in AI Systems

Security considerations in AI governance extend beyond traditional cybersecurity concerns to encompass unique vulnerabilities and risks associated with machine learning systems. AI models can be subject to adversarial attacks, data poisoning, model extraction, and other specialized threats that require dedicated security measures and monitoring capabilities integrated into the governance framework.

Access control systems for AI environments must provide fine-grained permissions that account for the various roles and responsibilities involved in machine learning workflows. Different team members may require access to different aspects of the AI system, including training data, model artifacts, experiment results, and production deployment configurations, necessitating sophisticated role-based access control systems.
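At its core, role-based access control is a mapping from roles to permitted (resource, action) pairs. The roles, resource names, and permissions below are invented for illustration; an actual deployment would load them from a policy store and log every decision.

```python
# Hypothetical role definitions: each role maps to allowed (resource, action) pairs.
ROLE_PERMISSIONS = {
    "data_engineer": {
        ("training_data", "read"),
        ("training_data", "write"),
    },
    "ml_engineer": {
        ("training_data", "read"),
        ("model_artifact", "read"),
        ("model_artifact", "write"),
        ("experiment_results", "read"),
        ("experiment_results", "write"),
    },
    "release_manager": {
        ("model_artifact", "read"),
        ("deployment_config", "read"),
        ("deployment_config", "write"),
    },
    "auditor": {
        ("training_data", "read"),
        ("model_artifact", "read"),
        ("experiment_results", "read"),
        ("deployment_config", "read"),
    },
}


def is_allowed(roles, resource: str, action: str) -> bool:
    """Grant access if any of the user's roles permits the action."""
    return any(
        (resource, action) in ROLE_PERMISSIONS.get(role, set())
        for role in roles
    )
```

Unknown roles grant nothing, so the check defaults to deny. In this example an ML engineer can update model artifacts but cannot change production deployment configuration, which stays with the release manager.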

Model security monitoring involves detecting anomalous usage patterns, unauthorized access attempts, and potential attacks against deployed models. These monitoring systems must be designed to operate efficiently in production environments while providing comprehensive coverage of potential security threats without impacting model performance or user experience.

The implementation of robust security measures must balance protection against threats with the need for collaboration and innovation within AI development teams. Governance frameworks should facilitate secure sharing of resources and knowledge while maintaining appropriate controls and audit trails for all access and usage activities.

Automated Testing and Validation Frameworks

Comprehensive testing and validation frameworks represent essential components of AI governance that ensure model quality, reliability, and safety throughout the development and deployment lifecycle. These frameworks must address the unique testing challenges posed by machine learning systems, including statistical validation, fairness testing, robustness evaluation, and behavioral consistency assessment.

Automated testing pipelines for machine learning models must encompass both technical performance testing and ethical/fairness validation to ensure that deployed models meet both functional requirements and organizational values. These pipelines should be integrated into the continuous integration and deployment processes while providing comprehensive reporting and documentation of test results.
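Fairness checks can be automated in the same way as accuracy checks. As one example, the sketch below computes the demographic parity difference, meaning the gap between the highest and lowest positive-prediction rate across groups. It assumes binary predictions where 1 is the positive outcome; the function name is a choice made for this example, and demographic parity is only one of several fairness definitions a team might adopt.

```python
from collections import defaultdict


def demographic_parity_difference(predictions, groups) -> float:
    """Gap between the largest and smallest positive rate across groups."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values())
```

A pipeline could fail the build when this gap exceeds a limit agreed on by the organization. What counts as an acceptable gap is a policy decision, not something the code can determine.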

Statistical validation frameworks must account for the probabilistic nature of machine learning models by implementing appropriate sampling strategies, significance testing, and confidence interval estimation. These frameworks should provide reliable assessments of model performance across diverse data distributions and usage scenarios while accounting for potential sources of bias and uncertainty.
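Confidence interval estimation can be illustrated with a percentile bootstrap over per-example correctness. The sketch below assumes `correct` is a list of 0/1 values, one per evaluation example; the resample count and seed are arbitrary defaults chosen for the example.

```python
import random


def bootstrap_accuracy_ci(correct, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile-bootstrap confidence interval for accuracy."""
    rng = random.Random(seed)
    n = len(correct)
    # Resample the evaluation set with replacement and recompute accuracy.
    stats = sorted(
        sum(rng.choices(correct, k=n)) / n for _ in range(n_resamples)
    )
    lower = stats[int((alpha / 2) * n_resamples)]
    upper = stats[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper
```

Reporting the interval alongside the point estimate makes it clearer whether a difference between two model versions is larger than the noise in the evaluation set. The percentile method is the simplest bootstrap variant and can be inaccurate for very small samples.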

Robustness testing involves evaluating model behavior under various stress conditions, including adversarial inputs, corrupted data, and edge cases that may not be well-represented in training datasets. These tests help ensure that deployed models will behave appropriately in real-world scenarios where input data may differ from training conditions.
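A basic robustness check is to perturb each input slightly and see whether the prediction changes. The sketch below adds Gaussian noise to numeric features and reports the fraction of rows whose prediction stays the same across all trials. The function name, noise scale, and trial count are assumptions, and random noise is a much weaker test than a targeted adversarial attack.

```python
import random


def prediction_stability(predict, rows, noise_scale=0.05, trials=20, seed=0):
    """Fraction of rows whose prediction survives every noisy perturbation."""
    rng = random.Random(seed)
    stable = 0
    for row in rows:
        original = predict(row)
        # A row counts as stable only if no perturbed copy flips the prediction.
        if all(
            predict([x + rng.gauss(0, noise_scale) for x in row]) == original
            for _ in range(trials)
        ):
            stable += 1
    return stable / len(rows)
```

Rows far from a decision boundary should remain stable, while rows close to it will flip. A low score on realistic noise levels suggests the model may behave unpredictably on inputs that differ slightly from its training conditions.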

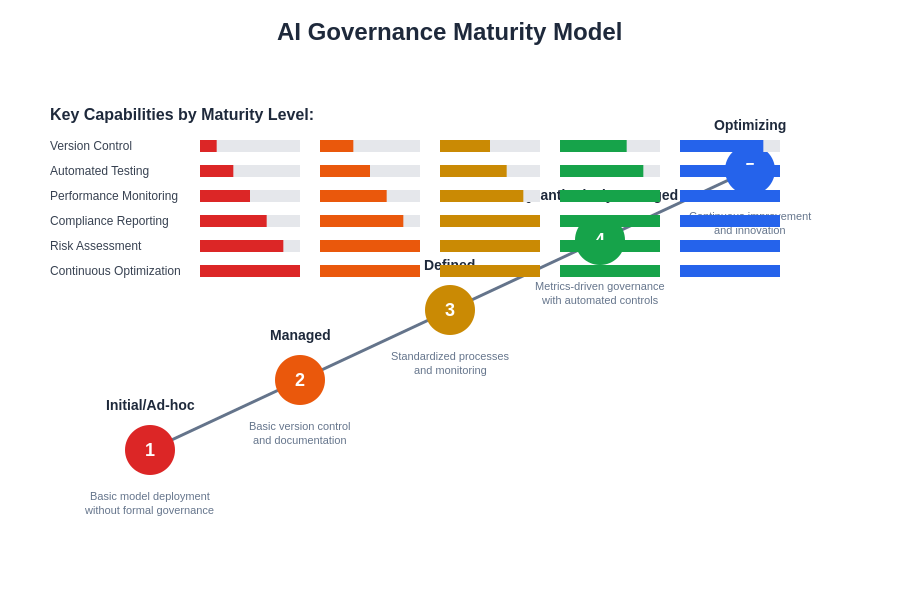

Organizations typically progress through distinct maturity levels in their AI governance capabilities, evolving from ad-hoc practices to sophisticated, automated governance frameworks that provide comprehensive oversight and control over AI systems. This maturity progression enables organizations to gradually build governance capabilities while maintaining operational efficiency.

Integration with DevOps and MLOps Practices

The successful implementation of AI governance requires seamless integration with existing DevOps practices and emerging MLOps methodologies that support the unique requirements of machine learning workflows. This integration ensures that governance controls are embedded throughout the development and deployment pipeline without creating friction or impediments to innovation and rapid iteration.

MLOps platforms provide the foundation for implementing governance controls by offering standardized interfaces, automated workflows, and comprehensive monitoring capabilities that support the entire machine learning lifecycle. These platforms must be configured and customized to enforce organizational governance policies while providing the flexibility needed for diverse AI use cases and team structures.

Continuous integration and continuous deployment practices for machine learning require adaptation to accommodate the unique characteristics of AI systems, including data validation, model testing, performance benchmarking, and gradual rollout strategies. Governance frameworks must be designed to work seamlessly with these CI/CD processes while providing appropriate checkpoints and approval workflows.
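A governance checkpoint in a CI/CD pipeline can be as simple as a function that compares candidate metrics against a policy and blocks the release when any requirement fails. The policy format below, which maps a metric name to a `("min" | "max", limit)` pair, is a hypothetical convention invented for this sketch.

```python
def evaluate_gate(metrics: dict, policy: dict):
    """Return (passed, failures) for a candidate model against a policy."""
    failures = []
    for name, (kind, limit) in policy.items():
        value = metrics.get(name)
        if value is None:
            # A missing metric fails the gate rather than passing silently.
            failures.append(f"{name}: missing")
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} is below minimum {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} is above maximum {limit}")
    return (not failures, failures)
```

A CI job could call this after evaluation and exit with a nonzero status when `passed` is false, attaching the failure list to the approval request so reviewers can see exactly which requirement was missed.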

The automation of governance processes through MLOps platforms reduces the manual effort required for compliance and oversight while improving consistency and reliability of governance controls. This automation enables organizations to scale their AI initiatives while maintaining appropriate levels of oversight and control over their AI systems.

Future Trends in AI Governance

The field of AI governance continues to evolve rapidly as organizations gain experience with large-scale AI deployments and regulatory frameworks become more sophisticated and comprehensive. Emerging trends in AI governance include increased automation of compliance processes, integration of ethical considerations into technical systems, and development of industry-specific governance standards and best practices.

Automated governance systems are becoming increasingly sophisticated, leveraging AI technologies themselves to monitor, analyze, and optimize AI governance processes. These systems can provide real-time insights into model performance, compliance status, and potential risks while reducing the manual effort required for oversight and reporting activities.

The integration of ethical considerations into technical governance frameworks represents a growing area of focus as organizations seek to embed their values and principles directly into their AI systems. This integration requires sophisticated frameworks that can translate high-level ethical principles into concrete technical requirements and monitoring capabilities.

Industry-specific governance standards are emerging as different sectors develop specialized requirements and best practices tailored to their unique regulatory environments, risk profiles, and operational constraints. These standards provide valuable guidance for organizations while enabling the development of specialized tools and platforms that support sector-specific governance needs.

The continued evolution of AI governance will likely be driven by advances in AI technology itself, changing regulatory requirements, and growing organizational experience with large-scale AI deployments. Organizations that invest in robust governance capabilities today will be better positioned to adapt to future changes while maintaining competitive advantage and regulatory compliance.

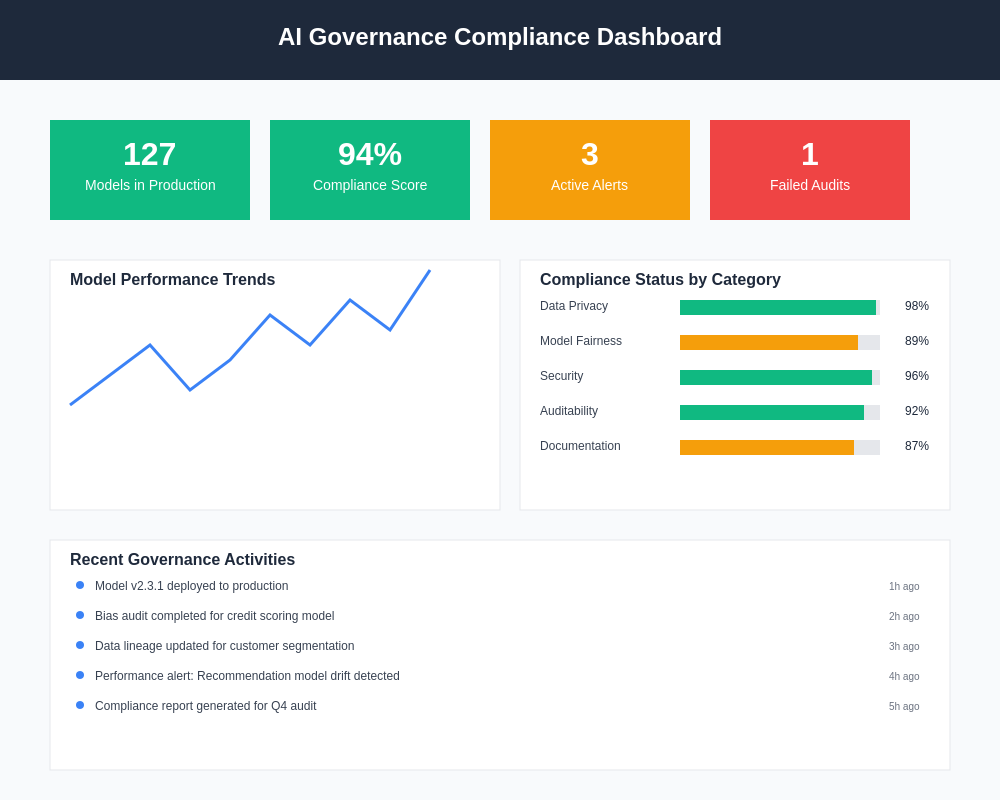

Modern AI governance platforms provide comprehensive dashboards and reporting capabilities that enable organizations to monitor compliance status, track key performance indicators, and generate detailed reports for internal stakeholders and regulatory authorities. These interfaces must balance comprehensive functionality with usability to support diverse user needs and organizational requirements.

Disclaimer

This article is for informational purposes only and does not constitute legal, regulatory, or professional advice. The views expressed are based on current understanding of AI governance best practices and regulatory requirements, which are subject to change. Organizations should consult with qualified legal and technical experts when implementing AI governance frameworks and ensure compliance with applicable laws and regulations in their jurisdictions. The effectiveness of governance approaches may vary depending on specific organizational contexts, use cases, and regulatory environments.