The rapid advancement of artificial intelligence and machine learning technologies has created unprecedented value in trained models, making them attractive targets for intellectual property theft and unauthorized replication. As organizations invest millions of dollars and countless hours developing sophisticated AI systems, the protection of these digital assets has become a critical concern that extends far beyond traditional cybersecurity measures into the specialized realm of model security and intellectual property safeguarding.

The sophistication of model stealing attacks continues to evolve, requiring organizations to implement comprehensive defense strategies that address both technical vulnerabilities and operational security considerations.

Understanding the Landscape of AI Model Theft

Model stealing, also known as model extraction, is a form of intellectual property theft in which adversaries attempt to replicate the functionality or architecture of a proprietary machine learning model without authorization. The related technique of model inversion targets the training data instead, reconstructing sensitive inputs from a model's outputs. Both attacks pose significant risks to organizations that have invested substantial resources in developing competitive AI capabilities, as successful model theft can eliminate competitive advantages, expose proprietary methodologies, and result in substantial financial losses.

The motivations behind model stealing attacks vary considerably, ranging from commercial espionage aimed at replicating successful business models to academic curiosity about cutting-edge research implementations. State-sponsored actors may target models with national security implications, while cybercriminals might seek to monetize stolen models through unauthorized services or by selling extracted intellectual property on underground markets. Understanding these diverse threat actors and their capabilities is essential for developing appropriate defensive strategies.

The technical sophistication of model stealing attacks has increased dramatically as attackers develop more refined techniques for extracting model information through various channels. These attacks can target models during training, inference, or storage phases, exploiting different vulnerabilities at each stage of the machine learning lifecycle. The growing availability of model stealing tools and techniques has lowered the barrier to entry for potential attackers, making this threat accessible to a broader range of adversaries.

Common Attack Vectors and Methodologies

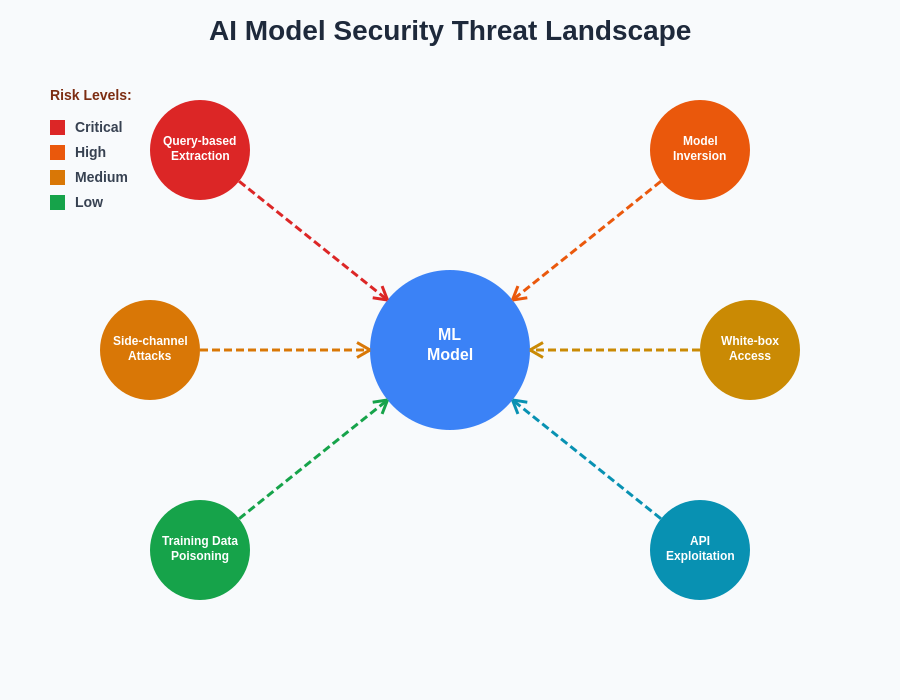

Model extraction attacks typically fall into several distinct categories, each exploiting different aspects of machine learning systems and requiring specific defensive countermeasures. Query-based attacks represent one of the most common approaches, where adversaries systematically probe publicly accessible models through APIs or web interfaces to gather input-output pairs that can be used to train surrogate models with similar functionality.

Black-box extraction techniques focus on replicating model behavior without requiring direct access to model parameters or architecture details. Attackers construct carefully crafted queries designed to maximize information extraction from target models, often employing active learning strategies to optimize the efficiency of their data collection efforts. These attacks can be particularly challenging to detect because they may appear as legitimate usage patterns, especially when conducted gradually over extended periods.
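To make the idea of "carefully crafted queries" concrete for defenders, the following toy sketch shows how few label-only queries can recover a decision boundary. It assumes a deliberately simple one-dimensional threshold classifier; `make_black_box` and `extract_threshold` are hypothetical names invented for this illustration, and real models are far more complex.

```python
def make_black_box(secret_threshold: float):
    """Return a label-only prediction function plus a query counter.

    The threshold is hidden from the caller, mimicking an API that
    exposes predictions but not parameters (toy assumption).
    """
    query_count = 0

    def predict(x: float) -> int:
        nonlocal query_count
        query_count += 1
        return 1 if x >= secret_threshold else 0

    def queries_used() -> int:
        return query_count

    return predict, queries_used


def extract_threshold(predict, low: float = 0.0, high: float = 1.0,
                      tolerance: float = 1e-3) -> float:
    """Binary-search the decision boundary using predicted labels only.

    Assumes predict(low) == 0 and predict(high) == 1. Each query halves
    the interval that must contain the hidden threshold.
    """
    while high - low > tolerance:
        mid = (low + high) / 2
        if predict(mid) == 1:
            high = mid
        else:
            low = mid
    return (low + high) / 2


predict, queries_used = make_black_box(secret_threshold=0.3712)
estimate = extract_threshold(predict)
print(f"estimate={estimate:.4f} after {queries_used()} queries")
```

In this toy setting roughly ten queries pin the boundary down to three decimal places, which is why per-client query volume alone can be a weak signal: an efficient attacker needs far fewer queries than a naive one.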

The complexity of modern model stealing techniques requires advanced analytical capabilities to identify subtle patterns that indicate malicious activity.

White-box attacks occur when adversaries gain unauthorized access to model parameters, weights, or architectural specifications through system compromises, insider threats, or inadequate access controls. These attacks can enable complete model replication and may also expose sensitive training data or proprietary algorithmic innovations. The impact of white-box attacks is typically more severe than black-box attempts because they provide adversaries with comprehensive information about target systems.

Side-channel attacks exploit indirect information leakage from machine learning systems, such as timing variations, power consumption patterns, or memory access behaviors that can reveal details about model architecture or processing characteristics. These sophisticated attacks require specialized knowledge and equipment but can be highly effective against systems that appear secure through conventional security measures.

Technical Vulnerabilities in ML Systems

Machine learning systems introduce unique security vulnerabilities that differ significantly from traditional software applications, creating attack surfaces that require specialized understanding and protection strategies. The distributed nature of ML workflows, involving data collection, preprocessing, training, validation, and deployment across multiple systems and environments, creates numerous potential entry points for attackers seeking to compromise models or extract intellectual property.

Training data vulnerabilities represent a significant concern because models can inadvertently memorize sensitive information from their training datasets, making this information recoverable through carefully constructed queries or model inversion attacks. Privacy-preserving techniques such as differential privacy can mitigate some of these risks but may impact model performance and require careful implementation to be effective.
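The core idea behind differential privacy, noise calibrated to how much one record can change a result, can be sketched with the Laplace mechanism on a simple count. This is a minimal illustration only: it protects a released statistic, not a trained model (private training typically uses gradient-level methods), and `private_count` is a hypothetical helper name.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(values, predicate, epsilon: float,
                  rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy.

    A counting query has sensitivity 1: adding or removing one record
    changes the true count by at most 1, so the noise scale is 1/epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)  # fixed seed only so the demo is repeatable
ages = [23, 35, 41, 52, 67]
print(private_count(ages, lambda a: a > 40, epsilon=1.0, rng=rng))
```

A smaller `epsilon` gives stronger privacy but noisier answers, which is the performance trade-off the paragraph above refers to.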

Model serving infrastructures often expose additional attack surfaces through APIs, web interfaces, or edge deployment scenarios where models operate in environments with limited security controls. The need to balance model accessibility for legitimate users while preventing unauthorized access creates ongoing security challenges that require sophisticated monitoring and access control mechanisms.

The evolving threat landscape for AI model security encompasses multiple attack vectors, from direct model extraction to sophisticated side-channel attacks that exploit implementation details. Understanding these diverse threats is essential for implementing comprehensive protection strategies that address both technical and operational security requirements.

Detection and Monitoring Strategies

Effective detection of model stealing attempts requires comprehensive monitoring strategies that can identify suspicious patterns in model usage, query behavior, and system access logs. Traditional network security monitoring tools may not be sufficient for detecting sophisticated model extraction attacks that mimic legitimate usage patterns or exploit legitimate access channels to gather information over extended periods.

Behavioral analysis techniques can identify anomalous query patterns that may indicate extraction attempts, such as systematic exploration of input spaces, unusual query frequencies, or requests that appear designed to probe model boundaries rather than solve legitimate problems. Machine learning techniques can be employed to develop detection systems that learn normal usage patterns and flag deviations that might indicate malicious activity.
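One of the simplest behavioral signals, unusual query frequency, can be sketched as a per-client sliding-window counter. The class name, window length, and threshold below are assumptions chosen for illustration; a production system would tune them against observed baseline traffic.

```python
from collections import defaultdict, deque


class QueryRateMonitor:
    """Flag clients whose query count in a sliding time window is too high."""

    def __init__(self, window_seconds: float = 60.0, max_queries: int = 100):
        self.window_seconds = window_seconds
        self.max_queries = max_queries
        self._events = defaultdict(deque)  # client_id -> recent timestamps

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one query; return True if the client exceeds the limit."""
        events = self._events[client_id]
        events.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        cutoff = timestamp - self.window_seconds
        while events and events[0] <= cutoff:
            events.popleft()
        return len(events) > self.max_queries


monitor = QueryRateMonitor(window_seconds=10.0, max_queries=3)
for t in [0.0, 1.0, 2.0, 3.0]:
    flagged = monitor.record("client-a", t)
print("flagged:", flagged)
```

Rate alone will miss slow, patient extraction, so a check like this is best treated as one input among several rather than a complete detector.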

Statistical analysis of query distributions and response patterns can reveal signs of active learning strategies commonly employed in model extraction attacks. Attackers often use sophisticated sampling techniques to maximize information extraction efficiency, creating detectable signatures in query patterns that differ from typical user behavior.
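One such signature can be sketched under a specific assumption: that the attacker uses uncertainty sampling, an active learning strategy that favors inputs near the decision boundary. In that case an unusually large share of a client's responses will show a small gap between the top two class probabilities. The function names, the 0.1 margin, and the 3x ratio are illustrative choices, not established thresholds.

```python
def top2_margin(probs) -> float:
    """Gap between the two highest class probabilities in one response."""
    if len(probs) < 2:
        raise ValueError("need at least two class probabilities")
    first, second = sorted(probs, reverse=True)[:2]
    return first - second


def low_margin_fraction(responses, margin_threshold: float = 0.1) -> float:
    """Share of responses that landed close to a decision boundary."""
    if not responses:
        return 0.0
    low = sum(1 for p in responses if top2_margin(p) < margin_threshold)
    return low / len(responses)


def looks_like_uncertainty_sampling(client_responses, baseline_fraction: float,
                                    ratio: float = 3.0,
                                    min_queries: int = 50) -> bool:
    """Flag a client whose low-margin share far exceeds the baseline.

    baseline_fraction is the low-margin share measured on typical
    traffic; small samples are never flagged to limit false alarms.
    """
    if len(client_responses) < min_queries:
        return False
    return low_margin_fraction(client_responses) > ratio * baseline_fraction


history = [[0.5, 0.5], [0.9, 0.1], [0.52, 0.48], [0.2, 0.8]]
print(low_margin_fraction(history))
```

The comparison against a measured baseline matters because some legitimate workloads naturally contain ambiguous inputs.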

Real-time monitoring systems should integrate multiple data sources, including API access logs, query content analysis, user behavior profiling, and system resource utilization patterns, to provide comprehensive visibility into potential model theft attempts. The correlation of information across these diverse data sources can reveal attack patterns that might not be apparent when examining individual data streams in isolation.

The rapid evolution of both attack and defense techniques requires continuous learning and adaptation of security strategies.

Legal and Regulatory Frameworks

The legal landscape surrounding AI model intellectual property protection continues to evolve as lawmakers and regulators grapple with the unique challenges posed by machine learning technologies. Traditional intellectual property laws, including patents, copyrights, and trade secrets, provide some protection for AI models but may not fully address the specific characteristics of machine learning systems and the novel forms of theft they enable.

Patent protection for AI models faces significant challenges due to the abstract nature of many machine learning algorithms and the requirement for non-obviousness in rapidly advancing fields. While some aspects of model architecture or training techniques may be patentable, the practical enforcement of AI-related patents can be complex and costly, particularly when dealing with international adversaries or sophisticated copying techniques.

Copyright protection may apply to specific model implementations, training code, or documentation, but the extent of protection for learned model weights and parameters remains legally ambiguous in many jurisdictions. The transformative nature of model training processes can complicate copyright claims, particularly when models are trained on publicly available data or when similar models can be independently developed through different approaches.

Trade secret protection often provides the most practical legal framework for protecting AI models, as it can cover proprietary training data, architectural innovations, and operational methodologies that provide competitive advantages. However, trade secret protection requires organizations to take reasonable measures to maintain secrecy, which makes sound security practice not just good business but a legal necessity for IP protection.

International considerations become particularly important as model stealing attacks often cross national boundaries, involving attackers, victims, and infrastructure in multiple jurisdictions with different legal frameworks and enforcement capabilities. Organizations must consider the international dimensions of AI model protection when developing security strategies and legal compliance programs.

Technical Protection Mechanisms

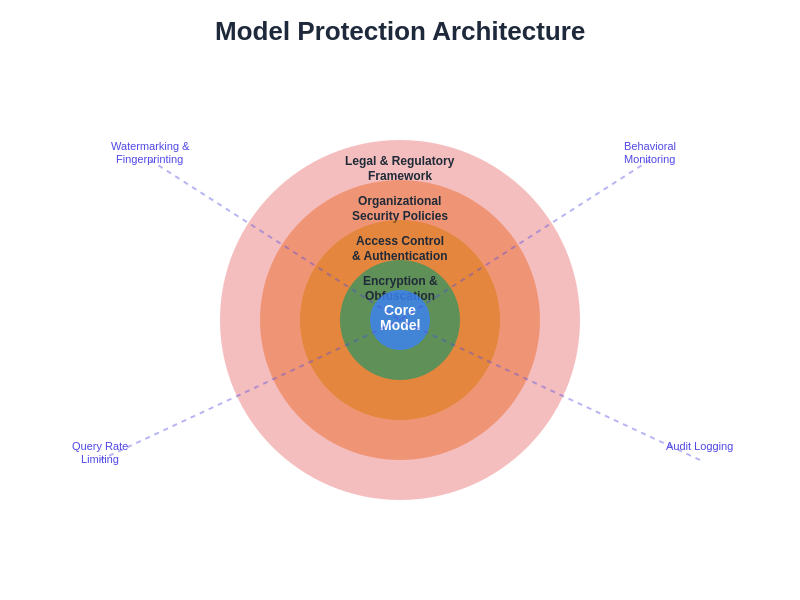

Implementing robust technical protection for AI models requires a multi-layered approach that addresses security concerns throughout the entire machine learning lifecycle, from initial data collection through final model deployment and ongoing operation. These protection mechanisms must balance security requirements with operational needs, ensuring that defensive measures do not unduly impact model performance or legitimate usage.

Access control systems for machine learning environments should implement fine-grained permissions that limit user access to only the specific model components and operations required for their roles. This includes separate access controls for training data, model parameters, inference capabilities, and administrative functions, with regular auditing of access patterns and permission assignments.
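The separation of permissions described above can be sketched as a small role-to-permission map with an audit trail. The role names and permission set are hypothetical examples; a real deployment would normally rely on its platform's identity and access management system rather than an in-process table.

```python
from enum import Enum, auto


class Permission(Enum):
    READ_TRAINING_DATA = auto()
    READ_WEIGHTS = auto()
    RUN_INFERENCE = auto()
    ADMINISTER = auto()


# Hypothetical roles: each gets only what its job function requires.
ROLE_PERMISSIONS = {
    "data_engineer": frozenset({Permission.READ_TRAINING_DATA}),
    "ml_engineer": frozenset({Permission.READ_TRAINING_DATA,
                              Permission.READ_WEIGHTS,
                              Permission.RUN_INFERENCE}),
    "application": frozenset({Permission.RUN_INFERENCE}),
    "platform_admin": frozenset({Permission.ADMINISTER}),
}

audit_log = []  # (role, permission name, allowed) tuples for later review


def is_allowed(role: str, permission: Permission) -> bool:
    """Check a permission, deny unknown roles, and record the decision."""
    allowed = permission in ROLE_PERMISSIONS.get(role, frozenset())
    audit_log.append((role, permission.name, allowed))
    return allowed


print(is_allowed("application", Permission.RUN_INFERENCE))
print(is_allowed("application", Permission.READ_WEIGHTS))
```

Logging denied requests as well as granted ones supports the regular auditing of access patterns that the paragraph recommends.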

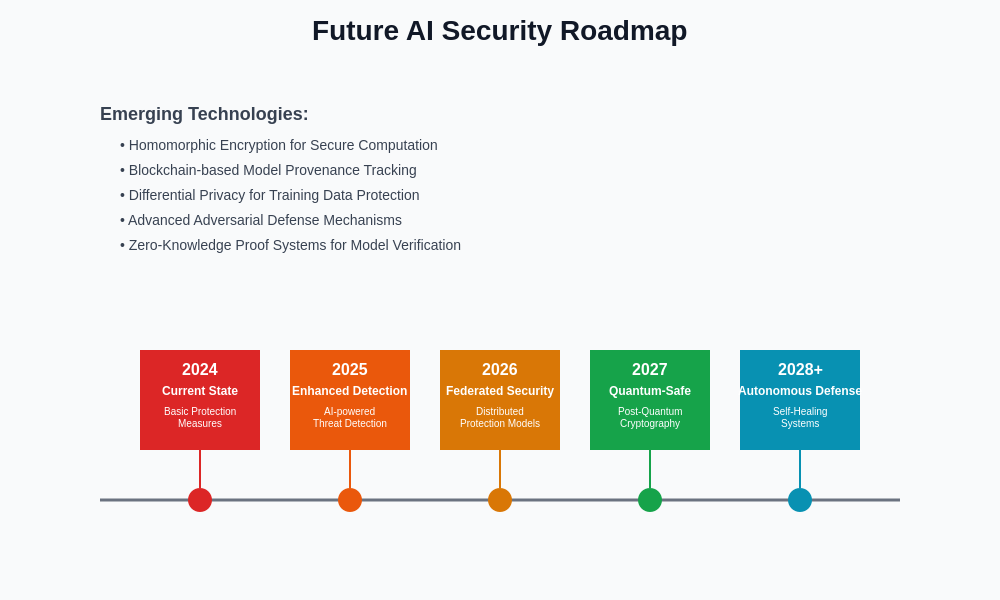

Encryption technologies play a crucial role in protecting models at rest and in transit, but the unique requirements of machine learning systems may call for specialized approaches. Homomorphic encryption enables computation on encrypted data, allowing secure model inference without exposing model parameters or input data to potentially compromised systems, although its computational overhead currently limits the workloads for which it is practical.

Model obfuscation techniques can make it more difficult for attackers to extract useful information from stolen model parameters or reverse-engineer model architectures. These approaches may include parameter perturbation, architectural complexity introduction, or encoding schemes that preserve functionality while making unauthorized analysis more challenging.

Watermarking and fingerprinting technologies enable model owners to embed identifying information within their models that can prove ownership and detect unauthorized usage. These techniques must be robust against various forms of model modification while remaining imperceptible during normal operation and not significantly impacting model performance.
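One common family of watermarking schemes relies on a secret trigger set: inputs paired with deliberately unusual labels that the owner trains into the model. Assuming that approach, ownership checking reduces to measuring how often a suspect model reproduces those labels. The function names and the 0.9 threshold below are illustrative assumptions.

```python
def watermark_match_rate(model_predict, trigger_set) -> float:
    """Fraction of secret trigger inputs for which the model returns
    the owner's secret label."""
    if not trigger_set:
        raise ValueError("trigger set must not be empty")
    matches = sum(1 for x, secret_label in trigger_set
                  if model_predict(x) == secret_label)
    return matches / len(trigger_set)


def carries_watermark(model_predict, trigger_set,
                      threshold: float = 0.9) -> bool:
    """Treat a high match rate as evidence of a copied model.

    An independently trained model should agree with arbitrary secret
    labels only by chance, so its match rate should be low.
    """
    return watermark_match_rate(model_predict, trigger_set) >= threshold


# Toy stand-ins for real models: lookup tables over trigger inputs.
triggers = [("t1", "cat"), ("t2", "dog"), ("t3", "cat"), ("t4", "bird")]
stolen = dict(triggers).get
unrelated = {"t1": "dog", "t2": "dog", "t3": "bird", "t4": "cat"}.get

print(carries_watermark(stolen, triggers))
print(carries_watermark(unrelated, triggers))
```

Using a threshold below 1.0 leaves room for the fine-tuning or pruning an adversary might apply, which is the robustness requirement noted above.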

A comprehensive model protection architecture implements multiple defense layers, from access controls and encryption to behavioral monitoring and legal protections. This layered approach ensures that even if individual security measures are bypassed, additional protections remain in place to safeguard intellectual property.

Organizational Security Practices

Effective protection of AI model intellectual property requires comprehensive organizational security practices that extend beyond technical measures to encompass personnel, processes, and operational procedures. These practices must be integrated into the broader organizational security culture while addressing the specific risks and requirements associated with machine learning systems.

Personnel security measures should include thorough background checks for individuals with access to sensitive AI models, ongoing security awareness training focused on model protection risks, and clear policies regarding the handling of proprietary machine learning assets. Organizations should implement role-based access controls that limit individual access to only the minimum required for job functions and regularly review these permissions.

Secure development lifecycle practices for machine learning projects should incorporate security considerations from initial project conception through final deployment and ongoing maintenance. This includes secure coding practices for ML systems, regular security assessments of model development environments, and comprehensive testing of security controls before production deployment.

Third-party risk management becomes particularly important in AI development contexts where organizations often rely on external data sources, cloud computing resources, model training services, or collaborative development partnerships. Each of these relationships introduces potential security risks that must be carefully evaluated and managed through appropriate contractual protections and technical safeguards.

Incident response planning for model theft attempts requires specialized procedures that account for the unique characteristics of AI security incidents. This includes rapid containment procedures for compromised models, forensic analysis techniques for determining the scope of potential data or model exposure, and legal notification requirements for intellectual property theft incidents.

Industry-Specific Considerations

Different industries face varying levels of risk and regulatory requirements when protecting AI model intellectual property, necessitating tailored security approaches that address sector-specific threats and compliance obligations. Financial services organizations, for example, may face regulatory requirements for model governance and risk management that extend to security considerations.

Healthcare organizations must navigate complex privacy regulations such as HIPAA while protecting AI models that may contain sensitive patient information or represent significant competitive advantages in diagnosis or treatment optimization. The intersection of patient privacy protection and intellectual property security creates unique compliance challenges that require specialized expertise.

Technology companies developing AI products for commercial markets face intense competitive pressures that make model protection critical for business success, while also dealing with the challenges of securing models deployed across diverse customer environments with varying security capabilities.

Government and defense applications of AI require the highest levels of security due to national security implications and the sophistication of potential adversaries. These environments often require specialized security clearances, air-gapped development environments, and comprehensive supply chain security measures.

Research institutions and universities must balance intellectual property protection with academic freedom and collaboration traditions, often requiring nuanced approaches that protect valuable innovations while maintaining openness for legitimate research collaboration.

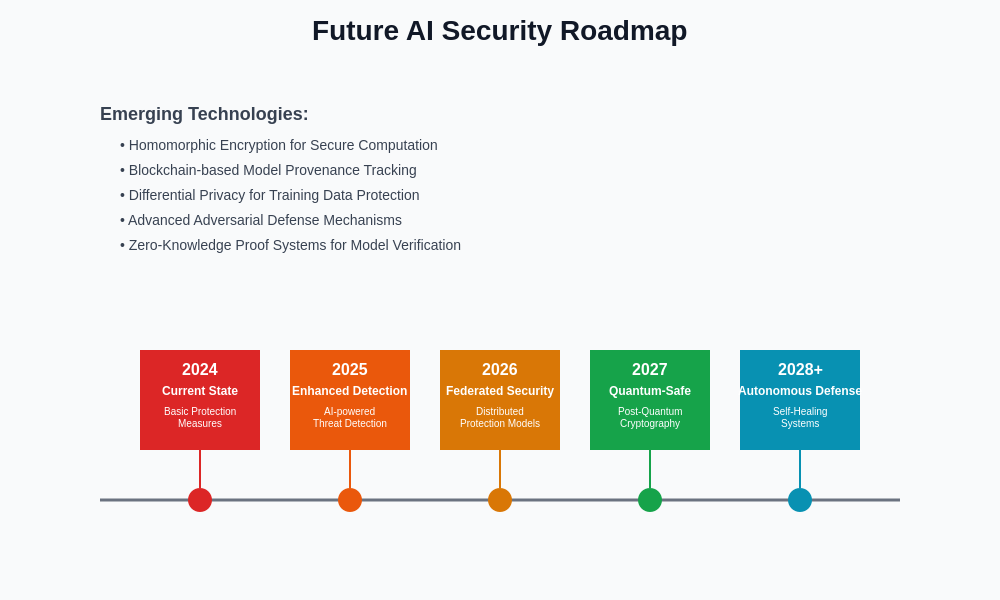

Emerging Technologies and Future Directions

The landscape of AI model protection continues to evolve rapidly as new technologies emerge that can enhance security while also creating new vulnerabilities that attackers may exploit. Federated learning approaches can reduce exposure risks by distributing model training across multiple parties without centralizing sensitive data, but they also introduce new attack vectors, such as leakage of training data through shared gradient updates and poisoning by malicious participants, that require specialized defensive strategies.

Blockchain technologies offer potential solutions for model provenance tracking, licensing management, and secure multi-party computation scenarios, but implementation challenges and scalability limitations must be carefully considered. Smart contract approaches to model licensing and usage tracking could provide automated enforcement mechanisms for intellectual property protection.

Quantum computing developments may eventually impact both AI model security and the cryptographic techniques used to protect models, requiring organizations to consider long-term security planning that accounts for potential quantum threats to current encryption methods.

Advanced adversarial machine learning techniques continue to evolve, creating new attack possibilities while also providing defensive capabilities for detecting and preventing model theft attempts. The ongoing arms race between attack and defense techniques requires continuous adaptation of security strategies.

The evolution of AI security technologies and practices follows a complex roadmap that balances emerging threats with advancing defensive capabilities. Organizations must plan for both current security requirements and future challenges as the AI landscape continues to evolve rapidly.

Best Practices and Implementation Guidelines

Successful implementation of AI model protection requires a systematic approach that prioritizes risks based on business impact and threat likelihood while maintaining operational efficiency and user experience. Organizations should begin with comprehensive risk assessments that identify their most valuable AI assets and the most likely attack vectors for their specific operational environment.
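A risk assessment of this kind is often reduced to a simple ranking of impact multiplied by likelihood. The sketch below assumes a 1-to-5 scale for both factors; the scale, the class name, and the example assets are hypothetical and would be replaced by an organization's own inventory and scoring method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRisk:
    asset: str
    attack_vector: str
    impact: int      # 1 (minor) to 5 (severe), assumed scale
    likelihood: int  # 1 (rare) to 5 (expected), assumed scale

    @property
    def score(self) -> int:
        """Simple impact-times-likelihood risk score."""
        return self.impact * self.likelihood


def prioritize(risks):
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


register = [
    ModelRisk("pricing model", "query-based extraction", 4, 4),
    ModelRisk("fraud model", "insider weight theft", 5, 2),
    ModelRisk("demo chatbot", "query-based extraction", 1, 5),
]
for risk in prioritize(register):
    print(risk.score, risk.asset, "-", risk.attack_vector)
```

Keeping the attack vector alongside each asset ties the ranking back to specific controls, so that the highest-scoring entries indicate where protection effort should go first.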

Security policies for AI model protection should be clearly documented, regularly updated, and consistently enforced across all departments and business units involved in machine learning activities. These policies must address technical requirements, operational procedures, and compliance obligations while providing clear guidance for personnel at all levels of the organization.

Regular security assessments and penetration testing specific to AI systems should be conducted to validate the effectiveness of protection measures and identify potential vulnerabilities before they can be exploited by malicious actors. These assessments should include both technical testing of system security and evaluation of operational procedures and personnel practices.

Continuous monitoring and improvement processes should be established to adapt security measures as threats evolve and new technologies become available. This includes regular review of security metrics, incident analysis to identify improvement opportunities, and ongoing investment in security technology and training.

Collaboration with industry peers, security researchers, and law enforcement agencies can provide valuable intelligence about emerging threats and effective defensive strategies. Participation in information sharing initiatives and industry security groups can enhance organizational security capabilities while contributing to broader community defense efforts.

The protection of AI model intellectual property represents a critical challenge that will only increase in importance as artificial intelligence becomes more central to competitive advantage across industries. Organizations that invest in comprehensive model protection strategies today will be better positioned to maintain their competitive advantages and avoid the significant costs associated with intellectual property theft. The combination of technical protection measures, organizational security practices, legal safeguards, and industry collaboration provides the foundation for effective AI model security in an increasingly complex threat environment.

Disclaimer

This article is provided for informational and educational purposes only and does not constitute legal, technical, or professional advice. The information presented reflects current understanding of AI model security challenges and should not be considered comprehensive or definitive guidance for any specific situation. Organizations should consult with qualified security professionals and legal counsel when developing AI model protection strategies. The effectiveness of security measures may vary depending on specific technical implementations, threat environments, and organizational contexts. Readers should conduct thorough risk assessments and testing before implementing any security measures discussed in this article.