The intersection of artificial intelligence and musical creativity has ushered in a revolutionary era where machines can compose symphonies, generate melodies, and create entirely new forms of musical expression. This technological renaissance has transformed the landscape of music production, enabling artists, producers, and even non-musicians to harness the power of generative models to create sophisticated audio content that pushes the boundaries of traditional musical composition.

The convergence of advanced machine learning algorithms with musical theory has created unprecedented opportunities for innovation in the creative industries, fundamentally altering how we conceptualize and produce musical content.

The Evolution of AI in Music Creation

The journey of artificial intelligence in music composition represents a fascinating evolution from simple algorithmic pattern generation to sophisticated neural networks capable of understanding and reproducing complex musical structures. Early computer-assisted composition systems relied heavily on rule-based algorithms and mathematical models that could generate basic melodic sequences and harmonic progressions. However, these primitive systems lacked the nuanced understanding of musical expression, emotional depth, and stylistic variations that characterize human-composed music.

The advent of deep learning and neural network architectures has fundamentally transformed the capabilities of AI music composition systems. Modern generative models can analyze vast databases of musical compositions, learning intricate patterns, stylistic characteristics, and compositional techniques from diverse musical traditions spanning classical symphonies to contemporary electronic dance music. This comprehensive understanding enables AI systems to generate original compositions that exhibit remarkable sophistication in terms of harmonic complexity, melodic development, and rhythmic innovation.

The progression from deterministic algorithms to probabilistic generative models has enabled AI systems to introduce controlled randomness and creative variability into the composition process. This development has been crucial in addressing one of the primary criticisms of early AI music systems, which often produced repetitive and predictable compositions lacking the spontaneity and emotional resonance associated with human creativity.
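One common way to expose this "controlled randomness" is a temperature parameter applied when sampling the next note. The sketch below is illustrative only: the note names, the scores, and the function names are assumptions standing in for what a trained model would output.

```python
import math
import random

def softmax_with_temperature(scores, temperature=1.0):
    """Turn raw note scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_note(notes, scores, temperature=1.0, rng=random):
    """Draw one note according to the temperature-adjusted distribution."""
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(notes, weights=probs, k=1)[0]

# Hypothetical scores a model might assign to candidate next notes.
NOTES = ["C4", "E4", "G4", "B4"]
SCORES = [2.0, 1.0, 0.5, 0.1]

rng = random.Random(0)
conservative = [sample_next_note(NOTES, SCORES, 0.1, rng) for _ in range(8)]
adventurous = [sample_next_note(NOTES, SCORES, 2.0, rng) for _ in range(8)]
print(conservative)
print(adventurous)
```

At a low temperature the highest-scoring note dominates, which tends toward the repetitive output described above; raising the temperature spreads probability across less likely notes.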

Understanding Generative Models in Audio Creation

Generative models represent the technological foundation upon which modern AI music composition systems are built. These sophisticated algorithms are designed to learn the underlying statistical distributions and patterns present in training data, subsequently using this learned knowledge to generate new content that maintains the essential characteristics of the original dataset while introducing novel variations and creative elements.

The most prominent generative architectures employed in AI music composition include Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Transformer-based models. Each of these approaches offers distinct advantages and capabilities for different aspects of music generation, from melodic creation to rhythmic pattern generation and harmonic progression development.

Generative Adversarial Networks have proven particularly effective for creating realistic audio waveforms and generating musical compositions that closely mimic the stylistic characteristics of specific genres or artists. The adversarial training process, involving a generator network that creates musical content and a discriminator network that evaluates the authenticity of generated compositions, results in increasingly sophisticated and convincing musical outputs through iterative refinement.
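The adversarial objective can be illustrated with the two loss functions alone. This is a minimal sketch, assuming the discriminator outputs a probability that a clip is real and that the generator uses the widely used non-saturating loss; the score values are invented for illustration and no network is trained here.

```python
import math

def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0."""
    real_term = sum(-math.log(p) for p in real_scores) / len(real_scores)
    fake_term = sum(-math.log(1.0 - p) for p in fake_scores) / len(fake_scores)
    return real_term + fake_term

def generator_loss(fake_scores):
    """Non-saturating generator loss: push D(fake) toward 1."""
    return sum(-math.log(p) for p in fake_scores) / len(fake_scores)

# Hypothetical discriminator outputs (probability that a clip is real).
early_real, early_fake = [0.9, 0.8], [0.1, 0.2]    # fakes are easy to spot
later_real, later_fake = [0.6, 0.55], [0.45, 0.5]  # fakes are harder to spot

print(discriminator_loss(early_real, early_fake), generator_loss(early_fake))
print(discriminator_loss(later_real, later_fake), generator_loss(later_fake))
```

As the generated clips become harder to tell apart from real ones, the generator's loss falls and the discriminator's loss rises, which is the tug-of-war that drives the iterative refinement.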

The integration of natural language processing with music generation has opened new possibilities for intuitive interaction with AI composition systems, allowing users to describe desired musical characteristics in everyday language.

Neural Network Architectures for Music Generation

The architectural design of neural networks used in music generation plays a crucial role in determining the quality, coherence, and creativity of the generated compositions. Recurrent Neural Networks (RNNs) and their more advanced variants, Long Short-Term Memory (LSTM) networks, have been extensively employed for sequential music generation tasks due to their ability to maintain temporal dependencies and capture long-term musical patterns.

The introduction of attention mechanisms and Transformer architectures has revolutionized the field of AI music composition by enabling models to capture complex relationships between different musical elements across extended temporal sequences. These architectures excel at understanding musical structure, harmonic progressions, and thematic development in ways that more closely approximate human musical cognition and composition strategies.
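The core operation behind these architectures is scaled dot-product attention, in which every position in a sequence weighs every other position. The sketch below implements it in plain Python for readability; the two-dimensional "note embeddings" are invented for illustration, and a real model would learn separate query, key, and value projections.

```python
import math

def softmax(row):
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights

# Hypothetical 2-dimensional embeddings for a four-note phrase; the first
# and last notes share a vector to mimic a returning motif.
phrase = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [1.0, 0.0]]
outputs, weights = scaled_dot_product_attention(phrase, phrase, phrase)
print([round(w, 3) for w in weights[3]])
```

The last note attends most strongly to itself and to the first note, which carries the same embedding. This is how attention can link a returning motif to its earlier statement regardless of the distance between them.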

Convolutional Neural Networks (CNNs) have found particular application in the analysis and generation of musical spectrograms and other audio features, enabling AI systems to work with representations derived from recorded audio rather than only with symbolic formats such as MIDI or notated scores. This capability has proven valuable for generating realistic instrumental timbres, vocal characteristics, and complex acoustic textures that would be difficult to achieve through purely symbolic approaches.
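A spectrogram is the time-frequency image that such convolutional models consume. The following is a minimal sketch that computes one with a short-time Fourier transform in pure Python; the sample rate, frame size, hop length, and test tone are arbitrary choices for illustration, and production code would normally use an FFT library.

```python
import cmath
import math

FRAME_SIZE = 64
HOP = 32
SAMPLE_RATE = 8000
TONE_HZ = 1000

def hann(n):
    """Hann window, used to taper each frame before the transform."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def magnitude_spectrogram(signal, frame_size=FRAME_SIZE, hop=HOP):
    """Return a list of frames, each holding DFT magnitudes for bins 0..frame_size/2."""
    window = hann(frame_size)
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = [signal[start + i] * window[i] for i in range(frame_size)]
        bins = []
        for k in range(frame_size // 2 + 1):
            total = sum(x * cmath.exp(-2j * math.pi * k * i / frame_size)
                        for i, x in enumerate(frame))
            bins.append(abs(total))
        frames.append(bins)
    return frames

# A pure test tone; real input would be recorded audio samples.
signal = [math.sin(2 * math.pi * TONE_HZ * t / SAMPLE_RATE) for t in range(512)]
spec = magnitude_spectrogram(signal)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / FRAME_SIZE
print(len(spec), len(spec[0]), peak_hz)
```

Each row of `spec` is one time step and each column one frequency band, so the result can be treated like a single-channel image.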

The integration of multiple neural network architectures within hierarchical generative systems has enabled the creation of AI composition platforms capable of simultaneously handling multiple aspects of musical creation, from high-level structural organization to detailed instrumental articulation and audio production techniques.

Deep Learning Approaches to Musical Creativity

Deep learning methodologies have brought new levels of sophistication to AI music composition systems. These approaches use multiple layers of neural processing to extract increasingly abstract features from musical training data, enabling the generation of compositions that listeners may perceive as creative and artistically convincing.

One of the most significant developments in deep learning for music composition has been the implementation of hierarchical generation models that can simultaneously operate at multiple temporal and structural scales. These systems can generate overarching musical forms while maintaining coherent local harmonic and melodic relationships, resulting in compositions that exhibit both immediate appeal and long-term structural integrity.
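The top-down idea can be shown with a toy generator that decides the form first, then the chords for each section, then the melody notes for each chord. Everything here is a hypothetical stand-in: the hand-written tables take the place of learned models, and the names `FORM`, `PROGRESSIONS`, and `CHORD_TONES` are illustrative.

```python
import random

# Hypothetical, hand-written tables standing in for learned models.
FORM = ["A", "A", "B", "A"]
PROGRESSIONS = {
    "A": ["C", "Am", "F", "G"],
    "B": ["F", "G", "Em", "Am"],
}
CHORD_TONES = {
    "C": ["C4", "E4", "G4"], "Am": ["A3", "C4", "E4"],
    "F": ["F3", "A3", "C4"], "G": ["G3", "B3", "D4"],
    "Em": ["E3", "G3", "B3"],
}

def generate_piece(notes_per_chord=4, seed=0):
    """Generate top-down: form -> chords per section -> melody notes per chord."""
    rng = random.Random(seed)
    section_cache = {}  # repeated sections reuse material for long-range structure
    piece = []
    for section in FORM:
        if section not in section_cache:
            bars = []
            for chord in PROGRESSIONS[section]:
                melody = [rng.choice(CHORD_TONES[chord]) for _ in range(notes_per_chord)]
                bars.append((chord, melody))
            section_cache[section] = bars
        piece.append((section, section_cache[section]))
    return piece

piece = generate_piece()
for section, bars in piece:
    print(section, bars[0])
```

Because the melody is always drawn from the current chord and repeated sections reuse the same material, the output stays locally consonant while following an overall AABA shape.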

The application of unsupervised learning techniques has enabled AI systems to discover novel musical patterns and stylistic combinations that may not be explicitly present in training data. This capability can yield hybrid styles that combine elements from disparate musical traditions in innovative and aesthetically compelling ways.

Transfer learning approaches have proven particularly valuable for adapting pre-trained music generation models to specific musical styles, instruments, or compositional requirements. This methodology enables rapid customization of AI composition systems for specialized applications while maintaining the sophisticated musical understanding developed through extensive training on large-scale musical datasets.

Real-Time Audio Synthesis and Processing

The integration of real-time audio synthesis capabilities with AI music composition systems has opened new possibilities for interactive musical creation and live performance applications. These systems can generate high-quality audio output in real-time, enabling musicians and performers to incorporate AI-generated musical elements into live performances and interactive installations.

Modern AI synthesis systems employ sophisticated neural audio generation techniques that can produce realistic instrumental sounds, vocal textures, and environmental audio effects directly from high-level musical instructions or symbolic representations. This capability reduces reliance on extensive sample libraries and enables the creation of entirely novel instrumental sounds and acoustic textures.

The development of differentiable audio synthesis techniques has enabled end-to-end training of AI music systems that can optimize both compositional and acoustic aspects of musical generation simultaneously. This integrated approach results in compositions that are not only musically coherent but also well suited to their intended acoustic realization and performance context.

Real-time parameter control and interactive modification capabilities have transformed AI music composition from a passive generation process into an active collaborative tool that can respond to user input, environmental conditions, and performance dynamics in sophisticated and musically meaningful ways.

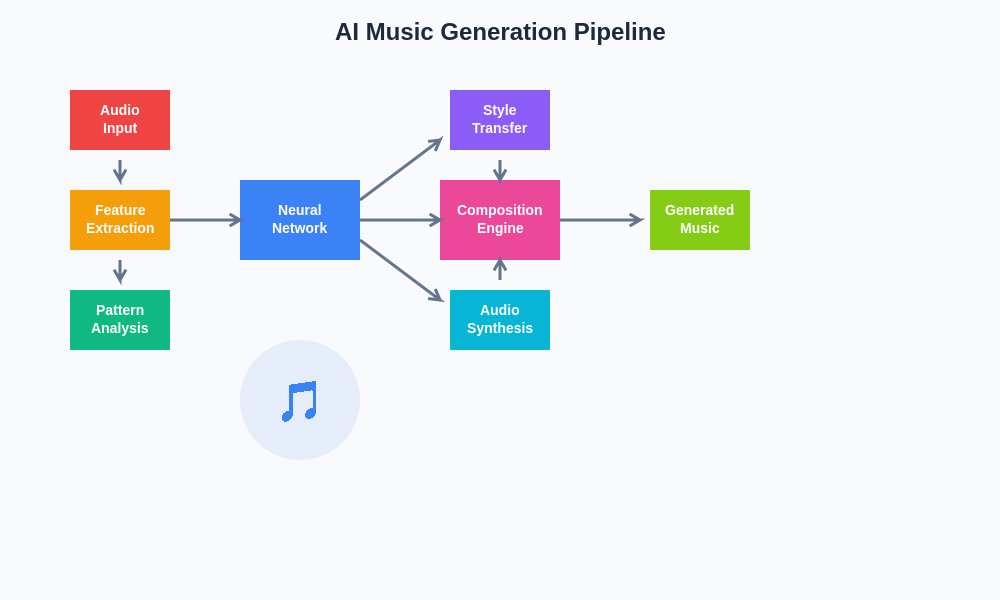

The modern AI music generation pipeline incorporates multiple stages of processing, from initial pattern recognition and style analysis through neural network composition to final audio synthesis and post-processing. This comprehensive approach ensures that generated musical content maintains both technical excellence and artistic coherence throughout the creation process.

Style Transfer and Musical Adaptation

Style transfer techniques have emerged as one of the most compelling applications of AI in music composition, enabling the transformation of existing musical compositions into different stylistic frameworks while preserving essential melodic and harmonic content. These systems can take a classical piano piece and reimagine it in the style of jazz, electronic music, or world music traditions, creating fascinating hybrid compositions that explore the boundaries between different musical genres.

The implementation of neural style transfer for music involves sophisticated feature extraction and reconstruction processes that can separate content-related musical elements from style-specific characteristics. This separation enables precise control over the transformation process and allows for the creation of compositions that seamlessly blend elements from multiple musical traditions.

Advanced style transfer systems can operate at multiple levels of musical abstraction, from surface-level instrumental and rhythmic modifications to deep structural and harmonic transformations. This multi-level approach enables the creation of style adaptations that maintain musical coherence while introducing substantial creative variations and stylistic innovations.

The application of style transfer techniques to collaborative composition workflows has enabled new forms of musical collaboration between human composers and AI systems, where initial musical ideas can be explored and developed through multiple stylistic interpretations and variations.

The rapid pace of innovation in this field requires continuous engagement with cutting-edge research and development efforts.

Interactive Composition and Human-AI Collaboration

The evolution of AI music composition systems toward interactive and collaborative platforms has transformed these tools from autonomous composition generators into sophisticated creative partners that can work alongside human musicians and composers. These systems enable real-time musical dialogue between human creativity and artificial intelligence, resulting in compositions that leverage the unique strengths of both human artistic insight and machine learning capabilities.

Interactive AI composition platforms provide intuitive interfaces that allow musicians to guide the generation process through various forms of input, including melodic sketches, rhythmic patterns, harmonic progressions, and high-level structural descriptions. The AI system interprets these inputs and generates complementary musical material that enhances and develops the human-provided musical ideas while maintaining stylistic coherence and artistic merit.

The implementation of reinforcement learning techniques in interactive composition systems has enabled AI to learn from user feedback and preferences, gradually adapting its compositional strategies to align with individual artistic visions and creative goals. This personalized approach to AI composition results in systems that become increasingly effective collaborative partners through continued interaction and mutual adaptation.
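The simplest form of learning from feedback is a multi-armed bandit, which is a deliberately reduced stand-in for the richer reinforcement learning setups described above. In this sketch the strategy names, the simulated user ratings, and the `PreferenceLearner` class are all hypothetical.

```python
import random

class PreferenceLearner:
    """Epsilon-greedy bandit over a few named compositional strategies."""

    def __init__(self, strategies, epsilon=0.1, seed=0):
        self.values = {s: 0.0 for s in strategies}  # running mean rating
        self.counts = {s: 0 for s in strategies}
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))  # explore
        return max(self.values, key=self.values.get)   # exploit

    def update(self, strategy, rating):
        """Fold one user rating into the running mean for that strategy."""
        self.counts[strategy] += 1
        n = self.counts[strategy]
        self.values[strategy] += (rating - self.values[strategy]) / n

# Hypothetical user who rates sparse arrangements highest on a 0-1 scale.
SIMULATED_TASTE = {"dense_harmony": 0.3, "sparse_arrangement": 0.9, "syncopated": 0.5}

learner = PreferenceLearner(SIMULATED_TASTE, epsilon=0.2)
for strategy in SIMULATED_TASTE:        # try every strategy once first
    learner.update(strategy, SIMULATED_TASTE[strategy])
for _ in range(50):                     # then mostly exploit, sometimes explore
    pick = learner.choose()
    learner.update(pick, SIMULATED_TASTE[pick])

favourite = max(learner.values, key=learner.values.get)
print(favourite, learner.counts)
```

Over repeated rounds the learner offers the highest-rated strategy most often while still occasionally trying the others, mirroring the gradual adaptation to an individual user's preferences.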

Multi-modal interaction capabilities have expanded the ways in which humans can communicate with AI composition systems, incorporating gesture recognition, voice commands, and even biometric feedback to create more intuitive and expressive collaborative interfaces that respond to the natural creative processes of human musicians.

Challenges and Limitations in AI Music Generation

Despite the remarkable advances in AI music composition technology, several significant challenges and limitations continue to constrain the full realization of artificial musical creativity. One of the primary challenges involves the difficulty of capturing and reproducing the subtle emotional nuances and cultural contexts that inform human musical expression. While AI systems can generate technically proficient compositions, they often struggle to imbue their creations with the deep emotional resonance and cultural significance that characterize the most meaningful musical works.

The issue of musical coherence over extended temporal scales remains a persistent challenge for AI composition systems. While modern neural networks excel at maintaining local musical relationships and short-term structural coherence, generating compositions that exhibit satisfying long-term development and large-scale formal organization continues to require careful architectural design and training methodologies.

Copyright and intellectual property considerations present complex legal and ethical challenges for AI music composition, particularly when training datasets include copyrighted musical works or when generated compositions closely resemble existing musical pieces. These issues require careful consideration of fair use principles and the development of new legal frameworks that can accommodate the unique characteristics of AI-generated creative content.

The computational requirements for training and operating sophisticated AI music composition systems remain substantial, potentially limiting access to these technologies and creating barriers to widespread adoption among individual musicians and smaller creative organizations.

Commercial Applications and Industry Impact

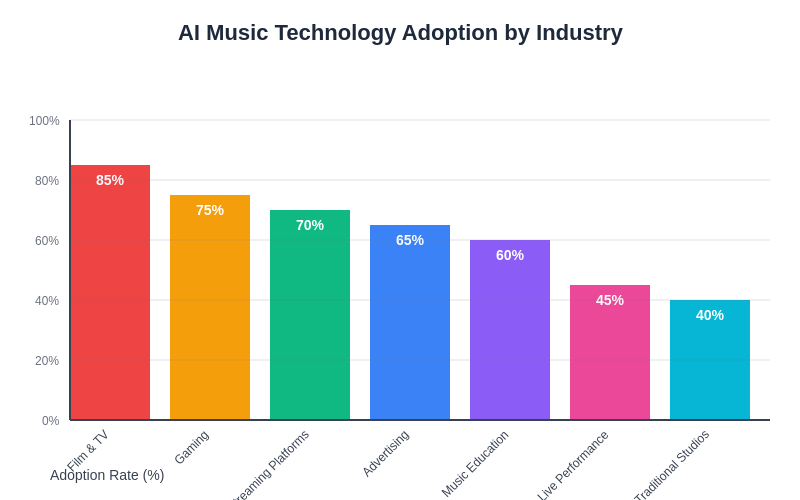

The commercial applications of AI music composition technology have expanded rapidly across multiple sectors of the entertainment and media industries. Film and television production companies increasingly rely on AI-generated musical scores and soundtracks to reduce production costs while maintaining high-quality audio content. These systems can generate appropriate musical accompaniment for specific scenes, moods, and narrative requirements while adapting to precise timing and synchronization constraints.

The gaming industry has embraced AI music composition for creating dynamic and responsive soundtracks that can adapt to player actions, game states, and environmental conditions in real-time. This adaptive audio approach enhances player immersion and creates more engaging interactive experiences that respond musically to the unfolding narrative and gameplay dynamics.

Streaming platforms and content creators have adopted AI music generation tools for creating background music, podcast intros, and promotional content that can be quickly customized for specific applications without the licensing complexities and costs associated with traditional music libraries.

The advertising and marketing industries have found AI music composition particularly valuable for creating brand-specific musical identities and campaign soundtracks that can be rapidly generated and modified to suit different demographic targets and marketing objectives.

The adoption of AI music technologies across different industry sectors demonstrates the broad applicability and commercial viability of these innovative tools. From entertainment production to educational applications, AI-generated music is finding increasingly sophisticated applications that leverage the unique capabilities of machine learning systems.

Educational Applications and Music Learning

AI music composition systems have found significant applications in music education, providing innovative tools for teaching musical theory, composition techniques, and creative expression. These systems can generate musical examples that illustrate specific theoretical concepts, create practice exercises tailored to individual student needs, and provide immediate feedback on compositional attempts and musical analysis exercises.

The ability of AI systems to generate compositions in specific styles and historical periods has proven valuable for music history education, enabling students to explore different musical traditions through interactive generation and analysis of representative compositions. This hands-on approach to musical style study enhances understanding and retention of complex stylistic characteristics and historical development patterns.

Adaptive learning systems that incorporate AI music generation can provide personalized instruction paths that adjust to individual student progress and learning preferences. These systems can generate increasingly challenging compositional exercises and provide scaffolded support for developing composers as they master fundamental techniques and explore more advanced creative strategies.

The integration of AI composition tools into collaborative learning environments has enabled new forms of musical collaboration and peer learning, where students can work together with AI systems to explore musical ideas and develop compositional skills through guided experimentation and creative exploration.

Future Directions and Technological Horizons

The future development of AI music composition technology promises even more sophisticated and creative capabilities as research advances in neural architecture design, training methodologies, and human-computer interaction paradigms. Emerging techniques in few-shot learning and meta-learning may enable AI systems to rapidly adapt to new musical styles and compositional requirements with minimal training data, making these tools more accessible and versatile for diverse musical applications.

The integration of multimodal AI systems that can simultaneously process audio, visual, and textual information opens possibilities for AI composition systems that can create musical content based on visual inputs, narrative descriptions, or emotional specifications. These capabilities would enable more intuitive and expressive interfaces for musical creation and collaboration.

Advances in quantum computing may eventually provide the computational resources necessary for training even more sophisticated neural architectures capable of capturing the full complexity of musical expression and creativity. These developments could lead to AI systems that demonstrate genuine artistic insight and creative innovation comparable to human musical genius.

The continued development of more sophisticated evaluation metrics and quality assessment techniques will enable better optimization of AI music composition systems and more objective comparison of different approaches and methodologies. This progress will be essential for advancing the field and ensuring that technological developments align with artistic and aesthetic objectives.

Ethical Considerations and Creative Authenticity

The increasing sophistication of AI music composition systems raises important questions about creative authenticity, artistic ownership, and the nature of musical creativity itself. As AI-generated compositions become increasingly indistinguishable from human-created music, the music industry must grapple with questions about attribution, authorship, and the value of human creativity in an age of artificial intelligence.

The potential displacement of human musicians and composers by AI systems represents a significant concern that requires careful consideration of the social and economic implications of widespread AI adoption in creative industries. Balancing the benefits of AI-enhanced creativity with the preservation of opportunities for human artistic expression will be crucial for maintaining a vibrant and diverse musical culture.

Issues of bias and representation in AI training data must be addressed to ensure that AI composition systems do not perpetuate cultural stereotypes or exclude important musical traditions from their generative capabilities. Diverse and inclusive training datasets will be essential for creating AI systems that can serve the needs of global musical communities.

The development of transparent and explainable AI systems will be important for maintaining trust and understanding in the creative applications of artificial intelligence, enabling users to understand and control the compositional processes that generate musical content.

Conclusion: The Harmonious Future of AI and Music

The evolution of AI music composition represents one of the most exciting frontiers in the application of artificial intelligence to creative endeavors. As generative models become increasingly sophisticated and capable of producing musical content that rivals human creativity, we are witnessing the emergence of new forms of artistic collaboration and creative expression that transcend traditional boundaries between human and machine intelligence.

The future of AI music composition lies not in the replacement of human creativity but in the augmentation and enhancement of human artistic capabilities through intelligent collaboration with advanced AI systems. These tools will enable composers, musicians, and creative professionals to explore new musical territories, overcome creative blocks, and realize artistic visions that might otherwise remain unreachable.

The continued development of AI music composition technology will require ongoing collaboration between technologists, musicians, ethicists, and legal experts to ensure that these powerful tools serve the broader goals of artistic expression, cultural diversity, and creative innovation while addressing legitimate concerns about authenticity, ownership, and social impact.

As we look toward the future, the symphony of human creativity and artificial intelligence promises to compose new chapters in the ongoing story of musical evolution, creating opportunities for artistic expression that we can only begin to imagine.

Disclaimer

This article is for informational purposes only and does not constitute professional advice regarding music production, copyright law, or commercial applications of AI technology. The views expressed are based on current understanding of AI music composition technologies and their applications. Readers should conduct their own research and consider their specific requirements when implementing AI-powered music creation tools. The effectiveness and appropriateness of AI music composition systems may vary depending on specific use cases, artistic objectives, and legal jurisdictions.