The intersection of artificial intelligence and musical creativity has ushered in a revolutionary era where machines can compose symphonies, generate adaptive soundscapes, and create personalized audio experiences that respond dynamically to user interactions and environmental contexts. This technological breakthrough represents more than just algorithmic composition; it embodies a fundamental transformation in how music is conceived, produced, and experienced across entertainment media, interactive applications, and creative industries.

The emergence of sophisticated AI music generation systems has democratized music creation while opening new possibilities for dynamic, context-aware audio experiences that adapt in real time to user preferences and situational demands.

The Evolution of Algorithmic Music Creation

The journey from simple algorithmic composition to sophisticated AI-driven music generation represents decades of technological advancement and creative exploration. Early computer-generated music relied on basic mathematical formulas and predetermined rules to create simple melodies and rhythmic patterns. Today’s AI systems leverage deep neural networks, transformer architectures, and vast datasets of musical compositions to model the complex harmonic relationships, melodic progressions, and stylistic nuances that characterize different musical genres and cultural traditions.

Modern AI music generation systems can analyze the emotional content of existing compositions, model the relationship between musical elements and listener responses, and generate original pieces intended to evoke specific moods or complement particular visual or narrative contexts. This evolution has enabled the creation of music that is not only technically proficient but that many listeners also perceive as expressive and emotionally resonant.

The sophistication of contemporary AI music systems extends beyond simple note generation to encompass complex orchestration, dynamic arrangement, and real-time adaptation capabilities. These systems can understand temporal relationships in music, create coherent musical narratives that develop over time, and respond to external inputs such as gameplay dynamics, user emotions, or environmental conditions to generate contextually appropriate soundscapes.

Dynamic Soundtrack Systems in Interactive Media

Interactive entertainment has emerged as one of the most compelling applications for AI-generated music, where traditional static soundtracks give way to dynamic audio experiences that evolve in response to player actions, narrative developments, and environmental changes. Modern video games leverage AI music generation to create seamless audio landscapes that enhance immersion while providing unique auditory experiences for each playthrough.

Dynamic soundtrack systems use multiple layers of musical elements that can be combined, modified, and orchestrated in real time by algorithms that analyze gameplay state, player behavior patterns, and narrative progression to deliver contextually appropriate musical accompaniment.

The technical implementation of dynamic soundtracks involves sophisticated audio engines that can seamlessly blend different musical segments, adjust tempo and intensity based on action levels, and introduce or remove instrumental layers to match the emotional tone of specific scenes or interactions. These systems often incorporate machine learning models trained on vast libraries of existing game music to understand the relationships between gameplay mechanics and appropriate musical responses.
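The layering idea described above can be illustrated with a minimal sketch. It assumes a single gameplay "intensity" value between 0 and 1 and three hypothetical instrument stems; the layer names, thresholds, and the `layer_gains` function are illustrative assumptions, not part of any particular audio engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    enter_at: float  # intensity at which the layer starts fading in
    full_at: float   # intensity at which the layer reaches full volume


# Hypothetical stems for one cue; names and thresholds are illustrative only.
LAYERS = [
    Layer("ambient_pad", 0.0, 0.0),
    Layer("percussion", 0.3, 0.5),
    Layer("brass", 0.6, 0.9),
]


def layer_gains(intensity: float, layers=LAYERS) -> dict[str, float]:
    """Return a 0..1 gain per layer for a gameplay intensity in 0..1."""
    intensity = min(1.0, max(0.0, intensity))
    gains = {}
    for layer in layers:
        if intensity >= layer.full_at:
            gain = 1.0  # layer is fully present
        elif intensity <= layer.enter_at:
            gain = 0.0  # layer is silent
        else:
            # Linear fade between the two thresholds.
            gain = (intensity - layer.enter_at) / (layer.full_at - layer.enter_at)
        gains[layer.name] = gain
    return gains
```

In this sketch a quiet exploration scene (intensity near 0) plays only the pad, while a combat scene (intensity near 1) brings in every layer. A production engine would typically smooth these gains over time rather than apply them instantly.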

Advanced dynamic soundtrack systems can also learn from individual player preferences and behavioral patterns, gradually adapting their musical selections and generation parameters to create personalized audio experiences that resonate with specific users. This level of customization represents a significant departure from the one-size-fits-all approach of traditional game audio design, offering instead a tailored auditory experience that enhances emotional engagement and immersion.

Personalized Music Generation and Adaptive Audio

The application of AI music generation extends far beyond entertainment into personalized audio experiences that adapt to individual preferences, daily rhythms, and contextual needs. Modern AI systems can analyze listening history, emotional responses, and environmental factors to generate custom musical compositions that align with specific moods, activities, or therapeutic goals.

These personalized music generation systems often incorporate biometric data, location information, and temporal patterns to create audio experiences that complement natural human rhythms and support various activities such as focus work, relaxation, exercise, or sleep preparation. The AI analyzes patterns in user behavior and physiological responses to optimize musical parameters such as tempo, harmony, instrumentation, and dynamic range for maximum effectiveness in supporting desired mental states.

The sophistication of personalized AI music extends to understanding cultural preferences, genre inclinations, and individual artistic tastes that shape musical preferences. Advanced systems can generate compositions that blend familiar elements with novel musical ideas, creating pieces that feel both comfortable and engaging while introducing subtle variations that prevent monotony and encourage continued listening engagement.

Creative Collaboration Between AI and Human Artists

Rather than replacing human creativity, AI music generation has emerged as a powerful collaborative tool that enhances and extends artistic capabilities. Professional composers, producers, and musicians increasingly integrate AI-generated elements into their creative workflows, using machine learning systems to explore new harmonic possibilities, generate rhythmic variations, and create foundational musical ideas that can be further developed through human artistic interpretation.

The collaborative relationship between AI and human artists often involves iterative processes where musicians provide creative direction, emotional context, and artistic vision while AI systems contribute technical proficiency, harmonic sophistication, and the ability to rapidly explore vast creative spaces. This partnership enables the creation of musical works that combine human emotional intelligence and artistic intuition with computational precision and creative breadth.

Many contemporary artists use AI music generation as a creative catalyst, employing machine learning systems to break through creative blocks, suggest unexpected musical directions, and provide fresh perspectives on familiar compositional challenges. The AI serves as an intelligent creative partner that can offer alternative approaches to melody development, harmonic progression, and rhythmic arrangement while respecting the overall artistic vision and emotional intent of human collaborators.

Technical Foundations of AI Music Systems

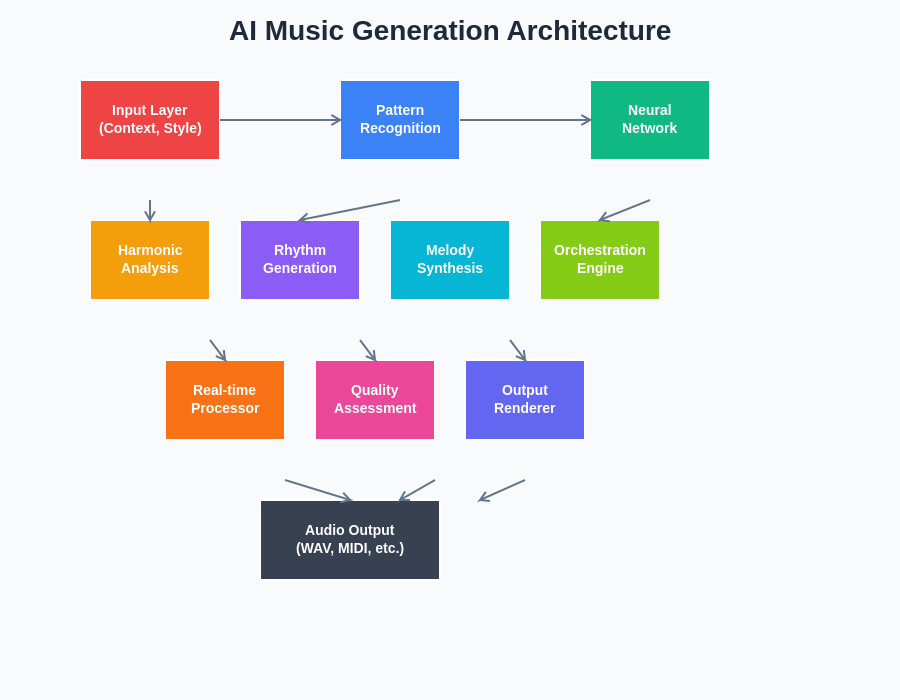

The underlying technology powering modern AI music generation relies on sophisticated machine learning architectures, particularly transformer networks and generative adversarial networks that have been specifically adapted for musical applications. These systems are trained on massive datasets containing diverse musical compositions across genres, cultures, and historical periods, enabling them to understand complex patterns in harmony, melody, rhythm, and musical structure.

The technical architecture of AI music systems typically involves multiple specialized components working in concert to analyze musical input, understand contextual requirements, and generate appropriate audio output. These components include pattern recognition modules that identify musical motifs and structural elements, harmonic analysis systems that understand chord progressions and tonal relationships, and generation engines that create new musical content based on learned patterns and specified parameters.
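To make "generation based on learned patterns" concrete, here is a deliberately tiny stand-in: a first-order Markov chain over chord symbols. This is not how transformer-based systems work internally; it only shows the same learn-then-generate split at a scale that fits on a page. The corpus and the function names `learn_transitions` and `generate` are assumptions made for illustration.

```python
import random
from collections import defaultdict


def learn_transitions(progressions):
    """Record which chord follows which across the training progressions."""
    table = defaultdict(list)
    for progression in progressions:
        for current, following in zip(progression, progression[1:]):
            table[current].append(following)
    return dict(table)


def generate(table, start, length, seed=None):
    """Walk the transition table to produce a new chord progression."""
    rng = random.Random(seed)
    chords = [start]
    while len(chords) < length:
        options = table.get(chords[-1])
        if not options:  # dead end: no continuation was observed in training
            break
        # Repeated entries in the list make frequent transitions more likely.
        chords.append(rng.choice(options))
    return chords


# Toy training data (illustrative, not taken from any real corpus).
CORPUS = [
    ["C", "Am", "F", "G", "C"],
    ["C", "F", "G", "C"],
    ["Am", "F", "C", "G", "Am"],
]
```

Calling `generate(learn_transitions(CORPUS), "C", 8, seed=1)` yields an eight-chord progression in which every chord change was seen somewhere in the corpus. Real systems replace the lookup table with a neural network that conditions on far more context than the previous chord.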

Advanced AI music systems also incorporate real-time processing capabilities that enable dynamic adaptation and responsive generation. These systems can analyze audio input streams, environmental data, or user interactions to modify their output in real time, creating seamless transitions between different musical sections and maintaining coherent musical narratives despite changing contextual requirements.

Applications in Film and Media Production

The film and media production industry has embraced AI music generation as a powerful tool for creating sophisticated soundtracks, ambient soundscapes, and adaptive audio experiences that enhance narrative storytelling and emotional engagement. AI systems can generate music that precisely matches the pacing, emotional tone, and dramatic arc of visual content while providing filmmakers with unprecedented flexibility in audio post-production.

Modern film scoring workflows increasingly incorporate AI-generated musical elements that can be customized, refined, and orchestrated to match specific scenes, character developments, or narrative transitions.

The use of AI in film scoring enables rapid iteration and experimentation with different musical approaches without requiring extensive recording sessions or large orchestral arrangements. Directors and producers can quickly audition various musical styles, emotional tones, and instrumental configurations to find the optimal audio accompaniment for their visual content, significantly reducing production timelines while maintaining high artistic standards.

AI music generation also facilitates the creation of adaptive soundtracks for interactive media, virtual reality experiences, and immersive installations where traditional linear compositions would be insufficient. These applications require music that can respond to user choices, environmental changes, and narrative branching, creating unique audio experiences that enhance immersion and engagement.

Emotional Intelligence in AI-Generated Music

One of the most remarkable aspects of advanced AI music generation systems is their ability to model and manipulate emotional content within musical compositions. These systems are trained to associate musical elements such as harmony, melody, rhythm, and instrumentation with the emotional responses they tend to produce in listeners, which enables the generation of music with specific emotional characteristics.
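One common simplification of this relationship describes emotion with two numbers, valence (negative to positive) and arousal (calm to excited), and maps them onto musical controls. The sketch below assumes that framing; the specific ranges (60 to 140 BPM, MIDI-style velocity 40 to 110) and the function name `emotion_to_parameters` are illustrative assumptions rather than values from any study or product.

```python
def emotion_to_parameters(valence: float, arousal: float) -> dict:
    """Map valence and arousal (each in -1..1) to coarse musical settings."""
    valence = max(-1.0, min(1.0, valence))
    arousal = max(-1.0, min(1.0, arousal))
    energy = (arousal + 1.0) / 2.0  # rescale arousal to 0..1
    return {
        # Higher arousal -> faster tempo: 60 BPM (calm) up to 140 BPM (excited).
        "tempo_bpm": round(60 + 80 * energy),
        # Positive valence -> major mode, negative valence -> minor mode.
        "mode": "major" if valence >= 0 else "minor",
        # Higher arousal -> louder playing, as a MIDI-style velocity 40..110.
        "velocity": round(40 + 70 * energy),
    }
```

A learned system would replace these hand-written rules with a model fitted to listener ratings, but the interface (an emotional target in, musical parameters out) stays much the same.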

The emotional intelligence of AI music systems extends beyond simple mood matching to encompass complex emotional narratives that develop over time. These systems can create musical compositions that guide listeners through emotional journeys, building tension and release, creating moments of anticipation and resolution, and establishing emotional connections that enhance the overall listening experience.

Research into the psychological effects of AI-generated music suggests that well-trained systems can produce compositions that evoke intended emotional responses while retaining much of the complexity and nuance associated with human-created music. This capability has important implications for therapeutic applications, educational contexts, and entertainment experiences where emotional engagement is paramount.

Real-Time Music Generation and Adaptive Systems

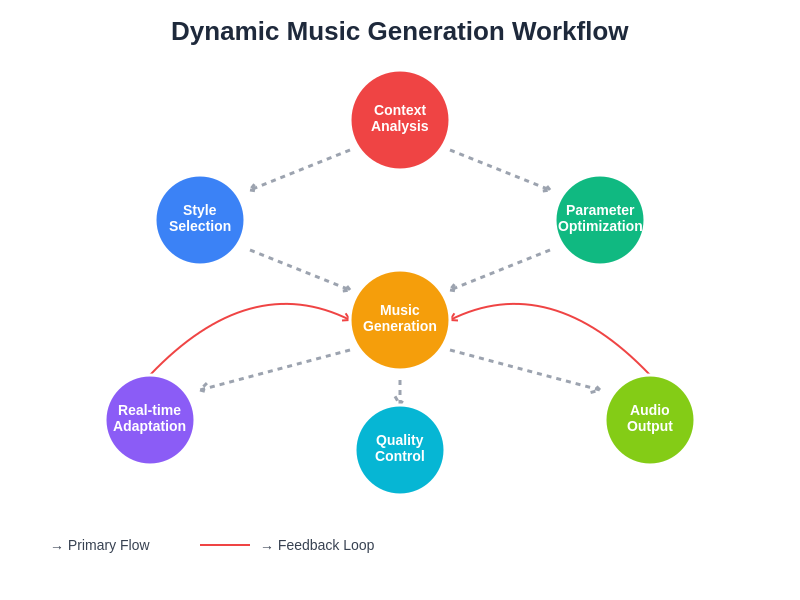

The development of real-time AI music generation capabilities represents a significant technological achievement that enables truly dynamic and responsive audio experiences. These systems can generate music on demand based on immediate contextual inputs, user interactions, and environmental conditions, creating musical experiences that would be impractical to produce through traditional composition and recording methods.

Real-time generation systems must balance computational efficiency with musical sophistication, employing optimized algorithms and specialized hardware to keep latency low enough to be imperceptible and to avoid audible artifacts that could disrupt the user experience. Advanced systems can process multiple input streams simultaneously, incorporating data from sensors, user interfaces, and environmental monitoring systems to create contextually appropriate musical responses.

The workflow for dynamic music generation involves decision-making algorithms that evaluate contextual inputs, select appropriate musical parameters, and coordinate the generation of coherent musical output in real time. These systems must maintain musical continuity while adapting to changing conditions, ensuring that transitions between different musical sections remain musically logical and emotionally coherent.
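Two small techniques often used to keep such transitions musical are waiting for the next bar line before switching sections and blending the outgoing and incoming material with an equal-power crossfade. The sketch below shows both under simple assumptions: playback position is tracked in beats, the meter is fixed, and the helper names `next_bar_start` and `crossfade_gains` are hypothetical.

```python
import math


def next_bar_start(current_beat: float, beats_per_bar: int = 4) -> float:
    """Return the beat position of the first bar line at or after current_beat."""
    return math.ceil(current_beat / beats_per_bar) * beats_per_bar


def crossfade_gains(progress: float) -> tuple[float, float]:
    """Equal-power crossfade: (outgoing_gain, incoming_gain) for progress 0..1.

    The squared gains always sum to 1, so perceived loudness stays roughly
    constant through the fade instead of dipping in the middle.
    """
    progress = max(0.0, min(1.0, progress))
    angle = progress * math.pi / 2
    return math.cos(angle), math.sin(angle)
```

If a change of game state arrives at beat 9.5 in 4/4 time, `next_bar_start(9.5)` schedules the switch for beat 12, and `crossfade_gains` is then evaluated as the fade progresses from 0 to 1.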

Quality Assessment and Musical Authenticity

Evaluating the quality and authenticity of AI-generated music presents unique challenges that require both technical analysis and subjective artistic assessment. Traditional metrics used to evaluate computational systems may not adequately capture the nuanced aspects of musical quality such as emotional resonance, cultural authenticity, and artistic innovation that characterize exceptional musical compositions.

Contemporary evaluation approaches combine quantitative analysis of musical structure and harmonic content with qualitative assessment by human experts and listener feedback studies. These multi-faceted evaluation methods help ensure that AI-generated music meets both technical standards and artistic expectations while maintaining the cultural and emotional authenticity that makes music meaningful to human listeners.
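As a small illustration of the quantitative side, the sketch below computes two simple statistics over a list of MIDI note numbers: the share of notes that fit a given scale, and the entropy of the pitch-class distribution. These are rough proxies, not measures of musical quality, and the choice of C major as the reference scale is an assumption made for the example.

```python
import math
from collections import Counter

C_MAJOR = {0, 2, 4, 5, 7, 9, 11}  # pitch classes of the C major scale


def in_scale_ratio(midi_notes, scale=C_MAJOR) -> float:
    """Fraction of notes whose pitch class belongs to the given scale."""
    if not midi_notes:
        return 0.0
    return sum(1 for n in midi_notes if n % 12 in scale) / len(midi_notes)


def pitch_class_entropy(midi_notes) -> float:
    """Shannon entropy (in bits) of the pitch-class distribution.

    Low values mean a few pitch classes dominate; high values mean the
    notes are spread evenly across many pitch classes.
    """
    counts = Counter(n % 12 for n in midi_notes)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())
```

Numbers like these are easy to compare across many generated pieces, which is why they are usually paired with expert review and listener studies rather than used alone.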

The question of musical authenticity in AI-generated compositions raises important philosophical and artistic considerations about the nature of creativity, originality, and artistic expression. While AI systems can create technically proficient and emotionally engaging music, the relationship between computational generation and authentic artistic expression remains an active area of debate and exploration within the creative community.

Impact on Music Industry and Creative Workflows

The integration of AI music generation into professional music production has fundamentally altered traditional creative workflows and business models within the music industry. Record labels, production studios, and independent artists increasingly rely on AI tools to streamline composition processes, explore creative possibilities, and reduce production costs while maintaining high artistic standards.

The democratization of music creation through AI tools has enabled individuals without formal musical training to create sophisticated compositions and explore musical creativity that was previously accessible only to trained musicians. This accessibility has led to an explosion of musical content creation while raising questions about the future role of traditional musical education and professional expertise.

The economic implications of AI music generation extend beyond individual creativity to encompass broader questions about intellectual property, artistic attribution, and compensation models for AI-assisted creative work. As AI systems become more sophisticated and widely adopted, the industry continues to evolve frameworks for understanding ownership, attribution, and value creation in AI-augmented creative processes.

Therapeutic and Wellness Applications

The application of AI music generation in therapeutic and wellness contexts represents an emerging field that leverages the psychological and physiological effects of music to support mental health, cognitive development, and emotional wellbeing. AI systems can generate personalized musical experiences designed to support specific therapeutic goals such as stress reduction, focus enhancement, sleep improvement, or mood regulation.

Therapeutic AI music systems often incorporate biometric monitoring and feedback mechanisms that enable real-time adaptation based on physiological responses such as heart rate, breathing patterns, and neurological activity. This capability allows for the creation of truly personalized therapeutic experiences that adapt to individual needs and responses, optimizing the beneficial effects of musical intervention.
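The feedback loop described above can be sketched as a single update step that nudges tempo according to the gap between measured and target heart rate. Everything here is an illustrative assumption: the proportional factor of 0.1, the step limit, the tempo bounds, and the premise that slower music accompanies a lower heart rate. It is a toy control loop, not a clinical method.

```python
def next_tempo(current_bpm: float, heart_rate: float, target_heart_rate: float,
               max_step: float = 2.0, low: float = 50.0, high: float = 120.0) -> float:
    """Return the tempo for the next update, moved slightly toward the goal."""
    error = heart_rate - target_heart_rate
    # Heart rate above target: slow the music; below target: speed it up.
    # The change per update is capped so the listener never hears a jump.
    step = max(-max_step, min(max_step, -0.1 * error))
    # Keep the result inside a musically sensible tempo range.
    return max(low, min(high, current_bpm + step))
```

Called once per measurement interval, this moves the tempo by at most 2 BPM per update, so the music drifts gradually rather than lurching in response to a noisy sensor reading.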

Research into the therapeutic applications of AI-generated music has demonstrated significant potential for supporting various mental health conditions, cognitive rehabilitation programs, and general wellness initiatives. The ability to create personalized, adaptive musical experiences offers new possibilities for therapeutic intervention that complement traditional approaches while providing scalable solutions for broader population health initiatives.

Future Directions and Emerging Technologies

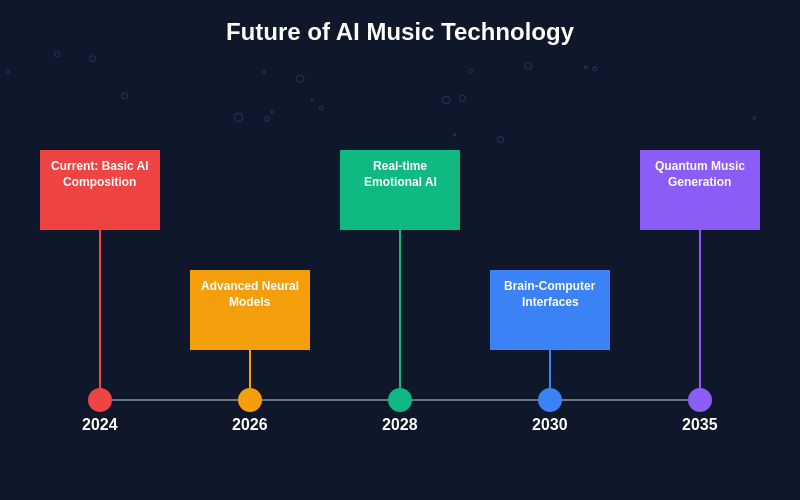

The future of AI music generation promises even more sophisticated capabilities as advances in machine learning, computational power, and audio processing technologies continue to expand the possibilities for intelligent music creation. Emerging technologies such as quantum computing, advanced neural architectures, and improved training methodologies may enable AI systems that approach or exceed human-level musical creativity and sophistication.

The integration of AI music generation with other emerging technologies such as virtual reality, augmented reality, and brain-computer interfaces opens new frontiers for immersive musical experiences that blur the boundaries between performer, composer, and audience. These technological convergences may enable entirely new forms of musical expression and interaction that were previously impossible or impractical to implement.

The continued evolution of AI music generation will likely focus on improving emotional intelligence, cultural sensitivity, and collaborative capabilities that enhance rather than replace human artistic expression. Future systems may demonstrate more nuanced understanding of cultural contexts, historical musical traditions, and individual artistic preferences, enabling more sophisticated and meaningful musical collaborations between human and artificial intelligence.

The development of more accessible and user-friendly AI music tools will continue to democratize music creation while raising important questions about the preservation of traditional musical skills and cultural practices. As these technologies become more widespread and sophisticated, society will need to navigate the balance between technological capability and human artistic expression, ensuring that AI enhancement supports rather than supplants the fundamental human drive for creative expression and cultural communication through music.

Disclaimer

This article is for informational purposes only and does not constitute professional advice regarding music production, technology implementation, or creative practices. The views expressed are based on current understanding of AI technologies and their applications in music generation. Readers should conduct their own research and consider their specific requirements when implementing AI-powered music tools. The effectiveness and appropriateness of AI music generation may vary depending on specific use cases, artistic goals, and individual preferences.