The effectiveness of artificial intelligence and machine learning systems cannot be determined through intuition or superficial observation alone. In an era where AI-driven solutions are becoming integral to business operations across industries, establishing robust performance measurement frameworks has become essential for ensuring sustainable success and continuous improvement. The ability to accurately assess AI system performance through well-defined key performance indicators represents the difference between transformative business value and costly technological investments that fail to deliver meaningful results.

Understanding and implementing comprehensive AI performance metrics enables organizations to make data-driven decisions about their machine learning initiatives, optimize resource allocation, and demonstrate tangible business value to stakeholders. The sophisticated landscape of AI performance evaluation encompasses technical accuracy measures, business impact assessments, operational efficiency indicators, and long-term sustainability metrics that collectively provide a holistic view of AI system effectiveness.

Foundational Principles of AI Performance Measurement

The measurement of AI system performance extends far beyond simple accuracy scores or error rates. Effective performance evaluation requires a multidimensional approach that considers technical performance, business alignment, operational sustainability, and ethical implications. This comprehensive framework ensures that AI systems not only perform well in controlled testing environments but also deliver consistent value in real-world applications where variables are unpredictable and stakes are high.

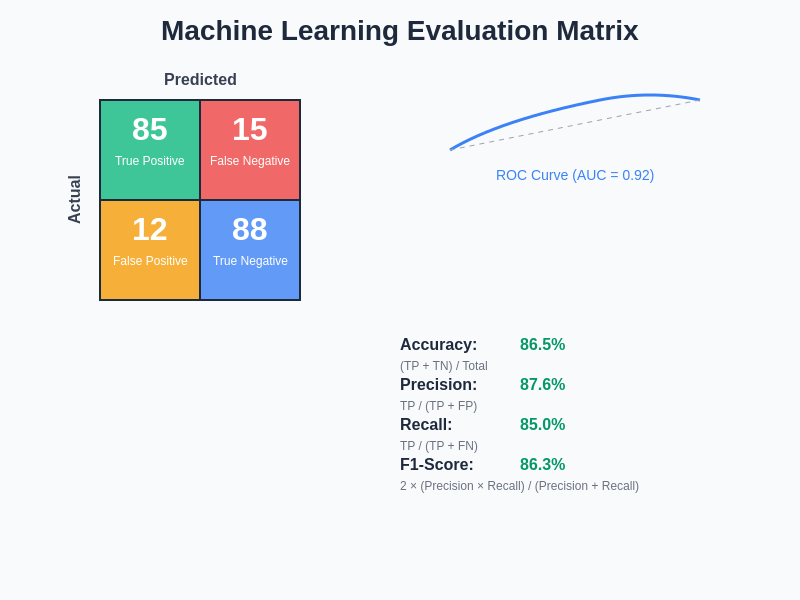

Modern AI performance measurement must account for the dynamic nature of data environments, changing business requirements, and evolving user expectations. The most successful AI implementations are those that establish baseline performance metrics during development and continuously monitor these indicators throughout the system lifecycle. This approach enables organizations to detect performance degradation early, identify optimization opportunities, and maintain competitive advantage through superior AI system effectiveness.

The foundation of robust AI performance measurement lies in establishing clear objectives that align technical capabilities with business outcomes. Organizations must define what success looks like across multiple dimensions, including accuracy, efficiency, reliability, fairness, and interpretability. This multifaceted approach ensures that AI systems contribute meaningfully to organizational goals while maintaining ethical standards and operational sustainability.

Technical Performance Metrics for Model Accuracy

Technical performance metrics form the cornerstone of AI system evaluation, providing quantitative measures of how well models perform their intended functions. These metrics vary significantly depending on the type of machine learning problem being addressed, whether classification, regression, clustering, or reinforcement learning. Understanding the appropriate metrics for each problem type and their implications for real-world performance is crucial for effective AI system development and deployment.

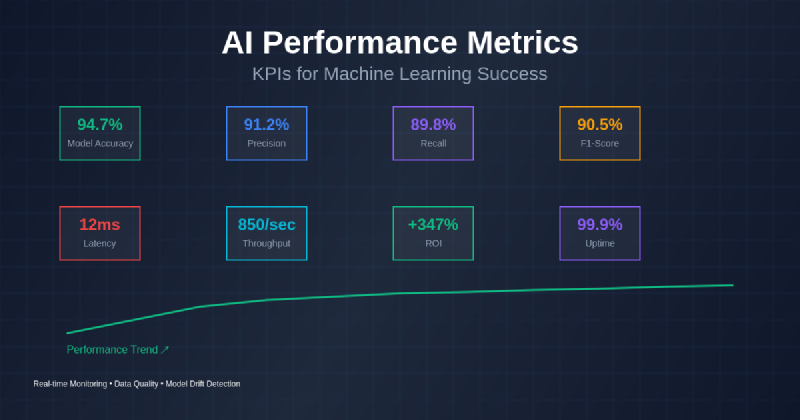

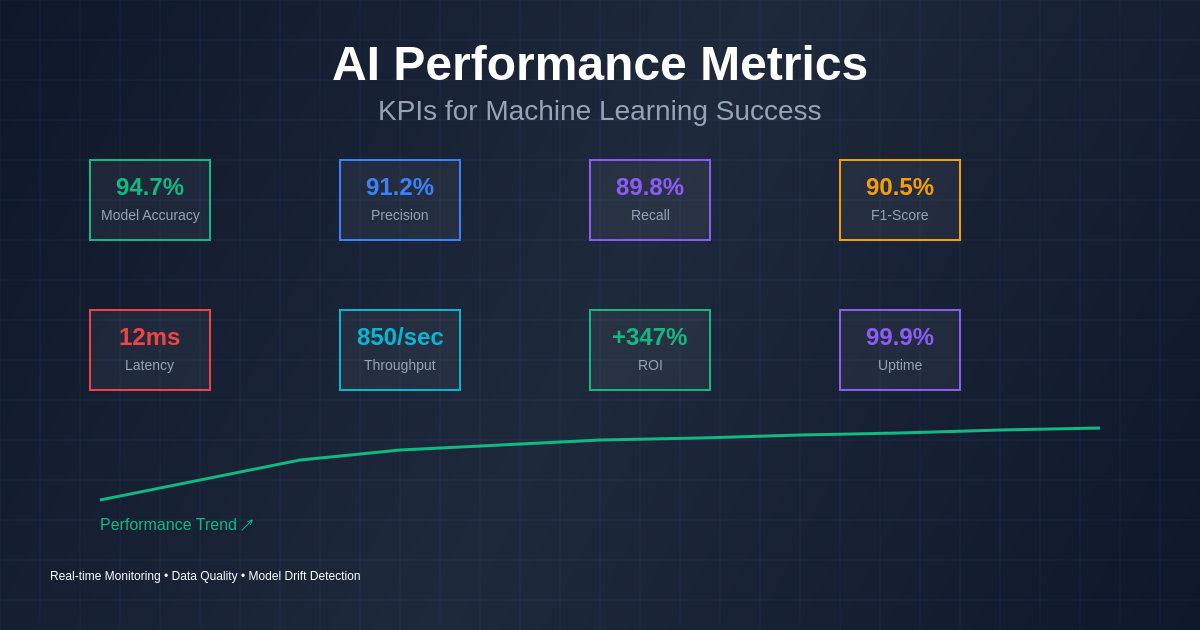

Classification problems require metrics such as accuracy, precision, recall, F1-score, and area under the ROC curve. Each metric provides different insights into model performance: accuracy measures overall correctness, precision indicates the proportion of positive predictions that are actually correct, and recall measures the proportion of actual positive cases that are correctly identified. The F1-score, the harmonic mean of precision and recall, balances the two, while ROC curves illustrate the tradeoff between true positive and false positive rates across different decision thresholds; the area under the curve condenses that tradeoff into a single number.
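
As a minimal sketch of how these classification metrics relate, the Python functions below compute them from binary labels (1 = positive class) using only the standard library. The function names and toy labels are illustrative, not a particular library's API, and the pairwise AUC computation is O(n²), so it suits small evaluation sets rather than production volumes.

```python
from typing import Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[int, int, int, int]:
    """Return (tp, fp, fn, tn) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> dict[str, float]:
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    # Precision: share of predicted positives that are truly positive.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: share of actual positives the model found.
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive outscores a random negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy labels, hard predictions, and scores for six examples.
y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.2]
print(classification_metrics(y_true, y_pred))
print(roc_auc(y_true, scores))
```

Because AUC is computed from scores rather than hard predictions, it stays the same when the decision threshold moves, whereas precision and recall shift with it.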

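Regression problems utilize metrics including mean squared error, mean absolute error, root mean squared error, and R-squared. The three error metrics measure how far predictions fall from actual values, so lower values indicate better performance; R-squared instead measures the share of variance in the target that the model explains, so higher values are better. The choice of metric depends on the specific business context and the relative importance of different types of errors in the application domain: squared-error metrics penalize large misses more heavily than mean absolute error does, and RMSE is expressed in the same units as the target.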
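
A minimal sketch computing the four regression metrics with only the standard library. The function name and sample values are illustrative; R-squared is undefined when every actual value is identical, which the sketch reports as NaN.

```python
import math
from typing import Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> dict[str, float]:
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(r * r for r in residuals)            # sum of squared errors
    mse = ss_res / n                                   # penalizes large misses heavily
    mae = sum(abs(r) for r in residuals) / n           # average miss, in target units
    rmse = math.sqrt(mse)                              # MSE brought back to target units
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total variance around the mean
    # R-squared: share of variance explained; undefined when the target is constant.
    r2 = 1 - ss_res / ss_tot if ss_tot else float("nan")
    return {"mse": mse, "mae": mae, "rmse": rmse, "r2": r2}


print(regression_metrics([3.0, 5.0, 7.0, 9.0], [2.0, 5.0, 8.0, 9.0]))
```

Here two predictions miss by one unit and two are exact, giving MSE and MAE of 0.5 and an R-squared of 0.9.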

Advanced technical metrics encompass model calibration, uncertainty quantification, and robustness measures that assess how well models perform under various conditions. Calibration metrics evaluate whether predicted probabilities accurately reflect the actual likelihood of outcomes, which is crucial for applications requiring reliable confidence estimates. Uncertainty quantification measures help assess model confidence and identify cases where predictions may be unreliable, enabling more informed decision-making in critical applications.
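
One common way to quantify calibration is expected calibration error (ECE): group predictions into probability bins and take the weighted average gap between the mean predicted probability and the observed positive rate in each bin. The sketch below is a binary variant that bins the predicted probability of the positive class into equal-width bins; the default of 10 bins is an assumption, and the result is sensitive to that choice.

```python
from typing import Sequence


def expected_calibration_error(
    y_true: Sequence[int], probs: Sequence[float], n_bins: int = 10
) -> float:
    """Weighted mean |observed positive rate - mean predicted probability| over bins."""
    if len(y_true) != len(probs) or not probs:
        raise ValueError("inputs must be non-empty and the same length")
    bins: list[list[tuple[int, float]]] = [[] for _ in range(n_bins)]
    for label, p in zip(y_true, probs):
        # Equal-width bins on [0, 1]; p == 1.0 is placed in the last bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((label, p))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_prob = sum(p for _, p in members) / len(members)
        observed = sum(label for label, _ in members) / len(members)
        ece += len(members) / len(probs) * abs(observed - mean_prob)
    return ece


# Confident predictions that point the wrong way produce a large ECE.
print(expected_calibration_error([0, 0, 1, 1], [0.9, 0.9, 0.1, 0.1]))
# Predicting 0.8 for a group in which 4 of 5 events occur is well calibrated.
print(expected_calibration_error([1, 1, 1, 1, 0], [0.8] * 5))
```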

Business Impact and ROI Measurement

The ultimate success of AI implementations must be measured through their impact on business outcomes and return on investment. Technical performance metrics, while important, only tell part of the story. Business impact metrics translate technical performance into tangible value creation, cost reduction, revenue generation, and competitive advantage. These metrics enable organizations to justify AI investments, optimize resource allocation, and demonstrate the strategic value of machine learning initiatives to stakeholders and decision-makers.

Revenue-focused metrics include direct revenue attribution, conversion rate improvements, customer lifetime value increases, and market share gains directly attributable to AI system deployment. These metrics require careful attribution modeling to isolate the impact of AI systems from other business factors. Organizations must establish baseline measurements before AI implementation and track changes over time while controlling for external variables that might influence business outcomes.
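
One widely used attribution technique, offered here as an illustration rather than something the text prescribes, is difference-in-differences: compare the before/after change in a metric for a group exposed to the AI system against the change for a comparable control group over the same period, so that shared external factors cancel out. The sketch assumes such a control group exists and that both groups would have followed parallel trends without the AI system; the figures in the usage lines are hypothetical.

```python
from statistics import fmean
from typing import Sequence


def diff_in_diff(
    treated_before: Sequence[float],
    treated_after: Sequence[float],
    control_before: Sequence[float],
    control_after: Sequence[float],
) -> float:
    """Estimated effect = change in the treated group minus change in the control group."""
    treated_change = fmean(treated_after) - fmean(treated_before)
    # The control group's change approximates what would have happened anyway
    # (seasonality, marketing, macro conditions), assuming parallel trends.
    control_change = fmean(control_after) - fmean(control_before)
    return treated_change - control_change


# Hypothetical weekly revenue (in thousands) for regions with and without the AI system.
effect = diff_in_diff([100, 104], [120, 124], [98, 102], [106, 110])
print(effect)
```

A naive before/after comparison of the treated regions would credit the system with 20; subtracting the control group's rise of 8 leaves an estimated effect of 12.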

Cost reduction metrics encompass operational efficiency gains, resource optimization, automated process improvements, and reduced manual intervention requirements. These metrics often provide the most immediate and measurable benefits from AI implementation, as automation and optimization typically produce quantifiable cost savings that can be directly traced to AI system performance.

Customer satisfaction and experience metrics represent increasingly important indicators of AI system success. Net Promoter Score improvements, customer retention rate changes, support ticket reduction, and user engagement increases provide insights into how AI systems impact end-user experiences. These metrics are particularly crucial for customer-facing AI applications where user acceptance and satisfaction directly influence business success.
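
Net Promoter Score is typically computed from 0 to 10 "how likely are you to recommend us" ratings as the percentage of promoters (9 or 10) minus the percentage of detractors (0 through 6). A minimal sketch with hypothetical survey responses; comparing scores before and after an AI feature launch gives an NPS change, subject to the same attribution caveats as revenue metrics.

```python
from typing import Sequence


def net_promoter_score(ratings: Sequence[int]) -> float:
    """NPS on a -100..100 scale from 0-10 likelihood-to-recommend ratings."""
    if not ratings:
        raise ValueError("no ratings")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)    # 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)   # 0 through 6; 7 and 8 are passives
    return 100.0 * (promoters - detractors) / len(ratings)


# Hypothetical survey waves before and after an AI support assistant launched.
before = net_promoter_score([10, 9, 8, 7, 6, 3, 10, 9, 5, 10])
after = net_promoter_score([10, 9, 9, 8, 7, 6, 10, 9, 8, 10])
print(before, after, after - before)
```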

Operational Efficiency and System Performance

Operational efficiency metrics focus on how effectively AI systems integrate into existing business processes and infrastructure. These metrics assess system reliability, scalability, maintainability, and resource utilization to ensure that AI implementations enhance rather than complicate operational workflows. Effective operational measurement enables organizations to optimize AI system deployment, predict scaling requirements, and maintain consistent performance as usage patterns evolve.

System uptime and availability metrics track the reliability of AI systems in production environments. These measurements include mean time between failures, mean time to recovery, and overall system availability percentages. For mission-critical applications, even small improvements in reliability can translate into significant business value and risk reduction. Organizations must establish service level agreements that define acceptable performance thresholds and implement monitoring systems that provide real-time visibility into system health.
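
The three reliability measures are linked: with MTBF taken as total operating time divided by the number of failures and MTTR as total downtime divided by the number of failures, availability equals MTBF / (MTBF + MTTR). The sketch below derives all three from a hypothetical incident log over a fixed reporting window; it assumes incidents do not overlap and fall entirely inside the window.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Incident:
    start_hour: float  # outage start, in hours from the beginning of the window
    end_hour: float    # time service was restored


def reliability_metrics(incidents: Sequence[Incident], window_hours: float) -> dict[str, float]:
    # Assumes incidents are non-overlapping and lie inside the window.
    downtime = sum(i.end_hour - i.start_hour for i in incidents)
    uptime = window_hours - downtime
    failures = len(incidents)
    return {
        "mtbf_hours": uptime / failures if failures else float("inf"),
        "mttr_hours": downtime / failures if failures else 0.0,
        "availability": uptime / window_hours,
    }


# Hypothetical 30-day window (720 hours) with outages of 2 hours and 1 hour.
log = [Incident(100.0, 102.0), Incident(400.0, 401.0)]
print(reliability_metrics(log, window_hours=720.0))
```

Three hours of downtime in 720 hours works out to roughly 99.58% availability, which an SLA would then compare against its agreed threshold.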

Throughput and latency metrics assess how efficiently AI systems process requests and deliver results. Response time measurements, processing capacity indicators, and concurrent user support capabilities help organizations understand system performance under various load conditions. These metrics are crucial for applications requiring real-time or near-real-time responses, where performance delays can significantly impact user experience and business outcomes.
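
Latency is usually reported as percentiles rather than an average, because a small share of slow requests can dominate user experience while barely moving the mean. The sketch below uses the nearest-rank percentile definition (other interpolation methods give slightly different values) and computes throughput as requests per second over an assumed fixed measurement window.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], q: float) -> float:
    """Nearest-rank percentile for q in (0, 100]."""
    if not values or not 0 < q <= 100:
        raise ValueError("need values and 0 < q <= 100")
    ordered = sorted(values)
    rank = math.ceil(q * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def latency_summary(latencies_ms: Sequence[float], window_seconds: float) -> dict[str, float]:
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "p99_ms": percentile(latencies_ms, 99),
        "throughput_rps": len(latencies_ms) / window_seconds,
    }


# Hypothetical: 100 requests observed in a 10-second window, latencies of 1 to 100 ms.
print(latency_summary(list(range(1, 101)), window_seconds=10.0))
```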

Resource utilization metrics, including CPU usage, memory consumption, storage requirements, and network bandwidth utilization, help optimize infrastructure costs and predict scaling needs. Efficient resource utilization not only reduces operational costs but also enables organizations to support larger user bases and more complex workloads without proportional infrastructure investments. These metrics guide decisions about hardware provisioning, cloud resource allocation, and system architecture optimization.

Data Quality and Model Drift Detection

Data quality metrics represent fundamental indicators of AI system health, as model performance is intrinsically linked to the quality and representativeness of input data. These metrics encompass data completeness, accuracy, consistency, timeliness, and relevance measures that directly impact model effectiveness. Organizations must establish continuous monitoring systems that track data quality indicators and alert stakeholders when quality degradation threatens system performance.
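
Completeness is the simplest of these dimensions to automate. A minimal sketch, assuming records arrive as dictionaries and that a missing key or a `None` value counts as incomplete; the 0.95 alert threshold and the field names are illustrative choices, not a standard.

```python
from typing import Any, Mapping, Sequence


def completeness(records: Sequence[Mapping[str, Any]], fields: Sequence[str]) -> dict[str, float]:
    """Share of records in which each field is present and not None."""
    if not records:
        raise ValueError("no records")
    return {
        f: sum(1 for r in records if r.get(f) is not None) / len(records)
        for f in fields
    }


def fields_below(scores: Mapping[str, float], threshold: float = 0.95) -> list[str]:
    """Fields whose completeness has fallen below the alerting threshold."""
    return sorted(f for f, s in scores.items() if s < threshold)


# Hypothetical input batch: one null and one missing "age" value.
batch = [
    {"age": 34, "income": 50_000},
    {"age": None, "income": 62_000},
    {"age": 29, "income": 48_000},
    {"income": 71_000},
]
scores = completeness(batch, ["age", "income"])
print(scores, fields_below(scores))
```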

Model drift detection metrics identify when AI system performance degrades due to changes in data distributions, user behavior patterns, or environmental conditions. Concept drift occurs when the relationship between input features and target outcomes changes over time, while data drift involves changes in input data distributions without necessarily affecting target relationships. Both types of drift can significantly impact model performance and require proactive detection and response strategies.
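
Data drift in a single numeric feature is often tracked with the Population Stability Index (PSI), which compares how a reference sample (for example, training data) and a current production sample are distributed across the same bins. The sketch below uses equal-width bins over the reference range and a small epsilon to avoid taking the log of zero; both are assumptions, and quantile-based bins are an equally common choice. PSI only detects input drift: concept drift generally requires fresh labels and direct monitoring of accuracy or error metrics.

```python
import math
from typing import Sequence


def _bin_shares(values: Sequence[float], lo: float, hi: float, n_bins: int) -> list[float]:
    counts = [0] * n_bins
    width = (hi - lo) / n_bins
    for v in values:
        idx = int((v - lo) / width) if width > 0 else 0
        counts[min(max(idx, 0), n_bins - 1)] += 1  # clamp out-of-range values into edge bins
    return [c / len(values) for c in counts]


def population_stability_index(
    reference: Sequence[float], current: Sequence[float], n_bins: int = 10, eps: float = 1e-6
) -> float:
    lo, hi = min(reference), max(reference)
    ref = _bin_shares(reference, lo, hi, n_bins)
    cur = _bin_shares(current, lo, hi, n_bins)
    psi = 0.0
    for r, c in zip(ref, cur):
        r, c = max(r, eps), max(c, eps)  # empty bins would otherwise give log(0)
        psi += (c - r) * math.log(c / r)
    return psi


reference = [float(v) for v in range(100)]
shifted = [v + 50.0 for v in reference]
print(population_stability_index(reference, reference))  # identical samples
print(population_stability_index(reference, shifted))    # distribution moved upward
```

A frequently cited rule of thumb treats PSI above roughly 0.25 as a significant shift, though thresholds are best tuned per feature.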

Feature importance and stability metrics help assess whether models continue to rely on relevant and stable input variables. Changes in feature importance rankings may indicate underlying data shifts or model degradation that requires attention. Feature stability measures track how consistently individual features behave across different time periods, helping identify potential data quality issues or external factors affecting system inputs.
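
One way to turn changes in feature importance rankings into a single number is the Spearman rank correlation between two periods' importance scores: 1.0 means the ranking is unchanged, and lower values mean features are trading places. The sketch assumes importances are already computed for each period (by whatever method the team uses), uses hypothetical feature names, and relies on the closed-form formula, which is exact only when there are no tied scores.

```python
from typing import Mapping


def rank_features(importance: Mapping[str, float]) -> dict[str, int]:
    """Map feature name to rank, 1 = most important. Assumes no tied scores."""
    ordered = sorted(importance, key=lambda name: importance[name], reverse=True)
    return {name: pos for pos, name in enumerate(ordered, start=1)}


def rank_stability(previous: Mapping[str, float], current: Mapping[str, float]) -> float:
    """Spearman rank correlation between two importance snapshots."""
    if set(previous) != set(current) or len(previous) < 2:
        raise ValueError("need the same feature set (at least two features) in both periods")
    n = len(previous)
    rp, rc = rank_features(previous), rank_features(current)
    d_squared = sum((rp[f] - rc[f]) ** 2 for f in previous)
    return 1 - 6 * d_squared / (n * (n * n - 1))


# Hypothetical snapshots: "usage" overtakes "tenure" as the top feature.
last_month = {"tenure": 0.40, "usage": 0.30, "region": 0.20, "age": 0.10}
this_month = {"tenure": 0.35, "usage": 0.38, "region": 0.17, "age": 0.10}
print(rank_stability(last_month, this_month))
```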

Data lineage and provenance tracking provide additional quality assurance by monitoring data sources, transformation processes, and update frequencies that impact model inputs. These metrics enable organizations to quickly identify and address data quality issues that could compromise AI system performance.

Fairness, Bias, and Ethical Performance Indicators

Ethical performance measurement has become an essential component of comprehensive AI evaluation frameworks, as organizations increasingly recognize the importance of fair and unbiased AI systems. Fairness metrics assess whether AI systems treat different population groups equitably and avoid discriminatory outcomes that could result in legal, reputational, or social consequences. These measurements require sophisticated statistical analysis and domain expertise to interpret correctly and implement effectively.

Demographic parity metrics evaluate whether positive outcomes are granted at the same rate across different demographic groups. Equal opportunity measures assess whether individuals who actually qualify for a positive outcome receive it at similar rates in every group (equal true positive rates), while equalized odds metrics examine whether both false positive and false negative rates are consistent across groups. These metrics help organizations identify potential bias in AI system behavior and implement corrective measures to ensure fair treatment.
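
These group metrics reduce to comparing a few rates across groups: the positive-prediction (selection) rate for demographic parity, the true positive rate for equal opportunity, and both the true and false positive rates for equalized odds (matching false negative rates is the same as matching true positive rates). A minimal sketch for binary labels and predictions that reports each as the largest gap between any two groups; summarizing equalized odds as the larger of the two gaps is one common convention, not the only one, and the data are toy values.

```python
from typing import Hashable, Sequence


def _share(flags: list[int]) -> float:
    if not flags:
        raise ValueError("each group needs both positive and negative examples")
    return sum(flags) / len(flags)


def fairness_gaps(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]
) -> dict[str, float]:
    rates: dict[str, list[float]] = {"selection": [], "tpr": [], "fpr": []}
    for g in set(groups):
        idx = [i for i, x in enumerate(groups) if x == g]
        rates["selection"].append(_share([y_pred[i] for i in idx]))
        rates["tpr"].append(_share([y_pred[i] for i in idx if y_true[i] == 1]))
        rates["fpr"].append(_share([y_pred[i] for i in idx if y_true[i] == 0]))

    def gap(key: str) -> float:
        return max(rates[key]) - min(rates[key])

    return {
        "demographic_parity_diff": gap("selection"),
        "equal_opportunity_diff": gap("tpr"),
        "equalized_odds_diff": max(gap("tpr"), gap("fpr")),
    }


groups = ["a"] * 4 + ["b"] * 4
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0]
print(fairness_gaps(y_true, y_pred, groups))
```

In this toy data both groups have perfect recall, so equal opportunity holds (gap 0.0), yet group "a" is selected more often and receives more false positives, which the other two gaps expose.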

Individual fairness metrics assess whether similar individuals receive similar treatment from AI systems, regardless of their group membership. These measurements require defining appropriate similarity measures and outcome consistency thresholds that align with business objectives and ethical standards. Counterfactual fairness analysis examines whether AI system decisions would remain consistent if individuals belonged to different demographic groups, providing insights into potential discriminatory decision-making processes.
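
A rough, practical proxy for this kind of analysis is an attribute-flip test: re-score each record with only the protected attribute changed and count how often the decision changes. This is weaker than counterfactual fairness in the strict causal sense, which also accounts for other features that depend on group membership (proxies such as postcode). In the sketch, `model` is any hypothetical callable that maps a record to a decision, and the two toy models exist only for illustration.

```python
from typing import Any, Callable, Hashable, Mapping, Sequence

Record = Mapping[str, Any]


def attribute_flip_rate(
    model: Callable[[Record], bool],
    records: Sequence[Record],
    attribute: str,
    values: Sequence[Hashable],
) -> float:
    """Share of records whose decision changes when only `attribute` is swapped."""
    changed = 0
    for record in records:
        original = model(record)
        for alt in values:
            if alt == record[attribute]:
                continue
            variant = {**record, attribute: alt}  # copy with the protected attribute swapped
            if model(variant) != original:
                changed += 1
                break
    return changed / len(records)


# Hypothetical toy models: one ignores the group, one leaks it into the decision.
def income_only(r: Record) -> bool:
    return r["income"] >= 50_000


def group_sensitive(r: Record) -> bool:
    return r["income"] >= 50_000 or r["group"] == "a"


applicants = [
    {"group": "a", "income": 40_000},
    {"group": "b", "income": 40_000},
    {"group": "b", "income": 60_000},
]
print(attribute_flip_rate(income_only, applicants, "group", ["a", "b"]))
print(attribute_flip_rate(group_sensitive, applicants, "group", ["a", "b"]))
```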

Transparency and explainability metrics measure how well AI system decisions can be understood and justified to stakeholders, users, and regulators. Model interpretability scores, explanation quality assessments, and decision audit trail completeness help ensure that AI systems operate in accordance with regulatory requirements and organizational governance standards. These metrics are particularly important in regulated industries where algorithmic decision-making must be explainable and defensible.

Implementation Strategies for Performance Monitoring

Effective AI performance monitoring requires systematic implementation of measurement frameworks that provide continuous visibility into system health and effectiveness. Organizations must establish baseline measurements, define performance thresholds, implement automated monitoring systems, and create response protocols that enable rapid identification and resolution of performance issues. This comprehensive approach ensures that AI systems maintain optimal performance throughout their operational lifecycle.

Real-time monitoring systems provide immediate feedback on AI system performance, enabling rapid response to anomalies or degradation. These systems must balance comprehensive coverage with practical implementation constraints, focusing on the most critical metrics while avoiding information overload that could obscure important signals. Automated alerting mechanisms notify stakeholders when performance thresholds are exceeded, enabling proactive intervention before issues impact business operations.
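
One simple way to keep alerts actionable is to compare a rolling average, rather than each individual reading, against the threshold, so a single noisy sample does not page anyone. A minimal sketch for a "higher is better" metric such as accuracy; the class name, floor, and window size are illustrative assumptions that would need tuning per system.

```python
from collections import deque


class RollingFloorAlert:
    """Fires when the rolling mean of the last `window` readings drops below `floor`."""

    def __init__(self, floor: float, window: int = 5) -> None:
        self.floor = floor
        self.readings: deque[float] = deque(maxlen=window)

    def observe(self, value: float) -> bool:
        self.readings.append(value)
        if len(self.readings) < self.readings.maxlen:
            return False  # not enough history yet
        return sum(self.readings) / len(self.readings) < self.floor


alert = RollingFloorAlert(floor=0.90, window=3)
hourly_accuracy = [0.95, 0.95, 0.88, 0.95, 0.86, 0.85]
print([alert.observe(v) for v in hourly_accuracy])
```

The isolated dip to 0.88 is absorbed by the average; the alert fires only when repeated low readings pull the three-reading mean below 0.90.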

Historical performance analysis enables organizations to identify trends, patterns, and cyclical variations in AI system behavior. Longitudinal measurement provides insights into system evolution, seasonal effects, and long-term sustainability that cannot be captured through real-time monitoring alone. This historical perspective informs optimization strategies, capacity planning decisions, and system upgrade priorities that enhance long-term AI system effectiveness.

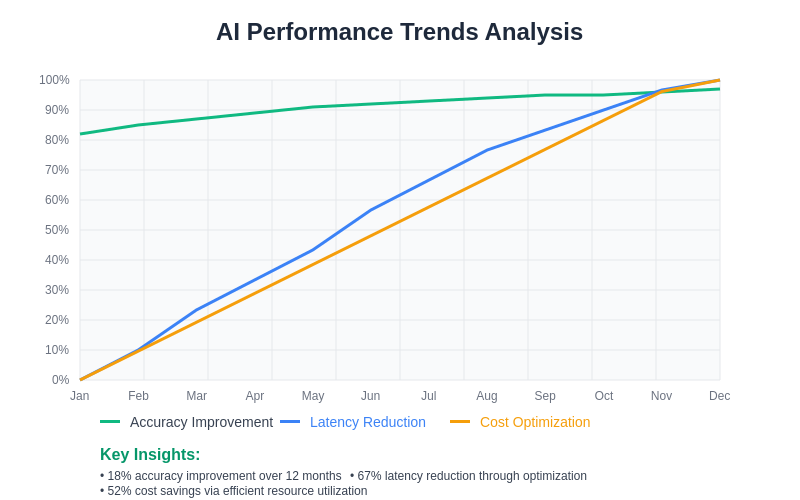

Performance dashboard design plays a crucial role in making AI metrics accessible and actionable for different stakeholder groups. Executive dashboards focus on business impact metrics and high-level performance summaries, while technical dashboards provide detailed system health information for operational teams. User experience dashboards highlight customer-facing performance indicators that influence satisfaction and adoption rates.

Advanced Analytics and Predictive Performance Modeling

Advanced analytical techniques enable organizations to move beyond reactive performance monitoring toward predictive performance management that anticipates and prevents issues before they impact system effectiveness. Predictive modeling applies machine learning techniques to historical performance data, identifying patterns that indicate impending performance degradation or optimization opportunities. This proactive approach minimizes system downtime and maintains consistent user experiences.

Performance forecasting models help organizations plan for future capacity requirements, infrastructure upgrades, and system scaling needs. These models consider historical usage patterns, seasonal variations, business growth projections, and external factors that influence AI system demand. Accurate performance forecasting enables cost-effective resource provisioning and ensures that systems can handle anticipated workload increases without performance degradation.

Anomaly detection systems identify unusual patterns in AI system behavior that may indicate performance issues, security threats, or data quality problems. These systems must balance sensitivity with specificity to minimize false alarms while ensuring that genuine issues are detected promptly. Machine learning-based anomaly detection can adapt to changing system behavior patterns and identify subtle performance degradation that might be missed by static threshold-based monitoring.
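
As a baseline before reaching for machine-learning detectors, a rolling z-score flags points that sit far from the recent mean, measured in standard deviations. The `threshold` parameter is where the sensitivity/specificity balance is set: lowering it catches subtler deviations at the cost of more false alarms. The function name, window size, threshold, and latency series are illustrative assumptions.

```python
import statistics
from typing import Sequence


def rolling_zscore_anomalies(
    series: Sequence[float], window: int = 10, threshold: float = 3.0
) -> list[int]:
    """Indices whose value lies more than `threshold` std devs from the trailing-window mean."""
    flagged = []
    for i in range(window, len(series)):
        history = series[i - window : i]
        mean = statistics.fmean(history)
        std = statistics.pstdev(history)
        if std == 0:
            # Perfectly flat history: treat any change as anomalous.
            if series[i] != mean:
                flagged.append(i)
        elif abs(series[i] - mean) / std > threshold:
            flagged.append(i)
    return flagged


latency_ms = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 100, 250, 101, 99]
print(rolling_zscore_anomalies(latency_ms))
```

Only the 250 ms spike at index 11 is flagged. Because the window trails the current point, the spike inflates the standard deviation for the next few readings, which is one reason production systems often use robust statistics such as the median instead.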

Root cause analysis capabilities enable organizations to quickly identify the underlying factors contributing to performance issues. Automated root cause analysis systems correlate performance metrics with system logs, data quality indicators, and external environmental factors to pinpoint the sources of problems. This capability reduces mean time to resolution and enables more effective long-term system optimization strategies.

Integration with Business Intelligence and Reporting

AI performance metrics must be integrated with broader business intelligence and reporting systems to provide comprehensive visibility into organizational performance and enable data-driven decision-making. This integration ensures that AI system performance is evaluated within the context of overall business operations and strategic objectives. Effective integration requires careful consideration of data formats, reporting frequencies, and stakeholder information requirements.

Executive reporting frameworks present AI performance information in formats that support strategic decision-making and resource allocation. These reports focus on business impact metrics, return on investment calculations, and competitive advantage indicators that demonstrate the value of AI investments. Executive reports must balance comprehensive information with accessible presentation formats that enable quick understanding and informed decision-making.

Operational reporting systems provide detailed performance information for technical teams responsible for AI system maintenance and optimization. These reports include technical performance metrics, system health indicators, and diagnostic information that supports troubleshooting and performance tuning activities. Operational reports must be updated frequently and provide drill-down capabilities that enable detailed investigation of specific issues or trends.

Regulatory and compliance reporting ensures that AI systems meet industry standards, legal requirements, and organizational governance policies. These reports document fairness metrics, audit trail information, and risk assessment data that demonstrate compliance with relevant regulations and ethical standards. Compliance reporting must be comprehensive, accurate, and readily available for regulatory reviews or audits.

Future Directions in AI Performance Measurement

The field of AI performance measurement continues to evolve as new technologies, methodologies, and business requirements emerge. Organizations must stay current with best practices and emerging standards to maintain effective measurement frameworks that support their AI initiatives. Future developments in performance measurement will likely focus on automation, standardization, and integration with emerging AI technologies and business models.

Automated performance optimization represents a significant advancement in AI system management, where measurement systems not only identify performance issues but also implement corrective actions automatically. These systems use machine learning techniques to optimize hyperparameters, adjust resource allocation, and modify system configurations based on performance feedback. Automated optimization reduces the burden on human operators while maintaining optimal system performance under changing conditions.

Industry standardization efforts aim to establish common metrics, measurement methodologies, and reporting formats that enable benchmarking and best practice sharing across organizations. Standardized performance measurement frameworks will facilitate vendor comparisons, regulatory compliance, and knowledge transfer between organizations implementing similar AI solutions. These standards will likely emerge through collaboration between industry associations, regulatory bodies, and technology vendors.

Multi-model and ensemble performance measurement addresses the complexity of modern AI systems that combine multiple models, algorithms, and data sources. These measurement frameworks must account for interactions between system components, assess overall system coherence, and identify optimization opportunities that span multiple models. Advanced measurement systems will provide holistic performance assessment capabilities that match the sophistication of modern AI architectures.

The integration of AI performance measurement with emerging technologies such as edge computing, federated learning, and quantum computing will require new metrics and measurement approaches. These technologies introduce unique performance characteristics, privacy considerations, and scalability challenges that traditional measurement frameworks may not address adequately. Future measurement systems must be flexible and extensible to accommodate technological evolution while maintaining measurement consistency and accuracy.

The continued advancement of AI performance measurement capabilities will enable organizations to extract maximum value from their AI investments while maintaining ethical standards and operational excellence. Success in this domain requires ongoing commitment to measurement best practices, continuous learning about emerging techniques, and adaptation to changing business and technological requirements. Organizations that master AI performance measurement will be best positioned to leverage artificial intelligence as a sustainable competitive advantage.

Disclaimer

This article is for informational purposes only and does not constitute professional advice regarding AI implementation or performance measurement strategies. The metrics and methodologies discussed should be adapted to specific organizational contexts and requirements. Readers should consult with qualified professionals when implementing AI performance measurement systems and ensure compliance with relevant industry regulations and standards. The effectiveness of performance measurement approaches may vary depending on specific use cases, data characteristics, and business objectives.