The traditional approach to bug detection in software development has long been a reactive process, where errors are discovered after they manifest in production environments or during extensive manual testing phases. However, the emergence of artificial intelligence-powered bug detection tools is fundamentally transforming this paradigm, enabling developers to identify and resolve potential issues before they ever reach end users. These sophisticated systems leverage machine learning algorithms, pattern recognition, and advanced static analysis to proactively scan codebases and predict where problems are most likely to occur.

The integration of AI into bug detection is a substantial step forward for software reliability, improving the accuracy and speed with which teams identify potential vulnerabilities, performance bottlenecks, and logical errors that traditional testing methods might miss.

The Evolution of Bug Detection Technology

The journey from manual code reviews to AI-powered bug detection represents one of the most significant advances in software development practices. Traditional bug detection relied heavily on human expertise, time-intensive testing procedures, and often discovered issues only after they had already caused problems in production environments. This reactive approach frequently resulted in costly fixes, user dissatisfaction, and significant delays in software delivery cycles.

Modern AI-powered bug detection systems change this process by using algorithms that analyze code patterns, identify anomalies, and predict likely failure points. These systems learn from large repositories of code examples, bug reports, and fix patterns, and so build up a progressively better model of which code structures and implementations tend to be problematic.

The transformation extends beyond simple error detection to encompass comprehensive code quality analysis, security vulnerability identification, and performance optimization recommendations. AI systems can now process large codebases far faster than a manual review could, producing detailed reports with a consistency that is hard for human reviewers to sustain over long review sessions.

Machine Learning Approaches to Code Analysis

The foundation of AI-powered bug detection lies in sophisticated machine learning models trained on massive datasets of source code, bug patterns, and remediation strategies. These models employ various techniques including natural language processing to understand code semantics, deep learning networks to identify complex patterns, and reinforcement learning to continuously improve their detection capabilities based on feedback and outcomes.

Neural networks specifically designed for code analysis can recognize subtle patterns that indicate potential bugs, such as unusual variable usage patterns, inconsistent error handling, or dangerous memory management practices. These systems excel at identifying issues that traditional static analysis tools often miss, particularly those involving complex interactions between different parts of the codebase or subtle logical errors that only manifest under specific conditions.

The machine learning approach enables these tools to adapt and improve over time, learning from each codebase they analyze and incorporating feedback from developers about the accuracy and usefulness of their suggestions. This continuous learning process results in increasingly sophisticated detection capabilities that become more valuable as they process more code and receive more feedback from development teams.

The combination of multiple AI approaches creates a robust ecosystem for maintaining code quality and preventing issues before they impact users.

Static Analysis Enhanced by Artificial Intelligence

Traditional static analysis tools have been valuable components of software development toolchains for decades, but they have inherent limitations in terms of accuracy, context understanding, and adaptability to different coding styles and frameworks. AI-enhanced static analysis tools address these limitations by incorporating machine learning models that can understand code context, reduce false positives, and provide more meaningful insights about potential issues.

These advanced systems can distinguish between legitimate code patterns and potentially problematic implementations by analyzing the broader context in which code operates. They model the relationships between different components, the intended behavior of various code sections, and the typical patterns used in specific frameworks or programming languages. This contextual understanding can substantially reduce the false positives that plague traditional static analysis tools.

The AI enhancement also enables these tools to provide more actionable feedback, suggesting specific remediation strategies rather than simply flagging potential issues. They can recommend code refactoring approaches, suggest alternative implementations, and even generate corrected code snippets that address identified problems while maintaining the original functionality and coding style.

Real-Time Bug Prevention During Development

One of the most significant advantages of AI-powered bug detection tools is their ability to provide real-time feedback during the development process, catching potential issues as code is being written rather than waiting for compilation or testing phases. These systems integrate seamlessly with popular integrated development environments, providing immediate visual feedback about potential problems and suggested improvements.

Real-time detection enables developers to address issues immediately while the context and intent behind the code are still fresh in their minds. This immediate feedback loop significantly reduces the cost and complexity of bug fixes, as problems are resolved before they become entangled with other code changes or propagate throughout the system architecture.

The real-time approach also serves as an educational tool, helping developers learn about best practices and common pitfalls as they work. Over time, this continuous feedback helps teams develop better coding habits and reduces the likelihood of introducing similar issues in future development efforts.

Advanced Pattern Recognition for Complex Bugs

Modern AI bug detection systems excel at identifying complex, multi-faceted bugs that traditional tools often miss. These sophisticated patterns might involve interactions between multiple components, race conditions in concurrent code, or subtle logical errors that only manifest under specific runtime conditions. Machine learning models trained on extensive bug databases can recognize these complex patterns and flag potential issues before they cause problems.

The pattern recognition capabilities extend to identifying security vulnerabilities, performance bottlenecks, and maintainability issues that might not cause immediate failures but could lead to significant problems over time. These systems can analyze code flow, data dependencies, and execution paths to identify potential issues that would be extremely difficult for human reviewers to catch consistently.

Advanced pattern recognition also enables these tools to understand the intent behind code implementations and identify discrepancies between what the code appears to be trying to accomplish and what it actually does. This capability is particularly valuable for catching logical errors and design flaws that might not trigger compilation errors but could cause incorrect behavior in production environments.

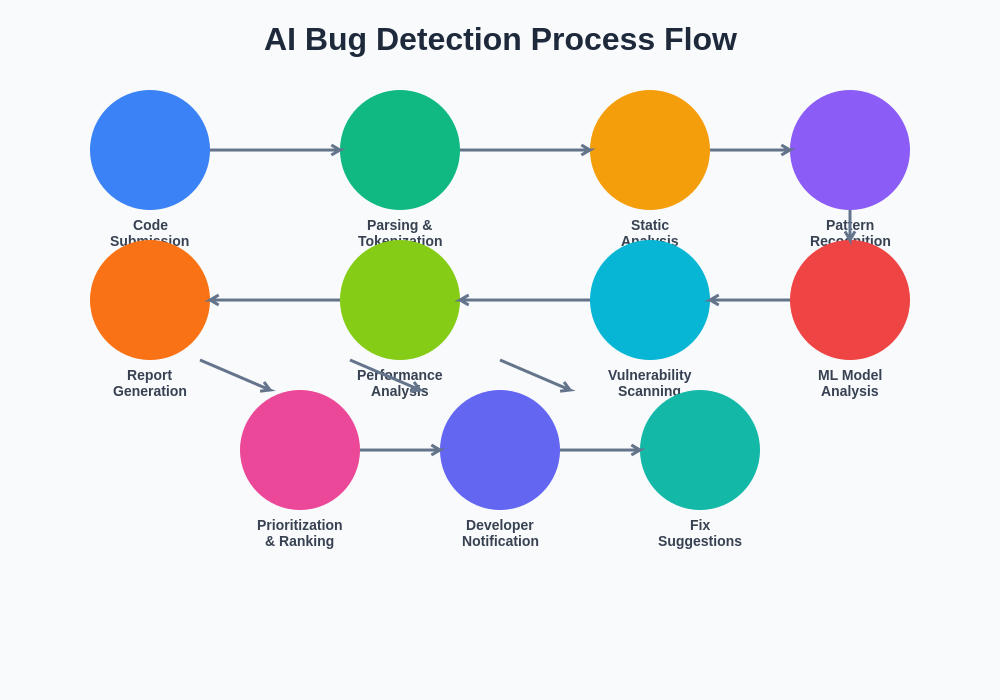

The systematic approach employed by AI-powered bug detection tools encompasses multiple analysis phases, from initial code parsing through advanced pattern recognition and final recommendation generation. This comprehensive process ensures that potential issues are identified at multiple levels of granularity and complexity.

Predictive Analytics for Bug Forecasting

Beyond identifying existing bugs, AI-powered tools are increasingly capable of predicting where bugs are most likely to occur in the future based on code complexity metrics, historical bug patterns, and development team practices. This predictive capability enables teams to proactively focus their testing and code review efforts on the areas most likely to contain issues.

Predictive analytics can identify code modules that are statistically more likely to contain bugs based on factors such as complexity, change frequency, developer experience, and historical bug density. This information helps teams allocate their quality assurance resources more effectively and implement additional safeguards in high-risk areas.
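As a rough illustration of how such a risk score might be assembled, the sketch below combines complexity, change frequency, and historical bug density into a single probability-like number with a logistic function. The `ModuleStats` fields, the weights, and the bias are all hypothetical values chosen for the example. A real tool would fit them to a project's own history and would likely include more signals, such as developer experience.

```python
import math
from dataclasses import dataclass


@dataclass
class ModuleStats:
    name: str
    cyclomatic_complexity: float
    commits_last_90_days: int
    past_bugs: int
    lines_of_code: int


# Hypothetical, hand-picked weights; a real model would learn these from data.
WEIGHTS = {"complexity": 0.05, "churn": 0.08, "bug_density": 0.6}
BIAS = -3.0


def bug_risk(module: ModuleStats) -> float:
    """Return a score in (0, 1); higher means more likely to contain bugs."""
    # Historical bugs per 1,000 lines of code.
    bug_density = module.past_bugs / max(module.lines_of_code, 1) * 1000
    z = (
        BIAS
        + WEIGHTS["complexity"] * module.cyclomatic_complexity
        + WEIGHTS["churn"] * module.commits_last_90_days
        + WEIGHTS["bug_density"] * bug_density
    )
    return 1.0 / (1.0 + math.exp(-z))


def rank_by_risk(modules: list[ModuleStats]) -> list[ModuleStats]:
    """Order modules from highest to lowest estimated risk."""
    return sorted(modules, key=bug_risk, reverse=True)
```

Ranking modules this way gives a team a simple ordered list for deciding where to spend review and testing time first.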

The forecasting capabilities also extend to predicting the types of bugs most likely to occur in specific code sections, enabling teams to design targeted testing strategies and implement appropriate defensive programming practices. This proactive approach significantly reduces the likelihood of bugs reaching production environments.

The rapid evolution of these technologies requires continuous learning and adaptation to maintain competitive advantages in software development.

Integration with Continuous Integration Pipelines

Modern AI bug detection tools seamlessly integrate with continuous integration and continuous deployment pipelines, automatically analyzing code changes as they are committed and providing immediate feedback about potential issues. This integration ensures that bug detection becomes an integral part of the development workflow rather than an afterthought or manual process.

The CI/CD integration enables automated quality gates that can prevent problematic code from progressing through the deployment pipeline. These systems can be configured to block deployments when critical issues are detected while allowing minor issues to be flagged for later resolution. This balanced approach maintains development velocity while ensuring that serious problems are addressed before reaching production.
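A quality gate of this kind can be sketched in a few lines. The example below assumes a hypothetical `Finding` record and a four-level `Severity` scale; neither comes from a specific tool. The gate blocks the pipeline when any finding reaches the configured threshold and defers everything below it.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    INFO = 1
    MINOR = 2
    MAJOR = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Finding:
    rule: str
    severity: Severity
    path: str


@dataclass(frozen=True)
class GateResult:
    passed: bool
    blocking: list
    deferred: list


def evaluate_gate(findings: list[Finding], block_at: Severity = Severity.CRITICAL) -> GateResult:
    """Split findings into those that block deployment and those flagged for later."""
    blocking = [f for f in findings if f.severity >= block_at]
    deferred = [f for f in findings if f.severity < block_at]
    return GateResult(passed=not blocking, blocking=blocking, deferred=deferred)
```

In a pipeline step, a failed gate would typically translate into a non-zero exit code, while the deferred list would be posted as a comment or report. Lowering `block_at` makes the gate stricter at the cost of slowing delivery.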

Integration with version control systems also enables these tools to analyze code changes in context, understanding how modifications might affect existing functionality and identifying potential regression issues. This differential analysis capability is particularly valuable for large codebases where the impact of changes might not be immediately obvious.
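One simple form of differential analysis is to report only the findings that fall on lines a change actually touched. The sketch below uses Python's standard `difflib` to compute those lines from two versions of a file. The helper names and the `(line, message)` shape of a finding are assumptions for illustration, and real tools usually work from the version control system's own diff and look beyond the changed lines.

```python
import difflib


def changed_line_numbers(old: str, new: str) -> set[int]:
    """Return 1-based line numbers in `new` that were added or modified."""
    matcher = difflib.SequenceMatcher(
        a=old.splitlines(), b=new.splitlines(), autojunk=False
    )
    changed = set()
    for tag, _i1, _i2, j1, j2 in matcher.get_opcodes():
        # 'replace' and 'insert' are the opcodes that introduce lines into `new`.
        if tag in ("replace", "insert"):
            changed.update(range(j1 + 1, j2 + 1))
    return changed


def findings_on_changed_lines(findings, old: str, new: str):
    """Keep only (line, message) findings located on lines touched by the change."""
    touched = changed_line_numbers(old, new)
    return [(line, message) for line, message in findings if line in touched]
```

Filtering this way keeps review feedback focused on the commit at hand. It does not catch regressions that a change causes in untouched code, which is where the deeper context analysis described above comes in.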

Language-Specific and Framework-Aware Detection

Different programming languages and frameworks have unique characteristics, common pitfalls, and best practices that affect how bugs manifest and should be detected. AI-powered bug detection tools are increasingly sophisticated in their understanding of language-specific nuances and framework-specific patterns, providing more accurate and relevant feedback for different technology stacks.

Language-specific detection capabilities include understanding memory management patterns in languages like C and C++, recognizing common concurrency issues in Java applications, identifying asynchronous programming pitfalls in JavaScript, and detecting type-related errors in dynamically typed languages like Python. This specialized knowledge enables more precise detection and reduces false positives.
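As one small example of a language-specific check, the sketch below looks for a well-known Python pitfall: a mutable literal used as a default argument, which is shared across calls. This is a different pitfall from the type-related errors mentioned above, and it is a plain rule rather than a learned detector. It is included only to show what "knowing the language" can look like in code, and the function name is an assumption.

```python
import ast

# Literal node types whose values are mutable and therefore risky as defaults.
MUTABLE_LITERALS = (ast.List, ast.Dict, ast.Set)


def find_mutable_defaults(source: str) -> list[tuple[str, int]]:
    """Return (function name, line) for each mutable literal used as a default."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        # kw_defaults holds None for keyword-only arguments without a default.
        defaults = list(node.args.defaults) + [
            d for d in node.args.kw_defaults if d is not None
        ]
        for default in defaults:
            if isinstance(default, MUTABLE_LITERALS):
                findings.append((node.name, default.lineno))
    return findings
```

The same check would be meaningless in a language without this evaluation rule for default arguments, which is why generic analyzers tend to miss it.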

Framework-aware detection extends this capability to understand the conventions and patterns of popular frameworks like React, Django, Spring, and others. These tools can identify framework-specific anti-patterns, security vulnerabilities, and performance issues that might not be apparent to generic analysis tools.

Security Vulnerability Detection and Prevention

Security vulnerabilities represent some of the most critical bugs that can affect software systems, and AI-powered detection tools have proven particularly effective at identifying potential security issues before they can be exploited. These systems can recognize patterns associated with common vulnerabilities such as SQL injection, cross-site scripting, buffer overflows, and authentication bypasses.

The AI approach to security vulnerability detection goes beyond simple pattern matching to understand the context and data flow that could lead to security issues. These systems can trace data from input sources through processing logic to output destinations, identifying potential points where malicious data could cause problems.
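The source-to-sink tracing described above is often called taint analysis. Here is a deliberately small sketch of it over top-level Python statements: values returned by a "source" call are marked tainted, taint spreads through assignments, and a finding is raised when a tainted value reaches a "sink" call. The `SOURCES` and `SINKS` sets are hypothetical, and the sketch ignores branches, functions, and sanitizers that a real analyzer must handle.

```python
import ast

SOURCES = {"input"}    # hypothetical: calls whose results are untrusted
SINKS = {"execute"}    # hypothetical: calls that must not receive untrusted data


def _call_name(call: ast.Call) -> str:
    """Return the bare function or method name of a call, or '' if unknown."""
    func = call.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute):
        return func.attr
    return ""


def _is_tainted(expr: ast.AST, tainted: set) -> bool:
    """True if the expression uses a tainted variable or calls a source."""
    for node in ast.walk(expr):
        if isinstance(node, ast.Name) and node.id in tainted:
            return True
        if isinstance(node, ast.Call) and _call_name(node) in SOURCES:
            return True
    return False


def find_tainted_sinks(source: str) -> list[int]:
    """Return line numbers where tainted data is passed to a sink call."""
    tainted = set()
    hits = []
    # Walk top-level statements in order so taint flows forward through the file.
    for stmt in ast.parse(source).body:
        if isinstance(stmt, ast.Assign) and _is_tainted(stmt.value, tainted):
            for target in stmt.targets:
                if isinstance(target, ast.Name):
                    tainted.add(target.id)
        for node in ast.walk(stmt):
            if isinstance(node, ast.Call) and _call_name(node) in SINKS:
                if any(_is_tainted(arg, tainted) for arg in node.args):
                    hits.append(node.lineno)
    return hits
```

On a script that builds a SQL string from `input()` and passes it to `cursor.execute(...)`, this reports the line of the `execute` call, while a call with a constant query is left alone.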

Advanced security-focused AI tools can also simulate attack scenarios and identify code paths that could be exploited by malicious actors. This proactive approach to security testing helps development teams address vulnerabilities before they become entry points for actual attacks.

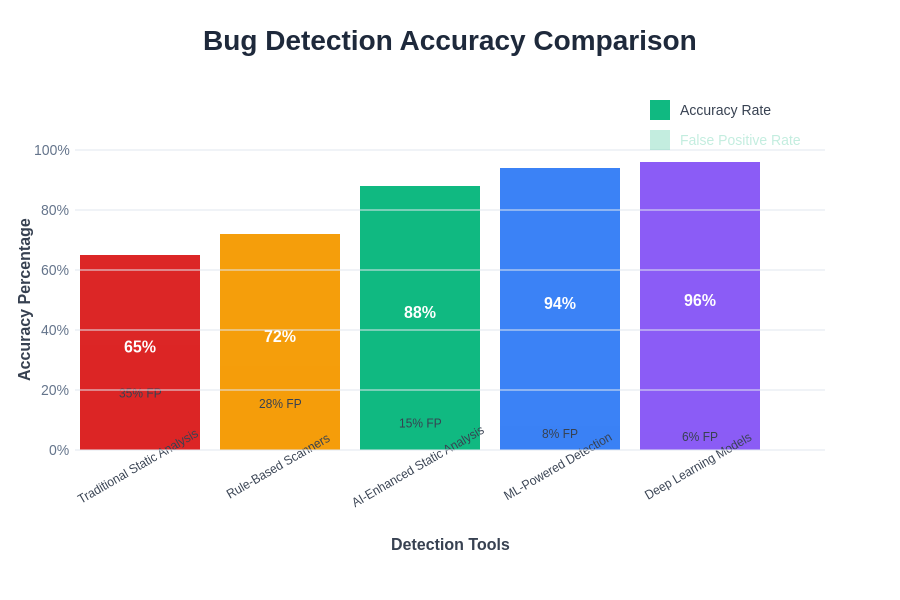

The comparative analysis of different bug detection approaches demonstrates the superior accuracy and reduced false positive rates achieved by AI-powered systems compared to traditional static analysis tools. This improvement in precision makes AI-based tools more practical for integration into daily development workflows.

Performance Bottleneck Identification

Performance-related bugs are often among the most difficult to detect through traditional testing methods, as they may only manifest under specific load conditions or with particular data sets. AI-powered bug detection tools excel at identifying potential performance bottlenecks by analyzing code patterns, algorithmic complexity, and resource usage patterns.

These systems can identify inefficient algorithms, unnecessary computational overhead, memory leaks, and database query optimization opportunities that could impact application performance. The AI analysis can simulate various execution scenarios and predict how code changes might affect system performance under different conditions.
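One crude signal for an inefficient algorithm is loop nesting depth, since deeply nested loops over the same data often indicate quadratic or worse behavior. The sketch below measures that depth with the standard `ast` module. The threshold of 2 and the function names are assumptions, the check does not count comprehensions, and it is only a heuristic: nesting depth alone does not prove a performance problem.

```python
import ast

LOOP_NODES = (ast.For, ast.While, ast.AsyncFor)


def max_loop_depth(node: ast.AST, current: int = 0) -> int:
    """Return the deepest level of loop nesting found beneath `node`."""
    deepest = current
    for child in ast.iter_child_nodes(node):
        # Entering a loop increases the nesting level for everything inside it.
        level = current + 1 if isinstance(child, LOOP_NODES) else current
        deepest = max(deepest, max_loop_depth(child, level))
    return deepest


def find_deeply_nested_loops(source: str, threshold: int = 2) -> list[tuple[str, int]]:
    """Return (function name, depth) for functions at or above the threshold."""
    results = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.FunctionDef):
            depth = max_loop_depth(node)
            if depth >= threshold:
                results.append((node.name, depth))
    return results
```

A learned model would weigh this signal together with others, such as the size of the collections involved, before deciding whether the code deserves a warning.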

Performance analysis capabilities also extend to identifying scalability issues that might not be apparent during initial development but could become problematic as applications grow and user loads increase. This forward-looking analysis helps teams design more robust and scalable solutions from the outset.

Code Quality Metrics and Technical Debt Analysis

AI-powered bug detection tools provide comprehensive insights into code quality metrics and technical debt accumulation, helping teams understand not just where bugs exist but also where code quality issues might lead to future problems. These systems can analyze factors such as code complexity, maintainability, test coverage, and adherence to best practices.
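Code complexity is one of the easier metrics to compute directly. The sketch below approximates cyclomatic complexity for a Python function by counting decision points in its syntax tree. It is a simplified version of the metric, since it ignores comprehensions and `match` statements, for example, and the function name is chosen for illustration.

```python
import ast

# Node types that each add one independent path through the code.
BRANCH_NODES = (ast.If, ast.IfExp, ast.For, ast.AsyncFor, ast.While, ast.ExceptHandler)


def cyclomatic_complexity(func: ast.AST) -> int:
    """Approximate cyclomatic complexity: 1 plus the number of decision points."""
    score = 1
    for node in ast.walk(func):
        if isinstance(node, BRANCH_NODES):
            score += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two extra short-circuit branches.
            score += len(node.values) - 1
    return score


def complexity_report(source: str) -> dict[str, int]:
    """Map each function name in the source to its approximate complexity."""
    return {
        node.name: cyclomatic_complexity(node)
        for node in ast.walk(ast.parse(source))
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    }
```

Tracking this number per function over time is one way to see where technical debt is accumulating before it shows up as bugs.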

Technical debt analysis helps teams prioritize refactoring efforts by identifying areas where poor code quality is most likely to lead to bugs, maintenance difficulties, or future development bottlenecks. This strategic approach to code quality management enables more effective resource allocation and long-term project planning.

The metrics provided by these tools also serve as valuable feedback for development teams, helping them understand the impact of their coding practices on overall system quality and reliability. This feedback loop encourages continuous improvement in coding standards and practices.

Automated Test Case Generation

Beyond identifying existing bugs, AI-powered tools are increasingly capable of automatically generating test cases designed to expose potential issues and verify correct behavior. These generated tests can cover edge cases, boundary conditions, and unusual input scenarios that human testers might not consider.

The test generation capabilities leverage understanding of code structure, data flow, and potential failure modes to create comprehensive test suites that provide better coverage than manually written tests alone. These automated tests can continuously evolve as the codebase changes, ensuring that testing remains comprehensive and relevant.
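A classic, non-AI building block for this kind of generation is boundary-value analysis: given the valid range of each integer input, produce values at, just inside, and just outside each limit. The sketch below shows that technique only. The function names and the `{name: (low, high)}` input format are assumptions, and an AI-driven generator would infer the ranges and failure modes from the code rather than being told them.

```python
from itertools import product


def boundary_values(low: int, high: int) -> list[int]:
    """Return values around both ends of an inclusive integer range."""
    candidates = {low - 1, low, low + 1, high - 1, high, high + 1}
    return sorted(candidates)


def generate_cases(ranges: dict[str, tuple[int, int]]) -> list[dict[str, int]]:
    """Build every combination of boundary values for the named parameters."""
    names = list(ranges)
    value_lists = [boundary_values(*ranges[name]) for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]
```

Because the number of combinations multiplies with each parameter, generators usually prune or sample this space rather than running every case.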

Generated test cases also serve as documentation of expected behavior and edge cases, providing valuable insights for future development efforts and helping new team members understand the complexities and requirements of different code sections.

Collaborative Intelligence and Human-AI Partnership

The most effective AI-powered bug detection implementations recognize that the best results come from combining artificial intelligence capabilities with human expertise and judgment. These systems are designed to augment human capabilities rather than replace human developers, providing intelligent assistance while leaving final decisions about code changes to experienced professionals.

Collaborative intelligence approaches enable developers to provide feedback about the accuracy and usefulness of AI suggestions, which helps improve the system’s future performance. This feedback loop creates a continuous improvement cycle that makes the tools more valuable over time.
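A feedback loop like this can be as simple as tracking how often developers accept each rule's suggestions and muting rules that are usually rejected. The sketch below does that with a smoothed acceptance rate. The `RuleFeedback` class, the 0.3 cutoff, and the smoothing choice are illustrative assumptions, and a learning-based tool would feed the same signal back into model training instead.

```python
from collections import defaultdict


class RuleFeedback:
    """Track developer verdicts per rule and decide which rules to keep reporting."""

    def __init__(self, min_precision: float = 0.3):
        self.accepted = defaultdict(int)
        self.rejected = defaultdict(int)
        self.min_precision = min_precision

    def record(self, rule: str, useful: bool) -> None:
        """Store one developer verdict on a finding from `rule`."""
        if useful:
            self.accepted[rule] += 1
        else:
            self.rejected[rule] += 1

    def precision(self, rule: str) -> float:
        """Smoothed share of useful findings; rules with no feedback score 0.5."""
        accepted, rejected = self.accepted[rule], self.rejected[rule]
        # Add-one smoothing keeps a single early rejection from muting a rule.
        return (accepted + 1) / (accepted + rejected + 2)

    def should_report(self, rule: str) -> bool:
        """Report a rule only while its estimated precision meets the cutoff."""
        return self.precision(rule) >= self.min_precision
```

With the default cutoff, a rule that is rejected four times in a row stops being reported, while a rule accepted three times out of four keeps its place.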

The partnership between human expertise and AI capabilities also enables more nuanced decision-making about which issues to prioritize, how to implement fixes, and when to accept calculated risks. This balanced approach maintains development agility while improving overall code quality.

The comprehensive ecosystem of AI-powered development tools creates a synergistic environment where bug detection, code generation, testing automation, and quality assurance work together to enhance overall software development efficiency and reliability.

Implementation Strategies and Best Practices

Successful implementation of AI-powered bug detection tools requires careful planning, appropriate tool selection, and thoughtful integration with existing development workflows. Teams need to consider factors such as technology stack compatibility, team size, project complexity, and organizational culture when selecting and deploying these tools.

Best practices for implementation include starting with pilot projects to evaluate tool effectiveness, providing adequate training for development teams, establishing clear guidelines for responding to AI-generated feedback, and continuously monitoring and adjusting tool configurations based on results and team feedback.

The implementation process should also include establishing metrics for measuring the effectiveness of AI bug detection efforts, such as reduction in production bugs, improved code quality scores, and decreased time spent on debugging activities. These metrics help demonstrate value and guide future improvements.
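Two of these metrics are straightforward to compute once the counts are collected. The sketch below shows a percentage reduction in production bugs between two periods, and the precision of the tool's findings after triage. The function names are assumptions, and any numbers passed in should come from a team's own tracking rather than from this article.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage drop from `before` to `after`; negative if the value rose."""
    if before <= 0:
        raise ValueError("'before' must be positive to compute a reduction")
    return (before - after) / before * 100


def detection_precision(true_positives: int, false_positives: int) -> float:
    """Share of reported findings that turned out to be real issues."""
    total = true_positives + false_positives
    return true_positives / total if total else 0.0
```

For instance, going from 40 production bugs in one quarter to 30 in the next is a 25 percent reduction, and 8 confirmed issues out of 10 reported findings is a precision of 0.8. Both figures here are made up purely to show the arithmetic.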

Future Trends and Technological Advancement

The field of AI-powered bug detection continues to evolve rapidly, with emerging technologies promising even more sophisticated capabilities in the near future. Advances in large language models, code understanding, and automated reasoning are expected to further improve the accuracy and usefulness of these tools.

Future developments may include more sophisticated understanding of business logic and user intent, enabling AI systems to identify not just technical bugs but also functional discrepancies between intended behavior and actual implementation. Integration with requirements management and specification systems could enable even more comprehensive analysis.

The convergence of AI bug detection with other development tools such as automated code generation, intelligent refactoring, and predictive project management promises to create increasingly sophisticated development environments that can significantly enhance productivity while maintaining high quality standards.

Measuring Return on Investment and Impact

Organizations implementing AI-powered bug detection tools need to establish clear metrics for measuring their impact and return on investment. Key performance indicators might include reduction in production bugs, decreased debugging time, improved code quality metrics, faster development cycles, and enhanced team productivity.

The measurement approach should consider both quantitative metrics such as bug detection rates and qualitative factors such as developer satisfaction, code maintainability improvements, and reduced stress levels associated with production issues. This comprehensive evaluation provides a complete picture of the tools’ value.

Long-term impact assessment should also consider the educational benefits of AI-powered tools, as development teams learn from continuous feedback and improve their coding practices over time. This compound effect can result in sustained improvements that extend beyond the immediate benefits of automated bug detection.

Conclusion and Strategic Considerations

AI-powered bug detection represents a fundamental shift in how software development teams approach quality assurance and error prevention. These tools offer unprecedented capabilities for identifying potential issues before they impact users, while providing valuable insights that help developers improve their skills and practices over time.

The successful adoption of AI bug detection tools requires thoughtful integration with existing workflows, appropriate training and support for development teams, and continuous evaluation and refinement of tool configurations and processes. Organizations that embrace these technologies thoughtfully and strategically are likely to see significant improvements in code quality, development efficiency, and overall software reliability.

As these technologies continue to evolve and mature, they will become increasingly essential components of modern software development toolchains, enabling teams to build more reliable, secure, and maintainable software while reducing the time and effort required for quality assurance activities.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The effectiveness of AI-powered bug detection tools may vary depending on specific use cases, technology stacks, and implementation approaches. Organizations should conduct thorough evaluations and consider their unique requirements when selecting and implementing these tools. The field of AI-powered software development is rapidly evolving, and readers should verify current capabilities and limitations of specific tools before making implementation decisions.