Deploying an AI model to production is the start of the work, not the end: the model then needs continuous monitoring and systematic testing to keep performing well. AI regression testing addresses the challenges specific to machine learning systems. Traditional software testing has to be extended to handle probabilistic outputs, data drift, and real-world inputs that change over time and can significantly alter model behavior.

The complexity of modern AI systems demands sophisticated testing strategies that go beyond conventional software validation techniques to encompass statistical analysis, performance benchmarking, and continuous monitoring systems that can detect subtle degradations in model performance before they impact end-users or business outcomes.

Understanding AI Regression Testing Fundamentals

AI regression testing differs from traditional software regression testing because the behavior of a machine learning model is statistical rather than exactly specified. Conventional software is expected to return the same output for a given input, so a test can assert that exact output. A model's outputs can vary between training runs and, for sampling-based models, between calls, and its aggregate quality can shift over time through data drift, model decay, infrastructure changes, and evolving user behavior. Testing therefore has to account for statistical significance and confidence intervals, and it has to use metrics that capture both accuracy and consistency across diverse scenarios and time periods.

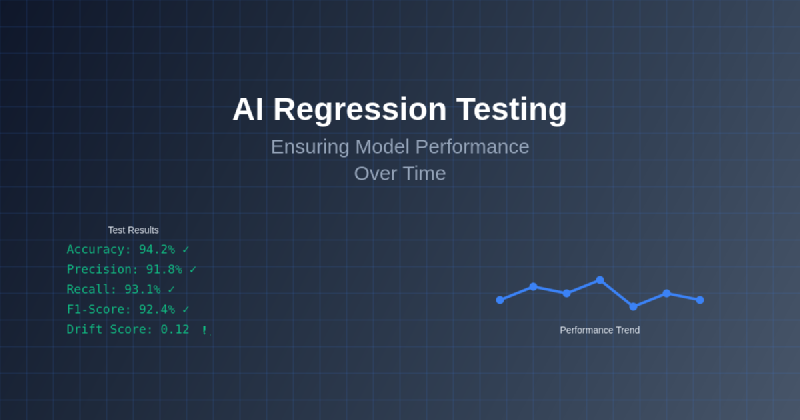

The foundation of effective AI regression testing lies in establishing comprehensive baseline performance metrics that serve as reference points for ongoing comparison and evaluation. These baselines must encompass not only primary performance indicators such as accuracy, precision, and recall, but also secondary metrics that capture model behavior under various conditions, edge cases, and stress scenarios. The establishment of robust baselines requires careful consideration of data representativeness, temporal consistency, and the inclusion of challenging test cases that stress-test model capabilities across the full spectrum of expected inputs and scenarios.
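As a minimal sketch of the baseline comparison described above, the following computes accuracy, precision, and recall for binary labels and lists every metric that has dropped below a stored baseline. The function names and the 0.02 tolerance are assumptions made for illustration, not values prescribed by any particular framework.

```python
from typing import Dict, List, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Compute accuracy, precision, and recall for binary labels (1 = positive)."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        # Report 0.0 when the denominator is empty rather than dividing by zero.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


def find_regressions(
    baseline: Dict[str, float],
    current: Dict[str, float],
    tolerance: float = 0.02,  # assumed allowance for run-to-run noise
) -> List[str]:
    """Return the names of metrics that fell more than `tolerance` below baseline."""
    return sorted(
        name
        for name, base_value in baseline.items()
        if current.get(name, 0.0) < base_value - tolerance
    )


if __name__ == "__main__":
    baseline = {"accuracy": 0.80, "precision": 0.90, "recall": 0.60}
    current = classification_metrics([1, 1, 0, 0], [1, 0, 0, 0])
    print(find_regressions(baseline, current))
```

A fixed tolerance is the simplest possible rule; the statistical tests discussed later in this article are a better fit when evaluation sets are small or noisy.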

Model Performance Drift Detection Strategies

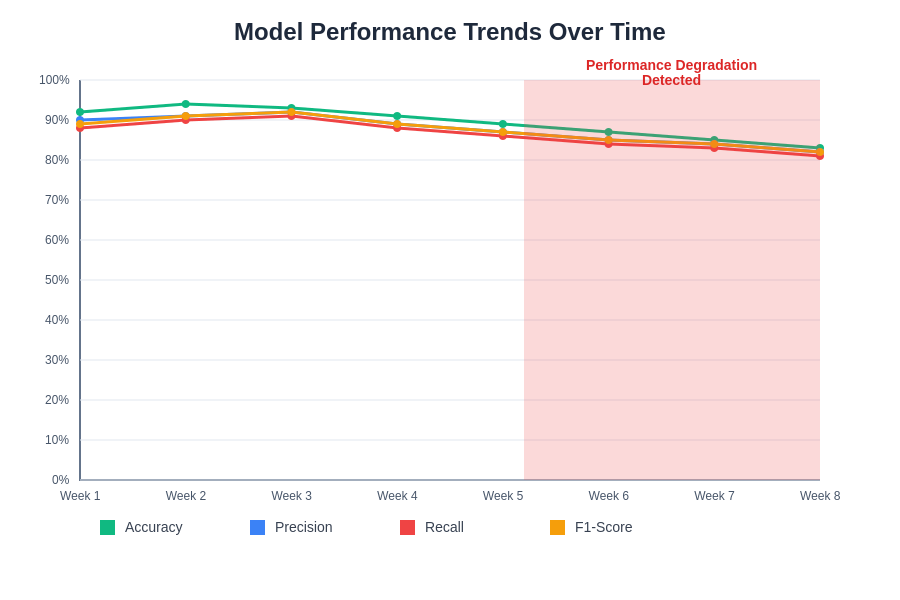

Performance drift represents one of the most insidious challenges in AI system maintenance, as it often occurs gradually and may not be immediately apparent through casual observation or basic monitoring systems. Effective drift detection requires sophisticated statistical analysis techniques that can identify subtle changes in model behavior patterns, output distributions, and prediction confidence levels that may indicate underlying performance degradation or shifts in data characteristics that affect model reliability.

The implementation of comprehensive drift detection systems involves multiple layers of analysis, including statistical tests for distribution changes, performance metric trending analysis, and correlation studies that examine relationships between input characteristics and output quality. These systems must be designed to distinguish between normal operational variation and significant performance degradation, requiring careful calibration of sensitivity thresholds and alert mechanisms that balance early warning capabilities with acceptable false positive rates that do not overwhelm monitoring personnel with spurious alerts.
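One common statistical check for distribution change is the Population Stability Index (PSI), which compares binned frequencies of a reference sample and a current sample. The sketch below is an illustration under stated assumptions: equal-width bins taken from the reference sample, a small floor for empty bins, and the 0.1 and 0.25 cut-offs that are a widely used rule of thumb rather than a universal standard. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """PSI between two samples, using equal-width bins over the reference range."""
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample must contain more than one distinct value")
    width = (hi - lo) / bins

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    ref = bin_fractions(reference)
    cur = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_level(psi: float, moderate: float = 0.1, significant: float = 0.25) -> str:
    """Map a PSI value to a coarse label; the thresholds are assumed rules of thumb."""
    if psi >= significant:
        return "significant"
    if psi >= moderate:
        return "moderate"
    return "stable"


if __name__ == "__main__":
    reference = [i / 100 for i in range(100)]
    shifted = [0.5 + i / 200 for i in range(100)]
    print(drift_level(population_stability_index(reference, shifted)))
```

The same function can be applied to an input feature, to model scores, or to prediction confidence, which covers the output-distribution checks mentioned above.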

The integration of intelligent analysis tools enables more nuanced understanding of model behavior patterns and facilitates the development of targeted interventions that address specific performance issues before they escalate into system-wide problems.

Comprehensive Testing Framework Architecture

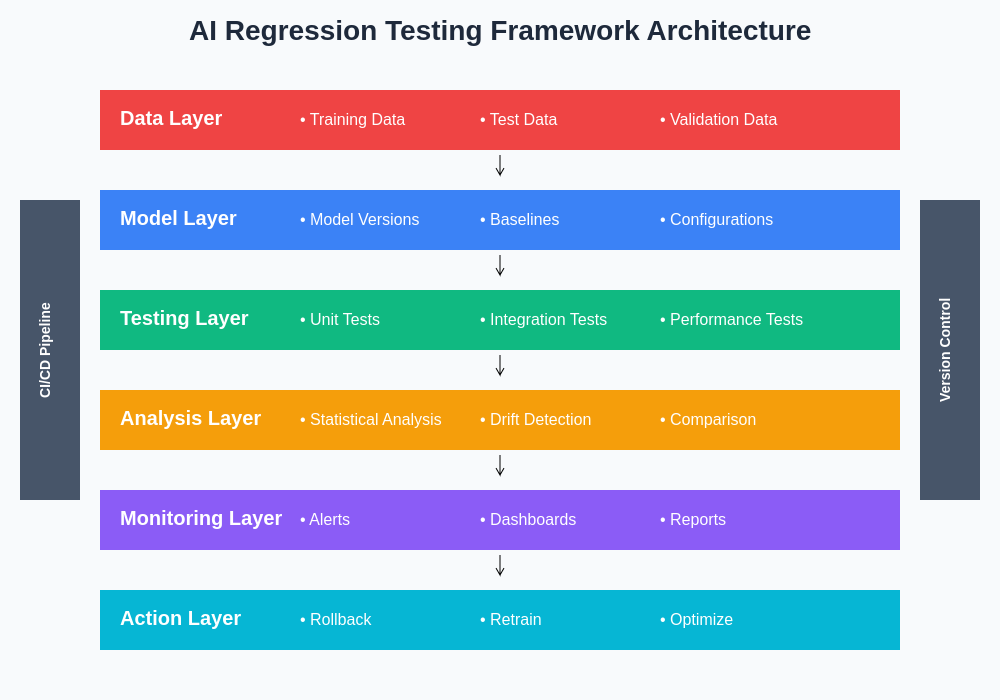

The architecture of an effective AI regression testing framework must address multiple dimensions of model performance while maintaining scalability, efficiency, and reliability across diverse deployment environments. This architecture typically encompasses automated test execution systems, comprehensive data management pipelines, performance benchmarking tools, and alert mechanisms that provide timely notification of potential issues while minimizing false positives and operational overhead for development and operations teams.

A robust testing framework incorporates multiple testing methodologies including unit tests for individual model components, integration tests that verify proper functioning across system boundaries, performance tests that validate efficiency and throughput requirements, and acceptance tests that ensure continued alignment with business objectives and user expectations. The framework must also include mechanisms for test data management, version control integration, and result analysis tools that facilitate rapid identification and resolution of performance issues.

The systematic approach to AI regression testing requires careful orchestration of multiple testing phases, each designed to evaluate a specific aspect of model performance or system integration. Running these phases in a defined order makes it more likely that issues are caught before they reach production systems or end users.

Data Quality and Input Validation Protocols

Data quality represents a fundamental prerequisite for reliable AI model performance, and regression testing must include comprehensive validation protocols that ensure input data maintains expected characteristics and quality standards over time. Data drift, quality degradation, and schema changes can significantly impact model performance even when the underlying algorithms remain unchanged, making data validation an essential component of any comprehensive regression testing strategy.

Effective data validation protocols encompass multiple dimensions including completeness checks that verify all expected data elements are present, consistency validations that ensure data relationships and constraints are maintained, accuracy assessments that validate data correctness against known benchmarks, and timeliness evaluations that confirm data freshness and relevance for current operational requirements. These protocols must be automated and integrated into continuous integration pipelines to provide immediate feedback when data quality issues are detected.
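The four kinds of check listed above can be sketched as a single record validator. The schema (`user_id`, `amount`, `event_time`), the non-negative constraint on `amount`, and the 24-hour freshness window are all hypothetical and stand in for whatever a real pipeline would define.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List, Mapping

# Hypothetical schema: field name -> expected Python type.
REQUIRED_FIELDS: Dict[str, type] = {
    "user_id": int,
    "amount": float,
    "event_time": datetime,
}


def validate_record(
    record: Mapping[str, Any],
    now: datetime,
    max_age: timedelta = timedelta(hours=24),  # assumed freshness window
) -> List[str]:
    """Return a list of problems found in one input record (empty means valid)."""
    problems: List[str] = []
    for field, expected_type in REQUIRED_FIELDS.items():
        value = record.get(field)
        if value is None:
            problems.append(f"missing:{field}")  # completeness
        elif not isinstance(value, expected_type):
            problems.append(f"type:{field}")  # consistency with the schema
    amount = record.get("amount")
    if isinstance(amount, float) and amount < 0:
        problems.append("range:amount")  # accuracy against a known constraint
    event_time = record.get("event_time")
    if isinstance(event_time, datetime) and now - event_time > max_age:
        problems.append("stale:event_time")  # timeliness
    return problems


if __name__ == "__main__":
    now = datetime(2024, 1, 2, tzinfo=timezone.utc)
    record = {
        "user_id": "1",
        "amount": -3.0,
        "event_time": datetime(2023, 12, 30, tzinfo=timezone.utc),
    }
    print(validate_record(record, now))
```

In a continuous integration job, a non-empty result for more than some agreed fraction of records would fail the build before any model evaluation runs.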

The implementation of robust data quality monitoring systems requires sophisticated anomaly detection capabilities that can identify subtle changes in data distributions, detect outliers and unusual patterns, and flag potential data corruption or schema evolution issues that might affect model performance. These systems must be calibrated to account for normal operational variation while maintaining sensitivity to significant changes that warrant investigation and potential remediation actions.

Performance Baseline Establishment and Maintenance

The establishment of reliable performance baselines represents a critical foundation for effective regression testing, requiring careful selection of representative datasets, comprehensive metric collection, and systematic documentation of expected performance characteristics under various operational conditions. These baselines must be regularly updated to reflect evolving operational requirements, data characteristics, and performance expectations while maintaining historical context that enables trend analysis and performance comparison over extended time periods.

Baseline establishment involves extensive testing across diverse scenarios including normal operating conditions, edge cases, stress conditions, and failure scenarios that might impact model performance. The testing process must generate comprehensive performance profiles that capture not only primary accuracy metrics but also secondary characteristics such as prediction confidence distributions, processing latency patterns, and resource utilization profiles that affect system scalability and operational efficiency.
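A performance profile of this kind can be as simple as a small dictionary of summary statistics saved next to the model. The sketch below assumes latencies in milliseconds, confidences in the range 0 to 1, a nearest-rank percentile, and a 0.6 cut-off for "low confidence"; all of these are illustrative choices.

```python
import math
import statistics
from typing import Dict, Sequence


def percentile(values: Sequence[float], q: int) -> float:
    """Nearest-rank percentile for an integer q between 1 and 100."""
    if not values:
        raise ValueError("values must be non-empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def build_profile(
    latencies_ms: Sequence[float],
    confidences: Sequence[float],
    low_confidence_cutoff: float = 0.6,  # assumed cut-off
) -> Dict[str, float]:
    """Summarize secondary characteristics to store alongside accuracy metrics."""
    low = sum(1 for c in confidences if c < low_confidence_cutoff)
    return {
        "latency_p50_ms": percentile(latencies_ms, 50),
        "latency_p95_ms": percentile(latencies_ms, 95),
        "mean_confidence": statistics.fmean(confidences),
        "low_confidence_rate": low / len(confidences),
    }


if __name__ == "__main__":
    profile = build_profile(list(range(1, 101)), [0.9, 0.5, 0.7, 0.3])
    print(profile)
```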

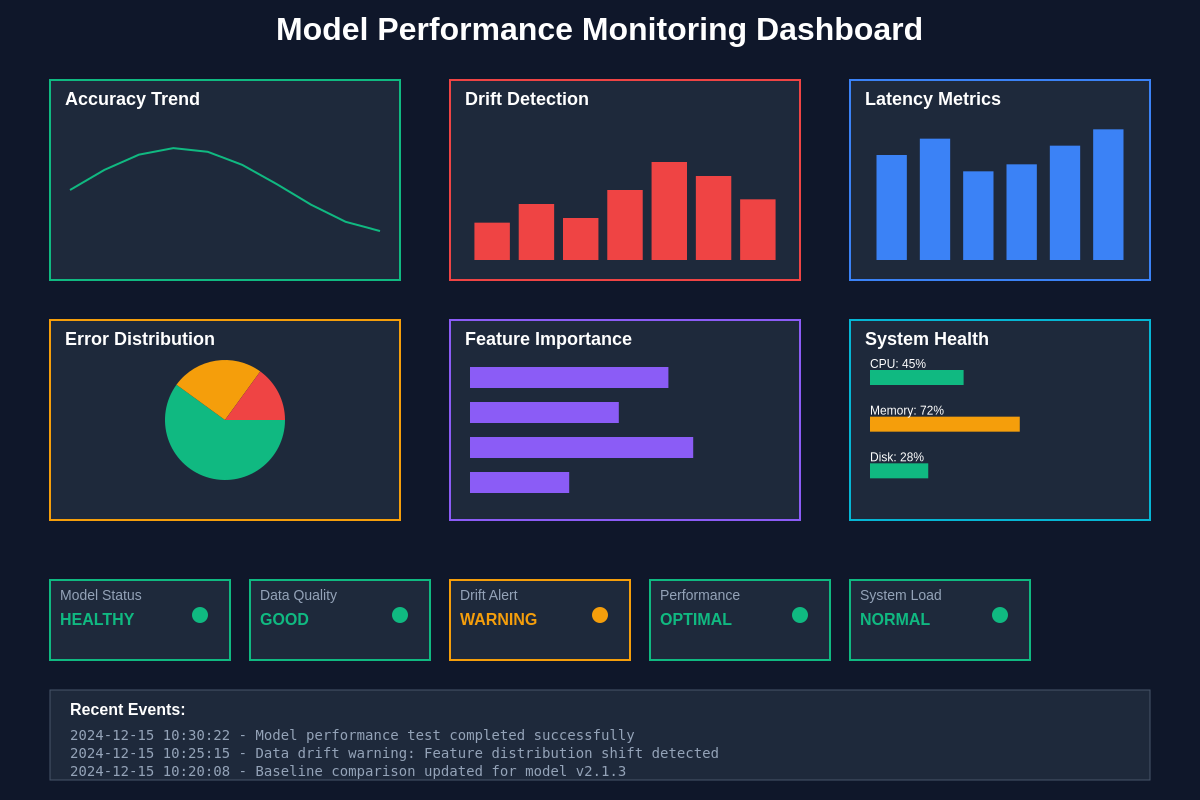

Continuous monitoring of model performance against established baselines enables early detection of performance degradation and provides actionable insights for maintaining optimal system performance. The visualization of key performance indicators facilitates rapid assessment of model health and supports data-driven decision-making regarding maintenance and optimization priorities.

Automated Testing Pipeline Implementation

The implementation of automated testing pipelines represents a crucial capability for maintaining consistent and reliable regression testing processes across diverse deployment environments and operational scales. These pipelines must integrate seamlessly with existing development workflows while providing comprehensive coverage of model performance validation, data quality assessment, and system integration verification without introducing excessive overhead or complexity that impedes development velocity.

Automated testing pipelines typically encompass multiple stages including data preparation and validation, model execution and performance measurement, result analysis and comparison, and alert generation for significant deviations from expected performance characteristics. Each stage must be designed for reliability, efficiency, and maintainability while providing detailed logging and audit trails that support troubleshooting and performance analysis activities.
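The stage structure described above can be sketched as an ordered list of named functions that share a context dictionary, with the runner stopping at the first failure and logging what happened. The stage names, context keys, and `run_pipeline` function are hypothetical; a real pipeline would typically delegate this to a CI system or workflow engine.

```python
import logging
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

logger = logging.getLogger("regression_pipeline")

Context = Dict[str, Any]
Stage = Tuple[str, Callable[[Context], None]]


@dataclass
class PipelineResult:
    passed: bool = True
    completed_stages: List[str] = field(default_factory=list)
    failed_stage: str = ""


def run_pipeline(stages: List[Stage], context: Context) -> PipelineResult:
    """Run stages in order; stop and record the stage name on the first failure."""
    result = PipelineResult()
    for name, stage in stages:
        try:
            stage(context)
        except Exception as exc:  # any stage error counts as a pipeline failure
            logger.error("stage %s failed: %s", name, exc)
            result.passed = False
            result.failed_stage = name
            return result
        logger.info("stage %s completed", name)
        result.completed_stages.append(name)
    return result


def validate_data(ctx: Context) -> None:
    if not ctx["rows"]:
        raise ValueError("no evaluation rows")


def measure(ctx: Context) -> None:
    rows = ctx["rows"]
    ctx["accuracy"] = sum(r["label"] == r["prediction"] for r in rows) / len(rows)


def compare(ctx: Context) -> None:
    if ctx["accuracy"] < ctx["baseline_accuracy"] - ctx["tolerance"]:
        raise AssertionError(f"accuracy {ctx['accuracy']:.3f} is below baseline")


STAGES: List[Stage] = [("validate", validate_data), ("measure", measure), ("compare", compare)]
```

Because each stage only reads and writes the shared context, stages can be tested in isolation and the log lines double as a simple audit trail.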

The design of effective automated testing systems requires careful consideration of scalability requirements, resource constraints, and integration points with existing infrastructure and tooling ecosystems. These systems must support parallel execution, efficient resource utilization, and graceful degradation under high load conditions while maintaining accuracy and reliability of test results across diverse operational scenarios and environments.

Statistical Analysis and Significance Testing

The probabilistic nature of AI models necessitates sophisticated statistical analysis techniques that can distinguish between meaningful performance changes and normal operational variation that occurs due to the inherent randomness of machine learning systems. Effective regression testing must incorporate rigorous statistical methods including hypothesis testing, confidence interval analysis, and significance assessment that provide objective measures of performance changes and their practical implications for system operation and user experience.
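One way to make "meaningful change versus normal variation" concrete is a permutation test on per-example correctness. The sketch below is one possible choice, not the only valid test: it assumes each sample is a list of 0/1 outcomes, treats the two evaluation sets as independent, and uses a fixed seed so that repeated runs give the same p-value. The function name and defaults are hypothetical.

```python
import random
from typing import Sequence


def permutation_p_value(
    baseline: Sequence[int],
    candidate: Sequence[int],
    n_permutations: int = 2000,
    seed: int = 0,
) -> float:
    """Two-sided p-value for the difference in mean correctness between two samples."""
    if not baseline or not candidate:
        raise ValueError("both samples must be non-empty")
    rng = random.Random(seed)
    n_base = len(baseline)
    observed = abs(sum(baseline) / n_base - sum(candidate) / len(candidate))
    pooled = list(baseline) + list(candidate)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign outcomes to the two groups at random
        left, right = pooled[:n_base], pooled[n_base:]
        diff = abs(sum(left) / len(left) - sum(right) / len(right))
        if diff >= observed - 1e-12:  # small slack guards against float ties
            extreme += 1
    # The +1 terms keep the estimate away from an impossible p-value of zero.
    return (extreme + 1) / (n_permutations + 1)


if __name__ == "__main__":
    old_model = [1] * 180 + [0] * 20   # 90% correct
    new_model = [1] * 120 + [0] * 80   # 60% correct
    print(permutation_p_value(old_model, new_model))
```

A small p-value says the drop is unlikely to be noise; whether the drop matters in practice is a separate question about effect size.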

Statistical analysis frameworks for AI regression testing must account for sample size requirements, statistical power, effect size estimation, and corrections for multiple comparisons. Without such corrections, checking many performance metrics across many operational scenarios inflates the number of false discoveries. These frameworks must be integrated into automated testing systems while remaining accessible to technical personnel who may not have an extensive statistics background.
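To illustrate a multiple-comparison correction, here is a short sketch of the Benjamini-Hochberg procedure, which controls the false discovery rate across a set of p-values. The metric names and p-values in the example are invented for illustration.

```python
from typing import Dict, List


def benjamini_hochberg(p_values: Dict[str, float], alpha: float = 0.05) -> List[str]:
    """Return the metric names whose p-values are significant at FDR level alpha."""
    ranked = sorted(p_values.items(), key=lambda item: item[1])
    m = len(ranked)
    cutoff = 0
    for rank, (_, p) in enumerate(ranked, start=1):
        # Find the largest rank whose p-value is under its scaled threshold.
        if p <= alpha * rank / m:
            cutoff = rank
    return [name for name, _ in ranked[:cutoff]]


if __name__ == "__main__":
    # Hypothetical p-values from four separate regression checks.
    results = {"accuracy": 0.001, "recall": 0.02, "latency": 0.04, "precision": 0.30}
    print(benjamini_hochberg(results))
```

In this example an uncorrected 0.05 threshold would flag three metrics, while the corrected procedure flags two, because 0.04 exceeds its rank-scaled threshold of 0.0375.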

The implementation of robust statistical analysis capabilities requires careful selection of appropriate statistical tests, proper handling of data dependencies and temporal correlations, and clear interpretation guidelines that translate statistical results into actionable insights for development and operations teams. These capabilities must support both real-time analysis for immediate alert generation and retrospective analysis for trend identification and performance optimization planning.

Continuous Integration and Deployment Considerations

The integration of AI regression testing into continuous integration and deployment pipelines presents unique challenges that require specialized approaches to balance testing comprehensiveness with deployment velocity and resource efficiency. Traditional CI/CD practices must be augmented with AI-specific considerations including model versioning, performance benchmarking, and validation procedures that ensure new deployments maintain or improve upon existing performance characteristics.

Effective CI/CD integration requires the development of efficient testing strategies that provide adequate performance validation within acceptable time and resource constraints while maintaining sufficient coverage to detect potential regressions and performance issues. This often involves the implementation of tiered testing approaches that combine rapid smoke tests for basic functionality verification with more comprehensive regression test suites that execute during off-peak periods or in parallel with production deployments.
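A tiered approach can be sketched as a selection function that picks test suites by trigger and time budget. The tier numbering, the trigger names (`commit`, `merge`, `nightly`), and the duration estimates are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Suite:
    name: str
    tier: int  # 1 = smoke, 2 = core regression, 3 = full and long-running
    est_minutes: float


# Hypothetical mapping from pipeline trigger to the highest tier it may run.
TRIGGER_MAX_TIER: Dict[str, int] = {"commit": 1, "merge": 2, "nightly": 3}


def select_suites(suites: List[Suite], trigger: str, budget_minutes: float) -> List[Suite]:
    """Pick suites allowed for this trigger, cheapest tiers first, within the budget."""
    max_tier = TRIGGER_MAX_TIER[trigger]
    chosen: List[Suite] = []
    used = 0.0
    for suite in sorted(suites, key=lambda s: (s.tier, s.est_minutes)):
        if suite.tier > max_tier:
            continue
        if used + suite.est_minutes > budget_minutes:
            continue  # skip suites that would exceed the time budget
        chosen.append(suite)
        used += suite.est_minutes
    return chosen


if __name__ == "__main__":
    suites = [Suite("full", 3, 120), Suite("smoke", 1, 2), Suite("core", 2, 15)]
    print([s.name for s in select_suites(suites, "merge", 30)])
```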

The design of AI-aware CI/CD systems must account for the computational requirements of model training and evaluation, the need for representative test data management, and the complexity of performance metric collection and analysis. These systems must support rollback capabilities, canary deployment strategies, and gradual performance validation procedures that minimize risk while enabling rapid iteration and deployment of model improvements.
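A canary gate can be reduced to a small decision function that compares the canary's error rate with the current production rate. This is a deliberately simple sketch: the 0.01 allowed increase and the 500-request minimum are assumed values, and a production gate would usually add a significance test on top of the raw difference.

```python
def canary_decision(
    baseline_errors: int,
    baseline_total: int,
    canary_errors: int,
    canary_total: int,
    max_increase: float = 0.01,  # assumed tolerated rise in error rate
    min_samples: int = 500,      # assumed minimum canary traffic before deciding
) -> str:
    """Return 'continue', 'promote', or 'rollback' for a canary deployment."""
    if baseline_total <= 0:
        raise ValueError("baseline_total must be positive")
    if canary_total < min_samples:
        return "continue"  # not enough canary traffic to judge yet
    baseline_rate = baseline_errors / baseline_total
    canary_rate = canary_errors / canary_total
    if canary_rate - baseline_rate > max_increase:
        return "rollback"
    return "promote"


if __name__ == "__main__":
    print(canary_decision(50, 10_000, 30, 1_000))
```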

Model Versioning and Performance Tracking

Comprehensive model versioning strategies represent essential infrastructure for effective regression testing, enabling systematic tracking of performance changes across model iterations while providing the capability to rapidly revert to previous versions when performance regressions are detected. These versioning systems must integrate with broader software development practices while accommodating the unique requirements of machine learning artifacts including training data provenance, hyperparameter configurations, and performance metric histories.

Effective model versioning encompasses not only the model artifacts themselves but also the complete training and validation environments, data preprocessing pipelines, and evaluation frameworks that contribute to model performance characteristics. This comprehensive approach ensures reproducibility of results and enables accurate comparison of performance across different model versions and deployment configurations.
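One way to tie these pieces together is a version record that hashes the model artifact, the training data, and the hyperparameters into a single identifier. The `ModelVersion` fields and the 12-character identifier length are hypothetical choices for this sketch; dedicated model registries offer richer versions of the same idea.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Dict


def sha256_bytes(data: bytes) -> str:
    """Hex SHA-256 digest of raw bytes, for example a serialized model file."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class ModelVersion:
    model_sha256: str
    training_data_sha256: str
    hyperparameters: Dict[str, float]


def version_id(record: ModelVersion) -> str:
    """Derive a short, deterministic identifier from the record's contents."""
    # Sorting keys makes the identifier independent of dictionary ordering.
    canonical = json.dumps(asdict(record), sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


if __name__ == "__main__":
    record = ModelVersion(
        model_sha256=sha256_bytes(b"fake model weights"),
        training_data_sha256=sha256_bytes(b"fake training data"),
        hyperparameters={"learning_rate": 0.1, "max_depth": 3},
    )
    print(version_id(record))
```

Because the identifier changes whenever any input changes, two evaluation results that share an identifier can be compared directly.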

The implementation of robust model versioning systems requires careful consideration of storage efficiency, access patterns, and integration with existing development tooling while providing the metadata management and tracking capabilities necessary for effective regression analysis and performance comparison. These systems must support both automated versioning during CI/CD processes and manual versioning for experimental development and research activities.

Long-term performance tracking reveals important patterns in model behavior and enables proactive identification of performance trends that may require intervention. The systematic analysis of performance metrics across multiple model versions provides valuable insights for optimization strategies and helps establish realistic performance expectations for future development efforts.

Edge Case and Adversarial Testing Methodologies

The robustness of AI models under challenging operational conditions requires specialized testing methodologies that evaluate performance against edge cases, adversarial inputs, and unusual scenarios that may not be adequately represented in standard training and validation datasets. These testing approaches are essential for ensuring model reliability and preventing performance degradation when deployed systems encounter unexpected inputs or operational conditions.

Edge case testing involves systematic exploration of input space boundaries, extreme value scenarios, and unusual data combinations that may trigger unexpected model behaviors or performance degradation. This testing must be comprehensive enough to provide confidence in model robustness while remaining computationally feasible and operationally practical for regular execution as part of regression testing procedures.
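Boundary exploration can be sketched by generating values just inside, on, and just outside each feature's valid range, then checking that the model returns a well-formed score for every combination. The feature names, ranges, and the expectation that scores lie in [0, 1] are assumptions for this example, and `predict` stands in for whatever callable wraps the real model.

```python
import math
from itertools import product
from typing import Callable, Dict, List, Tuple

Case = Dict[str, float]


def boundary_values(low: float, high: float, step: float) -> List[float]:
    """Values just outside, on, and just inside both ends of a range."""
    return [low - step, low, low + step, high - step, high, high + step]


def boundary_cases(ranges: Dict[str, Tuple[float, float, float]]) -> List[Case]:
    """Cartesian product of boundary values for every feature."""
    names = list(ranges)
    grids = [boundary_values(*ranges[name]) for name in names]
    return [dict(zip(names, combo)) for combo in product(*grids)]


def find_failures(predict: Callable[[Case], float], cases: List[Case]) -> List[Case]:
    """Return cases where predict raises or returns a score outside [0, 1]."""
    failures: List[Case] = []
    for case in cases:
        try:
            score = predict(case)
        except Exception:
            failures.append(case)
            continue
        if not isinstance(score, (int, float)) or math.isnan(score) or not 0.0 <= score <= 1.0:
            failures.append(case)
    return failures


if __name__ == "__main__":
    cases = boundary_cases({"age": (0, 120, 1), "income": (0, 1_000_000, 1)})
    toy_model = lambda case: case["age"] / 120  # hypothetical stand-in model
    print(len(cases), len(find_failures(toy_model, cases)))
```

The number of cases grows as six to the power of the feature count, so for wide inputs it is usually necessary to sample combinations or vary one feature at a time.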

Adversarial testing methodologies focus on evaluating model performance against deliberately crafted inputs designed to exploit potential vulnerabilities or trigger incorrect predictions. These approaches are particularly important for models deployed in security-sensitive applications or environments where malicious actors may attempt to manipulate model behavior through carefully constructed inputs that appear benign but cause significant performance degradation or incorrect outputs.
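Crafting true adversarial inputs usually requires access to model gradients or a dedicated attack library. As a much weaker but dependency-free stand-in, the sketch below measures how often small random perturbations flip a prediction. It is a robustness probe, not an adversarial attack, and the `flip_rate` function, the epsilon value, and the toy classifier are all hypothetical.

```python
import random
from typing import Callable, List, Sequence


def flip_rate(
    predict: Callable[[Sequence[float]], int],
    x: Sequence[float],
    epsilon: float,
    trials: int = 200,
    seed: int = 0,
) -> float:
    """Fraction of random perturbations within +/- epsilon that change the prediction."""
    rng = random.Random(seed)  # fixed seed keeps the check reproducible
    original = predict(x)
    flips = 0
    for _ in range(trials):
        noisy: List[float] = [v + rng.uniform(-epsilon, epsilon) for v in x]
        if predict(noisy) != original:
            flips += 1
    return flips / trials


if __name__ == "__main__":
    # Toy classifier: positive when the feature sum exceeds 1.0.
    toy_classifier = lambda features: int(sum(features) > 1.0)
    print(flip_rate(toy_classifier, [0.2, 0.2], epsilon=0.05))
    print(flip_rate(toy_classifier, [0.5, 0.49], epsilon=0.05))
```

Inputs with a high flip rate sit close to a decision boundary and are natural candidates for a proper adversarial evaluation.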

Resource Optimization and Efficiency Monitoring

The computational requirements of comprehensive AI regression testing can be substantial, necessitating careful optimization of resource utilization while maintaining adequate testing coverage and accuracy. Effective testing strategies must balance thoroughness with efficiency, implementing intelligent scheduling, parallel execution, and resource sharing approaches that maximize testing value while minimizing operational costs and infrastructure requirements.

Resource optimization involves multiple dimensions including computational efficiency through optimized test execution, storage efficiency through intelligent data management and result caching, and network efficiency through distributed testing architectures that minimize data transfer overhead while maintaining result accuracy and consistency. These optimizations must be implemented without compromising test reliability or coverage.

The monitoring of resource utilization patterns provides valuable insights for testing optimization and capacity planning while enabling cost-effective scaling of testing infrastructure to meet evolving requirements. These monitoring systems must track resource consumption across different testing phases, identify optimization opportunities, and provide predictive capabilities that support proactive resource management and budget planning.

Alert Systems and Incident Response Protocols

Effective AI regression testing requires sophisticated alert systems that can rapidly identify performance issues while minimizing false positives that overwhelm operations personnel and reduce confidence in monitoring systems. These alert systems must be carefully calibrated to detect meaningful performance changes while accounting for normal operational variation and temporary fluctuations that do not require immediate intervention.

Alert system design involves multiple considerations including threshold setting methodologies, alert severity classification, escalation procedures, and integration with existing incident response workflows. The systems must provide sufficient context and diagnostic information to enable rapid assessment of alert significance and appropriate response prioritization while maintaining operational efficiency and minimizing disruption to normal development and deployment activities.
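Threshold setting and severity classification can be sketched with a small stateful checker that only raises an alert after several consecutive breaches, which is one simple way to suppress alerts for temporary fluctuations. The class name, the drop thresholds, and the three-observation window are assumptions for illustration.

```python
from collections import deque
from typing import Deque


class MetricAlerter:
    """Classify drops below a baseline and alert only on sustained breaches."""

    def __init__(
        self,
        baseline: float,
        warn_drop: float = 0.02,      # assumed warning threshold
        critical_drop: float = 0.05,  # assumed critical threshold
        consecutive: int = 3,         # observations required before alerting
    ) -> None:
        self.baseline = baseline
        self.warn_drop = warn_drop
        self.critical_drop = critical_drop
        self._recent: Deque[str] = deque(maxlen=consecutive)

    def _classify(self, value: float) -> str:
        drop = self.baseline - value
        if drop >= self.critical_drop:
            return "critical"
        if drop >= self.warn_drop:
            return "warning"
        return "ok"

    def observe(self, value: float) -> str:
        """Record one observation and return the alert level to emit now."""
        self._recent.append(self._classify(value))
        if len(self._recent) < (self._recent.maxlen or 0):
            return "ok"  # not enough history yet
        if all(level == "critical" for level in self._recent):
            return "critical"
        if all(level != "ok" for level in self._recent):
            return "warning"
        return "ok"


if __name__ == "__main__":
    alerter = MetricAlerter(baseline=0.90)
    print([alerter.observe(v) for v in [0.87, 0.87, 0.87, 0.91]])
```

The returned level can then be mapped to an escalation path, for example a ticket for a warning and a page for a critical alert.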

Incident response protocols for AI system performance issues require specialized procedures that account for the unique characteristics of machine learning systems including the potential need for model retraining, data quality investigation, and performance analysis across multiple operational dimensions. These protocols must provide clear guidance for incident classification, response prioritization, and resolution tracking while integrating with broader organizational incident management practices.

Future Evolution of AI Testing Practices

The field of AI regression testing continues to evolve rapidly as new challenges emerge from increasingly sophisticated AI systems, changing operational requirements, and advancing technology capabilities. Future developments are likely to focus on automated test generation, intelligent performance optimization, and predictive maintenance approaches that anticipate performance issues before they manifest in production environments.

Emerging trends in AI testing include the application of AI techniques to testing processes themselves, creating self-improving testing systems that can adapt to changing model characteristics and operational requirements. These meta-AI approaches promise to enhance testing effectiveness while reducing manual overhead and improving coverage of complex scenario spaces that are difficult to address through traditional testing methodologies.

The integration of advanced analytics, real-time performance optimization, and predictive modeling into regression testing frameworks represents the next frontier in AI quality assurance, enabling proactive performance management and automated optimization that maintains optimal system performance with minimal human intervention. These advanced capabilities will be essential for managing the increasing complexity and scale of AI deployments across diverse operational environments and use cases.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of AI testing methodologies and their applications in machine learning systems. Readers should conduct their own research and consider their specific requirements when implementing AI regression testing strategies. The effectiveness of testing approaches may vary depending on specific use cases, model architectures, and operational requirements.