The rapid advancement of artificial intelligence within academic institutions has created a pressing need for ethical frameworks that govern research practices, ensure responsible innovation, and protect both individual rights and societal welfare. Because universities and research institutions produce many of the AI advances that will shape society, establishing robust ethical guidelines and oversight mechanisms has become an urgent priority.

The intersection of cutting-edge technological capability and moral responsibility creates a dynamic environment where ethical considerations must evolve alongside technological advancement to ensure that innovation serves the greater good while minimizing potential harm.

The Foundation of Ethical AI Research

Academic institutions are major incubators of artificial intelligence research whose results eventually reach many areas of society, from healthcare and education to transportation and government. This role places a substantial ethical burden on researchers, institutions, and oversight bodies to ensure that AI development proceeds with careful consideration of its potential impacts on individuals, communities, and society at large. Comprehensive ethical frameworks are therefore not merely a regulatory requirement but a moral imperative that shapes the trajectory of technological progress.

The complexity of AI systems and their potential for both beneficial and harmful applications requires a multidisciplinary approach to ethics that incorporates perspectives from computer science, philosophy, psychology, sociology, law, and policy studies. Academic researchers must navigate the intricate balance between advancing scientific knowledge and ensuring that their work contributes positively to human welfare. This balance becomes particularly challenging when dealing with technologies that have dual-use potential or when research outcomes may have unintended consequences that only become apparent after widespread deployment.

Institutional Responsibility and Governance Structures

Universities and research institutions bear significant responsibility for creating and maintaining ethical oversight mechanisms that guide AI research activities while fostering an environment of innovation and discovery. The development of institutional review boards specifically tailored to address the unique challenges posed by AI research represents a critical step in ensuring that ethical considerations are integrated into every stage of the research process, from initial conception through final implementation and dissemination.

Effective governance structures must balance the need for rigorous ethical oversight with the preservation of academic freedom and the promotion of scientific inquiry. This requires the establishment of clear protocols for evaluating research proposals, ongoing monitoring of research activities, and mechanisms for addressing ethical concerns that may arise during the course of research projects. Institutions must also invest in training programs that help researchers develop the knowledge and skills necessary to identify and address ethical issues in their work.

The integration of ethical thinking into research methodology represents a fundamental shift in how academic institutions approach the development of transformative technologies.

Privacy Protection and Data Governance

The development of AI systems typically requires access to vast quantities of data, much of which may contain sensitive personal information or reflect patterns of human behavior that could be used in ways that compromise individual privacy or autonomy. Academic researchers must therefore implement comprehensive data governance frameworks that protect individual privacy while enabling legitimate research activities that advance scientific understanding and contribute to the development of beneficial AI technologies.

Privacy protection in AI research extends beyond traditional concepts of data anonymization to encompass more sophisticated considerations related to inference attacks, re-identification risks, and the potential for AI systems to reveal sensitive information about individuals even when working with apparently anonymized datasets. Researchers must carefully evaluate the privacy implications of their data collection, storage, processing, and sharing practices, ensuring that appropriate safeguards are in place to protect research participants and other individuals whose data may be incorporated into research projects.
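One simple way to make re-identification risk concrete is k-anonymity: a dataset is k-anonymous if every combination of quasi-identifiers (attributes such as ZIP code, birth year, and sex that are not names but can be linked to outside data) is shared by at least k records. The Python sketch below computes k for a list of records; the field names and toy records are hypothetical. A high k is necessary but not sufficient: it does not prevent inference when everyone in a group shares the same sensitive value, which is why the inference attacks mentioned above still need separate attention.

```python
from collections import Counter
from typing import Dict, Hashable, List, Mapping, Sequence, Tuple


def group_sizes(records: Sequence[Mapping[str, Hashable]],
                quasi_identifiers: Sequence[str]) -> Dict[Tuple, int]:
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(r[col] for col in quasi_identifiers) for r in records)


def k_anonymity(records, quasi_identifiers) -> int:
    """Return k, the size of the smallest quasi-identifier group."""
    sizes = group_sizes(records, quasi_identifiers)
    if not sizes:
        raise ValueError("no records supplied")
    return min(sizes.values())


def risky_groups(records, quasi_identifiers, k_min: int) -> List[Tuple]:
    """Quasi-identifier combinations shared by fewer than k_min records."""
    return [key for key, n in group_sizes(records, quasi_identifiers).items() if n < k_min]


# Hypothetical toy data: names already removed, but linkable attributes remain.
records = [
    {"zip": "02139", "birth_year": 1985, "sex": "F", "diagnosis": "A"},
    {"zip": "02139", "birth_year": 1985, "sex": "F", "diagnosis": "B"},
    {"zip": "02139", "birth_year": 1990, "sex": "M", "diagnosis": "A"},
]
qi = ["zip", "birth_year", "sex"]
print(k_anonymity(records, qi))       # 1: the third record is unique
print(risky_groups(records, qi, 2))   # [('02139', 1990, 'M')]
```

Flagged groups can then be generalized (for example, birth year bucketed into decades) or suppressed before any data is shared, and k rechecked.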

Privacy-preserving research methods are an active area of innovation. Differential privacy adds calibrated statistical noise so that published results reveal little about any single individual, federated learning trains models across many sites or devices without pooling the raw data, and homomorphic encryption allows computation on data while it remains encrypted. Each offers a way to conduct research while reducing privacy risk. Academic institutions must invest in developing expertise in these areas and provide researchers with the tools and knowledge necessary to apply privacy-preserving approaches in their work.
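Of the techniques listed, differential privacy is the easiest to show in a few lines. The sketch below applies the standard Laplace mechanism to a counting query: adding or removing one person changes a count by at most 1 (its sensitivity), so adding Laplace noise with scale 1/ε makes the released count ε-differentially private. The function names, the ε value, and the data are illustrative assumptions. Python's `random` module is not a cryptographically secure source and naive floating-point Laplace sampling has known weaknesses, so real releases should rely on an audited library such as OpenDP.

```python
import random
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def laplace_noise(scale: float, rng: random.Random) -> float:
    """One draw from Laplace(0, scale).

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with that scale."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(values: Iterable[T], predicate: Callable[[T], bool],
             epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    A count has sensitivity 1, so the noise scale is 1 / epsilon:
    smaller epsilon means stronger privacy and a noisier answer."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical study data; the seed is fixed only to make the demo repeatable.
rng = random.Random(42)
ages = [23, 35, 41, 29, 52, 38, 61, 27]
noisy = dp_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of participants aged 40+: {noisy:.2f}")
```

Answering the same query many times would let the noise average out, so each release spends part of a total privacy budget; under basic composition, the ε values of successive releases add up.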

Bias Mitigation and Fairness Considerations

Artificial intelligence systems have the potential to perpetuate and amplify existing societal biases, leading to discriminatory outcomes that can harm vulnerable populations and reinforce systemic inequalities. Academic researchers bear a particular responsibility for addressing these concerns through careful attention to bias mitigation strategies, fairness considerations, and inclusive research practices that consider the perspectives and needs of diverse communities.

The identification and mitigation of bias in AI systems requires ongoing attention throughout the research process, from initial data collection and preprocessing through model development, evaluation, and deployment. Researchers must develop a sophisticated understanding of how biases can emerge in AI systems and implement appropriate techniques for detecting, measuring, and reducing these biases while maintaining the performance and utility of their systems.
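Measuring bias usually starts with comparing simple statistics across groups. The sketch below computes two common group-fairness measures from a model's binary predictions: the demographic parity difference (the gap in the rate of positive predictions between groups) and the equal opportunity difference (the gap in true positive rates). The data and group labels are hypothetical. These are only two of many fairness criteria, they can conflict with one another, and which one is appropriate depends on the application; libraries such as Fairlearn implement these and related metrics.

```python
from typing import Dict, Hashable, Optional, Sequence


def _rate(numerator: int, denominator: int) -> Optional[float]:
    """Safe ratio: None when the group has no eligible examples."""
    return numerator / denominator if denominator else None


def group_rates(y_true: Sequence[int], y_pred: Sequence[int],
                groups: Sequence[Hashable]) -> Dict[Hashable, Dict[str, Optional[float]]]:
    """Per-group selection rate P(pred=1) and true positive rate P(pred=1 | true=1)."""
    counts: Dict[Hashable, Dict[str, int]] = {}
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts.setdefault(g, {"n": 0, "selected": 0, "positives": 0, "true_pos": 0})
        c["n"] += 1
        c["selected"] += p
        c["positives"] += t
        c["true_pos"] += t * p
    return {
        g: {"selection_rate": _rate(c["selected"], c["n"]),
            "tpr": _rate(c["true_pos"], c["positives"])}
        for g, c in counts.items()
    }


def fairness_gaps(y_true, y_pred, groups) -> Dict[str, float]:
    """Largest between-group gaps; groups without positives are skipped for TPR."""
    rates = group_rates(y_true, y_pred, groups).values()
    selection = [r["selection_rate"] for r in rates]
    tpr = [r["tpr"] for r in rates if r["tpr"] is not None]
    return {
        "demographic_parity_diff": max(selection) - min(selection),
        "equal_opportunity_diff": max(tpr) - min(tpr) if tpr else 0.0,
    }


# Hypothetical labels (1 = positive outcome) and predictions for two groups.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(fairness_gaps(y_true, y_pred, groups))  # gaps of 0.25 and about 0.33
```

In this toy example group "b" receives positive predictions more often (0.75 versus 0.5) and its true positives are always caught, while group "a" misses one of three; tracking such gaps across model versions is one way to make "ongoing attention" measurable.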

Fairness considerations in AI research extend beyond technical measures to encompass broader questions about the distribution of benefits and risks associated with AI technologies. Researchers must consider whether their work is likely to benefit all members of society equitably or whether certain groups may be disadvantaged by the development and deployment of particular AI technologies. This requires engagement with diverse stakeholders and careful consideration of the social and economic contexts in which AI systems will be deployed.

Transparency and Explainability Requirements

The increasing complexity and opacity of modern AI systems raise important questions about transparency and explainability that have significant implications for both research ethics and the broader adoption of AI technologies in society. Academic researchers must balance the pursuit of technical performance with the need to develop systems that can be understood, explained, and audited by relevant stakeholders, including other researchers, policymakers, and affected communities.

Transparency in AI research encompasses multiple dimensions, including the documentation of research methodologies, the sharing of code and data where appropriate, and the clear communication of research findings and their implications. Researchers must also consider the explainability of the AI systems they develop, ensuring that these systems can provide meaningful explanations of their decision-making processes, particularly when these systems are intended for use in high-stakes applications such as healthcare, criminal justice, or financial services.
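For models whose internals are hard to inspect, one widely used model-agnostic explanation is permutation feature importance: shuffle one input feature across the evaluation set and measure how much accuracy drops. The Python sketch below assumes only a `predict` function that maps one feature row to a label; the toy model and data are hypothetical. It gives a global view of which features a model relies on, not an explanation of any individual decision, so high-stakes uses typically need per-decision methods as well. scikit-learn provides a maintained version as `sklearn.inspection.permutation_importance`.

```python
import random
from typing import Callable, List, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict: Callable[[Sequence[float]], int],
                           X: Sequence[Sequence[float]], y: Sequence[int],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the label
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(baseline - accuracy(y, [predict(row) for row in X_perm]))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical model that only looks at feature 0; feature 1 is ignored.
def toy_model(row: Sequence[float]) -> int:
    return 1 if row[0] > 0.5 else 0


X = [[0.1 * i, ((i * 7) % 10) / 10] for i in range(10)]
y = [toy_model(row) for row in X]
print(permutation_importance(toy_model, X, y))  # feature 1 scores 0.0
```

Because the toy model never reads feature 1, shuffling it cannot change any prediction, which is the kind of sanity check that makes an explanation method itself auditable.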

The commitment to transparency and explainability represents a fundamental aspect of responsible AI research that builds trust and enables informed decision-making about the adoption and regulation of AI technologies.

Human Subject Protection and Consent

AI research increasingly involves interactions with human subjects, whether through direct experimentation, the collection of behavioral data, or the deployment of AI systems that affect human decision-making and welfare. The protection of human subjects in AI research requires careful attention to traditional research ethics principles while adapting these principles to address the unique challenges posed by AI technologies.

Informed consent processes must be adapted to help participants understand the nature and implications of AI research, including the potential uses of their data, the risks associated with participation, and their rights as research subjects. This is particularly challenging when dealing with AI systems that may have emergent properties or applications that were not anticipated at the time of initial data collection.
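One practical consequence is that the purposes a participant agreed to should travel with their data, so that a new, unanticipated use is checked against the original consent rather than assumed to be covered. The sketch below uses a hypothetical consent record (participant ID, consented purposes, grant date, optional withdrawal date) and lists participants whose consent does not cover a proposed use. The field names and purpose labels are illustrative; in practice the permitted uses would come from the approved study protocol.

```python
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet, Iterable, List, Optional


@dataclass(frozen=True)
class ConsentRecord:
    participant_id: str
    purposes: FrozenSet[str]             # uses the participant explicitly agreed to
    granted_on: date
    withdrawn_on: Optional[date] = None  # None while consent is still active


def use_is_covered(record: ConsentRecord, purpose: str, on: date) -> bool:
    """True only if consent was in force on `on` and names this purpose."""
    if on < record.granted_on:
        return False
    if record.withdrawn_on is not None and on >= record.withdrawn_on:
        return False
    return purpose in record.purposes


def needs_reconsent(records: Iterable[ConsentRecord], purpose: str, on: date) -> List[str]:
    """Participants whose existing consent does not cover the proposed use."""
    return [r.participant_id for r in records if not use_is_covered(r, purpose, on)]


# Hypothetical consent records from an earlier data collection.
consents = [
    ConsentRecord("p1", frozenset({"model_training", "publication"}), date(2023, 1, 10)),
    ConsentRecord("p2", frozenset({"model_training"}), date(2023, 2, 1), withdrawn_on=date(2024, 3, 1)),
    ConsentRecord("p3", frozenset({"model_training", "secondary_research"}), date(2023, 3, 5)),
]
print(needs_reconsent(consents, "secondary_research", date(2024, 6, 1)))  # ['p1', 'p2']
```

The returned list does not decide anything by itself; it marks where the original consent does not speak to the new use, so that re-consent, exclusion, or ethics-board review can be considered.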

The deployment of AI systems in real-world settings for research purposes raises additional ethical considerations related to the potential impacts on individuals and communities who may not have explicitly consented to participate in research but who are affected by the deployment of experimental systems. Researchers must carefully consider these broader impacts and implement appropriate safeguards to protect the welfare of all individuals who may be affected by their research activities.

International Collaboration and Global Standards

The global nature of AI research and development creates both opportunities and challenges for the establishment and maintenance of ethical standards in academic settings. International collaboration in AI research offers the potential for sharing knowledge, resources, and best practices while also creating challenges related to differences in ethical frameworks, regulatory environments, and cultural values across different countries and institutions.

Academic institutions engaged in international AI research collaborations must navigate complex questions about which ethical standards should apply when researchers from different countries and institutions work together on shared projects. This requires the development of harmonized ethical frameworks that can accommodate different perspectives while maintaining high standards for responsible research practices.

The establishment of global standards for AI research ethics represents an ongoing challenge that requires sustained engagement among academic institutions, professional organizations, and international bodies. These efforts must balance the need for consistency and coordination with respect for cultural diversity and national sovereignty while ensuring that ethical standards remain sufficiently robust to protect individual rights and societal welfare.

Dual-Use Research and Security Considerations

Many AI research projects have dual-use potential, meaning that their results could be applied for both beneficial and harmful purposes. Academic researchers must carefully consider the security implications of their work and implement appropriate measures to prevent the misuse of research outcomes while preserving the open and collaborative nature of academic research.

The management of dual-use research requires sophisticated risk assessment processes that evaluate the potential beneficial and harmful applications of research outcomes, the likelihood of misuse, and the availability of safeguards that can reduce security risks without unduly restricting legitimate research activities. This often involves collaboration with security experts and careful consideration of publication and dissemination strategies.
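Risk assessment of this kind is often organized as a likelihood-by-severity matrix. The sketch below scores hypothetical misuse scenarios on 1-to-5 scales, discounts each score by a judged safeguard effectiveness between 0 and 1, and maps the worst residual score to a review tier. The scales, the multiplication rule, the thresholds, and the tier names are all illustrative assumptions rather than an established standard; the point is that writing the judgments down makes them reviewable.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class MisuseScenario:
    description: str
    likelihood: int    # hypothetical scale: 1 (rare) to 5 (almost certain)
    severity: int      # hypothetical scale: 1 (negligible) to 5 (catastrophic)
    mitigation: float  # judged safeguard effectiveness: 0.0 (none) to 1.0 (complete)

    def __post_init__(self) -> None:
        if not (1 <= self.likelihood <= 5 and 1 <= self.severity <= 5):
            raise ValueError("likelihood and severity must be between 1 and 5")
        if not 0.0 <= self.mitigation <= 1.0:
            raise ValueError("mitigation must be between 0 and 1")

    def residual_risk(self) -> float:
        """Likelihood x severity, discounted by how well safeguards work."""
        return self.likelihood * self.severity * (1.0 - self.mitigation)


def review_tier(scenarios: Iterable[MisuseScenario]) -> str:
    """Map the worst residual risk to an illustrative review tier."""
    scores = [s.residual_risk() for s in scenarios]
    if not scores:
        raise ValueError("at least one scenario is required")
    worst = max(scores)
    if worst >= 15:
        return "escalate"  # e.g. involve security experts, consider staged release
    if worst >= 6:
        return "enhanced review"
    return "standard review"


scenarios = [
    MisuseScenario("generating targeted phishing messages", likelihood=4, severity=3, mitigation=0.5),
    MisuseScenario("re-identifying people in a released dataset", likelihood=2, severity=4, mitigation=0.75),
]
for s in scenarios:
    print(f"{s.description}: {s.residual_risk():.1f}")
print(review_tier(scenarios))  # enhanced review
```

Taking the maximum rather than an average means that one serious scenario cannot be diluted by many benign ones.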

Researchers working on AI technologies with significant dual-use potential may need to implement additional security measures, such as restricted access to research materials, enhanced vetting of research personnel, and careful management of international collaborations. These measures must be balanced against the principles of academic freedom and open scientific inquiry that are fundamental to the research enterprise.

Environmental Impact and Sustainability

The development and deployment of AI systems often requires significant computational resources, leading to substantial energy consumption and environmental impacts that must be considered as part of comprehensive ethical frameworks for AI research. Academic institutions have a responsibility to consider the environmental sustainability of their research activities and to develop practices that minimize the environmental footprint of AI research while maximizing its beneficial impacts.

Environmental considerations in AI research include the energy efficiency of computational methods, the lifecycle impacts of hardware systems, and the broader environmental implications of AI applications. Researchers must balance the pursuit of technical performance with environmental sustainability, seeking to develop AI systems that achieve their intended objectives while minimizing resource consumption and environmental impact.
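The energy side can be estimated with back-of-the-envelope arithmetic: energy is accelerator count × average power draw × runtime, scaled by the data center's power usage effectiveness (PUE, total facility energy divided by IT equipment energy), and emissions are that energy multiplied by the grid's carbon intensity. Every number in the example is a hypothetical placeholder; measured power draw and the local grid's actual intensity should replace them. The estimate covers only accelerators during the run and leaves out CPUs, storage, networking, and the embodied emissions of manufacturing hardware that a lifecycle analysis would include.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Footprint:
    energy_kwh: float
    emissions_kg_co2e: float


def compute_footprint(num_accelerators: int, avg_power_watts: float, hours: float,
                      pue: float, grid_kg_co2e_per_kwh: float) -> Footprint:
    """Rough operational energy and emissions of one compute job."""
    if pue < 1.0:
        raise ValueError("PUE is a ratio of total to IT energy and cannot be below 1.0")
    it_energy_kwh = num_accelerators * avg_power_watts * hours / 1000.0  # W*h -> kWh
    facility_energy_kwh = it_energy_kwh * pue  # add cooling and other facility overhead
    return Footprint(facility_energy_kwh, facility_energy_kwh * grid_kg_co2e_per_kwh)


# Hypothetical job: 8 accelerators at 300 W average for 72 hours,
# PUE 1.5, grid intensity 0.4 kg CO2e per kWh.
job = compute_footprint(8, 300.0, 72.0, pue=1.5, grid_kg_co2e_per_kwh=0.4)
print(f"{job.energy_kwh:.1f} kWh, {job.emissions_kg_co2e:.1f} kg CO2e")  # 259.2 kWh, 103.7 kg CO2e
```

Because the result scales linearly with each input, the same function makes trade-offs easy to compare, for instance running the same job on a lower-carbon grid or with a more efficient architecture that cuts runtime.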

The development of sustainable AI research practices represents an emerging area of focus within the academic community, with researchers exploring techniques such as efficient model architectures, green computing practices, and renewable energy sources for computational infrastructure. These efforts require collaboration across disciplines and sustained investment in developing more sustainable approaches to AI research and development.

Future Directions and Emerging Challenges

The field of AI research ethics continues to evolve rapidly as new technologies emerge and our understanding of the implications of AI systems deepens. Academic institutions must remain adaptive and responsive to emerging ethical challenges while maintaining rigorous standards for responsible research practices. This requires ongoing investment in ethics education, research infrastructure, and collaborative relationships with diverse stakeholders.

Emerging challenges in AI research ethics include the possible development of artificial general intelligence, the integration of AI with other emerging technologies such as biotechnology and nanotechnology, and the need to address ethical considerations across increasingly complex and interconnected technological systems. These challenges will require continued innovation in ethical frameworks and oversight mechanisms.

The future of AI research ethics will likely involve greater integration of ethical considerations into technical research methodologies, enhanced collaboration between ethicists and technologists, and more sophisticated approaches to stakeholder engagement and public participation in research governance. Academic institutions must prepare for these developments by investing in interdisciplinary research and education programs that build the capacity necessary to address future ethical challenges in AI research.

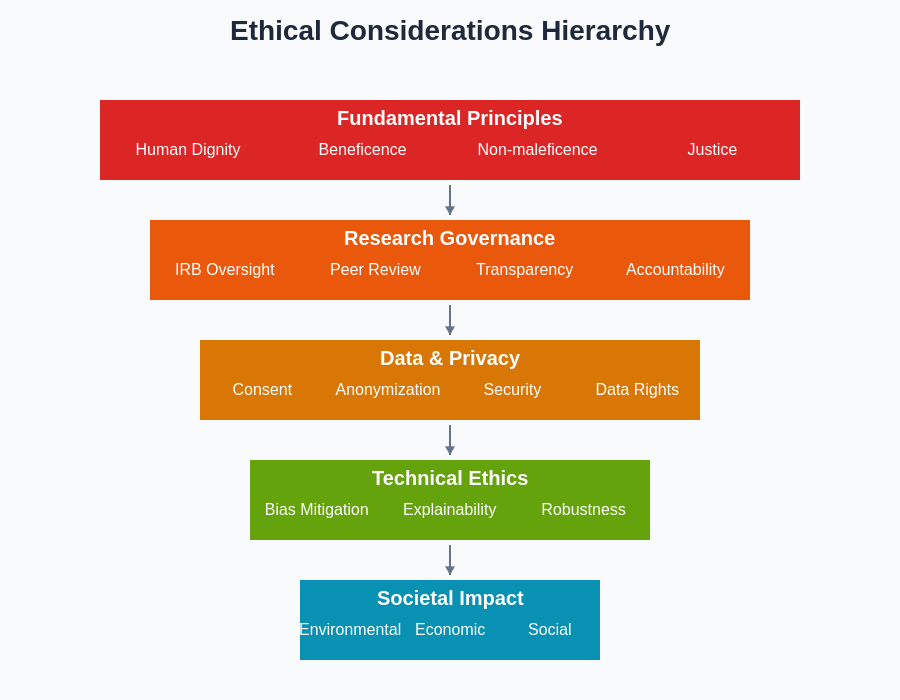

The comprehensive framework for AI research ethics encompasses multiple interconnected domains that must be carefully balanced to ensure responsible innovation while maintaining the integrity and productivity of academic research environments.

The hierarchical structure of ethical considerations in AI research demonstrates the complex relationships between different ethical principles and the need for systematic approaches to addressing ethical challenges at multiple levels of analysis.

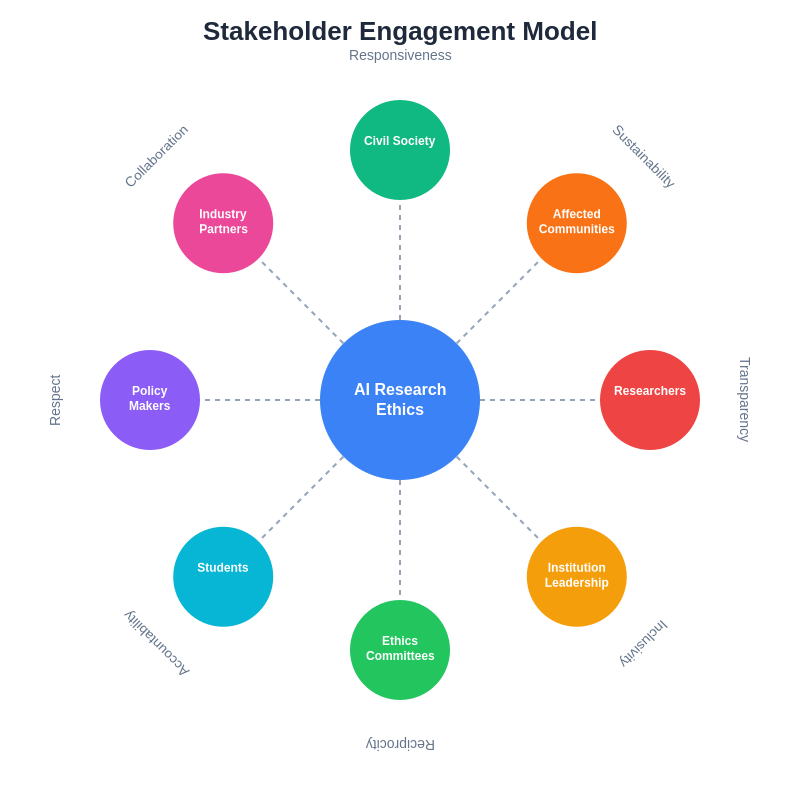

Effective stakeholder engagement represents a critical component of responsible AI research, requiring systematic approaches to identifying, involving, and responding to the perspectives and concerns of diverse communities affected by AI research and development activities.

The establishment and maintenance of robust ethical frameworks for AI research in academic settings represents one of the most important challenges facing the research community today. The decisions made by academic institutions, researchers, and oversight bodies will fundamentally shape the trajectory of AI development and its impacts on human society for generations to come. This responsibility requires sustained commitment to ethical excellence, ongoing dialogue with diverse stakeholders, and continued innovation in approaches to responsible research governance.

Disclaimer

This article is for informational and educational purposes only and does not constitute legal, ethical, or professional advice. The views expressed are based on current understanding of AI research ethics and may not reflect the policies or positions of specific academic institutions or professional organizations. Readers should consult with appropriate experts and institutional authorities when making decisions about AI research ethics and governance. Ethical frameworks and best practices in AI research continue to evolve, and researchers should stay informed about current developments in the field.