The rapid advancement and widespread adoption of artificial intelligence and machine learning have created new opportunities for innovation and efficiency across virtually every industry sector. These capabilities also bring significant risks that can have far-reaching consequences for organizations, individuals, and society as a whole. The complexity inherent in AI systems, combined with their increasing integration into critical decision-making processes, makes comprehensive risk assessment essential for responsible AI deployment.

The stakes of AI risk management continue to escalate as these technologies become more sophisticated and pervasive, making proactive risk assessment a fundamental component of any successful AI initiative.

Understanding the Multifaceted Nature of AI Risk

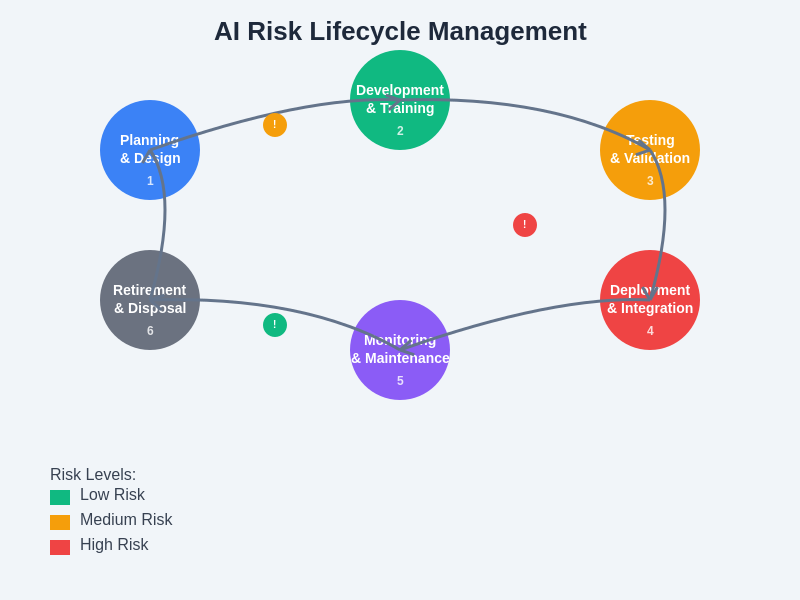

The landscape of AI and machine learning risks extends far beyond simple technical failures, encompassing a complex web of interconnected challenges that span technical, ethical, legal, operational, and strategic dimensions. These risks can manifest at various stages of the AI lifecycle, from initial data collection and model development through deployment, monitoring, and eventual decommissioning. Understanding this multifaceted nature is crucial for developing comprehensive risk mitigation strategies that address not only immediate technical concerns but also long-term implications for organizational reputation, regulatory compliance, and societal impact.

Traditional risk assessment frameworks often prove inadequate when applied to AI systems due to the unique characteristics of machine learning technologies, including their probabilistic nature, black-box decision-making processes, and dynamic behavior that can evolve over time. The interconnected nature of AI systems means that risks can cascade and amplify in unexpected ways, creating failure modes that may not be apparent through conventional analysis methods. This complexity necessitates specialized approaches to risk identification and assessment that account for the unique properties and potential failure modes of artificial intelligence systems.

Data-Related Risks and Quality Assurance

Data quality represents one of the most fundamental categories of risk in machine learning projects, as the performance and reliability of AI systems are intrinsically dependent on the quality, completeness, and representativeness of their training data. Poor data quality can manifest in numerous ways, including incomplete records, inconsistent formatting, outdated information, duplicate entries, and measurement errors that can significantly compromise model performance and lead to unreliable predictions or decisions. The impact of data quality issues often becomes apparent only after deployment, when models encounter real-world scenarios that differ from their training environment.
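A few of the data quality problems named above (incomplete records and duplicate entries) can be checked mechanically before training. The following is a minimal sketch, assuming records arrive as a list of dictionaries with scalar values; the function name `data_quality_report` and the sample fields are illustrative, not part of any particular library.

```python
from collections import Counter

def data_quality_report(records, required_fields):
    """Summarize missing values and exact duplicate rows in a list of dict records."""
    missing = Counter()
    for row in records:
        for field in required_fields:
            # Treat absent keys, None, and empty strings as missing.
            if row.get(field) in (None, ""):
                missing[field] += 1
    # Rows whose required fields all match an earlier row count as duplicates.
    seen = Counter(tuple(row.get(f) for f in required_fields) for row in records)
    duplicates = sum(count - 1 for count in seen.values() if count > 1)
    total = len(records)
    return {
        "rows": total,
        "missing_rate": {f: missing[f] / total for f in required_fields} if total else {},
        "duplicate_rows": duplicates,
    }

# Hypothetical sample data for illustration only.
records = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 61000},
    {"age": 34, "income": 52000},
    {"age": 45, "income": ""},
]
report = data_quality_report(records, ["age", "income"])
print(report)
```

Checks like these catch only the mechanical problems. Outdated information and measurement errors usually need domain-specific rules on top.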

Beyond basic quality concerns, data-related risks include issues of bias, representativeness, and privacy that can have profound implications for fairness and compliance. Training datasets that inadequately represent the diversity of real-world populations or scenarios can lead to models that perform poorly for certain demographic groups or edge cases, potentially creating discriminatory outcomes or system failures in critical situations. Privacy risks emerge when training data contains sensitive personal information that could be exposed through model inversion attacks, membership inference attacks, or inadvertent disclosure during model sharing or publication.
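One simple way to look for representativeness problems is to compare each group's share of the training data with its share in a reference population. This is a sketch under stated assumptions: the group labels and the reference shares are hypothetical inputs that an organization would have to supply itself.

```python
from collections import Counter

def representation_gaps(train_groups, reference_shares):
    """Return (training share - reference share) per group; negative means under-represented."""
    counts = Counter(train_groups)
    total = len(train_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }

# Hypothetical example: group "b" makes up half the population but 20% of the data.
train_groups = ["a"] * 8 + ["b"] * 2
gaps = representation_gaps(train_groups, {"a": 0.5, "b": 0.5})
print(gaps)
```

A large negative gap does not prove the model will underperform for that group, but it is a reasonable trigger for collecting more data or evaluating per-group performance.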

The sophistication required for effective data risk assessment often demands AI-powered tools that can analyze vast datasets and identify subtle patterns of bias or quality degradation that might escape human detection.

Model Development and Algorithm Risks

The model development phase introduces a distinct category of risks related to algorithm selection, architecture design, hyperparameter tuning, and training methodology that can significantly impact the performance, reliability, and safety of deployed AI systems. Inappropriate algorithm selection for specific problem domains can lead to suboptimal performance, while overly complex architectures may result in overfitting, poor generalization, or computational inefficiency that makes deployment impractical. The iterative nature of model development often creates opportunities for confirmation bias, where developers unconsciously favor approaches that confirm their initial hypotheses rather than objectively evaluating all available options.

Overfitting represents a particularly insidious risk in machine learning projects: a model that scores exceptionally well on its training data, and on a validation set that was reused repeatedly during tuning, may fail badly on real-world data that differs even slightly from the training distribution. This risk is compounded when practitioners focus primarily on achieving high performance metrics during development without adequately considering the robustness and generalizability of their models. Adversarial vulnerabilities constitute another critical risk category, where models can be deliberately manipulated through carefully crafted inputs designed to cause misclassification or unexpected behavior.
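The gap between training performance and performance on untouched holdout data is a common first signal of overfitting. The sketch below uses a deliberately extreme toy "model" that memorizes its training pairs. The data, the `fit_memorizer` helper, and the 0.10 gap threshold are all illustrative assumptions.

```python
from collections import Counter

def fit_memorizer(X, y):
    """A deliberately overfit model: memorizes training pairs, else guesses the majority label."""
    table = dict(zip(X, y))
    fallback = Counter(y).most_common(1)[0][0]
    return lambda x: table.get(x, fallback)

def accuracy(model, X, y):
    return sum(model(x) == target for x, target in zip(X, y)) / len(y)

# Hypothetical toy data; the holdout inputs never appear in training.
train_X, train_y = [1, 2, 3, 4, 5, 6], [0, 1, 0, 1, 0, 0]
holdout_X, holdout_y = [7, 8, 9, 10], [1, 0, 1, 0]

model = fit_memorizer(train_X, train_y)
train_acc = accuracy(model, train_X, train_y)
holdout_acc = accuracy(model, holdout_X, holdout_y)
gap = train_acc - holdout_acc
overfit_flag = gap > 0.10  # threshold chosen for illustration only
print(train_acc, holdout_acc, overfit_flag)
```

The memorizer scores perfectly on data it has seen and no better than a constant guess on data it has not, which is the pattern a train-versus-holdout comparison is meant to expose.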

Deployment and Operational Risk Management

The transition from development to production deployment introduces a new set of risks related to system integration, performance monitoring, scalability, and operational maintenance that can significantly impact the success and safety of AI initiatives. Deployment environments often differ substantially from development and testing environments in terms of data characteristics, computational resources, latency requirements, and integration complexity, creating opportunities for unexpected failures or performance degradation. The dynamic nature of real-world data means that model performance can drift over time as underlying patterns change, requiring continuous monitoring and potentially frequent retraining to maintain acceptable performance levels.
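Drift in an input feature can be quantified by comparing its production distribution against the training-time baseline. One widely used measure is the Population Stability Index (PSI). The sketch below is a minimal version assuming equal-width bins taken from the baseline range; the bin count and the small floor used to avoid taking the logarithm of zero are assumptions of this example.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample (`expected`) and a newer sample (`actual`)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range fall into the first or last bin.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share so empty bins do not produce log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical data: the production sample is the baseline shifted by 0.5.
baseline = [i / 100 for i in range(100)]
shifted = [v + 0.5 for v in baseline]
print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, shifted))
```

Practitioners often treat PSI values above roughly 0.25 as a sign of substantial shift, but that cutoff is a rule of thumb rather than a standard, and the right alert level depends on the application.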

Integration risks emerge when AI models must interact with existing systems, databases, and workflows that may have different data formats, security requirements, or performance characteristics than those assumed during development. These integration challenges can create vulnerabilities or bottlenecks that compromise system reliability or security, particularly when legacy systems lack the infrastructure necessary to support modern AI workloads. Scalability risks become apparent when systems that perform adequately during testing encounter the volume and velocity of production data, potentially leading to performance degradation, system overload, or unacceptable response times that impact user experience or business operations.

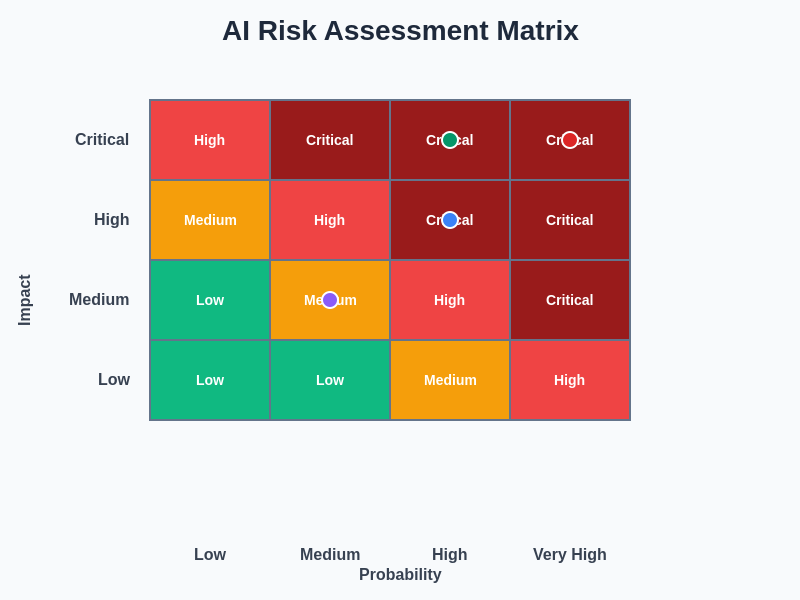

The complexity of AI risk assessment requires systematic evaluation across multiple dimensions, considering both the probability of occurrence and potential impact of various risk scenarios. This comprehensive approach enables organizations to prioritize their risk mitigation efforts and allocate resources effectively to address the most critical vulnerabilities in their AI systems.
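Combining probability of occurrence with potential impact is often done with a simple likelihood-times-impact score. The sketch below assumes 1 to 5 ordinal scales for both dimensions; the scales and the example risks are illustrative, not prescribed by the text.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain), an assumed scale
    impact: int      # 1 (negligible) to 5 (severe), an assumed scale

    @property
    def score(self):
        return self.likelihood * self.impact

def prioritize(risks):
    """Order risks from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)

# Hypothetical entries for illustration.
risks = [
    Risk("training data bias", likelihood=4, impact=4),
    Risk("model drift", likelihood=5, impact=2),
    Risk("adversarial attack", likelihood=2, impact=5),
]
ranked = prioritize(risks)
for r in ranked:
    print(r.name, r.score)
```

Because `sorted` is stable, risks with equal scores keep their original order, so a tie-breaking rule (for example, impact first) may be worth adding in practice.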

Regulatory and Compliance Risk Landscape

The regulatory environment surrounding artificial intelligence continues to evolve rapidly, creating ongoing compliance risks for organizations developing and deploying AI systems across different jurisdictions and industry sectors. Existing regulations such as GDPR, CCPA, HIPAA, and various financial services regulations often apply to AI systems in complex ways that may not be immediately apparent to development teams focused primarily on technical considerations. The principle of privacy by design requires that data protection measures be integrated into AI systems from the earliest stages of development, while right-to-explanation requirements may necessitate the use of interpretable models or the development of sophisticated explanation systems for black-box algorithms.

Emerging AI-specific regulations, such as the EU AI Act and various national AI governance frameworks, introduce new categories of compliance requirements that may significantly impact the design, deployment, and operation of AI systems. These regulations often classify AI systems according to risk levels and impose different requirements for documentation, testing, human oversight, and transparency based on the potential impact of the system on individuals and society. The global nature of many AI applications means that organizations may need to comply with multiple regulatory frameworks simultaneously, creating complex compliance landscapes that require careful coordination and expertise.

The dynamic nature of AI regulation requires continuous monitoring and adaptation of risk management strategies to maintain compliance across multiple jurisdictions and regulatory frameworks.

Security Vulnerabilities and Threat Modeling

AI systems present unique security challenges that extend traditional cybersecurity concerns into new domains of vulnerability related to model behavior, training data integrity, and adversarial manipulation. Adversarial attacks represent a particularly concerning category of security risk, where malicious actors craft subtle perturbations to input data that can cause models to make incorrect predictions or classifications while remaining imperceptible to human observers. These attacks can have serious consequences in applications such as autonomous vehicles, medical diagnosis, or financial fraud detection, where incorrect model predictions could result in physical harm, misdiagnosis, or financial losses.
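The idea of a small perturbation flipping a prediction can be shown on a linear classifier, where the gradient of the score with respect to the input is simply the weight vector. The sketch below follows the spirit of the fast gradient sign method. The weights, input, and `epsilon` are made-up values, and real attacks on deep models compute gradients numerically rather than reading them off the weights.

```python
def predict(weights, bias, x):
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def perturb(weights, x, label, epsilon):
    """Shift each feature by epsilon in the direction that pushes the score away from `label`."""
    direction = -1 if label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]

# Hypothetical model and input for illustration.
weights, bias = [2.0, -1.0, 0.5], -0.2
x = [0.3, 0.2, 0.1]

original = predict(weights, bias, x)
x_adv = perturb(weights, x, original, epsilon=0.1)
adversarial = predict(weights, bias, x_adv)
print(original, adversarial)
```

No feature moves by more than `epsilon`, yet the predicted class changes, which is why robustness testing typically bounds the perturbation size rather than its visibility.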

Poisoning attacks (often called data poisoning or model poisoning) target the training phase of machine learning systems by introducing malicious data designed to corrupt model behavior in subtle ways that may not be detected during standard testing and validation procedures. These attacks can be particularly devastating because they compromise the fundamental integrity of the model, creating persistent vulnerabilities that may be difficult to detect and remediate. Supply chain attacks represent another emerging threat vector, where compromised training datasets, pre-trained models, or development tools can introduce vulnerabilities that propagate throughout the AI development lifecycle.

The interconnected nature of modern AI systems, which often rely on cloud services, third-party APIs, and shared computing resources, creates additional attack surfaces and dependencies that must be considered in comprehensive security risk assessments. Model extraction attacks can enable adversaries to steal intellectual property by querying deployed models and reconstructing their behavior, while membership inference attacks can reveal whether a specific individual's data was used in training, which can itself be sensitive information.

Ethical Considerations and Bias Mitigation

Ethical risks in AI systems encompass a broad range of concerns related to fairness, transparency, accountability, and societal impact that can have profound implications for individuals, communities, and organizations. Algorithmic bias represents one of the most visible and consequential ethical risks, where AI systems make decisions that systematically disadvantage certain groups or individuals based on protected characteristics such as race, gender, age, or socioeconomic status. These biases can emerge from multiple sources, including biased training data, inappropriate feature selection, or flawed evaluation metrics that fail to account for different performance requirements across demographic groups.
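One common way to quantify the kind of group-level disparity described above is the demographic parity difference: the gap between the highest and lowest rates of positive predictions across groups. The sketch below assumes binary predictions and a single group attribute; the sample data is hypothetical.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(predictions, groups):
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical predictions for two groups of four people each.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(predictions, groups))
print(demographic_parity_difference(predictions, groups))
```

Demographic parity is only one fairness criterion. Others, such as equal error rates across groups, can conflict with it, so the choice of metric is itself a judgment that depends on the application.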

The black-box nature of many machine learning algorithms creates transparency challenges that can undermine trust and accountability in AI decision-making processes. When individuals are unable to understand how AI systems reach decisions that affect them, it becomes difficult to identify errors, appeal unfavorable decisions, or ensure that decision-making processes are fair and consistent. This lack of transparency can be particularly problematic in high-stakes applications such as criminal justice, healthcare, or employment decisions, where the consequences of incorrect or biased decisions can be life-altering.

Societal impact risks extend beyond individual fairness concerns to encompass broader effects on employment, social structures, and power dynamics that may result from widespread AI adoption. The potential for AI systems to automate jobs, amplify existing inequalities, or create new forms of discrimination requires careful consideration during the design and deployment phases of AI projects. These considerations often involve complex tradeoffs between efficiency, fairness, and other competing values that may not have clear technical solutions.

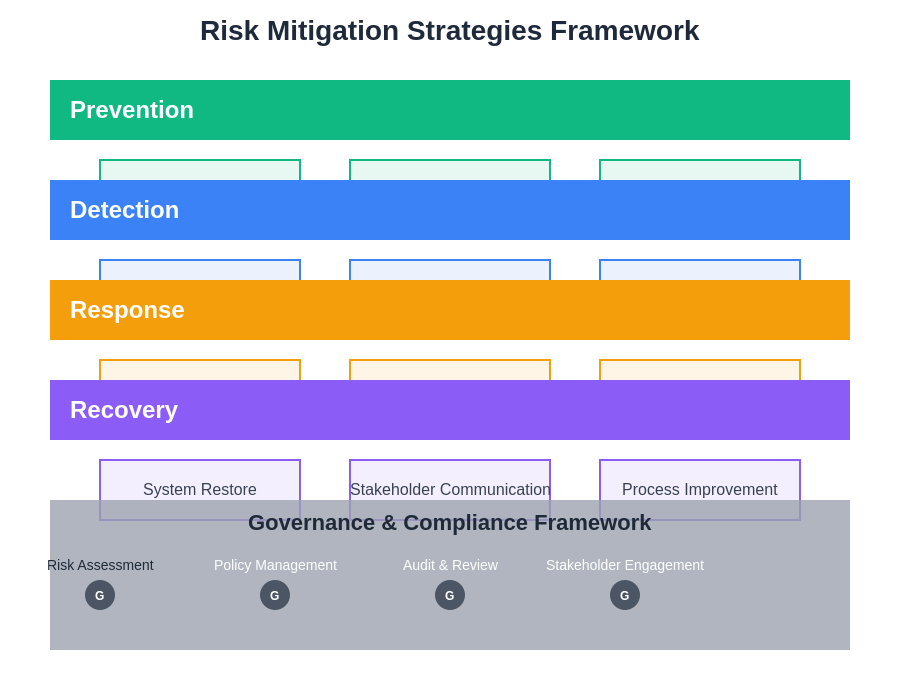

Effective risk mitigation requires a multi-layered approach that addresses technical, operational, and governance aspects of AI systems throughout their lifecycle. This comprehensive framework ensures that risks are identified early, monitored continuously, and addressed through appropriate technical and procedural controls.

Technical Risk Mitigation Strategies

Technical approaches to AI risk mitigation focus on developing and implementing specific methodologies, tools, and practices that directly address the technical vulnerabilities and failure modes inherent in machine learning systems. Robust model validation techniques, including cross-validation, holdout testing, and stress testing with adversarial examples, help identify potential weaknesses before deployment and provide confidence in model performance across diverse scenarios. Ensemble methods, which combine predictions from multiple models, can improve reliability and reduce the impact of individual model failures, while uncertainty quantification techniques help identify situations where model predictions may be unreliable.
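The ensemble idea can be sketched as a majority vote, with the share of models that agree serving as a crude stand-in for uncertainty. The three threshold "models" below are hypothetical stand-ins for independently trained classifiers, and vote agreement is not a calibrated probability.

```python
from collections import Counter

def ensemble_predict(models, x):
    """Return the majority label and the fraction of models that voted for it."""
    votes = Counter(model(x) for model in models)
    label, count = votes.most_common(1)[0]
    return label, count / len(models)

# Hypothetical models: simple thresholds at different cut points.
models = [
    lambda x: int(x > 0.4),
    lambda x: int(x > 0.5),
    lambda x: int(x > 0.6),
]

confident = ensemble_predict(models, 0.9)
uncertain = ensemble_predict(models, 0.55)
print(confident, uncertain)
```

Inputs where agreement is low are natural candidates for human review or for abstaining from an automated decision.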

Differential privacy and federated learning represent important technical approaches to privacy risk mitigation that enable the development of useful machine learning models while preserving individual privacy and data confidentiality. Differential privacy adds calibrated noise so that results reveal little about any single record, while federated learning trains models across decentralized data sources without requiring centralized data collection that could create privacy vulnerabilities. Adversarial training improves robustness by exposing models to adversarial examples during training, and defensive distillation trains a second model on the softened output probabilities of a first model to reduce sensitivity to input perturbations.
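For differential privacy, the classic building block is the Laplace mechanism: add noise with scale equal to the query's sensitivity divided by the privacy parameter epsilon. The sketch below applies it to a counting query, whose sensitivity is 1. The function names are illustrative, and a production system should rely on a vetted library rather than hand-rolled sampling.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two independent exponentials with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Release a count with Laplace noise of scale sensitivity / epsilon."""
    return true_count + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random(0)  # seeded only to make the illustration repeatable
releases = [private_count(100, epsilon=1.0, rng=rng) for _ in range(20000)]
mean_release = sum(releases) / len(releases)
print(round(mean_release, 2))
```

Smaller values of `epsilon` mean more noise and stronger privacy. Each release also consumes privacy budget, so averaging many releases as done here for illustration would weaken the guarantee in a real deployment.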

Explainable AI techniques provide tools for understanding and interpreting model behavior, enabling developers and users to identify potential biases, errors, or unexpected patterns that might indicate underlying problems. These techniques range from simple feature importance measures to attention visualization and counterfactual explanation methods that can provide insights into how models make decisions and what factors are most influential in specific predictions.
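A simple, model-agnostic feature importance measure is permutation importance: shuffle one feature's values and see how much accuracy drops. The sketch below is a minimal single-shuffle version. The toy model and data are hypothetical, and real uses normally average over several shuffles.

```python
import random

def accuracy(model, X, y):
    return sum(model(row) == target for row, target in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_features, rng):
    """Accuracy drop when each feature column is shuffled independently."""
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(n_features):
        column = [row[j] for row in X]
        rng.shuffle(column)
        # Rebuild the rows with only column j replaced by its shuffled values.
        shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
        importances.append(baseline - accuracy(model, shuffled, y))
    return importances

# Hypothetical model that uses feature 0 and ignores feature 1.
model = lambda row: int(row[0] > 0.5)
X = [[i / 20, (i * 7 % 20) / 20] for i in range(20)]
y = [model(row) for row in X]

importances = permutation_importance(model, X, y, n_features=2, rng=random.Random(0))
print(importances)
```

The ignored feature gets an importance of exactly zero because shuffling it cannot change any prediction, while the feature the model depends on shows a positive accuracy drop.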

Organizational Governance and Risk Management Frameworks

Effective AI risk management requires robust organizational governance structures that establish clear roles, responsibilities, and processes for identifying, assessing, and mitigating risks throughout the AI lifecycle. AI governance frameworks should include formal risk assessment procedures that are integrated into project planning and review processes, ensuring that risk considerations are addressed systematically rather than as afterthoughts. These frameworks typically include risk registers that document identified risks, their potential impacts, and mitigation strategies, along with regular review processes that ensure risk assessments remain current and relevant as projects evolve.
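A risk register can start as a small structured record per risk plus a query for entries whose review is overdue. The field names, the `status` values, and the sample entries below are assumptions for illustration; real registers are usually kept in a governance or ticketing tool.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class RegisterEntry:
    risk: str
    owner: str
    mitigation: str
    next_review: date
    status: str = "open"  # assumed values: "open" or "closed"

def overdue_reviews(register, today):
    """Open entries whose scheduled review date has already passed."""
    return [e for e in register if e.status == "open" and e.next_review < today]

# Hypothetical register contents.
register = [
    RegisterEntry("training data bias", "data team", "per-group evaluation", date(2024, 1, 15)),
    RegisterEntry("model drift", "ml ops", "weekly drift report", date(2024, 6, 1)),
    RegisterEntry("vendor model license", "legal", "contract review", date(2024, 1, 1), status="closed"),
]
overdue = overdue_reviews(register, today=date(2024, 3, 1))
print([e.risk for e in overdue])
```

Passing `today` in explicitly, rather than calling `date.today()` inside the function, keeps the check easy to test and to run against historical snapshots.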

Cross-functional AI ethics committees or review boards can provide valuable oversight and guidance for AI projects, bringing together expertise from technical, legal, ethical, and business domains to evaluate potential risks and ensure that diverse perspectives are considered in decision-making processes. These bodies can establish organizational standards and guidelines for AI development, review high-risk projects, and provide guidance on emerging ethical and regulatory issues that may impact AI initiatives.

Risk management processes should include clear escalation procedures for addressing high-risk scenarios or unexpected issues that arise during development or deployment, along with incident response plans that enable rapid identification and remediation of problems when they occur. Documentation standards and audit trails help ensure accountability and enable post-incident analysis that can inform improvements to risk management processes and prevent similar issues in future projects.

Monitoring, Evaluation, and Continuous Improvement

Continuous monitoring and evaluation represent critical components of comprehensive AI risk management, as the dynamic nature of both AI systems and their operating environments means that risk profiles can change significantly over time. Performance monitoring systems should track not only traditional accuracy metrics but also fairness measures, robustness indicators, and other risk-relevant metrics that provide early warning of potential problems. Automated monitoring systems can detect concept drift, data quality degradation, and performance anomalies that might indicate emerging risks or the need for model retraining.
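An automated performance monitor can be as simple as a sliding window over recent labeled outcomes that raises an alert when accuracy falls below a floor. The window size and threshold below are illustrative assumptions, and the same pattern could track fairness or robustness metrics instead of accuracy.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window, min_accuracy):
        self.outcomes = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual):
        """Store one outcome and return True if an alert should fire."""
        self.outcomes.append(prediction == actual)
        return self.alert()

    def alert(self):
        # Stay silent until the window is full to avoid noisy early alerts.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return sum(self.outcomes) / len(self.outcomes) < self.min_accuracy

monitor = RollingAccuracyMonitor(window=4, min_accuracy=0.75)
# Hypothetical stream of (prediction, actual) pairs.
stream = [(1, 1), (1, 1), (1, 1), (0, 1), (0, 1)]
alerts = [monitor.record(p, a) for p, a in stream]
print(alerts)
```

In many deployments true labels arrive late or not at all, so a monitor like this is typically paired with label-free checks on the input distribution.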

A/B testing and canary deployment strategies enable gradual rollout of AI systems with careful monitoring of their impact, allowing organizations to identify and address problems before they affect large user populations. These approaches are particularly valuable for high-risk applications where the consequences of system failures could be severe, providing opportunities to validate system behavior in production environments while limiting exposure to potential risks.
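A canary rollout needs two pieces: a stable rule for deciding which users see the new model, and a rule for deciding when to roll back. The sketch below assumes string user IDs, hash-based bucketing, and a rollback trigger based on the ratio of error rates. The 1.5x ratio is an arbitrary illustrative threshold.

```python
import hashlib

def in_canary(user_id, percent):
    """Deterministically assign roughly `percent` percent of users to the canary."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100 < percent

def should_rollback(canary_errors, canary_total, baseline_errors, baseline_total, max_ratio=1.5):
    """Roll back when the canary error rate exceeds the baseline rate by `max_ratio`."""
    canary_rate = canary_errors / canary_total
    baseline_rate = baseline_errors / baseline_total
    return canary_rate > baseline_rate * max_ratio

# Hypothetical traffic: about 5% of users should land in the canary group.
canary_users = sum(in_canary(f"user-{i}", 5) for i in range(10000))
print(canary_users)
print(should_rollback(30, 1000, 10, 1000))
```

Hashing the user ID, instead of choosing randomly per request, keeps each user on the same model version for the duration of the rollout. A production check would also account for statistical noise when the canary sample is small.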

Regular risk assessment reviews should evaluate the effectiveness of existing mitigation strategies and identify new risks that may have emerged as systems evolve or as the threat landscape changes. These reviews should incorporate lessons learned from incidents, near-misses, and industry best practices to continuously improve risk management processes and ensure that organizations remain prepared for emerging challenges in AI deployment and operation.

The complete lifecycle of AI risk management encompasses prevention, detection, response, and recovery phases that must be carefully coordinated to ensure comprehensive protection against both anticipated and emergent risks throughout the system’s operational lifetime.

Industry-Specific Risk Considerations

Different industry sectors face unique AI risk profiles that reflect their specific regulatory environments, operational constraints, and potential impact on stakeholders, requiring tailored approaches to risk assessment and mitigation. Healthcare AI applications must address risks related to patient safety, medical device regulations, clinical validation requirements, and the potential for diagnostic errors that could have life-threatening consequences. The high-stakes nature of medical decision-making requires particularly rigorous validation procedures, extensive clinical testing, and robust safety mechanisms that can detect and prevent potentially harmful errors.

Financial services applications face risks related to market manipulation, discriminatory lending practices, regulatory compliance, and the potential for algorithmic trading systems to contribute to market instability. The interconnected nature of financial markets means that AI-related failures could have systemic implications that extend far beyond individual institutions, requiring careful coordination with regulators and industry peers to ensure overall market stability.

Autonomous vehicle applications present unique risks related to physical safety, environmental complexity, and the need to make split-second decisions in unpredictable situations that may not have been adequately represented in training data. The multi-stakeholder nature of transportation systems requires careful consideration of interactions with human drivers, pedestrians, and infrastructure systems that may not be designed to accommodate autonomous vehicles.

Future Directions and Emerging Challenges

The rapid pace of AI development continues to create new categories of risks and challenges that may not be adequately addressed by current risk management frameworks and practices. Large language models and generative AI systems present novel risks related to misinformation generation, intellectual property infringement, and the potential for creating convincing but false content that could undermine trust in information systems. These systems also raise questions about the appropriate boundaries for AI capabilities and the need for human oversight in creative and decision-making processes.

The increasing integration of AI systems into critical infrastructure and decision-making processes creates systemic risks that may not be apparent when evaluating individual systems in isolation. The potential for cascading failures, unexpected interactions between AI systems, and the difficulty of maintaining human oversight in complex automated systems require new approaches to risk assessment that consider system-of-systems effects and emergent behaviors.

Quantum computing and advanced AI architectures may introduce new categories of vulnerabilities and attack vectors that current security measures cannot adequately address, requiring proactive research and development of defensive measures before these technologies become widely deployed. The international nature of AI development and deployment also creates challenges related to coordinating risk management approaches across different regulatory frameworks and cultural contexts.

The evolution of AI capabilities toward more general and autonomous systems will likely require fundamental rethinking of current risk management approaches, as traditional methods based on statistical analysis and controlled testing may prove inadequate for systems that can adapt and evolve beyond their original training parameters. This challenge emphasizes the importance of continued research into AI safety, alignment, and control mechanisms that can ensure beneficial outcomes as AI systems become more capable and autonomous.

Disclaimer

This article provides general information about AI risk assessment and mitigation strategies for educational purposes only and does not constitute professional advice. Organizations should conduct their own comprehensive risk assessments with qualified experts and consider their specific operational requirements, regulatory obligations, and risk tolerance when developing AI risk management strategies. The effectiveness of risk mitigation strategies may vary depending on specific use cases, technological implementations, and organizational contexts. Readers should consult with appropriate technical, legal, and compliance professionals before implementing AI risk management frameworks or making decisions based on the information provided in this article.