The rapid advancement of artificial intelligence and robotics has ushered in an era where autonomous systems are becoming integral to our daily lives, from self-driving vehicles navigating city streets to surgical robots performing delicate operations in hospitals. This technological revolution brings with it profound ethical questions that challenge our traditional understanding of responsibility, accountability, and moral agency. As these intelligent machines become increasingly sophisticated and autonomous in their decision-making processes, society must grapple with fundamental questions about safety protocols, legal frameworks, and the moral implications of delegating critical decisions to artificial entities.

The intersection of artificial intelligence and robotics represents one of the most complex ethical frontiers of our time, demanding careful consideration of how we balance technological progress with human safety, dignity, and moral responsibility.

The Foundations of AI Robot Ethics

The ethical framework surrounding artificial intelligence and robotics extends far beyond simple programming guidelines, encompassing fundamental questions about the nature of moral agency, the distribution of responsibility, and the preservation of human values in an increasingly automated world. As autonomous systems become more sophisticated, they begin to exhibit behaviors that appear to involve decision-making, judgment, and even creativity, challenging our traditional notions of what it means to be a moral agent and how we assign responsibility for the consequences of actions taken by non-human entities.

The development of ethical AI systems requires a multidisciplinary approach that draws from philosophy, law, computer science, psychology, and sociology to create comprehensive frameworks that can guide the design, deployment, and governance of autonomous systems. These frameworks must address not only the immediate safety concerns associated with robotic systems but also the broader implications of creating machines that can operate independently and make decisions that significantly impact human lives and societal structures.

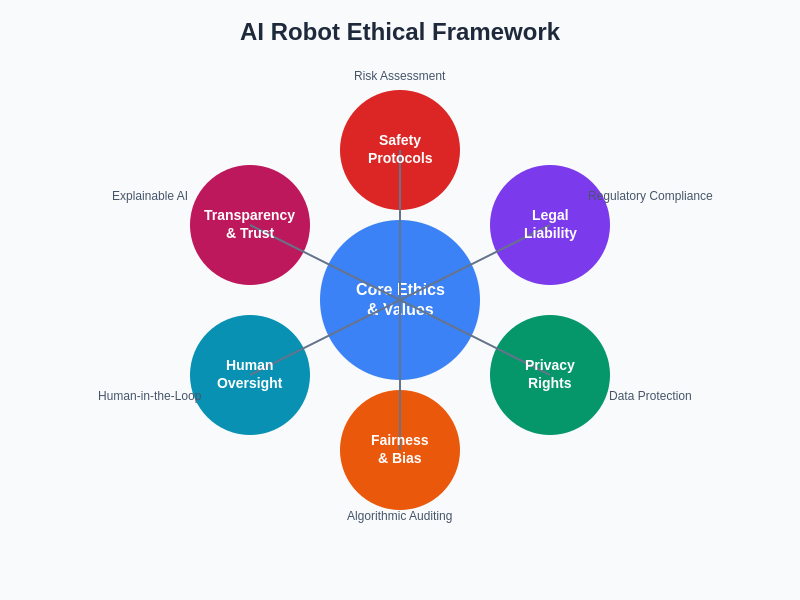

The comprehensive ethical framework for AI robots encompasses multiple interconnected principles that must work in harmony to ensure responsible development and deployment. At the core lie fundamental ethical values, surrounded by specific implementation areas: safety protocols, legal liability, privacy rights, fairness considerations, human oversight mechanisms, and transparency requirements.

The complexity of AI robot ethics is further compounded by the fact that these systems operate in dynamic, unpredictable environments where the consequences of their actions may not be immediately apparent or fully understood. Unlike traditional machines that operate within clearly defined parameters, autonomous AI systems must navigate ambiguous situations, make trade-offs between competing values, and adapt to changing circumstances in ways that may not align perfectly with human expectations or values.

Safety Protocols and Risk Management

The implementation of robust safety protocols in autonomous systems represents one of the most critical aspects of AI robot ethics, requiring comprehensive risk assessment methodologies that can anticipate and mitigate potential hazards before they materialize into real-world consequences. These safety frameworks must account for the unpredictable nature of AI decision-making processes, the complexity of real-world environments, and the potential for emergent behaviors that may not have been anticipated during the design phase.

Modern autonomous systems employ multiple layers of safety mechanisms, including redundant sensors, fail-safe protocols, and human oversight systems that can intervene when necessary. However, the challenge lies in creating safety protocols that can adapt to novel situations and unexpected scenarios without compromising the system’s ability to perform its intended functions effectively. This requires a delicate balance between safety and functionality that must be carefully calibrated for each specific application and operating environment.
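The layering described above can be illustrated with a minimal sketch. It assumes three redundant distance sensors, a median vote to discard a faulty reading, and a fail-safe that stops the system and alerts a human operator when the sensors disagree. The function names, thresholds, and action labels are hypothetical and chosen only for illustration; they are not taken from any particular product or standard.

```python
from statistics import median

def fuse_distance_readings(readings, max_spread=0.5, min_valid=2):
    """Median-vote over redundant sensors (hypothetical helper).

    Returns a fused distance in metres, or None when too few sensors
    agree, which the caller treats as a fail-safe condition.
    """
    # Drop sensors that reported no value at all.
    valid = [r for r in readings if r is not None]
    if len(valid) < min_valid:
        return None
    mid = median(valid)
    # Keep only readings close to the median, discarding outliers.
    agreeing = [r for r in valid if abs(r - mid) <= max_spread]
    if len(agreeing) < min_valid:
        return None
    return median(agreeing)

def choose_action(readings, stop_distance=2.0):
    """Pick an action; the thresholds here are illustrative assumptions."""
    fused = fuse_distance_readings(readings)
    if fused is None:
        # Fail-safe layer: stop and hand control to a human overseer.
        return "SAFE_STOP_AND_ALERT_OPERATOR"
    if fused < stop_distance:
        return "BRAKE"
    return "PROCEED"

print(choose_action([10.0, 10.1, 50.0]))  # one outlier is outvoted
print(choose_action([1.0, 9.0, None]))    # sensors disagree, so fail safe
```

The design choice worth noting is that disagreement is treated as a reason to stop rather than a reason to guess, which trades some availability for safety. How that trade-off should be set depends on the application.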

The development of effective safety protocols requires ongoing collaboration between technologists, ethicists, and regulatory bodies to ensure that autonomous systems can operate safely while continuing to provide valuable services to society.

The challenge of ensuring safety in autonomous systems is further complicated by the fact that these systems must operate in environments where they interact with humans who may not understand their capabilities or limitations. This requires the development of intuitive interfaces and communication protocols that allow humans to effectively collaborate with and supervise autonomous systems while maintaining appropriate levels of trust and situational awareness.

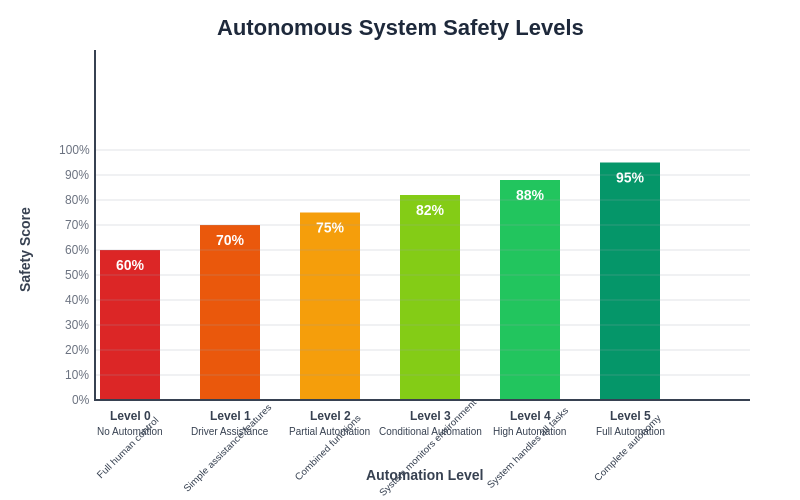

The progression of autonomous system capabilities from basic driver assistance to full automation suggests a correlation between increasing autonomy and improved safety performance. However, each level of automation introduces unique challenges and requires specific safety protocols, regulatory frameworks, and ethical considerations to ensure responsible deployment and operation.

Legal Liability and Accountability Frameworks

The question of legal liability in autonomous systems represents one of the most complex challenges facing legal systems worldwide, as traditional notions of responsibility and accountability must be reconsidered in light of machines that can make independent decisions with significant consequences. Current legal frameworks were developed with the assumption that all actions could ultimately be traced back to human decision-makers, but autonomous systems challenge this assumption by creating scenarios where the causal chain between human intention and machine action becomes increasingly attenuated.

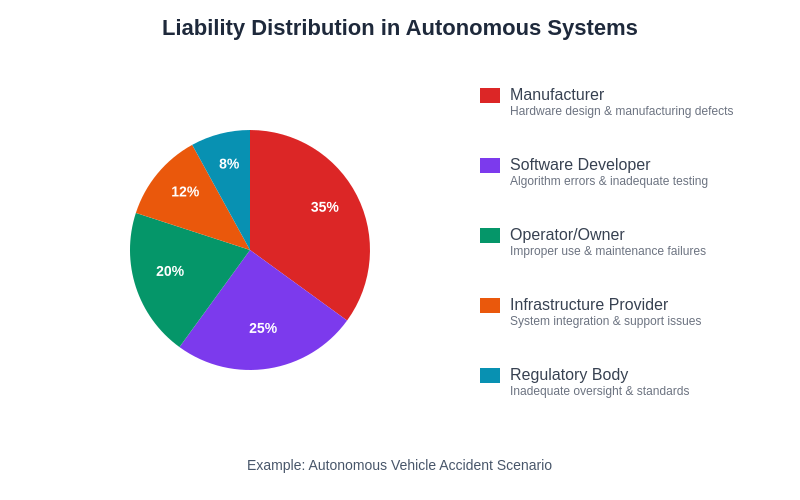

The development of appropriate liability frameworks requires careful consideration of multiple stakeholders, including manufacturers, programmers, operators, and users of autonomous systems, each of whom may bear different degrees of responsibility depending on the specific circumstances of an incident. Legal scholars and practitioners are exploring various models for distributing liability, including strict liability regimes that hold manufacturers responsible regardless of fault, negligence-based systems that focus on whether appropriate care was taken in design and deployment, and insurance-based approaches that socialize the risks associated with autonomous systems.

The distribution of liability among different stakeholders in autonomous systems reflects the complex web of responsibility that emerges when multiple parties contribute to the development, deployment, and operation of these technologies. Manufacturers typically bear the largest share of responsibility for hardware and design defects, while software developers, operators, infrastructure providers, and regulatory bodies each contribute to the overall safety and reliability of autonomous systems.

The complexity of determining liability in autonomous systems is compounded by the fact that these systems often operate using machine learning algorithms that may be opaque or difficult to interpret, making it challenging to determine exactly why a particular decision was made or whether that decision was reasonable under the circumstances. This has led to increased interest in explainable AI systems that can provide clear reasoning for their decisions, as well as audit trails that can help reconstruct the decision-making process in the event of an incident.
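One way to picture an audit trail of this kind is a tamper-evident decision log, sketched below under stated assumptions. Each entry stores the inputs, the decision, and a short rationale, and is chained to the previous entry by a SHA-256 hash so that later edits can be detected. The class name `DecisionAuditLog` and its fields are hypothetical, and a real deployment would also need timestamps, secure storage, and access controls, which are omitted here.

```python
import hashlib
import json

class DecisionAuditLog:
    """Append-only, hash-chained record of decisions (illustrative only)."""

    GENESIS = "0" * 64  # placeholder hash preceding the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body):
        # Serialize with sorted keys so the hash is reproducible.
        encoded = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def record(self, inputs, decision, rationale):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "inputs": inputs,
            "decision": decision,
            "rationale": rationale,
            "prev_hash": prev_hash,
        }
        self.entries.append({**body, "hash": self._digest(body)})

    def verify(self):
        """Return True if no entry was altered or reordered after recording."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

log = DecisionAuditLog()
log.record({"obstacle_m": 1.2}, "BRAKE", "obstacle closer than 2 m")
log.record({"obstacle_m": 8.0}, "PROCEED", "path clear")
print(log.verify())
```

A log like this does not explain why a model produced a decision. It only preserves what the system saw and did, which is the raw material an investigator or an explainability tool would need after an incident.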

International coordination on liability frameworks is essential given that autonomous systems often operate across jurisdictional boundaries and may be developed in one country, manufactured in another, and deployed in a third. This requires the development of harmonized standards and legal principles that can provide clarity and consistency for all stakeholders while respecting the sovereignty and legal traditions of different nations.

Moral Agency and Decision-Making in Machines

The attribution of moral agency to artificial systems raises profound philosophical questions about the nature of consciousness, intentionality, and moral responsibility that have implications far beyond the technical specifications of robotic systems. As autonomous systems become more sophisticated in their decision-making capabilities, they begin to exhibit behaviors that appear to involve moral reasoning, weighing competing values, and making judgments about appropriate courses of action in complex ethical scenarios.

The question of whether machines can truly be moral agents or whether they are simply sophisticated tools that implement human moral judgments has significant implications for how we structure accountability frameworks and design ethical decision-making systems. Some philosophers argue that moral agency requires consciousness, intentionality, and the ability to understand the moral significance of one’s actions, qualities that current AI systems may lack despite their sophisticated behavioral capabilities.

However, the practical implications of moral agency in machines may be less important than the functional question of how we can design systems that consistently make decisions aligned with human values and ethical principles. This requires the development of robust value alignment techniques that can ensure autonomous systems pursue objectives that are compatible with human welfare and moral intuitions, even as they operate in novel situations not explicitly anticipated by their designers.

The challenge of implementing moral decision-making in machines is further complicated by the fact that human moral intuitions are often inconsistent, culturally dependent, and context-sensitive in ways that may be difficult to codify in algorithmic form. This requires the development of flexible ethical frameworks that can adapt to different cultural contexts and moral traditions while maintaining core principles of human dignity and welfare.

Human-Robot Interaction and Trust

The successful integration of autonomous systems into human society depends critically on the development of appropriate trust relationships between humans and machines, requiring careful attention to transparency, predictability, and the communication of system capabilities and limitations. Trust in autonomous systems is a complex phenomenon that depends not only on the technical reliability of these systems but also on human psychological factors, cultural attitudes toward technology, and social norms surrounding automation and delegation of authority.

Building appropriate trust relationships requires autonomous systems to be designed with human psychology and social dynamics in mind, incorporating features that allow humans to develop accurate mental models of system capabilities and limitations. This includes providing clear feedback about system status and intentions, maintaining consistent behavior patterns that allow humans to predict system responses, and implementing graceful degradation protocols that maintain human confidence even when systems encounter unexpected situations.

The development of effective human-robot interaction protocols is essential for ensuring that these systems can be successfully integrated into existing social and organizational structures.

The challenge of maintaining appropriate trust levels is particularly acute in high-stakes applications where over-reliance on autonomous systems could lead to dangerous situations, while under-reliance could prevent these systems from providing their intended benefits. This requires careful calibration of trust through training, experience, and ongoing feedback mechanisms that help users develop realistic expectations about system performance and reliability.

Privacy and Data Protection in Autonomous Systems

The operation of autonomous systems typically requires the collection, processing, and analysis of vast amounts of data about their operating environment, including information about human behavior, preferences, and activities that may raise significant privacy concerns. These systems often employ sensors, cameras, microphones, and other data collection devices that can capture detailed information about individuals and their activities, creating potential for surveillance and privacy violations that must be carefully managed through appropriate technical and legal safeguards.

The privacy implications of autonomous systems are particularly complex because these systems often need to collect and process personal data in real-time to function effectively, making it difficult to implement traditional privacy protection measures such as data minimization and purpose limitation. This requires the development of privacy-preserving technologies such as differential privacy, federated learning, and edge computing that can enable autonomous systems to function effectively while minimizing the collection and retention of personally identifiable information.
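To make one of these techniques concrete, the sketch below applies the Laplace mechanism, a standard building block of differential privacy, to a simple counting query. It assumes a query whose result changes by at most 1 when one person's record is added or removed (sensitivity 1) and a privacy budget `epsilon` chosen by the operator. The function names and the example data are hypothetical.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two independent exponential draws with mean
    # `scale` follows a Laplace distribution centred on 0 with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of items matching `predicate`.

    A counting query has sensitivity 1, so Laplace noise with scale
    1 / epsilon gives epsilon-differential privacy for this one query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical example: how many detected pedestrians were near a crossing?
near_crossing = [True] * 30 + [False] * 70
print(private_count(near_crossing, lambda v: v, epsilon=1.0))
```

Smaller values of `epsilon` add more noise and give stronger privacy, at the cost of less accurate statistics. Repeated queries consume the privacy budget cumulatively, which this sketch does not track.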

The global nature of many autonomous systems also raises complex questions about cross-border data transfers and the application of different privacy regulations and standards in different jurisdictions. Companies developing and deploying autonomous systems must navigate a complex patchwork of privacy laws and regulations while ensuring that their systems can operate effectively across different legal and cultural contexts.

The challenge of protecting privacy in autonomous systems is further complicated by the fact that these systems may generate insights and inferences about individuals that go beyond the data that was explicitly collected, potentially revealing sensitive information about personal characteristics, behaviors, and preferences that individuals did not consent to share.

Bias and Fairness in Autonomous Decision-Making

The potential for bias and discrimination in autonomous systems represents one of the most pressing challenges in AI robot ethics, as these systems may perpetuate or amplify existing social inequalities through their decision-making processes. Bias can enter autonomous systems through multiple pathways, including biased training data, biased algorithms, biased evaluation metrics, and biased deployment practices that may result in unfair treatment of certain individuals or groups.

Addressing bias in autonomous systems requires comprehensive approaches that cover all stages of the system development lifecycle, from data collection and algorithm design to testing, deployment, and ongoing monitoring. This includes curating diverse and representative datasets, developing bias-aware algorithms, establishing fairness metrics and evaluation procedures, and creating governance structures that can identify and address bias issues as they emerge.

The challenge of ensuring fairness in autonomous systems is complicated by the fact that different notions of fairness may conflict with each other, requiring difficult trade-offs between competing values and objectives. For example, ensuring equal treatment across different groups may conflict with maximizing overall system performance, while ensuring equal outcomes may require treating different groups differently in ways that could be seen as discriminatory.
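A small worked example can show how two common fairness notions pull against each other. The sketch below compares demographic parity (equal selection rates across groups) with equal opportunity (equal true positive rates across groups) on made-up data in which the two groups have different base rates. The data, group labels, and function names are illustrative assumptions, not measurements from any real system.

```python
def selection_rate(preds):
    """Fraction of individuals who receive the positive decision."""
    return sum(preds) / len(preds)

def true_positive_rate(preds, labels):
    """Fraction of truly qualified individuals (label 1) who are selected."""
    selected_among_qualified = [p for p, y in zip(preds, labels) if y == 1]
    return sum(selected_among_qualified) / len(selected_among_qualified)

def fairness_gaps(preds_a, labels_a, preds_b, labels_b):
    """Return (demographic parity gap, equal opportunity gap)."""
    parity_gap = abs(selection_rate(preds_a) - selection_rate(preds_b))
    opportunity_gap = abs(
        true_positive_rate(preds_a, labels_a)
        - true_positive_rate(preds_b, labels_b)
    )
    return parity_gap, opportunity_gap

# Made-up ground truth: half of group A qualifies, a quarter of group B.
labels_a = [1, 1, 1, 1, 0, 0, 0, 0]
labels_b = [1, 1, 0, 0, 0, 0, 0, 0]

# A perfectly accurate classifier predicts the labels themselves.
accurate = fairness_gaps(labels_a, labels_a, labels_b, labels_b)

# Forcing equal selection rates by selecting fewer people in group A.
parity_preds_a = [1, 1, 0, 0, 0, 0, 0, 0]
equalized = fairness_gaps(parity_preds_a, labels_a, labels_b, labels_b)

print(accurate)   # (0.25, 0.0): equal opportunity holds, parity does not
print(equalized)  # (0.0, 0.5): parity holds, equal opportunity does not
```

In this toy setting, closing one gap opens the other because the groups differ in base rate. Which gap matters more is a value judgment that the metrics themselves cannot settle.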

The dynamic nature of autonomous systems also means that bias issues may emerge over time as these systems adapt to changing environments and learn from new data, requiring ongoing monitoring and adjustment to maintain fairness and prevent the emergence of new forms of discrimination.

Environmental and Sustainability Considerations

The deployment of autonomous systems at scale raises important questions about environmental impact and sustainability that must be considered as part of any comprehensive ethical framework for AI robots. These systems often demand significant computational resources, energy, and material inputs that may have substantial environmental consequences, particularly as they become more widespread and sophisticated.

The environmental impact of autonomous systems extends beyond their direct energy consumption to include the environmental costs of manufacturing, deployment, maintenance, and eventual disposal of these systems. This includes the extraction of rare earth materials for sensors and processors, the carbon footprint of data centers that support cloud-based AI services, and the electronic waste generated when systems reach the end of their operational lifecycle.

However, autonomous systems also have the potential to reduce environmental impact in many applications by optimizing resource utilization, reducing waste, and enabling more efficient transportation and logistics systems. For example, autonomous vehicles could reduce traffic congestion and fuel consumption through optimized routing and coordination, while autonomous agricultural systems could reduce pesticide and fertilizer usage through precision application techniques.

The challenge lies in ensuring that the environmental benefits of autonomous systems outweigh their environmental costs, requiring careful lifecycle assessment and the development of sustainable design principles that prioritize energy efficiency, material conservation, and end-of-life recyclability.

Global Governance and International Coordination

The global nature of AI and robotics development requires international coordination and cooperation to ensure that autonomous systems are developed and deployed in ways that respect human rights, promote safety, and advance shared human values. The development of effective governance frameworks for autonomous systems must address the challenge of regulating technologies that transcend national boundaries while respecting the sovereignty and legal traditions of different nations.

International organizations, governments, and multi-stakeholder initiatives are working to develop common standards, principles, and best practices for AI robot ethics that can provide guidance for developers, deployers, and users of autonomous systems worldwide. These efforts include the development of technical standards for safety and interoperability, ethical guidelines for AI development, and regulatory frameworks that can address the unique challenges posed by autonomous systems.

The challenge of international coordination is complicated by different cultural values, legal traditions, and economic interests that may lead to divergent approaches to AI robot ethics in different regions. This requires ongoing dialogue and collaboration to identify common ground while respecting legitimate differences in values and priorities.

The rapid pace of technological development also means that governance frameworks must be adaptive and flexible enough to address emerging challenges and opportunities as they arise, while providing sufficient stability and predictability to support innovation and investment in beneficial AI technologies.

Future Directions and Emerging Challenges

The field of AI robot ethics continues to evolve rapidly as new technologies emerge and our understanding of the implications of autonomous systems deepens. Emerging technologies such as artificial general intelligence, quantum computing, and brain-computer interfaces are likely to introduce new ethical challenges that will require novel approaches and frameworks to address effectively.

The increasing sophistication of autonomous systems is also likely to blur the boundaries between human and machine decision-making in ways that may require fundamental reconceptualization of concepts such as agency, responsibility, and moral status. This may include the development of hybrid human-machine systems that combine human judgment with machine capabilities in new ways, as well as the potential emergence of artificial systems that may merit moral consideration in their own right.

The democratization of AI and robotics technologies is also likely to increase the number and diversity of stakeholders involved in the development and deployment of autonomous systems, requiring more inclusive and participatory approaches to AI robot ethics that can accommodate a broader range of perspectives and values. This includes ensuring that the benefits of autonomous systems are distributed fairly across society and that vulnerable populations are protected from potential harms.

The long-term trajectory of AI and robotics development may ultimately require us to reconsider fundamental assumptions about the relationship between humans and technology, the nature of intelligence and consciousness, and the goals and values that should guide technological development. This requires ongoing engagement between technologists, ethicists, policymakers, and society as a whole to ensure that the development of autonomous systems serves human flourishing and promotes the common good.

Conclusion

The ethical landscape surrounding AI robots and autonomous systems represents one of the most complex and consequential challenges of our technological age, requiring a careful balance between promoting innovation and protecting human values, safety, and dignity. As these systems become increasingly sophisticated and ubiquitous, society must develop robust frameworks for addressing questions of safety, liability, fairness, privacy, and environmental impact while fostering continued technological advancement that benefits humanity as a whole.

The path forward requires ongoing collaboration between technologists, ethicists, policymakers, and civil society to ensure that autonomous systems are developed and deployed in ways that reflect our highest aspirations and values. This includes continued research into technical approaches for ensuring safety and fairness, the development of appropriate legal and regulatory frameworks, and ongoing public engagement to ensure that the benefits and risks of autonomous systems are understood and appropriately managed by all stakeholders.

The decisions we make today about AI robot ethics will have profound implications for the future of human-machine interaction and the kind of society we create together. By taking a proactive and thoughtful approach to these challenges, we can help ensure that autonomous systems serve as tools for human flourishing rather than sources of harm or inequality, creating a future where technology and humanity can coexist in ways that promote safety, justice, and the common good.

Disclaimer

This article is for informational purposes only and does not constitute legal, ethical, or professional advice. The views expressed are based on current understanding of AI ethics and autonomous systems. The rapidly evolving nature of AI technology and regulatory frameworks means that specific recommendations and best practices may change over time. Readers should consult with relevant experts and stay current with developments in AI ethics, safety protocols, and legal frameworks when making decisions about autonomous systems. The implementation of AI robot ethics requires careful consideration of specific contexts, applications, and stakeholder needs.