The rapid adoption of artificial intelligence and machine learning technologies across industries has introduced unprecedented security challenges that traditional cybersecurity approaches struggle to address effectively. As organizations increasingly rely on ML applications for critical business processes, the need for specialized security testing methodologies has become paramount, requiring a fundamental shift in how we approach vulnerability assessment and penetration testing for intelligent systems.

The unique characteristics of machine learning systems, including their reliance on training data, complex algorithmic decision-making processes, and dynamic behavioral patterns, present novel attack vectors that demand innovative security testing approaches specifically tailored to identify and mitigate AI-specific vulnerabilities.

Understanding the AI Security Landscape

Machine learning applications present a fundamentally different security paradigm compared to traditional software systems, introducing vulnerabilities that span across data integrity, model robustness, privacy preservation, and algorithmic fairness. Unlike conventional applications where security flaws typically manifest as coding errors or configuration mistakes, AI systems face threats that can exploit the statistical nature of machine learning algorithms, manipulate training data, or leverage the opacity of complex neural networks to achieve malicious objectives.

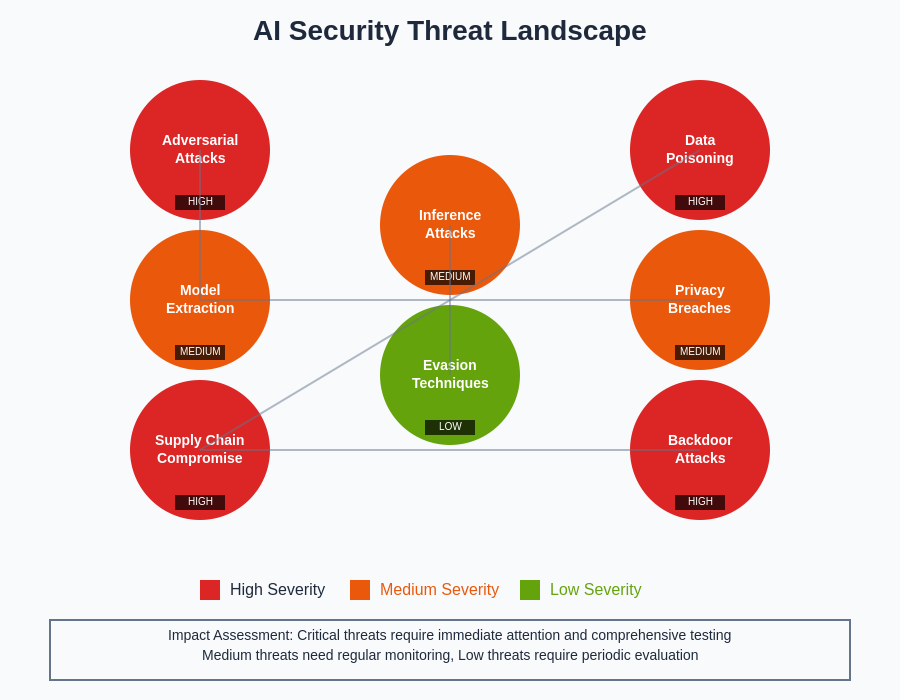

The security testing of ML applications requires understanding multiple layers of potential vulnerabilities, including data poisoning attacks that corrupt training datasets, adversarial examples that fool trained models into making incorrect predictions, model inversion attacks that extract sensitive information from trained models, and membership inference attacks that determine whether specific data points were used in model training. These sophisticated attack vectors necessitate equally sophisticated defensive strategies and testing methodologies.

The complexity of AI security extends beyond technical vulnerabilities to encompass ethical considerations, regulatory compliance requirements, and the potential for unintended bias amplification that can result in discriminatory outcomes. Security testing frameworks for ML applications must therefore address not only traditional security concerns but also the unique challenges posed by algorithmic decision-making systems that operate with varying degrees of autonomy and interpretability.

Adversarial Attack Vectors and Vulnerability Assessment

Adversarial attacks represent one of the most significant security challenges facing machine learning applications, exploiting the sensitivity of neural networks to small changes in their input data. These attacks use carefully crafted input modifications, often imperceptible to human observers, that cause ML models to produce incorrect outputs, potentially leading to security breaches, financial losses, or safety incidents in critical applications.

The landscape of adversarial attacks encompasses multiple categories, including evasion attacks that occur during model inference, poisoning attacks that target the training process, and extraction attacks that attempt to steal model parameters or training data. Understanding these attack vectors is essential for developing comprehensive security testing protocols that can identify vulnerabilities across the entire ML application lifecycle from data collection and preprocessing through model deployment and ongoing operation.

The dynamic nature of adversarial threats requires continuous monitoring and adaptive security measures that can evolve alongside emerging attack methodologies and defensive techniques.

Penetration testing for adversarial vulnerabilities involves systematic evaluation of model robustness against various attack scenarios, including gradient-based attacks that leverage model gradients to generate adversarial examples, black-box attacks that operate without knowledge of model internals, and transfer attacks that exploit the tendency for adversarial examples to remain effective across different models trained on similar tasks. These testing approaches help identify critical vulnerabilities that could be exploited by malicious actors.
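As an illustration of the gradient-based category, the following is a minimal sketch of a one-step fast gradient sign perturbation against a toy logistic regression model. The weights, bias, input, and `epsilon` value are hypothetical and chosen only to make the effect visible; a real assessment would target the deployed model, typically through an established robustness-testing library.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(value: float) -> int:
    return (value > 0) - (value < 0)


def fgsm_perturb(weights, bias, x, y_true, epsilon):
    """Move each feature by epsilon in the direction that increases the loss.

    For binary cross-entropy with a logistic model, the gradient of the
    loss with respect to feature i is (p - y_true) * w_i.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical toy model and input (assumptions for illustration only).
weights = [2.0, -1.0, 0.5]
bias = 0.0
x = [0.4, 0.2, 0.1]
y = 1  # true label

x_adv = fgsm_perturb(weights, bias, x, y, epsilon=0.3)
print("clean score:      ", round(predict_proba(weights, bias, x), 3))
print("adversarial score:", round(predict_proba(weights, bias, x_adv), 3))
```

In this toy setting the bounded perturbation is enough to push the score across the 0.5 decision threshold. A robustness test would repeat this over many inputs and several `epsilon` budgets and report the fraction of predictions that flip.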

Data Poisoning and Training Security Assessment

Data poisoning represents a particularly insidious threat to machine learning security, as it targets the foundation upon which all ML models are built. These attacks involve the strategic insertion of malicious or corrupted data into training datasets, potentially compromising model integrity from the ground up and creating vulnerabilities that persist throughout the model’s operational lifetime. The subtle nature of data poisoning makes it exceptionally difficult to detect using traditional security monitoring approaches.

Effective security testing for data poisoning vulnerabilities requires comprehensive analysis of data collection pipelines, validation processes, and quality assurance mechanisms. This includes evaluating the integrity of data sources, assessing the effectiveness of data sanitization procedures, and testing the resilience of training processes against various forms of input manipulation. Security assessments must also consider the potential for supply chain attacks that could introduce poisoned data through compromised third-party sources or insider threats.

The complexity of modern ML training pipelines, which often involve multiple data sources, preprocessing steps, and feature engineering processes, creates numerous potential entry points for poisoning attacks. Security testing frameworks must therefore adopt a holistic approach that examines the entire data lifecycle, from initial collection through final model training, identifying vulnerabilities that could allow attackers to influence model behavior through strategic data manipulation.
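One narrow piece of this lifecycle view, checking that records have not been altered between collection and training, can be sketched with a hash manifest. The record layout (`id`, `text`, `label`) and the function names are assumptions made for this example. The check only detects tampering that happens after the manifest is built, so it complements rather than replaces source vetting and statistical outlier analysis.

```python
import hashlib
import json


def record_digest(record: dict) -> str:
    """Stable SHA-256 digest of a record, independent of key order."""
    canonical = json.dumps(record, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def build_manifest(records):
    """Map each record id to its digest at collection time."""
    return {r["id"]: record_digest(r) for r in records}


def find_tampered(records, manifest):
    """Compare records about to be used for training against the manifest."""
    issues = []
    seen = set()
    for r in records:
        rid = r["id"]
        seen.add(rid)
        if rid not in manifest:
            issues.append((rid, "unexpected"))
        elif manifest[rid] != record_digest(r):
            issues.append((rid, "modified"))
    issues.extend((rid, "missing") for rid in manifest if rid not in seen)
    return issues


# Hypothetical data: one label is flipped after collection.
collected = [
    {"id": 1, "text": "good product", "label": 1},
    {"id": 2, "text": "terrible", "label": 0},
]
manifest = build_manifest(collected)
at_training_time = [
    {"id": 1, "text": "good product", "label": 0},  # flipped label
    {"id": 2, "text": "terrible", "label": 0},
]
print(find_tampered(at_training_time, manifest))
```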

Model Extraction and Intellectual Property Protection

Model extraction attacks pose significant threats to organizations that have invested substantial resources in developing proprietary machine learning models. These attacks attempt to reverse-engineer trained models by querying them systematically and using the responses to train surrogate models that approximate the original’s functionality. The success of such attacks can result in intellectual property theft and competitive disadvantage, and the resulting surrogate can be used offline to craft adversarial examples that are more likely to transfer to the original system.

Security testing for model extraction vulnerabilities involves evaluating the information leakage potential of ML APIs, assessing the effectiveness of query limiting mechanisms, and testing the robustness of model protection strategies. This includes analyzing response patterns that could reveal model architecture details, evaluating the effectiveness of differential privacy techniques, and assessing the potential for reconstruction attacks that attempt to recover training data from model responses.

The protection of machine learning intellectual property requires implementation of sophisticated defensive measures that balance security with functionality, including techniques such as output perturbation, query monitoring, and adaptive response strategies that can detect and mitigate extraction attempts. Security testing must validate the effectiveness of these protective mechanisms while ensuring they do not significantly impact legitimate user experiences or model performance.
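Query monitoring, one of the defensive measures mentioned above, might be sketched as a per-client sliding-window budget. The class name, the limits, and the idea of passing timestamps explicitly are all assumptions for illustration. A production system would more likely rely on an API gateway and would combine volume limits with analysis of query patterns.

```python
from collections import defaultdict, deque


class QueryMonitor:
    """Sliding-window query budget per client (illustrative sketch)."""

    def __init__(self, max_queries: int, window_seconds: float):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # client_id -> recent timestamps

    def allow(self, client_id: str, now: float) -> bool:
        """Return True and record the query if the client is within budget."""
        recent = self._history[client_id]
        # Drop timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_queries:
            return False
        recent.append(now)
        return True


# Hypothetical limits: at most 3 queries per 10 seconds per client.
monitor = QueryMonitor(max_queries=3, window_seconds=10.0)
for t in [0.0, 1.0, 2.0, 3.0, 10.5]:
    print(t, monitor.allow("client-a", now=t))
```

A security test of such a control would check that the budget holds under bursts, that it cannot be bypassed by rotating client identifiers, and that legitimate usage patterns are not blocked.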

Privacy-Preserving ML and Inference Security

Privacy considerations in machine learning extend beyond traditional data protection concerns to encompass the unique risks associated with inference-time privacy breaches and the potential for sensitive information extraction from trained models. Machine learning applications often process highly sensitive personal data, and the statistical nature of ML algorithms can inadvertently reveal information about individual data points or population characteristics that should remain confidential.

Security testing for privacy-preserving ML applications must evaluate the effectiveness of privacy protection mechanisms such as differential privacy, federated learning protocols, and secure multi-party computation techniques. This includes assessing the privacy-utility tradeoffs inherent in these approaches, testing the robustness of privacy guarantees under various attack scenarios, and validating the implementation correctness of complex cryptographic protocols used to protect sensitive data during processing.
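To make the privacy-utility tradeoff concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. The function names and the sample data are assumptions for this example. The sketch uses Python's general-purpose random generator, which is not suitable for production differential privacy, where floating-point and randomness issues need dedicated handling.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Epsilon-differentially-private count of values matching predicate.

    A counting query changes by at most 1 when one record is added or
    removed (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: how many people are aged 40 or over?
rng = random.Random(42)
ages = [23, 35, 41, 52, 67, 29]
for eps in (0.1, 1.0, 10.0):
    noisy = private_count(ages, lambda a: a >= 40, eps, rng)
    print(f"epsilon={eps}: noisy count = {noisy:.2f}")
```

Smaller `epsilon` values give stronger privacy but noisier answers. Testing an implementation like this typically means checking both sides: that the noise scale matches the claimed guarantee and that the answers remain useful for the intended analysis.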

The rapidly evolving landscape of privacy-preserving machine learning technologies demands continuous updating of security testing methodologies to address new privacy risks and evaluate novel protective mechanisms.

The testing of privacy-preserving ML systems requires specialized expertise in both cryptographic protocols and statistical privacy analysis, as vulnerabilities can manifest through subtle information leakage channels that may not be apparent through conventional security testing approaches. Comprehensive privacy testing must therefore combine theoretical analysis with practical experimentation to identify potential privacy breaches across different attack models and adversarial capabilities.
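One practical experiment of this kind is a loss-threshold membership inference test: if a model's loss on training records is systematically lower than on unseen records, an attacker can guess membership from the loss alone. The sketch below assumes per-example losses have already been computed for a known-member set and a known-non-member set. The function name and the numbers are hypothetical.

```python
def membership_advantage(member_losses, nonmember_losses):
    """Best (true positive rate - false positive rate) over all thresholds.

    The attack predicts "member" when the loss is at or below a threshold.
    An advantage near 0 means losses reveal little about membership; an
    advantage near 1 means membership is almost fully exposed.
    """
    best = 0.0
    for threshold in sorted(set(member_losses) | set(nonmember_losses)):
        tpr = sum(l <= threshold for l in member_losses) / len(member_losses)
        fpr = sum(l <= threshold for l in nonmember_losses) / len(nonmember_losses)
        best = max(best, tpr - fpr)
    return best


# Hypothetical per-example losses from an overfitted model.
train_losses = [0.05, 0.10, 0.08, 0.30, 0.12]
holdout_losses = [0.60, 0.25, 0.90, 0.75, 0.40]
print("attack advantage:", membership_advantage(train_losses, holdout_losses))
```

Because the threshold is chosen with knowledge of both sets, this gives an optimistic bound for the attacker, which is a reasonable choice for a conservative privacy test.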

Automated Security Testing Frameworks for AI Systems

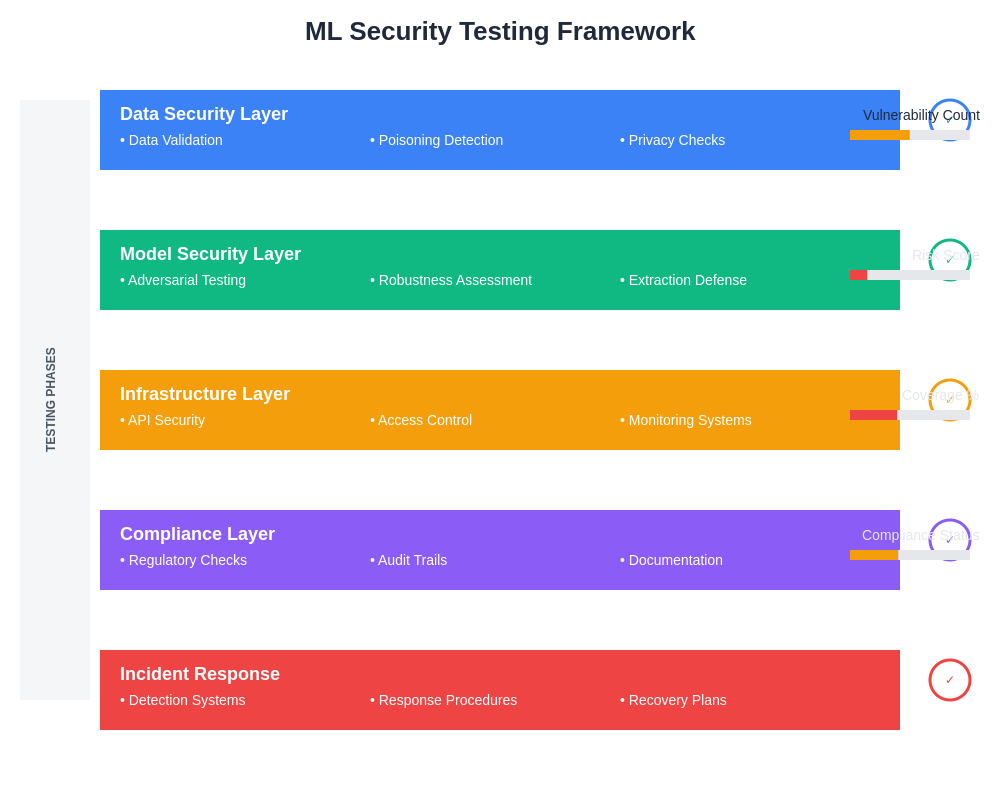

The complexity and scale of modern machine learning deployments necessitate automated security testing frameworks that can systematically evaluate AI systems for vulnerabilities across multiple threat vectors. Traditional manual penetration testing approaches, while valuable, are insufficient for addressing the dynamic and multifaceted security challenges posed by ML applications that may process millions of requests daily and continuously adapt their behavior based on new data.

Automated security testing frameworks for AI systems must incorporate specialized techniques for generating adversarial test cases, evaluating model robustness across diverse input scenarios, and monitoring for indicators of security compromise during ongoing operations. These frameworks typically combine static analysis of model architectures and training procedures with dynamic testing that evaluates runtime behavior under various attack conditions and stress scenarios.

The development of effective automated testing frameworks requires integration of multiple security testing methodologies, including fuzzing techniques adapted for ML inputs, property-based testing that verifies security-relevant model behaviors, and continuous monitoring systems that can detect anomalous patterns indicative of ongoing attacks. These frameworks must also provide comprehensive reporting capabilities that help security teams understand identified vulnerabilities and prioritize remediation efforts.
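As a small example of fuzzing adapted to ML inputs, the sketch below checks a stability property: small random perturbations of an input should not change the predicted label. The `predict` callable, the toy threshold model, and the perturbation budget are assumptions for illustration. Random fuzzing of this kind finds far fewer failures than gradient-based search, so a clean result is weak evidence of robustness.

```python
import random


def fuzz_stability(predict, x, epsilon, trials, rng):
    """Return perturbed inputs whose predicted label differs from the baseline.

    Each feature is shifted by an independent uniform value in
    [-epsilon, epsilon].
    """
    baseline = predict(x)
    failures = []
    for _ in range(trials):
        candidate = [xi + rng.uniform(-epsilon, epsilon) for xi in x]
        if predict(candidate) != baseline:
            failures.append(candidate)
    return failures


# Hypothetical toy model: class 1 when the feature sum exceeds 1.0.
def toy_predict(features):
    return int(sum(features) > 1.0)


rng = random.Random(7)
far_from_boundary = fuzz_stability(toy_predict, [2.0, 2.0], 0.1, 200, rng)
near_boundary = fuzz_stability(toy_predict, [0.5, 0.51], 0.1, 200, rng)
print("failures far from boundary:", len(far_from_boundary))
print("failures near boundary:    ", len(near_boundary))
```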

Compliance and Regulatory Considerations for AI Security

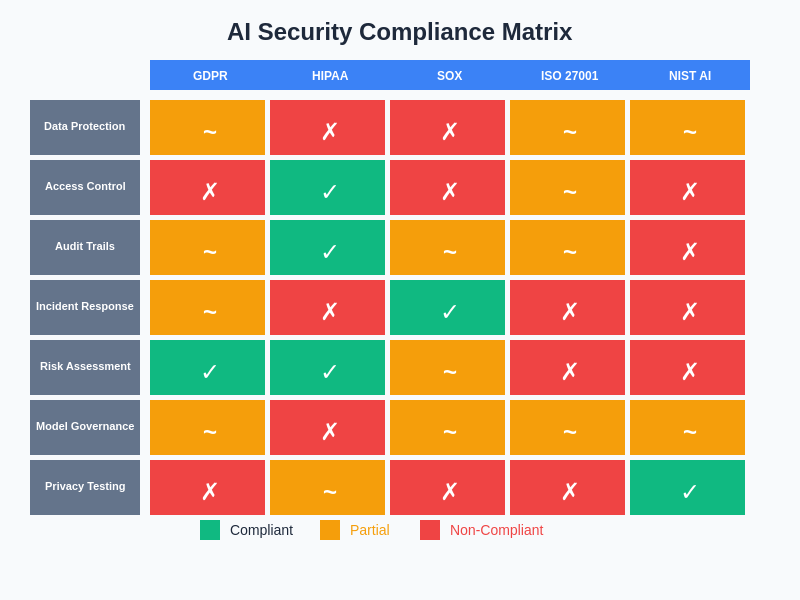

The regulatory landscape surrounding AI security is rapidly evolving, with new frameworks and requirements emerging at both national and international levels that mandate specific security practices for machine learning applications in regulated industries. Organizations deploying AI systems must navigate complex compliance requirements that address not only traditional cybersecurity concerns but also AI-specific risks related to algorithmic bias, transparency, and accountability.

Security testing programs for AI applications must incorporate compliance validation procedures that ensure adherence to relevant regulatory frameworks, industry standards, and organizational policies governing AI deployment. This includes testing for compliance with data protection regulations, evaluating the implementation of required security controls, and documenting security testing procedures to demonstrate due diligence in risk management practices.

The intersection of AI security and regulatory compliance creates unique challenges that require specialized expertise in both technical security assessment and regulatory interpretation. Security testing frameworks must therefore be designed to produce compliance-ready documentation while maintaining the technical rigor necessary to identify genuine security vulnerabilities that could impact organizational risk posture.

Incident Response and Recovery for AI Security Breaches

The unique characteristics of AI security incidents require specialized incident response procedures that address the complex interdependencies between data integrity, model performance, and system security. Unlike traditional security incidents that typically involve clear indicators of compromise and well-defined remediation procedures, AI security breaches may manifest through subtle performance degradations, biased outputs, or privacy violations that can be difficult to detect and attribute to malicious activity.

Effective incident response for AI security requires comprehensive monitoring systems that can detect indicators of compromise across multiple dimensions, including model performance metrics, data quality indicators, and behavioral anomalies that may suggest ongoing attacks. Response procedures must also address the unique challenges of forensic analysis in ML systems, where traditional log analysis may be insufficient to understand the full scope and impact of security incidents.
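One simple behavioral indicator is a shift in the distribution of predicted labels relative to a trusted baseline. The sketch below measures that shift with total variation distance. The function names, the labels, and the 0.2 alert threshold are assumptions for this example, and a shift can also have benign causes such as seasonality, so an alert is a prompt for investigation rather than proof of an attack.

```python
from collections import Counter


def label_distribution(labels):
    """Relative frequency of each predicted label."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    return {label: n / len(labels) for label, n in counts.items()}


def total_variation(p, q):
    """Total variation distance between two discrete distributions."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def drift_alert(baseline_labels, live_labels, threshold=0.2):
    """True when live predictions have drifted beyond the threshold."""
    distance = total_variation(
        label_distribution(baseline_labels), label_distribution(live_labels)
    )
    return distance > threshold


# Hypothetical fraud model: the share of "fraud" predictions jumps.
baseline = ["ok"] * 90 + ["fraud"] * 10
live = ["ok"] * 60 + ["fraud"] * 40
print("drift detected:", drift_alert(baseline, live))
```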

The recovery process for AI security incidents often involves complex decisions about model retraining, data decontamination, and system restoration that require careful balancing of security considerations with business continuity requirements. Organizations must therefore develop specialized incident response playbooks that address AI-specific scenarios while maintaining the rapid response capabilities necessary to minimize the impact of security breaches on critical business operations.

Emerging Threats and Future Defense Strategies

The AI security landscape continues to evolve rapidly, with new attack vectors and defense mechanisms emerging as both attackers and defenders develop increasingly sophisticated techniques. Emerging threats include multi-modal attacks that target AI systems processing multiple data types simultaneously, supply chain attacks that compromise AI development tools and frameworks, and advanced persistent threats specifically designed to maintain long-term access to AI systems while evading detection.

Future defense strategies for AI security will likely incorporate advanced techniques such as adversarial training that improves model robustness through exposure to attack examples, automated defense systems that can adapt to new threats in real time, and collaborative defense networks that enable organizations to share threat intelligence and defense strategies. The development of these advanced defense mechanisms requires ongoing research and experimentation with novel security testing methodologies.

Quantum computing may also shape the future of AI security, although its practical impact remains uncertain. Organizations should begin assessing how quantum-enabled attacks could weaken the cryptographic protections currently applied to training data, model artifacts, and communication channels, while exploring the defensive capabilities offered by quantum-resistant and quantum-enhanced security mechanisms as they mature.

Best Practices for Secure AI Development and Deployment

Implementing robust security practices throughout the AI development lifecycle requires integration of security considerations into every phase of machine learning system development, from initial problem formulation through ongoing operational monitoring. This security-by-design approach ensures that security controls are built into AI systems rather than retrofitted after deployment, reducing the likelihood of vulnerabilities and improving the overall security posture of ML applications.

Key security practices for AI development include implementing secure data handling procedures that protect sensitive information throughout the training process, adopting robust model validation techniques that can identify potential security vulnerabilities before deployment, and establishing comprehensive monitoring systems that can detect and respond to security incidents in operational environments. These practices must be supported by appropriate organizational policies, training programs, and governance structures.

The establishment of security-focused AI development practices requires collaboration between security professionals, data scientists, and software engineers to ensure that security considerations are appropriately balanced with functional requirements and performance objectives. This interdisciplinary approach helps create AI systems that are both secure and effective in achieving their intended business objectives.

Conclusion: Building Resilient AI Security Architectures

The security of artificial intelligence and machine learning applications represents one of the most significant challenges facing organizations in the digital age, requiring innovative approaches that address the unique vulnerabilities inherent in intelligent systems. Effective AI security testing must encompass traditional cybersecurity concerns while addressing novel threats such as adversarial attacks, data poisoning, and privacy breaches that are specific to machine learning applications.

The development of comprehensive AI security programs requires ongoing investment in specialized expertise, advanced testing tools, and adaptive defense mechanisms that can evolve alongside the rapidly changing threat landscape. Organizations that successfully implement robust AI security practices will be better positioned to realize the benefits of machine learning technologies while minimizing the associated risks and maintaining stakeholder trust in their AI-powered systems.

As the AI security field continues to mature, we can expect to see the emergence of standardized testing frameworks, automated security tools, and best practice guidelines that will help organizations navigate the complex security challenges associated with machine learning deployment. The future success of AI initiatives will increasingly depend on the ability to balance innovation with security, ensuring that the transformative potential of artificial intelligence is realized in a secure and responsible manner.

Disclaimer

This article is for informational and educational purposes only and does not constitute professional security advice. The information provided is based on current understanding of AI security challenges and should not be considered as comprehensive guidance for specific security implementations. Organizations should consult with qualified security professionals and conduct thorough risk assessments before implementing AI security measures. The effectiveness of security practices may vary depending on specific use cases, threat models, and organizational contexts. Readers are advised to stay updated with the latest developments in AI security research and industry best practices.