The technological singularity is one of the most provocative and contentious ideas in contemporary discussions of artificial intelligence and humanity’s future. The debate centers on whether artificial intelligence will eventually surpass human intelligence in all domains and thereby transform civilization itself. At its heart lies a philosophical and scientific divide between those who embrace exponential technological optimism and those who advocate measured skepticism about such dramatic predictions.

The singularity debate encompasses not merely technical considerations but extends into realms of consciousness, ethics, economics, and the fundamental nature of intelligence itself, making it one of the defining intellectual challenges of our era.

Ray Kurzweil’s Vision of Exponential Transformation

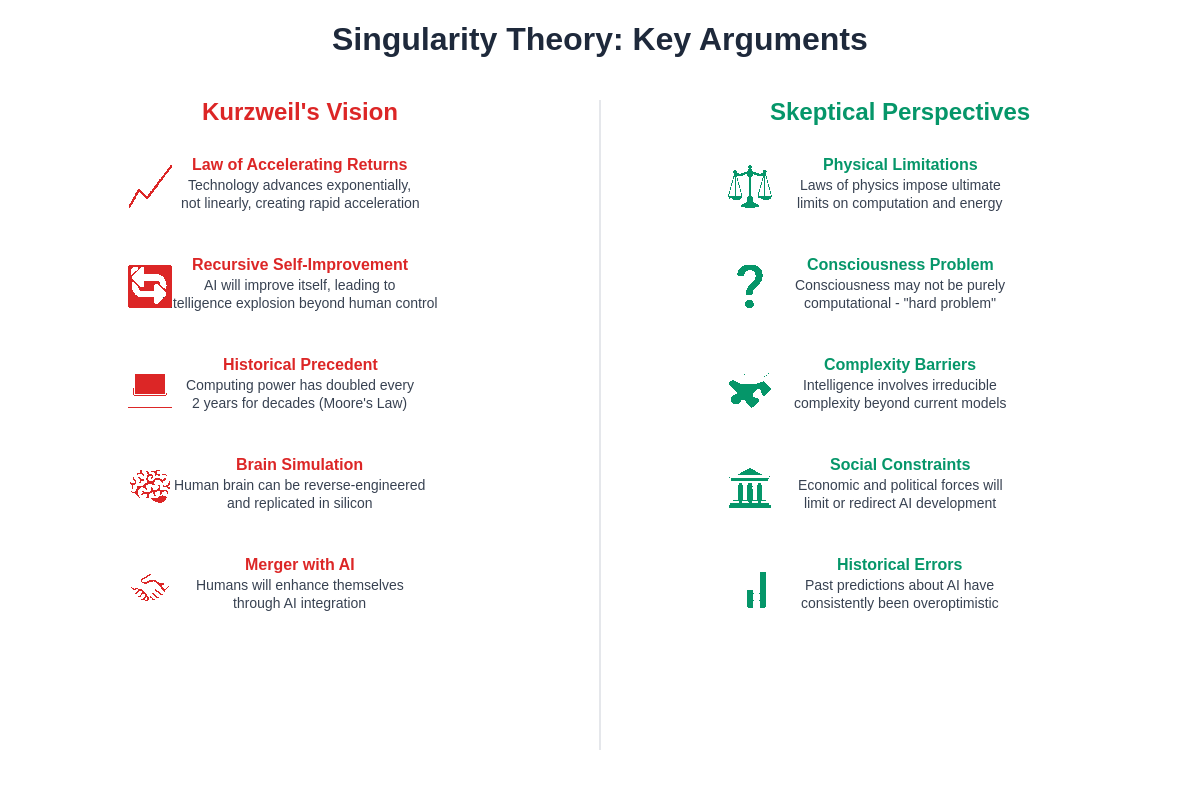

Ray Kurzweil, often regarded as the most prominent advocate of singularity theory, has constructed an elaborate framework predicting that artificial intelligence will reach human-level performance around 2029 and that the singularity itself, the point at which machine intelligence vastly surpasses human intelligence across all cognitive domains, will arrive by approximately 2045. His theoretical foundation rests upon what he terms the “Law of Accelerating Returns,” which posits that technological progress follows exponential rather than linear trajectories, with each advancement building upon previous innovations to create increasingly rapid cycles of improvement.

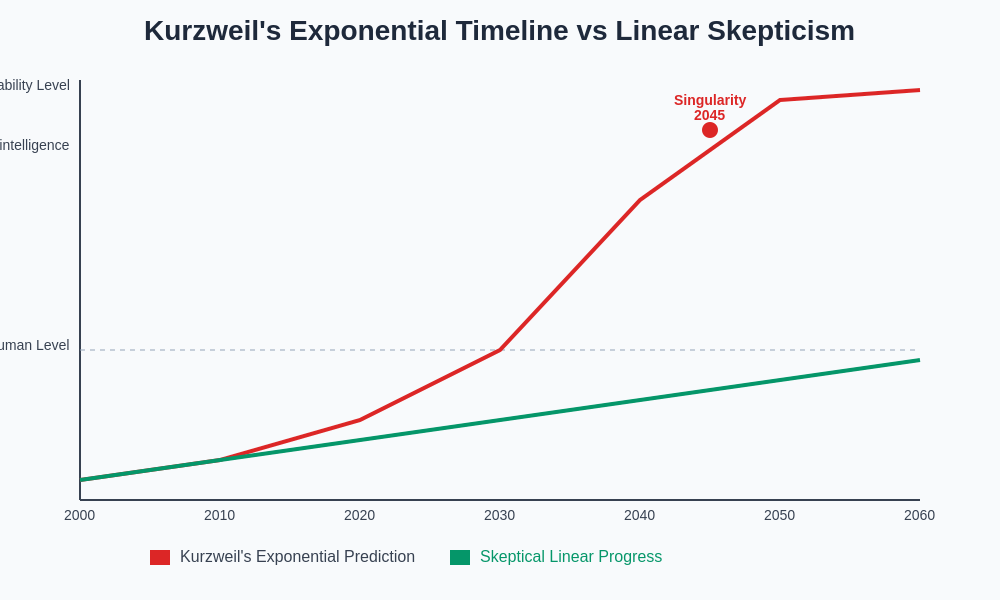

Kurzweil’s analysis draws extensively from historical patterns of technological development, pointing to consistent examples of exponential growth in computing power, information processing capabilities, and digital connectivity. He argues that these trends are fundamental characteristics of technological evolution rather than temporary phenomena, and that current advances in machine learning, neural networks, and computational processing are early indicators of an approaching inflection point at which artificial systems will rapidly surpass biological intelligence in sophistication and capability.

Central to Kurzweil’s vision is the concept of recursive self-improvement, whereby artificial intelligence systems will eventually become capable of enhancing their own cognitive architectures and processing capabilities. This recursive enhancement process, he argues, will create a feedback loop of accelerating intelligence growth that transcends the limitations of human-directed development, ultimately leading to intelligence levels that are fundamentally incomprehensible from our current perspective and capabilities.

The stark contrast between exponential and linear progression models reveals the fundamental disagreement about AI development trajectories. While Kurzweil predicts dramatic acceleration leading to singularity by 2045, skeptical perspectives suggest more gradual, incremental progress that may never reach the predicted inflection point.
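To make the gap between the two models concrete, the following minimal Python sketch compares a linear and an exponential projection. The starting value, the yearly step, and the two-year doubling time are illustrative assumptions chosen for the example, not figures taken from Kurzweil or his critics.

```python
def linear_growth(start, step, years):
    """Value after `years` when a fixed `step` is added every year."""
    return start + step * years


def exponential_growth(start, doubling_time, years):
    """Value after `years` when the value doubles every `doubling_time` years."""
    return start * 2 ** (years / doubling_time)


# Hypothetical parameters: start at 1 unit, add 1 unit per year (linear)
# versus doubling every 2 years (exponential).
for years in (0, 10, 20):
    lin = linear_growth(1.0, 1.0, years)
    exp = exponential_growth(1.0, 2.0, years)
    print(f"year {years:2d}: linear={lin:7.1f}  exponential={exp:7.1f}")
```

Under these assumed parameters the two curves start at the same point, but after twenty years the exponential projection is dozens of times larger than the linear one. That widening gap is why the choice of model matters far more to long-range forecasts than the choice of starting point.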

The Philosophical Foundations of Singularity Theory

The theoretical underpinnings of singularity theory extend beyond mere technological predictions to encompass fundamental questions about the nature of consciousness, intelligence, and human identity. Kurzweil and other singularity proponents argue that consciousness and intelligence are substrate-independent phenomena that can be replicated and enhanced within artificial systems, provided those systems achieve sufficient computational sophistication and architectural complexity.

This materialist perspective on consciousness suggests that human cognitive processes, including creativity, emotional understanding, and abstract reasoning, represent computational patterns that can be analyzed, understood, and eventually replicated within artificial systems. Proponents argue that once artificial systems achieve human-level performance in these domains, there are no theoretical barriers preventing them from surpassing human capabilities through continued computational enhancement and architectural refinement.

The philosophical implications of this perspective challenge traditional notions of human uniqueness and suggest that the boundary between biological and artificial intelligence may be more permeable than previously assumed.

Skeptical Perspectives on Exponential Predictions

Despite the compelling nature of exponential growth arguments, numerous researchers, philosophers, and technologists have raised substantive objections to singularity theory’s core assumptions and predictions. These skeptical perspectives highlight several fundamental limitations and oversights in exponential extrapolation approaches, arguing that technological development faces inherent constraints that prevent indefinite exponential acceleration across all domains of human endeavor and technological capability.

One of the most significant challenges to singularity theory comes from researchers who argue that intelligence and consciousness represent phenomena that are far more complex and multifaceted than current computational models suggest. Critics contend that human intelligence emerges from intricate biological processes, evolutionary adaptations, and embodied experiences that cannot be easily replicated through purely computational approaches, regardless of processing power or architectural sophistication.

Leading AI researchers such as Gary Marcus and Rodney Brooks have argued that current approaches to artificial intelligence, while impressive within specific domains, show fundamental limitations in generalization, common-sense reasoning, and adaptive learning. These limitations suggest that the path to human-level artificial general intelligence may be significantly more complex and time-consuming than singularity proponents anticipate.

The fundamental divide between singularity advocates and skeptics centers on several key theoretical and practical considerations. While proponents emphasize exponential technological trends and the potential for recursive self-improvement, skeptics highlight physical limitations, consciousness complexities, and historical patterns of overoptimistic AI predictions.

The Hard Problem of Consciousness

Perhaps the most formidable challenge to singularity theory lies in what philosophers term the “hard problem of consciousness” – the question of how subjective, first-person experiences arise from physical processes. Skeptics argue that even if artificial systems achieve human-level performance in cognitive tasks, this functional equivalence does not necessarily imply genuine consciousness, understanding, or subjective experience comparable to human awareness and comprehension.

This distinction between functional performance and genuine consciousness raises profound questions about whether artificial systems can truly understand meaning, experience emotions, or possess genuine creative insights, or whether they represent sophisticated pattern-matching and statistical processing systems that simulate understanding without genuine comprehension. Critics argue that consciousness may involve biological processes, quantum effects, or emergent properties that cannot be replicated through conventional computational architectures.

The implications of this debate extend beyond theoretical considerations to practical questions about the rights, responsibilities, and ethical status of advanced artificial systems. If consciousness cannot be reliably identified or replicated in artificial systems, then predictions about superintelligent artificial minds may be fundamentally misguided, regardless of their functional capabilities and performance metrics.

Physical and Computational Limitations

Skeptical perspectives on singularity theory frequently emphasize physical and computational constraints that may prevent indefinite exponential growth in artificial intelligence capabilities. These limitations include fundamental physical laws governing energy consumption, heat dissipation, and information processing that impose ultimate boundaries on computational performance and efficiency, regardless of architectural innovations and technological advances.

Moore’s Law, the observation that the number of transistors on an integrated circuit doubles roughly every two years, has historically described exponential improvements in computer processing power. It now faces increasing challenges from quantum effects, manufacturing limitations, and physical constraints as transistor sizes approach atomic scales. While alternative computing paradigms such as quantum computing, neuromorphic architectures, and biological computing offer potential solutions, skeptics argue that these alternatives face their own fundamental limitations and may not provide the unlimited scalability required for singularity scenarios.

Energy consumption represents another significant constraint, as advanced artificial intelligence systems require enormous computational resources that translate into substantial energy requirements, potentially creating environmental and economic barriers to unlimited intelligence enhancement.

Economic and Social Constraints

Beyond technical limitations, skeptical perspectives highlight economic and social factors that may constrain or redirect artificial intelligence development in ways that prevent singularity scenarios from materializing as predicted. The development of advanced artificial intelligence systems requires massive financial investments, specialized expertise, and social acceptance that may not be available or sustainable over the extended timeframes required for singularity-level developments.

Economic considerations include the practical costs of research and development, the commercial viability of advanced AI systems, and the potential for economic disruption that might prompt regulatory intervention or social resistance. Critics argue that economic forces tend to direct technological development toward practical, commercially viable applications rather than abstract theoretical capabilities, potentially slowing progress toward general artificial intelligence.

Social and political factors also play crucial roles in technology adoption and development trajectories. Public concerns about artificial intelligence safety, employment displacement, privacy implications, and social control may generate political pressure for regulation, restriction, or redirection of AI research priorities. These social dynamics could significantly influence the pace and direction of AI development, potentially preventing the unconstrained exponential growth that singularity theory requires.

Alternative Timelines and Gradual Development

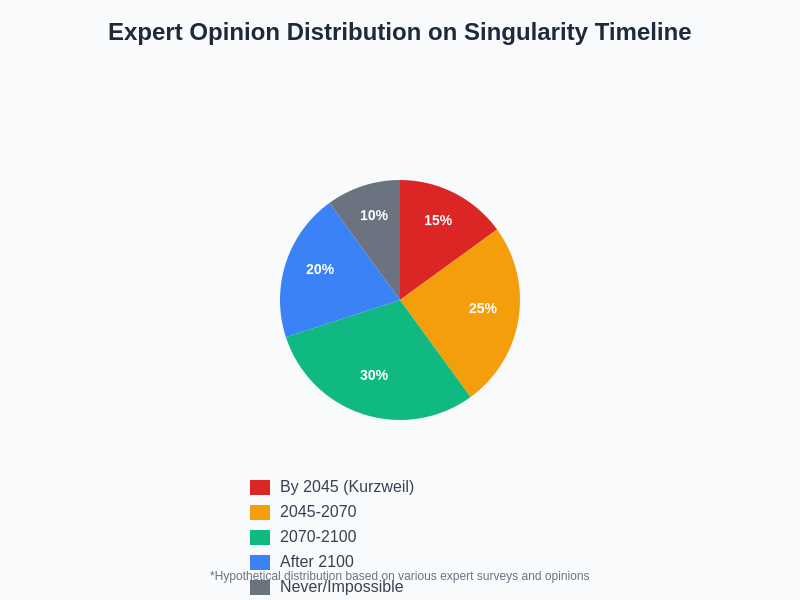

Rather than accepting Kurzweil’s specific timeline predictions, many researchers propose alternative scenarios characterized by gradual, incremental progress in artificial intelligence capabilities without dramatic singularity events. These perspectives suggest that AI development will continue to advance through steady improvements in specific domains while facing persistent challenges in achieving general intelligence comparable to human cognitive flexibility and adaptability.

The distribution of expert opinions regarding singularity timelines reveals significant uncertainty and disagreement within the scientific community. While some researchers align with Kurzweil’s 2045 prediction, many favor more conservative timelines or question whether technological singularity will occur at all within any predictable timeframe.

Gradual development scenarios emphasize the likelihood that artificial intelligence will continue to excel in narrow domains such as pattern recognition, data analysis, and optimization while struggling with tasks requiring common-sense reasoning, creative problem-solving, and contextual understanding. This pattern of development suggests a future characterized by human-AI collaboration rather than AI dominance, with artificial systems serving as powerful tools that enhance human capabilities rather than replacing them entirely.

These alternative perspectives often emphasize the importance of addressing current limitations in AI systems, such as robustness, interpretability, and alignment with human values, rather than focusing primarily on achieving superintelligence. Proponents of gradual development argue that solving these immediate challenges represents a more practical and achievable approach to beneficial AI development than pursuing singularity objectives.

The Role of Uncertainty and Prediction Challenges

A fundamental criticism of singularity theory involves the inherent difficulty of making accurate predictions about complex technological and social systems over extended timeframes. Historical examples of technological prediction demonstrate consistent patterns of both overestimation and underestimation, with experts frequently failing to anticipate breakthrough developments while simultaneously overestimating the pace of predictable improvements.

Skeptics argue that the complexity of artificial intelligence development, involving interactions between technical capabilities, economic factors, social acceptance, and regulatory responses, makes precise timeline predictions highly unreliable. The history of AI research itself includes multiple periods of inflated expectations followed by periods of reduced funding and interest, known as “AI winters,” which demonstrate the unpredictable nature of technological progress in this domain.

Furthermore, the concept of exponential growth assumes that current trends will continue indefinitely without encountering unexpected obstacles, breakthrough developments, or paradigm shifts that could dramatically alter development trajectories. Critics argue that complex systems typically encounter constraints, saturation effects, and discontinuities that prevent simple extrapolation from current trends to future capabilities.
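The saturation argument can be sketched with a logistic curve, a standard model of growth under a ceiling. The Python example below is only an illustration under assumed parameters (a growth rate of 0.5 per time unit and a ceiling of 100), not a model proposed by any of the critics discussed here. It shows that a logistic curve is nearly indistinguishable from an exponential one early on, which is why early data alone cannot settle which trajectory a technology is following.

```python
import math


def exponential(t, rate, start=1.0):
    """Unconstrained exponential growth from `start` at the given rate."""
    return start * math.exp(rate * t)


def logistic(t, rate, ceiling, start=1.0):
    """Logistic growth: close to exponential at first, then levels off at `ceiling`."""
    return ceiling / (1 + (ceiling / start - 1) * math.exp(-rate * t))


# Hypothetical parameters chosen purely for illustration.
RATE, CEILING = 0.5, 100.0
for t in (0, 2, 10, 30):
    print(f"t={t:2d}: exponential={exponential(t, RATE):12.1f}  "
          f"logistic={logistic(t, RATE, CEILING):6.1f}")
```

In this sketch the two curves agree closely at small `t`, while at large `t` the exponential value keeps growing and the logistic value flattens near the assumed ceiling.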

Safety and Control Considerations

Both singularity proponents and skeptics share concerns about the safety implications of advanced artificial intelligence systems, though they approach these concerns from different perspectives. Singularity advocates often emphasize the importance of ensuring that superintelligent systems remain aligned with human values and interests, arguing that successful navigation of the singularity transition requires careful attention to AI safety research and development.

Skeptical perspectives, while generally less concerned about near-term superintelligence scenarios, often emphasize the importance of addressing current AI safety challenges, including bias, robustness, interpretability, and unintended consequences. These researchers argue that focusing on immediate safety concerns represents a more practical approach than preparing for hypothetical superintelligence scenarios that may not materialize as predicted.

The debate over AI safety reflects broader disagreements about the appropriate allocation of research resources and attention. While singularity proponents advocate for significant investment in long-term AI safety research, skeptics often argue for greater focus on understanding and addressing the limitations and risks of current AI systems before pursuing more advanced capabilities.

Implications for Research and Development

The singularity debate has significant implications for the direction and priorities of artificial intelligence research and development. Kurzweil’s vision suggests that researchers should focus on achieving artificial general intelligence and preparing for post-singularity scenarios, while skeptical perspectives often emphasize the importance of incremental progress, robustness, and practical applications within current technological constraints.

These different philosophical approaches influence funding decisions, research priorities, and technology development strategies across academia, industry, and government institutions. Organizations aligned with singularity thinking may prioritize long-term research projects aimed at achieving general AI, while those influenced by skeptical perspectives may focus on improving current AI capabilities within specific domains while addressing known limitations and risks.

The debate also influences public discourse and policy discussions about artificial intelligence regulation, education, and social preparation. Singularity perspectives often emphasize the transformative potential of AI and the need for fundamental changes in social, economic, and educational systems, while skeptical approaches may advocate for more gradual adaptation and incremental policy responses to technological change.

Emerging Middle Ground Perspectives

As the singularity debate has evolved, several researchers and thinkers have developed middle ground positions that acknowledge both the potential for significant AI advancement and the limitations of exponential extrapolation. These perspectives often emphasize the uncertainty surrounding AI development timelines while recognizing the importance of preparing for multiple possible scenarios rather than committing to specific predictions.

Middle ground approaches frequently focus on developing AI systems that are robust, beneficial, and aligned with human values regardless of their ultimate capabilities or development timelines. These perspectives emphasize the importance of incremental progress, safety research, and social adaptation while remaining open to the possibility of more dramatic technological developments than current skeptical positions might suggest.

Such balanced approaches may provide more practical frameworks for navigating AI development uncertainties while avoiding both excessive optimism and unwarranted pessimism about artificial intelligence capabilities and implications. They offer pathways for continued research and development that remain responsive to empirical evidence and changing circumstances rather than being constrained by rigid theoretical commitments.

Contemporary Evidence and Developments

Recent developments in artificial intelligence, including large language models, advanced robotics, and machine learning applications, provide empirical data that both singularity proponents and skeptics can interpret as supporting their respective positions. Singularity advocates point to rapid improvements in AI capabilities, increasing generality of AI systems, and accelerating research progress as evidence supporting exponential development trajectories.

Skeptical observers emphasize persistent limitations in current AI systems, including brittleness, lack of genuine understanding, and difficulties with generalization beyond training domains. They argue that recent advances, while impressive, represent incremental improvements within existing paradigms rather than fundamental breakthroughs toward general intelligence or consciousness.

The interpretation of contemporary AI developments reflects the broader philosophical differences underlying the singularity debate. Whether current progress represents early indicators of approaching singularity or evidence of AI’s fundamental limitations depends significantly on one’s theoretical framework and assumptions about the nature of intelligence, consciousness, and technological development trajectories.

Future Research Directions and Resolutions

The resolution of debates between singularity theory and skeptical perspectives may ultimately depend on empirical developments in artificial intelligence research, neuroscience, cognitive science, and related fields. Continued progress in understanding biological intelligence, consciousness, and cognitive processes may provide crucial insights into whether and how these phenomena can be replicated or surpassed in artificial systems.

Advances in AI safety research, interpretability techniques, and robustness evaluation may help clarify the current limitations of artificial intelligence systems and provide better estimates of the challenges involved in achieving more general capabilities. Similarly, developments in alternative computing paradigms, neuromorphic architectures, and biological computing may reveal new possibilities or constraints for artificial intelligence advancement.

The singularity debate serves an important function in encouraging rigorous thinking about artificial intelligence capabilities, limitations, and implications regardless of one’s position on specific timeline predictions or theoretical commitments. By engaging seriously with both optimistic and skeptical perspectives, researchers and policymakers can develop more robust approaches to AI development that remain responsive to emerging evidence while preparing for multiple possible futures.

Conclusion: Navigating Uncertainty and Possibility

The debate between Ray Kurzweil’s singularity vision and skeptical perspectives represents more than an academic disagreement about technological timelines and capabilities. It reflects fundamental differences in how we understand intelligence, consciousness, and the relationship between biological and artificial systems. These differences have practical implications for research priorities, policy decisions, and social preparation for artificial intelligence development.

Rather than seeking definitive resolution of these debates, perhaps the most productive approach involves maintaining intellectual humility about the limits of prediction while taking seriously both the transformative potential of artificial intelligence and the genuine challenges involved in its development. This balanced perspective encourages continued research and development while emphasizing safety, robustness, and alignment with human values regardless of specific capability predictions or timeline expectations.

The singularity debate ultimately serves as a valuable framework for exploring some of the most profound questions about technology, consciousness, and human destiny. Whether or not Kurzweil’s specific predictions prove accurate, the discussions generated by singularity theory continue to advance our understanding of artificial intelligence, its possibilities, and its limitations in ways that benefit both research and broader social understanding of these crucial technological developments.

Disclaimer

This article presents various theoretical perspectives on artificial intelligence development and does not constitute predictions about future technological capabilities or timelines. The views expressed represent current academic and professional discussions about AI development and should not be considered definitive statements about future outcomes. Readers should consult multiple sources and expert opinions when forming their own views about artificial intelligence development and its implications.