The exponential growth of artificial intelligence and machine learning applications has created unprecedented demands on storage infrastructure, fundamentally challenging traditional storage paradigms and driving the development of revolutionary storage technologies. As AI models become increasingly sophisticated and datasets reach astronomical proportions, the choice between different storage solutions can dramatically impact training times, inference performance, and overall system efficiency. Two technologies have emerged as leading contenders for high-performance AI workloads: NAND flash-based NVMe SSDs and Intel Optane, each offering distinct advantages and addressing specific performance bottlenecks that plague modern machine learning operations.

The strategic selection of appropriate storage solutions has become a critical factor in determining the success and scalability of AI initiatives, influencing everything from model training efficiency to real-time inference capabilities.

Understanding Modern AI Storage Requirements

The computational demands of contemporary artificial intelligence applications extend far beyond traditional database operations or file system access patterns, requiring storage solutions capable of handling massive parallel data streams, random access patterns, and sustained high-throughput operations. Machine learning workloads typically involve processing enormous datasets that can span terabytes or petabytes, while simultaneously requiring rapid access to training data, model parameters, and intermediate computational results. These requirements create unique challenges for storage infrastructure, demanding solutions that can deliver consistent performance under highly variable workload conditions.

Modern deep learning frameworks generate complex I/O patterns that combine sequential data streaming for large dataset processing with random access, for example when shuffled samples are drawn from a large corpus or when parameters and embedding tables too large for accelerator memory are offloaded to storage. The storage subsystem must efficiently handle these mixed workloads while maintaining low latency for critical operations such as checkpoint saving, model loading, and real-time inference serving. Additionally, the collaborative nature of many AI development environments necessitates storage solutions that can support concurrent access from multiple training processes, distributed computing frameworks, and data preprocessing pipelines without significant performance degradation.

The scale and complexity of modern AI applications have also introduced new considerations around data locality, cache efficiency, and memory hierarchy optimization. Training large language models or computer vision systems often requires careful orchestration of data movement between different storage tiers, making the performance characteristics and integration capabilities of storage technologies crucial factors in overall system design and optimization.

NVMe SSD Technology Deep Dive

Non-Volatile Memory Express represents a revolutionary approach to solid-state storage that was specifically designed to overcome the limitations of legacy storage interfaces and fully exploit the performance potential of NAND flash memory. Unlike traditional SATA or SAS interfaces that were originally developed for mechanical hard drives, NVMe was engineered from the ground up to leverage the parallelism and low latency characteristics of solid-state storage devices. This fundamental architectural advantage translates into significant performance improvements for AI workloads that rely heavily on fast data access and high-throughput storage operations.

The NVMe protocol runs directly over the PCIe bus, removing the SATA or SAS controller layer and its protocol overhead from the data path and enabling much higher bandwidth and lower latency between the storage device and the host system. PCIe 4.0 x4 NVMe SSDs reach sequential read speeds of roughly 7,000 MB/s, PCIe 5.0 drives roughly double that, and random read performance ranges from hundreds of thousands to over a million IOPS on enterprise models, making them well suited for machine learning applications that require rapid access to training datasets and frequent model parameter updates.
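As a rough illustration of what these figures mean in practice, the following Python sketch computes a lower bound on the time for one pass over a dataset at a sustained sequential rate, and the bandwidth implied by an IOPS figure at a given request size. The 10 TB dataset and the 500,000 IOPS drive are hypothetical inputs, and the calculation assumes ideal, sustained transfers with no file system, decode, or network overhead.

```python
def seconds_to_read(dataset_bytes: float, throughput_bytes_per_s: float) -> float:
    """Lower bound on the time to stream a dataset once at a sustained rate."""
    return dataset_bytes / throughput_bytes_per_s


def iops_to_throughput(iops: float, block_bytes: int) -> float:
    """Bandwidth implied by an IOPS figure at a fixed request size."""
    return iops * block_bytes


MB = 10**6
TB = 10**12

# One full pass over a hypothetical 10 TB dataset at 7,000 MB/s (single drive, ideal case).
epoch_s = seconds_to_read(10 * TB, 7_000 * MB)

# Hypothetical drive: 500,000 random reads per second of 4 KiB each.
random_bw = iops_to_throughput(500_000, 4096)

print(f"one pass: {epoch_s / 60:.1f} min")
print(f"random 4 KiB reads: {random_bw / 1e9:.2f} GB/s")
```

At these assumed inputs one pass takes a little under 24 minutes, while 500,000 random 4 KiB reads amount to only about 2 GB/s, well below the sequential rating. This is one reason data loaders often pack small samples into larger sequential shards.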

NVMe’s command queuing, with support for up to 64K I/O queues and up to 64K commands per queue (compared with the single 32-command queue of SATA’s AHCI interface), enables efficient handling of the highly parallel I/O patterns typical in AI workloads, while advanced features like namespace management and end-to-end data protection provide the reliability and flexibility required for production machine learning environments.
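Deep queues matter because of Little’s law: the number of requests in flight equals the request rate multiplied by the time each request spends in the device. The sketch below assumes an illustrative 80 µs average read latency (not a measured figure for any specific drive) and computes the queue depth needed for a target IOPS rate, as well as the ceiling that a single outstanding request imposes.

```python
import math


def required_queue_depth(target_iops: int, latency_us: int) -> int:
    """Little's law: requests in flight = arrival rate x time in system."""
    return math.ceil(target_iops * latency_us / 1_000_000)


def max_iops(queue_depth: int, latency_us: int) -> float:
    """Upper bound on IOPS when at most `queue_depth` requests are outstanding."""
    return queue_depth * 1_000_000 / latency_us


# Assumed average latency of 80 microseconds per random read.
print(required_queue_depth(500_000, 80))  # → 40 outstanding reads for 500K IOPS
print(max_iops(1, 80))                    # → 12500.0, ceiling for one synchronous reader
```

A training job that reads samples one at a time from a single thread therefore leaves most of an NVMe drive’s capability unused; parallel readers or asynchronous I/O are what keep the queues full.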

Contemporary NVMe SSDs incorporate advanced controller architectures with multiple processing cores, sophisticated wear leveling algorithms, and intelligent caching mechanisms that optimize performance for mixed workloads. Current NVMe devices also offer features that can benefit AI applications, although none of them is AI-specific: hardware-accelerated encryption (and, on some models, compression), multi-stream support for separating data with different lifetimes so that garbage collection has less data to move, and power management capabilities that help maintain consistent performance under varying thermal conditions.

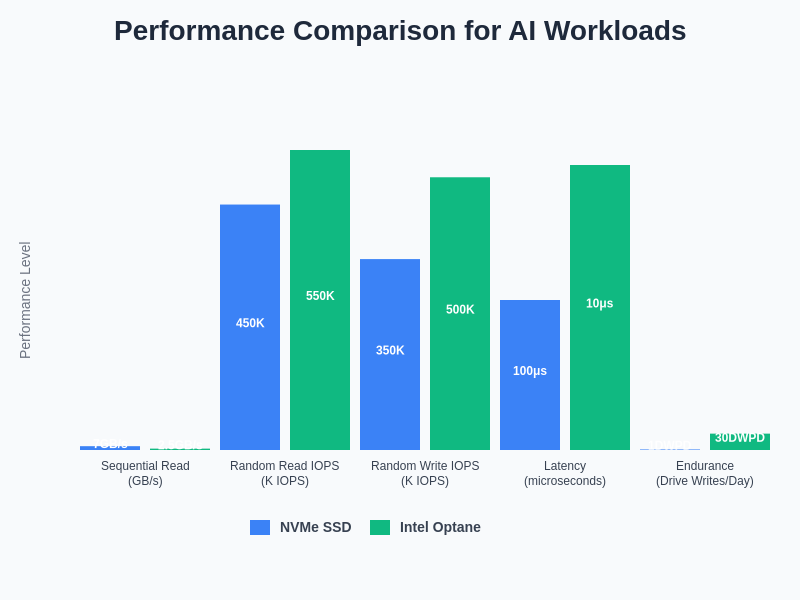

The performance characteristics of NVMe SSDs and Optane technology vary significantly across different metrics that are crucial for AI applications. While NVMe excels in sequential operations essential for large dataset processing, Optane demonstrates superior performance in random access patterns and ultra-low latency scenarios critical for real-time AI inference and interactive machine learning applications.

Intel Optane Technology Architecture

Intel Optane represents a paradigm-shifting approach to storage and memory technology, utilizing 3D XPoint memory architecture to bridge the performance gap between traditional DRAM and NAND flash storage. This revolutionary memory technology offers unique characteristics that make it particularly attractive for certain AI workloads, combining the non-volatility of storage with latency and endurance characteristics that approach those of system memory. The fundamental advantage of Optane lies in its ability to provide persistent storage with dramatically lower latency than traditional SSDs while offering significantly higher capacity and lower cost per gigabyte compared to DRAM.

The 3D XPoint architecture employed by Optane uses a crosspoint cell design in which each cell sits at the intersection of a word line and a bit line and is accessed through a selector rather than a transistor, enabling individual bit addressability at higher density than DRAM. As a result, Optane SSDs reach read latencies on the order of 10 microseconds, compared with tens to around a hundred microseconds for NAND flash SSDs, and Optane persistent memory modules operate in the sub-microsecond range. Optane also provides write endurance that far exceeds that of NAND flash memory, making it well suited to write-intensive AI applications such as checkpoint creation and model parameter updates.
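Latency matters most when reads depend on each other, as in pointer chasing through a graph or an index: the next address is unknown until the previous read returns, so queue depth cannot hide the wait. The sketch below uses order-of-magnitude latencies that are assumptions for illustration, not device specifications: roughly 80 µs for a NAND NVMe random read, 10 µs for an Optane SSD, and 0.35 µs for Optane persistent memory.

```python
def dependent_chain_seconds(hops: int, latency_us: float) -> float:
    """Total time for a chain of reads where each hop waits for the previous one."""
    return hops * latency_us / 1_000_000


# Assumed, order-of-magnitude random-read latencies in microseconds.
ASSUMED_LATENCY_US = {
    "NAND NVMe SSD": 80.0,
    "Optane SSD": 10.0,
    "Optane persistent memory": 0.35,
}

for device, latency in ASSUMED_LATENCY_US.items():
    secs = dependent_chain_seconds(1_000_000, latency)
    print(f"{device:>26}: {secs:8.2f} s for 1M dependent reads")
```

Because the cost scales directly with per-read latency, workloads of this shape are where the gap between the technologies shows up most clearly.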

Optane technology is available in multiple form factors and configurations, including Optane SSDs that connect via NVMe interfaces and Optane DC Persistent Memory modules that install in the DIMM slots of supported Intel Xeon platforms. The persistent memory modules operate in two main modes: in Memory Mode they act as large, volatile system memory with DRAM serving as a cache, while in App Direct mode they are exposed as persistent, byte-addressable storage. This flexibility enables system architects to deploy Optane in various configurations depending on specific application requirements, from high-performance storage tier acceleration to persistent memory extensions that, in App Direct mode, can retain large model parameters and intermediate computations across system restarts.
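In App Direct mode, persistent memory is typically exposed on Linux through a DAX-capable file system (for example ext4 or XFS mounted with the dax option), where memory-mapping a file gives loads and stores direct access to the media. The standard-library sketch below saves and restores a tiny training-state record through mmap; the record layout and the /mnt/pmem0 path are hypothetical. It runs on any file system but only has persistent-memory semantics on such a mount, and mmap.flush() (which calls msync on Unix) is a simple rather than the fastest way to persist data; PMDK’s libpmem is the more usual choice for performance-sensitive code.

```python
import mmap
import os
import struct

# Hypothetical fixed layout: training step (uint64) followed by last loss (float64).
STATE = struct.Struct("<Qd")


def save_state(path: str, step: int, loss: float) -> None:
    """Store a small state record through a shared memory mapping, then flush it."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        os.ftruncate(fd, STATE.size)
        with mmap.mmap(fd, STATE.size) as mm:
            STATE.pack_into(mm, 0, step, loss)
            mm.flush()  # msync(): ask the kernel to make the update durable
    finally:
        os.close(fd)


def load_state(path: str) -> tuple:
    """Read the record back, for example after a restart."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), STATE.size, access=mmap.ACCESS_READ) as mm:
            return STATE.unpack_from(mm, 0)


# On a DAX mount the path might be "/mnt/pmem0/train_state.bin" (hypothetical).
```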

The unique characteristics of Optane make it particularly valuable for AI applications that require frequent random access to large datasets, such as recommendation systems processing sparse feature vectors or graph neural networks traversing complex relationship structures. Because 3D XPoint writes data in place instead of erasing large blocks and running NAND-style garbage collection, its performance varies relatively little with access pattern, read/write mix, and queue depth; combined with its exceptional endurance, this makes it an attractive solution for AI workloads that generate sustained write-intensive operations.

Performance Analysis for Machine Learning Workloads

The performance characteristics of storage technologies under machine learning workloads differ significantly from traditional enterprise applications, requiring careful analysis of metrics beyond simple sequential throughput measurements. Machine learning applications typically generate complex I/O patterns that combine large sequential reads during dataset loading with frequent random writes during model parameter updates, creating unique performance challenges that must be addressed through appropriate storage technology selection and configuration optimization.

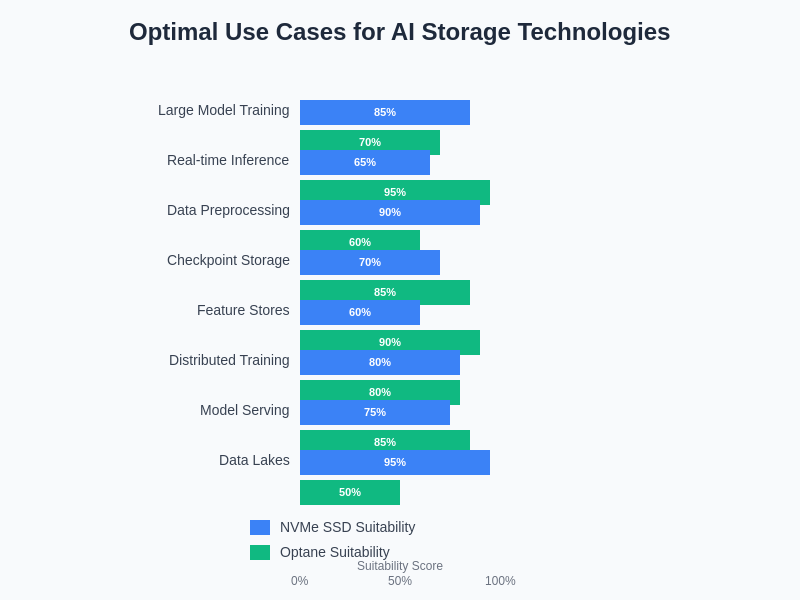

NVMe SSDs excel in machine learning scenarios that prioritize high sequential throughput, such as loading large training datasets, streaming media data for computer vision applications, or processing natural language datasets for transformer model training. The high bandwidth capabilities of modern NVMe devices enable efficient data pipeline operations, while their excellent random read performance supports scenarios requiring rapid access to diverse data samples during training epochs. However, the write endurance limitations of NAND flash memory can become a constraining factor in applications that frequently save model checkpoints or perform intensive hyperparameter optimization with extensive logging.
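Endurance is usually specified as drive writes per day (DWPD) over the warranty period, or as total terabytes written (TBW). A back-of-the-envelope estimate of how long checkpoint traffic takes to consume that budget follows; the drive (3.84 TB, 1 DWPD, five-year warranty) and the workload (one 100 GB checkpoint per hour) are hypothetical, and the estimate ignores other writes and any write amplification beyond what the vendor’s rating already assumes.

```python
def rated_tbw(dwpd: float, capacity_tb: float, warranty_years: float) -> float:
    """Terabytes written implied by a drive-writes-per-day (DWPD) rating."""
    return dwpd * capacity_tb * 365 * warranty_years


def years_until_rated_limit(tbw: float, tb_written_per_day: float) -> float:
    """How long a steady daily write volume takes to consume the rating."""
    return tbw / (tb_written_per_day * 365)


# Hypothetical drive: 3.84 TB, 1 DWPD, 5-year warranty.
budget_tb = rated_tbw(1.0, 3.84, 5)

# Hypothetical workload: one 100 GB checkpoint per hour, expressed in TB per day.
daily_tb = 100 / 1000 * 24

print(f"rated endurance: {budget_tb:,.0f} TB written")
print(f"lifetime at this rate: {years_until_rated_limit(budget_tb, daily_tb):.1f} years")
```

Checkpointing twice as often halves the estimate, and logging or hyperparameter sweeps add to the daily volume. Optane SSDs carried endurance ratings many times higher than typical NAND drives, which is the advantage the surrounding text describes.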

Optane technology demonstrates superior performance in machine learning scenarios that require frequent random access patterns or write-intensive operations. Applications such as reinforcement learning with experience replay buffers, online learning systems with continuous model updates, or large-scale feature stores with frequent read-write operations benefit significantly from Optane’s consistent low-latency performance and exceptional write endurance. The technology’s ability to maintain performance consistency under mixed workloads makes it particularly valuable for production AI systems serving real-time inference requests while simultaneously updating model parameters based on new data.

The performance comparison between these technologies often depends on specific application characteristics, with factors such as dataset size, model architecture, training methodology, and deployment requirements all influencing the optimal storage solution selection.

Cost-Benefit Analysis and Economic Considerations

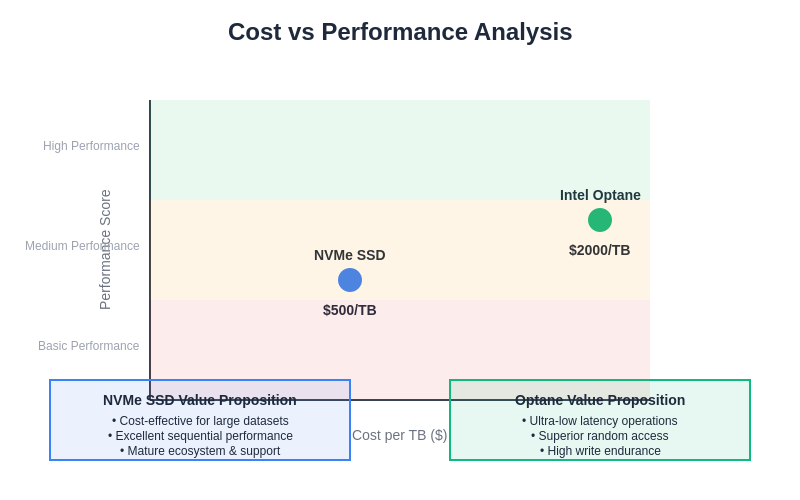

The economic implications of storage technology selection for AI applications extend far beyond initial hardware acquisition costs, encompassing factors such as power consumption, cooling requirements, maintenance overhead, and total system efficiency. While NVMe SSDs typically offer lower cost per gigabyte compared to Optane technology, the total cost of ownership analysis must consider the performance benefits, operational efficiency gains, and potential infrastructure simplification that different storage technologies can provide.

NVMe SSD deployments often require more drives, and therefore more total capacity than the data alone needs, to match Optane’s performance in certain workloads, particularly those involving frequent random access patterns or write-intensive operations. However, the mature ecosystem surrounding NVMe technology, including extensive vendor options, standardized interfaces, and well-established management tools, can result in lower operational overhead and reduced integration complexity. The competitive market for NVMe SSDs has also driven significant cost reductions over time, making high-performance storage more accessible for AI applications with budget constraints.
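Matching a random-I/O target with NAND drives is often a question of drive count rather than capacity. The helper below returns the smallest number of drives that satisfies both a capacity and an IOPS requirement, assuming IOPS scale linearly when drives are striped (real arrays usually scale less than perfectly). The 20 TB and 3 million IOPS requirement and the 7.68 TB, 500,000 IOPS drive are hypothetical.

```python
import math


def drives_needed(capacity_tb: float, target_iops: float,
                  drive_capacity_tb: float, drive_iops: float) -> int:
    """Smallest drive count meeting both targets, assuming linear IOPS scaling."""
    by_capacity = math.ceil(capacity_tb / drive_capacity_tb)
    by_iops = math.ceil(target_iops / drive_iops)
    return max(by_capacity, by_iops)


# Hypothetical requirement and drive specs, for illustration only.
n = drives_needed(capacity_tb=20, target_iops=3_000_000,
                  drive_capacity_tb=7.68, drive_iops=500_000)
unused_tb = n * 7.68 - 20
print(n, f"drives; {unused_tb:.2f} TB beyond the capacity requirement")
```

Under these assumptions six drives are needed for IOPS although three would cover the capacity, leaving roughly 26 TB of purchased capacity unused; this is the kind of overbuying a lower-latency tier can sometimes avoid.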

Optane technology, while commanding premium pricing, can deliver substantial value in applications where its unique performance characteristics enable significant efficiency improvements or architectural simplifications. The ability to use Optane as both high-performance storage and memory extension can reduce the total amount of DRAM required in AI systems, potentially offsetting the higher cost per gigabyte through reduced memory subsystem expenses. Additionally, the exceptional endurance characteristics of Optane can result in longer operational lifespans and reduced replacement frequency compared to NAND flash-based solutions.

The economic analysis must also consider the indirect costs associated with suboptimal storage performance, including extended training times, reduced development velocity, and potential revenue impact from delayed model deployments. In many cases, the productivity improvements enabled by superior storage performance can justify premium technology investments through faster time-to-market for AI solutions and improved resource utilization efficiency.

The relationship between cost and performance in AI storage solutions requires careful evaluation of total value proposition rather than simple cost per terabyte comparisons. While NVMe SSDs offer excellent cost-effectiveness for general-purpose AI workloads, Optane technology provides superior performance capabilities that can justify its premium pricing in applications where ultra-low latency and high random access performance translate to significant operational advantages.

Implementation Strategies and Best Practices

Successful deployment of advanced storage technologies for AI applications requires careful consideration of system architecture, workload characteristics, and integration requirements. The optimal implementation strategy often involves a tiered storage approach that leverages the strengths of different technologies while minimizing their respective limitations. This hybrid approach can provide both the high-capacity storage required for large datasets and the high-performance access needed for active model training and inference operations.
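A tiered design can be sketched as a small fast tier that caches recently used objects from a large capacity tier. The class below is a hypothetical, in-process illustration using least-recently-used (LRU) eviction over an OrderedDict; in a real deployment the tiers would be devices or file systems and the policy might be handled by a dedicated caching layer. The helper effective_latency_us estimates average latency from a hit rate, with the tier latencies supplied by the caller as assumptions.

```python
from collections import OrderedDict
from typing import Callable, Hashable


class TieredReader:
    """Hypothetical two-tier read path: a small, fast tier (for example Optane)
    holding the most recently used objects from a large capacity tier (for
    example NAND NVMe). Eviction policy: least recently used (LRU)."""

    def __init__(self, fast_capacity: int,
                 read_capacity_tier: Callable[[Hashable], bytes]) -> None:
        self.fast_capacity = fast_capacity
        self.read_capacity_tier = read_capacity_tier
        self.fast_tier: "OrderedDict[Hashable, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, key: Hashable) -> bytes:
        if key in self.fast_tier:
            self.fast_tier.move_to_end(key)     # mark as most recently used
            self.hits += 1
            return self.fast_tier[key]
        self.misses += 1
        data = self.read_capacity_tier(key)     # slow path
        self.fast_tier[key] = data              # promote into the fast tier
        if len(self.fast_tier) > self.fast_capacity:
            self.fast_tier.popitem(last=False)  # evict least recently used
        return data


def effective_latency_us(hit_rate: float, fast_us: float, slow_us: float) -> float:
    """Average read latency of the two-tier setup for a given hit rate."""
    return hit_rate * fast_us + (1 - hit_rate) * slow_us


tier = TieredReader(2, lambda key: f"sample-{key}".encode())
for key in ["a", "b", "a", "c", "b"]:
    tier.read(key)
print(tier.hits, tier.misses)  # → 1 4
```

With assumed latencies of 10 µs for the fast tier and 80 µs for the capacity tier, a 90% hit rate gives an average of about 17 µs, which is why the achievable hit rate matters as much as the fast tier’s raw speed.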

For NVMe SSD deployments, best practices include careful selection of drive specifications based on workload requirements, with particular attention to endurance ratings, performance consistency, and thermal management capabilities. The implementation should consider factors such as over-provisioning requirements, wear leveling optimization, and integration with existing data management systems. Advanced NVMe features such as namespace management and multi-stream support should be evaluated for their potential benefits in specific AI application scenarios.
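Over-provisioning reserves capacity that the host never writes, giving the controller more free blocks for garbage collection, which tends to lower write amplification and steady-state latency. The sketch treats the drive’s advertised capacity as the managed total and ignores the factory spare area, which is vendor-specific and not visible to the host; the 3.84 TB figure and the 28% target are illustrative. Where supported, extra over-provisioning can be created by provisioning a smaller namespace or by leaving part of the drive unallocated after a secure erase or TRIM, so the controller knows that space is free.

```python
def overprovisioning_pct(managed_tb: float, exposed_tb: float) -> float:
    """Spare area as a percentage of the capacity exposed to the host."""
    return (managed_tb - exposed_tb) / exposed_tb * 100


def exposed_capacity_for(managed_tb: float, target_op_pct: float) -> float:
    """Capacity to expose (namespace or partition size) for a target OP level."""
    return managed_tb / (1 + target_op_pct / 100)


# Hypothetical drive treated as 3.84 TB of managed capacity (factory spare ignored).
print(f"{overprovisioning_pct(3.84, 3.2):.0f}% extra OP when only 3.2 TB is used")
print(f"{exposed_capacity_for(3.84, 28):.2f} TB to expose for 28% extra OP")
```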

Optane implementations require consideration of the technology’s unique characteristics and optimal usage patterns. Deployment strategies may include using Optane SSDs as high-performance cache tiers for frequently accessed data, implementing Optane DC Persistent Memory as extended system memory for large model parameters, or utilizing Optane devices as dedicated storage for write-intensive operations such as model checkpointing and logging. The persistent nature of Optane memory enables innovative architectural approaches such as maintaining training state across system restarts or implementing efficient distributed training coordination mechanisms.
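Whatever device holds the checkpoints, a common pattern is to write to a temporary file, fsync it, and atomically rename it over the previous version, so that a crash mid-write never leaves a partial checkpoint behind. A minimal POSIX sketch follows; the path in the usage comment is hypothetical, and a framework’s own checkpoint utilities may already provide equivalent guarantees.

```python
import contextlib
import os
import tempfile


def atomic_write(path: str, data: bytes) -> None:
    """Write `data` to `path` so that a crash leaves either the old file or the
    complete new one, never a torn checkpoint (POSIX semantics assumed)."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".tmp-ckpt-")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())    # contents reach stable storage first
        os.replace(tmp_path, path)  # atomic rename within one file system
    except BaseException:
        with contextlib.suppress(FileNotFoundError):
            os.unlink(tmp_path)
        raise
    dir_fd = os.open(directory, os.O_RDONLY)
    try:
        os.fsync(dir_fd)            # persist the rename itself
    finally:
        os.close(dir_fd)


# Usage (hypothetical path on an Optane or NVMe scratch mount):
# atomic_write("/mnt/fast/ckpt/step_001000.bin", serialized_state)
```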

The integration of advanced storage technologies with existing AI frameworks and infrastructure requires careful planning and testing to ensure optimal performance and compatibility. This includes evaluation of file system selection, I/O scheduling policies, and data placement strategies that can maximize the benefits of the underlying storage technology while maintaining compatibility with existing AI development workflows and deployment pipelines.
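On Linux, the active I/O scheduler for a block device is listed in /sys/block/<device>/queue/scheduler, with the current choice in square brackets; for NVMe devices it is often none, because the drive performs its own queuing. The sketch below parses that file. The device name nvme0n1 is an assumption, and changing the scheduler (by writing a scheduler name to the same file as root) is left to the reader’s own tooling.

```python
from pathlib import Path
from typing import List, Optional, Tuple


def parse_scheduler(text: str) -> Tuple[Optional[str], List[str]]:
    """Split the contents of queue/scheduler into (active, available)."""
    active: Optional[str] = None
    available: List[str] = []
    for token in text.split():
        if token.startswith("[") and token.endswith("]"):
            token = token[1:-1]  # the bracketed entry is the active scheduler
            active = token
        available.append(token)
    return active, available


def current_scheduler(device: str) -> Optional[str]:
    """Active scheduler for a block device, or None if sysfs has no entry."""
    path = Path("/sys/block") / device / "queue" / "scheduler"
    if not path.exists():
        return None
    active, _ = parse_scheduler(path.read_text())
    return active


print(current_scheduler("nvme0n1"))  # hypothetical device name
```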

Real-World Performance Benchmarks

Empirical performance evaluation of storage technologies under realistic AI workloads provides crucial insights for technology selection and system optimization decisions. Comprehensive benchmarking efforts have demonstrated significant variations in performance characteristics depending on specific application patterns, dataset characteristics, and system configurations. These real-world evaluations reveal that theoretical performance specifications may not always translate directly to practical benefits in AI applications, highlighting the importance of workload-specific testing and evaluation.
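Dedicated tools such as fio are the usual choice for storage benchmarking, but a small harness shows why tail latency, not just averages, belongs in workload-specific tests. The sketch times random block-aligned reads with os.pread (POSIX only) and reports nearest-rank percentiles; the block size, read count, and the file path in the usage comment are assumptions, and results are only meaningful if the page cache is not serving the reads.

```python
import math
import os
import random
import time
from typing import List, Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile, p in (0, 100]."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def random_read_latencies(path: str, block: int = 4096, reads: int = 1000,
                          seed: int = 0) -> List[float]:
    """Time `reads` block-aligned random reads from `path`, in seconds.

    Caveat: the OS page cache serves recently read blocks from RAM unless the
    file is much larger than memory or caches are dropped between runs.
    """
    blocks = os.path.getsize(path) // block
    if blocks == 0:
        raise ValueError("file is smaller than one block")
    rng = random.Random(seed)
    latencies: List[float] = []
    fd = os.open(path, os.O_RDONLY)
    try:
        for _ in range(reads):
            offset = rng.randrange(blocks) * block
            start = time.perf_counter()
            os.pread(fd, block, offset)
            latencies.append(time.perf_counter() - start)
    finally:
        os.close(fd)
    return latencies


# Usage (hypothetical path):
# lat = random_read_latencies("/data/shard-000.bin")
# print(f"p50={percentile(lat, 50) * 1e6:.1f} us  p99={percentile(lat, 99) * 1e6:.1f} us")
```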

Training large language models on NVMe SSD storage has demonstrated excellent performance for sequential dataset loading operations, with sustained throughput often approaching the theoretical limits of the storage devices. However, performance can vary significantly during phases involving frequent model checkpointing or distributed training coordination, where sustained write bandwidth and, for small-file or metadata-heavy traffic, random write performance become more critical. Benchmark studies have shown that NVMe SSDs can effectively support training operations for models with moderate checkpoint frequency requirements while maintaining cost-effectiveness for large-capacity storage needs.
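If checkpoints are written synchronously, training pauses while they flush, and the cost can be estimated from checkpoint size, sustained write bandwidth, and the compute time between checkpoints. The 200 GB checkpoint, 30-minute interval, and the 2 GB/s and 10 GB/s write rates below are hypothetical; the sustained write rate of many NAND drives is lower than the headline sequential figure once on-drive caches fill, and asynchronous checkpointing can hide much of this stall.

```python
def checkpoint_overhead(checkpoint_gb: float, write_gb_per_s: float,
                        compute_s_between: float) -> float:
    """Fraction of wall-clock time spent blocked on synchronous checkpoint writes."""
    stall_s = checkpoint_gb / write_gb_per_s
    return stall_s / (compute_s_between + stall_s)


# Hypothetical: 200 GB checkpoint after every 30 minutes of compute.
for bw in (2.0, 10.0):  # assumed sustained write rates in GB/s
    print(f"{bw:>4} GB/s -> {checkpoint_overhead(200, bw, 1800):.1%} of wall time")
```

Under these assumptions the stall falls from about 5.3% to about 1.1% of wall time, which shows why sustained write bandwidth, rather than peak read speed, governs checkpoint-heavy phases.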

Optane technology has demonstrated exceptional performance in AI applications involving frequent random access patterns or write-intensive operations. Benchmarks of recommendation systems utilizing Optane storage for feature stores have shown substantial improvements in query response times and system throughput compared to traditional SSD configurations. Similarly, reinforcement learning applications with large experience replay buffers have demonstrated significant training acceleration when utilizing Optane technology for rapid random access to historical training data.

The performance characteristics of different storage technologies can also vary significantly depending on the specific AI framework and implementation details. Deep learning frameworks with sophisticated data pipeline optimizations may achieve different relative performance improvements compared to simpler implementations, highlighting the importance of comprehensive testing under realistic deployment conditions.

Integration with AI Frameworks and Ecosystems

The successful integration of advanced storage technologies with modern AI development ecosystems requires careful consideration of framework-specific optimizations, data pipeline architectures, and compatibility requirements. Popular machine learning frameworks such as TensorFlow, PyTorch, and JAX have evolved sophisticated data loading and preprocessing systems that can leverage high-performance storage technologies effectively, but optimal configuration often requires detailed understanding of both the framework internals and storage technology characteristics.

TensorFlow’s tf.data API provides extensive optimization capabilities that can effectively utilize high-bandwidth NVMe storage through parallel data loading, prefetching, and caching mechanisms. Distributed training jobs may also benefit from the consistent performance characteristics of Optane technology where parameters, embeddings, or checkpoints are staged on storage, although gradient aggregation and parameter synchronization themselves normally take place in accelerator or host memory and over the network. Features such as Dataset.cache(), which can write preprocessed elements to a file on fast local storage, further tighten the integration between the framework and the underlying storage infrastructure.
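In tf.data, parallelism comes from arguments such as num_parallel_reads on TFRecordDataset and num_parallel_calls on Dataset.map, with Dataset.prefetch overlapping I/O and computation. To show the idea without depending on TensorFlow, the sketch below reproduces the pattern with Python’s standard library: a bounded number of reads run concurrently and results are consumed in input order. It illustrates the technique and is not how tf.data is implemented; num_parallel and buffer_size are this sketch’s own parameters, and the shard paths in the usage comment are hypothetical.

```python
from collections import deque
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Deque, Iterable, Iterator, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def prefetch_map(fn: Callable[[T], R], items: Iterable[T],
                 num_parallel: int = 8, buffer_size: int = 16) -> Iterator[R]:
    """Run fn over items on a thread pool, keeping at most buffer_size calls in
    flight, and yield results in input order (a rough analogue of
    map(num_parallel_calls=...) followed by prefetch(buffer_size))."""
    with ThreadPoolExecutor(max_workers=num_parallel) as pool:
        pending: Deque[Future] = deque()
        for item in items:
            pending.append(pool.submit(fn, item))
            if len(pending) >= buffer_size:
                yield pending.popleft().result()
        while pending:
            yield pending.popleft().result()


def read_file(path: str) -> bytes:
    """Example fn: read one shard; the GIL is released during the read syscall."""
    with open(path, "rb") as f:
        return f.read()


# Usage (hypothetical shard paths):
# for shard in prefetch_map(read_file, ["/data/shard-000.bin", "/data/shard-001.bin"]):
#     ...decode the shard and feed the training step...
```

Threads are a reasonable fit here because the work is dominated by I/O waits; CPU-heavy decoding would instead call for processes, as PyTorch’s worker processes or tf.data’s C++ runtime provide.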

PyTorch’s DataLoader offers similar capabilities for leveraging high-performance storage, with additional flexibility for custom data loading strategies that can be tuned to specific storage technology characteristics. Custom map-style datasets that issue many small, random reads per batch are the case where Optane’s low latency is most likely to translate into faster training.
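A map-style dataset only needs __len__ and __getitem__, which is what torch.utils.data.DataLoader relies on for random sampling across worker processes. The hypothetical class below serves fixed-size binary records from a single file using os.pread (POSIX only), so concurrent workers never contend over a shared file position; the record size and file layout are assumptions, and real datasets would usually decode the returned bytes into tensors.

```python
import os
from typing import Optional


class FixedRecordDataset:
    """Map-style dataset over a file of fixed-size binary records.

    Implements __len__ and __getitem__, the methods DataLoader uses for a
    map-style dataset, without importing torch here.
    """

    def __init__(self, path: str, record_bytes: int) -> None:
        self.path = path
        self.record_bytes = record_bytes
        self._num_records = os.path.getsize(path) // record_bytes
        self._fd: Optional[int] = None  # opened lazily, typically inside each worker

    def __len__(self) -> int:
        return self._num_records

    def __getitem__(self, index: int) -> bytes:
        if not 0 <= index < self._num_records:
            raise IndexError(index)
        if self._fd is None:
            self._fd = os.open(self.path, os.O_RDONLY)
        # pread reads at an explicit offset, so workers never share a file position.
        return os.pread(self._fd, self.record_bytes, index * self.record_bytes)

    def __getstate__(self) -> dict:
        # A descriptor number is meaningless in another process (e.g. spawned workers).
        state = self.__dict__.copy()
        state["_fd"] = None
        return state
```

Passed to DataLoader with shuffle=True and several workers, this produces exactly the small random-read pattern discussed above.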

The integration considerations extend beyond individual framework optimizations to encompass broader ecosystem compatibility, including container orchestration systems, distributed computing platforms, and cloud deployment environments. Modern AI deployment architectures increasingly rely on Kubernetes and similar orchestration systems that must be configured appropriately to expose and utilize advanced storage technology capabilities effectively.

Different AI applications and use cases demonstrate varying degrees of suitability for NVMe SSD versus Optane storage technologies. Understanding these application-specific performance requirements enables optimal technology selection that aligns storage capabilities with workload characteristics, ensuring maximum efficiency and cost-effectiveness in production AI deployments.

Future Outlook and Technology Evolution

The storage technology landscape for AI applications continues to evolve rapidly, with emerging technologies and architectural innovations promising further improvements in performance, efficiency, and cost-effectiveness. Faster storage interfaces, such as PCIe 5.0 (already used in current NVMe SSDs) and the newer PCIe 6.0 specification, together with emerging memory technologies, will likely provide new opportunities for AI performance optimization while potentially reshaping the competitive landscape between different storage approaches.

The evolution of NVMe technology continues with developments in areas such as computational storage, which integrates processing capabilities directly into storage devices to enable data processing closer to storage locations. These innovations could provide significant benefits for AI applications by reducing data movement overhead and enabling more efficient preprocessing and feature extraction operations. Additionally, advances in NAND flash memory technology, including higher 3D NAND layer counts and improved error correction capabilities, promise continued improvements in performance and cost-effectiveness.

Optane’s trajectory is different. In 2022 Intel announced that it was winding down its Optane business, so no further generations of 3D XPoint products are expected, and existing deployments rely on current Optane SSDs and persistent memory modules for the remainder of their support lifecycle. The development of alternative persistent memory technologies from other vendors may fill this gap, increase competition, and drive innovation in this space.

The broader trend toward heterogeneous computing architectures, including the integration of specialized AI accelerators, neuromorphic processors, and quantum computing elements, will likely create new requirements and opportunities for storage technology optimization. The storage subsystem will need to evolve to support these diverse computing paradigms while maintaining compatibility with existing AI development workflows and deployment infrastructure.

Conclusion and Strategic Recommendations

The selection between NVMe SSDs and Optane technology for AI storage applications requires careful analysis of specific workload characteristics, performance requirements, and economic constraints. NVMe SSDs provide an excellent balance of performance, capacity, and cost-effectiveness for many AI applications, particularly those emphasizing sequential data access patterns and requiring large storage capacities. The mature ecosystem and competitive pricing make NVMe technology an attractive choice for organizations seeking to optimize AI infrastructure while maintaining reasonable budget constraints.

Optane technology offers compelling advantages for AI applications with specific performance requirements, particularly those involving frequent random access patterns or write-intensive operations, or requiring consistent low-latency performance. While the premium pricing, and Intel’s decision to wind down the Optane product line, may limit adoption in cost-sensitive or long-lived deployments, the unique performance characteristics and architectural flexibility can provide substantial value in applications where these capabilities translate to significant efficiency improvements or enable innovative solutions that would not be feasible with traditional storage technologies.

The optimal approach for many organizations will likely involve a strategic combination of both technologies, leveraging NVMe SSDs for high-capacity general-purpose storage while utilizing Optane technology for specific high-performance use cases where its unique characteristics provide clear advantages. This hybrid approach can maximize the benefits of both technologies while optimizing overall system cost and performance.

As AI applications continue to evolve and new storage technologies emerge, organizations should maintain flexibility in their storage architecture decisions and continue to evaluate new options based on changing workload requirements and technology capabilities. The storage subsystem will remain a critical component of AI infrastructure, and strategic investment in appropriate technologies can provide significant competitive advantages through improved development velocity, enhanced system performance, and reduced operational overhead.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of storage technologies and their applications in AI systems. Readers should conduct their own research and consider their specific requirements when selecting storage solutions for AI applications. Performance characteristics and cost considerations may vary depending on specific use cases, system configurations, and vendor implementations. Technology specifications and availability are subject to change as products evolve and new solutions enter the market.