The reliability and robustness of machine learning systems have become central concerns as AI applications spread into critical infrastructure, healthcare, financial services, and autonomous technologies. Unlike traditional software, which follows deterministic logic paths, machine learning systems can behave unpredictably under stress, and those behaviors can lead to catastrophic failures if they are not understood and mitigated. This article surveys the stress testing techniques used to identify breaking points, analyze failure modes, and build testing frameworks that keep machine learning systems reliable under extreme operational conditions.

The complexity of modern AI systems demands testing approaches that go beyond traditional software validation to address the challenges posed by probabilistic decision-making, data-dependent behavior, and emergent system properties.

Understanding AI System Vulnerability Landscapes

Machine learning systems exhibit fundamentally different failure characteristics compared to conventional software applications, primarily due to their probabilistic nature and dependence on training data distributions. These systems can fail gracefully under some conditions while experiencing catastrophic degradation under seemingly similar circumstances, making traditional stress testing approaches insufficient for comprehensive reliability assessment. The vulnerability landscape of AI systems encompasses multiple dimensions including adversarial inputs, distribution shift, model drift, resource constraints, and emergent behaviors that arise from complex interactions between different system components.

The challenge of AI stress testing begins with recognizing that machine learning models operate within learned decision boundaries that may not generalize effectively to extreme or unusual input conditions. Unlike rule-based systems that have predictable failure modes, AI systems can exhibit unexpected behaviors when confronted with inputs that fall outside their training distribution or when system resources become constrained. This unpredictability necessitates comprehensive stress testing strategies that systematically explore the operational envelope of AI systems to identify potential failure modes before they manifest in production environments.

Comprehensive Breaking Point Analysis Frameworks

Effective breaking point analysis for machine learning systems requires sophisticated frameworks that can systematically stress-test multiple system dimensions simultaneously while maintaining visibility into internal system states and decision processes. These frameworks must account for the multi-layered nature of AI systems, which typically include data preprocessing components, feature extraction mechanisms, model inference engines, post-processing logic, and integration interfaces with external systems. Each layer introduces potential failure modes that may not manifest until the system operates under extreme conditions or encounters adversarial inputs designed to exploit specific vulnerabilities.

The development of comprehensive breaking point analysis frameworks involves creating systematic methodologies for generating stress conditions that span the entire operational envelope of AI systems. This includes creating synthetic datasets that push the boundaries of acceptable input ranges, implementing resource constraint scenarios that test system behavior under limited computational or memory resources, and developing adversarial testing protocols that attempt to exploit known vulnerabilities in machine learning architectures. The framework must also incorporate mechanisms for monitoring system performance degradation patterns and identifying the specific conditions that trigger different types of failures.
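As a minimal sketch of how a breaking point might be located along a single stress dimension, the snippet below bisects over a stress level until a quality metric drops below a threshold. The function name `find_breaking_point`, the linear degradation curve, and the 0.80 threshold are all illustrative assumptions, and the search only makes sense if the metric degrades monotonically with stress.

```python
def find_breaking_point(metric_at, threshold, low, high, tolerance=1e-3):
    """Bisect for the smallest stress level where metric_at(level) < threshold.

    Assumes the metric degrades monotonically as the stress level rises.
    Returns None if the metric never drops below threshold within [low, high].
    """
    if metric_at(low) < threshold:
        return low
    if metric_at(high) >= threshold:
        return None
    while high - low > tolerance:
        mid = (low + high) / 2
        if metric_at(mid) < threshold:
            high = mid  # failure already present at mid: look lower
        else:
            low = mid   # still healthy at mid: look higher
    return high


# Hypothetical degradation curve: accuracy falls linearly with the noise level.
def toy_accuracy(level):
    return max(0.0, 0.95 - 0.5 * level)


breaking_point = find_breaking_point(toy_accuracy, threshold=0.80, low=0.0, high=1.0)
print(f"accuracy drops below 0.80 near stress level {breaking_point:.3f}")
```

In practice `metric_at` would run the real system at the given stress level, so each evaluation is expensive; bisection keeps the number of evaluations logarithmic in the width of the search range.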

Adversarial Input Generation and Resilience Testing

Adversarial input generation represents one of the most critical aspects of AI stress testing, as it directly addresses the vulnerability of machine learning systems to carefully crafted inputs designed to cause misclassification or system failure. These adversarial examples often appear normal to human observers but contain subtle perturbations that can cause even sophisticated AI systems to make egregious errors or behave in unexpected ways. The generation of effective adversarial inputs requires deep understanding of model architectures, loss functions, and optimization landscapes that govern how machine learning systems process and respond to different types of input data.

The systematic generation of adversarial inputs involves implementing multiple attack strategies ranging from gradient-based methods that exploit the differentiable nature of neural networks to evolutionary approaches that use genetic algorithms to discover inputs that maximize system confusion or failure rates. These testing methodologies must account for different threat models including white-box scenarios where attackers have complete knowledge of system internals, black-box situations where only input-output behavior is observable, and gray-box conditions that represent realistic operational environments where partial system knowledge may be available to potential attackers.
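To make the gradient-based, white-box case concrete, here is a small sketch of the fast gradient sign method (FGSM), one well-known attack of this kind, applied to a toy logistic regression model. The weights, input, and `epsilon` are made-up values chosen for illustration; a real test would target the actual model through its framework's autodiff.

```python
import math


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def fgsm_perturb(weights, bias, x, y_true, epsilon):
    """One FGSM step: shift every feature by epsilon in the direction
    that increases the cross-entropy loss for the true label."""
    p = predict_proba(weights, bias, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y_true) * w_i.
    grad = [(p - y_true) * w for w in weights]
    signs = [(g > 0) - (g < 0) for g in grad]
    return [xi + epsilon * s for xi, s in zip(x, signs)]


# Hypothetical toy model and input (white-box: weights are known to the attacker).
weights, bias = [2.0, -1.0], 0.0
x, y_true = [0.3, 0.1], 1

x_adv = fgsm_perturb(weights, bias, x, y_true, epsilon=0.3)
clean_p = predict_proba(weights, bias, x)
adv_p = predict_proba(weights, bias, x_adv)
print(f"clean P(class 1) = {clean_p:.3f}, adversarial P(class 1) = {adv_p:.3f}")
```

With these values the perturbation is bounded by 0.3 per feature, yet it pushes the predicted probability across the 0.5 decision threshold, which is the kind of behavior an adversarial test suite is meant to surface.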

Resilience testing extends beyond adversarial input generation to evaluate system behavior under other forms of corruption. At inference time this includes noise injection into inputs; at training time it includes data poisoning and the systematic introduction of bias. These tests help identify not only obvious failure modes but also subtle degradation patterns that accumulate over time and could go unnoticed without systematic monitoring and testing protocols.
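A noise injection sweep can be sketched in a few lines. The example below assumes a hypothetical stand-in classifier (`classify`) and synthetic data; it adds zero-mean Gaussian noise of increasing strength and records how accuracy falls relative to the clean predictions.

```python
import random


def classify(x):
    """Hypothetical stand-in model: thresholds the sum of the features."""
    return 1 if sum(x) > 0 else 0


def accuracy_under_noise(model, samples, labels, sigma, rng):
    """Accuracy after adding zero-mean Gaussian noise to every feature."""
    correct = 0
    for x, y in zip(samples, labels):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        correct += int(model(noisy) == y)
    return correct / len(samples)


rng = random.Random(0)
samples = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(500)]
labels = [classify(x) for x in samples]  # reference labels = clean predictions

# Sweep noise strength and record the degradation curve.
curve = {
    sigma: accuracy_under_noise(classify, samples, labels, sigma, rng)
    for sigma in (0.0, 0.1, 0.5, 2.0)
}
for sigma, acc in curve.items():
    print(f"sigma={sigma:<4} accuracy={acc:.3f}")
```

Plotting or thresholding this curve gives a simple picture of how quickly the model degrades, and whether the degradation is gradual or abrupt.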

Resource Constraint and Performance Degradation Analysis

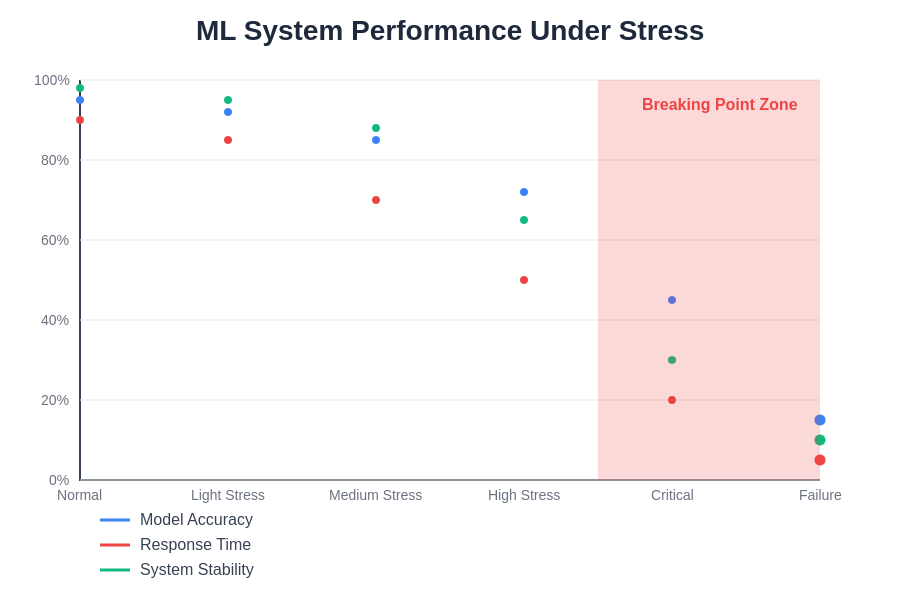

Machine learning systems often operate under varying resource constraints in production environments, and understanding how these systems behave as computational resources become limited is crucial for ensuring reliable operation across different deployment scenarios. Resource constraint analysis involves systematically reducing available computational resources including processing power, memory allocation, network bandwidth, and storage capacity while monitoring system performance and identifying the specific resource limitations that trigger different types of performance degradation or system failure.

The analysis of performance degradation under resource constraints requires sophisticated monitoring infrastructure that can track multiple system metrics simultaneously while correlating resource utilization patterns with model accuracy, inference latency, and system stability indicators. This analysis must account for the complex interdependencies between different resource types and how limitations in one area may cascade to affect overall system performance in unexpected ways. For example, memory constraints may force more frequent garbage collection cycles that increase inference latency, which in turn may trigger timeout conditions in downstream systems.

Performance degradation analysis must also consider the dynamic nature of resource availability in cloud computing environments and how auto-scaling mechanisms may interact with machine learning workloads to create complex feedback loops that can lead to system instability. Understanding these interactions requires comprehensive testing scenarios that simulate realistic production environments including variable load patterns, resource contention with other applications, and the impact of infrastructure failures on AI system performance.

Data Distribution Shift and Model Drift Detection

One of the most insidious challenges in maintaining reliable AI systems involves detecting and responding to gradual changes in input data distributions that can cause model performance to degrade over time without triggering obvious failure conditions. Data distribution shift represents a fundamental challenge for machine learning systems because models are trained on historical data that may not accurately represent future operational conditions, leading to a gradual erosion of system reliability that may not be detected until significant performance degradation has already occurred.

Effective detection of data distribution shift requires implementing comprehensive monitoring systems that can track multiple statistical properties of input data streams while maintaining historical baselines that enable identification of significant deviations from expected patterns. These monitoring systems must be sensitive enough to detect subtle changes in data characteristics while being robust enough to avoid false alarms triggered by normal operational variations. The challenge involves distinguishing between acceptable variations in input data and systematic shifts that indicate potential model degradation or emerging threats to system reliability.
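One possible way to compare a live feature stream against a historical baseline is the two-sample Kolmogorov-Smirnov statistic, sketched below in plain Python. The choice of this test, the function names, and the significance level `alpha` are assumptions made for illustration; the critical value uses the standard large-sample approximation, and `alpha` is the knob that trades sensitivity against false alarms.

```python
import bisect
import math
import random


def ks_statistic(baseline, current):
    """Largest gap between the empirical CDFs of the two samples."""
    a, b = sorted(baseline), sorted(current)
    gap = 0.0
    for v in a + b:
        cdf_a = bisect.bisect_right(a, v) / len(a)
        cdf_b = bisect.bisect_right(b, v) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


def shift_detected(baseline, current, alpha=0.01):
    """Flag a shift when the KS statistic exceeds the approximate
    large-sample critical value for significance level alpha."""
    n, m = len(baseline), len(current)
    critical = math.sqrt(-0.5 * math.log(alpha / 2)) * math.sqrt((n + m) / (n * m))
    return ks_statistic(baseline, current) > critical


rng = random.Random(1)
baseline = [rng.gauss(0.0, 1.0) for _ in range(1000)]  # historical feature values
shifted = [rng.gauss(1.0, 1.0) for _ in range(1000)]   # mean has moved by one sigma
print("shift detected:", shift_detected(baseline, shifted))
```

A per-feature test like this does not capture changes in the joint distribution, so it is best read as a first-line alarm rather than a complete shift detector.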

Model drift detection extends beyond simple data distribution monitoring to encompass systematic tracking of model behavior patterns including prediction confidence distributions, error patterns, and decision boundary stability. This monitoring requires sophisticated analytical capabilities that can identify subtle changes in model behavior that may indicate degrading performance even when overall accuracy metrics appear stable. The detection system must also account for the complex interactions between different types of drift including concept drift where the underlying relationships between inputs and outputs change, and covariate shift where input distributions change while underlying relationships remain stable.
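Tracking prediction confidence distributions can be sketched with the population stability index (PSI), which compares a baseline histogram of confidences with a recent one. PSI is one option among several, and the bin count, the synthetic confidence values, and any alert threshold a team might attach to the score are assumptions of this example.

```python
import math


def histogram(values, bins=10):
    """Fraction of values falling in each equal-width bin on [0, 1]."""
    counts = [0] * bins
    for v in values:
        counts[min(int(v * bins), bins - 1)] += 1
    return [c / len(values) for c in counts]


def psi(expected, actual, bins=10, eps=1e-6):
    """Population stability index between two sets of confidence scores.
    eps keeps the logarithm finite when a bin is empty."""
    p = histogram(expected, bins)
    q = histogram(actual, bins)
    return sum((qi - pi) * math.log((qi + eps) / (pi + eps)) for pi, qi in zip(p, q))


# Hypothetical confidences: the model used to be confident on 80% of inputs,
# but recently only on 50% of them.
baseline_conf = [0.95] * 80 + [0.65] * 20
recent_conf = [0.95] * 50 + [0.65] * 50

score = psi(baseline_conf, recent_conf)
print(f"PSI = {score:.3f}")
```

A signal like this can move even when labelled accuracy is unavailable or looks stable, which is why it complements accuracy tracking instead of replacing it.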

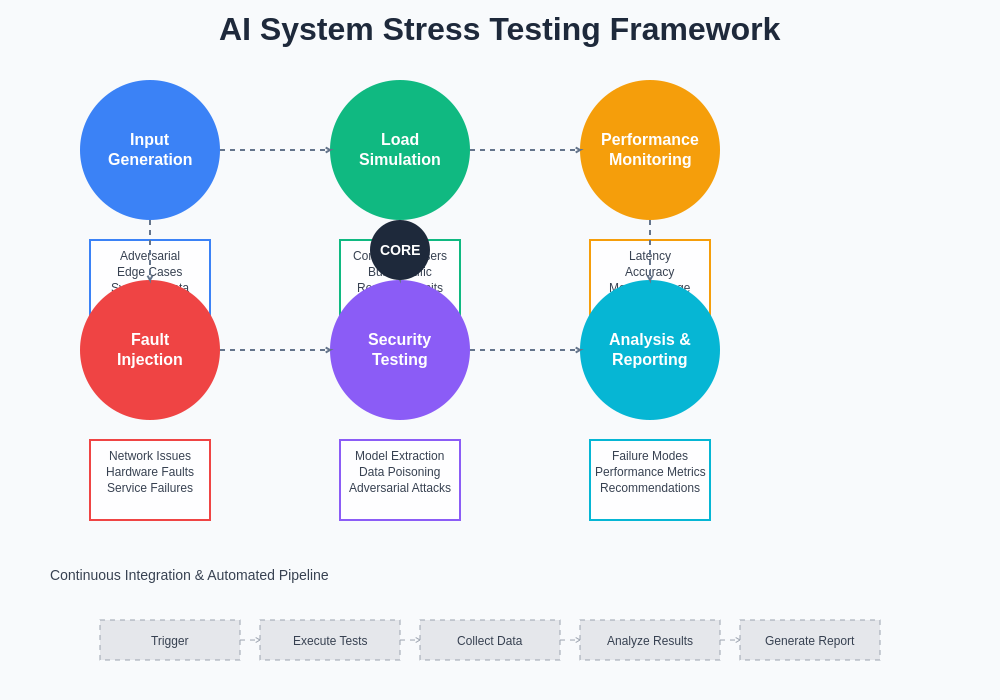

The comprehensive stress testing framework illustrates the multi-dimensional approach required for effective AI system validation, encompassing adversarial testing, resource constraint analysis, performance monitoring, and continuous evaluation protocols that ensure robust system operation across diverse operational conditions.

Scalability Testing and Load Distribution Analysis

The scalability characteristics of AI systems differ significantly from those of traditional web applications or database systems, primarily because machine learning inference is computationally intensive and system components depend on one another in complex ways. Scalability testing for AI systems must account for the non-linear relationship between input complexity and computational requirements, where seemingly minor increases in input size or complexity can result in disproportionate increases in processing time and resource utilization. For example, the cost of standard self-attention in transformer models grows quadratically with input sequence length.

Effective scalability testing requires implementing comprehensive load generation systems that can simulate realistic operational conditions including varying input complexities, concurrent request patterns, and burst load scenarios that may occur during peak usage periods. These testing systems must be capable of generating diverse input patterns that exercise different computational paths through machine learning models while maintaining realistic distributions of input characteristics that reflect actual operational conditions rather than synthetic test cases that may not reveal true system limitations.
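The following sketch shows the basic shape of a concurrent load generator with mixed input complexity. `fake_inference` is a hypothetical stand-in whose cost grows with input size; in a real test it would be replaced by a call to the system under test, and the request mix would be drawn from production traffic.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_inference(size):
    """Hypothetical stand-in for a model call; cost grows with input size."""
    time.sleep(0.0005 * size)
    return size


def timed_call(size):
    """Return the service time of a single call (queueing time excluded)."""
    start = time.perf_counter()
    fake_inference(size)
    return time.perf_counter() - start


def percentile(sorted_values, pct):
    """Nearest-rank style percentile on an already sorted list."""
    idx = min(len(sorted_values) - 1, int(pct / 100 * len(sorted_values)))
    return sorted_values[idx]


def run_load(sizes, concurrency):
    """Issue one request per entry in sizes using a fixed-size worker pool."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(timed_call, sizes))
    return {
        "count": len(latencies),
        "p50": percentile(latencies, 50),
        "p99": percentile(latencies, 99),
    }


sizes = [1, 2, 4, 8] * 25  # 100 requests with mixed input complexity
report = run_load(sizes, concurrency=10)
print(report)
```

Reporting tail percentiles alongside the median matters here because the largest inputs dominate the tail, which an average would hide.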

Load distribution analysis involves understanding how computational workloads are distributed across system resources and identifying potential bottlenecks that may limit overall system throughput or create instability under high load conditions. This analysis must consider the complex dependencies between preprocessing operations, model inference computations, post-processing logic, and result aggregation mechanisms that collectively determine system performance characteristics under different load conditions.

Fault Injection and Resilience Validation

Fault injection testing represents a critical component of comprehensive AI stress testing that involves deliberately introducing various types of system faults and monitoring how the AI system responds to these controlled failure conditions. This testing approach helps identify potential failure modes that may not be apparent during normal operation but could manifest during real-world scenarios where hardware failures, network interruptions, or software bugs introduce unexpected error conditions into the system operation.

The systematic approach to fault injection in AI systems requires sophisticated testing infrastructure that can introduce faults at multiple system layers including hardware-level failures such as memory corruption or processor errors, network-level issues including packet loss or connection timeouts, and software-level problems such as exception conditions or resource allocation failures. Each type of fault requires different injection mechanisms and monitoring approaches to ensure that the testing accurately reflects realistic failure scenarios while providing meaningful insights into system resilience characteristics.

Resilience validation extends beyond simple fault injection to encompass comprehensive evaluation of system recovery mechanisms including checkpoint and restart capabilities, graceful degradation protocols, and fail-safe behaviors that ensure system safety even when primary operational modes become unavailable. This validation process must verify that AI systems can maintain acceptable performance levels even when operating under degraded conditions and that recovery mechanisms function correctly across different types of failure scenarios.
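A software-level version of this idea can be sketched as a wrapper that injects faults into a model call, paired with a caller that retries and then degrades to a conservative fallback. Everything named here (`InjectedFault`, `inject_faults`, `resilient_predict`, the toy models) is hypothetical, and hardware-level or network-level injection would need dedicated tooling.

```python
import random


class InjectedFault(RuntimeError):
    """Raised by the test harness to simulate a failing component."""


def inject_faults(fn, failure_rate, rng):
    """Wrap fn so that roughly failure_rate of calls raise InjectedFault."""
    def wrapped(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedFault("simulated fault")
        return fn(*args, **kwargs)
    return wrapped


def resilient_predict(primary, fallback, x, retries=2):
    """Try the primary model up to retries + 1 times, then degrade."""
    for _ in range(retries + 1):
        try:
            return primary(x), "primary"
        except InjectedFault:
            continue
    return fallback(x), "fallback"


def primary_model(x):   # hypothetical full model
    return x * 2


def safe_default(x):    # hypothetical conservative fallback
    return 0


rng = random.Random(42)
flaky_model = inject_faults(primary_model, failure_rate=0.5, rng=rng)
outcomes = [resilient_predict(flaky_model, safe_default, 3)[1] for _ in range(1000)]
fallback_share = outcomes.count("fallback") / len(outcomes)
print(f"share of requests served by the fallback: {fallback_share:.3f}")
```

With a 50% injected failure rate and three attempts, about one request in eight should reach the fallback, so a measured share far from that would itself indicate a problem in the recovery path.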

Continuous Monitoring and Adaptive Testing Strategies

The dynamic nature of AI systems operating in production environments requires continuous monitoring strategies that can adapt to changing operational conditions while maintaining comprehensive visibility into system behavior patterns and performance characteristics. Traditional static testing approaches are insufficient for AI systems because these systems may exhibit different behaviors as they encounter new data patterns, operate under varying resource constraints, or interact with evolving external systems that may introduce new sources of variability or stress.

Continuous monitoring systems for AI applications must implement sophisticated analytical capabilities that can process large volumes of operational data while identifying subtle patterns that may indicate emerging issues or degrading performance before these problems manifest as obvious system failures. These monitoring systems must be capable of tracking multiple performance dimensions simultaneously while correlating different types of metrics to provide comprehensive insights into system health and operational status.

Adaptive testing strategies involve implementing automated testing protocols that can adjust their testing parameters and focus areas based on observed system behavior patterns and emerging operational challenges. These adaptive systems must be capable of identifying new potential failure modes as they emerge and automatically generating appropriate test cases to validate system behavior under these newly identified stress conditions. The adaptive approach ensures that testing efforts remain relevant and effective even as system characteristics evolve and new operational challenges emerge.
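One simple way to let observed behavior steer testing effort is to split the next round's test budget in proportion to each category's recent failure rate. The sketch below is an assumption-laden illustration: the category names, the history counts, and the smoothing scheme are invented for the example.

```python
def allocate_budget(failures, runs, total_budget):
    """Split total_budget test cases across categories in proportion to
    each category's smoothed observed failure rate."""
    # Laplace smoothing keeps categories with zero failures in rotation.
    rates = {name: (failures.get(name, 0) + 1) / (runs[name] + 2) for name in runs}
    total_rate = sum(rates.values())
    # Rounding means the parts may not sum exactly to total_budget.
    return {name: round(total_budget * rate / total_rate) for name, rate in rates.items()}


# Hypothetical results from the previous testing round.
history_runs = {"adversarial": 100, "noise": 100, "load": 100}
history_failures = {"adversarial": 20, "noise": 5, "load": 0}

plan = allocate_budget(history_failures, history_runs, total_budget=1000)
print(plan)
```

The smoothing term is the design choice worth noting: without it, a category that happened to pass every test once would never be exercised again.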

Performance degradation in machine learning systems depends on several interacting stress factors, which is why multi-dimensional testing is needed to identify potential failure modes before they affect production operations.

Security Testing and Vulnerability Assessment

Security testing for AI systems encompasses a broad range of specialized testing approaches that address the unique security challenges posed by machine learning applications including model extraction attacks, membership inference vulnerabilities, and backdoor injection threats that can compromise system integrity in ways that traditional security testing approaches may not detect. These security challenges require sophisticated testing methodologies that can systematically evaluate system resilience against various forms of adversarial attacks while ensuring that security measures do not compromise system functionality or performance.

The comprehensive approach to AI security testing involves implementing multi-layered testing protocols that evaluate system security at different levels including input validation mechanisms, model protection measures, output sanitization procedures, and access control implementations that collectively determine the overall security posture of AI systems. Each security layer requires different testing approaches and evaluation criteria to ensure comprehensive coverage of potential attack vectors and vulnerability scenarios.

Vulnerability assessment for AI systems must account for the evolving nature of security threats in the machine learning domain including newly discovered attack methodologies and emerging threat vectors that may not be addressed by existing security measures. This assessment process requires continuous updating of testing protocols and threat models to ensure that security evaluations remain current and effective against the latest known attack techniques while anticipating potential future threat developments.

Integration Testing and System Interaction Analysis

AI systems rarely operate in isolation but instead function as components within larger system architectures that include databases, web services, user interfaces, and external APIs that collectively determine overall system behavior and performance characteristics. Integration testing for AI systems must account for the complex interactions between machine learning components and these external systems while ensuring that the AI system performs reliably even when external dependencies experience failures or performance degradation.

The systematic approach to integration testing requires implementing comprehensive test environments that accurately simulate production system architectures including realistic data flows, timing constraints, and error handling mechanisms that govern how AI systems interact with external components. These test environments must be capable of simulating various failure scenarios in external systems while monitoring how these failures propagate through the AI system and affect overall application behavior.

System interaction analysis involves understanding the complex feedback loops and dependencies that exist between AI systems and their operational environments including how changes in external system performance may affect AI system behavior and how AI system outputs may influence the behavior of downstream systems. This analysis requires sophisticated monitoring and analytical capabilities that can track system interactions across multiple time scales and identify potential instability conditions that may arise from complex system interdependencies.

Performance Benchmarking and Baseline Establishment

Effective stress testing of AI systems requires establishing comprehensive performance baselines that provide reference points for evaluating system behavior under different operational conditions and stress scenarios. These baselines must encompass multiple performance dimensions including accuracy metrics, latency characteristics, resource utilization patterns, and stability indicators that collectively define acceptable system performance levels across different operational scenarios.

The establishment of meaningful performance baselines requires systematic evaluation of AI system behavior across representative operational conditions including various input data characteristics, load patterns, and environmental conditions that reflect realistic deployment scenarios. These baseline measurements must account for the inherent variability in AI system performance while identifying stable performance characteristics that can serve as reliable reference points for ongoing monitoring and testing activities.
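A baseline that accounts for run-to-run variability can be as simple as a mean and standard deviation per metric, with later measurements compared against a tolerance band. The latency figures and the three-sigma band below are illustrative assumptions, not recommended values.

```python
import statistics


def build_baseline(measurements):
    """Summarize repeated measurements of one metric."""
    return {
        "mean": statistics.mean(measurements),
        "stdev": statistics.stdev(measurements),
    }


def is_regression(baseline, value, k=3.0):
    """Flag a value more than k standard deviations above the baseline mean
    (suitable for metrics where higher is worse, such as latency)."""
    return value > baseline["mean"] + k * baseline["stdev"]


# Hypothetical inference latencies in milliseconds from repeated baseline runs.
latency_baseline = build_baseline([102, 98, 101, 99, 100])

for observed in (104, 110):
    status = "regression" if is_regression(latency_baseline, observed) else "ok"
    print(f"{observed} ms -> {status}")
```

Storing the spread alongside the mean is what lets later stress runs distinguish normal variation from genuine degradation.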

Performance benchmarking for AI systems must also account for the evolving nature of system requirements and operational conditions that may necessitate periodic updates to baseline performance expectations. This adaptive approach to benchmarking ensures that performance evaluations remain relevant and meaningful even as system capabilities evolve and operational requirements change over time.

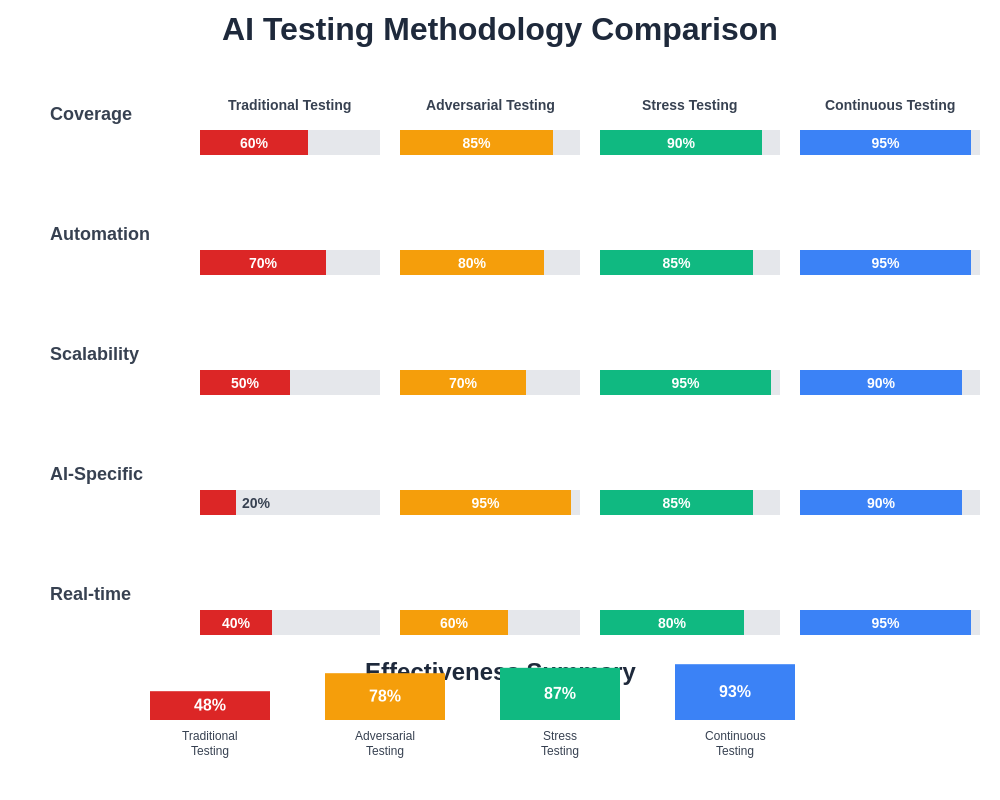

This detailed comparison of different AI testing methodologies highlights the strengths and limitations of various approaches while providing guidance for selecting appropriate testing strategies based on specific system requirements and operational constraints.

Automated Testing Pipeline Development

The complexity and scale of modern AI systems necessitate sophisticated automated testing pipelines that can systematically evaluate system behavior across multiple dimensions while maintaining consistent testing coverage and reducing the manual effort required for comprehensive system validation. These automated pipelines must be capable of coordinating multiple types of testing activities including functional validation, performance evaluation, security assessment, and resilience testing while providing comprehensive reporting and analysis capabilities.

The development of effective automated testing pipelines for AI systems requires implementing flexible testing frameworks that can adapt to different model architectures, input data characteristics, and operational requirements while maintaining consistent testing quality and coverage. These frameworks must support both scheduled testing activities that provide regular system health assessments and triggered testing protocols that can respond to specific events or conditions that may indicate potential system issues.

Automated testing pipelines must also incorporate sophisticated result analysis capabilities that can process large volumes of testing data while identifying meaningful patterns and trends that provide insights into system behavior and performance characteristics. These analysis capabilities must be able to correlate results across different types of tests while providing actionable recommendations for system improvements or additional testing activities that may be needed to address identified issues.

Future Directions and Emerging Challenges

The field of AI stress testing continues to evolve rapidly as new machine learning architectures, deployment patterns, and operational challenges emerge that require corresponding advances in testing methodologies and tools. Future developments in AI stress testing are likely to focus on addressing the unique challenges posed by emerging technologies such as federated learning systems, edge AI deployments, and multi-modal AI applications that combine different types of machine learning models within integrated system architectures.

The increasing adoption of AI systems in safety-critical applications including autonomous vehicles, medical diagnosis systems, and industrial control applications is driving demand for more rigorous and comprehensive testing approaches that can provide higher levels of assurance regarding system reliability and safety. These applications require testing methodologies that can validate system behavior under extreme conditions while providing statistical guarantees about system performance and failure rates that meet regulatory requirements and industry standards.
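One way to make "statistical guarantees about failure rates" concrete is the zero-failure bound, sketched here under the strong assumption that test cases are independent draws from the operational input distribution. If $n$ such tests produce no failures, every failure rate $p$ for which that outcome would have probability below $\alpha$ can be rejected, which bounds $p$ at confidence level $1-\alpha$:

$$
(1-p)^n \ge \alpha \quad\Longrightarrow\quad p \le 1 - \alpha^{1/n} \approx \frac{-\ln \alpha}{n}.
$$

For $\alpha = 0.05$ the bound is roughly $3/n$, often called the rule of three. Demonstrating $p \le 10^{-3}$ at 95% confidence therefore requires

$$
n \ge \frac{\ln 0.05}{\ln(1 - 10^{-3})} \approx 2995
$$

failure-free tests. The bound says nothing about inputs outside the sampled distribution, which is why it complements the adversarial and distribution-shift testing described earlier instead of replacing it.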

The continued evolution of adversarial attack techniques and security threats targeting AI systems necessitates ongoing development of defensive testing strategies and security validation approaches that can keep pace with emerging threat vectors while maintaining practical applicability for real-world system deployments. This evolutionary process requires close collaboration between security researchers, AI developers, and testing professionals to ensure that testing approaches remain effective against the latest known threats while anticipating potential future attack developments.

The integration of AI systems into increasingly complex distributed architectures including cloud computing environments, edge computing networks, and hybrid deployment scenarios introduces new testing challenges that require sophisticated approaches to system validation and performance evaluation. These distributed architectures create complex interdependencies and failure modes that may not be apparent from testing individual system components in isolation, necessitating comprehensive integration testing approaches that can validate system behavior across entire distributed system architectures.

Conclusion and Best Practices

The comprehensive stress testing of AI systems represents a critical component of responsible AI deployment that requires sophisticated methodologies, specialized tools, and ongoing commitment to system validation and improvement. The unique characteristics of machine learning systems including their probabilistic behavior, data dependence, and complex failure modes necessitate testing approaches that go well beyond traditional software validation techniques to address the specific challenges and risks associated with AI system deployment.

Effective AI stress testing requires implementing multi-dimensional testing strategies that systematically evaluate system behavior across different types of stress conditions while maintaining comprehensive visibility into system performance characteristics and failure modes. These testing strategies must be supported by sophisticated monitoring and analysis capabilities that can process large volumes of testing data while identifying meaningful patterns and trends that provide actionable insights for system improvement and risk mitigation.

The successful implementation of comprehensive AI stress testing programs requires significant investment in testing infrastructure, specialized expertise, and ongoing commitment to continuous improvement and adaptation as new challenges and requirements emerge. Organizations deploying AI systems must recognize that stress testing represents an essential component of system development and deployment rather than an optional validation activity that can be deferred or minimized without significant risk to system reliability and safety.

Disclaimer

This article is for educational and informational purposes only and does not constitute professional advice regarding AI system testing or deployment. The methodologies and techniques discussed should be adapted to specific system requirements and operational contexts. Organizations implementing AI stress testing programs should consult with qualified professionals and consider applicable regulatory requirements and industry standards. The effectiveness of testing approaches may vary depending on specific system characteristics, operational environments, and implementation details.