Deploying artificial intelligence systems in production requires validation that goes beyond traditional automated testing. User Acceptance Testing for AI systems is where human judgment meets machine intelligence: it checks that an AI application not only functions correctly but also meets real-world user expectations and business requirements. The methodology combines systematic testing protocols with human expertise to produce AI solutions that are reliable and centered on the user.

The evolution of AI testing requires continuous adaptation to new technologies and methodologies that ensure both technical accuracy and human usability in intelligent systems.

Understanding AI User Acceptance Testing

Traditional User Acceptance Testing focuses on verifying that software systems meet specified business requirements and user expectations through predefined test cases and scenarios. However, AI systems introduce unique complexities that require specialized validation approaches. Unlike deterministic software applications, AI systems exhibit probabilistic behavior, learn from data, and may produce different outputs for similar inputs based on their training and contextual understanding.

AI User Acceptance Testing encompasses the evaluation of machine learning models, natural language processing systems, computer vision applications, and other intelligent systems through human-centered validation processes. This approach recognizes that AI systems must not only demonstrate technical proficiency but also align with human cognitive patterns, ethical considerations, and practical usability requirements that cannot be fully captured through automated testing alone.

The integration of human expertise into AI validation processes ensures that systems perform appropriately across diverse scenarios, edge cases, and real-world conditions that may not have been anticipated during development. This human-in-the-loop approach provides essential feedback for improving AI system performance while maintaining the critical human oversight necessary for responsible AI deployment.

The Human-in-the-Loop Validation Framework

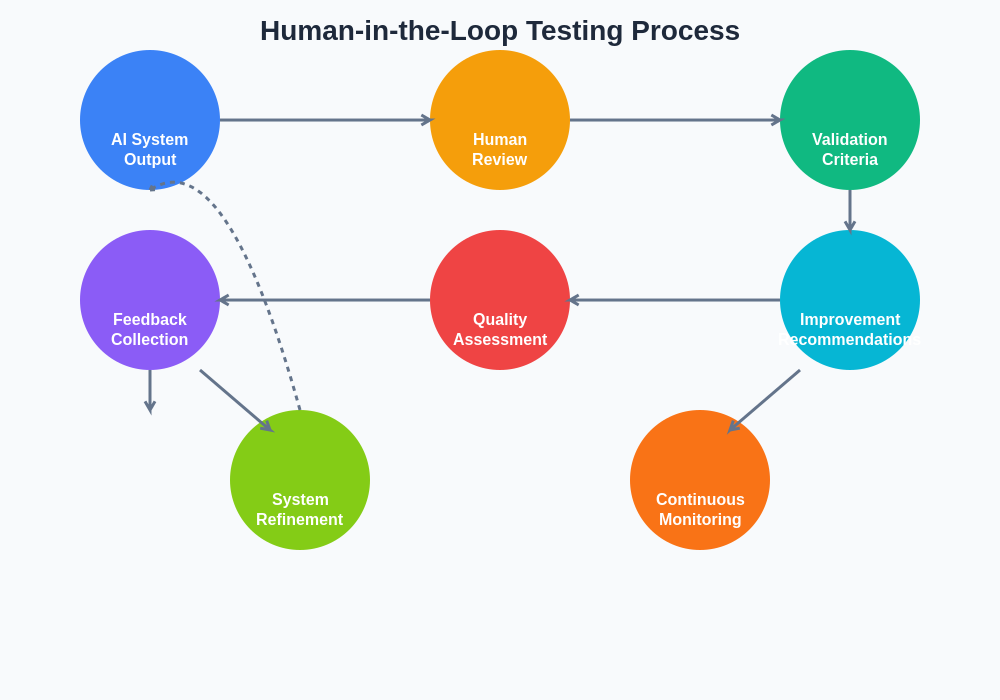

Human-in-the-loop validation represents a systematic approach to AI testing that strategically incorporates human judgment, expertise, and feedback throughout the validation process. This methodology recognizes that human intelligence provides essential context, ethical reasoning, and domain expertise that complement AI capabilities while identifying limitations and potential failure modes that automated testing might miss.

The framework operates on multiple levels, from individual prediction validation to system-wide behavior assessment. Human validators examine AI outputs for accuracy, appropriateness, bias, and alignment with intended use cases. This process involves both subject matter experts who understand the domain-specific requirements and end users who represent the ultimate consumers of AI-powered applications.
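As a minimal sketch of validation at the level of individual predictions, the snippet below combines several validators' verdicts on each AI output and marks the outputs where validators disagree. The label names (`accept`, `reject`), the `aggregate_verdicts` helper, and the 0.75 agreement cutoff are illustrative assumptions, not part of any standard.

```python
from collections import Counter

def aggregate_verdicts(verdicts_by_item, min_agreement=0.75):
    """Summarize human verdicts for each AI output.

    verdicts_by_item maps an output id to a list of validator labels.
    The labels and the agreement cutoff are hypothetical choices.
    """
    summary = {}
    for item_id, verdicts in verdicts_by_item.items():
        if not verdicts:
            continue  # nothing to aggregate for this output
        label, votes = Counter(verdicts).most_common(1)[0]
        agreement = votes / len(verdicts)
        summary[item_id] = {
            "majority": label,
            "agreement": agreement,
            # Low agreement suggests the output needs a joint review
            # by subject matter experts and end users.
            "needs_discussion": agreement < min_agreement,
        }
    return summary

reviews = {
    "out-1": ["accept", "accept", "accept", "accept"],
    "out-2": ["accept", "reject", "reject", "accept"],
}
result = aggregate_verdicts(reviews)
for item_id, info in result.items():
    print(item_id, info)
```

In this example `out-2` splits the validators evenly, so it is marked for discussion rather than settled by a coin-flip majority.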

The combination of AI assistance in testing with human oversight creates a robust validation ecosystem that ensures both technical excellence and practical usability.

The human-in-the-loop approach also facilitates continuous learning and improvement of AI systems through feedback mechanisms that capture nuanced human insights and preferences. This iterative process helps refine AI behavior to better align with human expectations and requirements while maintaining system performance and reliability.

Establishing Validation Criteria and Metrics

Effective AI User Acceptance Testing requires the establishment of comprehensive validation criteria that address both technical performance and human-centered outcomes. These criteria must encompass accuracy metrics, usability assessments, ethical considerations, and business value indicators that collectively determine the acceptability of AI system performance in real-world applications.

Technical validation criteria include traditional metrics such as precision, recall, F1 score, and accuracy, and extend to fairness metrics, robustness assessments, and explainability evaluations. These measures give a quantitative foundation for assessing AI system performance and set baseline requirements for acceptable functionality.
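For readers who want the traditional metrics made concrete, here is a small sketch that computes precision, recall, F1, and accuracy for a binary classifier from human-verified labels. The `binary_metrics` function and the sample labels are illustrative assumptions; in practice a team would more likely rely on an established metrics library.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute precision, recall, F1, and accuracy for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    accuracy = correct / len(pairs) if pairs else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}

# Hypothetical labels: y_true verified by human reviewers, y_pred from the model.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)  # every metric is 0.75 for this sample
```

Acceptance thresholds for each metric are a business decision; the code only reports the numbers that such thresholds would be compared against.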

Human-centered validation criteria focus on user experience, cognitive load, trust factors, and practical utility of AI systems in real-world contexts. These criteria assess whether AI systems enhance human capabilities, integrate seamlessly into existing workflows, and provide value that justifies their implementation and ongoing maintenance requirements.

Business-oriented validation criteria evaluate the alignment between AI system performance and organizational objectives, including cost-effectiveness, scalability, maintainability, and strategic value creation. These criteria ensure that AI systems not only function correctly but also contribute meaningfully to business outcomes and user satisfaction.

Designing Human-Centered Test Scenarios

The development of effective test scenarios for AI systems requires careful consideration of real-world usage patterns, edge cases, and potential failure modes that may not be apparent through automated testing approaches. Human-centered test scenarios must reflect the complexity and unpredictability of actual user interactions while providing systematic coverage of critical functionality and use cases.

Scenario design begins with comprehensive user journey mapping that identifies key interaction points, decision nodes, and outcome expectations throughout the AI system usage lifecycle. These journeys must account for different user types, skill levels, and contextual factors that influence how individuals interact with and perceive AI system performance.

Edge case scenarios represent particularly important components of AI validation, as they test system behavior under unusual or unexpected conditions that may reveal hidden biases, performance limitations, or safety concerns. These scenarios often require human creativity and domain expertise to identify and properly evaluate, as they may involve subtle contextual factors that automated testing cannot adequately address.

The iterative nature of scenario refinement ensures that test cases evolve based on discovered issues, user feedback, and changing requirements. This continuous improvement process helps maintain the relevance and effectiveness of validation efforts as AI systems mature and deployment contexts evolve.

Implementing Bias Detection and Fairness Assessment

Bias detection and fairness assessment represent critical components of AI User Acceptance Testing that require sophisticated human judgment and domain expertise to identify and address effectively. AI systems can inadvertently perpetuate or amplify existing biases present in training data, algorithmic design choices, or evaluation methodologies, making human oversight essential for ensuring equitable and fair system behavior.

Fairness assessment involves evaluating AI system performance across different demographic groups, use cases, and contextual scenarios to identify disparities that may indicate biased behavior. This process requires both statistical analysis and qualitative evaluation by domain experts who understand the implications of differential performance across various user populations.
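The statistical half of this assessment can be sketched as a per-group report. The example below computes accuracy and selection rate (the share of positive predictions) for each group and the largest gap between groups, a quantity often called the demographic parity difference when applied to selection rates. The group names, records, and helper functions are hypothetical.

```python
from collections import defaultdict

def group_report(records):
    """records: iterable of (group, y_true, y_pred) with binary labels."""
    totals = defaultdict(lambda: {"n": 0, "correct": 0, "selected": 0})
    for group, y_true, y_pred in records:
        stats = totals[group]
        stats["n"] += 1
        stats["correct"] += int(y_true == y_pred)
        stats["selected"] += int(y_pred == 1)
    return {
        group: {
            "accuracy": s["correct"] / s["n"],
            "selection_rate": s["selected"] / s["n"],
        }
        for group, s in totals.items()
    }

def max_gap(report, metric):
    """Largest difference in a metric between any two groups."""
    values = [stats[metric] for stats in report.values()]
    return max(values) - min(values)

# Hypothetical evaluation records for two groups.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 0),
]
report = group_report(records)
print(report)
print("accuracy gap:", max_gap(report, "accuracy"))
print("selection-rate gap:", max_gap(report, "selection_rate"))
```

In this sample both groups have the same accuracy (0.75), yet the selection rates differ by 0.5. That is the kind of disparity a single aggregate metric hides, and whether it is acceptable is a question for the domain experts, not the script.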

Human validators play essential roles in identifying subtle forms of bias that may not be apparent through automated metrics alone. These include cultural biases, contextual appropriateness, and nuanced discriminatory patterns that require human understanding of social, cultural, and ethical considerations to properly evaluate and address.

The implementation of bias detection processes must include diverse validation teams that represent different perspectives, backgrounds, and expertise areas. This diversity ensures comprehensive coverage of potential bias sources while providing multiple viewpoints on fairness and appropriateness of AI system behavior across different contexts and user populations.

Usability and User Experience Validation

AI systems must not only function correctly but also provide intuitive, efficient, and satisfying user experiences that encourage adoption and effective utilization. Usability validation for AI systems involves unique considerations related to transparency, explainability, and user control that distinguish it from traditional software usability testing.

User experience validation encompasses the evaluation of AI system interfaces, interaction patterns, feedback mechanisms, and integration with existing user workflows. Human evaluators assess whether AI systems enhance or hinder user productivity, provide appropriate levels of automation and control, and maintain user confidence through reliable and predictable behavior.

The combination of research-backed methodologies with practical user feedback creates robust validation approaches for AI system usability.

Transparency and explainability represent particularly important aspects of AI user experience validation, as users need to understand AI system reasoning and limitations to effectively utilize these tools. Human evaluators assess the clarity, relevance, and usefulness of AI explanations while ensuring that transparency features enhance rather than complicate user interactions.

Performance Monitoring and Continuous Validation

AI systems require ongoing validation throughout their operational lifecycle, as performance may degrade over time due to data drift, changing user patterns, or evolving requirements. Continuous validation processes must combine automated monitoring with regular human evaluation to maintain system quality and user satisfaction over extended deployment periods.
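One common way to put a number on data drift is the Population Stability Index (PSI), which compares how a feature is distributed in a reference sample and in current production data. The sketch below is one possible implementation, assuming equal-width bins derived from the reference sample and a small smoothing constant for empty bins; the function name, bin count, and sample data are illustrative.

```python
import math

def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI = sum over bins of (cur - ref) * ln(cur / ref).

    Bin edges come from the reference sample; current values that fall
    outside that range are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))

# Hypothetical feature values: the production sample is shifted upward.
reference = [i / 100 for i in range(100)]
shifted = [x + 0.3 for x in reference]
psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, shifted)
print(psi_same, psi_shifted)
```

An unchanged distribution gives a PSI of zero and larger values indicate a larger shift. The level at which drift should trigger human evaluation is a judgment call for the validation team.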

Performance monitoring frameworks must capture both technical metrics and user-reported issues to provide comprehensive visibility into AI system behavior in production environments. These frameworks should include alerting mechanisms that trigger human review when performance degrades below acceptable thresholds or when unusual patterns are detected.
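A threshold-based alert of this kind can be sketched with a rolling window over recent labeled outcomes. The class name, window size, threshold, and minimum sample count below are all assumptions chosen for illustration; a production system would typically feed such a signal into its existing alerting stack.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent outcomes and signals
    when it falls below an acceptance threshold."""

    def __init__(self, window=100, threshold=0.9, min_samples=20):
        self.outcomes = deque(maxlen=window)  # old outcomes drop off automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def accuracy(self):
        """Accuracy over the current window; call after at least one record()."""
        return sum(self.outcomes) / len(self.outcomes)

    def record(self, was_correct):
        """Add one outcome; return True if human review should be triggered."""
        self.outcomes.append(bool(was_correct))
        if len(self.outcomes) < self.min_samples:
            return False  # too little data to judge
        return self.accuracy() < self.threshold

monitor = RollingAccuracyMonitor(window=10, threshold=0.8, min_samples=5)
stream = [True, True, True, True, True, False, False, True]
alerts = [monitor.record(ok) for ok in stream]
print(alerts)  # the alert fires from the seventh outcome onward
```

The `min_samples` guard prevents a single early error from paging a reviewer, at the cost of a short blind period after startup.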

Human-in-the-loop monitoring involves regular review of AI system outputs, user feedback analysis, and periodic comprehensive assessments that evaluate ongoing alignment with business objectives and user expectations. This continuous oversight ensures that AI systems maintain their intended functionality while adapting appropriately to changing conditions and requirements.

The integration of user feedback mechanisms into production AI systems enables continuous collection of human insights that inform ongoing validation and improvement efforts. These mechanisms must balance the need for comprehensive feedback with user convenience and privacy considerations while providing actionable insights for system enhancement.

Collaborative Validation Methodologies

Effective AI validation requires collaboration between multiple stakeholders, including data scientists, domain experts, end users, and quality assurance professionals, each contributing unique perspectives and expertise to the validation process. Collaborative methodologies must facilitate effective communication and coordination while ensuring comprehensive coverage of validation requirements and scenarios.

Cross-functional validation teams bring together diverse expertise areas to provide holistic assessment of AI system performance across technical, business, and user-centered dimensions. These teams must establish clear roles, responsibilities, and communication protocols that enable efficient collaboration while maintaining validation quality and consistency.

Stakeholder involvement strategies must account for different levels of technical expertise, availability constraints, and organizational priorities while ensuring meaningful participation in validation processes. This may involve providing training, creating accessible evaluation interfaces, and establishing feedback mechanisms that accommodate different stakeholder needs and preferences.

The documentation and knowledge sharing processes within collaborative validation efforts ensure that insights, lessons learned, and best practices are captured and disseminated across the organization. This knowledge management supports continuous improvement of validation methodologies while building organizational capabilities for future AI validation efforts.

Risk Assessment and Mitigation Strategies

AI systems introduce unique risks related to algorithmic decision-making, data privacy, security vulnerabilities, and potential unintended consequences that require specialized risk assessment and mitigation approaches. Human expertise is essential for identifying, evaluating, and addressing these risks through comprehensive validation processes.

Risk assessment for AI systems must consider both technical risks related to system performance and broader risks related to societal impact, ethical implications, and regulatory compliance. Human evaluators must assess the potential consequences of AI system failures, misuse, or unintended behavior across different scenarios and contexts.

Mitigation strategies for identified risks may include technical modifications, process improvements, additional safeguards, or deployment restrictions that reduce the likelihood or impact of adverse outcomes. These strategies must be validated through testing and evaluation to ensure their effectiveness while maintaining system functionality and user experience.

The ongoing nature of risk management for AI systems requires continuous monitoring, assessment, and adaptation of mitigation strategies as systems evolve and deployment contexts change. This dynamic approach ensures that risk management remains effective and relevant throughout the AI system lifecycle.

Regulatory Compliance and Standards Alignment

AI systems increasingly operate within regulatory frameworks and industry standards that require specific validation approaches and documentation practices. Human expertise is essential for interpreting regulatory requirements, ensuring compliance, and adapting validation processes to meet evolving regulatory expectations.

Compliance validation must address data protection requirements, algorithmic transparency obligations, fairness mandates, and safety standards that vary across different jurisdictions and application domains. Human validators must understand these requirements and ensure that AI systems meet applicable compliance obligations through appropriate testing and documentation.

Standards alignment involves ensuring that AI validation processes conform to relevant industry standards, best practice frameworks, and professional guidelines that govern AI development and deployment. This alignment helps ensure validation quality while facilitating regulatory approval and industry acceptance.

The documentation requirements for regulatory compliance often exceed those of traditional software systems, requiring comprehensive records of validation processes, results, and decision-making rationale. Human oversight ensures that documentation meets regulatory expectations while providing valuable insights for ongoing system improvement and compliance maintenance.

Quality Assurance Integration and Process Optimization

AI User Acceptance Testing must integrate effectively with broader quality assurance processes to ensure comprehensive coverage of system requirements while avoiding duplication of effort and maintaining efficiency. This integration requires careful coordination between AI-specific validation activities and traditional QA processes.

Process optimization involves streamlining validation workflows, automating routine tasks where appropriate, and focusing human effort on activities that require judgment, creativity, and domain expertise. This optimization helps maximize the value of human involvement while maintaining thorough validation coverage.

The development of reusable validation assets, including test scenarios, evaluation criteria, and assessment tools, supports efficient scaling of AI validation efforts across multiple projects and systems. These assets must be maintained and updated to reflect evolving requirements and lessons learned from validation experiences.

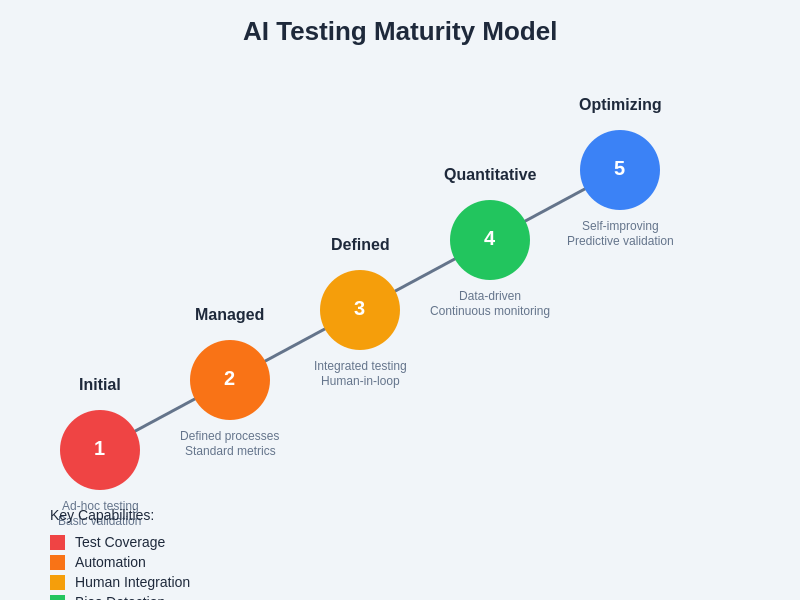

Continuous improvement of validation processes requires regular assessment of methodology effectiveness, stakeholder feedback incorporation, and adaptation to emerging best practices and technologies. This evolution ensures that validation approaches remain current and effective as AI technologies and deployment contexts continue to advance.

Training and Capability Development

Effective implementation of AI User Acceptance Testing requires building organizational capabilities and expertise in AI validation methodologies. Training programs must address both technical aspects of AI testing and human-centered evaluation approaches that require judgment and domain knowledge.

Validation team development involves identifying individuals with appropriate backgrounds, providing necessary training, and creating career development paths that encourage expertise building in AI validation. This capability development ensures sustainable validation capacity while building organizational knowledge assets.

The integration of AI validation skills into existing QA and testing roles requires targeted training programs that help professionals adapt their expertise to AI-specific requirements and challenges. This integration leverages existing testing expertise while building new capabilities needed for effective AI validation.

Knowledge sharing and community building within organizations and across industry networks support the development of AI validation expertise and the evolution of best practices. These communities provide forums for sharing experiences, discussing challenges, and collaborating on solutions to common validation problems.

Future Directions and Emerging Approaches

The field of AI User Acceptance Testing continues to evolve as AI technologies advance and deployment contexts become more complex and diverse. Emerging approaches include enhanced automation of routine validation tasks, improved integration of human feedback into AI system improvement processes, and development of more sophisticated validation methodologies for complex AI systems.

The integration of AI technologies into validation processes themselves presents opportunities for enhancing efficiency while maintaining human oversight and judgment. These AI-assisted validation approaches may automate routine assessments while flagging cases that require human review and expertise.
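A simple form of this triage is confidence-based routing: outputs the model is confident about are accepted automatically, and the rest are queued for a human. The sketch below assumes each prediction carries a `confidence` score between 0 and 1; the field names, the `route_predictions` helper, and the 0.85 floor are hypothetical.

```python
def route_predictions(predictions, confidence_floor=0.85):
    """Split predictions into auto-accepted items and a human review queue."""
    auto_accepted, needs_review = [], []
    for item in predictions:
        if item["confidence"] >= confidence_floor:
            auto_accepted.append(item)
        else:
            needs_review.append(item)
    # Put the least confident cases at the front of the review queue.
    needs_review.sort(key=lambda item: item["confidence"])
    return auto_accepted, needs_review

# Hypothetical model outputs.
predictions = [
    {"id": "a", "confidence": 0.97},
    {"id": "b", "confidence": 0.60},
    {"id": "c", "confidence": 0.82},
    {"id": "d", "confidence": 0.85},
]
auto_accepted, needs_review = route_predictions(predictions)
print([p["id"] for p in auto_accepted])  # ['a', 'd']
print([p["id"] for p in needs_review])   # ['b', 'c']
```

Model confidence is not always well calibrated, so a routing rule like this still benefits from periodic human spot checks of the auto-accepted items.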

Advanced validation methodologies for complex AI systems, including multi-modal AI, federated learning systems, and AI system ensembles, require continued development of specialized validation approaches that address the unique challenges of these advanced architectures.

The evolution of regulatory frameworks and industry standards will continue to influence AI validation requirements and methodologies, requiring ongoing adaptation of validation processes to meet emerging compliance obligations and best practice expectations.

Disclaimer

This article provides general guidance on AI User Acceptance Testing methodologies and should not be considered specific technical, legal, or regulatory advice. Organizations should consult qualified professionals and conduct thorough assessments of their specific requirements, regulatory obligations, and risk profiles when implementing AI validation processes. The effectiveness of validation approaches may vary depending on specific AI technologies, deployment contexts, and organizational capabilities.