Artificial intelligence and virtualization technology now meet at a point where the efficient orchestration of machine learning workloads can determine the success or failure of AI initiatives. As organizations increasingly rely on complex AI models and distributed computing environments, the choice between containerization technologies like Docker and traditional virtualization platforms such as VMware becomes crucial for optimizing performance, scalability, and resource utilization in machine learning deployments.

The strategic selection of virtualization architecture fundamentally impacts development velocity, operational efficiency, and the ability to scale AI solutions across diverse computing environments, making this decision one of the most critical considerations in contemporary AI infrastructure planning.

Understanding AI Virtualization Requirements

Machine learning workloads present unique challenges that distinguish them from traditional application deployments. These workloads typically require massive computational resources, specialized hardware acceleration through GPUs and TPUs, complex dependency management, and the ability to scale dynamically based on training or inference demands. The virtualization layer must accommodate these requirements while maintaining optimal performance, resource isolation, and operational simplicity.

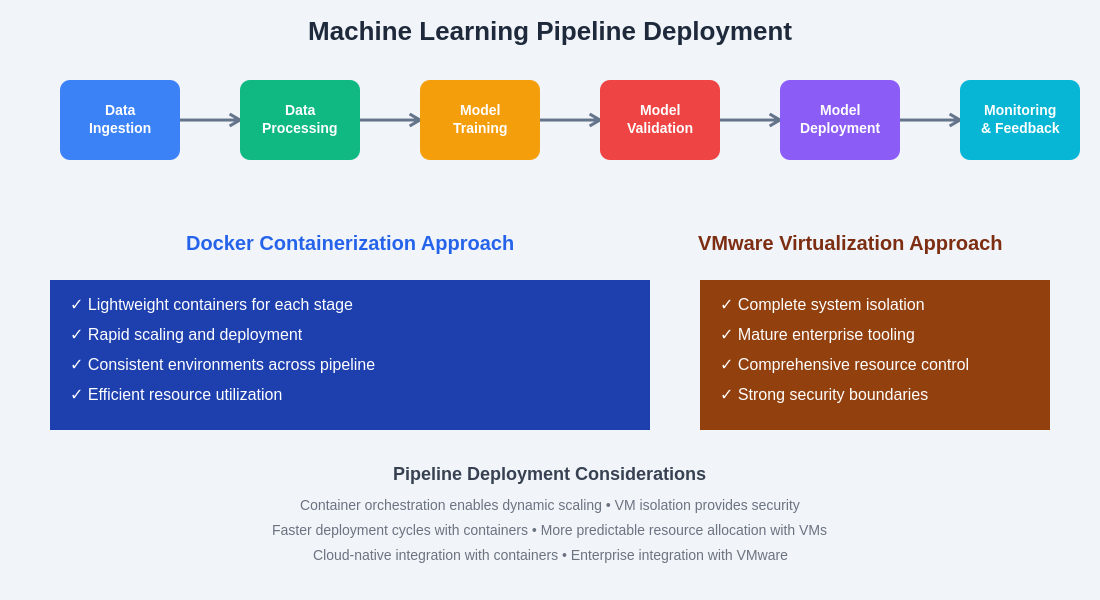

The complexity of AI model deployment extends beyond simple application containerization, encompassing multi-stage pipelines that include data preprocessing, model training, validation, and real-time inference serving. Each stage may have different resource requirements, dependency configurations, and scaling characteristics, necessitating a virtualization approach that can adapt to these diverse operational patterns while maintaining consistency and reliability across the entire machine learning lifecycle.

Docker’s Container Revolution in AI

Docker has emerged as the dominant force in AI virtualization through its lightweight containerization approach that packages applications and their dependencies into portable, executable units. This technology has changed machine learning deployment by enabling consistent environments across development, testing, and production stages, largely eliminating the notorious “it works on my machine” problem that has historically plagued AI model deployment.

The containerization paradigm offered by Docker provides significant advantages for AI workloads, including rapid deployment capabilities, efficient resource utilization, and simplified dependency management. Machine learning models can be packaged with their exact runtime requirements, including specific versions of Python libraries, the CUDA toolkit and related libraries such as cuDNN, and framework dependencies, ensuring consistent behavior across hosts. The NVIDIA kernel driver is the exception: it is not part of the image, stays on the host, and must be compatible with the CUDA version inside the container. This consistency is particularly valuable in AI development where subtle differences in library versions or system configurations can significantly impact model performance and accuracy.
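
As a minimal sketch of the pinning idea, the Python helper below renders Dockerfile text that installs exact package versions. The function name `render_dockerfile`, the base image tag, the package versions, and the `serve.py` entry point are all illustrative assumptions, not taken from any particular project.

```python
# Hypothetical sketch: render a Dockerfile that pins every Python dependency.
# The base image, package versions, and entry point are illustrative
# assumptions; check them against the registries and packages you use.

def render_dockerfile(base_image: str, pinned: dict[str, str], entrypoint: str) -> str:
    """Return Dockerfile text that installs exact package versions."""
    # Sort the packages so identical inputs always produce identical text.
    requirements = " ".join(
        f"{name}=={version}" for name, version in sorted(pinned.items())
    )
    lines = [
        f"FROM {base_image}",
        "WORKDIR /app",
        f"RUN pip install --no-cache-dir {requirements}",
        "COPY . /app",
        f'CMD ["python", "{entrypoint}"]',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile(
        "python:3.11-slim",
        {"torch": "2.3.0", "numpy": "1.26.4"},
        "serve.py",
    ))
```

Generating the file from a single dictionary of versions keeps one source of truth for the environment, so the image built in development and the image deployed to production install the same packages.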

VMware’s Enterprise Virtualization Legacy

VMware represents the traditional virtualization approach, utilizing hypervisor technology to create complete virtual machines that encapsulate entire operating systems along with applications and dependencies. This approach has dominated enterprise computing for decades, providing robust isolation, comprehensive resource management, and mature operational tooling that many organizations have built their infrastructure strategies around.

In the context of AI and machine learning, VMware’s virtualization approach offers distinct advantages in scenarios requiring complete system isolation, complex multi-service architectures, and integration with existing enterprise infrastructure. The ability to provision entire virtual machines with dedicated resources, specific operating system configurations, and full administrative control can be particularly valuable for research environments, compliance-sensitive deployments, and scenarios where complete workload isolation is paramount.

The mature ecosystem surrounding VMware virtualization includes sophisticated monitoring, backup, disaster recovery, and resource management capabilities that have been refined through years of enterprise deployment. These operational advantages can be particularly valuable for organizations with existing VMware investments and specialized expertise in traditional virtualization management.

Performance Considerations for ML Workloads

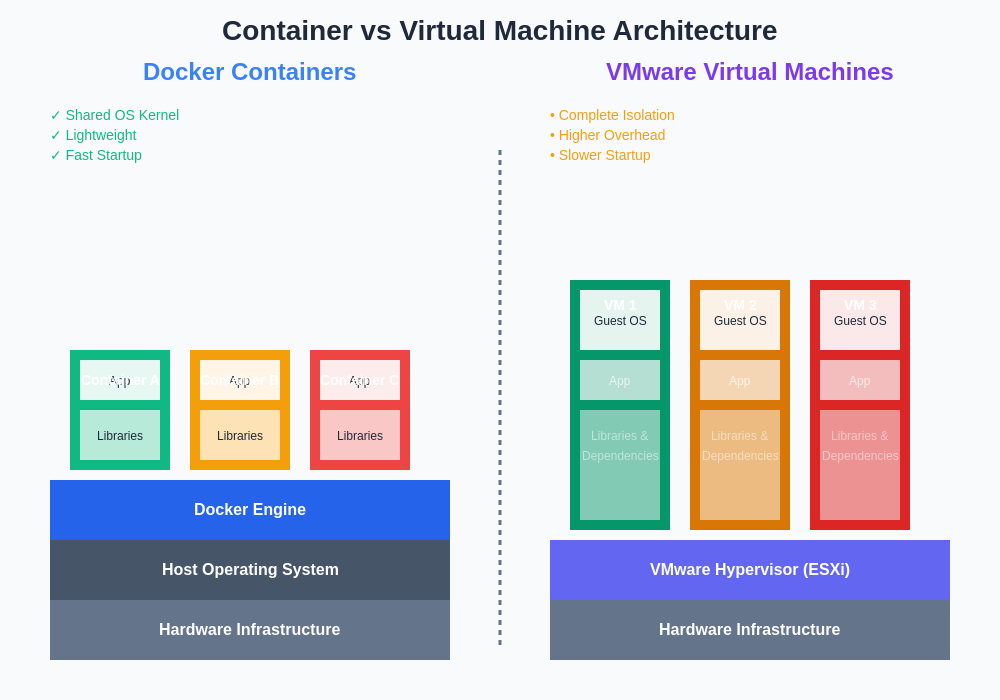

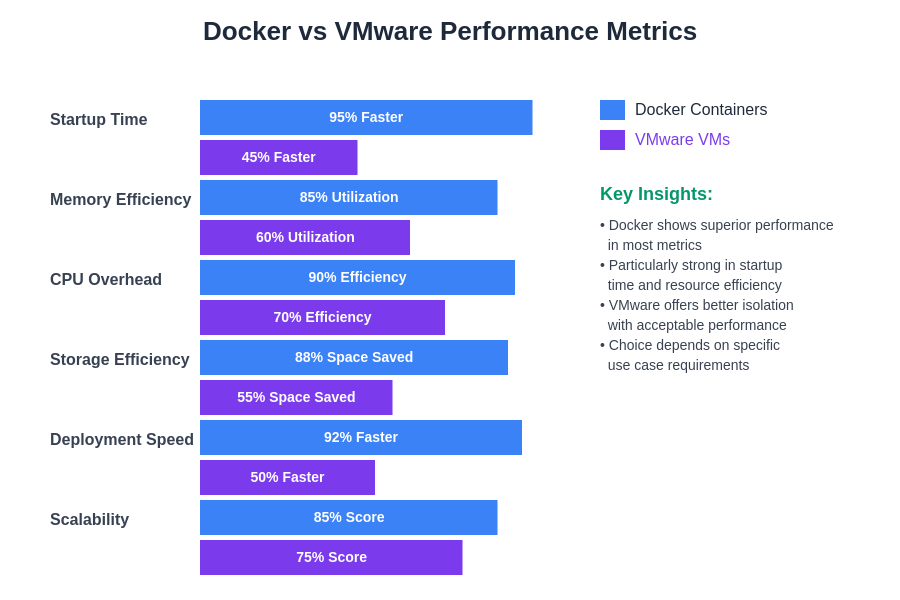

The performance characteristics of Docker and VMware virtualization differ significantly when applied to machine learning workloads, with each approach offering distinct advantages depending on specific use case requirements. Docker containers share the host operating system kernel, resulting in minimal virtualization overhead and near-native performance for CPU-intensive machine learning tasks. This efficiency is particularly pronounced in training scenarios where computational resources are the primary bottleneck.

GPU acceleration, which is critical for modern deep learning applications, presents different performance profiles between the two virtualization approaches. Docker containers can achieve excellent GPU performance through NVIDIA Container Toolkit and similar technologies that provide direct hardware access while maintaining containerization benefits. VMware virtualization traditionally required more complex configuration for GPU passthrough, though recent developments in vGPU technology have improved this capability significantly.
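
To make the container side of this concrete, the sketch below builds the argument list for a `docker run` invocation that requests GPUs through the `--gpus` flag, which relies on the NVIDIA Container Toolkit being installed on the host. The helper name `gpu_run_command` and the image name in the usage example are hypothetical, and the command is only printed, not executed.

```python
import shlex

# Hypothetical sketch: build (but do not execute) a `docker run` command
# that requests GPU access. Assumes the host has an NVIDIA driver and the
# NVIDIA Container Toolkit installed; the image name is a placeholder.

def gpu_run_command(image: str, command: list[str], gpus: str = "all") -> list[str]:
    """Return the argument list for a GPU-enabled `docker run`."""
    # --rm removes the container on exit; --gpus selects which GPUs to expose.
    return ["docker", "run", "--rm", "--gpus", gpus, image, *command]


if __name__ == "__main__":
    args = gpu_run_command("my-training-image:latest", ["python", "train.py"])
    print(shlex.join(args))
```

Returning a list rather than a shell string avoids quoting problems if the command is later passed to `subprocess.run`.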

Memory management represents another critical performance consideration, particularly for large-scale machine learning models that may require substantial RAM allocations. Docker’s shared kernel architecture enables more efficient memory utilization and faster startup times, while VMware’s complete system virtualization provides stronger memory isolation at the cost of higher overhead and slower provisioning times.

Scalability and Orchestration Capabilities

The scalability requirements of modern AI applications demand sophisticated orchestration capabilities that can dynamically adjust resources based on workload demands, manage complex multi-service architectures, and maintain high availability across distributed computing environments. Container images built with Docker run on Kubernetes, and together they form a powerful ecosystem for container orchestration that excels at managing large-scale, distributed machine learning workloads.

Kubernetes orchestration enables automatic scaling of containerized AI services based on CPU utilization, memory consumption, or custom metrics such as inference request rates or model training progress. This dynamic scaling capability is particularly valuable for machine learning inference services that experience variable load patterns and batch processing workloads that require burst scaling capabilities.
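
The scaling rule behind this behavior is simple enough to sketch. The Kubernetes Horizontal Pod Autoscaler documentation describes the core calculation as the current replica count multiplied by the ratio of the observed metric to the target metric, rounded up, with no change when the ratio is within a tolerance (0.1 by default). The function below is a simplified illustration of that rule, not the controller's actual implementation; the replica bounds are assumed values.

```python
import math

# Simplified sketch of the Horizontal Pod Autoscaler scaling rule:
#   desired = ceil(current_replicas * current_metric / target_metric)
# The min/max bounds below are illustrative assumptions.

def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Return the replica count the scaling rule would propose."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        proposed = current_replicas
    else:
        proposed = math.ceil(current_replicas * ratio)
    # Clamp the proposal to the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))
```

For example, four inference replicas observing 200 requests per second each against a target of 100 would be scaled to eight, while an observed value of 105 falls inside the tolerance and leaves the count at four.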

VMware’s orchestration capabilities, while mature and robust, typically operate at a different abstraction level, focusing on virtual machine lifecycle management rather than application-level orchestration. VMware vSphere and related technologies provide excellent capabilities for managing virtual machine clusters, but may require additional orchestration layers for application-specific scaling and management requirements common in AI deployments.

The fundamental architectural differences between containerization and traditional virtualization create distinct operational characteristics that significantly impact machine learning deployment strategies. Understanding these architectural trade-offs is essential for making informed decisions about virtualization technology selection.

Development and Deployment Workflow Integration

Modern machine learning development workflows increasingly emphasize continuous integration and continuous deployment practices that enable rapid experimentation, model iteration, and production deployment. Docker’s lightweight nature and extensive tooling ecosystem have made it the preferred choice for implementing MLOps pipelines that automate the entire machine learning lifecycle from data ingestion through model deployment.

Tools commonly used alongside Docker in machine learning workflows include MLflow for experiment tracking, Kubeflow for Kubernetes-native ML pipelines, and container registries that store and version the images in which models are packaged. This comprehensive tooling ecosystem enables sophisticated automation capabilities that can significantly accelerate machine learning development cycles.

VMware environments, while capable of supporting machine learning workflows, typically require more complex integration strategies to achieve similar levels of automation and pipeline integration. The overhead associated with virtual machine provisioning and management can slow development iteration cycles, though this may be acceptable in scenarios where other VMware advantages outweigh development velocity considerations.

Resource Management and Cost Optimization

Efficient resource utilization directly impacts the cost-effectiveness of machine learning infrastructure, particularly given the expensive nature of GPU-accelerated computing resources commonly required for AI workloads. Docker’s lightweight containerization approach enables higher density deployments, allowing multiple machine learning services to share underlying hardware resources more efficiently than traditional virtual machine approaches.

Container orchestration platforms like Kubernetes provide sophisticated resource management capabilities including resource quotas, quality of service classes, and bin-packing algorithms that optimize hardware utilization across distributed machine learning workloads. These capabilities enable organizations to maximize the value of their computing investments while maintaining performance and isolation requirements.
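
To illustrate what bin-packing means here, the sketch below places workloads with known GPU memory demands onto as few fixed-size devices as it can using the classic first-fit-decreasing heuristic. This is a textbook heuristic chosen for illustration, not the algorithm the Kubernetes scheduler uses, and the demand and capacity figures in the usage note are made up.

```python
# Illustrative first-fit-decreasing bin packing. This is a textbook
# heuristic, not the Kubernetes scheduler's algorithm; all numbers used
# with it are hypothetical.

def first_fit_decreasing(demands_gb: list[float], capacity_gb: float) -> list[list[float]]:
    """Group demands into bins of size capacity_gb, largest demands first."""
    bins: list[list[float]] = []   # workloads assigned to each device
    free: list[float] = []         # remaining capacity of each device
    for demand in sorted(demands_gb, reverse=True):
        if demand > capacity_gb:
            raise ValueError(f"demand {demand} exceeds capacity {capacity_gb}")
        for i, remaining in enumerate(free):
            if demand <= remaining:
                # Fits on an existing device: place it there.
                bins[i].append(demand)
                free[i] -= demand
                break
        else:
            # No existing device has room: open a new one.
            bins.append([demand])
            free.append(capacity_gb - demand)
    return bins
```

With five services needing 10, 30, 20, 40, and 20 GB and 80 GB devices, this packing uses two devices, whereas giving each service a dedicated device would use five.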

VMware virtualization offers different resource management advantages, including more predictable resource allocation, comprehensive resource monitoring, and mature capacity planning tools. The ability to guarantee specific resource allocations to virtual machines can be valuable for mission-critical AI applications where consistent performance is more important than maximum resource efficiency.

Security and Compliance Considerations

Security requirements for machine learning deployments often include data protection, model intellectual property security, and compliance with various regulatory frameworks that may impact virtualization technology choices. Both Docker and VMware offer distinct security advantages that may be more suitable for different organizational requirements and threat models.

Docker containers provide process-level isolation, built on Linux namespaces and control groups, while all containers on a host share that host's kernel. This is generally sufficient for most machine learning applications and enables efficient resource sharing. Container security can be enhanced through various mechanisms including network policies, security contexts, and specialized container security platforms that monitor and protect containerized workloads.

VMware virtualization offers stronger isolation guarantees through complete system virtualization, which may be required for highly sensitive machine learning applications or regulatory compliance scenarios. The mature security ecosystem surrounding VMware platforms includes comprehensive audit trails, security monitoring, and compliance reporting capabilities that may be essential for certain organizational requirements.

Multi-Cloud and Hybrid Deployment Strategies

The increasing adoption of multi-cloud strategies and hybrid infrastructure approaches creates additional considerations for AI virtualization technology selection. Docker containers provide excellent portability across different cloud platforms and on-premises infrastructure, enabling organizations to avoid vendor lock-in and optimize costs through dynamic workload placement.

Container orchestration platforms like Kubernetes have achieved broad adoption across major cloud providers, creating consistent operational experiences regardless of underlying infrastructure. This standardization enables sophisticated multi-cloud deployment strategies that can optimize costs, improve availability, and provide disaster recovery capabilities for critical machine learning applications.

VMware’s traditional focus on on-premises infrastructure has expanded to include cloud-based offerings and hybrid deployment models, though these may require more complex integration strategies compared to the cloud-native approach offered by containerization technologies.

Docker and VMware can differ substantially on metrics that directly affect machine learning workloads, such as startup time, virtualization overhead, and deployment density. These characteristics should be measured on representative workloads and carefully considered when designing AI infrastructure architectures.

Operational Complexity and Management

The operational overhead associated with managing virtualization infrastructure represents a significant factor in technology selection, particularly for organizations with limited infrastructure expertise or resources. Docker’s approach to containerization generally requires less operational overhead for basic deployments, though sophisticated orchestration scenarios may introduce substantial complexity.

Container management platforms provide automated capabilities for deployment, scaling, updates, and monitoring that can significantly reduce operational burden for machine learning applications. The declarative configuration approach common in container orchestration enables infrastructure-as-code practices that improve consistency and reduce manual intervention requirements.
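
A small example may help show what declarative configuration looks like in practice. The sketch below builds a Kubernetes `apps/v1` Deployment manifest as a Python dictionary and serializes it to JSON, which Kubernetes accepts alongside YAML. The function name `inference_deployment`, the service name, and the image are hypothetical, and the `nvidia.com/gpu` resource assumes the NVIDIA device plugin is installed in the cluster.

```python
import json

# Hypothetical sketch: describe an inference service declaratively.
# The names and image are placeholders; the nvidia.com/gpu resource
# assumes the NVIDIA device plugin is running in the cluster.

def inference_deployment(name: str, image: str, replicas: int,
                         gpus_per_pod: int = 1) -> dict:
    """Return a Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "resources": {"limits": {"nvidia.com/gpu": gpus_per_pod}},
                    }]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = inference_deployment("sentiment-api", "registry.example.com/sentiment:1.0", 3)
    print(json.dumps(manifest, indent=2))
```

Because the manifest states the desired end state rather than the steps to reach it, it can be kept in version control and reviewed like any other code.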

VMware virtualization typically requires more specialized expertise and operational procedures, though this complexity may be offset by organizational familiarity and existing operational processes. The mature management tools and extensive documentation available for VMware platforms can facilitate operational efficiency for organizations with appropriate expertise and resources.

Integration with Machine Learning Frameworks

The compatibility and integration capabilities of virtualization technologies with popular machine learning frameworks significantly impact development productivity and deployment flexibility. Docker’s extensive ecosystem includes pre-built container images for virtually all major machine learning frameworks, including TensorFlow, PyTorch, scikit-learn, and specialized frameworks for computer vision, natural language processing, and reinforcement learning.

Framework-specific optimizations available through containerization include CUDA-optimized base images, pre-configured development environments, and specialized runtime configurations that can significantly accelerate development and deployment processes. Official framework images maintained by the framework developers help ensure compatibility and good performance characteristics.

VMware environments can certainly support machine learning frameworks, though this typically requires more manual configuration and optimization effort compared to the plug-and-play approach available through containerization. The additional configuration overhead may be justified in scenarios where VMware’s other advantages outweigh the increased complexity.

Future Technology Trends and Evolution

The evolution of virtualization technologies continues to address the specific requirements of machine learning workloads through innovations in hardware acceleration, network optimization, and storage integration. Emerging technologies such as confidential computing, serverless containers, and edge AI deployment are shaping the future landscape of AI virtualization.

Container technology continues to evolve with innovations such as lightweight container runtimes, improved security isolation, and specialized optimizations for machine learning workloads. The integration of container technology with serverless computing models creates new deployment patterns that can significantly reduce operational overhead while maintaining scalability benefits.

VMware’s technology roadmap includes enhanced support for containerized workloads through technologies such as VMware Tanzu, which bridges traditional virtualization and modern container orchestration approaches. This convergence of virtualization technologies may reduce the distinction between container and virtual machine approaches in future infrastructure architectures.

The implementation of comprehensive machine learning pipelines requires careful consideration of virtualization technology capabilities across all stages of the model lifecycle. Different virtualization approaches offer distinct advantages for various pipeline components and operational requirements.

Cost-Benefit Analysis and ROI Considerations

The financial implications of virtualization technology selection extend beyond initial licensing and infrastructure costs to include operational expenses, development productivity impacts, and scalability economics. Docker containerization typically requires lower initial investment and operational overhead, making it attractive for organizations with budget constraints or those prioritizing rapid deployment capabilities.

The total cost of ownership analysis must consider factors including infrastructure utilization efficiency, operational staffing requirements, development velocity impacts, and scalability economics. Container orchestration can enable significant cost savings through improved resource utilization and automation capabilities, though these benefits must be weighed against the complexity of implementing and maintaining sophisticated orchestration platforms.
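
The utilization part of this analysis reduces to simple arithmetic: the effective price of a GPU hour that does useful work is the hourly price divided by the fraction of time the GPU is busy. The sketch below encodes that relationship; the function name and every figure in the usage note are hypothetical and are not measurements of Docker or VMware.

```python
# Illustrative arithmetic only: the prices and utilization figures used
# with this function are hypothetical, not measured results.

def cost_per_useful_gpu_hour(hourly_cost: float, utilization: float) -> float:
    """Return the effective cost of one GPU hour of useful work."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    return hourly_cost / utilization
```

For instance, a GPU billed at 3.00 per hour costs 12.00 per useful hour at 25% utilization and 6.00 at 50%, which is why density and scheduling improvements can matter as much as the list price. Staffing and platform maintenance costs sit outside this calculation and need to be added separately.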

VMware virtualization may involve higher initial costs and operational overhead but can provide value through mature operational tooling, comprehensive support options, and integration with existing enterprise infrastructure investments. Organizations with significant VMware expertise and infrastructure may find this approach more cost-effective despite higher per-unit virtualization costs.

Strategic Decision Framework

Selecting the optimal virtualization approach for machine learning workloads requires careful evaluation of organizational requirements, technical constraints, and strategic objectives. Key decision factors include existing infrastructure investments, team expertise, scalability requirements, security constraints, and integration requirements with existing systems and processes.
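
One way to make such an evaluation explicit is a weighted scoring matrix. The sketch below is an assumption about how a team might structure the comparison, not a method prescribed by this article; the factor names, weights, and scores in the usage example are placeholders that each organization would replace with its own.

```python
# Hypothetical decision aid: weights and scores are placeholders that an
# organization would set for itself, not recommendations.

def weighted_score(weights: dict[str, float], scores: dict[str, float]) -> float:
    """Return the weight-normalized average score across all factors."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[factor] * scores[factor] for factor in weights) / total_weight


if __name__ == "__main__":
    weights = {"team_expertise": 3, "scalability": 2, "isolation": 1}
    option_a = {"team_expertise": 2, "scalability": 5, "isolation": 3}
    option_b = {"team_expertise": 5, "scalability": 3, "isolation": 5}
    print("option A:", weighted_score(weights, option_a))
    print("option B:", weighted_score(weights, option_b))
```

The value of the exercise lies less in the final number than in forcing the team to agree on which factors matter and by how much.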

Organizations prioritizing rapid development cycles, cost efficiency, and cloud-native deployment patterns will likely benefit from Docker containerization approaches. The extensive ecosystem, operational simplicity, and excellent performance characteristics make containers particularly suitable for organizations embracing modern DevOps and MLOps practices.

Enterprises with significant investments in VMware infrastructure, complex compliance requirements, or needs for comprehensive system isolation may find traditional virtualization approaches more suitable despite higher complexity and costs. The decision should align with broader organizational technology strategies and operational capabilities.

The future of AI virtualization lies not necessarily in choosing between Docker and VMware, but in understanding how these technologies can complement each other within comprehensive infrastructure strategies that optimize for specific organizational requirements and use case characteristics. The continued evolution of both containerization and traditional virtualization technologies will likely create hybrid approaches that combine the best aspects of each technology paradigm.

Disclaimer

This article is for informational purposes only and does not constitute professional infrastructure or technology advice. The analysis presented reflects current understanding of virtualization technologies and their applications to machine learning workloads. Organizations should conduct thorough evaluations of their specific requirements, constraints, and objectives when making infrastructure technology decisions. Performance characteristics and operational considerations may vary significantly based on specific implementation details, workload characteristics, and infrastructure configurations.