The foundation of successful machine learning development lies not just in algorithmic expertise and data quality, but fundamentally in the computational infrastructure that powers your AI endeavors. Building an optimal AI workstation represents a critical investment that can dramatically accelerate model training times, enable experimentation with larger datasets, and facilitate the development of more sophisticated neural network architectures. The complexity of modern machine learning workflows demands hardware configurations that can handle massive parallel computations, extensive memory operations, and sustained high-performance workloads that would overwhelm conventional computer systems.

AI hardware has evolved quickly enough that individual researchers and small development teams can now build workstations approaching the capability of traditional server configurations, while keeping the flexibility and lower cost that independent machine learning development requires.

Understanding AI Workstation Requirements

The distinctive computational demands of machine learning workloads require a fundamental departure from traditional computing hardware configurations. Unlike conventional software development that primarily relies on CPU performance and standard memory configurations, AI development places extraordinary demands on parallel processing capabilities, memory bandwidth, and sustained computational throughput. Modern deep learning frameworks leverage highly parallelized operations that can simultaneously process thousands of mathematical operations across multiple data points, creating workload patterns that are ideally suited to specialized hardware architectures.

The scale of contemporary machine learning models has grown rapidly, with state-of-the-art language models containing billions of parameters and trained on terabytes of data. These demands translate into specific hardware needs that must be balanced against one another. Understanding them is the basis for informed decisions about component selection and system architecture that deliver strong performance at a reasonable cost.

The evolution of machine learning algorithms has also introduced new computational patterns that affect hardware selection. Transformer architectures, convolutional neural networks, and reinforcement learning algorithms each present unique computational characteristics that influence optimal hardware configurations. Additionally, the increasing popularity of multi-modal AI systems that process text, images, and audio simultaneously creates additional complexity in hardware requirements that must be addressed through thoughtful system design.

Graphics Processing Units: The Heart of AI Computing

Graphics processing units represent the most critical component in any AI workstation configuration, fundamentally determining the system’s capability to handle complex machine learning workloads efficiently. The parallel architecture of modern GPUs, originally designed for rendering graphics through simultaneous processing of multiple pixels, proves exceptionally well-suited for the matrix operations and tensor computations that form the backbone of neural network training and inference. The selection of appropriate GPU hardware can mean the difference between model training cycles measured in hours versus weeks, making this decision pivotal for productive AI development.


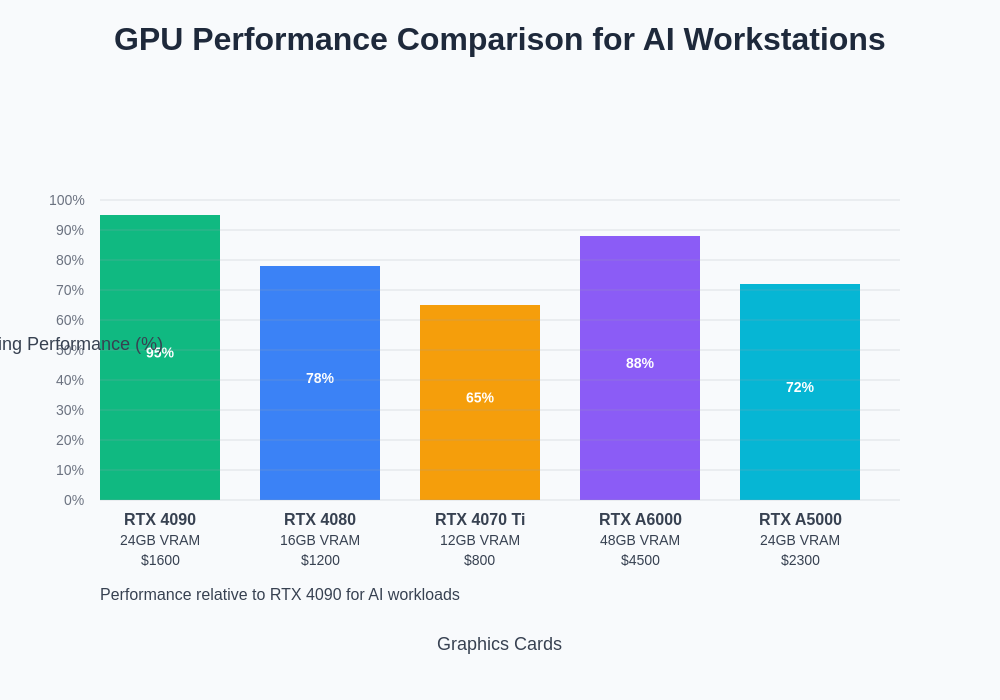

Contemporary GPU architectures include hardware aimed specifically at AI workloads, such as NVIDIA's Tensor Cores for mixed-precision matrix math, along with high memory bandwidth, which together deliver substantial gains over older graphics cards. NVIDIA's RTX 4090 (24 GB of VRAM) and RTX 4080 (16 GB) are strong choices for individual developers and small teams, offering high CUDA core counts and enough memory for many machine learning projects. For more demanding work, professional cards such as the RTX A5000 (24 GB) or RTX A6000 (48 GB, double the 4090's memory) add ECC memory support and are built for extended training sessions and production workloads.
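VRAM capacity is often what separates these cards in practice. A common back-of-the-envelope estimate multiplies parameter count by bytes per parameter: about 2 bytes for FP16/BF16 weights at inference, and roughly 16 bytes per parameter for full training with mixed precision and the Adam optimizer. The sketch below applies those assumed per-parameter costs and ignores activations, framework overhead, and KV caches, so treat its numbers as lower bounds rather than exact requirements.

```python
# Rough lower bound on GPU memory for model state only. The bytes-per-parameter
# figures are common rules of thumb (assumptions); activations, CUDA context,
# memory fragmentation and KV caches are deliberately ignored.
BYTES_PER_PARAM = {
    "fp32_inference": 4,
    "fp16_inference": 2,
    "int8_inference": 1,
    # FP16 weights (2) + FP16 gradients (2) + FP32 master weights (4)
    # + Adam first and second moments in FP32 (4 + 4) = 16 bytes
    "mixed_precision_adam_training": 16,
}


def estimate_vram_gib(num_params: float, mode: str) -> float:
    """Estimated memory in GiB needed to hold the model state for `mode`."""
    return num_params * BYTES_PER_PARAM[mode] / 2**30


def fits(num_params: float, mode: str, vram_gib: float) -> bool:
    """True if the model-state estimate fits within `vram_gib`."""
    return estimate_vram_gib(num_params, mode) <= vram_gib


if __name__ == "__main__":
    # Example: a 7-billion-parameter model against a 24 GB card such as the RTX 4090.
    for mode in BYTES_PER_PARAM:
        need = estimate_vram_gib(7e9, mode)
        print(f"{mode:>30}: ~{need:6.1f} GiB, fits in 24 GiB: {fits(7e9, mode, 24)}")
```

For a 7-billion-parameter model this gives about 13 GiB for FP16 inference, which fits on a 24 GB card before activations are counted, but over 100 GiB for full mixed-precision Adam training, which is why full training at that scale needs multiple GPUs or memory-saving techniques.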

GPU options differ substantially in compute throughput, memory capacity, and price, and these differences directly determine how quickly you can train and how large a model you can fit. Weighing them against each other lets you balance computational requirements with budget while leaving headroom for future projects.

AMD's GPUs have also become viable options for AI workstation builders, particularly the Radeon RX 7900 XTX (24 GB) and RX 7900 XT (20 GB), which PyTorch supports through AMD's ROCm software stack. However, NVIDIA's CUDA ecosystem still has broader support and more mature optimization, so NVIDIA remains the lower-risk choice for most AI development, particularly with TensorFlow, PyTorch, and JAX, which have been extensively optimized for CUDA.

Memory considerations for GPU selection extend beyond simple VRAM capacity to encompass memory bandwidth, error correction capabilities, and thermal management characteristics that affect sustained performance during extended training sessions. The ability to maintain consistent performance without thermal throttling becomes particularly important for machine learning workloads that may run continuously for days or weeks during model training cycles.
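One way to check for thermal throttling during a long run is to poll `nvidia-smi` in CSV mode and watch whether SM clocks fall while temperature stays high. The sketch below uses the documented `--query-gpu` and `--format=csv,noheader,nounits` options; the chosen fields, the 5-second interval, and the 83 °C warning threshold are assumptions to adjust for your card.

```python
import shutil
import subprocess
import time

# Fields accepted by `nvidia-smi --query-gpu`; see `nvidia-smi --help-query-gpu`.
FIELDS = ["index", "temperature.gpu", "clocks.sm", "power.draw", "utilization.gpu"]


def parse_nvidia_smi_csv(text: str) -> list:
    """Turn `--format=csv,noheader,nounits` output into one dict per GPU."""
    rows = []
    for line in text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        rows.append(dict(zip(FIELDS, values)))
    return rows


def sample_gpus() -> list:
    """Query every GPU once; returns [] if nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    cmd = ["nvidia-smi", "--query-gpu=" + ",".join(FIELDS),
           "--format=csv,noheader,nounits"]
    try:
        result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    except subprocess.CalledProcessError:
        return []
    return parse_nvidia_smi_csv(result.stdout)


def monitor(samples: int = 3, interval_s: float = 5.0, warn_temp_c: float = 83.0) -> None:
    """Print temperature, SM clock, power and utilization; flag hot GPUs."""
    for _ in range(samples):
        for gpu in sample_gpus():
            temp = float(gpu["temperature.gpu"])
            flag = "  <- check cooling" if temp >= warn_temp_c else ""
            print(f"GPU {gpu['index']}: {temp:.0f} C, SM {gpu['clocks.sm']} MHz, "
                  f"{gpu['power.draw']} W, util {gpu['utilization.gpu']}%{flag}")
        time.sleep(interval_s)
```

Running `monitor(samples=720)` in a second terminal records an hour of samples at the default interval; a steady decline in SM clock while temperature sits at its ceiling suggests the cooling is not keeping up.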

Central Processing Unit Selection for AI Workloads

While graphics processing units handle the primary computational workload for machine learning applications, the central processing unit remains critically important for orchestrating complex AI workflows, managing data preprocessing operations, and supporting the diverse software ecosystem that surrounds machine learning development. Modern AI workstations require CPUs that can efficiently handle multiple parallel threads, manage large memory configurations, and provide sufficient I/O bandwidth to prevent bottlenecks in data pipeline operations.

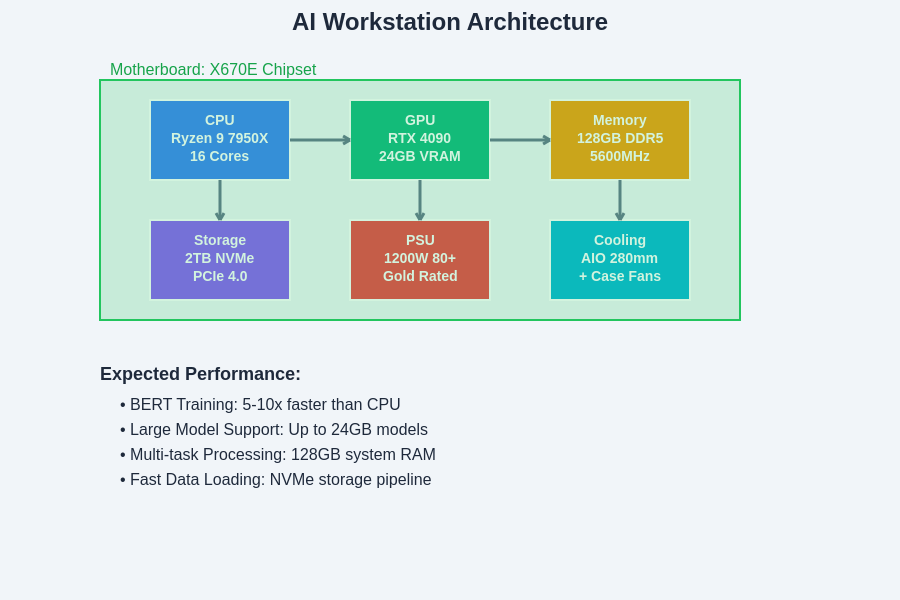

AMD's Ryzen 7000 series processors, particularly the 16-core Ryzen 9 7950X and 12-core Ryzen 9 7900X, offer strong multi-core performance for AI workstation use. Their high core counts and clock speeds sustain demanding preprocessing and orchestration work, and the AM5 platform's DDR5 support and PCIe 5.0 lanes let high-performance GPUs and fast NVMe storage operate without starving each other of bandwidth.

Intel's 13th-generation Core processors, including the Core i9-13900K (24 cores: 8 performance and 16 efficiency) and Core i7-13700K (16 cores: 8 performance and 8 efficiency), are competitive alternatives with strong single-threaded performance alongside solid multi-core throughput. They suit mixed workloads that combine AI development with other compute-heavy tasks, a versatility many developers value in a general-purpose development machine.

The selection between AMD and Intel processors often depends on specific workflow requirements, budget considerations, and ecosystem preferences. AMD processors typically offer superior multi-core performance per dollar, making them attractive for budget-conscious builders who prioritize parallel processing capabilities. Intel processors may provide advantages in specific software applications that have been optimized for Intel architectures, though these differences have diminished significantly with recent generation processors from both manufacturers.
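Core count matters because CPU-side preprocessing (decoding, tokenizing, augmenting) is commonly spread across worker processes, roughly one per core. The sketch below shows that pattern with the standard library's `ProcessPoolExecutor`; `preprocess` is a hypothetical stand-in for real decoding or augmentation work, and frameworks such as PyTorch apply the same idea through their own data-loader worker settings.

```python
import os
from concurrent.futures import ProcessPoolExecutor
from typing import List, Optional


def preprocess(sample: int) -> int:
    """Hypothetical CPU-bound transform standing in for decode/augment work."""
    acc = 0
    for i in range(10_000):
        acc = (acc + sample * i) % 1_000_003
    return acc


def preprocess_parallel(samples: List[int], workers: Optional[int] = None) -> List[int]:
    """Apply `preprocess` across worker processes, preserving input order."""
    # Default to one worker per logical CPU the OS reports.
    workers = workers or os.cpu_count() or 1
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # chunksize sends samples in batches to cut inter-process overhead.
        return list(pool.map(preprocess, samples, chunksize=16))


if __name__ == "__main__":  # guard required on platforms that spawn processes
    data = list(range(1_000))
    results = preprocess_parallel(data)
    assert results == [preprocess(s) for s in data]
    print(f"processed {len(results)} samples using {os.cpu_count()} logical CPUs")
```

Separate processes are used instead of threads because pure-Python CPU work is serialized by CPython's global interpreter lock, so only processes scale with core count here.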

Memory Architecture and Configuration

Memory subsystem design represents a critical yet often underestimated component in AI workstation configuration, directly impacting data loading speeds, model training efficiency, and overall system responsiveness during complex machine learning operations. The unprecedented data requirements of modern AI applications demand memory configurations that can support large datasets, multiple concurrent training processes, and sophisticated data augmentation pipelines without creating performance bottlenecks that limit overall system effectiveness.

Contemporary AI workstations should incorporate a minimum of 32GB of high-speed DDR5 memory, though 64GB or 128GB configurations provide substantial advantages for advanced machine learning applications and large-scale data processing operations. The increased memory capacity enables efficient caching of training datasets, reduces dependency on slower storage systems during training cycles, and supports more sophisticated preprocessing operations that can significantly accelerate overall development workflows.

Memory speed and timings matter most for CPU-side work such as data loading, decoding, and augmentation. DDR5-5600 or faster memory gives the data pipeline ample bandwidth, but copies between system memory and GPU memory are usually limited by the PCIe link rather than by the DIMMs themselves. Faster memory can therefore shorten preprocessing-bound pipelines, but the benefit varies by workload and should be weighed against the added cost. Note also that the four-DIMM layouts that 128GB configurations typically require often run below their rated speed on consumer platforms.
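The arithmetic behind the PCIe point is short. A DDR5 memory channel is 64 bits (8 bytes) wide, so theoretical peak bandwidth is transfer rate × 8 bytes × channels; a PCIe 4.0 lane runs at 16 GT/s with 128b/130b encoding. The sketch assumes a dual-channel consumer platform and reports theoretical peaks only; sustained throughput is lower in both cases.

```python
def ddr_peak_gbs(mt_per_s: float, channels: int = 2, bytes_per_channel: int = 8) -> float:
    """Theoretical DDR bandwidth in GB/s (1 GB = 10**9 bytes).

    Assumes a consumer platform with two 64-bit (8-byte) memory channels.
    """
    return mt_per_s * 1e6 * bytes_per_channel * channels / 1e9


def pcie_peak_gbs(gt_per_s: float, lanes: int) -> float:
    """Theoretical one-direction PCIe bandwidth in GB/s for Gen 3 and later,
    which use 128b/130b line encoding (one bit per transfer per lane)."""
    return gt_per_s * lanes * (128 / 130) / 8


if __name__ == "__main__":
    ram = ddr_peak_gbs(5600)        # dual-channel DDR5-5600
    link = pcie_peak_gbs(16.0, 16)  # PCIe 4.0 x16
    print(f"DDR5-5600 dual channel: {ram:.1f} GB/s")
    print(f"PCIe 4.0 x16 per direction: {link:.1f} GB/s")
```

Under these assumptions the memory peaks at about 89.6 GB/s while the GPU link peaks near 31.5 GB/s per direction, so host-to-GPU copies hit the PCIe ceiling well before the memory ceiling.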


Storage Solutions for AI Development

Storage architecture represents a fundamental component of AI workstation design that directly impacts data loading performance, training iteration speed, and overall development workflow efficiency. The massive datasets typical in machine learning applications, combined with the frequent read/write operations required during model training and validation, create storage demands that far exceed traditional computing applications and require specialized storage solutions optimized for sustained high-performance operations.

NVMe solid-state drives provide the foundation for AI workstation storage, delivering the random-access performance and sustained bandwidth needed for large datasets and model checkpoints. PCIe 4.0 NVMe drives such as the Samsung 990 Pro or Western Digital SN850X are rated for sequential reads of around 7 GB/s, more than ten times the roughly 550 MB/s ceiling of SATA SSDs, which can substantially reduce data loading times and speed up training iterations.
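A quick way to see what a drive delivers in practice is to time a large sequential read. The sketch below writes a test file of random data and reads it back in 8 MiB chunks. Because the operating system's page cache can serve a just-written file from RAM, the result may be inflated unless the cache is dropped first or the file is larger than system memory; dedicated tools such as `fio` give more rigorous numbers.

```python
import os
import tempfile
import time

CHUNK = 8 * 2**20  # 8 MiB reads and writes


def write_test_file(path: str, size_bytes: int) -> None:
    """Write `size_bytes` of random data and force it to disk with fsync."""
    block = os.urandom(CHUNK)
    with open(path, "wb") as f:
        remaining = size_bytes
        while remaining > 0:
            n = min(CHUNK, remaining)
            f.write(block[:n])
            remaining -= n
        f.flush()
        os.fsync(f.fileno())


def sequential_read_mbs(path: str) -> float:
    """Read the whole file sequentially; return throughput in MB/s (10**6 B/s)."""
    total = 0
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as f:
        while True:
            data = f.read(CHUNK)
            if not data:
                break
            total += len(data)
    elapsed = time.perf_counter() - start
    return total / elapsed / 1e6


if __name__ == "__main__":
    # Pass dir="/path/on/the/drive/under/test" to measure a specific drive;
    # the default uses the system temp directory.
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "readtest.bin")
        write_test_file(path, 256 * 2**20)  # 256 MiB; larger files reduce cache effects
        print(f"sequential read: {sequential_read_mbs(path):.0f} MB/s (may be cache-inflated)")
```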

The storage configuration should incorporate both high-speed primary storage for active projects and datasets, as well as high-capacity secondary storage for long-term data retention and model archival. A typical configuration might include a 2TB NVMe drive for active development work, complemented by a larger capacity drive or network-attached storage system for dataset storage and backup operations. This tiered approach optimizes both performance and cost while ensuring adequate capacity for expanding AI development requirements.
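A common way to use this tiered layout is to keep the canonical dataset on the large drive or NAS and copy the active subset onto the NVMe drive before training. The sketch below does that with the standard library, skipping files whose size matches and whose copy is at least as new as the source, so repeated runs only copy changes. The two mount points in the example are hypothetical.

```python
import shutil
from pathlib import Path


def stage_dataset(src: Path, dst: Path) -> int:
    """Copy files from bulk storage to fast scratch; return the number copied."""
    copied = 0
    for f in src.rglob("*"):
        if not f.is_file():
            continue
        target = dst / f.relative_to(src)
        if target.exists():
            s, t = f.stat(), target.stat()
            # Same size and an equal-or-newer copy: assume it is up to date.
            if s.st_size == t.st_size and t.st_mtime >= s.st_mtime:
                continue
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(f, target)  # copy2 also preserves the modification time
        copied += 1
    return copied


if __name__ == "__main__":
    # Hypothetical paths: archive on HDD/NAS -> NVMe scratch directory.
    src = Path("/mnt/archive/datasets/my_dataset")
    dst = Path("/scratch/my_dataset")
    if src.is_dir():
        print(f"staged {stage_dataset(src, dst)} files")
```

Comparing size and modification time is a cheap heuristic; a checksum comparison is safer when files can change without their size or timestamp changing.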

Advanced setups may combine multiple NVMe drives in RAID. RAID 0 stripes data across drives for higher throughput but offers no redundancy (losing one drive loses the whole array), while RAID 1 mirrors data for redundancy without improving write speed. Weigh the added complexity and cost of either against the actual performance requirements and budget of your workflow.

Power Supply and Thermal Management

The substantial power draw and heat output of high-performance AI workstations call for careful power supply selection and thermal management to keep the system stable through extended training sessions and peak loads. A single high-end GPU such as the RTX 4090 is rated at 450 watts and can briefly spike above that, so the power supply needs enough capacity and efficiency to sustain full load without compromising system stability or component longevity.

A quality 1000-1200 watt power supply with 80+ Gold or higher efficiency certification provides the foundation for reliable AI workstation operation, offering sufficient headroom for peak power demands while maintaining efficient operation during typical workloads. Modular power supplies enable clean cable management that improves airflow and reduces thermal issues that can impact system performance during extended operation periods.
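The 1000-1200 watt range follows from simple addition: sum the rated power of the GPU(s) and CPU, add an allowance for the rest of the system, then add headroom for transient spikes. The sketch assumes 450 W for an RTX 4090 (its rated board power), 253 W for a Core i9-13900K at its maximum turbo power, a 100 W allowance for drives, fans, and memory, and 30% headroom; the allowance and headroom are assumptions, not a standard.

```python
import math
from typing import List


def recommended_psu_watts(gpu_watts: List[float], cpu_watts: float,
                          other_watts: float = 100.0, headroom: float = 0.30,
                          step: int = 50) -> int:
    """Sum component power, add headroom, and round up to a common PSU size step."""
    load = sum(gpu_watts) + cpu_watts + other_watts
    return int(math.ceil(load * (1 + headroom) / step) * step)


if __name__ == "__main__":
    print(recommended_psu_watts([450], 253))       # one RTX 4090 + i9-13900K: 1050
    print(recommended_psu_watts([450, 450], 253))  # two RTX 4090s: 1650
```

With these assumptions a single-GPU build lands at 1050 W, inside the recommended range, while a second 450 W card pushes the estimate to 1650 W, well beyond it.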

Thermal management extends beyond simple cooling to encompass system design considerations that optimize airflow patterns, component placement, and thermal dissipation strategies that maintain optimal operating temperatures for all critical components. High-performance CPU coolers, such as the Noctua NH-D15 or be quiet! Dark Rock Pro 4, provide excellent thermal performance for air cooling solutions, while all-in-one liquid cooling systems offer enhanced thermal management capabilities for the most demanding applications.

Case selection plays a crucial role in thermal management effectiveness, with full-tower cases providing optimal airflow characteristics and component accessibility for high-performance AI workstation configurations. Cases with multiple fan mounting positions and optimized airflow designs enable effective thermal management that maintains consistent performance during extended machine learning training sessions.

Motherboard and Connectivity Considerations

Motherboard selection for AI workstations must balance multiple competing requirements including GPU support capabilities, memory capacity and speed support, storage connectivity options, and expansion potential for future upgrades and enhancements. The motherboard serves as the foundation that connects all system components and directly impacts overall system performance, stability, and upgrade potential throughout the workstation’s operational lifetime.

Because these components are tightly interconnected, balanced system design matters: no single component should become the bottleneck during intensive machine learning work.

AI workstations need motherboards with PCIe slot layouts that can feed high-performance graphics cards while leaving bandwidth for storage and expansion devices. X670E (AMD AM5) and Z790 (Intel LGA 1700) motherboards are strong foundations for AMD- and Intel-based builds respectively, with feature sets that cover demanding AI development and room for future expansion. Keep in mind that consumer CPUs provide a limited number of PCIe lanes, so many of these boards split the primary x16 slot into two x8 slots when a second GPU is installed.

The motherboard’s memory support capabilities directly impact system performance and upgrade potential, making support for high-speed DDR5 memory and large capacity configurations essential considerations for AI workstation applications. Additionally, comprehensive storage connectivity including multiple M.2 slots and SATA connections enables flexible storage configurations that can adapt to evolving project requirements and data management needs.

Network connectivity has become increasingly important for AI workstations as machine learning workflows increasingly incorporate cloud-based resources, distributed training capabilities, and collaborative development environments. Built-in 2.5GbE or 10GbE networking provides substantial advantages for high-speed data transfers and remote development scenarios that are becoming common in modern AI development workflows.
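The practical difference between these link speeds is easiest to see as transfer time for a dataset. The sketch divides dataset size by line rate with an assumed 90% efficiency factor for protocol overhead; real throughput also depends on the NAS, its disks, and the file-sharing protocol, and the 500 GB dataset is a hypothetical example.

```python
def transfer_minutes(dataset_gb: float, link_gbps: float, efficiency: float = 0.9) -> float:
    """Minutes to move `dataset_gb` gigabytes over a `link_gbps` link.

    `efficiency` is an assumed fraction of line rate left after protocol overhead.
    """
    bits = dataset_gb * 8e9
    return bits / (link_gbps * 1e9 * efficiency) / 60


if __name__ == "__main__":
    for gbps in (1, 2.5, 10):
        print(f"500 GB over {gbps} GbE: ~{transfer_minutes(500, gbps):.0f} min")
```

Under these assumptions a 500 GB dataset takes roughly 74 minutes over 1 GbE but about 7 minutes over 10 GbE.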

Performance Optimization and Configuration

System configuration and optimization represent critical steps in maximizing AI workstation performance that extend far beyond simple hardware selection to encompass software configuration, driver optimization, and systematic performance tuning that can deliver substantial improvements in machine learning workflow efficiency. The complex interactions between hardware components, operating system configurations, and machine learning frameworks create numerous opportunities for performance enhancement through intelligent system setup and ongoing optimization efforts.

GPU acceleration depends on choosing compatible versions of the NVIDIA driver, CUDA, and cuDNN for your framework. Prebuilt PyTorch and TensorFlow packages typically bundle or specify the CUDA libraries they were built against, so the key requirement is an NVIDIA driver new enough for that CUDA version; a separate CUDA toolkit installation is mainly needed for compiling custom CUDA code. Mismatched versions are a common cause of frameworks silently falling back to the CPU.
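After installing the driver and a framework, it is worth confirming that the framework actually sees the GPU and which CUDA version it was built against. The sketch below reads PyTorch's public attributes (`torch.__version__`, `torch.version.cuda`, `torch.cuda.is_available()`, `torch.backends.cudnn.version()`, `torch.cuda.get_device_name()`) and returns a report with empty values if PyTorch is not installed; other frameworks expose similar checks under different names.

```python
def cuda_report() -> dict:
    """Collect basic PyTorch/CUDA facts; values stay None/empty when unavailable."""
    report = {"torch": None, "built_with_cuda": None, "cudnn": None,
              "cuda_available": False, "devices": []}
    try:
        import torch
    except ImportError:
        return report
    report["torch"] = torch.__version__
    report["built_with_cuda"] = torch.version.cuda  # None for CPU-only builds
    report["cuda_available"] = torch.cuda.is_available()
    if report["cuda_available"]:
        report["cudnn"] = torch.backends.cudnn.version()
        report["devices"] = [torch.cuda.get_device_name(i)
                             for i in range(torch.cuda.device_count())]
    return report


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key:>16}: {value}")
```

If `built_with_cuda` shows a version but `cuda_available` is False, the usual suspect is a missing or too-old NVIDIA driver.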

Operating system choice and tuning also affect AI workloads. Ubuntu LTS releases are the most widely supported platform for NVIDIA drivers, CUDA, and the major machine learning frameworks, which makes Linux the path of least resistance for most builders; Windows users can run the same tooling through WSL2 with GPU support. Beyond that, adjusting memory management policies, process scheduling, and resource allocation can help prioritize training and inference jobs, though the gains depend on the workload.

Budget Considerations and Build Recommendations

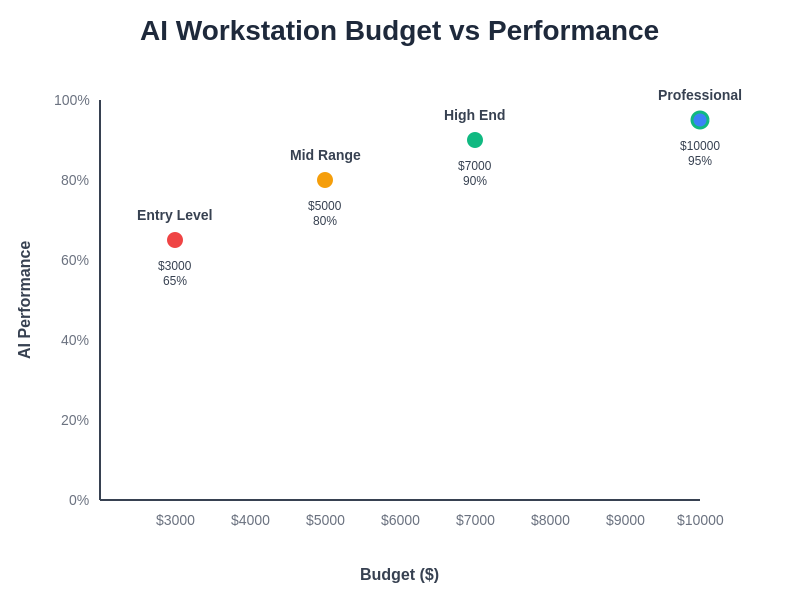

Creating effective AI workstation configurations across different budget ranges requires careful prioritization of components based on their impact on machine learning performance and overall development productivity. Understanding the performance implications of various component choices enables builders to create systems that deliver exceptional value while meeting specific development requirements and budget constraints that vary significantly across different development scenarios and organizational contexts.

Entry-level AI workstations targeting budgets around $3000-4000 can deliver impressive machine learning performance through careful component selection that prioritizes GPU performance while maintaining adequate support components. A configuration featuring an RTX 4070 Ti or RTX 4080, paired with a Ryzen 7 7700X processor and 32GB of DDR5 memory, provides excellent capabilities for most machine learning development projects while maintaining reasonable cost parameters for individual developers and small teams.

Mid-range configurations in the $5000-7000 range enable substantial performance improvements through upgraded GPU options like the RTX 4090, enhanced memory configurations reaching 64GB or 128GB, and premium storage solutions that dramatically improve data loading and training iteration speeds. These configurations provide the performance headroom necessary for advanced machine learning projects and professional development work that demands higher performance capabilities.

High-end AI workstation configurations, typically starting around $8000-10000, incorporate professional-grade components like the RTX A6000 or multiple GPUs, enabling advanced research and production-scale model development. These systems provide the computational capability needed for cutting-edge AI research and for developing large-scale machine learning systems.
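The tiers above can be encoded as data and checked against a project's VRAM needs. The sketch below uses the budget floors and GPU names from this section together with the published VRAM of those cards (RTX 4070 Ti 12 GB, RTX 4080 16 GB, RTX 4090 24 GB, RTX A6000 48 GB); the selection logic is a hypothetical illustration, not a pricing tool, and ignores multi-GPU options.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Tier:
    name: str
    min_budget_usd: int
    gpus: Dict[str, int]  # GPU model -> VRAM in GB


# Budget floors and GPU choices taken from the tiers described above.
TIERS = [
    Tier("entry", 3000, {"RTX 4070 Ti": 12, "RTX 4080": 16}),
    Tier("mid-range", 5000, {"RTX 4090": 24}),
    Tier("high-end", 8000, {"RTX A6000": 48}),
]


def suggest_gpu(budget_usd: int, vram_needed_gb: int) -> Optional[str]:
    """Return the first listed GPU, cheapest tier first, that meets the VRAM need."""
    for tier in TIERS:
        if tier.min_budget_usd > budget_usd:
            break  # tiers are ordered by cost, so later ones are out of budget too
        for gpu, vram in sorted(tier.gpus.items(), key=lambda kv: kv[1]):
            if vram >= vram_needed_gb:
                return f"{tier.name}: {gpu} ({vram} GB)"
    return None
```

For example, `suggest_gpu(6000, 20)` returns `"mid-range: RTX 4090 (24 GB)"`, while a 40 GB requirement returns `None` until the budget reaches the high-end floor.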

Future-Proofing and Upgrade Strategies

The rapid evolution of machine learning hardware and software creates both opportunities and challenges for AI workstation builders who must balance current performance requirements with future upgrade potential and evolving technology standards. Designing systems with appropriate upgrade potential ensures that initial investments remain productive as requirements evolve and new technologies become available, maximizing the long-term value of AI workstation investments.

Component choices that favor upgrade flexibility include motherboards with additional PCIe slots for future GPUs (checking how the board splits lanes when more than one slot is populated), power supplies with headroom for expanded configurations, and memory configurations that can be expanded rather than replaced as requirements grow. These decisions let a system adapt to evolving requirements without complete replacement, extending the productive lifetime of the investment.
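Before and after adding a second GPU, it helps to check which link each card actually negotiated, since many consumer boards drop the primary slot to x8 once a second slot is populated. The sketch queries the documented `nvidia-smi` fields `pcie.link.gen.current` and `pcie.link.width.current` and lists cards running narrower than an assumed expected width of 16 lanes. GPUs often drop to a lower PCIe generation at idle to save power, so run it while the GPU is under load.

```python
import shutil
import subprocess
from typing import Dict, List

# Documented `nvidia-smi --query-gpu` fields for the current PCIe link state.
FIELDS = ["index", "name", "pcie.link.gen.current", "pcie.link.width.current"]


def parse_links(csv_text: str) -> List[Dict[str, str]]:
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    return [dict(zip(FIELDS, (v.strip() for v in line.split(","))))
            for line in csv_text.strip().splitlines()]


def query_links() -> List[Dict[str, str]]:
    """Ask nvidia-smi for each GPU's link; [] if the tool is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    cmd = ["nvidia-smi", "--query-gpu=" + ",".join(FIELDS),
           "--format=csv,noheader,nounits"]
    try:
        out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    except subprocess.CalledProcessError:
        return []
    return parse_links(out)


def below_expected(rows: List[Dict[str, str]], expected_width: int = 16) -> List[str]:
    """Describe GPUs whose current link is narrower than `expected_width` lanes."""
    return [f"GPU {r['index']} ({r['name']}): Gen{r['pcie.link.gen.current']} "
            f"x{r['pcie.link.width.current']}"
            for r in rows
            if r["pcie.link.width.current"].isdigit()
            and int(r["pcie.link.width.current"]) < expected_width]


if __name__ == "__main__":
    for warning in below_expected(query_links()):
        print("narrower than x16:", warning)
```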

The emerging landscape of AI-specific hardware, including specialized inference accelerators and next-generation GPU architectures, suggests that successful AI workstations will need to accommodate diverse hardware configurations and evolving software frameworks. Building systems with appropriate expansion capabilities and upgrade potential ensures that current investments remain relevant as the AI hardware ecosystem continues to evolve and mature.

Platform features such as PCIe 5.0 slots and PCIe 5.0 M.2 storage also leave room for strategic upgrades. Current RTX 40-series GPUs use PCIe 4.0, so a PCIe 5.0 platform provides bandwidth headroom for future GPUs and faster NVMe drives while remaining compatible with existing components and software configurations.

Conclusion and Implementation Guidance

Building an optimal AI workstation represents a significant investment in development capabilities that can dramatically accelerate machine learning projects and enable more ambitious AI development initiatives. The careful selection and integration of high-performance components creates a foundation for productive AI development that can adapt to evolving requirements and support increasingly sophisticated machine learning applications over extended operational lifetimes.

The success of any AI workstation configuration depends not only on component selection but also on thoughtful system integration, proper configuration, and ongoing optimization efforts that maximize performance and reliability. The investment in quality components and professional assembly often pays dividends through improved stability, better performance, and reduced maintenance requirements that enhance overall development productivity.

The rapidly evolving landscape of AI hardware continues to create new opportunities for performance improvements and cost optimization that benefit developers working across all scales of machine learning applications. Staying informed about emerging technologies and upgrade opportunities ensures that AI workstation investments remain productive and competitive as the field continues to advance and mature.

Disclaimer

This article provides general guidance on AI workstation building and does not constitute specific technical recommendations for particular use cases or configurations. Hardware performance may vary based on specific applications, software configurations, and individual requirements. Readers should conduct thorough research and consider professional consultation when building high-performance computing systems. Component availability and pricing are subject to market conditions and may vary by region and supplier.