The orchestration of artificial intelligence workflows has emerged as a critical component in the successful deployment and management of machine learning systems at scale. As organizations increasingly adopt complex AI pipelines that span data ingestion, preprocessing, model training, evaluation, and deployment, the choice of workflow orchestration platform becomes paramount to operational success. Two platforms have risen to prominence in this space: Apache Airflow, the established open-source standard, and Prefect, the modern challenger designed specifically for the complexities of contemporary data science workflows.

The decision between Airflow and Prefect represents more than a simple tool selection; it fundamentally shapes how data science teams architect their infrastructure, manage dependencies, handle failures, and scale their AI capabilities across diverse computing environments.

Understanding Workflow Orchestration in AI Context

Workflow orchestration in artificial intelligence encompasses the automated coordination of complex, interdependent tasks that form the backbone of machine learning operations. Unlike traditional batch processing or simple automation scripts, AI workflows require sophisticated handling of dynamic dependencies, resource allocation, error recovery, and state management across distributed computing environments. These workflows must accommodate the unique characteristics of machine learning workloads, including long-running training processes, resource-intensive computations, and the need for reproducible experiments.

The complexity of modern AI workflows stems from their multifaceted nature, involving data validation, feature engineering, hyperparameter optimization, model training across multiple algorithms, performance evaluation, and automated deployment pipelines. Each component requires careful orchestration to ensure optimal resource utilization, maintain data lineage, and provide comprehensive monitoring and logging capabilities. The orchestration platform must seamlessly integrate with diverse technologies including cloud storage systems, container orchestration platforms, machine learning frameworks, and monitoring tools.

Effective workflow orchestration platforms for AI must address several critical requirements including dynamic task generation based on runtime conditions, sophisticated retry mechanisms that understand the nuances of machine learning failures, comprehensive metadata tracking for experiment reproducibility, and flexible scheduling that can accommodate both batch and streaming processing patterns. The platform must also provide intuitive interfaces for data scientists while offering the robustness and scalability demanded by production environments.

Apache Airflow: The Established Standard

Apache Airflow has established itself as the de facto standard for workflow orchestration across numerous industries, with particular strength in data engineering and traditional ETL processes. Originally developed by Airbnb and later donated to the Apache Software Foundation, Airflow has evolved into a mature platform with extensive community support, comprehensive documentation, and a rich ecosystem of plugins and integrations. The platform’s strength lies in its proven track record, extensive operator library, and deep integration capabilities with virtually every major data platform and cloud service.

The architecture of Airflow centers around Directed Acyclic Graphs (DAGs) defined in Python code, providing data engineers with familiar programming constructs while maintaining the flexibility to implement complex workflow logic. This code-centric approach allows for sophisticated conditional logic, dynamic task generation, and integration with existing Python-based data science workflows. The platform’s scheduler provides robust task execution with configurable retry policies, dependency management, and resource allocation across distributed computing clusters.
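To make the DAG idea concrete, the sketch below models a small, hypothetical ML pipeline as a mapping from each task to its upstream dependencies. It then releases tasks in “waves”, the way a scheduler starts a task only once everything upstream has succeeded. It uses Python’s standard-library graphlib (Python 3.9+) rather than Airflow itself. In a real Airflow DAG, the same edges would be declared between tasks, for example with the >> operator. The task names here are illustrative only.

```python
from graphlib import TopologicalSorter

# Hypothetical ML pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "ingest": set(),
    "validate": {"ingest"},
    "features": {"validate"},
    "train_xgb": {"features"},
    "train_mlp": {"features"},
    "evaluate": {"train_xgb", "train_mlp"},
    "deploy": {"evaluate"},
}

def run_in_waves(graph):
    """Return batches of tasks in dependency order.

    Tasks inside one batch do not depend on each other, so an orchestrator
    could run them in parallel once the previous batch has succeeded.
    """
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # pretend every task in this wave succeeded
    return waves

for wave in run_in_waves(pipeline):
    print(wave)
```

The two training tasks land in the same wave because neither depends on the other. That independence is what lets an orchestrator run them concurrently. The prepare() call also refuses a graph with a dependency loop, which is exactly the "acyclic" guarantee in the name DAG.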

These architectural choices reflect a broader difference in design philosophy between the two platforms, with consequences for everything from deployment complexity to day-to-day development. Airflow follows a traditional distributed-system architecture. Separate components handle web serving, scheduling, and task execution, and they coordinate through a shared metadata database. Prefect, by contrast, takes a more cloud-native approach that abstracts infrastructure concerns behind a unified API layer.

Airflow’s maturity manifests in its comprehensive monitoring capabilities, extensive logging infrastructure, and sophisticated user interface that provides detailed visibility into workflow execution, task dependencies, and system performance. The platform’s plugin architecture enables seamless integration with cloud services, databases, message queues, and machine learning platforms, making it particularly attractive for organizations with diverse technology stacks.

However, Airflow’s design philosophy, rooted in traditional batch processing paradigms, can present challenges when applied to modern AI workflows that require more dynamic execution patterns, real-time adaptability, and native support for machine learning-specific operations. The platform’s learning curve can be steep for data scientists unfamiliar with its operational concepts, and the management of complex dependencies across large DAGs can become unwieldy as workflows grow in sophistication.

Prefect: The Modern AI-Native Approach

Prefect represents a new generation of workflow orchestration platforms designed to address the limitations of traditional workflow managers when applied to modern data science and AI operations. It emphasizes developer experience, native cloud integration, and workflow patterns that align closely with contemporary machine learning development practices. The platform’s architecture prioritizes flexibility, ease of use, and robust handling of the dynamic, experimental nature of AI workloads.

The fundamental design philosophy of Prefect centers around the concept of “dataflow” rather than traditional task scheduling, enabling more intuitive modeling of machine learning workflows where data flows through various transformation and processing stages. This approach provides natural support for dynamic workflows, conditional execution paths, and the iterative experimentation patterns common in AI development. The platform’s Python-native design allows data scientists to define workflows using familiar programming constructs while automatically handling the complexities of distributed execution, state management, and error recovery.
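The dataflow idea can be illustrated without any orchestrator. Tasks are plain functions, data moves between them as return values and arguments, and a wrapper records each task’s final state. The task decorator and RUN_LOG below are hypothetical stand-ins written for this illustration, not Prefect’s implementation. Prefect’s own @flow and @task decorators do, however, follow a similar shape.

```python
import functools

RUN_LOG = []  # hypothetical in-memory record of (task name, final state)

def task(fn):
    """Hypothetical decorator: run fn, record its final state, pass the result on."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        try:
            result = fn(*args, **kwargs)
        except Exception:
            RUN_LOG.append((fn.__name__, "Failed"))
            raise
        RUN_LOG.append((fn.__name__, "Completed"))
        return result
    return wrapper

@task
def load_rows():
    return [3.0, 1.0, None, 2.0]

@task
def clean(rows):
    return [r for r in rows if r is not None]

@task
def mean(values):
    return sum(values) / len(values)

def flow():
    # Data flows through ordinary return values and arguments, not an external store.
    return mean(clean(load_rows()))

print(flow())   # 2.0
print(RUN_LOG)  # [('load_rows', 'Completed'), ('clean', 'Completed'), ('mean', 'Completed')]
```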

Prefect’s modern architecture includes built-in support for cloud-native deployment patterns, containerization, and serverless execution models that align with contemporary AI infrastructure preferences. Its failure handling is configured per task and per flow. Options include automatic retries with fixed or exponentially increasing delays, plus timeouts that stop runaway tasks. These features are particularly valuable in AI workflows for two reasons. A transient storage or network error should trigger a retry, while a genuine bug should fail fast. In addition, individual tasks may have very different resource requirements and failure modes.
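Retries with exponential backoff are usually a per-task setting. Airflow operators accept retries, retry_delay and retry_exponential_backoff. Prefect tasks accept retries and retry_delay_seconds, with an exponential_backoff helper in prefect.tasks. The plain-Python sketch below reimplements only the underlying pattern so that the waiting schedule is visible. The function and the flaky download step are hypothetical, and sleep is injectable so the example runs instantly.

```python
import time

def retry_with_backoff(fn, retries=3, base_delay=1.0, factor=2.0, sleep=time.sleep):
    """Call fn(); after each failure wait base_delay * factor**attempt seconds.

    Makes at most retries + 1 attempts and re-raises the last exception.
    """
    for attempt in range(retries + 1):
        try:
            return fn()
        except Exception:
            if attempt == retries:
                raise
            sleep(base_delay * factor ** attempt)

calls = {"n": 0}

def flaky_download():
    """Hypothetical step that fails twice (e.g. a storage timeout), then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient failure")
    return "dataset.parquet"

waits = []  # record the requested delays instead of actually sleeping
print(retry_with_backoff(flaky_download, sleep=waits.append))  # dataset.parquet
print(waits)  # [1.0, 2.0]
```

Doubling the delay gives a struggling dependency progressively more time to recover. It also keeps total retry time bounded by the retry count.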

The platform’s user interface and monitoring capabilities are aimed at data science teams. They provide visibility into flow and task runs, including their parameters and logs. They also surface artifacts that tasks can publish, such as tables or Markdown reports of model metrics. Prefect’s approach to deploying versioned flows supports reproducible experiments and smooth transitions from development to production environments.

Architecture and Design Philosophy Comparison

The architectural differences between Airflow and Prefect reflect fundamentally different approaches to workflow orchestration, with significant implications for AI workload management. Airflow’s architecture is built around directed acyclic graphs (DAGs) whose structure is declared when the DAG file is parsed, so it excels where workflow structure is well-defined and relatively static. Dynamic task mapping, added in Airflow 2.3, lets the number of parallel task instances be decided at runtime, but the overall graph shape is still fixed in advance. This approach provides excellent visibility into task relationships and enables sophisticated dependency management. That makes it ideal for traditional data engineering workflows with predictable patterns and well-established data lineage requirements.

Prefect’s architecture, conversely, emphasizes dynamic execution patterns and functional programming concepts that align more naturally with the experimental and iterative nature of AI development. The platform’s “dataflow” paradigm allows for more flexible task relationships, dynamic parameter passing, and conditional execution paths that adapt based on runtime conditions. This flexibility is particularly valuable in machine learning workflows where task execution may depend on model performance metrics, data quality assessments, or experimental results that are only available at runtime.
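Runtime branching usually reduces to a function that inspects upstream results and names the next step. In Airflow, this logic would live in a branching operator such as BranchPythonOperator, which returns the task ID(s) to follow. In Prefect, it can simply be an if statement inside the flow. The thresholds, metric name and step names below are hypothetical.

```python
def choose_next_step(metrics, baseline_auc, min_gain=0.01):
    """Return the (hypothetical) next step based on runtime evaluation results."""
    if metrics["auc"] >= baseline_auc + min_gain:
        return "promote_model"           # clear improvement over production
    if metrics["auc"] >= baseline_auc:
        return "request_review"          # marginal gain: ask a human
    return "retrain_with_new_features"   # regression: go back to feature work

print(choose_next_step({"auc": 0.91}, baseline_auc=0.88))   # promote_model
print(choose_next_step({"auc": 0.885}, baseline_auc=0.88))  # request_review
print(choose_next_step({"auc": 0.80}, baseline_auc=0.88))   # retrain_with_new_features
```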

The scheduling and execution models of these platforms also differ significantly in their approach to resource management and task coordination. Airflow’s scheduler operates on a traditional cron-like model with support for complex scheduling expressions, making it well-suited for batch processing scenarios with predictable timing requirements. The platform’s execution model emphasizes reliability and consistency, with comprehensive logging and state tracking that provides excellent auditability for compliance and debugging purposes.
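One detail of Airflow’s scheduling model is worth seeing concretely. Each scheduled run covers a data interval and is triggered only after that interval has ended, with the run labelled by the interval’s start (its logical date). The standard-library sketch below computes which daily intervals are complete at a given moment. It illustrates the concept only and is not Airflow’s timetable code.

```python
from datetime import datetime, timedelta, timezone

def daily_intervals(start, now):
    """List (interval_start, interval_end) pairs that are complete as of `now`.

    A run for a period is only due once that period has ended, so the
    interval that contains `now` is not yet included.
    """
    intervals = []
    current = start
    while current + timedelta(days=1) <= now:
        intervals.append((current, current + timedelta(days=1)))
        current += timedelta(days=1)
    return intervals

start = datetime(2024, 1, 1, tzinfo=timezone.utc)
now = datetime(2024, 1, 3, 6, 0, tzinfo=timezone.utc)
for s, e in daily_intervals(start, now):
    print(s.date(), "->", e.date())
# 2024-01-01 -> 2024-01-02
# 2024-01-02 -> 2024-01-03
```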

Prefect’s execution model is designed around the concept of “flows” and “tasks” that can be executed locally, in cloud environments, or across hybrid infrastructure with minimal configuration changes. This approach provides greater flexibility for AI teams that need to adapt their execution environment based on workload characteristics, resource availability, or cost optimization requirements. The platform’s native support for containerization and serverless execution models aligns well with modern AI infrastructure patterns and cloud-native development practices.
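Prefect decouples workflow logic from the execution backend through pluggable task runners. The same idea can be sketched with the standard library alone. The flow below is written once and receives a map function, so the same code runs sequentially during development or concurrently in a thread pool. The functions are hypothetical stand-ins. For CPU-heavy work, a real deployment would swap in a process pool or a distributed backend rather than threads.

```python
from concurrent.futures import ThreadPoolExecutor

def score_shard(shard):
    # Hypothetical stand-in for an expensive per-shard step (e.g. batch inference).
    return sum(x * x for x in shard)

def scoring_flow(shards, map_fn=map):
    """Flow logic is written once; the caller decides how tasks are executed."""
    return sum(map_fn(score_shard, shards))

shards = [[1, 2], [3], [4, 5, 6]]

# Local, sequential execution (e.g. on a laptop while developing).
local_total = scoring_flow(shards)

# Concurrent execution: any executor whose map() yields results can be swapped in.
with ThreadPoolExecutor(max_workers=4) as pool:
    pooled_total = scoring_flow(shards, map_fn=pool.map)

print(local_total, pooled_total)  # 91 91
```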

AI-Specific Workflow Capabilities

The handling of AI-specific workflow requirements represents a key differentiator between these platforms, with implications for everything from experiment tracking to model deployment automation. Machine learning workflows present unique challenges including long-running training processes, resource-intensive computations, dynamic hyperparameter optimization, and the need for comprehensive experiment reproducibility. These requirements demand specialized features that go beyond traditional workflow orchestration capabilities.

Airflow’s approach to AI workflows relies heavily on its extensive ecosystem of operators and provider packages. Training code written with frameworks such as TensorFlow, PyTorch, or scikit-learn typically runs inside Python or container-based tasks. Provider packages supply operators for managed ML services such as Amazon SageMaker and Google Cloud Vertex AI, and tasks can call tracking tools such as MLflow. Together, these allow orchestration of training pipelines, model evaluation workflows, and deployment processes. Airflow’s XCom mechanism passes parameters and results between tasks. However, XComs are stored in the metadata database by default and are intended for small values. As a result, XCom becomes cumbersome for workflows with complex data dependencies or large intermediate results such as datasets or model weights.
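A common workaround for XCom’s size limits is to write large artifacts to shared storage and pass only a small reference between tasks. Airflow also supports custom XCom backends that automate this. The sketch below shows the pattern with a local temporary directory standing in for object storage such as S3 or GCS. The step functions and the artifact layout are hypothetical.

```python
import json
import os
import tempfile

def train_step(output_dir):
    """Write a (stand-in) large artifact to storage and return only its path."""
    weights = {"layer1": [0.1] * 10_000}  # placeholder for a large model artifact
    path = os.path.join(output_dir, "weights.json")
    with open(path, "w") as f:
        json.dump(weights, f)
    return path  # the small, serialisable reference passed between tasks

def evaluate_step(weights_path):
    """Downstream task receives the reference and loads the artifact itself."""
    with open(weights_path) as f:
        weights = json.load(f)
    return len(weights["layer1"])

with tempfile.TemporaryDirectory() as tmp:
    ref = train_step(tmp)
    ref_size = len(json.dumps(ref))  # what would actually be stored as the XCom
    n_params = evaluate_step(ref)

print(f"reference: {ref_size} bytes, parameters loaded: {n_params}")
```

Only the path crosses the task boundary, so the metadata database stays small no matter how large the artifact grows.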

Prefect’s AI-specific capabilities come largely from its core design. Because flows are ordinary Python, common machine learning patterns can be written directly with loops, function calls, and task mapping. These include parameter sweeps, hyperparameter search, and conditional model selection based on performance metrics. Integration packages cover major cloud services and distributed compute frameworks such as Dask and Ray, which can be used to coordinate parallel or distributed training. Task results can be persisted to configurable storage with pluggable serializers. This gives Prefect a more flexible approach than XCom-style passing for large intermediate results.
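Parameter sweeps are a fan-out followed by a reduction: one run per parameter combination, then a step that picks the winner. Airflow expresses the fan-out with dynamic task mapping (.expand(), Airflow 2.3+), and Prefect with task.map(). The sketch below shows the same shape in plain Python. A toy objective stands in for real training, so the scores are illustrative only.

```python
from itertools import product

def train_and_score(params):
    """Hypothetical stand-in for a training run; returns a validation score."""
    lr, depth = params["lr"], params["depth"]
    # Toy objective that peaks at lr=0.1, depth=6.
    return 1.0 - abs(lr - 0.1) - 0.01 * abs(depth - 6)

def sweep(grid):
    # Fan-out: expand the grid at runtime into one "task" per combination.
    keys = sorted(grid)
    candidates = [dict(zip(keys, values)) for values in product(*(grid[k] for k in keys))]
    results = [(train_and_score(p), p) for p in candidates]
    # Reduce: keep the best-scoring configuration.
    best_score, best_params = max(results, key=lambda r: r[0])
    return len(candidates), best_params, best_score

grid = {"lr": [0.01, 0.1, 0.3], "depth": [4, 6, 8]}
n, best, score = sweep(grid)
print(n, best)  # 9 {'depth': 6, 'lr': 0.1}
```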

Both platforms support experiment tracking and model versioning, though their approaches differ in implementation and user experience. Airflow’s experiment tracking typically relies on integration with external tools such as MLflow or Weights & Biases, providing flexibility but requiring additional configuration and maintenance overhead. Prefect records the parameters, states, and logs of every flow and task run. It also lets tasks publish metrics as artifacts that appear alongside the run in its UI. Teams that need full experiment comparison and model registries still commonly pair it with a dedicated tracker.
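Whichever tool records it, reproducible experiment tracking comes down to storing the parameters, code version and resulting metrics of every run under a stable identifier. The sketch below derives a run ID from a hash of the configuration, so identical configurations map to the same ID. The record layout, the ID scheme and the "abc123" code version are hypothetical. This is not the format of MLflow, Weights & Biases or Prefect.

```python
import hashlib
import json

def make_run_record(params, metrics, code_version):
    """Build a reproducibility record for one training run.

    The run ID hashes params + code version (hypothetical scheme), so the same
    configuration always yields the same ID regardless of dict key order.
    """
    key = json.dumps({"params": params, "code_version": code_version}, sort_keys=True)
    run_id = hashlib.sha256(key.encode()).hexdigest()[:12]
    return {"run_id": run_id, "params": params, "metrics": metrics, "code_version": code_version}

a = make_run_record({"lr": 0.1, "depth": 6}, {"auc": 0.91}, "abc123")
b = make_run_record({"depth": 6, "lr": 0.1}, {"auc": 0.90}, "abc123")
print(a["run_id"] == b["run_id"])  # True: same configuration, key order ignored
```

Because metrics are excluded from the hash, two runs of the same configuration share an ID while their metrics can still be compared side by side.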

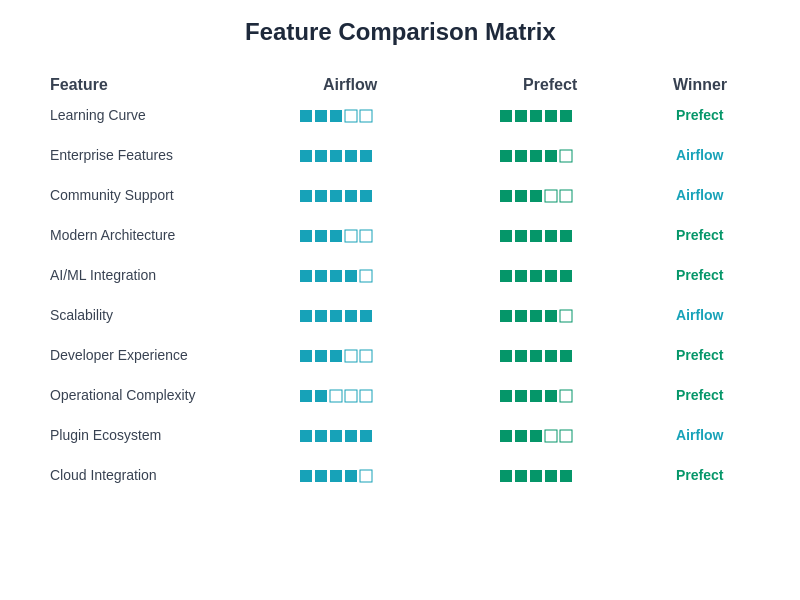

The comprehensive feature comparison reveals distinct strengths and trade-offs between the platforms across critical dimensions including ease of use, enterprise readiness, community ecosystem, and technical architecture. Understanding these differences enables organizations to make informed decisions based on their specific requirements and strategic priorities.

Performance and Scalability Considerations

The performance characteristics and scalability patterns of workflow orchestration platforms become critical factors when deploying AI systems at enterprise scale. Machine learning workflows often involve computationally intensive operations, large data transfers, and resource coordination across distributed computing clusters, requiring orchestration platforms that can efficiently manage these demanding workloads while maintaining system reliability and performance.

Airflow’s performance profile reflects its maturity and optimization for large-scale deployment scenarios. The platform’s scheduler has been extensively optimized for handling thousands of concurrent tasks across multiple workflow instances. Concurrency controls help prevent system overload. These include pools, which cap how many tasks may use a shared resource at once, and per-DAG limits on active tasks and runs. The platform’s database backend provides robust state management and metadata storage that support extensive workflow history retention and complex querying requirements. However, Airflow’s performance can degrade significantly when managing workflows with very large numbers of tasks or complex dependency relationships, requiring careful tuning and architecture optimization.
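Airflow’s pools cap how many tasks may occupy a shared resource at once by assigning each pool a fixed number of slots. The sketch below reproduces that idea with a semaphore. Ten hypothetical GPU jobs are submitted to eight worker threads, but the pool never lets more than two run at the same time. The SlotPool class is an illustration, not Airflow’s implementation.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

class SlotPool:
    """Hypothetical slot-based pool: at most `slots` tasks run at the same time."""

    def __init__(self, slots):
        self._slots = threading.Semaphore(slots)
        self._lock = threading.Lock()
        self.running = 0  # tasks currently holding a slot
        self.peak = 0     # highest concurrency observed

    def run(self, fn, *args):
        with self._slots:  # blocks until a slot is free
            with self._lock:
                self.running += 1
                self.peak = max(self.peak, self.running)
            try:
                return fn(*args)
            finally:
                with self._lock:
                    self.running -= 1

def gpu_job(i):
    time.sleep(0.01)  # stand-in for work on a scarce resource
    return i * 2

gpu_pool = SlotPool(slots=2)
with ThreadPoolExecutor(max_workers=8) as executor:
    results = list(executor.map(lambda i: gpu_pool.run(gpu_job, i), range(10)))

print(results)
print("peak concurrency:", gpu_pool.peak)
```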

Prefect’s performance architecture is designed around modern distributed computing paradigms with native support for cloud-based execution models that can scale dynamically based on workload requirements. The platform’s execution model provides better performance isolation between workflows, reducing the impact of resource-intensive tasks on overall system performance. This approach is particularly beneficial for AI workloads where individual tasks may have vastly different resource requirements and execution characteristics.

The scalability patterns of these platforms also differ in their approach to multi-tenant deployments and resource sharing across different teams or projects. Airflow’s traditional deployment model typically involves shared infrastructure with workflow-level isolation, which can be efficient for organizations with similar workload patterns but may present challenges for diverse AI teams with varying resource requirements and security constraints. Prefect’s cloud-native architecture provides more granular isolation and resource allocation capabilities, enabling better support for multi-tenant deployments with diverse workload characteristics.

Memory management and resource utilization represent critical considerations for AI workflow orchestration, particularly when dealing with large datasets, complex models, and distributed training scenarios. Airflow’s memory management relies heavily on task design and executor choice; the KubernetesExecutor, for example, runs each task in its own pod. Long-lived worker processes require careful configuration to prevent memory leaks or resource exhaustion. Prefect typically runs each flow run in infrastructure defined by its work pool, such as a container or Kubernetes job that is torn down when the run finishes. This isolates memory use per run and simplifies cleanup.

Cloud Integration and Deployment Models

The cloud integration capabilities and deployment flexibility of workflow orchestration platforms significantly impact their suitability for modern AI operations that increasingly rely on cloud-based infrastructure, containerization, and serverless execution models. The ability to seamlessly operate across multiple cloud providers, integrate with managed services, and adapt to varying infrastructure requirements has become essential for AI teams operating in diverse environments.

Airflow’s cloud integration capabilities have evolved significantly over its development history, with extensive support for major cloud providers including AWS, Google Cloud Platform, and Microsoft Azure. The platform provides comprehensive operators for cloud services including managed databases, storage systems, container orchestration platforms, and machine learning services. This extensive integration ecosystem makes Airflow particularly attractive for organizations with complex multi-cloud deployments or specific requirements for particular cloud services. However, managing these integrations often requires significant configuration and maintenance overhead, particularly when operating across multiple cloud environments.

The deployment models supported by Airflow range from traditional server-based installations to containerized deployments on Kubernetes, for example using the official Helm chart. Its Kubernetes integration, through the KubernetesExecutor and the KubernetesPodOperator, provides pod-level resource allocation controls and isolation mechanisms that align well with cloud-native deployment practices. However, the complexity of Airflow’s deployment architecture can present challenges for teams seeking simple, low-maintenance deployment options or those operating in environments with limited infrastructure management capabilities.

Prefect’s approach to cloud integration emphasizes simplicity and native cloud functionality, with built-in support for major cloud providers and managed services that requires minimal configuration overhead. The platform’s architecture is designed around cloud-native principles from the ground up, providing seamless operation across different cloud environments and execution models. This approach reduces the operational burden on AI teams while maintaining the flexibility to adapt to specific infrastructure requirements or constraints.

The deployment flexibility of Prefect extends to its support for hybrid execution models where workflows can span local development environments, cloud-based execution platforms, and edge computing resources. This capability is particularly valuable for AI teams that need to balance cost optimization, data locality requirements, and computational resource availability across diverse deployment scenarios. The platform’s approach to secrets management, configuration handling, and environment isolation provides robust support for production AI deployments while maintaining developer productivity.

User Experience and Learning Curve

The user experience and accessibility of workflow orchestration platforms significantly influence their adoption success and long-term effectiveness within AI teams. Data scientists and machine learning engineers bring diverse backgrounds and skill sets to workflow orchestration, ranging from traditional software engineering experience to domain-specific expertise in statistics and machine learning theory. The platform’s ability to accommodate this diversity while providing powerful orchestration capabilities directly impacts team productivity and solution quality.

Airflow’s user experience reflects its origins in the data engineering community, with interfaces and concepts that align closely with traditional software engineering practices. The platform’s code-centric approach to workflow definition provides significant power and flexibility for users comfortable with Python programming and software development practices. The comprehensive documentation, extensive community resources, and abundance of examples make Airflow accessible to users willing to invest time in learning its concepts and best practices. However, the platform’s complexity and operational requirements can present significant barriers for data scientists focused primarily on model development rather than infrastructure management.

The learning curve for Airflow can be substantial, particularly for users new to workflow orchestration concepts or those working primarily in research environments where infrastructure concerns are typically abstracted away. Understanding Airflow’s execution model, dependency management, and operational requirements requires investment in training and hands-on experience that may not align with the immediate priorities of AI development teams focused on model accuracy and experimental results.

Prefect’s user experience design prioritizes developer productivity and ease of use, with intuitive interfaces that align more closely with modern software development practices and data science workflows. The platform’s approach to workflow definition uses familiar Python programming constructs while abstracting away much of the operational complexity associated with distributed execution and error handling. This design philosophy enables data scientists to focus on workflow logic rather than infrastructure concerns, reducing the time required to achieve productive workflow orchestration capabilities.

The onboarding experience for Prefect emphasizes rapid time-to-value with minimal configuration requirements and extensive automation of operational concerns. The platform’s documentation and examples are specifically tailored to data science use cases, providing relevant examples and best practices that align with common AI workflow patterns. This approach significantly reduces the learning curve for AI teams while maintaining the sophistication required for production deployment scenarios.

Cost Considerations and Total Cost of Ownership

The economic implications of workflow orchestration platform selection extend beyond simple licensing costs to encompass infrastructure requirements, operational overhead, development productivity, and long-term maintenance considerations. For AI organizations operating at scale, these factors can represent significant portions of overall technology budgets and substantially impact the return on investment from machine learning initiatives.

Airflow’s open-source nature eliminates direct licensing costs but introduces operational expenses associated with infrastructure management, maintenance, and support. The platform’s resource requirements can be substantial, particularly for large-scale deployments managing hundreds or thousands of concurrent workflows. The need for dedicated database infrastructure, scheduler coordination, and web server management adds complexity and cost to deployment scenarios. Additionally, the expertise required to effectively operate and maintain Airflow deployments represents a significant investment in specialized knowledge and training.

The total cost of ownership for Airflow deployments must account for the ongoing maintenance burden associated with platform updates, security patches, and infrastructure management. Organizations must also consider the opportunity cost of engineering time devoted to platform administration rather than core AI development activities. However, the platform’s maturity and extensive community support can provide long-term cost advantages through shared knowledge, established best practices, and reduced vendor lock-in risks.

Prefect’s pricing model includes both open-source and commercial offerings. The open-source server can be self-hosted, while Prefect Cloud is a managed service, and each offers different cost-benefit trade-offs depending on organizational requirements and scale. A managed control plane can reduce infrastructure management overhead while potentially increasing direct service costs, particularly for organizations with high-volume workflow execution requirements. The simplified operational model can significantly reduce the engineering effort required for platform management, enabling teams to focus on AI development activities rather than infrastructure concerns.

The economic value proposition of each platform must be evaluated in the context of specific organizational requirements, including workflow complexity, execution volume, team expertise, and strategic technology preferences. Organizations with existing strong operational capabilities and preference for self-managed infrastructure may find Airflow’s total cost of ownership attractive, while teams prioritizing rapid development cycles and reduced operational overhead may benefit from Prefect’s managed service approach.
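The comparison can be made concrete with a simple monthly cost model that adds infrastructure, service fees and the engineering time spent operating the platform. Every number below is a placeholder chosen only to exercise the formula, not a real price for either platform. The ranking flips easily with different inputs, which is why the estimates themselves matter more than the formula.

```python
def monthly_tco(infra, service_fees, eng_hours, hourly_rate):
    """Monthly total cost of ownership: infrastructure + fees + engineering time."""
    return infra + service_fees + eng_hours * hourly_rate

# Placeholder inputs for illustration only; substitute your own estimates.
self_managed = monthly_tco(infra=1_500, service_fees=0, eng_hours=40, hourly_rate=100)
managed = monthly_tco(infra=500, service_fees=3_000, eng_hours=10, hourly_rate=100)
print(self_managed, managed)  # 5500 4500

# Same model, but a team that needs only 25 hours/month to run its own stack.
print(monthly_tco(infra=1_500, service_fees=0, eng_hours=25, hourly_rate=100))  # 4000
```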

Integration Ecosystem and Community Support

The breadth and quality of integration ecosystem and community support significantly influence the long-term viability and effectiveness of workflow orchestration platforms. AI workflows typically require integration with diverse technologies including cloud services, databases, machine learning frameworks, monitoring tools, and specialized AI infrastructure components. The availability of pre-built integrations, community-contributed plugins, and comprehensive documentation directly impacts development velocity and solution reliability.

Airflow’s ecosystem represents one of its greatest strengths, with hundreds of operators and plugins providing integration with virtually every major cloud service, database system, and data processing framework. The platform’s mature provider system enables sophisticated integration patterns with services from AWS, Google Cloud, Microsoft Azure, and numerous specialized platforms. This extensive ecosystem is supported by a large, active community that continuously contributes new integrations, bug fixes, and feature enhancements. The Apache Software Foundation governance model ensures long-term project sustainability and vendor neutrality.

The community support for Airflow includes comprehensive documentation, extensive training resources, and active forums where users can obtain assistance with complex deployment scenarios and troubleshooting. The platform’s maturity and widespread adoption have resulted in substantial knowledge sharing through blogs, conferences, and professional training programs. This ecosystem provides significant value for organizations requiring extensive integration capabilities or operating in complex technology environments.

Prefect’s integration ecosystem, while newer and smaller than Airflow’s, focuses on providing high-quality integrations with the most commonly used services and frameworks in modern AI deployments. The platform’s integration library emphasizes developer experience and ease of use, with comprehensive testing and documentation that reduces implementation complexity. The company’s commercial focus enables dedicated support for key integrations while maintaining compatibility with the broader Python ecosystem.

The community around Prefect is growing rapidly, supported by active engagement from the company’s development team and increasing adoption among data and AI teams. The platform benefits from modern development practices including comprehensive testing, continuous integration, and responsive issue resolution. While the ecosystem is smaller than Airflow’s, its focus on quality and developer experience can, for many teams, compensate for the reduced breadth of available integrations.

Security and Compliance Considerations

Security and compliance requirements represent critical factors in enterprise AI deployments where sensitive data, proprietary models, and regulatory constraints must be carefully managed throughout the workflow orchestration process. The platforms’ approaches to authentication, authorization, data protection, and audit capabilities directly impact their suitability for regulated industries and security-conscious organizations.

Airflow’s security model has evolved significantly over its development history, with comprehensive support for role-based access control, integration with enterprise authentication systems, and sophisticated permission management capabilities. The platform provides extensive audit logging, encryption support for data in transit and at rest, and integration with secret management systems. These capabilities can support compliance with regulatory frameworks such as GDPR, HIPAA, and SOX. Compliance ultimately depends on how a deployment is configured and operated rather than on the orchestrator alone, but the platform retains the flexibility required for diverse deployment scenarios.

The platform’s approach to secret management includes integration with external systems such as HashiCorp Vault, cloud provider secret managers, and environment variable systems. This flexibility enables organizations to maintain consistent security practices across their technology stack while leveraging Airflow’s workflow orchestration capabilities. The platform’s extensive logging and monitoring capabilities provide the audit trails and compliance documentation required for regulated environments.
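Layered secret lookup can be sketched as a chain of backends queried in order. As we understand Airflow’s documented behaviour, a configured secrets backend is consulted first. Environment variables come next (named AIRFLOW_VAR_<KEY> for variables), followed by the metadata database. The sketch below mimics that ordering with dictionaries standing in for the vault and the database, so the backends and keys are hypothetical.

```python
import os

def make_lookup(*backends):
    """Return a function that asks each backend in order and returns the first hit."""
    def lookup(key):
        for backend in backends:
            value = backend(key)
            if value is not None:
                return value
        raise KeyError(key)
    return lookup

# Hypothetical stand-ins: a dict plays the external vault, env vars follow the
# AIRFLOW_VAR_<KEY> naming convention, and another dict plays the metadata DB.
fake_vault = {"model_registry_token": "from-vault"}
fake_metastore = {"feature_store_url": "from-metastore", "model_registry_token": "stale"}

get_secret = make_lookup(
    fake_vault.get,
    lambda key: os.environ.get(f"AIRFLOW_VAR_{key.upper()}"),
    fake_metastore.get,
)

os.environ["AIRFLOW_VAR_FEATURE_STORE_URL"] = "from-env"
print(get_secret("model_registry_token"))  # from-vault (shadows the stale DB copy)
print(get_secret("feature_store_url"))     # from-env
```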

Prefect’s security architecture emphasizes modern practices. Its hybrid model lets code and data stay inside the user’s own infrastructure while the orchestration API tracks run state, and it adds encryption and access control mechanisms. The platform’s cloud-native design enables integration with modern identity management systems. Prefect Cloud’s enterprise offerings add features such as role-based access control, single sign-on, and audit logging that align with contemporary security best practices.

The platform’s approach to data protection includes dedicated secret blocks whose values are obfuscated when displayed, along with encrypted (HTTPS) communication with Prefect Cloud. Careful handling of sensitive information throughout workflow execution is particularly important for AI workflows. This applies especially to those that process personal information, financial data, or other sensitive information requiring careful protection throughout the processing pipeline.
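One common safeguard is to mask known secret values before a log line is written. Airflow, for example, masks the values of sensitive connections and variables in task logs. The sketch below shows the general technique with a standard-library logging.Filter. The secret value and logger name are hypothetical, and output goes to an in-memory buffer so it can be inspected.

```python
import io
import logging

class RedactSecrets(logging.Filter):
    """Replace every registered secret value in a log message with '***'."""

    def __init__(self, secrets):
        super().__init__()
        self.secrets = [s for s in secrets if s]  # ignore empty strings

    def filter(self, record):
        message = record.getMessage()  # apply %-style args before masking
        for secret in self.secrets:
            message = message.replace(secret, "***")
        record.msg, record.args = message, None
        return True  # keep the (now redacted) record

buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.addFilter(RedactSecrets(["s3cr3t-api-key"]))

logger = logging.getLogger("pipeline")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # keep output confined to this handler

logger.info("Calling scoring API with key %s", "s3cr3t-api-key")
print(buffer.getvalue(), end="")  # Calling scoring API with key ***
```

Masking happens after the message is formatted, so a secret is caught whether it appears in the format string or in its arguments.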

Making the Strategic Choice

The selection between Apache Airflow and Prefect represents a strategic decision that should align with organizational priorities, technical requirements, and long-term AI infrastructure goals. Organizations with strong operational capabilities, extensive integration requirements, and preference for self-managed infrastructure may find Airflow’s proven capabilities and extensive ecosystem compelling. The platform’s maturity, comprehensive feature set, and vendor neutrality provide long-term stability and flexibility that appeal to enterprise environments with complex requirements.

Conversely, organizations prioritizing rapid development cycles, modern deployment practices, and reduced operational overhead may benefit significantly from Prefect’s developer-focused approach and cloud-native architecture. The platform’s emphasis on user experience, intelligent automation, and AI-specific workflow patterns can accelerate time-to-value for teams focused on machine learning outcomes rather than infrastructure management.

The decision should also consider the existing technical landscape, team expertise, and strategic technology directions within the organization. Teams with significant investment in Apache ecosystem technologies may find Airflow’s integration naturally complementary, while organizations embracing modern cloud-native practices may prefer Prefect’s architectural alignment with contemporary deployment patterns.

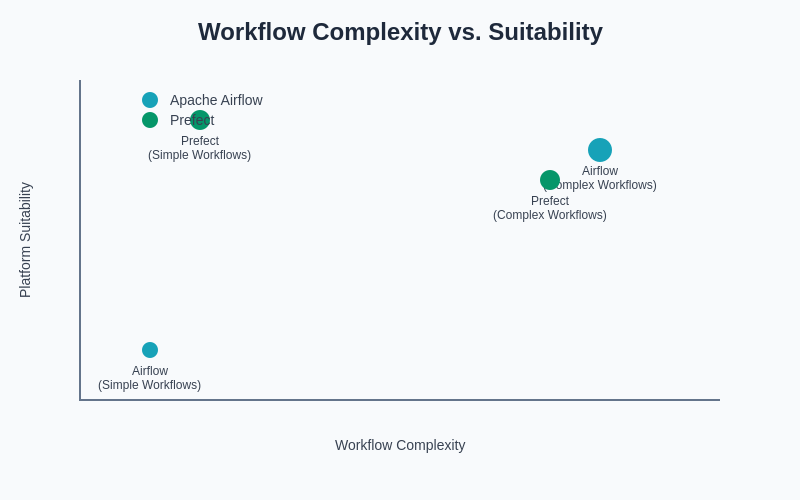

The relationship between workflow complexity and platform suitability reveals important considerations for platform selection. While both platforms can handle various complexity levels, their effectiveness varies significantly based on the specific characteristics of the workflow requirements and organizational constraints.

Ultimately, both platforms provide robust capabilities for AI workflow orchestration, with the optimal choice depending on the specific balance of requirements including functionality, operational preferences, cost considerations, and strategic alignment with organizational technology directions. The continued evolution of both platforms ensures that either choice can provide a foundation for sophisticated AI workflow orchestration capabilities that scale with organizational requirements and technological advancement.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of workflow orchestration technologies and their applications in AI environments. Readers should conduct their own research and consider their specific requirements when selecting workflow orchestration platforms. The effectiveness and suitability of these platforms may vary depending on specific use cases, organizational requirements, and technical constraints.