The convergence of artificial intelligence and embedded systems has reached a remarkable milestone with the emergence of machine learning capabilities on low-power microcontrollers like Arduino. This development, known as TinyML, represents a paradigm shift that brings intelligent decision-making directly to the edge of computing networks, enabling applications that were previously impractical in resource-constrained environments. The ability to run compact machine learning models on devices with only kilobytes of memory and modest clock speeds has opened new frontiers in Internet of Things applications, autonomous systems, and intelligent sensor networks.

The democratization of machine learning through accessible platforms like Arduino has made it possible for makers, researchers, and engineers to create intelligent systems that operate independently of cloud connectivity while consuming minimal power and maintaining real-time responsiveness.

Understanding TinyML and Edge Intelligence

TinyML represents the intersection of machine learning and embedded systems, focusing on ultra-low power implementations that can operate on microcontrollers with severe resource constraints. Unlike traditional machine learning deployments that require powerful servers or cloud infrastructure, TinyML models are specifically designed to function within the limitations of devices that typically have less than 1MB of memory and operate on battery power for extended periods. This approach to machine learning enables intelligence to be embedded directly into everyday objects, creating a new category of smart devices that can make autonomous decisions without requiring constant connectivity to external systems.

The fundamental principle behind TinyML lies in model optimization and quantization techniques that dramatically reduce computational requirements while maintaining acceptable accuracy. Through careful selection of algorithms, pruning of neural networks, and aggressive optimization of inference engines, developers can shrink models until they fit in tens or hundreds of kilobytes. This does not mean a model built for a server can simply be squeezed onto a microcontroller: TinyML models are usually small architectures designed for the task from the outset and then compressed further. This transformation has been enabled by advances in both hardware design and software optimization tools that specifically target the constraints of embedded systems.

The implications of this technology extend far beyond simple sensor applications. TinyML enables the creation of intelligent systems that can operate in environments where traditional computing approaches would be impractical due to power consumption, connectivity requirements, or physical constraints. From wildlife monitoring systems that can identify species calls in remote locations to predictive maintenance sensors that can detect signs of equipment failure before it occurs, the range of potential applications for embedded intelligence is broad.

Arduino Ecosystem for Machine Learning

The Arduino ecosystem has evolved dramatically to support machine learning applications, with new hardware platforms, software libraries, and development tools specifically designed for TinyML implementations. The Arduino Nano 33 BLE Sense is a particularly compelling platform for machine learning projects. It is built around the Nordic nRF52840, an Arm Cortex-M4F processor running at 64 MHz with 1 MB of flash and 256 KB of RAM, and it carries an array of sensors: a 9-axis inertial measurement unit (accelerometer, gyroscope, and magnetometer), barometric pressure, temperature, and humidity sensors, a digital microphone, and a combined gesture, proximity, light, and color sensor.

The integration of multiple sensing modalities on a single, affordable platform creates opportunities for sophisticated multi-sensor fusion applications that can extract meaningful insights from complex environmental data.

The software ecosystem supporting Arduino-based machine learning has expanded significantly with the introduction of specialized libraries and frameworks. TensorFlow Lite for Microcontrollers is available as an Arduino library, providing a streamlined path for deploying pre-trained models onto supported Arduino boards. Additionally, the Arduino_LSM9DS1 library provides access to inertial measurement unit data on the original Nano 33 BLE Sense (the Rev2 board uses a different IMU, supported by the Arduino_BMI270_BMM150 library), while the PDM library reads audio from the onboard digital microphone. These tools collectively create a comprehensive development environment that lowers the barrier to entry for implementing machine learning solutions on embedded systems.

The community-driven nature of the Arduino ecosystem has accelerated the development of machine learning applications through shared examples, tutorials, and open-source projects. This collaborative environment has produced numerous reference implementations that demonstrate best practices for model deployment, sensor integration, and power optimization on resource-constrained devices. The availability of these resources has significantly reduced the learning curve for developers transitioning from traditional software development to embedded machine learning.

TensorFlow Lite for Microcontrollers

TensorFlow Lite for Microcontrollers is Google's framework for bringing machine learning to the smallest computing devices. It is optimized for microcontrollers with only kilobytes of memory (its core runtime fits in roughly 16 KB on an Arm Cortex-M3, with the model and its working memory on top of that), which makes it a good fit for Arduino-based projects. The framework supports a subset of TensorFlow operations that have been selected and optimized for embedded deployment, so that models can execute efficiently within the tight constraints of microcontroller environments.

Deploying a model with TensorFlow Lite for Microcontrollers involves several optimization steps. A model trained in standard TensorFlow is first converted to the TensorFlow Lite format; for microcontrollers, this conversion usually includes post-training quantization from 32-bit floating-point to 8-bit integer representations, which uses a small representative dataset to calibrate the range of each tensor. Because each weight shrinks from four bytes to one, quantization reduces model size by approximately 75% while maintaining acceptable accuracy for most applications. The resulting .tflite file is then typically embedded in the program as a C array (for example, generated with xxd -i), and at run time the framework can use kernels optimized for specific architectures, such as CMSIS-NN on Arm Cortex-M processors.
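The quantization arithmetic behind that conversion can be illustrated with a short example. TensorFlow Lite's 8-bit scheme maps an integer q to a real value as scale * (q - zero_point). The portable C++ helpers below are hypothetical (they are not part of any TensorFlow API, and the converter performs this calibration itself); they show how a scale and zero point might be derived from an observed activation range and then used to quantize and dequantize values.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helpers for illustration; not part of any TensorFlow API.
// Affine int8 quantization: real_value = scale * (q - zero_point).
struct QuantParams {
  float scale;
  int32_t zero_point;
};

// Derive parameters from an observed [min_val, max_val] range. The range is
// widened to include 0.0 so that zero is exactly representable.
QuantParams chooseParams(float min_val, float max_val) {
  assert(min_val <= max_val);
  constexpr int32_t kQMin = -128;
  constexpr int32_t kQMax = 127;
  min_val = std::min(min_val, 0.0f);
  max_val = std::max(max_val, 0.0f);
  float scale = (max_val - min_val) / static_cast<float>(kQMax - kQMin);
  if (scale == 0.0f) scale = 1.0f;  // degenerate range: every value is zero
  int32_t zp = kQMin - static_cast<int32_t>(std::lround(min_val / scale));
  return {scale, std::clamp(zp, kQMin, kQMax)};
}

// Round to the nearest step, shift by the zero point, saturate to int8.
int8_t quantize(float x, const QuantParams& p) {
  int32_t q = static_cast<int32_t>(std::lround(x / p.scale)) + p.zero_point;
  return static_cast<int8_t>(std::clamp<int32_t>(q, -128, 127));
}

float dequantize(int8_t q, const QuantParams& p) {
  return p.scale * static_cast<float>(static_cast<int32_t>(q) - p.zero_point);
}
```

With an observed range of [0, 2.55], the scale is about 0.01 and the zero point is -128, so 1.0 is stored as -28 and values above 2.55 saturate at 127. Widening the range to include zero guarantees that 0.0 survives the round trip exactly.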

The framework includes a specialized interpreter designed for microcontroller deployment that minimizes memory allocation and computational overhead. Unlike traditional machine learning frameworks that assume abundant memory and processing power, the microcontroller interpreter operates within a pre-allocated memory arena and uses highly optimized kernel implementations specifically designed for embedded systems. This approach ensures predictable memory usage and deterministic execution times, both critical requirements for real-time embedded applications.
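The pre-allocated arena idea can be shown with a minimal bump allocator. In TensorFlow Lite for Microcontrollers, the application passes a statically allocated byte array (the tensor arena) to the interpreter. The class below is a hypothetical, simplified stand-in, not the framework's implementation: every allocation is carved from one fixed buffer, nothing is freed individually, and running out of space is detected when memory is set up rather than partway through inference.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical, simplified stand-in for a tensor arena; not the TFLM API.
// All memory comes from one caller-provided buffer, nothing is freed
// individually, and reset() releases everything at once.
class BumpArena {
 public:
  BumpArena(uint8_t* buffer, size_t size) : buf_(buffer), size_(size) {}

  // Returns a pointer whose offset from the buffer start is a multiple of
  // `align` (declare the buffer itself with alignas), or nullptr if the
  // request does not fit in the remaining space.
  void* allocate(size_t bytes, size_t align = 16) {
    assert(align > 0);
    size_t start = (used_ + align - 1) / align * align;  // round offset up
    if (start > size_ || bytes > size_ - start) return nullptr;
    used_ = start + bytes;
    return buf_ + start;
  }

  size_t used() const { return used_; }  // bytes in use; helps size the arena
  void reset() { used_ = 0; }

 private:
  uint8_t* buf_;
  size_t size_;
  size_t used_ = 0;
};
```

On a board, the buffer would typically be a global such as `alignas(16) static uint8_t tensor_arena[kArenaSize];`, where the hypothetical kArenaSize is tuned by checking how much of the arena is actually used.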

Hardware Considerations and Optimization

Successful implementation of machine learning on Arduino requires careful consideration of hardware limitations and optimization strategies. Memory management becomes critically important when working with models that may consume a significant portion of available RAM, leaving limited resources for application logic and sensor data processing. Effective strategies include careful selection of data types, efficient buffer management, and storing model weights as constant data in flash (program memory), so that RAM is reserved for the working memory that holds intermediate activations and for sensor buffers.
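Efficient buffer management for sensor data often comes down to a fixed-capacity circular buffer. The template below is a hypothetical helper written as portable C++: it keeps the most recent N samples in a statically sized array, so RAM use stays constant however long the device runs, and the window can be copied into a model's input tensor once it is full.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical helper for illustration. Fixed-capacity circular buffer: once
// full, each new sample overwrites the oldest, so RAM use never grows.
template <typename T, size_t N>
class RingBuffer {
  static_assert(N > 0, "capacity must be positive");

 public:
  void push(T value) {
    data_[head_] = value;
    head_ = (head_ + 1) % N;
    if (count_ < N) ++count_;
  }

  bool full() const { return count_ == N; }
  size_t size() const { return count_; }

  // i = 0 is the oldest retained sample; i = size() - 1 is the newest.
  T at(size_t i) const {
    assert(i < count_);
    size_t oldest = (head_ + N - count_) % N;
    return data_[(oldest + i) % N];
  }

 private:
  std::array<T, N> data_{};
  size_t head_ = 0;   // index where the next sample will be written
  size_t count_ = 0;  // number of valid samples, at most N
};
```

A typical pattern is to push one reading per sampling interval and run inference only when full() first returns true, or every fixed number of new samples after that for overlapping windows.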

Processing power optimization involves understanding the computational characteristics of different machine learning operations and selecting algorithms that align with microcontroller capabilities. Floating-point arithmetic, which is routine on desktop processors, must be emulated in software on microcontrollers without a floating-point unit (such as the ATmega328P on the Arduino Uno), and even on cores with a single-precision FPU, such as the Cortex-M4F, 8-bit integer kernels can process two or more values per instruction using DSP and SIMD instructions. Floating-point operations are therefore often replaced with integer or fixed-point alternatives. The Arduino ecosystem includes several microcontroller options with varying levels of processing power and specialized features that can be leveraged for machine learning applications.
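As an example of an integer-based alternative to floating point, the Q15 fixed-point format stores values in [-1, 1) as 16-bit integers. The helpers below are hypothetical (CMSIS-DSP defines its own q15_t type and optimized routines for Cortex-M); they show how a multiplication reduces to one integer multiply, a rounding add, and a shift.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helpers for illustration (CMSIS-DSP has its own q15_t and
// optimized routines). Q15: an int16_t value v represents v / 32768.
using q15_t = int16_t;

// Float-to-Q15 conversion, intended for setup code rather than the hot path.
q15_t toQ15(float x) {
  if (x >= 32767.0f / 32768.0f) return INT16_MAX;
  if (x <= -1.0f) return INT16_MIN;
  return static_cast<q15_t>(x * 32768.0f);  // truncates toward zero
}

// Integer-only multiply: the 32-bit product of two Q15 values is in Q30, so
// add half an output step for rounding and shift right by 15. Assumes an
// arithmetic right shift for negative values (guaranteed since C++20).
q15_t mulQ15(q15_t a, q15_t b) {
  int32_t prod = static_cast<int32_t>(a) * static_cast<int32_t>(b);
  prod = (prod + (1 << 14)) >> 15;
  if (prod > INT16_MAX) prod = INT16_MAX;  // only (-1) * (-1) overflows
  return static_cast<q15_t>(prod);
}
```

For instance, 0.5 is stored as 16384, and multiplying it by itself yields 8192, which represents 0.25. The saturation branch matters because -1 * -1 = +1 is not representable in Q15.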

Power consumption represents another critical consideration for battery-powered applications. Machine learning inference can be relatively power-hungry compared to traditional microcontroller applications, necessitating careful optimization of inference frequency, sleep modes, and sensor sampling rates. Effective power management strategies often involve implementing intelligent wake-up mechanisms that activate machine learning processing only when necessary, combined with aggressive power-down modes during idle periods.
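The effect of inference frequency and sleep modes on battery life can be estimated with simple duty-cycle arithmetic. The functions below form a hypothetical, idealized model: all current figures are assumptions to be replaced with measurements from the actual board, and real batteries deliver less than their rated capacity.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical, idealized power model for illustration. The device wakes
// every period_ms, runs sensing and inference for active_ms, then sleeps.
double averageCurrentMilliamps(double active_mA, double sleep_mA,
                               double active_ms, double period_ms) {
  assert(period_ms > 0.0 && active_ms >= 0.0 && active_ms <= period_ms);
  double duty = active_ms / period_ms;  // fraction of time spent awake
  return active_mA * duty + sleep_mA * (1.0 - duty);
}

// Ignores self-discharge, regulator losses, and capacity derating, so real
// battery life will be shorter than this estimate.
double batteryLifeHours(double capacity_mAh, double avg_mA) {
  assert(avg_mA > 0.0);
  return capacity_mAh / avg_mA;
}
```

For instance, a device assumed to draw 10 mA for 100 ms of inference every 10 seconds and 0.01 mA while asleep averages about 0.11 mA, so a 1000 mAh battery would last roughly 9,000 hours in this model, versus 100 hours if it drew 10 mA continuously.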

The selection of sensors and peripherals also significantly impacts the feasibility and performance of machine learning applications. High-resolution sensors may provide better input data for machine learning models but at the cost of increased power consumption and data processing requirements. Finding the optimal balance between sensor quality, power consumption, and model accuracy requires careful analysis of specific application requirements and thorough testing under realistic operating conditions.

Popular TinyML Applications

The practical applications of TinyML on Arduino platforms span numerous domains, each demonstrating unique advantages of embedded intelligence. Audio classification applications represent one of the most popular and accessible entry points into Arduino-based machine learning. These applications can identify specific sounds, such as breaking glass for security systems, machinery noise patterns for predictive maintenance, or bird calls for environmental monitoring. Combining a built-in microphone, such as the one on the Nano 33 BLE Sense, with optimized audio processing models creates systems that can listen continuously while consuming little power.

Motion recognition and gesture detection applications leverage accelerometer and gyroscope sensors, which are built into boards such as the Nano 33 BLE Sense and can be added to others as external modules. These systems can recognize specific movements, gestures, or activity patterns without requiring external connectivity or cloud processing. Applications range from fitness tracking devices that can identify different types of exercises to industrial safety systems that can detect falls or unusual movement patterns. The real-time nature of these applications demonstrates the value of edge-based processing for time-sensitive decision-making.
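Motion classifiers often work on short windows of IMU samples rather than on single readings. The function below is a hypothetical example of turning one axis of such a window into a few summary features (mean, standard deviation, minimum, maximum) that a small model could take as input. The feature choice is an assumption; many gesture models consume the raw window directly instead.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Hypothetical helper for illustration: summary statistics for one axis of
// a window of IMU samples, usable as a compact model input.
struct WindowFeatures {
  float mean;
  float stddev;  // population standard deviation
  float min_val;
  float max_val;
};

WindowFeatures windowFeatures(const float* x, size_t n) {
  assert(n > 0);
  float sum = 0.0f, lo = x[0], hi = x[0];
  for (size_t i = 0; i < n; ++i) {
    sum += x[i];
    lo = std::fmin(lo, x[i]);
    hi = std::fmax(hi, x[i]);
  }
  float mean = sum / static_cast<float>(n);
  // Second pass over the window for the variance (more accurate than a
  // single-pass sum of squares in float).
  float sq = 0.0f;
  for (size_t i = 0; i < n; ++i) {
    float d = x[i] - mean;
    sq += d * d;
  }
  return {mean, std::sqrt(sq / static_cast<float>(n)), lo, hi};
}
```

Computing these for each of the three accelerometer axes turns, for example, a 100-sample window (300 values) into 12 features, which shrinks both the model's input layer and the RAM needed to hold it.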

Environmental monitoring applications showcase the potential for deploying intelligent sensor networks that can make autonomous decisions based on complex environmental data. These systems can process multiple sensor inputs simultaneously, identifying patterns and anomalies that would be difficult to detect using traditional threshold-based approaches. Applications include smart agriculture systems that can optimize irrigation based on plant stress indicators, air quality monitoring systems that can identify pollution sources, and wildlife monitoring systems that can track animal behavior patterns.
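To make the contrast with fixed thresholds concrete, the class below keeps a running mean and variance with Welford's algorithm and flags readings more than k standard deviations from the mean, so the notion of "normal" adapts to each installation. It is a hypothetical, purely statistical baseline rather than a trained model; a TinyML deployment might replace the scoring step with, for example, an autoencoder's reconstruction error.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper for illustration: a statistical baseline, not a
// trained model. Welford's algorithm keeps a running mean and variance in
// constant memory, which suits a long-running sensor node.
class RunningStats {
 public:
  void update(float x) {
    ++n_;
    float delta = x - mean_;
    mean_ += delta / static_cast<float>(n_);
    m2_ += delta * (x - mean_);  // uses the updated mean
  }

  float mean() const { return mean_; }
  float variance() const {  // sample variance
    return n_ > 1 ? m2_ / static_cast<float>(n_ - 1) : 0.0f;
  }

  // True if x is more than k standard deviations from the running mean.
  bool isAnomaly(float x, float k) const {
    assert(k > 0.0f);
    float sd = std::sqrt(variance());
    return n_ > 1 && sd > 0.0f && std::fabs(x - mean_) > k * sd;
  }

 private:
  uint32_t n_ = 0;
  float mean_ = 0.0f;
  float m2_ = 0.0f;  // sum of squared deviations from the mean
};
```

In practice the statistics would be updated only with readings judged normal, so that a slowly developing fault does not quietly become the new baseline.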

The intersection of multiple sensing modalities with intelligent processing capabilities continues to enable new applications that were previously impossible with traditional embedded systems approaches.

Development Workflow and Tools

The development workflow for Arduino-based machine learning projects involves several distinct phases, each requiring specialized tools and techniques. The initial phase focuses on data collection and model development, typically performed using traditional machine learning frameworks such as TensorFlow or PyTorch running on desktop or cloud environments. This phase involves gathering training data, preprocessing inputs, designing model architectures, and training models using standard machine learning techniques.

Model optimization and conversion represent critical phases in the TinyML development workflow. Models trained using full-featured machine learning frameworks must be carefully optimized for deployment on resource-constrained devices. This optimization process includes quantization, pruning, and architecture simplification techniques that reduce model size and computational requirements while maintaining acceptable accuracy levels. The TensorFlow Lite converter provides automated tools for this optimization process, though manual tuning is often required to achieve optimal results.
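Pruning can be illustrated with its simplest form, one-shot magnitude pruning, which zeroes the weights with the smallest absolute values. The function below is a hypothetical helper that shows only that selection rule; tools such as the TensorFlow Model Optimization Toolkit (prune_low_magnitude) instead prune gradually during fine-tuning so the network can recover accuracy. Zeroed weights save flash only if the model is stored in a compressed form or executed with sparse kernels.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper for illustration: one-shot unstructured magnitude
// pruning. Zeroes the `sparsity` fraction of weights with the smallest
// absolute values and returns how many weights were zeroed.
size_t pruneByMagnitude(std::vector<float>& w, float sparsity) {
  assert(sparsity >= 0.0f && sparsity <= 1.0f);
  size_t k = std::min(w.size(), static_cast<size_t>(sparsity * w.size()));
  if (k == 0) return 0;

  // Find the k-th smallest magnitude without fully sorting.
  std::vector<float> mags(w.size());
  for (size_t i = 0; i < w.size(); ++i) mags[i] = std::fabs(w[i]);
  std::nth_element(mags.begin(), mags.begin() + (k - 1), mags.end());
  const float threshold = mags[k - 1];

  size_t zeroed = 0;
  for (float& v : w) {  // pass 1: everything strictly below the threshold
    if (std::fabs(v) < threshold) { v = 0.0f; ++zeroed; }
  }
  for (float& v : w) {  // pass 2: break ties at the threshold until k are zeroed
    if (zeroed < k && std::fabs(v) == threshold) { v = 0.0f; ++zeroed; }
  }
  return zeroed;
}
```

The two passes matter when several weights share the threshold magnitude: zeroing "anything at or below the threshold" in a single pass could stop at k before reaching a smaller weight later in the array.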

The deployment phase involves integrating optimized models into Arduino applications, managing memory allocation, and implementing inference logic. This phase requires understanding of both machine learning concepts and embedded systems programming, as developers must balance model performance with real-time requirements and resource constraints. Debugging and testing tools specifically designed for embedded systems become essential for identifying performance bottlenecks and optimizing system behavior.
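Part of the inference logic is turning the model's raw output into an application decision. The function below is a hypothetical example for a classifier with an int8 output tensor: it dequantizes each score with the tensor's scale and zero point (in TensorFlow Lite for Microcontrollers these are available as output->params.scale and output->params.zero_point), picks the highest-scoring class, and returns -1 when no class is confident enough.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical helper for illustration. label is -1 when no class is
// confident enough to act on.
struct Prediction {
  int label;
  float score;
};

Prediction decide(const int8_t* out, size_t n, float scale, int32_t zero_point,
                  float min_score) {
  assert(scale > 0.0f);
  int best = -1;
  float best_score = 0.0f;
  for (size_t i = 0; i < n; ++i) {
    // Dequantize: real = scale * (q - zero_point).
    float s = scale * static_cast<float>(static_cast<int32_t>(out[i]) - zero_point);
    if (best < 0 || s > best_score) {
      best = static_cast<int>(i);
      best_score = s;
    }
  }
  if (best < 0 || best_score < min_score) return {-1, best_score};
  return {best, best_score};
}
```

TensorFlow Lite quantizes int8 softmax outputs with a scale of 1/256 and a zero point of -128, so a raw value of 64 corresponds to a probability of 0.75. The confidence threshold itself is an application choice to be tuned on validation data.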

Testing and validation of TinyML applications require specialized approaches that account for the unique characteristics of embedded deployment. Unlike traditional software applications that can be easily modified and redeployed, embedded machine learning systems must be thoroughly tested under realistic operating conditions. This testing process includes validation of model accuracy under various environmental conditions, assessment of power consumption under different usage patterns, and evaluation of real-time performance characteristics.

Performance Optimization Strategies

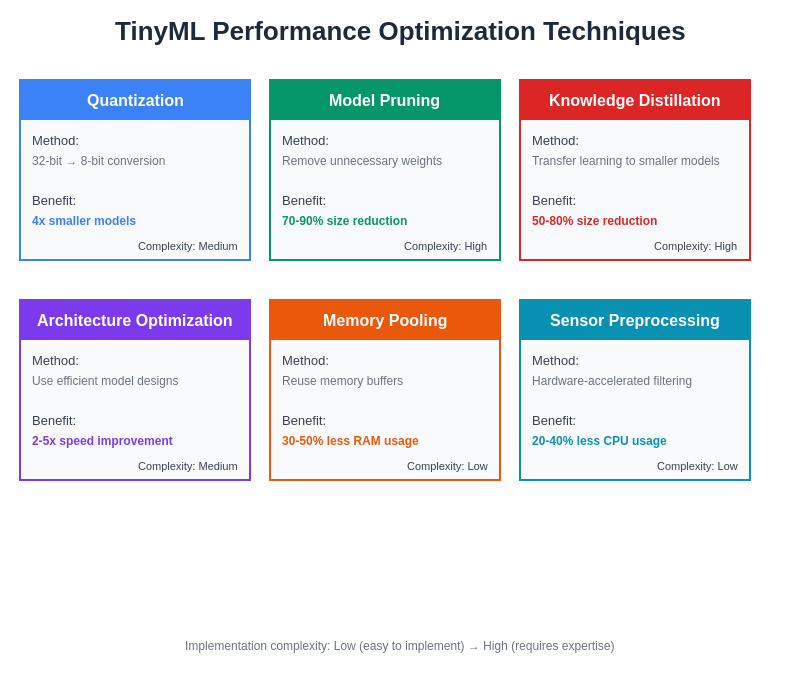

Optimizing machine learning performance on Arduino requires a multi-faceted approach that addresses both algorithmic and implementation considerations. Model architecture optimization involves selecting neural network designs that are inherently efficient for embedded deployment. Techniques such as depthwise separable convolutions, bottleneck architectures, and knowledge distillation can significantly reduce computational requirements while maintaining model effectiveness. The choice of activation functions, normalization techniques, and network depth all impact the feasibility of embedded deployment.
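The savings from depthwise separable convolutions follow from simple counting. The hypothetical helpers below compute weight counts (ignoring biases) and multiply-accumulate operations for a standard k x k convolution and for its depthwise separable replacement, assuming both produce the same output size.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helpers for illustration. Weight counts ignore biases;
// k is the kernel size, c_in and c_out the channel counts.
uint64_t standardConvParams(uint64_t k, uint64_t c_in, uint64_t c_out) {
  return k * k * c_in * c_out;  // one k x k x c_in filter per output channel
}

uint64_t separableConvParams(uint64_t k, uint64_t c_in, uint64_t c_out) {
  return k * k * c_in     // depthwise: one k x k filter per input channel
         + c_in * c_out;  // pointwise: 1 x 1 convolution that mixes channels
}

// Every weight is used once per output position, so
// MACs = weights * output height * output width.
uint64_t convMacs(uint64_t weights, uint64_t h_out, uint64_t w_out) {
  return weights * h_out * w_out;
}
```

For a 3 x 3 layer mapping 32 to 64 channels, the standard convolution has 18,432 weights while the separable version has 2,336, roughly 8x fewer; on a 16 x 16 output that is about 4.7 million versus 0.6 million multiply-accumulates.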

Quantization represents one of the most effective optimization techniques for reducing model size and improving inference speed on microcontrollers. Converting weights from 32-bit floating-point to 8-bit integer representations provides a 4x reduction in their storage size and significant improvements in inference speed on processors with efficient integer and SIMD instructions. More advanced schemes, such as mixed-precision quantization (for example, 16-bit activations with 8-bit weights), can keep layers that are sensitive to precision loss more accurate. Dynamic-range quantization, which stores weights as integers but still relies on floating-point math for activations at run time, is less common on microcontrollers, where fully integer models are the usual target.
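The speed benefit comes from keeping the inner loop in integers. The function below is a hypothetical, simplified version of one output of a quantized fully connected layer: int8 inputs and weights are multiplied into an int32 accumulator, and only the final result is rescaled to the output's scale and zero point. It follows TensorFlow Lite's conventions of symmetric weights (zero point 0) and int32 biases scaled by input_scale * weight_scale, but uses a float rescale for readability where optimized kernels such as CMSIS-NN use a fixed-point multiplier and shift.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

// Hypothetical helper for illustration: one output of a quantized fully
// connected layer. Weights are symmetric (zero point 0) and the bias is an
// int32 with scale x_scale * w_scale, following TFLite's int8 conventions.
int8_t quantizedDot(const int8_t* x, const int8_t* w, size_t n,
                    int32_t x_zp, int32_t bias,
                    float x_scale, float w_scale,
                    float out_scale, int32_t out_zp) {
  assert(out_scale > 0.0f);
  int32_t acc = bias;
  for (size_t i = 0; i < n; ++i) {
    // Integer-only inner loop: int8 x int8 products summed in int32.
    acc += (static_cast<int32_t>(x[i]) - x_zp) * static_cast<int32_t>(w[i]);
  }
  // Rescale once per output. Optimized kernels do this step with a
  // fixed-point multiplier and shift; float is used here for readability.
  float real = static_cast<float>(acc) * x_scale * w_scale;
  int32_t q = static_cast<int32_t>(std::lround(real / out_scale)) + out_zp;
  return static_cast<int8_t>(std::clamp<int32_t>(q, -128, 127));
}
```

Because the n multiply-accumulates dominate and the rescale happens once per output, the cost of the layer is essentially the cost of the int8 inner loop, which is exactly what DSP instructions such as SMLAD on the Cortex-M4 accelerate.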

Memory management optimization involves careful analysis of memory usage patterns and implementation of strategies to minimize peak memory requirements. Techniques such as in-place operations, memory pooling, and strategic tensor allocation can significantly reduce memory footprint. Understanding the memory access patterns of different operations enables optimization of data layout and caching strategies that improve performance on microcontroller architectures with little or no data cache.
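The value of strategic tensor allocation shows up even in a simple chain of layers. In a strictly sequential network only a layer's input and output must be alive at the same time, so activation buffers can be reused. The hypothetical functions below compare the peak requirement under that assumption with naively keeping every activation tensor; networks with skip connections or branches need a more general lifetime analysis, which the TensorFlow Lite for Microcontrollers memory planner performs.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helpers for illustration. tensor_bytes lists the size of the
// network input, then each layer's output, in execution order.

// Peak memory when buffers are reused: in a strictly sequential network only
// one layer's input and output are alive at a time.
size_t peakActivationBytes(const std::vector<size_t>& tensor_bytes) {
  size_t peak = tensor_bytes.empty() ? 0 : tensor_bytes.front();
  for (size_t i = 0; i + 1 < tensor_bytes.size(); ++i) {
    peak = std::max(peak, tensor_bytes[i] + tensor_bytes[i + 1]);
  }
  return peak;
}

// Baseline: every activation tensor gets its own buffer for the whole run.
size_t naiveActivationBytes(const std::vector<size_t>& tensor_bytes) {
  size_t total = 0;
  for (size_t b : tensor_bytes) total += b;
  return total;
}
```

For activation tensors of 3,072, 8,192, 4,096, and 1,024 bytes, buffer reuse needs 12,288 bytes at peak instead of 16,384; the gap grows with network depth.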

Preprocessing optimization can significantly impact overall system performance by reducing the computational burden during inference. Techniques such as sensor data fusion, feature extraction in hardware, and intelligent sampling strategies can reduce the amount of data that must be processed by machine learning models. Implementing preprocessing operations using dedicated hardware peripherals or optimized library functions can free up processing resources for machine learning inference.
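Sensor fusion at the preprocessing stage can be as simple as a complementary filter, which blends a gyroscope's smooth but drifting angle estimate with an accelerometer's noisy but drift-free tilt. The class below is a hypothetical example producing a single pitch angle that a model could consume instead of six raw IMU channels; the axis convention and the blending factor alpha are assumptions that depend on how the board is mounted and how fast it samples.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper for illustration. Axis conventions depend on how the
// IMU is mounted; this assumes pitch is rotation about the y axis.
class ComplementaryFilter {
 public:
  explicit ComplementaryFilter(float alpha) : alpha_(alpha) {
    assert(alpha >= 0.0f && alpha <= 1.0f);
  }

  // gyro_dps: angular rate about the pitch axis in degrees per second.
  // ax, ay, az: acceleration in g. dt_s: seconds since the last update.
  // Returns the fused pitch estimate in degrees.
  float update(float gyro_dps, float ax, float ay, float az, float dt_s) {
    constexpr float kRadToDeg = 57.2957795f;
    float accel_pitch = std::atan2(-ax, std::sqrt(ay * ay + az * az)) * kRadToDeg;
    // Short term: integrate the gyro. Long term: pull toward the accelerometer.
    pitch_ = alpha_ * (pitch_ + gyro_dps * dt_s) + (1.0f - alpha_) * accel_pitch;
    return pitch_;
  }

 private:
  float alpha_;
  float pitch_ = 0.0f;  // assumes the device starts roughly level
};
```

An alpha around 0.98 is a common starting point: the estimate follows the gyroscope over short intervals and is pulled back toward the accelerometer's tilt over longer ones, which cancels gyro drift.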

Power Management and Battery Life

Power consumption optimization represents a critical challenge for battery-powered Arduino machine learning applications. The computational requirements of machine learning inference can significantly impact battery life, necessitating careful analysis of power consumption patterns and implementation of aggressive power management strategies. Understanding the power characteristics of different operations enables developers to optimize inference frequency and processing intensity based on available power budget and application requirements.

Dynamic power management strategies involve implementing intelligent sleep modes and wake-up mechanisms that minimize power consumption during idle periods while maintaining responsiveness to important events. Machine learning applications can leverage predictive models to anticipate when inference will be needed, enabling proactive wake-up and sleep scheduling that optimizes power efficiency. Advanced power management techniques include voltage scaling, clock frequency adjustment, and selective peripheral power-down based on current operating requirements.

The selection of sensors and peripherals significantly impacts overall power consumption, as many sensors consume substantial power during active operation. Sensor fusion techniques that combine data from multiple low-power sensors can, in some applications, match the functionality of a single high-power sensor while reducing overall power consumption. Additionally, intelligent sampling strategies that adjust sampling rates based on detected activity levels can substantially extend battery life without sacrificing application performance.

Power optimization also involves careful consideration of inference scheduling and batch processing strategies. Rather than performing inference continuously, many applications can achieve equivalent functionality by implementing event-driven inference triggered by sensor thresholds or temporal patterns. This approach enables aggressive power savings during periods of low activity while maintaining rapid response to significant events.
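Event-driven inference can be implemented with a small gate in front of the model. The class below is a hypothetical helper: it runs the expensive classifier only when a cheap signal, such as acceleration magnitude or audio frame energy, crosses a threshold, and then enforces a cooldown so that a single event does not trigger repeated inferences. Timestamps are assumed to come from a 32-bit millisecond counter such as Arduino's millis().

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper for illustration: decides when to wake the expensive
// classifier based on a cheap signal and a cooldown period.
class InferenceTrigger {
 public:
  InferenceTrigger(float threshold, uint32_t cooldown_ms)
      : threshold_(threshold), cooldown_ms_(cooldown_ms) {}

  bool shouldRun(float signal, uint32_t now_ms) {
    if (signal < threshold_) return false;
    // Unsigned subtraction stays correct across the roughly 49.7-day
    // wraparound of a 32-bit millisecond counter.
    if (has_fired_ && now_ms - last_ms_ < cooldown_ms_) return false;
    has_fired_ = true;
    last_ms_ = now_ms;
    return true;
  }

 private:
  float threshold_;
  uint32_t cooldown_ms_;
  uint32_t last_ms_ = 0;
  bool has_fired_ = false;
};
```

Between triggers the processor can stay in a low-power mode and sample only the cheap signal; the threshold and cooldown values are application-specific and would be tuned on recorded data.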

Real-World Implementation Examples

The practical implementation of Arduino-based machine learning projects demonstrates the versatility and effectiveness of TinyML approaches across diverse application domains. A wildlife monitoring system developed for conservation research illustrates the potential for long-term autonomous operation in remote environments. This system combines audio classification models trained to recognize specific bird calls with environmental sensors that monitor habitat conditions. The system operates continuously for months on battery power while collecting valuable research data that would be impossible to gather using traditional monitoring approaches.

Industrial predictive maintenance applications showcase the value of embedded intelligence for continuous monitoring of critical equipment. These systems use vibration sensors and accelerometers to collect data on machinery operation, applying machine learning models to detect subtle changes that indicate impending failures. The real-time processing capabilities of Arduino-based systems enable immediate alerts and responses that can prevent costly equipment failures and production downtime.

Smart home applications demonstrate the potential for privacy-preserving intelligent systems that operate entirely without cloud connectivity. Voice command recognition systems running on Arduino can recognize a small vocabulary of wake words and commands while processing all audio locally, so raw recordings never leave the device. This avoids many of the privacy concerns associated with cloud-based voice assistants while still providing responsive interaction.

Agricultural monitoring systems illustrate the scalability of TinyML approaches for large-scale sensor networks. Multiple Arduino-based sensors distributed throughout agricultural fields can monitor soil moisture, plant health indicators, and environmental conditions while applying machine learning models to optimize irrigation and fertilization schedules. The low power consumption and autonomous operation capabilities of these systems enable cost-effective deployment across large areas without requiring extensive infrastructure investment.

Challenges and Limitations

Despite the remarkable capabilities of TinyML on Arduino platforms, several significant challenges and limitations must be considered when implementing machine learning solutions on resource-constrained devices. Model accuracy constraints represent a fundamental limitation, as the aggressive optimization required for embedded deployment often results in reduced accuracy compared to full-scale implementations. Finding the optimal balance between model complexity and deployment feasibility requires careful analysis of application requirements and thorough testing under realistic operating conditions.

Memory limitations impose strict constraints on model architecture and application complexity. Arduino boards range from the Uno, whose ATmega328P offers 32 KB of flash and 2 KB of SRAM, to the Nano 33 BLE Sense with 1 MB of flash and 256 KB of RAM, so even on the more capable boards developers must carefully manage memory allocation and weigh model sophistication against available resources. These constraints often necessitate simplified models or reduced feature sets compared to what would be possible with more capable hardware platforms.
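A rough feasibility check follows directly from these budgets. The function below is a hypothetical helper; the model, code, arena, and application RAM sizes used with it here are made-up figures for illustration, and real numbers come from the converted model file, the compiler's memory report, and the interpreter's measured arena usage.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper for illustration.
struct Board {
  const char* name;
  size_t flash_bytes;
  size_t ram_bytes;
};

const Board kArduinoUno{"Arduino Uno (ATmega328P)", 32 * 1024, 2 * 1024};
const Board kNano33BleSense{"Arduino Nano 33 BLE Sense (nRF52840)",
                            1024 * 1024, 256 * 1024};

// Rough check: weights plus program code must fit in flash, and the tensor
// arena plus the application's other RAM (buffers, stack, globals) in SRAM.
bool fits(const Board& b, size_t model_bytes, size_t code_bytes,
          size_t arena_bytes, size_t app_ram_bytes) {
  return model_bytes + code_bytes <= b.flash_bytes &&
         arena_bytes + app_ram_bytes <= b.ram_bytes;
}
```

With assumed sizes of a 60 KB model, 80 KB of code, a 30 KB tensor arena, and 20 KB of other RAM, the check fails on the Uno and passes comfortably on the Nano 33 BLE Sense.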

Processing power limitations affect the types of models and inference frequencies that are practical for real-time applications. Complex models may require several seconds or minutes to complete inference on Arduino hardware, limiting their applicability for time-sensitive applications. Understanding the computational characteristics of different model architectures enables developers to select approaches that align with real-time requirements while maintaining acceptable accuracy levels.

Development complexity represents another significant challenge, as TinyML development requires expertise in both machine learning and embedded systems programming. The interdisciplinary nature of this field necessitates understanding of optimization techniques, hardware constraints, and domain-specific requirements that may not be familiar to developers with backgrounds in only one of these areas. The limited debugging and profiling tools available for embedded systems further complicate the development and optimization process.

Future Directions and Emerging Trends

The future of machine learning on Arduino and similar microcontroller platforms promises significant advances in both hardware capabilities and software optimization techniques. Next-generation microcontrollers with dedicated machine learning acceleration units will provide substantial improvements in inference speed and power efficiency. These specialized processors include dedicated multiply-accumulate units, optimized memory architectures, and hardware support for common machine learning operations that will dramatically expand the capabilities of embedded AI systems.

Software framework evolution continues to improve the accessibility and performance of TinyML implementations. Advanced compiler optimization techniques, automated model optimization tools, and improved quantization algorithms will reduce the expertise required for embedded machine learning deployment while improving performance characteristics. The development of domain-specific languages and higher-level abstractions will further democratize access to TinyML capabilities for developers with limited machine learning expertise.

The integration of multiple sensing modalities and sensor fusion techniques will enable more sophisticated applications that can understand complex environmental conditions and user interactions. Advances in ultra-low-power sensor technologies, combined with improved machine learning algorithms for multi-modal data processing, will create opportunities for intelligent systems that can operate autonomously for years on battery power while providing rich interaction capabilities.

Edge computing ecosystem integration will create new opportunities for distributed intelligence networks where Arduino-based devices can collaborate with more powerful edge computing platforms. This hierarchical approach will enable sophisticated applications that combine local real-time processing with more complex analysis and decision-making performed on nearby edge computing resources, creating intelligent systems that are both responsive and capable of handling complex analytical tasks.

Conclusion and Practical Recommendations

The implementation of machine learning on Arduino platforms represents a transformative capability that enables intelligent behavior in previously static embedded systems. Success in TinyML development requires careful consideration of hardware constraints, thoughtful model optimization, and strategic application design that aligns with the unique characteristics of embedded deployment. Developers entering this field should begin with simple applications that demonstrate core concepts before progressing to more complex implementations that require advanced optimization techniques.

The selection of appropriate hardware platforms, development tools, and optimization strategies significantly impacts the success of Arduino-based machine learning projects. Understanding the trade-offs between different approaches enables informed decision-making that balances performance, power consumption, and development complexity based on specific application requirements. The rapidly evolving ecosystem of tools and frameworks continues to improve the accessibility and performance of TinyML implementations, making this technology increasingly practical for diverse applications.

The future potential of embedded machine learning extends far beyond current implementations, with emerging hardware capabilities and software optimizations promising to unlock new application domains and use cases. Developers who invest in understanding the fundamental principles and practical techniques of TinyML will be well-positioned to leverage these advancing capabilities as they become available, creating innovative solutions that bring intelligence to the edge of computing networks.

Disclaimer

This article is for educational and informational purposes only and does not constitute professional advice. The implementation of machine learning on embedded systems requires careful consideration of specific application requirements, hardware limitations, and safety considerations. Readers should conduct thorough testing and validation of any implementations before deploying them in production environments. The effectiveness and suitability of TinyML approaches may vary depending on specific use cases, hardware platforms, and environmental conditions.