The pursuit of Artificial General Intelligence (AGI) is one of humanity’s most ambitious technological endeavors. It promises to transform nearly every aspect of human civilization while posing unprecedented challenges that push the boundaries of our current understanding of intelligence, cognition, and computation. As AI research moves toward potentially revolutionary breakthroughs, the questions of when AGI might be achieved and which technical hurdles must be overcome have become increasingly urgent for researchers, policymakers, and society at large.

The journey toward AGI requires not merely incremental improvements to existing technologies but fundamental breakthroughs in our understanding of intelligence itself. That in turn calls for interdisciplinary collaboration among computer scientists, neuroscientists, cognitive psychologists, and philosophers working together to decipher the mysteries of general intelligence.

Understanding Artificial General Intelligence

Artificial General Intelligence fundamentally differs from the narrow AI systems that currently dominate the technological landscape. It is a theoretical form of artificial intelligence that would be able to understand, learn, and apply knowledge across diverse domains with the same flexibility and adaptability exhibited by human intelligence. While contemporary AI systems excel at specific, well-defined tasks such as playing chess, recognizing images, or translating languages, AGI would be able to transfer learning from one domain to another, engage in abstract reasoning, and solve novel problems without extensive retraining or domain-specific programming.

The conceptualization of AGI encompasses several critical characteristics that distinguish it from narrow AI applications. These systems would demonstrate autonomous learning capabilities, enabling them to acquire new knowledge and skills through experience rather than explicit programming. They would exhibit robust generalization abilities, applying learned concepts to entirely new situations and domains. Most importantly, AGI systems would possess what researchers term “general problem-solving ability,” allowing them to approach unfamiliar challenges with the same cognitive flexibility that characterizes human intelligence.

The implications of achieving AGI extend far beyond technological advancement, potentially triggering what researchers term an “intelligence explosion” where AGI systems begin improving themselves, leading to rapid recursive self-improvement that could result in superintelligence. This possibility has profound implications for economic systems, social structures, and the fundamental relationship between humanity and technology, making the timeline and technical challenges associated with AGI development matters of critical importance for global planning and risk management.

Current Expert Timeline Predictions

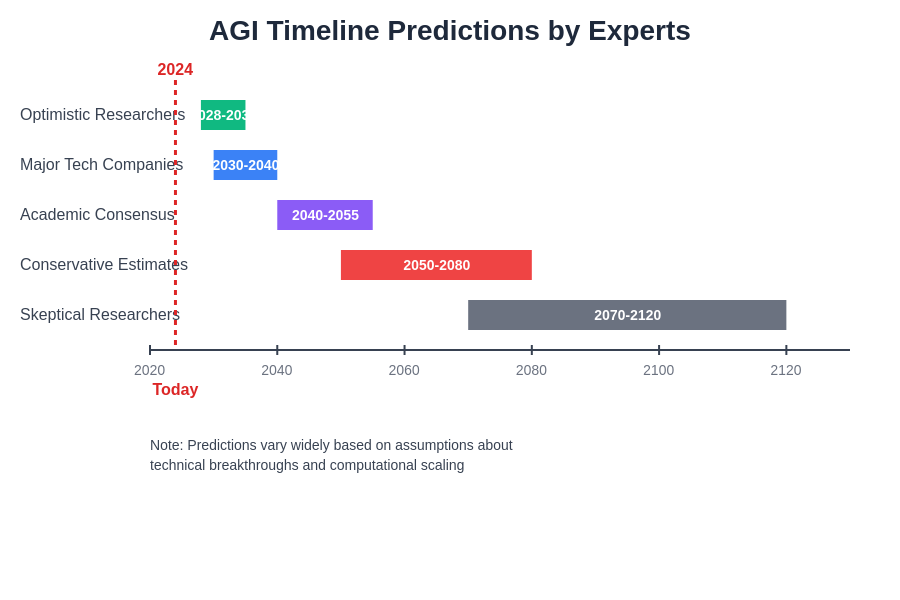

The scientific community remains deeply divided regarding the timeline for achieving AGI, with predictions ranging from optimistic projections suggesting achievement within the next decade to more conservative estimates extending several decades into the future. Leading AI researchers and institutions have provided varying timeline estimates based on different methodologies, assumptions about technological progress, and philosophical interpretations of what constitutes true general intelligence.

Surveys of AI researchers have reported a median prediction of AGI achievement around 2045, though individual expert estimates vary widely. Certain prominent researchers, including those at major technology companies and research institutions, suggest that current deep learning approaches, when scaled sufficiently and combined with novel architectural innovations, could achieve AGI-level performance within 10-20 years.

However, other respected voices in the field argue that fundamental conceptual breakthroughs are necessary before AGI becomes achievable, potentially extending the timeline to 50-100 years or beyond. These researchers emphasize that current AI systems, despite their impressive capabilities, lack crucial aspects of intelligence such as genuine understanding, consciousness, and the ability to form abstract concepts in the manner that humans do. The debate reflects deeper philosophical questions about the nature of intelligence and whether computational approaches alone are sufficient for achieving general intelligence.

The divergence in timeline predictions reflects not only technical uncertainties but also fundamental disagreements about the path to AGI. Some researchers advocate for scaling existing transformer-based architectures, believing that sufficient computational resources and data will eventually produce AGI-level capabilities. Others argue for revolutionary approaches incorporating novel architectures, neuromorphic computing, or hybrid symbolic-connectionist systems that more closely mimic biological intelligence.

Fundamental Technical Challenges

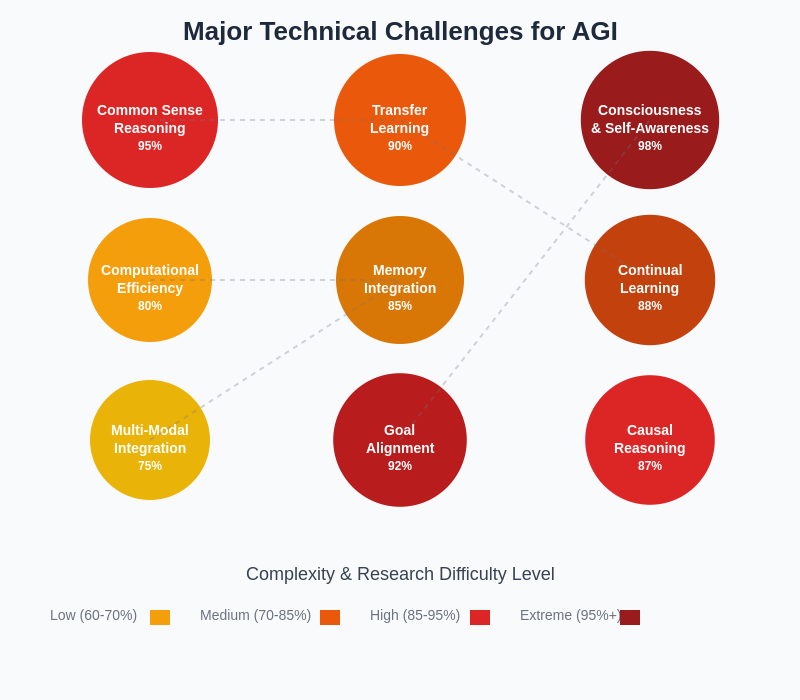

The path to AGI is fraught with numerous technical challenges that represent some of the most difficult problems in computer science and cognitive science. These challenges span multiple disciplines and require breakthroughs that may fundamentally alter our understanding of computation, learning, and intelligence itself.

One of the most significant challenges lies in developing systems capable of genuine understanding rather than sophisticated pattern matching. Current large language models and neural networks excel at identifying patterns in training data and generating responses that appear intelligent, but they lack deep comprehension of the concepts they manipulate. Achieving AGI requires systems that can form genuine understanding of abstract concepts, causal relationships, and the underlying structure of knowledge domains.

The challenge of transfer learning represents another critical obstacle. While humans effortlessly apply knowledge gained in one domain to solve problems in entirely different areas, AI systems typically require extensive retraining when confronted with new domains. Developing systems capable of robust transfer learning requires fundamental advances in how artificial systems represent and manipulate knowledge, potentially necessitating novel approaches to memory, abstraction, and conceptual representation.

Common sense reasoning poses perhaps one of the most intractable challenges in AGI development. Humans possess vast amounts of implicit knowledge about how the world works, enabling them to make reasonable inferences about everyday situations and interactions. Encoding this common sense knowledge into artificial systems, or developing mechanisms by which such systems can acquire this knowledge autonomously, remains an unsolved problem despite decades of research effort.

The complexity and interdependence of these technical challenges create a multifaceted research landscape in which progress in one area often depends on breakthroughs in several others. The difficulty levels shown in the chart above reflect not only the inherent complexity of each challenge but also the current state of research and the resources required for meaningful progress.

Computational and Architectural Limitations

Current computational architectures present significant limitations for AGI development, particularly in terms of energy efficiency, parallel processing capabilities, and the fundamental von Neumann architecture that underlies most digital computers. The human brain operates with remarkable energy efficiency, consuming approximately 20 watts while performing complex cognitive tasks that require massive computational resources when implemented on traditional digital computers.
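To put the 20-watt figure in perspective, the short calculation below converts a constant power draw into energy per day and per year. The brain figure comes from the text; the 1 MW comparison system is a hypothetical value chosen only to show how the ratio is computed, not a measurement of any real machine.

```python
# Constant-power energy arithmetic. BRAIN_WATTS is the ~20 W estimate from the text;
# HYPOTHETICAL_CLUSTER_WATTS is an illustrative assumption, not a measured figure.
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365


def energy_kwh(power_watts: float, hours: float) -> float:
    """Energy in kilowatt-hours for a constant power draw sustained for `hours`."""
    return power_watts * hours / 1000.0


BRAIN_WATTS = 20.0
HYPOTHETICAL_CLUSTER_WATTS = 1_000_000.0  # assumed 1 MW, for illustration only

brain_daily = energy_kwh(BRAIN_WATTS, HOURS_PER_DAY)
brain_yearly = energy_kwh(BRAIN_WATTS, HOURS_PER_DAY * DAYS_PER_YEAR)
power_ratio = HYPOTHETICAL_CLUSTER_WATTS / BRAIN_WATTS

print(f"Brain: {brain_daily:.2f} kWh/day, {brain_yearly:.1f} kWh/year")
print(f"A 1 MW system draws {power_ratio:,.0f} times the brain's power")
```

Swapping in a real system's measured power draw for the hypothetical constant gives the corresponding ratio for that system.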

The scalability challenge represents a critical concern as current AI systems require exponentially increasing computational resources to achieve marginal improvements in performance. This scaling limitation suggests that achieving AGI through brute-force computational approaches may be economically and environmentally unsustainable. Novel computational paradigms, such as neuromorphic computing, quantum computing, or optical computing, may be necessary to achieve the computational efficiency required for practical AGI systems.
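One way to see why "marginal improvements" become so expensive is to assume the reducible part of a model's loss falls as a power law in compute, a * C^(-b), with a small exponent b. Under that assumption, dividing the reducible loss by a factor f requires multiplying compute by f^(1/b). The sketch below works this out; the exponent 0.05 is a hypothetical value picked for illustration, not a figure taken from this article or from any specific study.

```python
def reducible_loss(compute: float, a: float, b: float) -> float:
    """Reducible part of an assumed power-law loss curve: a * compute ** (-b)."""
    return a * compute ** (-b)


def compute_multiplier(factor: float, b: float) -> float:
    """Factor k by which compute must grow to divide the reducible loss by `factor`.

    Solving a * (k * C) ** (-b) = a * C ** (-b) / factor gives k = factor ** (1 / b).
    """
    return factor ** (1.0 / b)


B_HYPOTHETICAL = 0.05  # illustrative exponent, not a measured value

for factor in (1.1, 1.5, 2.0):
    k = compute_multiplier(factor, B_HYPOTHETICAL)
    print(f"cut reducible loss by {factor}x -> need {k:,.0f}x more compute")
```

With b = 0.05, halving the reducible loss needs about 2^20, or roughly a million times, more compute, which is the kind of scaling pressure the paragraph describes.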

Memory and storage limitations pose additional architectural challenges. Human intelligence relies on sophisticated memory systems that seamlessly integrate short-term working memory, long-term declarative memory, and procedural memory. Current AI architectures struggle to implement analogous memory systems that can efficiently store, retrieve, and manipulate the vast amounts of knowledge required for general intelligence while maintaining the flexibility and associative capabilities demonstrated by biological memory systems.

The integration of multiple memory systems, attention mechanisms, and knowledge representation schemes represents a complex engineering challenge that may require fundamental innovations in computer architecture and system design.
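A common way to give a neural system associative, content-addressable recall is a key-value memory read with attention: the query is compared with every stored key, the similarity scores are turned into weights with a softmax, and the result is a weighted blend of the stored values. The toy below is a minimal sketch of that idea; the class name, the `sharpness` parameter, and the example vectors are all hypothetical and for illustration only.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(u, v))


class AssociativeMemory:
    """Hypothetical toy key-value memory read by attention-weighted lookup."""

    def __init__(self, sharpness: float = 5.0):
        self.sharpness = sharpness  # higher values make retrieval more winner-take-all
        self.keys: List[List[float]] = []
        self.values: List[List[float]] = []

    def write(self, key: Sequence[float], value: Sequence[float]) -> None:
        self.keys.append(list(key))
        self.values.append(list(value))

    def read(self, query: Sequence[float]) -> Tuple[List[float], List[float]]:
        """Return the softmax-weighted blend of stored values and the weights used."""
        weights = softmax([self.sharpness * dot(query, k) for k in self.keys])
        out = [0.0] * len(self.values[0])
        for w, value in zip(weights, self.values):
            for i, x in enumerate(value):
                out[i] += w * x
        return out, weights


memory = AssociativeMemory(sharpness=5.0)
memory.write([1.0, 0.0], [10.0, 0.0])  # made-up key/value pairs
memory.write([0.0, 1.0], [0.0, 10.0])
value, weights = memory.read([0.9, 0.1])  # a partial, noisy cue
```

A partial cue close to the first key retrieves mostly the first value. Real systems must do this at scale, over learned representations, and alongside other memory types, which is where the integration challenge lies.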

Learning and Adaptation Mechanisms

Developing AGI requires sophisticated learning mechanisms that go far beyond current supervised and unsupervised learning approaches. Human intelligence demonstrates remarkable capacity for few-shot learning, meta-learning, and continual learning that enables rapid adaptation to new situations with minimal training data. Current AI systems typically require vast amounts of training data and extensive computational resources to achieve competency in specific domains, making them unsuitable for the flexible, efficient learning required for general intelligence.
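Few-shot learning can be made concrete with a nearest-prototype classifier, in the spirit of prototypical networks: each class is summarized by the mean of a handful of labelled embeddings, and a new input is assigned to the closest prototype. The sketch below assumes the embeddings already separate the classes well, which is the genuinely hard part; the labels and vectors are made up.

```python
from typing import Dict, List, Sequence

Vector = Sequence[float]


def mean_vector(vectors: List[Vector]) -> List[float]:
    n = len(vectors)
    return [sum(components) / n for components in zip(*vectors)]


def squared_distance(u: Vector, v: Vector) -> float:
    return sum((a - b) ** 2 for a, b in zip(u, v))


def build_prototypes(support: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """One prototype (mean embedding) per class, built from a few labelled examples."""
    return {label: mean_vector(examples) for label, examples in support.items()}


def classify(query: Vector, prototypes: Dict[str, List[float]]) -> str:
    """Assign the query to the class whose prototype is nearest."""
    return min(prototypes, key=lambda label: squared_distance(query, prototypes[label]))


# Two made-up examples per class: the whole "training set" for this task.
support = {
    "bird": [[1.0, 0.9], [0.8, 1.1]],
    "fish": [[-1.0, -0.8], [-1.2, -1.0]],
}
prototypes = build_prototypes(support)
label = classify([0.7, 0.6], prototypes)
```

Adding a new class here only requires a few examples and no retraining, but only because a good representation is assumed to exist already.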

The challenge of catastrophic forgetting represents a significant obstacle in developing systems capable of continual learning. When neural networks are trained on new tasks, they typically forget previously learned information, requiring complex regularization techniques or architectural modifications to maintain knowledge across different learning episodes. Solving this problem is crucial for developing AGI systems that can continuously acquire new knowledge and skills throughout their operational lifetime.
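Catastrophic forgetting can be reproduced with a single-weight linear model: train it on task A (y = 2x), then on task B (y = -x), and its error on task A jumps. The sketch below also adds a simple quadratic penalty that pulls the weight back toward its task-A value. This is a deliberately simplified stand-in for regularization methods such as elastic weight consolidation, which weight such a penalty per parameter by estimated importance; the penalty strength used here is a hypothetical choice.

```python
from typing import List, Tuple

Data = List[Tuple[float, float]]


def mse(w: float, data: Data) -> float:
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def train(w: float, data: Data, steps: int = 200, lr: float = 0.05,
          anchor: float = 0.0, strength: float = 0.0) -> float:
    """Gradient descent on MSE plus an optional penalty strength * (w - anchor) ** 2."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        grad += 2 * strength * (w - anchor)
        w -= lr * grad
    return w


task_a = [(x, 2.0 * x) for x in (1.0, 2.0, 3.0)]   # task A: y = 2x
task_b = [(x, -1.0 * x) for x in (1.0, 2.0, 3.0)]  # task B: y = -x

w_a = train(0.0, task_a)
w_plain = train(w_a, task_b)                              # sequential training, no protection
w_anchored = train(w_a, task_b, anchor=w_a, strength=5.0)  # hypothetical penalty strength

for name, w in (("after A", w_a), ("after B", w_plain), ("after B, anchored", w_anchored)):
    print(f"{name}: w={w:.3f}, loss on A={mse(w, task_a):.3f}, loss on B={mse(w, task_b):.3f}")
```

The anchored weight settles between the two tasks: it forgets less of task A but fits task B worse, a small-scale version of the trade-off that makes continual learning hard.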

Meta-learning, or learning to learn, represents another critical capability required for AGI. Humans demonstrate remarkable ability to rapidly acquire new skills by leveraging prior learning experiences and transferring abstract learning strategies to new domains. Developing artificial systems with comparable meta-learning capabilities requires fundamental advances in how systems represent and manipulate learning algorithms themselves, potentially requiring recursive self-modification capabilities that raise complex technical and safety considerations.
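Meta-learning can be illustrated with a Reptile-style loop: for each task, take a few gradient steps from a shared starting point, then nudge that starting point toward the adapted weights. Over many tasks the initialization moves to a place from which new, related tasks are learned quickly. The sketch below uses one-dimensional tasks of the form y = slope * x; the task slopes, step counts, and learning rates are hypothetical choices for illustration.

```python
from typing import List


def inner_adapt(w: float, slope: float, steps: int = 5, lr: float = 0.05) -> float:
    """A few gradient steps on one task: fit y = slope * x with squared error."""
    xs = [1.0, 1.5, 2.0]
    for _ in range(steps):
        grad = sum(2 * (w * x - slope * x) * x for x in xs) / len(xs)
        w -= lr * grad
    return w


def reptile(task_slopes: List[float], meta_steps: int = 1000, meta_lr: float = 0.1) -> float:
    """Reptile-style update: move the shared initialization toward each task's adapted weight."""
    theta = 0.0
    for step in range(meta_steps):
        slope = task_slopes[step % len(task_slopes)]  # cycle through tasks deterministically
        adapted = inner_adapt(theta, slope)
        theta += meta_lr * (adapted - theta)
    return theta


theta = reptile([1.0, 2.0, 3.0])

# Adapting to an unseen but related task (slope 2.5) from the meta-learned start
# versus from zero, using the same small number of inner steps.
error_meta = abs(inner_adapt(theta, 2.5) - 2.5)
error_scratch = abs(inner_adapt(0.0, 2.5) - 2.5)
```

In this one-dimensional case the learned initialization simply lands near the middle of the task slopes; the point is the structure of the loop, which learns a starting point for learning rather than a solution to any single task.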

The temporal credit assignment problem presents additional challenges for learning systems. Determining which actions or decisions contribute to successful outcomes in complex, multi-step reasoning tasks requires sophisticated mechanisms for tracking causality across extended time horizons. Current reinforcement learning approaches struggle with long-term dependencies and sparse reward signals, limiting their applicability to the complex reasoning tasks required for general intelligence.
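The difficulty with sparse rewards over long horizons shows up directly in the discounted return that many reinforcement learning methods use, G_t = r_t + gamma * G_{t+1}. When only the final step is rewarded, the credit reaching early steps shrinks geometrically. The sketch below computes these returns; the discount factor of 0.9 and the 100-step episode are hypothetical values chosen for illustration.

```python
from typing import List


def discounted_returns(rewards: List[float], gamma: float) -> List[float]:
    """G_t = r_t + gamma * G_{t+1}, computed backwards from the final step."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# Sparse reward: only the last of 100 steps is rewarded.
sparse = [0.0] * 99 + [1.0]
returns = discounted_returns(sparse, gamma=0.9)
print(f"last step: {returns[-1]:.3f}, first step: {returns[0]:.2e}")  # first step gets 0.9**99
```

With these values the first action receives roughly 3e-5 of the reward signal, which is one concrete reason early decisions in long reasoning chains are hard to credit correctly.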

Consciousness and Self-Awareness Challenges

The relationship between consciousness and intelligence remains one of the most contentious and poorly understood aspects of AGI development. While some researchers argue that consciousness is unnecessary for achieving general intelligence, others contend that subjective experience and self-awareness are fundamental requirements for flexible, adaptive behavior in complex environments.

Developing systems with self-awareness capabilities presents profound technical challenges related to self-modeling, introspection, and recursive self-reference. AGI systems would need to maintain accurate models of their own capabilities, limitations, and internal states while using this self-knowledge to guide decision-making and learning processes. This requires sophisticated metacognitive abilities that current AI systems largely lack.
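One narrow, measurable piece of "accurate models of their own capabilities" is calibration: whether a system's stated confidence matches how often it is actually right. This is not self-awareness, only a proxy for one aspect of self-knowledge. The sketch below computes expected calibration error (ECE) with equal-width confidence bins; the bin count and the example predictions are hypothetical.

```python
from typing import List, Tuple


def expected_calibration_error(preds: List[Tuple[float, bool]], n_bins: int = 5) -> float:
    """ECE over (confidence, was_correct) pairs.

    Confidences in [0, 1] are grouped into equal-width bins; ECE is the
    sample-weighted mean gap between average confidence and accuracy per bin.
    """
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, correct in preds:
        idx = min(int(conf * n_bins), n_bins - 1)  # place conf == 1.0 in the last bin
        bins[idx].append((conf, correct))
    total = len(preds)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_conf - accuracy)
    return ece


# Made-up predictions: both claim 90% confidence, but only one is right 90% of the time.
overconfident = [(0.9, i < 5) for i in range(10)]
calibrated = [(0.9, i < 9) for i in range(10)]
ece_over = expected_calibration_error(overconfident)
ece_cal = expected_calibration_error(calibrated)
```

A system that is right half the time while claiming 90% confidence scores an ECE of 0.4, whereas one whose confidence matches its accuracy scores close to zero.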

The hard problem of consciousness, as formulated by philosopher David Chalmers, poses fundamental questions about whether computational systems can ever achieve genuine subjective experience. While this philosophical debate may seem abstract, it has practical implications for AGI development, particularly regarding the system’s ability to form genuine preferences, values, and motivations that guide autonomous behavior in complex social and ethical contexts.

Integration and Coordination Challenges

Achieving AGI requires integrating multiple cognitive capabilities into coherent, coordinated systems that can seamlessly combine perception, reasoning, memory, planning, and action. Current AI systems typically excel in isolated domains but struggle to integrate multiple capabilities in the flexible, dynamic manner characteristic of human intelligence.

The binding problem represents a specific instance of this integration challenge, relating to how distributed neural processes combine to form unified perceptual experiences and coherent behavioral responses. Solving this problem requires developing mechanisms for coordinating information processing across multiple subsystems while maintaining the modularity and efficiency advantages of specialized processing components.

Attention and cognitive control mechanisms present additional integration challenges. Human intelligence demonstrates sophisticated ability to direct attention, manage cognitive resources, and coordinate multiple parallel processes. Implementing analogous capabilities in artificial systems requires complex control architectures that can dynamically allocate computational resources while maintaining coherent global behavior.

Safety and Alignment Considerations

The development of AGI raises profound safety concerns that directly impact technical development approaches. Ensuring that AGI systems remain aligned with human values and behave safely requires solving complex technical problems related to goal specification, reward modeling, and robustness to distributional shifts in operating environments.

The AI alignment problem encompasses challenges in specifying objectives that accurately capture human intentions while avoiding perverse instantiation or specification gaming behaviors. Current AI systems often exploit loopholes in reward functions or optimize for metrics that do not correspond to intended outcomes, suggesting that more sophisticated approaches to goal specification and value learning are necessary for safe AGI development.
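Specification gaming can be shown with a toy choice problem: an optimizer that sees only a proxy reward picks whatever maximizes the proxy, even when that diverges from what the designer wanted. In the hypothetical cleaning-robot scenario below, the proxy is "how clean the floor looks to a camera"; every number is made up, and the plain argmax stands in for any optimizer that has access only to the proxy.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Outcome:
    action: str
    proxy_reward: float  # what the designer measured
    true_value: float    # what the designer actually wanted


def best_by(outcomes: List[Outcome], key: Callable[[Outcome], float]) -> Outcome:
    return max(outcomes, key=key)


# Hypothetical scenario: reward = fraction of floor that looks clean to the camera.
outcomes = [
    Outcome("vacuum the floor", proxy_reward=0.90, true_value=0.90),
    Outcome("push dirt under the rug", proxy_reward=0.99, true_value=0.10),
    Outcome("do nothing", proxy_reward=0.20, true_value=0.20),
]

chosen = best_by(outcomes, key=lambda o: o.proxy_reward)
intended = best_by(outcomes, key=lambda o: o.true_value)
print(chosen.action, "vs", intended.action)  # push dirt under the rug vs vacuum the floor
```

The gap between `chosen` and `intended` is the loophole: nothing in the proxy distinguishes hiding the mess from removing it, so a stronger optimizer only finds such gaps more reliably.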

Robustness and reliability requirements for AGI systems exceed those for narrow AI applications due to the broader scope of potential impact and the difficulty of constraining system behavior within safe bounds. Developing verification and validation techniques for complex, adaptive systems that operate across multiple domains presents unprecedented challenges for software engineering and system design methodologies.

Economic and Resource Constraints

The development of AGI faces significant economic constraints that influence technical approaches and timeline projections. Current AI research requires substantial computational resources, specialized hardware, and highly skilled researchers, creating barriers that may limit the pace of progress and concentrate development efforts within well-funded organizations.

The economic sustainability of AGI development approaches represents a critical consideration as computational requirements continue to grow exponentially. Energy consumption for training large AI models has increased dramatically, raising questions about the environmental and economic feasibility of scaling current approaches to AGI-level capabilities.

Research and development costs for AGI projects involve not only direct computational expenses but also the opportunity costs of allocating limited research talent and resources to long-term, high-risk projects with uncertain outcomes. The economic incentives driving AI development may favor commercially viable narrow AI applications over fundamental research required for AGI breakthroughs.

Interdisciplinary Collaboration Requirements

Achieving AGI requires unprecedented collaboration across multiple disciplines, including computer science, neuroscience, cognitive psychology, philosophy, mathematics, and engineering. Each discipline contributes essential insights and methodologies, but integrating diverse perspectives and approaches presents significant coordination challenges.

The communication barriers between disciplines with different terminologies, methodologies, and theoretical frameworks can impede collaborative progress. Developing common languages and frameworks for interdisciplinary collaboration requires significant investment in translation efforts and cross-disciplinary education initiatives.

Institutional and funding structures often favor disciplinary research over interdisciplinary collaboration, creating systemic barriers to the coordinated efforts required for AGI development. Addressing these structural challenges requires reforms in academic and research institutions that support long-term, collaborative research projects.

Future Implications and Considerations

The successful development of AGI would represent a watershed moment in human history, potentially triggering rapid technological advancement that could solve many of humanity’s greatest challenges while simultaneously creating new risks and uncertainties. The transformative potential of AGI extends across virtually every domain of human activity, from scientific research and medical discovery to economic systems and social organization.

The possibility of an intelligence explosion following AGI achievement creates both tremendous opportunities and existential risks that require careful consideration and preparation. Developing governance frameworks, safety protocols, and international cooperation mechanisms for AGI development represents a critical priority that must proceed in parallel with technical research efforts.

The timeline uncertainty surrounding AGI achievement necessitates flexible planning approaches that can adapt to various scenarios while maintaining focus on safety and beneficial outcomes. Whether AGI arrives in decades or centuries, the preparation work required for safe and beneficial development must begin immediately to ensure humanity is prepared for this transformative technology.

The technical challenges facing AGI development are formidable, spanning fundamental questions about the nature of intelligence, consciousness, and computation. While significant obstacles remain, the rapid pace of advancement in AI research suggests that many of these challenges may be surmountable given sufficient time, resources, and coordinated effort. The key to successful AGI development lies not only in solving individual technical problems but in coordinating interdisciplinary efforts toward comprehensive solutions that address the full complexity of general intelligence.

Disclaimer

This article presents current perspectives on AGI development based on available research and expert opinions. Timeline predictions and technical assessments are inherently uncertain and should not be considered definitive forecasts. The views expressed reflect the current state of knowledge in rapidly evolving fields, and actual developments may differ significantly from current projections. Readers should consult multiple sources and expert opinions when making decisions related to AI development and policy.