Machine learning is changing how test cases are generated. Traditional manual testing, while thorough, struggles to keep pace with modern development cycles that demand rapid deployment, broad coverage, and continuous quality assurance. Machine learning-powered test case generation aims to address this scalability problem while matching, and in some cases exceeding, the quality standards established by manual testing.

The convergence of artificial intelligence and software testing has created unprecedented opportunities for enhancing testing efficiency, improving bug detection rates, and reducing the overall cost of quality assurance in software development projects.

The Evolution of Software Testing Methodologies

Traditional software testing has long relied on human expertise to design test cases, identify edge cases, and validate software functionality across diverse scenarios. This manual approach, while comprehensive in many aspects, faces significant limitations when applied to modern software systems characterized by complex architectures, frequent updates, and extensive feature sets. The time-intensive nature of manual test case creation often becomes a bottleneck in agile development environments where rapid iteration and continuous deployment are essential for maintaining competitive advantage.

Machine learning has emerged as a transformative solution to these challenges by introducing intelligent automation capabilities that can analyze code patterns, understand software behavior, and generate comprehensive test suites with minimal human intervention. This technological advancement represents more than mere automation; it embodies a fundamental shift toward intelligent testing systems that can adapt, learn, and improve their effectiveness over time based on accumulated testing data and outcomes.

The integration of machine learning into test case generation has also broadened access to sophisticated testing methods that were previously practical only for organizations with extensive testing resources and expertise. Small development teams and individual developers can now use capabilities that approach those of large enterprise testing environments, which helps raise software quality across the industry.

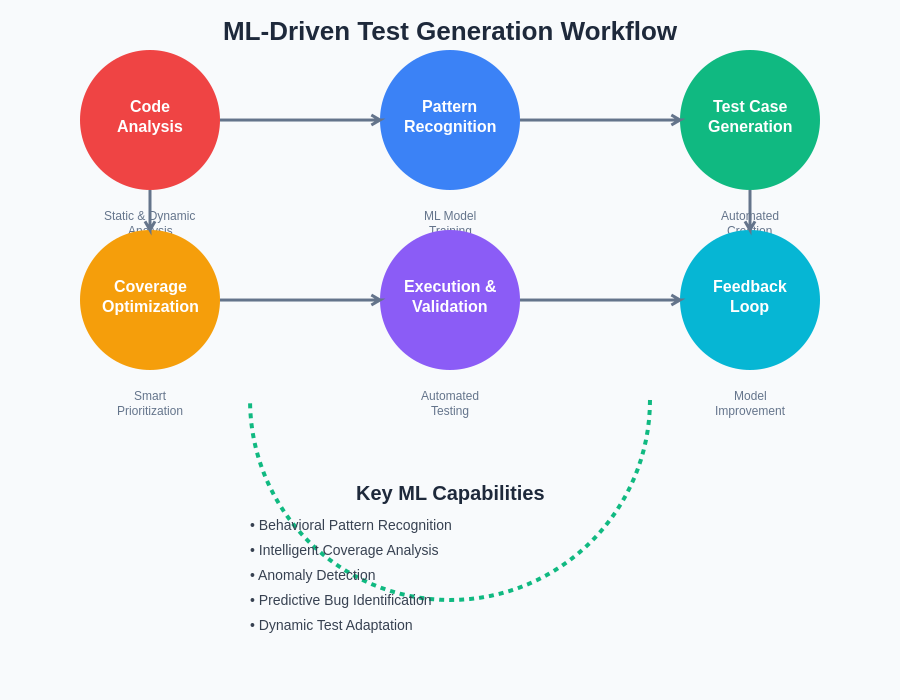

The machine learning-enhanced testing workflow represents a fundamental shift from traditional linear testing approaches to an intelligent, adaptive system that continuously learns and improves its test generation capabilities based on accumulated experience and feedback from real-world testing outcomes.

Understanding Machine Learning in Testing Context

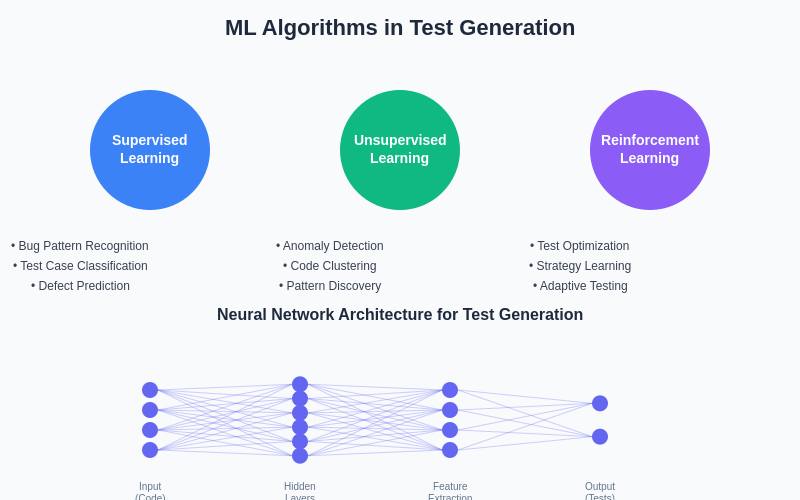

Machine learning applications in software testing leverage various algorithmic approaches to analyze source code, execution patterns, and historical testing data to generate effective test cases. These systems employ supervised learning techniques to understand relationships between code structures and potential failure modes, unsupervised learning to identify anomalous behavior patterns, and reinforcement learning to optimize test case selection and prioritization strategies based on their effectiveness in detecting bugs and ensuring comprehensive coverage.
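As a rough illustration of the reinforcement learning idea, test prioritization can be framed as a multi-armed bandit: each test is an arm, and the reward is whether running it exposed a failure. The sketch below uses an epsilon-greedy policy. The test names and failure rates are invented, and the environment is simulated; a real system would observe actual test outcomes instead.

```python
import random

def prioritize(failure_rates, rounds=5000, epsilon=0.1, seed=42):
    """Rank tests by their learned failure-detection value (epsilon-greedy bandit)."""
    rng = random.Random(seed)
    names = list(failure_rates)
    pulls = {n: 0 for n in names}
    value = {n: 0.0 for n in names}  # running mean reward per test
    for _ in range(rounds):
        if rng.random() < epsilon:
            choice = rng.choice(names)                    # explore
        else:
            choice = max(names, key=lambda n: value[n])   # exploit
        # Simulated outcome: reward 1.0 if the test "fails", i.e. finds a bug.
        reward = 1.0 if rng.random() < failure_rates[choice] else 0.0
        pulls[choice] += 1
        value[choice] += (reward - value[choice]) / pulls[choice]
    return sorted(names, key=lambda n: value[n], reverse=True)

# Hypothetical per-test failure probabilities, unknown to the policy itself.
simulated_rates = {"test_login": 0.05, "test_payment": 0.30, "test_profile": 0.02}
order = prioritize(simulated_rates)
print(order)
```

The policy only sees rewards, never the underlying rates, so the ranking it produces reflects what it learned from repeated runs.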

The fundamental principle underlying machine learning-based test generation involves training models on large datasets of existing code bases, test cases, and associated outcomes to develop an understanding of software behavior patterns and potential vulnerability areas. These trained models can then analyze new code bases and automatically generate test cases that target the most likely failure scenarios while ensuring comprehensive coverage of critical functionality paths.

The synergy between human domain knowledge and machine learning capabilities creates testing frameworks that are both comprehensive and efficient, addressing the dual challenges of coverage and maintainability in modern software testing environments.

Technological Foundations and Algorithms

The implementation of machine learning-driven test case generation relies on several key technological foundations that enable the analysis and understanding of software systems. Natural language processing techniques are employed to analyze code comments, documentation, and requirement specifications to understand intended functionality and identify potential testing scenarios. Static code analysis combined with machine learning algorithms enables the identification of code patterns that are statistically associated with higher defect rates, allowing test generation systems to prioritize these areas for more intensive testing.
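To make the static analysis step concrete, here is a minimal sketch that extracts simple per-function features from Python source using the standard `ast` module. The choice of features (branch count and argument count) and the sample functions are assumptions for illustration; a production system would likely use a richer feature set.

```python
import ast

# Node types counted as decision points (an illustrative, simplified choice).
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.Try, ast.BoolOp, ast.ExceptHandler)

def function_features(source):
    """Return {function_name: {"branches": int, "args": int}} for each function."""
    features = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.FunctionDef):
            branches = sum(isinstance(n, BRANCH_NODES) for n in ast.walk(node))
            features[node.name] = {"branches": branches, "args": len(node.args.args)}
    return features

sample = '''
def simple(x):
    return x + 1

def risky(a, b):
    if a > b and b > 0:
        for i in range(a):
            if i % 2:
                b += i
    return b
'''

feats = function_features(sample)
print(feats)
```

Feature vectors like these could then be fed to a model that estimates defect risk, so that test generation concentrates on functions such as `risky` before `simple`.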

Dynamic analysis techniques integrated with machine learning models provide insights into runtime behavior patterns that can inform test case generation strategies. These approaches monitor software execution during development and testing phases to identify frequently executed code paths, performance bottlenecks, and resource utilization patterns that should be covered by comprehensive test suites. The combination of static and dynamic analysis provides a holistic view of software behavior that informs more effective test case generation.

Graph neural networks have emerged as particularly effective tools for analyzing software dependency structures and control flow patterns to generate test cases that exercise complex interaction scenarios. These neural network architectures can understand the relationships between different software components and generate test cases that specifically target integration points and component interactions where bugs are statistically more likely to occur.
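The core operation in a graph neural network is message passing, where each node updates its representation from its neighbors. The sketch below is not a trained GNN: it performs a single round of mean aggregation with no learned weights, on a hypothetical dependency graph with made-up per-module risk scores, purely to show how risk information can flow along dependency edges and highlight integration points.

```python
def undirected_neighbors(edges):
    """Build a neighbor map, treating each dependency edge as bidirectional."""
    neighbors = {}
    for src, dst in edges:
        neighbors.setdefault(src, set()).add(dst)
        neighbors.setdefault(dst, set()).add(src)
    return neighbors

def propagate(risk, edges, self_weight=0.5):
    """One round of mean-aggregation message passing over the graph."""
    neighbors = undirected_neighbors(edges)
    updated = {}
    for node, own in risk.items():
        near = neighbors.get(node, set())
        if near:
            avg = sum(risk[n] for n in near) / len(near)
            updated[node] = self_weight * own + (1 - self_weight) * avg
        else:
            updated[node] = own
    return updated

# Hypothetical module dependency edges and initial risk scores.
edges = [("ui", "api"), ("api", "auth"), ("api", "db")]
risk = {"ui": 0.1, "api": 0.2, "auth": 0.9, "db": 0.3}

scores = propagate(risk, edges)
# Rank integration points (edges) by the combined score of their endpoints.
integration_order = sorted(edges, key=lambda e: scores[e[0]] + scores[e[1]], reverse=True)
print(integration_order)
```

In this toy graph the `api`/`auth` boundary ranks first, which would make it the first candidate for generated integration tests.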

The application of different machine learning paradigms to test case generation creates a comprehensive approach that leverages supervised learning for pattern recognition, unsupervised learning for anomaly detection, and reinforcement learning for adaptive optimization of testing strategies.

Code Coverage Analysis and Optimization

Traditional code coverage metrics, while useful, often fail to capture the true quality of test suites in terms of their ability to detect bugs and ensure software reliability. Machine learning-enhanced coverage analysis goes beyond simple line coverage to analyze the semantic significance of different code paths and prioritize test case generation for areas that are more likely to contain defects or exhibit unexpected behavior under various conditions.

Machine learning models trained on historical bug data can predict which areas of code are most susceptible to defects based on factors such as code complexity, change frequency, developer experience, and architectural patterns. This predictive capability enables test generation systems to allocate testing resources more effectively by focusing on areas with higher defect probability while still maintaining baseline coverage across the entire codebase.
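A minimal sketch of such a defect predictor is a logistic regression over two of the factors mentioned above, code complexity and change frequency. The training rows, module names, and feature scaling below are invented for illustration, and the model is trained with plain stochastic gradient descent rather than any particular library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(rows, labels, lr=0.1, epochs=2000):
    """Fit logistic regression weights with stochastic gradient descent."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            pred = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = pred - y
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias

def defect_probability(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Hypothetical history: (normalized complexity, normalized change frequency).
history = [(0.1, 0.2), (0.2, 0.1), (0.3, 0.3), (0.7, 0.8), (0.9, 0.6), (0.8, 0.9)]
had_defect = [0, 0, 0, 1, 1, 1]

weights, bias = train_logistic(history, had_defect)

# Hypothetical modules to rank for test generation effort.
modules = {"billing.py": (0.85, 0.9), "utils.py": (0.15, 0.1)}
ranked = sorted(modules, key=lambda m: defect_probability(weights, bias, modules[m]),
                reverse=True)
print(ranked)
```

The ranking, rather than the raw probabilities, is what a test generator would typically consume: higher-ranked modules receive more generated tests, while lower-ranked ones keep baseline coverage.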

Intelligent coverage optimization also considers the cost-benefit ratio of different test cases, generating suites that maximize defect detection capability while minimizing execution time and resource requirements. This optimization is particularly valuable in continuous integration environments where test execution time directly impacts development velocity and deployment frequency.
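One simple way to express this cost-benefit trade-off is a greedy selection that picks tests by estimated detection value per second until a time budget is exhausted. This is a heuristic sketch, not an optimal solver; the `CandidateTest` type, the scores, and the runtimes are assumptions, and in practice the scores would come from a trained model.

```python
from dataclasses import dataclass

@dataclass
class CandidateTest:
    name: str
    detect_score: float  # estimated chance of catching a defect (assumed given)
    runtime_s: float     # expected execution time in seconds

def select_tests(tests, budget_s):
    """Greedily pick tests with the best score-per-second within the budget."""
    chosen, used = [], 0.0
    by_value = sorted(tests, key=lambda t: t.detect_score / t.runtime_s, reverse=True)
    for t in by_value:
        if used + t.runtime_s <= budget_s:
            chosen.append(t)
            used += t.runtime_s
    return chosen

candidates = [
    CandidateTest("full_checkout_flow", 0.9, 30.0),
    CandidateTest("cart_totals", 0.5, 5.0),
    CandidateTest("search_ranking", 0.4, 20.0),
    CandidateTest("health_check", 0.2, 1.0),
]

selected = [t.name for t in select_tests(candidates, budget_s=30.0)]
print(selected)
```

Note that the highest-scoring test is skipped here because it would consume the whole budget on its own; whether that is acceptable depends on the pipeline, and a real system might reserve slots for critical tests.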

Behavioral Pattern Recognition

One of the most sophisticated applications of machine learning in test case generation involves the recognition and modeling of behavioral patterns within software systems. These systems analyze execution traces, user interaction patterns, and system state transitions to understand how software behaves under normal operating conditions and identify scenarios that deviate from expected behavior patterns.

Anomaly detection algorithms play a crucial role in this process by identifying unusual execution patterns that may indicate the presence of bugs or potential security vulnerabilities. Machine learning models trained on normal system behavior can generate test cases specifically designed to explore edge cases and boundary conditions that might not be obvious to human testers but represent potential failure scenarios.
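As a deliberately simple stand-in for the anomaly detection models described here, the sketch below fits a baseline from normal observations and flags values that deviate by more than three standard deviations. The latency figures and endpoint names are invented; real systems typically use richer features and models than a single z-score.

```python
import statistics

def fit_baseline(values):
    """Summarize normal behavior as (mean, sample standard deviation)."""
    return statistics.mean(values), statistics.stdev(values)

def is_anomalous(value, mean, stdev, threshold=3.0):
    """Flag values whose z-score exceeds the threshold."""
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > threshold

# Hypothetical latencies (ms) recorded during normal operation.
normal_latencies_ms = [100, 102, 98, 101, 99, 103, 97, 100]
mean, stdev = fit_baseline(normal_latencies_ms)

# Hypothetical new observations per endpoint.
observed = {"checkout": 101, "search": 250, "login": 99}
suspects = [name for name, v in observed.items() if is_anomalous(v, mean, stdev)]
print(suspects)
```

Endpoints flagged this way are natural targets for generated edge-case and boundary tests.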

The recognition of behavioral patterns also enables the generation of test cases that simulate realistic user interaction scenarios rather than isolated functional tests. This approach results in more comprehensive testing that better reflects actual software usage patterns and is more likely to identify issues that would be encountered by end users in production environments.
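One common way to model user interaction patterns is a first-order Markov chain learned from recorded sessions, which can then be sampled to produce realistic multi-step scenarios. The sketch below assumes sessions are available as lists of page names; the session data and the `<end>` marker are hypothetical.

```python
import random
from collections import Counter, defaultdict

END = "<end>"  # sentinel marking the end of a session

def learn_transitions(sessions):
    """Count observed transitions between consecutive steps in each session."""
    model = defaultdict(Counter)
    for steps in sessions:
        for cur, nxt in zip(steps, steps[1:] + [END]):
            model[cur][nxt] += 1
    return model

def generate_scenario(model, start, rng, max_steps=20):
    """Sample a path by following transitions in proportion to their counts."""
    path = [start]
    while len(path) < max_steps:
        options = model.get(path[-1])
        if not options:
            break
        nxt = rng.choices(list(options), weights=list(options.values()), k=1)[0]
        if nxt == END:
            break
        path.append(nxt)
    return path

# Hypothetical recorded user sessions.
sessions = [
    ["home", "search", "product", "cart", "checkout"],
    ["home", "search", "product"],
    ["home", "product", "cart"],
]

model = learn_transitions(sessions)
rng = random.Random(7)
scenarios = [generate_scenario(model, "home", rng) for _ in range(5)]
for s in scenarios:
    print(" -> ".join(s))
```

Each generated path uses only transitions that were actually observed, so the resulting scenarios stay close to real usage while still recombining steps in ways no single recorded session did.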

Automated Test Data Generation

The generation of appropriate test data represents a significant challenge in traditional testing approaches, often requiring extensive manual effort to create datasets that adequately exercise software functionality across diverse scenarios. Machine learning approaches to test data generation can analyze code requirements, database schemas, and existing data patterns to automatically generate test datasets that provide comprehensive coverage while maintaining realistic data characteristics.

Synthetic data generation using machine learning techniques enables the creation of large-scale test datasets that preserve the statistical properties of production data while avoiding privacy and security concerns associated with using actual user data for testing purposes. These approaches are particularly valuable for testing data-intensive applications where comprehensive testing requires diverse and voluminous datasets that would be impractical to create manually.
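A minimal sketch of this idea: summarize a sample of real records into aggregate statistics, then draw new records from those statistics so that no original row is reused. The record fields (`amount`, `country`) and the sample values are hypothetical, and the sketch assumes a roughly normal numeric column and independent columns, which real data often violates.

```python
import random
import statistics
from collections import Counter

def fit_profile(records):
    """Capture aggregate statistics only; individual rows are not retained."""
    amounts = [r["amount"] for r in records]
    return {
        "mean": statistics.mean(amounts),
        "stdev": statistics.stdev(amounts),
        "countries": Counter(r["country"] for r in records),
    }

def generate(profile, n, seed=0):
    """Draw n synthetic records matching the profile's marginal distributions."""
    rng = random.Random(seed)
    names = list(profile["countries"])
    weights = [profile["countries"][c] for c in names]
    rows = []
    for _ in range(n):
        amount = max(0.0, rng.gauss(profile["mean"], profile["stdev"]))
        country = rng.choices(names, weights=weights, k=1)[0]
        rows.append({"amount": round(amount, 2), "country": country})
    return rows

# Hypothetical stand-in for a production sample.
production_sample = [
    {"amount": 20.0, "country": "DE"}, {"amount": 35.0, "country": "FR"},
    {"amount": 50.0, "country": "DE"}, {"amount": 65.0, "country": "US"},
    {"amount": 80.0, "country": "DE"}, {"amount": 50.0, "country": "FR"},
]

profile = fit_profile(production_sample)
synthetic = generate(profile, 1000)
print(synthetic[:3])
```

Sampling from aggregates reduces privacy exposure but does not by itself guarantee it; small or highly skewed source samples can still leak information.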

The continuous evolution of data generation techniques provides testing teams with increasingly sophisticated tools for creating comprehensive and realistic testing environments.

Integration with Continuous Integration Pipelines

The practical implementation of machine learning-driven test case generation requires seamless integration with existing development workflows and continuous integration pipelines. Modern test generation systems are designed to operate as integral components of automated build and deployment processes, continuously analyzing code changes and generating appropriate test cases to validate new functionality and ensure that existing features remain unaffected by recent modifications.

This integration enables dynamic test suite adaptation where test cases are automatically updated and expanded in response to code changes, ensuring that testing remains comprehensive and relevant throughout the development lifecycle. The ability to automatically generate regression test suites that focus on areas potentially affected by recent changes represents a significant advancement over static test suites that may become outdated as software evolves.
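The simplest form of change-focused regression selection needs no learned model at all: given a map from each test to the files it exercises, select the tests that touch any changed file. The sketch below assumes such a coverage map already exists (for example, collected from a previous instrumented run); the file and test names are hypothetical.

```python
def select_regression_tests(coverage_map, changed_files):
    """Return tests whose covered files overlap the set of changed files."""
    changed = set(changed_files)
    return sorted(
        test for test, files in coverage_map.items() if changed & set(files)
    )

# Hypothetical mapping from test name to source files it exercises.
coverage_map = {
    "test_cart": ["cart.py", "pricing.py"],
    "test_login": ["auth.py"],
    "test_checkout": ["cart.py", "payment.py"],
}

to_run = select_regression_tests(coverage_map, ["pricing.py", "payment.py"])
print(to_run)
```

A machine learning layer would typically sit on top of this, ranking the selected tests or adding tests for files that are likely to be affected indirectly.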

Real-time test generation capabilities also enable immediate feedback during the development process, allowing developers to identify and address potential issues before they propagate through the development pipeline. This early detection capability significantly reduces the cost and complexity of bug resolution while maintaining high quality standards throughout the development process.

Quality Metrics and Validation

The effectiveness of machine learning-generated test cases must be rigorously validated through comprehensive quality metrics that go beyond traditional coverage measurements. Mutation testing techniques combined with machine learning analysis provide insights into the actual bug-detection capability of generated test suites by introducing controlled defects and measuring the ability of test cases to identify these artificial bugs.
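The mutation score itself is easy to illustrate: it is the fraction of mutants for which at least one test produces a result different from the expected one. In the sketch below the function under test, the hand-written mutants, and both test suites are hypothetical; real mutation tools generate mutants automatically from the source.

```python
def original(age):
    return age >= 18

# Hand-written mutants of `original`, each introducing one small defect.
mutants = {
    "ge_to_gt": lambda age: age > 18,
    "ge_to_le": lambda age: age <= 18,
    "const_18_to_21": lambda age: age >= 21,
}

def mutation_score(mutants, test_suite):
    """Return (fraction of mutants killed, names of killed mutants)."""
    killed = [
        name for name, mutant in mutants.items()
        if any(mutant(x) != expected for x, expected in test_suite)
    ]
    return len(killed) / len(mutants), killed

# Each test is (input, expected output); both suites pass on `original`.
weak_suite = [(30, True), (5, False)]
strong_suite = weak_suite + [(18, True)]  # adds the boundary value

weak_score, weak_killed = mutation_score(mutants, weak_suite)
strong_score, strong_killed = mutation_score(mutants, strong_suite)
print(weak_score, strong_score)
```

Both suites give the same line coverage of `original`, yet only the one with the boundary case kills every mutant, which is exactly the gap that coverage metrics alone do not reveal.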

Statistical analysis of test case effectiveness over time enables continuous improvement of test generation algorithms through feedback loops that incorporate real-world testing outcomes into model training processes. This continuous learning approach ensures that test generation systems become more effective over time as they accumulate experience with different types of software systems and defect patterns.

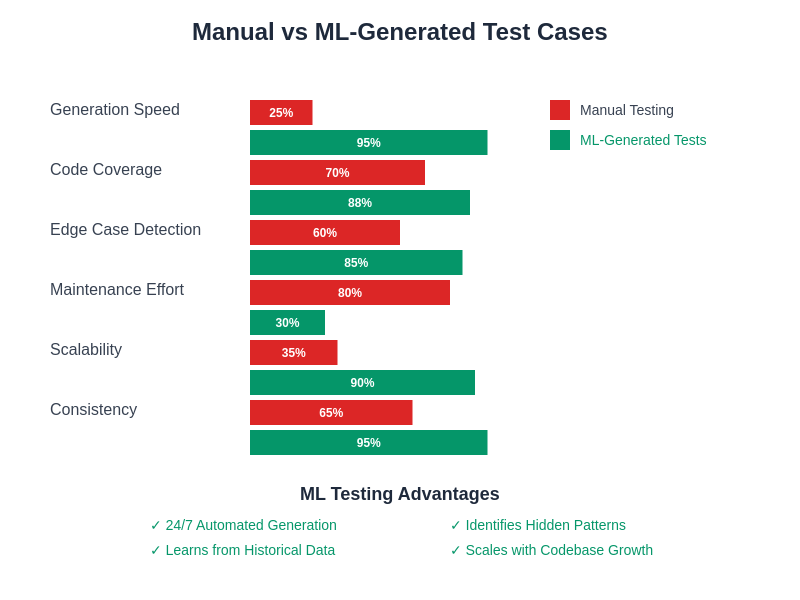

Comparative analysis between machine learning-generated test cases and manually created test suites provides valuable insights into the strengths and limitations of automated approaches, enabling organizations to develop hybrid testing strategies that leverage the benefits of both automated and manual testing methodologies.

The performance advantages of machine learning-generated test cases become evident across multiple dimensions, with particularly significant improvements in generation speed, scalability, and consistency compared to traditional manual testing approaches.

Challenges and Limitations

Despite the significant advantages offered by machine learning-driven test case generation, several challenges and limitations must be acknowledged and addressed for successful implementation. The quality of generated test cases is fundamentally dependent on the quality and representativeness of training data, which can be difficult to obtain for specialized or novel software domains where historical testing data may be limited or non-representative.

Machine learning models may exhibit bias toward certain types of defects or testing scenarios based on their training data, potentially resulting in test suites that are comprehensive in some areas while missing important edge cases or failure modes that were not well-represented in the training dataset. This bias can be particularly problematic when testing systems that operate in domains or contexts that differ significantly from the training environment.

The interpretability of machine learning-generated test cases can be challenging, making it difficult for human testers to understand the rationale behind specific test scenarios or to modify and extend test cases based on changing requirements. This lack of transparency can hinder the adoption of automated test generation systems in environments where test case rationale and traceability are important for regulatory compliance or quality assurance processes.

Industry Applications and Case Studies

The practical application of machine learning-driven test case generation has demonstrated significant success across various industry sectors, each presenting unique challenges and requirements that have driven innovation in testing methodologies. Financial services organizations have leveraged automated test generation to ensure comprehensive coverage of complex regulatory compliance requirements while maintaining the agility needed for rapid product development in competitive markets.

Healthcare software development can benefit from machine learning approaches that generate test cases covering critical safety scenarios and edge cases that might be overlooked in manual testing. Automatically generated test suites for medical device software and health information systems can support patient safety and may reduce the time and cost associated with regulatory approval processes.

E-commerce platforms have utilized machine learning-generated test cases to ensure robust performance under diverse user interaction patterns and high-load scenarios. The ability to automatically generate test cases that simulate realistic user behavior patterns has enabled more effective performance testing and improved customer experience through better system reliability and responsiveness.

Future Directions and Emerging Trends

The future evolution of machine learning-driven test case generation promises even more sophisticated capabilities as artificial intelligence technologies continue to advance. Large language models are beginning to demonstrate the ability to understand software requirements expressed in natural language and generate corresponding test cases that validate compliance with these requirements, potentially bridging the gap between business requirements and technical testing implementation.

Federated learning approaches may enable the development of more robust test generation models by allowing organizations to collaborate on model training while preserving the confidentiality of their proprietary code and testing data. This collaborative approach could accelerate the development of more effective test generation systems while addressing concerns about data privacy and intellectual property protection.
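The aggregation step at the heart of many federated learning schemes is a weighted average of locally trained model parameters, often called federated averaging. The sketch below shows only that step, with made-up weight vectors and sample counts; local training, secure communication, and privacy safeguards are all out of scope here.

```python
def federated_average(updates):
    """Average weight vectors, weighting each by its local sample count.

    `updates` is a list of (weights, num_samples) pairs, one per organization.
    """
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]

# Hypothetical model weights trained locally by two organizations.
updates = [
    ([1.0, 2.0], 100),  # organization A, 100 local training samples
    ([3.0, 4.0], 300),  # organization B, 300 local training samples
]

global_weights = federated_average(updates)
print(global_weights)
```

Only the weight vectors and sample counts leave each organization; the code and test data used for local training stay private.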

The integration of machine learning with emerging technologies such as quantum computing and edge computing will create new challenges and opportunities for test case generation systems. These emerging paradigms will require novel testing approaches that current methodologies may not adequately address, driving continued innovation in automated testing technologies.

Implementation Strategies and Best Practices

Successful implementation of machine learning-driven test case generation requires careful planning and a strategic approach that considers organizational capabilities, existing development processes, and specific quality requirements. Organizations should begin with pilot projects that demonstrate value and build confidence in automated testing approaches before expanding to mission-critical applications where testing failures could have significant consequences.

The selection of appropriate machine learning algorithms and techniques should be based on careful analysis of software characteristics, testing requirements, and available training data. Different types of software systems may benefit from different approaches, and organizations should be prepared to adapt their strategies based on experience and changing requirements.

Training and education of development and testing teams is crucial for successful adoption of machine learning-driven testing approaches. Team members need to understand both the capabilities and limitations of automated test generation systems to effectively integrate these tools into their workflows and make informed decisions about when to rely on automated approaches versus manual testing methods.

Economic Impact and Return on Investment

The economic benefits of machine learning-driven test case generation extend beyond simple cost reduction to encompass improvements in software quality, development velocity, and market competitiveness. Organizations that have successfully implemented automated test generation report significant reductions in testing costs, faster time-to-market for new features, and improved customer satisfaction due to higher software quality.

The scalability advantages of automated test generation become particularly evident in large-scale software development projects where manual testing approaches would require proportionally larger testing teams and longer testing cycles. The ability to automatically generate comprehensive test suites enables organizations to maintain high quality standards while scaling their development efforts to meet growing market demands.

Long-term return on investment calculations must consider not only direct cost savings but also indirect benefits such as reduced production defects, improved developer productivity, and enhanced ability to respond quickly to market opportunities. These broader economic impacts often justify the initial investment in machine learning-driven testing technologies even when direct cost savings alone might not provide sufficient justification.

The transformation of software testing through machine learning represents a fundamental shift toward more intelligent, efficient, and effective quality assurance processes. As these technologies continue to mature, they are likely to play an increasingly central role in ensuring the quality and reliability of the software systems that underpin our digital economy and society.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of machine learning technologies and their applications in software testing. Readers should conduct their own research and consider their specific requirements when implementing automated test generation systems. The effectiveness of machine learning-driven testing approaches may vary depending on specific use cases, software characteristics, and organizational factors.