The convergence of computer vision and deep learning has driven one of the most significant shifts in the history of transportation: autonomous vehicles that could fundamentally change how people and goods move. These vehicles build on decades of research in artificial intelligence, sensor technology, and computational engineering. They are designed to perceive their environment, make complex decisions, and navigate dynamic real-world traffic without human intervention.

The integration of advanced neural networks, sophisticated sensor fusion systems, and real-time processing capabilities has enabled autonomous vehicles to achieve levels of perception and decision-making that were once thought impossible, opening new frontiers in mobility, safety, and urban planning.

The Foundation of Autonomous Perception

At the heart of every autonomous vehicle lies a perception system built largely on computer vision algorithms powered by deep neural networks. These systems must process large volumes of visual data in real time: identifying and tracking objects, understanding road geometry, interpreting traffic signs and signals, and predicting the behavior of other road users. The task is demanding because the vehicle must operate reliably across diverse weather, lighting, and traffic conditions while meeting strict safety and latency requirements.

The evolution from traditional computer vision techniques to deep learning-based approaches has been revolutionary for autonomous vehicle development. While early systems relied on hand-crafted features and rule-based algorithms, modern autonomous vehicles leverage convolutional neural networks and advanced architectures that can learn complex patterns and representations directly from training data. This shift has enabled unprecedented levels of accuracy in object detection, semantic segmentation, and scene understanding, forming the foundation upon which safe autonomous navigation depends.
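To make the contrast concrete, the sketch below applies a hand-crafted Sobel edge kernel using the same sliding-window operation that a convolutional layer performs. The only difference in a CNN is that the kernel values are trainable parameters learned from data. Here `learned_kernel` is just a random placeholder for an untrained filter, and `conv2d_valid` is a hypothetical helper written for illustration; deep learning frameworks provide optimized equivalents.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """2D cross-correlation with 'valid' padding (what CNN 'convolution' layers compute)."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Hand-crafted feature: a Sobel kernel that responds to vertical edges.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)

# Synthetic 5x6 image: dark left half, bright right half (one vertical edge).
image = np.zeros((5, 6))
image[:, 3:] = 1.0
edges = conv2d_valid(image, SOBEL_X)   # strong response only around the edge

# In a CNN the kernel is a trainable parameter updated by gradient descent.
# Random values stand in for an untrained filter (hypothetical placeholder).
rng = np.random.default_rng(0)
learned_kernel = rng.normal(size=(3, 3))
features = conv2d_valid(image, learned_kernel)
```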

Multi-Modal Sensor Fusion and Processing

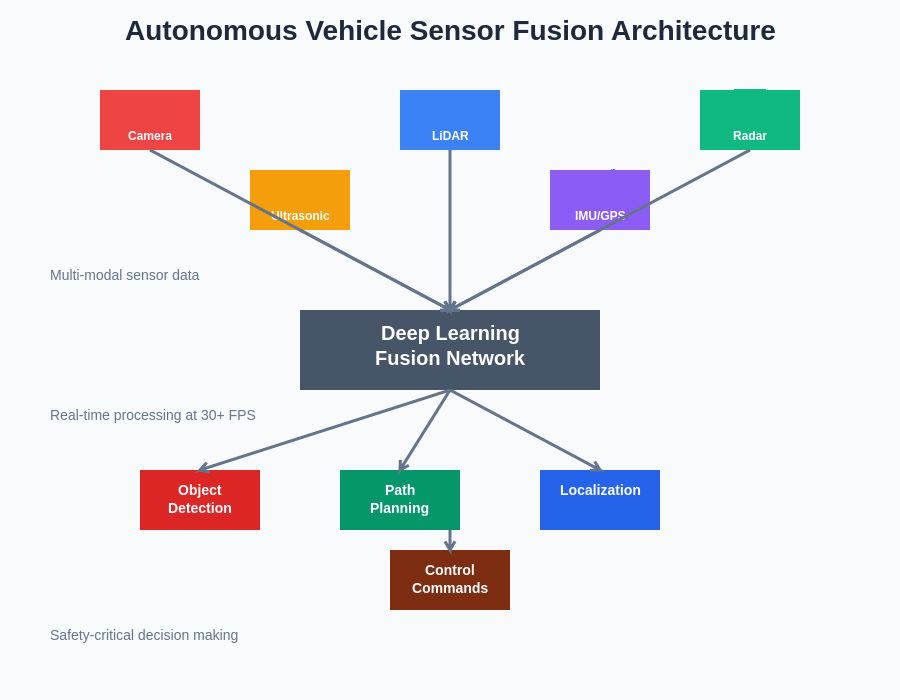

Many contemporary autonomous vehicles use sensor fusion systems that combine data from cameras, LiDAR, radar, and ultrasonic sensors to build a three-dimensional representation of their surroundings. Each modality contributes distinct strengths: cameras provide rich visual and color information, LiDAR offers precise distance measurements and geometry, radar performs reliably in adverse weather, and ultrasonic sensors handle close-proximity detection.
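A minimal illustration of why combining modalities helps: suppose several sensors independently estimate the distance to the same object, and each estimate is treated as Gaussian noise with a known variance. The inverse-variance weighted average gives the more precise sensors more influence and yields a fused estimate with lower variance than any single sensor. The sketch assumes the three readings are already associated with the same object, and the noise figures are hypothetical, not specifications of real sensors.

```python
from dataclasses import dataclass

@dataclass
class RangeMeasurement:
    sensor: str
    distance_m: float  # estimated distance to the same object, in meters
    std_m: float       # assumed 1-sigma noise for this sensor (hypothetical)

def fuse_inverse_variance(measurements):
    """Combine independent Gaussian estimates; lower-variance sensors get more weight."""
    weights = [1.0 / (m.std_m ** 2) for m in measurements]
    total = sum(weights)
    fused = sum(w * m.distance_m for w, m in zip(weights, measurements)) / total
    fused_std = (1.0 / total) ** 0.5  # never larger than the best single sensor's std
    return fused, fused_std

readings = [
    RangeMeasurement("camera", 41.0, 2.0),
    RangeMeasurement("lidar", 40.2, 0.1),
    RangeMeasurement("radar", 40.5, 0.5),
]
distance, sigma = fuse_inverse_variance(readings)
```

Production systems typically embed this idea in a Kalman filter or a learned fusion network rather than a one-shot average.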

The integration of these diverse sensor streams requires advanced deep learning architectures capable of processing multi-modal data simultaneously while maintaining real-time performance constraints. Modern fusion algorithms employ attention mechanisms, transformer architectures, and specialized neural network designs that can effectively combine information from different sensor types, resolving conflicts between sensors, filling gaps in individual sensor coverage, and creating robust perception systems that perform reliably across varying environmental conditions and operational scenarios.

The sensor fusion architecture illustrates how multiple data streams are processed through deep learning networks to build a comprehensive understanding of the environment. This multi-modal approach provides redundancy and robustness while supporting the real-time decision-making essential for safe autonomous vehicle operation.
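The attention-based fusion described above can be sketched as cross-attention, in which camera feature tokens act as queries over LiDAR feature tokens. This NumPy sketch assumes both modalities have already been encoded into tokens of a shared width `d_model`. The random projection matrices stand in for learned weights, and the token counts are arbitrary. It shows the mechanism only, not any particular published fusion architecture.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(queries, keys, values, w_q, w_k, w_v):
    """Scaled dot-product attention: each query token attends over all key/value tokens."""
    q = queries @ w_q
    k = keys @ w_k
    v = values @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    attn = softmax(scores, axis=-1)  # each row sums to 1
    return attn @ v, attn

rng = np.random.default_rng(42)
d_model = 16
camera_tokens = rng.normal(size=(4, d_model))   # e.g. 4 image-patch features (hypothetical)
lidar_tokens = rng.normal(size=(10, d_model))   # e.g. 10 point-cloud features (hypothetical)
# Random matrices stand in for learned projection weights.
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) / np.sqrt(d_model) for _ in range(3))

fused, attn = cross_attention(camera_tokens, lidar_tokens, lidar_tokens, w_q, w_k, w_v)
# Residual connection keeps the original camera features alongside the LiDAR context.
camera_enriched = camera_tokens + fused
```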

The complexity of autonomous vehicle perception systems demands cutting-edge AI technologies that can handle the intricate relationships between different types of sensory information while maintaining the computational efficiency required for real-time operation.

Deep Learning Architectures for Object Detection

Object detection is one of the most critical components of autonomous vehicle perception. The system must identify, classify, and precisely locate objects in the vehicle’s environment, including other vehicles, pedestrians, cyclists, traffic signs, road markings, and infrastructure elements. Modern autonomous vehicles employ deep learning architectures such as YOLO (You Only Look Once), SSD (Single Shot MultiBox Detector), and transformer-based detectors, which on suitable hardware can process high-resolution imagery at thirty or more frames per second with high accuracy.
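Single-stage detectors such as YOLO and SSD typically emit many overlapping candidate boxes per object, and classic versions of both rely on non-maximum suppression (NMS) to keep one box per object. The sketch below shows greedy NMS with intersection-over-union (IoU). The box coordinates, scores, and the 0.5 threshold are illustrative assumptions.

```python
import numpy as np

def iou(box, boxes):
    """IoU between one box and an array of boxes, all in [x1, y1, x2, y2] format."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it too much, repeat."""
    order = np.argsort(scores)[::-1]
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        overlaps = iou(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_threshold]
    return keep

boxes = np.array([
    [100, 100, 200, 300],  # pedestrian, high confidence
    [105, 110, 205, 305],  # duplicate detection of the same pedestrian
    [400, 150, 600, 260],  # a separate vehicle
], dtype=float)
scores = np.array([0.92, 0.80, 0.75])
kept = non_max_suppression(boxes, scores)
```

With these inputs the duplicate pedestrian box (IoU of roughly 0.84 with the top box) is suppressed, leaving indices 0 and 2.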

The development of specialized neural network architectures for autonomous vehicle applications has led to significant innovations in real-time object detection, including the implementation of multi-scale feature extraction, anchor-free detection methods, and attention-based mechanisms that can focus computational resources on the most relevant regions of the visual field. These advancements have enabled autonomous vehicles to detect objects at various distances and scales, from nearby pedestrians to distant vehicles, while maintaining the precision necessary for safe navigation decisions.
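Anchor-free detectors avoid predefined anchor boxes. Center-point methods such as CenterNet instead predict a per-class heatmap and treat its local maxima as object centers. Below is a minimal sketch of that decoding step, assuming a single-class heatmap already produced by a network and a hypothetical score threshold of 0.3; box-size regression and multi-scale heads are omitted.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def heatmap_peaks(heatmap, threshold=0.3):
    """Return (row, col, score) for cells that are 3x3 local maxima above a threshold."""
    padded = np.pad(heatmap, 1, mode="constant", constant_values=-np.inf)
    windows = sliding_window_view(padded, (3, 3))  # shape (H, W, 3, 3)
    local_max = windows.max(axis=(-2, -1))         # equivalent to 3x3 max pooling, stride 1
    is_peak = (heatmap == local_max) & (heatmap >= threshold)
    rows, cols = np.nonzero(is_peak)
    return [(int(r), int(c), float(heatmap[r, c])) for r, c in zip(rows, cols)]

heatmap = np.zeros((8, 8))
heatmap[2, 3] = 0.9  # center of a hypothetical car
heatmap[2, 4] = 0.5  # neighboring cell, suppressed because it is not a local maximum
heatmap[6, 6] = 0.7  # center of a hypothetical pedestrian
peaks = heatmap_peaks(heatmap)
```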

Semantic Segmentation and Scene Understanding

Beyond object detection, autonomous vehicles need comprehensive scene understanding: pixel-level classification of the entire visual field that identifies road surfaces, lane markings, sidewalks, vegetation, buildings, and other environmental elements. This process, known as semantic segmentation, relies on neural network architectures such as U-Net, DeepLab, and transformer-based segmentation models, which produce dense predictions across high-resolution imagery; efficient variants are used where real-time processing is required.

Accurate semantic segmentation matters well beyond basic navigation. It lets autonomous vehicles understand road topology, identify drivable areas, recognize construction zones, and adapt to unusual road configurations that may not appear in high-definition maps. Advanced segmentation systems can distinguish between types of road surface and identify temporary road markings, and when combined with depth estimation they help infer the three-dimensional structure of the environment from two-dimensional imagery, providing crucial information for path planning and vehicle control.
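Pixel-level classification reduces to choosing, for each pixel, the class with the highest score, and segmentation quality is commonly reported as mean intersection-over-union (mIoU). The sketch assumes a network has already produced per-class logits of shape `(num_classes, H, W)`. The four-class label set and the tiny 4x4 scene are hypothetical.

```python
import numpy as np

CLASSES = ["road", "lane_marking", "sidewalk", "vegetation"]  # hypothetical label set

def predict_mask(logits):
    """Per-pixel decision: logits of shape (num_classes, H, W) -> label map (H, W)."""
    return np.argmax(logits, axis=0)

def mean_iou(pred, target, num_classes):
    """Mean intersection-over-union over classes present in either map."""
    ious = []
    for c in range(num_classes):
        p, t = pred == c, target == c
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue  # class absent from both maps; skip rather than score it
        ious.append(np.logical_and(p, t).sum() / union)
    return float(np.mean(ious))

# Ground truth: two rows of road, then sidewalk (left) and vegetation (right).
target = np.array([[0, 0, 0, 0],
                   [0, 0, 0, 0],
                   [2, 2, 3, 3],
                   [2, 2, 3, 3]])
# Fake network output: confident one-hot logits, except one road pixel
# that the "model" scores higher as lane_marking.
logits = np.eye(len(CLASSES))[target].transpose(2, 0, 1) * 5.0
logits[1, 0, 0] = 10.0

pred = predict_mask(logits)
score = mean_iou(pred, target, len(CLASSES))
```

Here a single road pixel is mislabeled as a lane marking, which lowers road IoU to 7/8 and gives lane_marking an IoU of 0, so the mean across the four classes is about 0.72.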

Temporal Modeling and Prediction Systems

Autonomous vehicles must not only understand the current state of their environment but also predict the future behavior of dynamic objects such as other vehicles, pedestrians, and cyclists. This predictive capability relies on deep learning models that analyze temporal sequences of sensor data to forecast the likely trajectories and actions of surrounding agents. Recurrent neural networks such as LSTM (Long Short-Term Memory) networks, along with transformer architectures designed for sequential data, enable autonomous vehicles to model complex behavioral patterns and make informed predictions about future scenarios.
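To show what an LSTM actually computes over a trajectory, here is a single LSTM cell written out in NumPy and run over a short track. The weights are random rather than trained, the hidden size of 8 is arbitrary, and the track is hypothetical. In a real predictor the final hidden state would feed a trained decoder that outputs future positions.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h, c, W, U, b):
    """One LSTM step. W: (4H, D), U: (4H, H), b: (4H,); gate order: input, forget, cell, output."""
    z = W @ x + U @ h + b
    H = h.shape[0]
    i = sigmoid(z[0:H])            # input gate
    f = sigmoid(z[H:2 * H])        # forget gate
    g = np.tanh(z[2 * H:3 * H])    # candidate cell update
    o = sigmoid(z[3 * H:4 * H])    # output gate
    c_new = f * c + i * g
    h_new = o * np.tanh(c_new)
    return h_new, c_new

def encode_trajectory(positions, hidden_size=8, seed=0):
    """Run an untrained LSTM over per-step displacements; return the final hidden state."""
    rng = np.random.default_rng(seed)
    D = positions.shape[1]
    W = rng.normal(scale=0.1, size=(4 * hidden_size, D))
    U = rng.normal(scale=0.1, size=(4 * hidden_size, hidden_size))
    b = np.zeros(4 * hidden_size)
    h = np.zeros(hidden_size)
    c = np.zeros(hidden_size)
    # Displacements make the input invariant to where the track starts.
    for step in np.diff(positions, axis=0):
        h, c = lstm_step(step, h, c, W, U, b)
    return h

# Hypothetical pedestrian track: (x, y) in meters sampled at a fixed rate.
track = np.array([[0.0, 0.0], [0.4, 0.1], [0.8, 0.2], [1.2, 0.35], [1.6, 0.5]])
embedding = encode_trajectory(track)
```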

The development of accurate prediction systems represents one of the most challenging aspects of autonomous vehicle technology, as it requires understanding not only physical constraints and motion dynamics but also the intentions and behaviors of human road users. Advanced prediction models incorporate multiple factors including historical trajectories, contextual information about the environment, traffic rules and conventions, and even social and cultural factors that influence human behavior, creating comprehensive models that can anticipate a wide range of possible future scenarios.

The ability to accurately predict future scenarios is fundamental to safe autonomous navigation, enabling vehicles to make proactive decisions and avoid potentially dangerous situations before they develop.
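Learned predictors are commonly compared against simple physics baselines and scored with average displacement error (ADE) and final displacement error (FDE). The sketch below implements a constant-velocity baseline, a deliberately simple non-learned model rather than the deep learning approaches described above, along with both metrics. The trajectories are made up and assume a fixed time step.

```python
import numpy as np

def constant_velocity_forecast(history, horizon):
    """Extrapolate the last observed velocity; history is (T, 2) positions at a fixed time step."""
    velocity = history[-1] - history[-2]
    steps = np.arange(1, horizon + 1)[:, None]
    return history[-1] + steps * velocity

def ade_fde(pred, truth):
    """Average and final displacement error (Euclidean, in the same units as the inputs)."""
    errors = np.linalg.norm(pred - truth, axis=1)
    return float(errors.mean()), float(errors[-1])

history = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]])
truth = np.array([[3.0, 0.0], [4.0, 0.5], [5.0, 1.0]])  # the agent starts to turn
pred = constant_velocity_forecast(history, horizon=3)
ade, fde = ade_fde(pred, truth)
```

Because the agent starts turning, the straight-line forecast drifts: ADE is 0.5 and FDE is 1.0 in the input units. Capturing that kind of intent is what learned models are meant to add.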

Path Planning and Decision Making

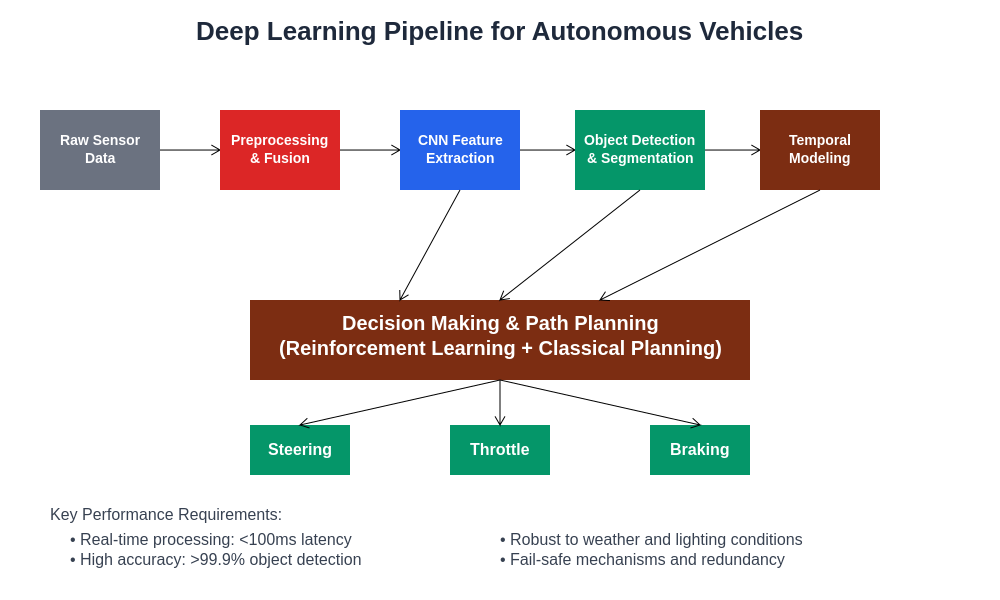

The translation of perceptual understanding into actionable driving decisions requires sophisticated path planning and decision-making systems that can evaluate multiple possible courses of action while optimizing for safety, efficiency, and passenger comfort. Modern autonomous vehicles employ hierarchical planning systems that operate at multiple temporal and spatial scales, from long-term route planning that considers traffic patterns and road networks to millisecond-level control decisions that determine steering, acceleration, and braking commands.
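At the top of the planning hierarchy, route planning can be framed as a shortest-path search over a road graph. Below is a minimal Dijkstra sketch, assuming edge costs are expected travel times (which in practice might be estimated from traffic data). The four-node network is hypothetical, and production route planners often use faster variants such as A* with a heuristic.

```python
import heapq

def shortest_route(graph, start, goal):
    """Dijkstra's algorithm over a road graph {node: [(neighbor, cost), ...]}."""
    frontier = [(0.0, start)]
    best_cost = {start: 0.0}
    parent = {start: None}
    while frontier:
        cost, node = heapq.heappop(frontier)
        if node == goal:
            break
        if cost > best_cost[node]:
            continue  # stale queue entry; a cheaper path was already found
        for neighbor, edge_cost in graph.get(node, []):
            new_cost = cost + edge_cost
            if new_cost < best_cost.get(neighbor, float("inf")):
                best_cost[neighbor] = new_cost
                parent[neighbor] = node
                heapq.heappush(frontier, (new_cost, neighbor))
    if goal not in parent:
        return None, float("inf")
    path, node = [], goal
    while node is not None:  # walk parent links back to the start
        path.append(node)
        node = parent[node]
    return path[::-1], best_cost[goal]

# Hypothetical road network; edge costs are expected travel times in seconds.
roads = {
    "A": [("B", 60), ("C", 150)],
    "B": [("C", 40), ("D", 200)],
    "C": [("D", 70)],
    "D": [],
}
route, seconds = shortest_route(roads, "A", "D")
```

Here the route A → B → C → D (170 s) beats the shorter-looking A → C → D (220 s).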

Deep reinforcement learning has emerged as a powerful paradigm for developing decision-making systems that can learn optimal driving policies through interaction with simulated environments and real-world experience. These systems can balance competing objectives such as minimizing travel time while maintaining safe following distances, optimizing fuel efficiency while ensuring passenger comfort, and adhering to traffic rules while adapting to unexpected situations. The integration of learned policies with traditional planning algorithms creates robust systems that can handle both routine driving scenarios and exceptional circumstances that require creative problem-solving.
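A deep RL policy is trained to maximize a reward that encodes competing objectives like those listed above. The sketch below pairs a hypothetical weighted reward (progress toward a target speed, a penalty for time gaps under 2 s, and a comfort penalty on jerk) with the tabular Q-learning update, the simplest value-based RL rule. Deep RL methods such as DQN replace the table with a neural network. The weights, thresholds, and discrete state names are illustrative assumptions, not tuned values.

```python
from collections import defaultdict

def driving_reward(speed_mps, gap_s, jerk_mps3, target_speed_mps=25.0,
                   min_gap_s=2.0, w_progress=1.0, w_safety=5.0, w_comfort=0.1):
    """Hypothetical weighted reward trading off progress, headway safety, and comfort."""
    progress = -abs(speed_mps - target_speed_mps) / target_speed_mps
    safety = -max(0.0, min_gap_s - gap_s)  # penalize time gaps below the threshold
    comfort = -abs(jerk_mps3)
    return w_progress * progress + w_safety * safety + w_comfort * comfort

def q_learning_update(Q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.99):
    """One tabular step: Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
    best_next = max(Q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    Q[(state, action)] += alpha * (td_target - Q[(state, action)])
    return Q[(state, action)]

ACTIONS = ("brake", "hold", "accelerate")
Q = defaultdict(float)  # unseen (state, action) pairs start at 0
r = driving_reward(speed_mps=24.0, gap_s=1.5, jerk_mps3=0.5)
q_value = q_learning_update(Q, state="close_gap", action="brake", reward=r,
                            next_state="safe_gap", actions=ACTIONS)
```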

The comprehensive deep learning pipeline illustrates the complex flow of information from raw sensor data through multiple processing stages to final control commands. This architecture demonstrates how modern autonomous vehicles integrate classical computer vision techniques with advanced neural networks to achieve reliable real-time performance in safety-critical applications.

Neural Network Training and Data Management

The development of effective deep learning systems for autonomous vehicles requires massive amounts of high-quality training data that captures the full diversity of real-world driving scenarios. Modern autonomous vehicle companies have invested heavily in data collection infrastructure, deploying fleets of vehicles equipped with comprehensive sensor suites to gather petabytes of driving data across diverse geographical regions, weather conditions, and traffic patterns. This data serves as the foundation for training neural networks that must generalize effectively to previously unseen scenarios while maintaining consistent performance across operational domains.

The challenges of managing and processing such vast datasets have led to innovations in distributed computing, data pipeline optimization, and active learning systems that identify the most valuable training examples in massive data streams. Advanced data augmentation, synthetic data generation, and simulation-based training have become essential tools for building neural networks that perform reliably in edge cases: scenarios that are rare in real-world driving data but critical to safety.
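One common active learning strategy, uncertainty sampling, sends the frames the current model is least sure about to human annotators. Below is a minimal sketch using predictive entropy, assuming the model outputs per-frame class probabilities. The probabilities and the labeling budget are hypothetical, and real pipelines often combine uncertainty with diversity or rarity criteria.

```python
import numpy as np

def predictive_entropy(probs):
    """Entropy of each row of class probabilities; higher means the model is less certain."""
    p = np.clip(probs, 1e-12, 1.0)  # avoid log(0)
    return -(p * np.log(p)).sum(axis=1)

def select_for_labeling(probs, budget):
    """Uncertainty sampling: indices of the `budget` most uncertain examples."""
    entropy = predictive_entropy(probs)
    return np.argsort(entropy)[::-1][:budget].tolist()

# Hypothetical softmax outputs for five unlabeled frames over three classes.
probs = np.array([
    [0.98, 0.01, 0.01],  # confident
    [0.34, 0.33, 0.33],  # very uncertain
    [0.70, 0.20, 0.10],
    [0.50, 0.49, 0.01],
    [0.90, 0.05, 0.05],
])
to_label = select_for_labeling(probs, budget=2)
```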

Safety Validation and Testing Frameworks

Deploying autonomous vehicles in real-world environments requires extensive safety validation and testing to show that deep learning systems perform reliably across as wide a range of scenarios as possible. Traditional software testing approaches are insufficient for neural network-based systems, which has led to specialized validation frameworks that assess the safety and reliability of learned behaviors through large-scale simulation, closed-course testing, and carefully managed real-world deployment programs.

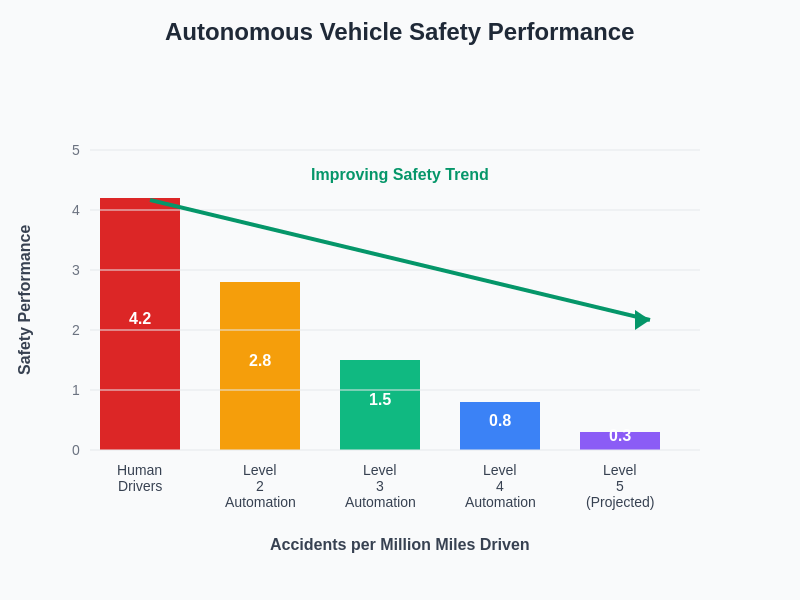

Modern safety validation approaches employ adversarial testing methods that actively search for failure modes and edge cases where autonomous vehicle systems might behave unexpectedly. These techniques include adversarial attacks on perception systems, corner-case generation through evolutionary algorithms, and formal verification methods adapted for neural network architectures. Safety monitoring systems, redundant perception pathways, and fail-safe mechanisms help ensure that autonomous vehicles can detect and respond appropriately to system failures or to scenarios that exceed the capabilities of their trained models.

The safety performance metrics demonstrate the progressive improvement in autonomous vehicle safety as automation levels increase. These data-driven insights highlight the potential for AI-powered systems to significantly reduce traffic accidents and save lives through more reliable and consistent decision-making compared to human drivers.
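Adversarial attacks on perception systems can be illustrated with the Fast Gradient Sign Method (FGSM), which nudges every input feature by a small step `epsilon` in the direction that increases the model's loss. To keep the gradient analytic, this sketch attacks a logistic-regression classifier whose random weights stand in for a trained "pedestrian" detector. Attacks on real perception networks compute the same gradient with automatic differentiation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, y, w, b, epsilon):
    """FGSM for logistic regression with binary cross-entropy loss."""
    p = sigmoid(w @ x + b)
    grad_x = (p - y) * w  # d(loss)/dx for BCE on a sigmoid output
    return x + epsilon * np.sign(grad_x)

rng = np.random.default_rng(0)
w = rng.normal(size=64)  # stand-in for a trained classifier (hypothetical)
b = 0.0
x = 0.05 * np.sign(w)    # an input the model scores as "pedestrian" (label 1)
x_adv = fgsm(x, y=1.0, w=w, b=b, epsilon=0.02)

clean_score = sigmoid(w @ x + b)
adv_score = sigmoid(w @ x_adv + b)
```

The perturbation changes no feature by more than 0.02, yet it lowers the model's pedestrian score, which is the kind of fragility adversarial testing tries to surface.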

Edge Computing and Real-Time Processing

The computational demands of autonomous vehicle perception and decision-making systems require sophisticated edge computing architectures that can deliver the processing power necessary for real-time operation while meeting strict constraints on power consumption, thermal management, and system reliability. Modern autonomous vehicles employ specialized hardware platforms including GPUs optimized for neural network inference, dedicated AI acceleration chips, and distributed computing architectures that can parallelize processing across multiple computational units.

The optimization of neural network architectures for deployment on resource-constrained automotive hardware has led to innovations in model compression, quantization techniques, and efficient neural architecture design. These approaches enable complex deep learning models to operate within the computational and memory constraints of automotive systems while maintaining the accuracy and reliability required for safety-critical applications. The development of specialized software frameworks and runtime optimization techniques further enhances the efficiency of neural network inference in automotive environments.
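Quantization is one of the simpler compression techniques to illustrate: weights are stored as 8-bit integers plus a floating-point scale. The sketch below shows per-tensor symmetric post-training quantization in NumPy, assuming a single random weight matrix. Production toolchains add per-channel scales, activation calibration, and hardware-specific kernels.

```python
import numpy as np

def quantize_int8_symmetric(weights):
    """Per-tensor symmetric post-training quantization to int8."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map int8 values back to approximate float32 weights."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.normal(scale=0.05, size=(256, 256)).astype(np.float32)
q, scale = quantize_int8_symmetric(w)
w_hat = dequantize(q, scale)
max_error = float(np.max(np.abs(w - w_hat)))
```

The int8 tensor uses a quarter of the memory of the float32 original, and each weight's reconstruction error is bounded by half the quantization step.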

Regulatory Frameworks and Ethical Considerations

The deployment of autonomous vehicles powered by deep learning systems raises complex regulatory and ethical questions that require careful consideration of safety standards, liability frameworks, and societal impacts. Regulatory agencies worldwide are developing comprehensive frameworks for testing, validation, and deployment of autonomous vehicle technologies, establishing standards for performance evaluation, safety assessment, and operational oversight that ensure these systems meet the highest standards of public safety.

The ethical dimensions of autonomous vehicle decision-making present particularly challenging questions about how artificial intelligence systems should behave in scenarios where harm cannot be completely avoided. The development of ethical frameworks for autonomous vehicle behavior requires interdisciplinary collaboration between technologists, ethicists, policymakers, and society at large to establish principles and guidelines that reflect shared values and expectations about the role of artificial intelligence in transportation systems.

Integration with Smart Infrastructure

The evolution of autonomous vehicles is closely linked to the development of smart transportation infrastructure that can communicate with and support autonomous vehicle operations. Vehicle-to-infrastructure (V2I) communication systems enable autonomous vehicles to receive real-time information about traffic conditions, road hazards, and infrastructure status, while vehicle-to-vehicle (V2V) communication allows coordination between multiple autonomous vehicles to optimize traffic flow and enhance safety through cooperative behaviors.

The integration of autonomous vehicles with smart city systems creates opportunities for optimizing transportation networks at unprecedented scales, reducing congestion, minimizing environmental impacts, and improving mobility access for all members of society. Advanced traffic management systems that can coordinate the movements of autonomous vehicles, optimize signal timing, and dynamically adjust traffic patterns based on real-time demand represent the next frontier in transportation system optimization.
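Signal-timing optimization predates autonomous vehicles. As a concrete baseline, Webster's classic formula gives a cycle length that approximately minimizes average delay at a fixed-time signal: C0 = (1.5 × L + 5) / (1 − Y), where L is the total lost time per cycle in seconds and Y is the sum of the critical flow ratios. An adaptive system might recompute such quantities from real-time demand data. That framing, the function names, and the intersection numbers below are illustrative assumptions.

```python
def webster_cycle_length(lost_time_s, critical_flow_ratios):
    """Webster's fixed-time cycle length: C0 = (1.5 * L + 5) / (1 - Y)."""
    Y = sum(critical_flow_ratios)
    if Y >= 1.0:
        raise ValueError("Intersection is oversaturated (Y >= 1); no finite cycle exists.")
    return (1.5 * lost_time_s + 5.0) / (1.0 - Y)

def split_green(cycle_s, lost_time_s, critical_flow_ratios):
    """Allocate effective green time to each phase in proportion to its flow ratio."""
    Y = sum(critical_flow_ratios)
    effective_green = cycle_s - lost_time_s
    return [effective_green * y / Y for y in critical_flow_ratios]

# Hypothetical two-phase intersection: 10 s lost time, flow ratios 0.35 and 0.25.
cycle = webster_cycle_length(10.0, [0.35, 0.25])
greens = split_green(cycle, 10.0, [0.35, 0.25])
```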

Future Technological Developments

The continued advancement of autonomous vehicle technology depends on ongoing innovation in deep learning architectures, sensor technologies, and computational platforms. Emerging approaches such as neuromorphic computing, quantum machine learning, and advanced sensor fusion techniques may unlock new levels of performance and efficiency in autonomous vehicle perception and decision-making.

The development of more sophisticated world models that can understand and predict complex interactions between multiple agents in dynamic environments represents a key frontier for future autonomous vehicle research. These systems will need to incorporate not only physical understanding of vehicle dynamics and environmental constraints but also social and behavioral models that can anticipate human reactions and adapt to cultural differences in driving behaviors across different regions and contexts.

The convergence of autonomous vehicle technology with other emerging technologies such as 5G communications, edge computing, and distributed artificial intelligence creates opportunities for entirely new approaches to transportation system design and optimization. The future of autonomous vehicles lies not just in individual vehicle capabilities but in the creation of comprehensive transportation ecosystems that can deliver safe, efficient, and sustainable mobility solutions for communities worldwide.

Disclaimer

This article is for informational purposes only and does not constitute professional or technical advice. The views expressed are based on current understanding of autonomous vehicle technologies and their applications. Readers should conduct their own research and consult with qualified professionals when considering autonomous vehicle technologies or related investments. The development and deployment of autonomous vehicles involve complex technical, regulatory, and safety considerations that continue to evolve as the technology matures.