The enterprise adoption of artificial intelligence has reached a pivotal moment where organizations must choose between competing cloud AI platforms that will shape their digital transformation strategies for years to come. Two dominant forces have emerged in this landscape: Amazon Web Services Bedrock and Microsoft Azure OpenAI Service, each offering distinct approaches to delivering AI capabilities at enterprise scale. These platforms represent fundamentally different philosophies in AI service delivery, with AWS emphasizing a diverse foundation model marketplace and Azure focusing on deep integration with OpenAI’s cutting-edge technologies.

The decision between AWS Bedrock and Azure OpenAI extends beyond simple feature comparison; it encompasses strategic considerations around vendor ecosystem integration, long-term AI roadmap alignment, and organizational technical infrastructure requirements that will influence enterprise AI success for the foreseeable future.

Platform Architecture and Foundation Models

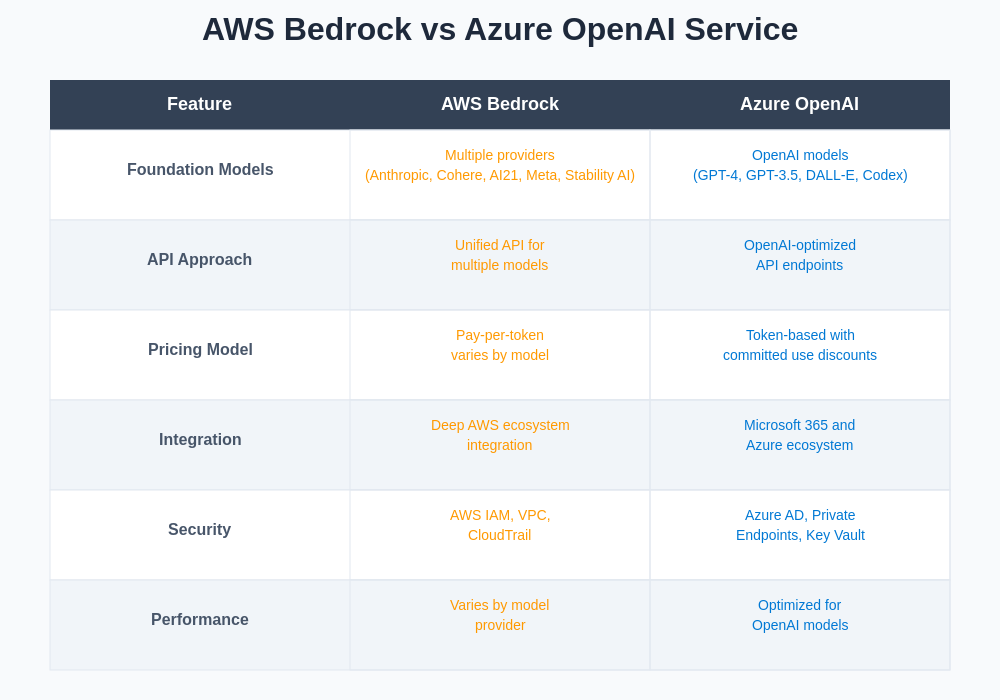

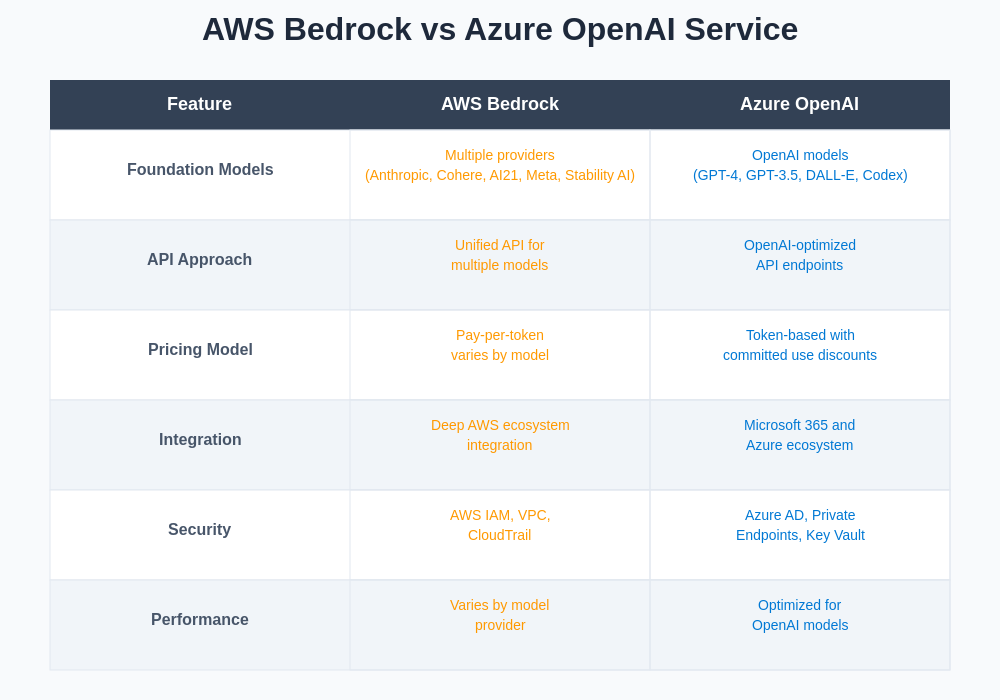

AWS Bedrock presents a foundation model marketplace that gives organizations access to models from multiple AI providers through a single managed service and API. Organizations can use models from Anthropic, AI21 Labs, Cohere, Meta, Stability AI, and Amazon's own Titan family without managing separate vendor contracts, credentials, or integrations. This multi-provider approach lets enterprises choose the most appropriate model for each use case while keeping operational workflows such as access control, monitoring, and billing consistent across all of them.

The architectural philosophy underlying Bedrock emphasizes choice and interoperability, allowing organizations to experiment with various foundation models and switch between providers based on performance requirements, cost considerations, or specific capability needs. This approach reduces vendor lock-in risks while enabling organizations to leverage the strengths of different AI providers within a cohesive platform environment that simplifies deployment, monitoring, and management of AI workloads.
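As a minimal sketch of the unified interface, the Python snippet below builds a request for the Bedrock Converse API, which accepts the same message format for every model that supports it (the lower-level `InvokeModel` call instead expects a model-specific JSON body). It assumes a recent `boto3` release that includes `converse` on the `bedrock-runtime` client, configured AWS credentials, and model access enabled in your account; the helper names `build_converse_request` and `ask` are hypothetical.

```python
from typing import Any, Dict, Optional


def build_converse_request(
    model_id: str,
    prompt: str,
    system: Optional[str] = None,
    max_tokens: int = 512,
    temperature: float = 0.2,
) -> Dict[str, Any]:
    """Build keyword arguments for the bedrock-runtime `converse` call.

    The message format is shared by all models that support Converse,
    so moving to another provider only changes `model_id`.
    """
    request: Dict[str, Any] = {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }
    if system:
        request["system"] = [{"text": system}]
    return request


def ask(model_id: str, prompt: str, region: str = "us-east-1") -> str:
    """Send one prompt; requires boto3, AWS credentials, and access to the model."""
    import boto3  # imported here so the request builder works without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(model_id, prompt))
    return response["output"]["message"]["content"][0]["text"]
```

Switching providers then only changes the model ID, for example `ask("anthropic.claude-3-haiku-20240307-v1:0", prompt)` versus `ask("meta.llama3-8b-instruct-v1:0", prompt)`; these IDs are illustrative, and the catalog differs by region. Keeping request construction in a pure function makes it testable without network access.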

Azure OpenAI Service adopts a more focused approach, concentrating on OpenAI's models, such as GPT-4, GPT-3.5 Turbo, and DALL-E, within Microsoft's enterprise cloud ecosystem. This partnership gives organizations access to OpenAI's flagship language models together with enterprise-grade security, compliance, and integration capabilities. Because the platform is built specifically around OpenAI models, it integrates closely with Microsoft's broader productivity and development tools.

The concentrated focus on OpenAI technologies lets Azure tailor its enterprise features to these specific models, including fine-tuning, configurable content filtering, and specialized deployment options.

Model Variety and Capabilities

The breadth of available models represents one of the most significant differentiators between these platforms. AWS Bedrock offers an extensive catalog of foundation models spanning text generation, image creation, code generation, and embeddings from multiple providers. Organizations can access Anthropic's Claude models for complex reasoning tasks, Cohere's models for text generation and embeddings, AI21 Labs models for specialized text applications, and Meta's open-weight Llama models, all through a consistent API.

This diversity enables organizations to optimize their AI implementations by selecting models that best match specific requirements rather than adapting use cases to available models. For instance, organizations can leverage Stability AI’s Stable Diffusion models for image generation while simultaneously utilizing Anthropic’s Claude for complex reasoning tasks and Amazon Titan for embedding generation, creating comprehensive AI solutions that leverage the strengths of different specialized models.
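One way to express this task-by-task selection is a small routing table kept in configuration rather than scattered through application code. The sketch below is illustrative only: the task names, the `MODEL_FOR_TASK` table, and both helpers are hypothetical, and the model IDs are examples of Bedrock identifiers that should be checked against the current catalog in your region. Embedding and image models are typically invoked through `InvokeModel` with model-specific request bodies rather than through the chat-oriented Converse API.

```python
# Hypothetical task-to-model routing table; model IDs are examples only.
MODEL_FOR_TASK = {
    "reasoning": "anthropic.claude-3-sonnet-20240229-v1:0",
    "embedding": "amazon.titan-embed-text-v2:0",
    "image": "stability.stable-diffusion-xl-v1",
}


def model_for(task: str) -> str:
    """Return the configured model ID for a task, failing loudly on unknown tasks."""
    try:
        return MODEL_FOR_TASK[task]
    except KeyError:
        raise ValueError(f"no model configured for task {task!r}") from None


def provider_of(model_id: str) -> str:
    """Provider prefix of a base model ID, e.g. 'anthropic' or 'amazon'.

    Assumes a plain base-model ID; cross-region inference profile IDs carry
    an extra geography prefix and would need different parsing.
    """
    return model_id.split(".", 1)[0]
```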

Azure OpenAI Service concentrates on delivering OpenAI's latest models, including GPT-4 variants, GPT-3.5 Turbo, DALL-E 3 for image generation, and Whisper for speech recognition. Code generation, once handled by the separate Codex models (since retired), is now covered by the GPT models. While the model variety is more limited than Bedrock's marketplace approach, the available models are widely regarded as state of the art.

OpenAI's models have frequently been among the first to offer new capabilities in areas such as multimodal understanding, advanced reasoning, and natural language generation quality. Organizations choosing Azure OpenAI gain access to the same model families that power ChatGPT, deployed within Azure's enterprise environment.

Integration and Ecosystem Compatibility

AWS Bedrock’s integration capabilities extend throughout the comprehensive Amazon Web Services ecosystem, providing seamless connectivity with existing AWS infrastructure, data services, and security frameworks. Organizations already invested in AWS can leverage existing Identity and Access Management policies, Virtual Private Cloud configurations, and data storage solutions while adding AI capabilities without architectural disruption or additional security complexity.

The platform integrates naturally with AWS Lambda for serverless AI processing, Amazon S3 for data storage and retrieval, Amazon CloudWatch for monitoring and logging, and AWS Step Functions for complex AI workflow orchestration. This ecosystem integration enables organizations to build sophisticated AI applications that leverage existing AWS investments while maintaining consistent operational procedures and security policies across their entire cloud infrastructure.
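To illustrate the Lambda integration, here is a hedged sketch of an API Gateway style handler that forwards a prompt to Bedrock and returns the answer with token counts. It assumes the Converse API in `boto3`, a function role allowed to invoke the model, and a proxy-integration event whose `body` is a JSON string; the model ID is an example, and the optional `client` parameter is a hypothetical hook added so the handler can be tested with a fake client. The Bedrock client is created lazily and cached at module level so warm invocations reuse it.

```python
import json
from typing import Any, Optional

# Example model ID; confirm availability in your Region before deploying.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"

_client: Optional[Any] = None


def _bedrock_client() -> Any:
    """Create the bedrock-runtime client once per execution environment."""
    global _client
    if _client is None:
        # boto3 ships with the Lambda Python runtime; package a newer version
        # if the bundled one lacks `converse`.
        import boto3

        _client = boto3.client("bedrock-runtime")
    return _client


def _response(status: int, payload: dict) -> dict:
    return {"statusCode": status, "body": json.dumps(payload)}


def lambda_handler(event: dict, context: Any, client: Optional[Any] = None) -> dict:
    """Forward {"prompt": ...} from the request body to Bedrock."""
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be JSON"})
    prompt = body.get("prompt") if isinstance(body, dict) else None
    if not prompt:
        return _response(400, {"error": "missing 'prompt'"})

    bedrock = client or _bedrock_client()
    result = bedrock.converse(
        modelId=MODEL_ID,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 256},
    )
    usage = result["usage"]  # token counts, useful for CloudWatch cost metrics
    return _response(200, {
        "answer": result["output"]["message"]["content"][0]["text"],
        "inputTokens": usage["inputTokens"],
        "outputTokens": usage["outputTokens"],
    })
```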

Azure OpenAI Service offers deep integration with Microsoft's enterprise productivity ecosystem, including connectivity with Microsoft 365, Power Platform, Azure AI services (formerly Azure Cognitive Services), and the broader Azure cloud infrastructure. Organizations using Microsoft technologies can build AI capabilities into familiar tools like Teams, SharePoint, and Office applications while reusing existing Microsoft Entra ID (formerly Azure Active Directory) authentication and security policies.

The integration extends to development environments through Azure DevOps, Visual Studio, and GitHub integration, enabling developers to incorporate AI capabilities into existing development workflows without significant tooling changes. This ecosystem alignment is particularly valuable for organizations with significant Microsoft technology investments, as it enables AI adoption without disrupting established productivity and collaboration patterns.

The architectural differences between these platforms reflect distinct strategic approaches to enterprise AI deployment, with AWS emphasizing breadth and choice while Azure focuses on depth and integration within the Microsoft ecosystem.

Pricing Models and Cost Considerations

The pricing structures of AWS Bedrock and Azure OpenAI reflect their different strategic approaches to AI service delivery. AWS Bedrock employs a pay-per-use model that varies by foundation model provider, with pricing based on input and output tokens processed. This granular pricing approach enables organizations to optimize costs by selecting models that provide the best value for specific use cases while paying only for actual usage without minimum commitments or reserved capacity requirements.
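The token-based arithmetic is simple enough to sketch directly. The prices used below are hypothetical placeholders, not published rates; substitute the current per-model rates from the provider's pricing page (some providers quote prices per million tokens, in which case divide by 1,000). The names `TokenPrice`, `request_cost`, and `monthly_cost` are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    """On-demand price in USD per 1,000 tokens (fill in from the price list)."""
    input_per_1k: float
    output_per_1k: float


def request_cost(price: TokenPrice, input_tokens: int, output_tokens: int) -> float:
    """Cost of one call: input and output tokens are billed at separate rates."""
    return (input_tokens / 1000) * price.input_per_1k + (output_tokens / 1000) * price.output_per_1k


def monthly_cost(price: TokenPrice, requests_per_day: int, avg_input_tokens: int,
                 avg_output_tokens: int, days: int = 30) -> float:
    """Rough monthly estimate assuming a steady daily request volume."""
    per_request = request_cost(price, avg_input_tokens, avg_output_tokens)
    return per_request * requests_per_day * days
```

With hypothetical rates of $0.003 per 1,000 input tokens and $0.015 per 1,000 output tokens, a call with 2,000 input and 500 output tokens costs about $0.0135, and 10,000 such calls a day come to roughly $4,050 over 30 days. Input and output are kept separate because most models bill output tokens at a higher rate.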

Bedrock’s pricing transparency extends to detailed cost breakdowns by model and usage type, enabling organizations to understand exactly where AI spending occurs and optimize accordingly. The platform also offers provisioned throughput options for high-volume applications that require predictable performance and costs, providing flexibility to balance cost optimization with performance requirements based on specific application needs.

Azure OpenAI Service also uses token-based pricing, with rates set per OpenAI model. The platform offers pay-as-you-go (Standard) deployments as well as provisioned throughput units (PTUs) for reserved capacity, with discounts for longer-term commitments. This structure rewards organizations that commit to specific usage levels while keeping standard pay-per-use deployments available for variable workloads.
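Whether a commitment pays off reduces to a break-even calculation. The sketch below compares a fixed monthly commitment with pay-as-you-go spend using a blended per-token price; all figures are hypothetical, the function names are illustrative, and the model deliberately ignores capacity sizing, which matters in practice because reserved or provisioned capacity also caps throughput.

```python
def blended_price_per_1k(input_per_1k: float, output_per_1k: float, output_share: float) -> float:
    """Average price per 1,000 tokens given the fraction of tokens that are output."""
    if not 0.0 <= output_share <= 1.0:
        raise ValueError("output_share must be between 0 and 1")
    return (1 - output_share) * input_per_1k + output_share * output_per_1k


def break_even_tokens(monthly_commitment_usd: float, price_per_1k: float) -> float:
    """Monthly token volume at which pay-as-you-go spend equals the commitment."""
    if price_per_1k <= 0:
        raise ValueError("price_per_1k must be positive")
    return monthly_commitment_usd / price_per_1k * 1000


def cheaper_option(monthly_tokens: float, monthly_commitment_usd: float, price_per_1k: float) -> str:
    """Which billing model costs less at the given monthly volume."""
    pay_as_you_go = monthly_tokens / 1000 * price_per_1k
    return "commitment" if monthly_commitment_usd < pay_as_you_go else "pay-as-you-go"
```

For example, with hypothetical rates of $0.003 (input) and $0.015 (output) per 1,000 tokens and 25% output tokens, the blended price is $0.006 per 1,000 tokens, so a hypothetical $3,000 monthly commitment breaks even at about 500 million tokens per month.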

The cost considerations extend beyond raw pricing to include factors such as data transfer costs, storage requirements, and integration expenses. Organizations must evaluate total cost of ownership including these additional factors when comparing platforms, as the most cost-effective choice depends on specific usage patterns, data requirements, and existing infrastructure investments.

The economic impact of AI platform selection extends beyond direct service costs to include development time, maintenance requirements, and long-term scalability considerations that significantly influence total cost of ownership.

Security and Compliance Features

Enterprise security requirements demand comprehensive protection for AI workloads, and both platforms provide extensive security capabilities designed for regulated industries and sensitive data processing. AWS Bedrock inherits the full security framework of Amazon Web Services, including encryption at rest and in transit, VPC endpoint support for private connectivity, detailed audit logging through CloudTrail, and integration with AWS Key Management Service for cryptographic key control.

The platform supports fine-grained access control through AWS Identity and Access Management, enabling organizations to set separate permissions for model access, data processing, and administrative functions. Bedrock's security model extends to inference itself: prompts and completions are processed within AWS, are not shared with the foundation model providers, and are not used to train or improve their models.
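As an example of fine-grained access control, the sketch below generates a least-privilege IAM policy that allows invoking only an approved list of foundation models. It assumes the foundation-model ARN format `arn:aws:bedrock:<region>::foundation-model/<model-id>` (with an empty account field); the function name and `Sid` are hypothetical. As far as we understand, the Converse and ConverseStream calls are authorized by the `bedrock:InvokeModel` and `bedrock:InvokeModelWithResponseStream` actions respectively, but verify this against the current IAM reference for Bedrock.

```python
import json
from typing import Any, Dict, List


def bedrock_invoke_policy(region: str, model_ids: List[str]) -> Dict[str, Any]:
    """IAM policy document allowing invocation of specific foundation models only."""
    if not model_ids:
        raise ValueError("at least one model ID is required")
    resources = [f"arn:aws:bedrock:{region}::foundation-model/{m}" for m in model_ids]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeApprovedModels",
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": resources,
            }
        ],
    }


if __name__ == "__main__":
    # Example model ID; print the JSON to attach via the console, CLI, or IaC.
    print(json.dumps(bedrock_invoke_policy("us-east-1", ["anthropic.claude-3-haiku-20240307-v1:0"]), indent=2))
```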

Azure OpenAI Service provides enterprise-grade security through Azure's security framework, including threat protection, private endpoint connectivity, customer-managed encryption keys, and Microsoft Entra ID (formerly Azure Active Directory) for identity management. Processing occurs within Microsoft's Azure infrastructure, and customer data is not used to train or improve models; prompts and completions may, however, be retained for up to 30 days for abuse monitoring unless an organization is approved for modified abuse monitoring.

The service includes sophisticated content filtering capabilities that can be customized for specific organizational requirements, enabling automated detection and filtering of potentially harmful or inappropriate content. This capability is particularly valuable for organizations deploying AI in customer-facing applications where content safety is paramount.
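In a customer-facing application, the filter's decision has to be handled explicitly. When Azure OpenAI filters generated output, the affected choice reports a `finish_reason` of `"content_filter"`; prompts that trip the filter are typically rejected with an HTTP 400 error instead and need separate handling. The sketch below covers only the output case, assumes the completion has been converted to a dict (for example with `model_dump()` on an `openai` v1 response object), and uses a hypothetical fallback message and helper name.

```python
from typing import Any, Dict, Optional, Tuple

# Hypothetical user-facing text shown when the output is filtered.
FALLBACK_REPLY = "Sorry, I can't help with that request."


def safe_reply(completion: Dict[str, Any]) -> Tuple[str, bool]:
    """Return (text, was_filtered) for the first choice of a chat completion dict."""
    choice = completion["choices"][0]
    if choice.get("finish_reason") == "content_filter":
        # Output was withheld or truncated by the filter; never show partial text.
        return FALLBACK_REPLY, True
    content: Optional[str] = (choice.get("message") or {}).get("content")
    return content or "", False
```

Returning a flag alongside the text lets the caller log filtered interactions for review instead of silently swallowing them.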

The comprehensive feature comparison reveals distinct strengths in each platform’s approach to enterprise AI service delivery, with AWS Bedrock emphasizing model diversity and Azure OpenAI focusing on advanced capabilities through deep OpenAI integration.

Performance and Scalability Characteristics

Performance characteristics vary significantly between platforms based on their architectural approaches and optimization strategies. AWS Bedrock provides performance that varies by foundation model provider, with each model offering different latency and throughput characteristics optimized for specific use cases. The platform’s multi-model architecture enables organizations to select models that best match performance requirements rather than accepting one-size-fits-all performance characteristics.

Bedrock supports both on-demand and provisioned throughput options, enabling organizations to optimize for either cost efficiency or predictable performance based on application requirements. The provisioned throughput option provides guaranteed capacity and consistent response times for high-volume applications, while on-demand access offers cost-effective performance for variable workloads.

Azure OpenAI Service runs on infrastructure that Microsoft tunes specifically for OpenAI model architectures and offers deployments in multiple regions, which helps minimize latency for distributed user bases. Latency and throughput are most predictable on provisioned capacity; shared Standard deployments can vary with overall demand.

The service offers both standard and provisioned throughput units, enabling organizations to balance cost and performance based on specific requirements. The provisioned option provides dedicated capacity with guaranteed availability and consistent performance, while standard throughput offers cost-effective access with shared infrastructure.
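Both on-demand Bedrock access and Standard Azure OpenAI deployments run on shared capacity with quotas, so clients should expect occasional throttling (a `ThrottlingException` from Bedrock, HTTP 429 from Azure OpenAI). A common mitigation is retrying with exponential backoff and jitter, sketched below. `call_with_backoff` and the two predicates are hypothetical names; the predicates rely on duck typing (the `response` dict on `botocore`'s `ClientError`, and the `status_code` attribute on `openai` v1 status errors) so the sketch runs without either SDK installed.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_backoff(
    fn: Callable[[], T],
    is_retryable: Callable[[Exception], bool],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 20.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying retryable errors with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except Exception as exc:
            if not is_retryable(exc) or attempt == max_attempts - 1:
                raise
            # The cap doubles each attempt (0.5s, 1s, 2s, ...); waiting a random
            # time below it spreads out retries from many concurrent clients.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")


def is_bedrock_throttle(exc: Exception) -> bool:
    """botocore ClientError carries the AWS error code in exc.response['Error']['Code']."""
    response = getattr(exc, "response", None)
    if not isinstance(response, dict):
        return False
    return response.get("Error", {}).get("Code") == "ThrottlingException"


def is_azure_rate_limit(exc: Exception) -> bool:
    """openai v1 status errors expose the HTTP status code as exc.status_code."""
    return getattr(exc, "status_code", None) == 429
```

Both SDKs also retry on their own: `boto3` through `botocore.config.Config(retries={"mode": "adaptive", ...})` and the `openai` client through its `max_retries` argument. An explicit wrapper like this is mainly useful when you want one retry policy across both platforms.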

Development Tools and APIs

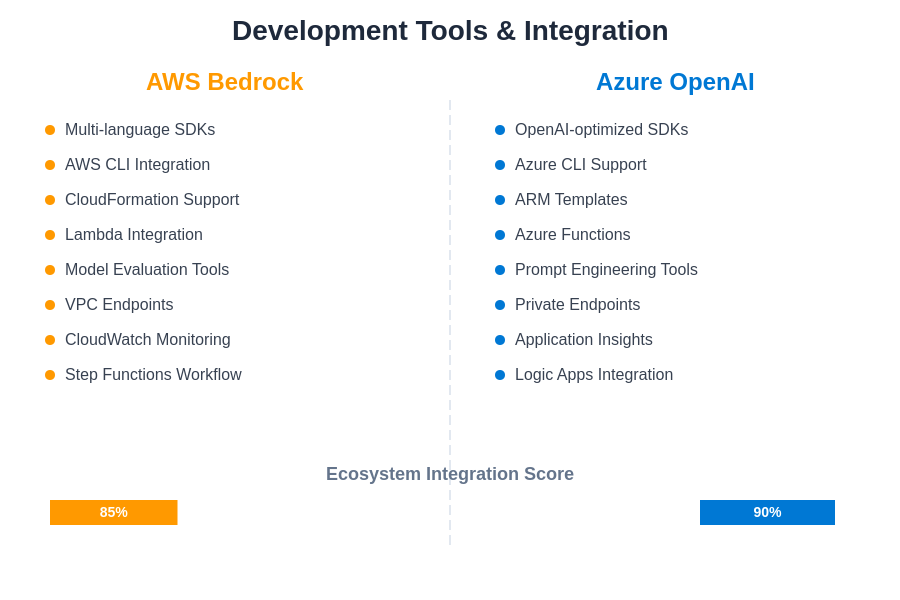

The development experience represents a crucial factor in AI platform adoption, influencing both initial implementation speed and long-term maintenance requirements. AWS Bedrock provides comprehensive SDKs for popular programming languages including Python, Java, JavaScript, and .NET, along with detailed documentation and code examples for common use cases. The platform’s API design emphasizes consistency across different foundation models while exposing model-specific capabilities when needed.

Bedrock integrates with AWS development tools including CloudFormation for infrastructure as code, AWS CLI for command-line management, and various AWS developer services for comprehensive application development workflows. The platform also provides model evaluation capabilities that enable organizations to test and compare different foundation models using their own data and use cases.
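Bedrock's model evaluation is a managed feature; as a lightweight local complement, the sketch below scores candidate models on your own labeled prompts using exact-match accuracy. The `evaluate` and `best_model` functions, the stand-in models, and the exact-match metric are hypothetical simplifications: in practice each callable would wrap a real model call (for example a Converse request), and free-form answers usually need a more forgiving metric.

```python
from typing import Callable, Dict, List, Tuple

Generate = Callable[[str], str]


def evaluate(models: Dict[str, Generate], dataset: List[Tuple[str, str]]) -> Dict[str, float]:
    """Exact-match accuracy (case- and whitespace-insensitive) for each model."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    scores: Dict[str, float] = {}
    for name, generate in models.items():
        hits = sum(
            generate(prompt).strip().lower() == expected.strip().lower()
            for prompt, expected in dataset
        )
        scores[name] = hits / len(dataset)
    return scores


def best_model(scores: Dict[str, float]) -> str:
    """Name of the highest-scoring model (ties go to the first one listed)."""
    return max(scores, key=scores.__getitem__)
```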

Azure OpenAI Service offers robust development tools specifically optimized for OpenAI models, including comprehensive SDKs, detailed API documentation, and extensive code samples. The platform integrates seamlessly with Microsoft development tools including Visual Studio, Azure DevOps, and GitHub, providing familiar development experiences for organizations using Microsoft technologies.
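A minimal sketch of calling a chat model through the `openai` Python package (v1 or later), which provides an `AzureOpenAI` client. Two Azure-specific details are easy to miss: `model` must be the name of your deployment rather than the underlying model name, and you must pass an `api_version` that your resource supports. The endpoint, key, API version, and deployment name are placeholders you provide, and the helper names are hypothetical; in production, Microsoft Entra ID authentication is generally preferable to API keys.

```python
from typing import Any, Dict, List, Optional


def build_chat_request(
    deployment: str,
    user_prompt: str,
    system_prompt: Optional[str] = None,
    max_tokens: int = 512,
    temperature: float = 0.2,
) -> Dict[str, Any]:
    """Keyword arguments for client.chat.completions.create() on Azure OpenAI.

    On Azure, `model` names your deployment, not the underlying base model.
    Some newer models expect `max_completion_tokens` instead of `max_tokens`.
    """
    messages: List[Dict[str, str]] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return {
        "model": deployment,
        "messages": messages,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


def ask_azure(endpoint: str, api_key: str, api_version: str, deployment: str, prompt: str) -> str:
    """Send one prompt; requires the openai package (v1+) and a deployed model."""
    from openai import AzureOpenAI  # imported here so the builder runs without openai

    client = AzureOpenAI(azure_endpoint=endpoint, api_key=api_key, api_version=api_version)
    completion = client.chat.completions.create(**build_chat_request(deployment, prompt))
    return completion.choices[0].message.content or ""
```

A call might look like `ask_azure("https://<your-resource>.openai.azure.com/", key, "<api-version>", "gpt-4-prod", "Draft a status update.")`, where the deployment name `gpt-4-prod` is hypothetical.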

The service includes sophisticated prompt engineering tools, model fine-tuning capabilities, and comprehensive monitoring and analytics features that help organizations optimize their AI implementations. The development experience is enhanced by deep integration with Azure’s broader development ecosystem, enabling streamlined deployment and management workflows.
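Fine-tuning chat models on Azure OpenAI uses the same chat-format JSONL training file as OpenAI: one JSON object per line, each with a `messages` list of system, user, and assistant turns. The sketch below converts (prompt, ideal answer) pairs into that format; the function name and any example data are hypothetical, and model-specific requirements such as minimum example counts should be checked in the current documentation.

```python
import json
from typing import Iterable, Tuple


def to_finetune_jsonl(examples: Iterable[Tuple[str, str]], system_prompt: str) -> str:
    """Convert (prompt, ideal_answer) pairs to chat-format fine-tuning JSONL."""
    lines = []
    for prompt, ideal_answer in examples:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": ideal_answer},
            ]
        }
        # One JSON object per line; ensure_ascii=False keeps non-English text readable.
        lines.append(json.dumps(record, ensure_ascii=False))
    if not lines:
        raise ValueError("no training examples provided")
    return "\n".join(lines) + "\n"
```

The resulting string can be written to a file such as `training.jsonl` and uploaded as the training dataset for a fine-tuning job.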

The development experience differs significantly between platforms, with each offering distinct advantages based on existing technology investments and development team preferences. Azure OpenAI’s slight edge in ecosystem integration reflects its tight coupling with Microsoft’s comprehensive productivity and development suite.

Use Case Optimization and Industry Applications

Different AI platforms excel in various use case scenarios based on their architectural strengths and model capabilities. AWS Bedrock’s multi-model approach makes it particularly well-suited for organizations requiring diverse AI capabilities across multiple applications. The platform excels in scenarios requiring specialized models for specific tasks, such as using Cohere models for customer service applications while leveraging Stability AI models for marketing content creation.

The platform’s flexibility enables organizations to implement comprehensive AI strategies that leverage the strengths of different foundation models without the complexity of managing multiple vendor relationships. This approach is particularly valuable for large enterprises with diverse AI requirements across different business units or geographical regions.

Azure OpenAI Service is optimized for organizations requiring the most advanced AI capabilities available, particularly in areas such as conversational AI, content generation, and complex reasoning tasks. The platform’s focus on OpenAI models makes it ideal for applications requiring state-of-the-art natural language understanding and generation capabilities.

The service excels in scenarios requiring integration with Microsoft productivity tools, enabling AI-enhanced workflows within familiar business applications. Organizations can implement AI capabilities that seamlessly integrate with existing Microsoft technology investments while leveraging the most advanced AI models available.

Data Privacy and Governance

Data privacy and governance requirements significantly influence AI platform selection, particularly for organizations in regulated industries or those handling sensitive customer information. AWS Bedrock commits that customer data is never used for model training or improvement, and processing occurs within AWS under the encryption, network, and access controls that the customer configures.

The platform supports sophisticated data governance through integration with AWS data services including AWS Glue for data cataloging, Amazon Macie for data discovery and classification, and AWS Config for compliance monitoring. These capabilities enable organizations to maintain comprehensive oversight of their AI data processing while meeting regulatory requirements.

Azure OpenAI Service ensures data privacy through Microsoft's data protection commitments: customer data is not made available to OpenAI and is not used for model training. Organizations select the Azure region and deployment type for each resource, which lets them control where their data is processed and stored to meet data residency requirements.

The service integrates with Microsoft Purview for comprehensive data governance, providing data lineage tracking, sensitivity labeling, and compliance monitoring capabilities that help organizations maintain regulatory compliance while leveraging AI capabilities.

Migration and Vendor Lock-in Considerations

The long-term strategic implications of AI platform selection include migration complexity and vendor lock-in risks that can significantly impact future flexibility and costs. AWS Bedrock’s multi-model architecture provides inherent protection against vendor lock-in by enabling organizations to switch between different foundation model providers without changing their application architecture or operational procedures.

This flexibility extends to gradually migrating from one model to another based on performance, cost, or capability improvements, which gives organizations negotiating leverage and strategic flexibility. The platform's common API layer keeps the code changes for a model switch small, although prompts and output handling usually need re-testing against the new model.
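The same principle can be applied one level up, in application code: if business logic depends on a small interface rather than on either SDK, moving between Bedrock models, or even between Bedrock and Azure OpenAI, becomes a matter of swapping an adapter. The sketch below is a hypothetical design (the `ChatModel` protocol, both adapter classes, and `summarize` are illustrative); each adapter receives an already-configured SDK client, either a `boto3` `bedrock-runtime` client for the Converse API or an `openai` `AzureOpenAI` client.

```python
from typing import Any, Protocol


class ChatModel(Protocol):
    """Minimal interface the application depends on."""

    def complete(self, prompt: str) -> str: ...


class BedrockChat:
    """Adapter over a boto3 bedrock-runtime client (Converse API)."""

    def __init__(self, client: Any, model_id: str) -> None:
        self.client = client
        self.model_id = model_id

    def complete(self, prompt: str) -> str:
        response = self.client.converse(
            modelId=self.model_id,
            messages=[{"role": "user", "content": [{"text": prompt}]}],
        )
        return response["output"]["message"]["content"][0]["text"]


class AzureOpenAIChat:
    """Adapter over an openai.AzureOpenAI client; `deployment` is the deployment name."""

    def __init__(self, client: Any, deployment: str) -> None:
        self.client = client
        self.deployment = deployment

    def complete(self, prompt: str) -> str:
        completion = self.client.chat.completions.create(
            model=self.deployment,
            messages=[{"role": "user", "content": prompt}],
        )
        return completion.choices[0].message.content or ""


def summarize(model: ChatModel, text: str) -> str:
    """Business logic written once against the interface, not against an SDK."""
    return model.complete(f"Summarize in one sentence:\n\n{text}")
```

The adapters only normalize the call shape: prompts may still need re-tuning per model, and provider-specific features such as Azure content filter annotations or Bedrock Guardrails fall outside this thin interface.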

Azure OpenAI Service’s focused approach creates stronger integration with Microsoft’s ecosystem, which can provide significant operational advantages for organizations using Microsoft technologies but may create switching costs for organizations considering alternative platforms. The deep integration capabilities that provide operational benefits also create dependencies that must be considered in long-term strategic planning.

Organizations must evaluate their long-term AI strategy and technology roadmap when considering vendor lock-in implications, balancing the benefits of deep integration against the flexibility provided by multi-vendor approaches.

Future Roadmap and Innovation Trajectory

The innovation trajectories of AWS Bedrock and Azure OpenAI Service reflect their different strategic approaches to AI service evolution. AWS Bedrock’s roadmap emphasizes expanding the foundation model marketplace, adding new providers, and enhancing cross-model capabilities. The platform’s future development focuses on providing access to the latest AI innovations from multiple providers while improving the tools and capabilities for managing diverse AI workloads.

This approach positions Bedrock to benefit from innovations across the entire AI industry rather than depending on a single provider’s research and development efforts. Organizations choosing Bedrock gain access to a continuously expanding ecosystem of AI capabilities without vendor-specific constraints.

Azure OpenAI Service’s roadmap is closely aligned with OpenAI’s research and development efforts, providing early access to breakthrough AI capabilities as they become available. The platform’s future development focuses on deeper integration with Microsoft’s productivity and development tools while maximizing the potential of OpenAI’s advancing AI technologies.

This approach ensures that organizations have access to the most advanced AI capabilities available while benefiting from Microsoft’s enterprise-grade infrastructure and integration capabilities. The close partnership with OpenAI provides competitive advantages in AI capability advancement and feature development.

Strategic Decision Framework

The choice between AWS Bedrock and Azure OpenAI Service requires careful consideration of multiple factors including existing technology investments, AI use case requirements, organizational capabilities, and long-term strategic objectives. Organizations with diverse AI requirements and a preference for vendor flexibility may find AWS Bedrock’s multi-model marketplace approach more suitable for their needs.

The platform’s strength lies in providing access to specialized models optimized for specific use cases while maintaining operational consistency and reducing vendor lock-in risks. Organizations prioritizing choice, flexibility, and protection against vendor dependency should carefully evaluate Bedrock’s capabilities against their requirements.

Organizations requiring the most advanced AI capabilities available and those with significant Microsoft technology investments may find Azure OpenAI Service better aligned with their needs. The platform’s strength lies in providing access to state-of-the-art AI models with deep integration into Microsoft’s enterprise ecosystem.

The decision ultimately depends on organizational priorities regarding AI capability advancement, ecosystem integration requirements, vendor relationship preferences, and long-term strategic flexibility considerations. Both platforms provide enterprise-grade AI capabilities with different strengths that align with different organizational needs and strategic approaches.
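One common way to make these trade-offs explicit is a weighted scoring matrix: the team agrees on criteria and weights, scores each platform per criterion, and compares the weighted totals. The sketch below only does the arithmetic; the function names are hypothetical, and the criteria, weights, and scores are entirely organization-specific inputs rather than an assessment of either platform.

```python
from typing import Dict, List


def weighted_score(weights: Dict[str, float], scores: Dict[str, float]) -> float:
    """Weighted average of per-criterion scores; weights need not sum to 1."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[c] * scores[c] for c in weights) / total_weight


def rank(weights: Dict[str, float], candidates: Dict[str, Dict[str, float]]) -> List[str]:
    """Candidate names sorted from highest to lowest weighted score."""
    return sorted(candidates, key=lambda name: weighted_score(weights, candidates[name]), reverse=True)
```

Criteria where lower is better, such as lock-in risk, should be scored on an inverted scale (a higher score means lower risk) so that every criterion points the same way.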

The evolution of enterprise AI continues to accelerate, and the platform selected today will significantly influence an organization’s AI capabilities and competitive positioning for years to come. Careful evaluation of these platforms against specific requirements and strategic objectives is essential for making the optimal choice for long-term AI success.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The comparisons presented are based on publicly available information and may not reflect the most current service capabilities or pricing. Organizations should conduct their own detailed evaluation and testing before making platform selection decisions. Service capabilities, pricing, and features are subject to change by the respective providers. Readers should consult with qualified technical professionals and conduct thorough due diligence before implementing enterprise AI solutions.