The proliferation of artificial intelligence systems across critical decision-making domains has brought unprecedented attention to the issue of algorithmic bias and fairness in machine learning models. As these systems increasingly influence hiring decisions, loan approvals, criminal justice assessments, and healthcare diagnoses, the imperative to detect and mitigate unfairness has become not merely an ethical consideration but a fundamental requirement for responsible AI deployment. Understanding how to systematically test for bias and implement comprehensive fairness measures represents one of the most crucial challenges facing the modern AI development community.

Understanding the Nature of AI Bias

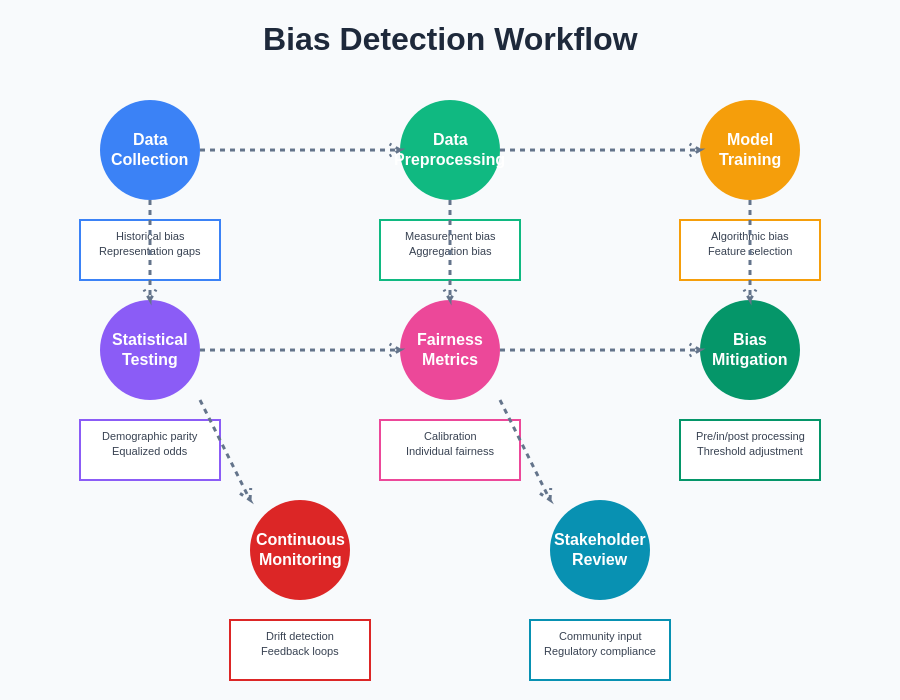

Artificial intelligence bias manifests in numerous forms throughout the machine learning pipeline, from data collection and preprocessing to model training and deployment phases. Historical bias embedded within training datasets reflects past discriminatory practices and societal inequalities, while representation bias occurs when certain demographic groups are underrepresented or misrepresented in the training data. Measurement bias emerges from differences in data quality or collection methods across different groups, and aggregation bias results from assuming that models trained on aggregate data will perform equally well across all subgroups within that population.

The complexity of bias detection stems from the multifaceted nature of fairness itself, as different stakeholders may have varying definitions of what constitutes fair treatment. Legal fairness might focus on compliance with anti-discrimination laws, while statistical fairness compares quantities such as positive-prediction rates, error rates, or accuracy across demographic groups. Individual fairness seeks to ensure similar treatment for similar individuals, whereas group fairness aims for equitable outcomes across different demographic categories. These competing definitions often create tension and require careful consideration of context-specific requirements and stakeholder priorities.

Understanding the sources of bias requires examining the entire machine learning lifecycle, from problem formulation and data collection through model design, training, evaluation, and deployment. Bias can be introduced at any stage, and seemingly neutral technical decisions can have profound implications for fairness outcomes. The challenge lies not only in detecting these biases but also in understanding their root causes and implementing effective mitigation strategies that preserve model performance while promoting equitable outcomes.

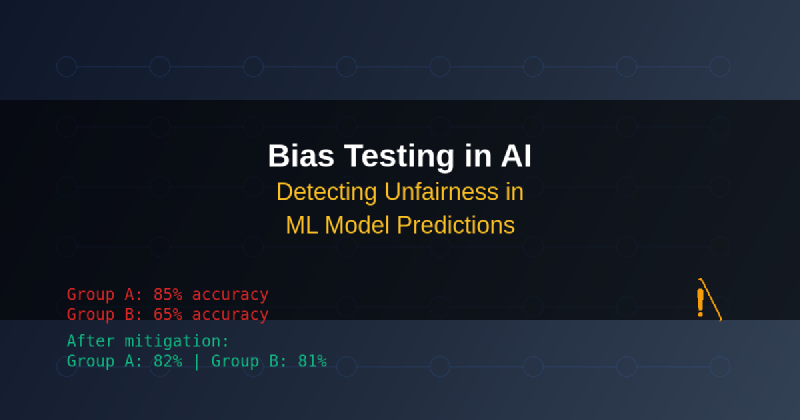

The comprehensive bias detection workflow encompasses multiple stages of analysis, from initial data collection through continuous monitoring of deployed systems. Each stage presents unique challenges and opportunities for identifying and addressing different forms of bias that may emerge throughout the machine learning pipeline.

Comprehensive Bias Detection Methodologies

Effective bias detection requires systematic application of multiple complementary methodologies that examine different aspects of model behavior and performance across demographic groups. Statistical parity testing evaluates whether positive prediction rates are equal across protected groups, while equalized odds assessment examines whether true positive and false positive rates are consistent across different demographic categories. Calibration analysis investigates whether predicted probabilities correspond to actual outcomes with equal accuracy across all groups.
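A gap in positive-prediction rates can arise from sampling noise alone. For that reason, a statistical parity test usually pairs the observed gap with a significance estimate. The sketch below uses a permutation test, which is one reasonable choice among several (a two-proportion z-test is another). The function names and the 0/1 encoding of predictions are illustrative assumptions and do not come from any specific library.

```python
import random
from typing import Hashable, List, Sequence, Tuple


def parity_gap(y_pred: Sequence[int], groups: Sequence[Hashable], a: Hashable, b: Hashable) -> float:
    """Positive-prediction rate of group `a` minus that of group `b` (predictions are 0/1)."""
    pa = [p for p, g in zip(y_pred, groups) if g == a]
    pb = [p for p, g in zip(y_pred, groups) if g == b]
    return sum(pa) / len(pa) - sum(pb) / len(pb)


def parity_permutation_test(y_pred, groups, a, b, n_perm: int = 2000, seed: int = 0) -> Tuple[float, float]:
    """Two-sided permutation test of H0: the positive-prediction rate does not depend on group."""
    # Keep only the two groups being compared.
    pairs = [(p, g) for p, g in zip(y_pred, groups) if g in (a, b)]
    preds = [p for p, _ in pairs]
    labels: List[Hashable] = [g for _, g in pairs]
    observed = parity_gap(preds, labels, a, b)
    rng = random.Random(seed)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(labels)  # break any link between group membership and prediction
        if abs(parity_gap(preds, labels, a, b)) >= abs(observed) - 1e-12:
            extreme += 1
    # The +1 terms count the observed labelling as one of the permutations.
    return observed, (extreme + 1) / (n_perm + 1)
```

A call such as `gap, p_value = parity_permutation_test(preds, groups, "a", "b")` returns both the size of the disparity and how surprising it would be if group membership were irrelevant.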

Individual fairness testing employs counterfactual analysis to examine how model predictions change when sensitive attributes are modified while all other features are held constant. This approach helps identify whether models base decisions on protected characteristics rather than on legitimate predictive factors. A simple attribute flip only detects direct use of the attribute, however. It misses dependence that flows through correlated proxy features such as postal code. Causal formulations of counterfactual fairness address this by also adjusting the features that depend on the sensitive attribute. Intersectionality analysis extends bias detection to the compound effects of multiple protected characteristics, recognizing that individuals may face unique forms of discrimination based on the intersection of several demographic categories.
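Below is a minimal sketch of an attribute-flip test. It assumes the model can be called on a dictionary of features; `flip_rate` and the toy models in the example are hypothetical. Because the test holds every other feature fixed, it only detects direct use of the attribute. A low flip rate is therefore necessary but not sufficient evidence of individual fairness.

```python
from typing import Any, Callable, Dict, Iterable, List

Record = Dict[str, Any]


def flip_rate(model: Callable[[Record], int], records: List[Record], attr: str, values: Iterable[Any]) -> float:
    """Share of records whose prediction changes when `attr` is set to some other value in `values`."""
    values = list(values)
    changed = 0
    for rec in records:
        base = model(rec)
        for v in values:
            if v == rec[attr]:
                continue
            variant = {**rec, attr: v}  # shallow copy; every other feature stays fixed
            if model(variant) != base:
                changed += 1
                break
    return changed / len(records)


# Example: a model that (improperly) approves anyone with gender "m".
records = [{"income": 60, "gender": "f"}, {"income": 40, "gender": "f"},
           {"income": 40, "gender": "m"}, {"income": 70, "gender": "m"}]
biased = lambda r: int(r["income"] > 50 or r["gender"] == "m")
print(flip_rate(biased, records, "gender", ["f", "m"]))
```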

The integration of multiple detection methodologies provides a more complete picture of potential bias issues and enables more targeted mitigation strategies.

Temporal bias analysis examines how model performance and fairness metrics change over time, recognizing that bias can emerge or evolve as data distributions shift and societal conditions change. This longitudinal perspective is crucial for maintaining fairness in deployed systems and identifying when models require retraining or adjustment to maintain equitable performance across all user groups.
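One simple way to make the longitudinal view concrete is to recompute a fairness gap for each time window and watch its trend. The sketch below assumes every prediction is logged with a period label, a group, and a 0/1 decision. The record layout and function name are illustrative.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Tuple


def parity_gap_by_period(records: Iterable[Tuple[Hashable, Hashable, int]]) -> Dict[Hashable, float]:
    """records: (period, group, prediction) tuples. Returns, per period, the spread
    between the highest and lowest positive-prediction rate across groups."""
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # period -> group -> [positives, total]
    for period, group, pred in records:
        cell = counts[period][group]
        cell[0] += pred
        cell[1] += 1
    gaps = {}
    for period in sorted(counts):
        rates = [pos / total for pos, total in counts[period].values()]
        gaps[period] = max(rates) - min(rates)
    return gaps
```

The signal that retraining or adjustment may be needed is a gap that widens across windows, not any single value. Each window also needs enough samples for its rates to be stable.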

Statistical Fairness Metrics and Evaluation

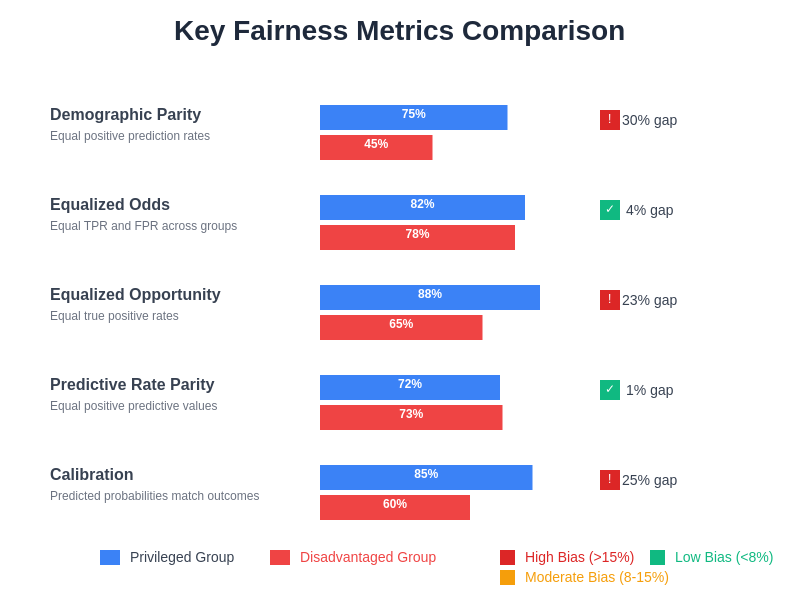

The quantification of fairness requires careful selection and application of statistical metrics that align with specific use cases and fairness objectives. Demographic parity measures whether positive prediction rates are equal across protected groups, while equal opportunity focuses on equal true positive rates for different demographic categories. Equalized odds extends this concept by requiring equal true positive and false positive rates across all groups, providing a more comprehensive view of predictive fairness.
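All three group metrics can be computed from per-group confusion counts. The sketch below reports each one as the spread between the best-off and worst-off group. Following one common convention, it summarizes equalized odds as the larger of the TPR spread and the FPR spread. It assumes binary labels and predictions, and it assumes every group contains both positive and negative examples.

```python
from typing import Dict, Hashable, Sequence


def group_rates(y_true: Sequence[int], y_pred: Sequence[int],
                groups: Sequence[Hashable]) -> Dict[Hashable, Dict[str, float]]:
    """Per-group selection rate, true positive rate and false positive rate."""
    counts: Dict[Hashable, Dict[str, int]] = {}
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts.setdefault(g, {"n": 0, "sel": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
        c["n"] += 1
        c["sel"] += p
        if t == 1:
            c["pos"] += 1
            c["tp"] += p
        else:
            c["neg"] += 1
            c["fp"] += p
    return {g: {"selection_rate": c["sel"] / c["n"],
                "tpr": c["tp"] / c["pos"],   # assumes the group has actual positives
                "fpr": c["fp"] / c["neg"]}   # assumes the group has actual negatives
            for g, c in counts.items()}


def fairness_gaps(y_true, y_pred, groups) -> Dict[str, float]:
    rates = group_rates(y_true, y_pred, groups)

    def spread(key: str) -> float:
        values = [r[key] for r in rates.values()]
        return max(values) - min(values)

    return {
        "demographic_parity_diff": spread("selection_rate"),
        "equal_opportunity_diff": spread("tpr"),
        # Equalized odds needs both TPR and FPR to match; report the larger violation.
        "equalized_odds_diff": max(spread("tpr"), spread("fpr")),
    }
```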

Predictive rate parity examines whether positive predictive values are consistent across different demographic groups, ensuring that the meaning of a positive prediction remains constant regardless of group membership. Calibration metrics assess whether predicted probabilities accurately reflect actual outcome probabilities for all demographic groups, which is particularly important for applications where probability estimates inform decision-making processes.
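Predictive rate parity and calibration can both be checked directly from predictions and scores. This sketch computes per-group positive predictive value and a per-group reliability table with equal-width score bins. Five bins is an arbitrary illustrative choice, and the function names are hypothetical.

```python
from typing import Dict, Hashable, List, Sequence, Tuple


def ppv_by_group(y_true: Sequence[int], y_pred: Sequence[int],
                 groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Positive predictive value, P(y = 1 | prediction = 1), within each group."""
    out = {}
    for g in set(groups):
        hits = [t for t, p, gg in zip(y_true, y_pred, groups) if gg == g and p == 1]
        out[g] = sum(hits) / len(hits) if hits else float("nan")
    return out


def calibration_by_group(y_true, scores, groups,
                         n_bins: int = 5) -> Dict[Tuple[Hashable, int], Tuple[float, float, int]]:
    """For each (group, score bin): mean predicted probability, observed positive rate, count.
    A well-calibrated model has the first two numbers close in every cell, for every group."""
    cells: Dict[Tuple[Hashable, int], List[float]] = {}
    for t, s, g in zip(y_true, scores, groups):
        b = min(int(s * n_bins), n_bins - 1)  # equal-width bins on [0, 1]; s == 1.0 goes to the last bin
        cell = cells.setdefault((g, b), [0.0, 0, 0])
        cell[0] += s
        cell[1] += t
        cell[2] += 1
    return {k: (v[0] / v[2], v[1] / v[2], v[2]) for k, v in sorted(cells.items())}
```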

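The selection of appropriate fairness metrics depends heavily on the specific application domain and stakeholder requirements. Medical diagnosis applications might prioritize equal sensitivity (true positive rate) across demographic groups, so that patients who need treatment are equally likely to be identified regardless of group. Loan approval systems might instead focus on equalized odds, which balances two rates across applicant populations: the approval rate among applicants who would repay (the true positive rate) and the approval rate among applicants who would default (the false positive rate). These criteria cannot always be satisfied together. When base rates differ between groups, an imperfect classifier generally cannot achieve calibration (or predictive rate parity) and equalized odds at the same time. Understanding the implications of different metric choices and their trade-offs is therefore essential for developing effective bias testing strategies.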
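The tension between these criteria follows directly from Bayes' rule. The rule expresses a group's positive predictive value in terms of its base rate p_g and its error rates. The worked numbers below are illustrative and are not taken from any dataset.

```latex
\begin{aligned}
\mathrm{PPV}_g &= \frac{p_g\,\mathrm{TPR}_g}{p_g\,\mathrm{TPR}_g + (1 - p_g)\,\mathrm{FPR}_g} \\[4pt]
p_a = 0.5:\quad \mathrm{PPV}_a &= \frac{0.5 \times 0.8}{0.5 \times 0.8 + 0.5 \times 0.2} = \frac{0.40}{0.50} = 0.8 \\
p_b = 0.2:\quad \mathrm{PPV}_b &= \frac{0.2 \times 0.8}{0.2 \times 0.8 + 0.8 \times 0.2} = \frac{0.16}{0.32} = 0.5
\end{aligned}
```

Both groups share the same error rates (TPR = 0.8, FPR = 0.2), so equalized odds is satisfied. Yet a positive prediction is correct 80% of the time in group a and only 50% of the time in group b. Predictive rate parity fails in this way whenever base rates differ and the false positive rate is non-zero (with TPR > 0).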

The comparative analysis of key fairness metrics reveals significant disparities between privileged and disadvantaged groups across different measurement approaches. These gaps highlight the importance of comprehensive testing across multiple fairness dimensions to identify areas requiring targeted mitigation efforts.

Advanced Bias Testing Frameworks

Modern bias testing requires sophisticated frameworks that can handle the complexity of real-world machine learning systems and provide actionable insights for bias mitigation. Automated bias testing pipelines integrate multiple detection methodologies and statistical metrics to provide comprehensive assessments of model fairness across various dimensions and demographic intersections. These frameworks often incorporate visualization tools that help stakeholders understand bias patterns and their implications for different user groups.
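At its core, an automated pipeline is a registry of metric functions, each paired with a tolerance, that is evaluated on every candidate model. The sketch below shows that shape under stated assumptions: the two metrics, the tolerances, and the `run_bias_suite` name are all illustrative. Production frameworks add intersectional slicing, confidence intervals, and reporting on top.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Hashable, List, Sequence, Tuple

Metric = Callable[[Sequence[int], Sequence[int], Sequence[Hashable]], float]


@dataclass
class CheckResult:
    metric: str
    value: float
    tolerance: float
    passed: bool


def _spread(rates: Dict[Hashable, float]) -> float:
    return max(rates.values()) - min(rates.values())


def selection_rate_gap(y_true, y_pred, groups) -> float:
    rates = {}
    for g in set(groups):
        preds = [p for p, gg in zip(y_pred, groups) if gg == g]
        rates[g] = sum(preds) / len(preds)
    return _spread(rates)


def tpr_gap(y_true, y_pred, groups) -> float:
    rates = {}
    for g in set(groups):
        hits = [p for t, p, gg in zip(y_true, y_pred, groups) if gg == g and t == 1]
        rates[g] = sum(hits) / len(hits)  # assumes each group has actual positives
    return _spread(rates)


def run_bias_suite(checks: Dict[str, Tuple[Metric, float]], y_true, y_pred, groups) -> List[CheckResult]:
    """Evaluate every registered metric; a check passes when |value| <= its tolerance."""
    results = []
    for name, (metric, tol) in checks.items():
        value = metric(y_true, y_pred, groups)
        results.append(CheckResult(name, value, tol, abs(value) <= tol))
    return results
```

New metrics can be added to the `checks` dictionary without changing the runner, which keeps the pipeline easy to extend as fairness requirements evolve.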

Adversarial bias testing trains an auxiliary model, the adversary, to predict a protected attribute from the outputs of the model under test. When error-rate criteria such as equalized odds are the concern, the adversary sees the outputs together with the true label. If the adversary performs better than chance, the outputs carry group information that conventional per-group statistics may miss. This includes subtle dependencies that emerge from complex feature interactions or nonlinear model behavior. Because the adversary can be made as expressive as needed, its capacity can be increased as the models under test become more sophisticated.
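A deliberately small adversary illustrates the idea. In the sketch below, a logistic regression trained by plain gradient descent tries to predict group membership from the model's score and the true label. Its AUC is then measured on held-out data. The feature set, learning rate, and epoch count are arbitrary assumptions, and a more capable adversary, such as a small neural network, would be a natural replacement.

```python
import math
import random
from typing import List, Sequence


def _auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Probability that a random label-1 item outranks a random label-0 item (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def _features(score: float, label: int) -> List[float]:
    # bias term, model score, true label, and their interaction
    return [1.0, score, float(label), score * label]


def adversary_auc(scores, labels, in_group, epochs: int = 200, lr: float = 0.5, seed: int = 0) -> float:
    """Fit a logistic-regression adversary that predicts group membership (in_group: 0/1)
    from (model score, true label) on half the data; return its AUC on the other half.
    An AUC near 0.5 means the adversary found little group information beyond the label."""
    idx = list(range(len(scores)))
    random.Random(seed).shuffle(idx)
    train, test = idx[: len(idx) // 2], idx[len(idx) // 2:]
    w = [0.0] * 4
    for _ in range(epochs):  # full-batch gradient descent on the log-loss
        grad = [0.0] * 4
        for i in train:
            x = _features(scores[i], labels[i])
            prob = 1.0 / (1.0 + math.exp(-sum(wj * xj for wj, xj in zip(w, x))))
            for j in range(4):
                grad[j] += (prob - in_group[i]) * x[j]
        w = [wj - lr * gj / len(train) for wj, gj in zip(w, grad)]
    logits = [sum(wj * xj for wj, xj in zip(w, _features(scores[i], labels[i]))) for i in test]
    return _auc(logits, [in_group[i] for i in test])
```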

Simulation-based testing creates synthetic scenarios that explore edge cases and potential bias manifestations under different conditions and data distributions. This approach enables proactive identification of fairness issues before they impact real users and provides insights into how bias might emerge under various deployment scenarios. Simulation testing is particularly valuable for understanding the robustness of fairness interventions and their effectiveness across different contexts and populations.
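A toy simulation shows how a single scenario parameter can be swept to see when a disparity appears. The setup below is hypothetical. Both groups have identical true qualifications, but one group's scores are recorded with a systematic offset, a form of measurement bias. All distributions and parameters are arbitrary assumptions.

```python
import random
from typing import Dict


def simulate_selection_rates(shift: float, n: int = 10_000, threshold: float = 0.0,
                             seed: int = 0) -> Dict[str, float]:
    """Both groups draw true qualification from the same N(0, 1); group 'b' is observed
    with a systematic measurement offset `shift` plus the same N(0, 0.5^2) noise as group 'a'.
    A single threshold on the observed score decides selection."""
    rng = random.Random(seed)
    selected = {"a": 0, "b": 0}
    totals = {"a": 0, "b": 0}
    for i in range(n):
        group = "a" if i % 2 == 0 else "b"
        qualification = rng.gauss(0.0, 1.0)
        observed = qualification + (shift if group == "b" else 0.0) + rng.gauss(0.0, 0.5)
        totals[group] += 1
        selected[group] += observed > threshold
    return {g: selected[g] / totals[g] for g in totals}


for shift in (0.0, -0.25, -0.5):
    rates = simulate_selection_rates(shift)
    print(f"shift={shift:+.2f}  a={rates['a']:.3f}  b={rates['b']:.3f}")
```

Equally qualified groups receive different selection rates once the offset grows. Because the disparity appears in simulation, it can be caught before deployment.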

Intersectionality and Multi-Dimensional Bias Analysis

Traditional bias testing often focuses on single demographic characteristics, but real-world discrimination frequently occurs at the intersection of multiple protected attributes. Intersectionality analysis recognizes that individuals with multiple minority characteristics may experience unique forms of bias that are not captured by examining each characteristic independently. This multi-dimensional approach to bias detection requires sophisticated analytical techniques that can identify compound discrimination effects.

The complexity of intersectionality analysis grows exponentially with the number of protected characteristics under consideration. The number of subgroups is the product of each attribute's category counts, so five binary attributes already define 32 intersections. This forces a careful balance between comprehensive coverage and statistical power. Sparse data problems emerge when examining highly specific demographic intersections. These call for approaches such as hierarchical modeling and transfer learning, which can provide meaningful insights even when data for certain subgroups is limited.
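A lightweight first step is to enumerate the observed intersections, flag the sparse ones, and shrink their rates toward the overall rate. The sketch below uses simple Beta-prior (empirical-Bayes style) shrinkage as a stand-in for full hierarchical modeling, which it does not replace. The defaults `min_count=30` and `prior_strength=20` are illustrative assumptions. Only combinations present in the data appear in the output.

```python
from collections import defaultdict
from typing import Any, Dict, List, Sequence, Tuple


def intersectional_rates(records: List[Dict[str, Any]], attrs: Sequence[str],
                         min_count: int = 30, prior_strength: float = 20.0
                         ) -> Dict[Tuple[Any, ...], Tuple[int, float, float, bool]]:
    """Positive-prediction rate for every observed combination of the attributes in `attrs`.
    Each record holds those attributes plus a 0/1 'pred'. Returns, per subgroup:
    (count, raw rate, shrunken rate, sparse flag)."""
    overall = sum(r["pred"] for r in records) / len(records)
    cells = defaultdict(lambda: [0, 0])  # subgroup -> [positives, count]
    for r in records:
        key = tuple(r[a] for a in attrs)
        cells[key][0] += r["pred"]
        cells[key][1] += 1
    out = {}
    for key, (pos, n) in cells.items():
        # Add `prior_strength` pseudo-observations at the overall rate: small subgroups
        # are pulled toward it, large ones barely move.
        shrunk = (pos + prior_strength * overall) / (n + prior_strength)
        out[key] = (n, pos / n, shrunk, n < min_count)
    return out
```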

The field continues to evolve rapidly as researchers develop new methodologies for understanding and addressing the complex nature of multi-dimensional discrimination in algorithmic systems.

Practical implementation of intersectionality analysis requires careful consideration of privacy implications and the potential for creating additional surveillance mechanisms that could further marginalize vulnerable populations. Balancing comprehensive bias detection with privacy protection represents an ongoing challenge that requires collaboration between technical teams, ethicists, and affected communities to develop appropriate approaches.

Bias Mitigation Strategies and Implementation

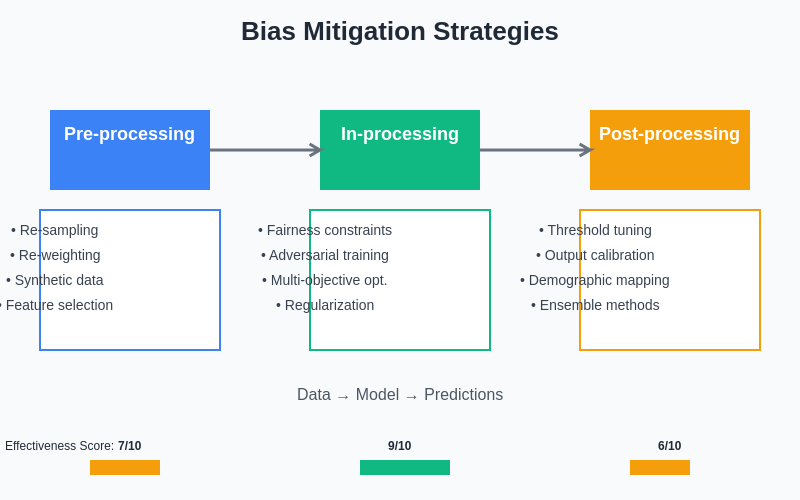

Once bias has been detected and characterized, implementing effective mitigation strategies requires careful consideration of trade-offs between fairness, accuracy, and other performance metrics. Pre-processing approaches address bias at the data level through techniques such as re-sampling, re-weighting, and synthetic data generation that aim to create more balanced and representative training datasets. These methods can be particularly effective for addressing representation bias and historical discrimination embedded in training data.
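Re-weighting can be illustrated with the reweighing scheme described by Kamiran and Calders. Each (group, label) combination gets the weight P(g)·P(y)/P(g, y), so that group and label become statistically independent in the weighted training set. The function below is a standalone sketch, not a particular library's implementation.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def reweighing_weights(groups: Sequence[Hashable], labels: Sequence[int]) -> List[float]:
    """Instance weights w(g, y) = P(g) * P(y) / P(g, y). Under these weights, group membership
    and label are statistically independent in the training data."""
    n = len(labels)
    p_group = Counter(groups)
    p_label = Counter(labels)
    p_joint = Counter(zip(groups, labels))
    return [(p_group[g] / n) * (p_label[y] / n) / (p_joint[(g, y)] / n)
            for g, y in zip(groups, labels)]
```

Many scikit-learn estimators accept such weights through the `sample_weight` argument of `fit`. This lets the mitigation be applied without changing the learning algorithm itself.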

In-processing mitigation techniques modify the model training process itself to incorporate fairness constraints directly into the optimization objective. Fairness-aware machine learning algorithms balance predictive accuracy with fairness metrics through multi-objective optimization approaches that seek Pareto-optimal solutions. These methods often provide more principled approaches to bias mitigation but may require more sophisticated implementation and tuning procedures.

Post-processing interventions adjust model outputs to improve fairness metrics while preserving as much predictive performance as possible. Threshold optimization techniques adjust decision boundaries for different demographic groups to achieve desired fairness outcomes, while output calibration methods modify predicted probabilities to ensure consistent meaning across all groups. These approaches offer flexibility and can be applied to existing trained models without requiring complete retraining.
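Threshold optimization can be as simple as choosing one score cutoff per group so that every group reaches the same true positive rate. That target corresponds to equal opportunity. Equalizing both error rates, as equalized-odds post-processing does, generally also requires randomizing between thresholds. The names below are hypothetical. Group-specific thresholds also require the protected attribute at decision time, which some legal regimes may restrict.

```python
import math
from typing import Dict, Hashable, Sequence


def threshold_for_tpr(scores: Sequence[float], labels: Sequence[int], target_tpr: float) -> float:
    """Highest threshold t such that at least `target_tpr` of actual positives have score >= t."""
    pos = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    # Number of positives that must be selected; the epsilon guards against float error.
    k = max(1, math.ceil(target_tpr * len(pos) - 1e-9))
    return pos[k - 1]


def equal_opportunity_thresholds(scores, labels, groups,
                                 target_tpr: float = 0.8) -> Dict[Hashable, float]:
    """One threshold per group so that each group's TPR reaches (at least) the same target."""
    thresholds = {}
    for g in set(groups):
        s = [sc for sc, gg in zip(scores, groups) if gg == g]
        y = [lab for lab, gg in zip(labels, groups) if gg == g]
        thresholds[g] = threshold_for_tpr(s, y, target_tpr)
    return thresholds
```

Because only the cutoffs change, this can be applied to an already trained model's scores, as the paragraph above notes.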

The three-stage approach to bias mitigation provides multiple intervention points throughout the machine learning pipeline. Pre-processing methods address data-level issues, in-processing techniques incorporate fairness directly into model training, and post-processing approaches adjust outputs to achieve desired fairness properties while maintaining predictive performance.

Continuous Monitoring and Bias Drift Detection

Bias in deployed machine learning systems is not a static phenomenon but rather an evolving challenge that requires continuous monitoring and adaptive responses. Data distribution shifts, changing societal conditions, and evolving user behaviors can all contribute to the emergence or evolution of bias over time. Establishing robust monitoring systems that can detect these changes and alert stakeholders to potential fairness issues is essential for maintaining equitable AI systems in production environments.

Automated bias monitoring systems continuously evaluate deployed models against established fairness metrics and trigger alerts when those metrics drift beyond acceptable thresholds. These systems must balance sensitivity to genuine fairness issues with robustness against false alarms that could lead to unnecessary interventions. The design of effective monitoring systems requires careful consideration of statistical significance, practical significance, and the potential consequences of both false positive and false negative alerts.
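The balance between statistical and practical significance can be encoded directly in the alert rule. The sketch below fires only when a window's selection-rate gap is both large in absolute terms and unlikely to be noise under a two-proportion z-test. The defaults `max_gap=0.05` and `z_crit=3.0` are assumptions. The stricter-than-usual z cutoff is meant to limit false alarms when many windows and groups are checked repeatedly.

```python
import math
from typing import Dict


def two_proportion_z(pos_a: int, n_a: int, pos_b: int, n_b: int) -> float:
    """z statistic for the difference between two proportions, using the pooled standard error."""
    pooled = (pos_a + pos_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (pos_a / n_a - pos_b / n_b) / se if se > 0 else 0.0


def check_window(pos_a: int, n_a: int, pos_b: int, n_b: int,
                 max_gap: float = 0.05, z_crit: float = 3.0) -> Dict[str, object]:
    """Alert only when the selection-rate gap in a monitoring window is both practically
    large (|gap| > max_gap) and statistically distinguishable from noise (|z| > z_crit)."""
    gap = pos_a / n_a - pos_b / n_b
    z = two_proportion_z(pos_a, n_a, pos_b, n_b)
    return {"gap": gap, "z": z, "alert": abs(gap) > max_gap and abs(z) > z_crit}
```

A tiny but statistically certain gap in a very large window does not trigger an alert. Neither does a large gap in a handful of observations. Only a gap that is both sizeable and well supported does.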

Feedback loops between monitoring systems and model update procedures enable automated responses to detected bias issues through techniques such as online learning, model retraining, and dynamic threshold adjustment. However, these automated responses must be carefully designed to avoid introducing new forms of bias or creating unstable system behaviors that could harm user experiences or perpetuate existing inequalities.
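One guard against unstable automated responses is to rate-limit them. The hypothetical update rule below nudges one group's score threshold toward a target selection rate in proportion to the error. It caps how far the threshold can move per update and clips it to a fixed range. The gain, step cap, and bounds are illustrative assumptions, and each update would still warrant logging and human review.

```python
def adjust_threshold(current: float, observed_rate: float, target_rate: float,
                     gain: float = 0.5, max_step: float = 0.02,
                     lower: float = 0.05, upper: float = 0.95) -> float:
    """One feedback update of a score threshold for a single group.
    Selecting more than the target raises the threshold; selecting fewer lowers it.
    The change per update is capped at max_step and the result is clipped to [lower, upper],
    so a noisy window cannot swing the threshold sharply or push it to an extreme."""
    proposed = current + gain * (observed_rate - target_rate)
    step = max(-max_step, min(max_step, proposed - current))
    return max(lower, min(upper, current + step))
```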

Regulatory Compliance and Legal Considerations

The regulatory landscape surrounding AI bias and fairness continues to evolve rapidly, with new legislation and guidelines emerging at local, national, and international levels. Understanding and complying with relevant anti-discrimination laws, privacy regulations, and emerging AI-specific legislation requires ongoing attention and adaptation of bias testing and mitigation practices. Legal compliance represents not just a regulatory requirement but also a fundamental aspect of responsible AI development that protects both users and organizations.

Documentation and audit trails for bias testing and mitigation efforts play crucial roles in demonstrating compliance with regulatory requirements and supporting legal defenses against discrimination claims. Comprehensive records of bias detection methodologies, mitigation strategies, and monitoring results provide evidence of due diligence and good faith efforts to ensure fairness in AI systems. These documentation practices must balance transparency requirements with competitive considerations and privacy protections.
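A simple append-only log of test runs goes a long way toward an audit trail. The sketch below serializes one run as a JSON line. The record contains a UTC timestamp, the model version, a SHA-256 digest identifying the evaluation data, the method used, and the resulting metrics. The field names are illustrative and do not follow any regulatory schema.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict


def audit_line(model_version: str, dataset: bytes, metrics: Dict[str, float], method: str) -> str:
    """Serialize one bias-test run as a JSON line suitable for an append-only log.
    The dataset is referenced by its SHA-256 digest rather than stored, so the record can be
    shared without exposing the underlying (possibly sensitive) data."""
    record: Dict[str, Any] = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "dataset_sha256": hashlib.sha256(dataset).hexdigest(),
        "method": method,
        "metrics": metrics,
    }
    return json.dumps(record, sort_keys=True) + "\n"
```

Appending each returned line to a file opened in append mode gives a chronological, diff-friendly record per model version. The hash lets an auditor later confirm which data a result was computed on.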

The intersection of technical fairness measures and legal fairness standards often creates complex challenges that require collaboration between technical teams, legal experts, and domain specialists. Legal definitions of discrimination may not align perfectly with technical fairness metrics, necessitating careful translation between legal requirements and implementation strategies that can be effectively measured and validated through technical means.
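US employment guidance offers an example of translating a legal standard into a measurable quantity. The federal Uniform Guidelines on Employee Selection Procedures generally treat a group's selection rate below four-fifths of the highest group's rate as evidence of adverse impact. The sketch below computes those ratios. The function names are illustrative. The four-fifths rule is a screening heuristic rather than a legal test of discrimination, so flagged results still need legal review.

```python
from typing import Dict, Hashable


def impact_ratios(selected: Dict[Hashable, int], applicants: Dict[Hashable, int]) -> Dict[Hashable, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = {g: selected[g] / applicants[g] for g in applicants}
    best = max(rates.values())
    return {g: r / best for g, r in rates.items()}


def four_fifths_flags(selected, applicants, cutoff: float = 0.8) -> Dict[Hashable, bool]:
    """True for groups whose impact ratio falls below the cutoff."""
    return {g: ratio < cutoff for g, ratio in impact_ratios(selected, applicants).items()}
```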

Stakeholder Engagement and Community Input

Effective bias testing and mitigation requires meaningful engagement with affected communities and stakeholders throughout the development and deployment process. Technical teams alone cannot fully understand the implications of bias or define appropriate fairness standards without input from those who will be impacted by AI system decisions. Community engagement helps identify relevant fairness concerns, validate technical approaches, and ensure that bias mitigation efforts address real-world needs rather than theoretical constructs.

Participatory design approaches involve affected communities in the definition of fairness metrics and the evaluation of mitigation strategies, recognizing that fairness is fundamentally a social and political concept rather than purely a technical challenge. These collaborative approaches can reveal blind spots in technical analysis and provide valuable insights into the practical implications of different bias testing and mitigation approaches.

Transparency and explainability of bias testing results enable stakeholders to understand how fairness assessments are conducted and what the results mean for different user groups. Clear communication of both the capabilities and limitations of bias detection and mitigation approaches helps build appropriate trust and enables informed decision-making about AI system deployment and use.

Emerging Technologies and Future Directions

The field of AI bias detection and mitigation continues to evolve rapidly with the development of new methodologies, tools, and theoretical frameworks. Causal inference approaches offer promising avenues for understanding the root causes of bias and developing more targeted mitigation strategies that address underlying causal mechanisms rather than just statistical patterns. These methods provide deeper insights into how bias emerges and propagates through AI systems.

Federated learning and privacy-preserving machine learning techniques present both opportunities and challenges for bias testing and mitigation. While these approaches can enable bias analysis across distributed datasets without compromising privacy, they also introduce new complexities in terms of statistical power, coordination, and validation of fairness interventions across different participants and data sources.
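Bias can be analyzed across participants without pooling raw records: each site shares only per-group counts, and a coordinator sums them before computing rates. The sketch below assumes a TPR comparison and honest participants, and the function names are hypothetical. Sharing counts alone is not a formal privacy guarantee, because small cells can still be revealing. Real deployments would add secure aggregation or differential privacy.

```python
from collections import Counter
from typing import Dict, Hashable, Iterable, Sequence


def local_counts(y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]) -> Counter:
    """Runs at each participant: per-group counts of actual positives and true positives."""
    counts: Counter = Counter()
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            counts[(g, "pos")] += 1
            counts[(g, "tp")] += p
    return counts


def pooled_tpr(site_counts: Iterable[Counter]) -> Dict[Hashable, float]:
    """Runs at the coordinator: sum the sites' counts, then compute each group's TPR."""
    total: Counter = Counter()
    for c in site_counts:
        total.update(c)  # Counter.update adds counts rather than replacing them
    groups = {g for g, kind in total if kind == "pos"}
    return {g: total[(g, "tp")] / total[(g, "pos")] for g in groups}
```

Pooling also bears on statistical power. A subgroup with only a few positives at each site may yield a usable TPR estimate only after counts are combined.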

The integration of human feedback and reinforcement learning from human preferences offers new paradigms for bias mitigation that can adapt to evolving fairness standards and community values. These approaches enable continuous refinement of fairness objectives based on real-world feedback and changing societal expectations, but they also require careful design to avoid encoding existing biases present in human feedback mechanisms.

Implementation Best Practices and Organizational Considerations

Successful implementation of comprehensive bias testing requires organizational commitment and systematic integration of fairness considerations throughout the machine learning development lifecycle. Establishing clear governance structures, responsibility assignments, and accountability mechanisms ensures that bias testing receives appropriate attention and resources throughout project development and deployment phases.

Training and education programs for technical teams, product managers, and organizational leadership help build the knowledge and skills necessary for effective bias detection and mitigation. Understanding both the technical aspects of bias testing and the broader social and ethical implications of AI fairness enables more informed decision-making and more effective implementation of fairness interventions.

Cross-functional collaboration between technical teams, domain experts, legal counsel, and community representatives creates the diverse perspectives necessary for comprehensive bias assessment and effective mitigation strategy development. These collaborative approaches help ensure that technical solutions align with legal requirements, ethical principles, and community needs while maintaining practical feasibility and implementation effectiveness.

The future of AI bias testing and mitigation lies in the continued development of more sophisticated technical approaches combined with deeper integration of social and ethical considerations throughout the AI development process. As our understanding of fairness in AI continues to evolve, so too must our approaches to detecting and addressing bias in machine learning systems. The ultimate goal is not perfect fairness, which may be theoretically impossible, but rather continuous improvement toward more equitable and just AI systems that serve all members of society fairly and effectively.

Disclaimer

This article is for informational and educational purposes only and does not constitute legal, professional, or technical advice. The views expressed are based on current understanding of AI bias detection and mitigation techniques. Readers should conduct their own research and consult with relevant experts when implementing bias testing and mitigation strategies. The effectiveness of different approaches may vary depending on specific use cases, datasets, and regulatory requirements. Organizations should seek appropriate legal and technical guidance to ensure compliance with applicable laws and regulations.