The rapid growth of machine learning applications and increasingly complex data processing requirements have created a need for programming languages that can effectively harness parallel computing architectures. Chapel, developed by Cray Inc. and now stewarded by Hewlett Packard Enterprise, aims to bridge the gap between high-level programming expressiveness and low-level parallel computing performance, which makes it a strong candidate for large-scale machine learning applications that demand both computational efficiency and programming productivity.

The convergence of advanced programming languages with modern hardware architectures represents a fundamental shift in how we approach computational challenges, particularly in artificial intelligence, where processing massive datasets efficiently can determine the success or failure of entire projects.

Understanding Chapel’s Revolutionary Approach

Chapel represents a paradigm shift in parallel programming by providing a unified language that can express both task parallelism and data parallelism with remarkable elegance and clarity. Unlike traditional approaches that require developers to manage complex threading models, memory hierarchies, and communication protocols manually, Chapel abstracts these low-level details while maintaining fine-grained control over performance-critical aspects of parallel execution. This unique combination of high-level expressiveness and low-level control makes Chapel particularly well-suited for machine learning applications where algorithmic complexity often intersects with computational intensity.
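As a minimal sketch of the two styles, assuming Chapel 2.x syntax, the program below uses a `forall` loop for data parallelism and a `cobegin` block for task parallelism. The variable names are illustrative only.

```chapel
config const n = 8;

// Data parallelism: iterations of a forall loop may run concurrently.
var squares: [1..n] int;
forall i in 1..n do
  squares[i] = i * i;

// Task parallelism: cobegin starts one task per statement and waits
// for all of them. Scalars must be passed by ref to be modified.
var total, largest: int;
cobegin with (ref total, ref largest) {
  total = + reduce squares;
  largest = max reduce squares;
}

writeln("sum = ", total, ", max = ", largest);
```

Both constructs live in the same language and compose freely, which is the point the paragraph above makes.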

The language’s design philosophy centers around the concept of global-view programming, where developers can reason about computations across distributed memory systems as if they were working with a single, unified address space. This abstraction significantly reduces the cognitive burden associated with parallel programming while enabling Chapel’s sophisticated compiler and runtime system to generate highly optimized code for diverse hardware architectures ranging from multicore processors to large-scale supercomputing clusters.
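The global view can be illustrated with a short sketch, assuming Chapel 2.x and the standard `BlockDist` module. The array is declared once over a distributed domain and then updated with a single loop, with no explicit messages.

```chapel
use BlockDist;

config const n = 16;

// A domain (index set) whose indices are block-distributed over all locales.
const D = blockDist.createDomain({1..n});
var A: [D] int;

// One loop over the whole array. Each iteration runs on the locale that
// owns index i, so here.id records where that element is stored.
forall i in D do
  A[i] = here.id;

writeln(A);
```

Run on a single locale, this prints all zeros. Launched on several locales (for example with `-nl 4` in a multi-locale build), the same source prints the owning locale of each block.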

Chapel’s type system and memory management model have been specifically designed to support the complex data structures and access patterns commonly encountered in machine learning workloads. The language provides native support for multidimensional arrays, sparse data structures, and irregular computation patterns that are essential for implementing sophisticated ML algorithms efficiently across parallel computing environments.
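The following sketch, assuming Chapel 2.x, shows a dense two-dimensional array next to a sparse one declared over a sparse subdomain. The domain and array names are made up for the example.

```chapel
// A dense 2D domain and a matrix declared over it.
const Dense = {1..4, 1..4};
var M: [Dense] real;
M[2, 3] = 1.5;

// A sparse subdomain stores only the indices that are explicitly added.
var Nonzeros: sparse subdomain(Dense);
Nonzeros += (1, 1);
Nonzeros += (4, 2);

// An array over the sparse domain allocates storage for those indices only.
var S: [Nonzeros] real;
S[1, 1] = 3.0;
S[4, 2] = -2.0;

// Reducing the sparse array visits only the stored entries.
const sparseSum = + reduce S;
writeln("stored entries: ", Nonzeros.size, ", sum: ", sparseSum);
```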

Parallel Computing Foundations in Machine Learning

The intersection of parallel computing and machine learning represents one of the most computationally demanding areas in modern computer science, where the ability to process vast amounts of data quickly and efficiently can determine the feasibility of entire research directions and commercial applications. Traditional serial programming approaches become increasingly inadequate as datasets grow from gigabytes to terabytes and beyond, while the complexity of modern neural network architectures demands computational resources that can only be satisfied through effective parallelization strategies.

Chapel addresses these challenges by providing language-level constructs that naturally express the parallel patterns commonly found in machine learning algorithms. Whether implementing gradient descent optimization across distributed parameter spaces, performing parallel matrix operations for neural network forward propagation, or orchestrating complex data preprocessing pipelines that must scale to handle massive datasets, Chapel’s programming model aligns naturally with the computational requirements of modern ML systems.
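To make the gradient descent case concrete, here is a small sketch, assuming Chapel 2.x, that fits a one-parameter linear model. The synthetic data, learning rate, and step count are assumptions chosen for the example, not values from any benchmark.

```chapel
config const n = 1000,
             lr = 0.1,
             steps = 200;

// Synthetic data on the line y = 3x, with x in (0, 1].
const D = {1..n};
var x, y: [D] real;
forall i in D {
  x[i] = (i: real) / n;
  y[i] = 3.0 * x[i];
}

var w = 0.0;
for 1..steps {
  // Gradient of the mean squared error with respect to w. The array
  // expression is promoted elementwise and summed by a parallel reduction.
  const grad = (+ reduce (2.0 * (w * x - y) * x)) / n;
  w -= lr * grad;
}

writeln("learned w = ", w);
```

The update loop is serial, while each gradient evaluation is parallel over the samples. Declaring `D` as a distributed domain would spread the same computation across nodes.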

The language’s support for hierarchical parallelism enables developers to express nested parallel constructs that can efficiently utilize the multi-level parallelism inherent in modern computing architectures. This capability is particularly valuable for machine learning applications that often exhibit parallelism at multiple granularities, from fine-grained vector operations within individual computations to coarse-grained parallelism across training batches or ensemble model components.
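A sketch of two-level parallelism, assuming Chapel 2.x: the outer `coforall` creates one task per locale (compute node), and the inner `forall` spreads work across that node's cores. The work split and names are illustrative.

```chapel
config const perLocale = 1000;

var partial: [0..#numLocales] int;

// Outer level: one task per locale, each executing on its own node.
coforall loc in Locales with (ref partial) do on loc {
  const lo = loc.id * perLocale + 1,
        hi = (loc.id + 1) * perLocale;

  // Inner level: a parallel loop over this locale's share of the indices.
  var localSum = 0;
  forall i in lo..hi with (+ reduce localSum) do
    localSum += i;

  partial[loc.id] = localSum;
}

const total = + reduce partial;
writeln("total = ", total);
```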

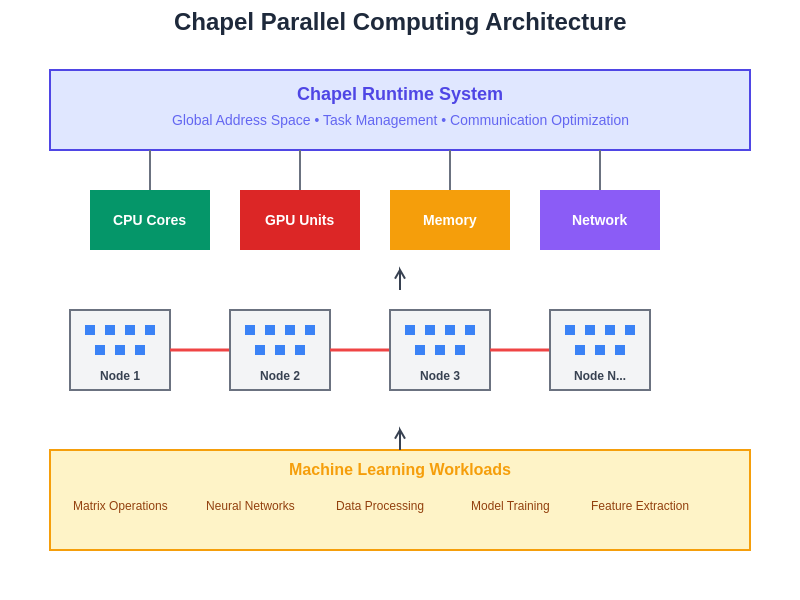

The architectural foundation of Chapel’s parallel computing model demonstrates how the language seamlessly bridges high-level programming abstractions with underlying hardware resources, enabling efficient execution across diverse computing environments from multicore processors to distributed supercomputing clusters.

Chapel’s Syntax and Expressiveness for ML Workloads

One of Chapel’s most compelling features lies in its ability to express complex parallel computations with syntax that closely resembles mathematical notation, making it particularly accessible to researchers and practitioners in the machine learning community who may not have extensive backgrounds in systems programming or parallel computing optimization. The language’s array-oriented programming model provides intuitive constructs for expressing the tensor operations, linear algebra computations, and statistical analyses that form the foundation of most machine learning algorithms.

Chapel’s support for ranges, domains, and distributed arrays enables developers to work with multidimensional data structures that can automatically scale across distributed memory systems without requiring explicit management of data distribution, communication, or synchronization. This abstraction is particularly powerful for machine learning applications that frequently involve operations on high-dimensional tensors where the underlying data distribution strategy can significantly impact performance but should not complicate the algorithmic expression of the computation.
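The relationship between ranges, domains, and arrays can be sketched as follows, assuming Chapel 2.x. The example uses a local domain for brevity; the names are illustrative.

```chapel
// Ranges describe index sequences; domains are first-class index sets.
const rows = 1..4, cols = 1..6;
const Grid = {rows, cols};
const Interior = Grid.expand(-1);   // shrink by one in each direction

var T: [Grid] real = 1.0;

// Sliced and whole-array operations are implicitly parallel.
T[Interior] = 0.0;
T *= 2.0;

const borderSum = + reduce T;
writeln("interior = ", Interior, ", border sum = ", borderSum);
```

If `Grid` were declared as a distributed domain instead, the slicing and whole-array statements would stay the same, which is the separation of algorithm and data layout described above.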

The language’s iterator mechanism provides a powerful abstraction for expressing complex traversal patterns over distributed data structures, enabling the implementation of sophisticated sampling strategies, data augmentation pipelines, and feature engineering workflows that can efficiently utilize parallel computing resources while maintaining clear and maintainable code. This capability is essential for machine learning applications where data preprocessing and feature extraction often represent the most computationally intensive phases of the overall workflow.
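As a small illustration, assuming Chapel 2.x, the hypothetical iterator `miniBatches` below yields index ranges for consecutive mini-batches. It is a serial iterator; making it usable in a `forall` loop would additionally require parallel (standalone or leader/follower) overloads, which are not shown.

```chapel
// Yields consecutive index ranges of at most batchSize elements each.
iter miniBatches(n: int, batchSize: int) {
  var start = 1;
  while start <= n {
    const stop = min(start + batchSize - 1, n);
    yield start..stop;
    start = stop + 1;
  }
}

var numBatches = 0,
    covered = 0;
for batch in miniBatches(10, 4) {
  numBatches += 1;
  covered += batch.size;
  writeln("batch ", numBatches, ": ", batch);
}
```

The traversal logic lives in one place, so the loop body that consumes each batch stays free of index arithmetic.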

Chapel’s support for generic programming and polymorphic functions enables the development of reusable algorithm implementations that can work efficiently across different data types, precision levels, and distributed computing configurations. This flexibility is crucial for machine learning research where the same algorithmic approach may need to be applied to diverse datasets with varying characteristics and computational requirements.
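A generic routine might look like the sketch below, assuming Chapel 2.x. The function name `arrayMean` is hypothetical; the `?D` and `?t` queries let one definition serve arrays of any domain and element type.

```chapel
// ?D captures the array's domain and ?t its element type; the compiler
// instantiates a separate version for each combination that is used.
proc arrayMean(xs: [?D] ?t): real {
  return (+ reduce xs): real / D.size;
}

var ints: [1..4] int = [1, 2, 3, 4];
var singles: [1..4] real(32) = 2.5: real(32);

writeln(arrayMean(ints));     // instantiated for int elements
writeln(arrayMean(singles));  // instantiated for 32-bit reals
```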

Scalability and Performance Characteristics

The scalability characteristics of Chapel make it exceptionally well-suited for the demanding computational requirements of large-scale machine learning applications that must process massive datasets and train complex models across distributed computing environments. Chapel’s compiler and runtime system have been specifically designed to generate efficient code for a wide range of parallel computing architectures, from shared-memory multicore systems to distributed-memory supercomputing clusters with thousands of processing nodes.

Chapel’s global-view programming model enables the development of applications that can scale seamlessly from small-scale prototyping environments to large-scale production deployments without requiring fundamental changes to the algorithmic implementation. This scalability characteristic is particularly valuable for machine learning research and development workflows where algorithms are typically developed and validated on smaller datasets before being applied to production-scale problems that may require orders of magnitude more computational resources.

The language’s domain maps and runtime system handle the details of data distribution, remote data caching, and communication that are essential for achieving high performance in distributed computing environments. This automation lets machine learning practitioners focus on algorithmic innovation and model development rather than low-level performance optimization, while still aiming for performance that is competitive with hand-optimized implementations in lower-level languages.

Chapel’s support for adaptive load balancing and dynamic work distribution enables applications to maintain high efficiency even when processing datasets with irregular structure or when computational requirements vary significantly across different portions of the workload. This capability is particularly important for machine learning applications that often exhibit highly variable computational intensity depending on data characteristics and algorithmic phases.
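One way to express dynamic work distribution is the standard `DynamicIters` module. The sketch below, assuming Chapel 2.x, uses its `dynamic` iterator on a deliberately uneven workload. Note that these iterators balance work among tasks within a single locale; the chunk size and workload are arbitrary choices for the example.

```chapel
use DynamicIters;

config const n = 64;
var work: [1..n] int;

// dynamic() hands out chunks of indices to tasks as they become idle,
// which helps when iterations have very different costs.
forall i in dynamic(1..n, chunkSize=4) {
  var acc = 0;
  for j in 1..i*i do        // cost grows with i: an irregular workload
    acc += j % 7;
  work[i] = acc;
}

writeln("last result: ", work[n]);
```

The same module also provides `guided` and `adaptive` iterators with different chunking strategies.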

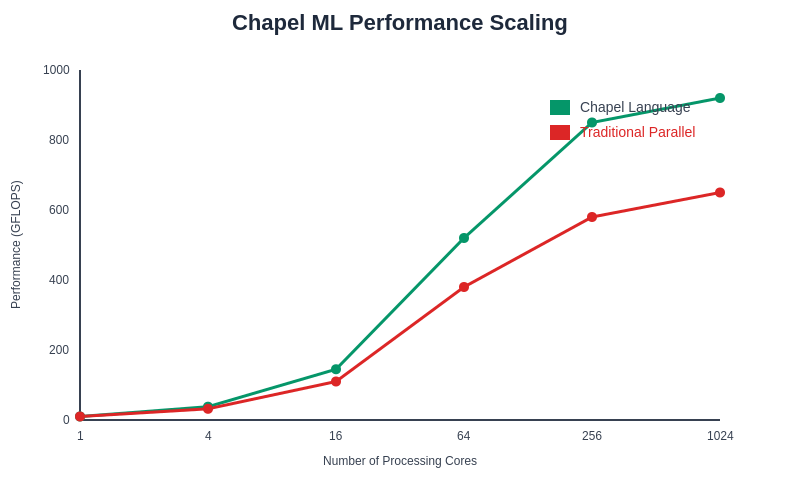

The performance scaling characteristics of Chapel demonstrate its ability to maintain near-linear performance improvements as computational resources increase, making it particularly well-suited for large-scale machine learning applications that require substantial parallel processing capabilities.

Integration with Machine Learning Frameworks

The integration of Chapel with existing machine learning frameworks and libraries represents a critical factor in its practical adoption for real-world ML applications. Chapel’s foreign function interface enables seamless integration with high-performance libraries written in C, C++, and Fortran, allowing developers to leverage existing optimized implementations of fundamental computational kernels while benefiting from Chapel’s high-level parallel programming abstractions for application-level coordination and control flow.
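Calling a C routine from Chapel can be sketched as follows, assuming Chapel 2.x and a toolchain where the C math library header is available. The Chapel-side name `c_hypot` is an arbitrary choice made to avoid clashing with any existing Chapel symbol.

```chapel
use CTypes;

// Make the C declarations in math.h visible to the Chapel compiler.
require "math.h";

// Bind the C function hypot() under the Chapel name c_hypot.
extern "hypot" proc c_hypot(x: c_double, y: c_double): c_double;

const h = c_hypot(3.0, 4.0);
writeln("hypot(3, 4) = ", h);
```

The same pattern applies to kernels from optimized C libraries: declare the prototype with `extern`, name the header and library with `require`, and call the routine from ordinary Chapel code.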

Interoperability with popular Python-based machine learning frameworks such as TensorFlow, PyTorch, and scikit-learn is indirect rather than built in. Chapel code can be compiled into a library that is callable from C or Python, and projects such as Arkouda expose a Chapel back end through a NumPy-like Python interface. This enables hybrid development approaches where performance-critical components are implemented in Chapel while the surrounding workflow stays in existing ML tool chains, which lowers the barrier to adoption and lets organizations incorporate Chapel incrementally without wholesale technology migrations.

Chapel programs can read and write widely used scientific data formats, for example through the HDF5 and NetCDF package modules, so they can exchange data with frameworks such as Apache Spark and Hadoop through shared storage even though Chapel does not plug into those frameworks directly. Chapel programs can also be packaged in containers, which allows Chapel-based ML components to coexist with other stages of a complex data processing pipeline.

Chapel’s native GPU support lets machine learning applications use modern graphics processing units while keeping the high-level abstractions that make the language attractive for complex algorithm development. In recent releases, the compiler can turn eligible forall and foreach loops into GPU kernels for NVIDIA and AMD devices, and GPUs appear in the program as sub-locales that code can target with on-clauses. This matters for modern machine learning workloads, where hardware acceleration often provides order-of-magnitude performance improvements for suitable computational kernels.
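A hedged sketch of GPU execution, assuming a recent Chapel 2.x release: GPUs are reached through the `here.gpus` array of sub-locales, and kernel generation requires a build configured for the GPU locale model. The fallback to the CPU when no GPU is present is an assumption added to keep the example portable.

```chapel
config const n = 1024;
var hostResult: [1..n] real;

// Use the first GPU sub-locale if one exists; otherwise stay on the CPU.
const target = if here.gpus.size > 0 then here.gpus[0] else here;

on target {
  // Arrays declared inside the on-block are allocated on the target device.
  var x: [1..n] real;

  // An eligible order-independent loop can be compiled into a GPU kernel.
  foreach i in 1..n do
    x[i] = 2.0 * i;

  // Copy the result back to the array that lives on the host.
  hostResult = x;
}

writeln("last element: ", hostResult[n]);
```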

Real-World Applications and Case Studies

The practical application of Chapel in real-world machine learning scenarios has demonstrated its effectiveness across diverse domains ranging from scientific computing and data analytics to commercial machine learning applications that require high-performance parallel processing capabilities. Organizations working with large-scale genomic datasets have successfully employed Chapel to implement parallel algorithms for sequence analysis, phylogenetic reconstruction, and population genetics studies that would be computationally prohibitive using traditional serial programming approaches.

Financial services companies have leveraged Chapel’s parallel computing capabilities to implement high-frequency trading algorithms, risk assessment models, and fraud detection systems that must process massive streams of market data in real-time while maintaining strict latency and throughput requirements. The language’s ability to express complex mathematical computations clearly while automatically generating efficient parallel code has proven particularly valuable in these applications where algorithmic sophistication must be balanced with performance demands.

Climate modeling and environmental science applications have benefited from Chapel’s ability to handle the complex multidimensional datasets and irregular computational patterns that characterize atmospheric and oceanic simulations. These applications often require sophisticated parallel algorithms that can adapt to varying computational loads and data distributions, capabilities that align well with Chapel’s flexible programming model and runtime system optimizations.

Machine learning applications in computer vision and natural language processing have successfully utilized Chapel to implement parallel training algorithms for deep neural networks, distributed hyperparameter optimization procedures, and large-scale feature extraction pipelines that can process terabytes of multimedia data efficiently across cluster computing environments.

Comparison with Traditional Parallel Computing Approaches

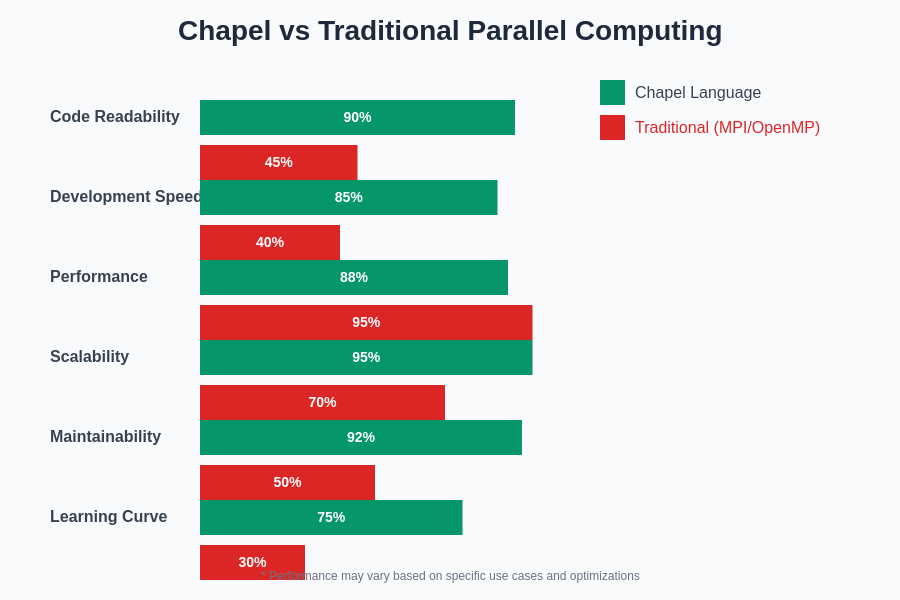

When compared to traditional parallel computing approaches such as MPI, OpenMP, and CUDA, Chapel provides significant advantages in terms of programming productivity, code maintainability, and scalability without sacrificing computational performance. Traditional approaches typically require developers to manage complex details of process coordination, memory hierarchy optimization, and communication protocol implementation, creating barriers to adoption and increasing the likelihood of subtle bugs that can be difficult to diagnose and resolve.

Chapel’s high-level programming abstractions eliminate much of this complexity while providing performance that is competitive with carefully optimized implementations using lower-level parallel programming models. The language’s sophisticated compiler and runtime system automatically generate efficient implementations of communication patterns, load balancing strategies, and memory management policies that would require extensive manual optimization in traditional parallel programming environments.

Debugging and performance analysis tooling for Chapel is less mature than the long-established ecosystems around MPI, OpenMP, and CUDA, but the global-view programming model offers a structural advantage: because a program is written as a single logical computation rather than as cooperating per-process fragments, tools can present a coherent view of distributed application state. Utilities such as chplvis and the CommDiagnostics module expose communication and tasking behavior that would otherwise have to be reconstructed from per-process traces.

Chapel’s support for incremental parallelization enables developers to begin with serial implementations and gradually introduce parallelism as performance requirements dictate, providing a development path that is much more accessible than traditional approaches that typically require parallel design considerations from the beginning of the development process.
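The incremental path can be sketched with a dot product, assuming Chapel 2.x. The first version is serial; the second changes only the loop keyword and declares the accumulator as a reduction.

```chapel
config const n = 1000;
var a, b: [1..n] real;
a = 1.5;
b = 2.0;

// Step 1: a serial loop that is easy to validate.
var serialDot = 0.0;
for i in 1..n do
  serialDot += a[i] * b[i];

// Step 2: the same computation, parallelized by switching to forall
// and marking the accumulator as a + reduction.
var parallelDot = 0.0;
forall i in 1..n with (+ reduce parallelDot) do
  parallelDot += a[i] * b[i];

writeln(serialDot, " ", parallelDot);
```

Keeping the serial version around as a reference makes it straightforward to check that each parallelization step preserves the result.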

Performance Optimization Strategies

Effective performance optimization in Chapel requires understanding both the language’s high-level abstractions and the underlying parallel computing principles that govern efficient execution across distributed computing environments. Chapel provides extensive profiling and performance analysis tools that enable developers to identify bottlenecks, understand communication patterns, and optimize data distribution strategies for specific application requirements and hardware configurations.
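Two standard modules cover the basics of measurement: `Time` for wall-clock timing and `CommDiagnostics` for counting communication events. The sketch below assumes Chapel 2.x; the stencil-like loop is only there to generate some remote accesses when run on more than one locale.

```chapel
use Time, CommDiagnostics, BlockDist;

config const n = 100_000;
const D = blockDist.createDomain({1..n});
var A, B: [D] real;

var timer: stopwatch;
startCommDiagnostics();
timer.start();

// Reading A[i+1] crosses a locale boundary at the edge of each block,
// which shows up as communication in the diagnostics.
forall i in D do
  B[i] = if i < n then A[i+1] else 0.0;

timer.stop();
stopCommDiagnostics();

writeln("elapsed: ", timer.elapsed(), " s");
printCommDiagnosticsTable();
```

On a single locale the table reports no communication; on several locales it lists the gets and puts each locale performed, which helps locate unexpected remote accesses.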

The language’s support for explicit locality control enables fine-grained optimization of data placement and computation distribution when default compiler optimizations are insufficient for achieving desired performance levels. This capability is particularly important for machine learning applications where memory access patterns and computational intensity can vary significantly across different algorithmic phases and dataset characteristics.
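Explicit locality control is expressed mainly through `on` clauses and locality queries. The sketch below, assuming Chapel 2.x, moves a computation to the owner of an element and then has each locale update only the indices it owns.

```chapel
use BlockDist;

config const n = 8;
var A = blockDist.createArray({1..n}, int);

// Run on whichever locale stores A[n], so that the write is local.
on A[n] do
  A[n] = here.id;

// One task per locale; each touches only the indices stored locally.
coforall loc in Locales with (ref A) do on loc {
  for i in A.localSubdomain() do
    A[i] += 1;
}

writeln(A);
```

Queries such as `A[i].locale` and `A.localSubdomain()` make data placement visible to the program, so placement-sensitive code can be written without leaving the global-view model.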

Chapel’s support for custom data distributions and specialized iterators enables the implementation of domain-specific optimizations that can significantly improve performance for particular classes of machine learning algorithms. These optimizations can often achieve performance improvements that would be difficult or impossible to realize using more general-purpose parallel programming approaches.
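Writing a fully custom distribution is beyond a short example, so the sketch below shows the simpler and more common step of swapping between two standard distributions, block and cyclic, assuming Chapel 2.x. Only the domain declarations differ; the loops are identical.

```chapel
use BlockDist, CyclicDist;

config const n = 8;
const Space = {1..n};

// Same index set, two different mappings of indices to locales.
const BlockD  = Space dmapped new blockDist(boundingBox=Space);
const CyclicD = Space dmapped new cyclicDist(startIdx=Space.low);

var blockOwner:  [BlockD]  int;
var cyclicOwner: [CyclicD] int;

forall i in BlockD  do blockOwner[i]  = here.id;
forall i in CyclicD do cyclicOwner[i] = here.id;

writeln("block:  ", blockOwner);
writeln("cyclic: ", cyclicOwner);
```

On two locales the block layout assigns contiguous halves to each locale, while the cyclic layout alternates owners index by index, which can balance load better when cost varies smoothly along the index space.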

The comparative analysis between Chapel and traditional parallel computing approaches reveals significant advantages in code readability, development speed, and maintainability while achieving competitive performance levels, making Chapel an attractive choice for modern machine learning development workflows.

The language’s integration with performance monitoring and analysis tools enables continuous optimization workflows where performance characteristics can be monitored and analyzed across different deployment environments and dataset scales, enabling data-driven optimization strategies that can adapt to changing computational requirements and resource availability.

Development Workflow and Toolchain

The Chapel development environment provides comprehensive support for the entire software development lifecycle, from initial algorithm prototyping through production deployment and maintenance of large-scale machine learning applications. The language’s compiler provides extensive diagnostic capabilities that help developers identify potential performance issues, correctness problems, and opportunities for optimization early in the development process.

Chapel projects fit into modern development workflows built around version control systems, continuous integration platforms, and automated testing frameworks, all of which are essential for managing complex machine learning projects with multiple contributors and evolving requirements. The language’s module system and its package manager, Mason, enable the development of reusable components that can be shared across projects and organizations.

The Chapel community has developed extensive documentation, tutorials, and educational resources that support developers at all skill levels, from those new to parallel computing to experts seeking to optimize complex distributed algorithms. This educational ecosystem is particularly important for machine learning practitioners who may have strong domain expertise but limited experience with parallel programming concepts and techniques.

Chapel is primarily a compiled language, so exploratory data analysis and algorithm prototyping workflows typically rely on front ends layered on top of it. Arkouda, for example, exposes a NumPy-like Python interface, usable from Jupyter notebooks, to a Chapel server that can run across many nodes. Such tools bridge the gap between the exploratory nature of data science work and the performance requirements of production machine learning systems.

Future Directions and Evolution

The future development of Chapel continues to focus on enhancing its capabilities for machine learning and data science applications while maintaining its core strengths in parallel computing expressiveness and performance. Ongoing development efforts include improved support for heterogeneous computing environments that combine traditional processors with specialized accelerators such as GPUs, FPGAs, and tensor processing units.

Chapel’s evolution includes enhanced integration with cloud computing platforms and container orchestration systems that are increasingly important for deploying machine learning applications at scale. These developments will enable Chapel applications to leverage the elasticity and cost-effectiveness of cloud computing while maintaining the performance characteristics that make the language attractive for computationally intensive applications.

The language’s type system and compiler infrastructure continue to evolve to support emerging programming patterns in machine learning, including automatic differentiation, probabilistic programming constructs, and specialized support for neural network architectures that can benefit from language-level optimization and analysis.

Chapel’s community and ecosystem continue to expand with contributions from academic researchers, industry practitioners, and open-source developers who are extending the language’s capabilities and developing domain-specific libraries and tools that enhance its utility for machine learning applications.

The integration of Chapel with emerging hardware architectures and computing paradigms, including quantum computing interfaces and neuromorphic computing systems, represents an exciting frontier that could further extend the language’s applicability to cutting-edge machine learning research and applications.

Conclusion and Strategic Implications

Chapel represents a significant advancement in parallel programming language design that addresses many of the fundamental challenges associated with developing high-performance machine learning applications for large-scale distributed computing environments. Its unique combination of programming expressiveness, performance efficiency, and scalability characteristics positions it as a compelling choice for organizations and researchers working on computationally intensive machine learning problems that require sophisticated parallel processing capabilities.

The strategic implications of adopting Chapel for machine learning applications extend beyond immediate performance benefits to include long-term advantages in code maintainability, developer productivity, and system scalability that can provide competitive advantages in rapidly evolving technological landscapes. Organizations that invest in Chapel expertise and infrastructure may find themselves better positioned to tackle emerging computational challenges and leverage new hardware architectures as they become available.

The language’s continued evolution and growing ecosystem suggest that Chapel will play an increasingly important role in the future of high-performance computing for machine learning applications. As datasets continue to grow and machine learning algorithms become more sophisticated, the need for programming languages that can effectively harness parallel computing resources while maintaining development productivity will only increase, making Chapel’s value proposition more compelling over time.

The intersection of Chapel’s parallel computing capabilities with the growing importance of machine learning across diverse industries creates opportunities for innovative applications and research directions that could fundamentally change how we approach complex computational problems in fields ranging from scientific research to commercial product development.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of Chapel language capabilities and parallel computing applications in machine learning. Readers should conduct their own research and consider their specific requirements when evaluating programming languages and parallel computing platforms. Performance characteristics and suitability for particular applications may vary depending on specific use cases, hardware configurations, and implementation details.