The artificial intelligence revolution has fundamentally transformed how organizations approach computational infrastructure, with cloud-based GPU instances becoming the cornerstone of modern AI and machine learning operations. The three dominant cloud providers—Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP)—have each developed sophisticated AI infrastructure offerings that cater to diverse computational requirements, from small-scale research projects to enterprise-level production deployments that process millions of data points daily.

The selection of the appropriate cloud AI infrastructure has become a critical strategic decision that impacts not only project performance and scalability but also long-term operational costs and development velocity for organizations embarking on AI transformation initiatives.

The landscape of cloud AI infrastructure presents a complex matrix of choices, each with distinct advantages, limitations, and optimal use cases that require careful consideration of technical requirements, budget constraints, and strategic objectives. Understanding the nuances of each platform’s GPU offerings, pricing models, and AI-specific services enables organizations to make informed decisions that align with their specific computational needs and business goals.

AWS AI Infrastructure: The Pioneer’s Advantage

Amazon Web Services established itself as the early leader in cloud computing and has maintained that momentum in the AI infrastructure space through continuous innovation and comprehensive service offerings. The AWS AI infrastructure ecosystem centers around Amazon Elastic Compute Cloud (EC2) instances specifically optimized for machine learning workloads, featuring a diverse portfolio of GPU-accelerated instances that cater to different performance requirements and budget considerations.

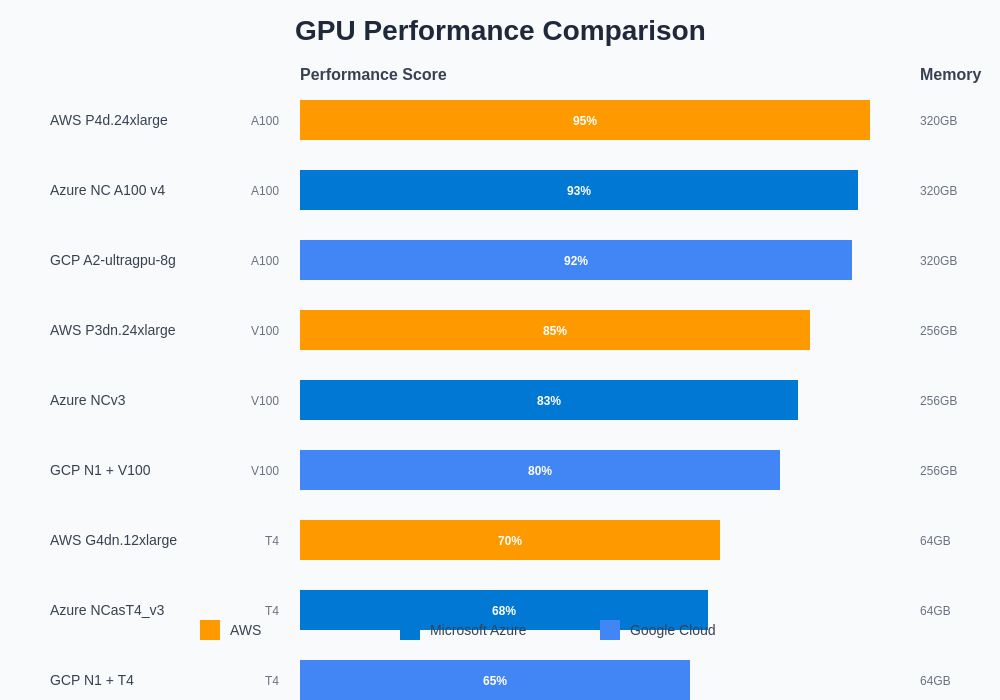

The P4d instances are among AWS’s most powerful AI infrastructure offerings. They feature NVIDIA A100 Tensor Core GPUs built for large-scale deep learning training and high-performance computing. Each p4d.24xlarge instance provides eight A100 GPUs with 40 GB of GPU memory each, connected through NVLink and NVSwitch for efficient multi-GPU communication and scaling. The instance also includes 96 vCPUs, 1.1 TB of system memory, and 8 TB of local NVMe SSD storage, a balanced configuration for memory-intensive AI workloads.
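To make "memory-intensive" concrete, the sketch below applies a common rule of thumb for mixed-precision training with the Adam optimizer: roughly 16 bytes of model and optimizer state per parameter, before activations are counted. The 7-billion-parameter model is hypothetical, and the "sharded" check assumes the state is split evenly across GPUs, as sharded data-parallel schemes do. Real memory use will be higher, so treat the result as a lower bound.

```python
# Rule-of-thumb estimate of training state for mixed-precision Adam.
# Assumption: fp16 weights (2) + fp16 gradients (2) + fp32 master weights (4)
# + two fp32 Adam moments (4 + 4) = 16 bytes per parameter.
# Activations, buffers, and framework overhead are NOT included.
BYTES_PER_PARAM_TRAINING = 2 + 2 + 4 + 4 + 4


def training_state_gb(num_params: float) -> float:
    """Approximate model + optimizer state in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM_TRAINING / 1e9


def fits(num_params: float, gpus: int, gb_per_gpu: float) -> bool:
    """True if the state fits when sharded evenly across `gpus` devices."""
    return training_state_gb(num_params) <= gpus * gb_per_gpu


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter model
    print(f"{training_state_gb(params):.0f} GB of training state")
    print("fits on one 40 GB A100:", fits(params, 1, 40))
    print("fits sharded across 8 x 40 GB A100s:", fits(params, 8, 40))
```

Under these assumptions the state alone (about 112 GB) exceeds a single 40 GB GPU but fits within the 320 GB of aggregate GPU memory on an eight-GPU instance, which is why the fast interconnect between those GPUs matters.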

For workloads that do not need A100-class throughput, AWS offers the previous-generation P3 instances, powered by NVIDIA V100 GPUs, which remain a lower-cost option for many machine learning applications. These instances have proven effective for training large neural networks, running inference workloads, and supporting research and development activities where computational efficiency and reliability are paramount.

The G4dn instances address the growing demand for graphics-intensive AI applications and inference workloads by combining NVIDIA T4 GPUs with Intel Cascade Lake processors. They suit real-time inference, video processing, and graphics rendering while remaining cost-effective for production deployments that need sustained performance over long periods.

AWS broadens the appeal of its AI infrastructure with several pricing models: Spot Instances, which can reduce costs by up to 90% for fault-tolerant workloads; Reserved Instances for predictable long-term commitments; and On-Demand pricing for flexible short-term requirements. The AWS ecosystem also integrates with a broad set of AI and machine learning services, including Amazon SageMaker for end-to-end machine learning workflows, AWS Lambda for lightweight, CPU-based serverless inference, and Amazon EKS for containerized AI applications.

Microsoft Azure: Enterprise Integration Excellence

Microsoft Azure has distinguished itself in the cloud AI infrastructure market through exceptional enterprise integration capabilities and a deep understanding of organizational workflows that stem from decades of experience serving business customers. The Azure AI infrastructure strategy focuses on providing seamless connectivity between cloud-based AI resources and existing enterprise systems, making it particularly attractive for organizations with significant investments in Microsoft technologies and hybrid cloud architectures.

Azure’s NC-series virtual machines form the backbone of its GPU computing offerings, with a range of NVIDIA GPUs, including T4 and A100 models, for AI training, inference, and high-performance computing workloads. The NCasT4_v3 series provides NVIDIA T4 GPUs for efficient inference, while the NC A100 v4 series delivers NVIDIA A100 GPUs for demanding AI training scenarios that require maximum computational throughput.

The Azure ecosystem excels in hybrid cloud scenarios where organizations need to maintain some computational resources on-premises while leveraging cloud scalability for peak workloads. Azure Arc extends Azure management capabilities to on-premises and multi-cloud environments, enabling consistent AI infrastructure management across diverse deployment scenarios. This hybrid approach proves invaluable for organizations with data sovereignty requirements, compliance constraints, or existing infrastructure investments that cannot be immediately migrated to the cloud.

Azure’s integration with the broader Microsoft ecosystem, including Office 365, Dynamics 365, and Power Platform, creates unique opportunities for AI-powered business applications that leverage existing organizational data and workflows.

Azure Machine Learning service provides comprehensive model development, training, and deployment capabilities that integrate seamlessly with Azure’s GPU infrastructure. The platform supports automated machine learning, model interpretability, and MLOps practices that streamline the transition from research and development to production deployment. Azure Cognitive Services offer pre-built AI models for common tasks such as computer vision, natural language processing, and speech recognition, reducing the need for custom model development in many scenarios.

The Azure pricing model emphasizes predictability and cost control through features such as Azure Cost Management, spending limits, and detailed usage analytics that help organizations optimize their AI infrastructure investments. Reserved instances, spot pricing, and hybrid benefit programs for existing Microsoft license holders create additional opportunities for cost optimization while maintaining performance and reliability standards.

Google Cloud Platform: Innovation and AI-First Design

Google Cloud Platform has leveraged its extensive experience in artificial intelligence research and large-scale distributed systems to create AI infrastructure offerings that reflect deep technical expertise and innovation-focused design philosophy. The GCP approach to AI infrastructure emphasizes cutting-edge technology integration, advanced networking capabilities, and AI-first service design that anticipates the unique requirements of modern machine learning workloads.

The Compute Engine GPU offerings center around carefully curated configurations that balance performance, cost, and accessibility for diverse AI applications. The A2 machine family, powered by NVIDIA A100 GPUs, represents GCP’s flagship AI infrastructure offering with configurations ranging from single GPU instances for development and testing to 16-GPU instances capable of handling the most demanding distributed training workloads. These instances feature optimized networking with up to 100 Gbps bandwidth and are designed specifically for AI and high-performance computing applications.

Google’s distinctive advantage lies in its custom silicon, Tensor Processing Units (TPUs), which accelerate the large matrix operations at the heart of neural network training and inference and are supported by TensorFlow, JAX, and PyTorch (through PyTorch/XLA). TPUs can offer strong performance per dollar for compatible workloads, and Google’s managed Cloud TPU service handles infrastructure provisioning, scaling, and optimization. The integration between TPUs and Google’s AI ecosystem creates streamlined workflows for organizations invested in TensorFlow, JAX, and Google’s other machine learning frameworks.

The N1 machine family, which can attach NVIDIA T4, P4, P100, and V100 GPUs (the older K80 GPUs have been retired), provides cost-effective options for inference workloads, development environments, and applications requiring moderate GPU acceleration. These instances excel in scenarios where consistent performance and predictable costs matter more than absolute peak performance, making them well suited to production inference systems and continuous integration environments for AI applications.

GCP’s global network infrastructure provides significant advantages for AI workloads that require data transfer between regions or integration with distributed data sources. The premium tier network offers improved performance and reduced latency for data-intensive AI applications, while the standard tier provides cost-effective connectivity for less demanding scenarios. The integration with Google’s global content delivery network enhances performance for AI applications serving geographically distributed users.

Vertex AI, the successor to Google Cloud AI Platform, provides comprehensive machine learning operations capabilities that integrate with GCP’s GPU infrastructure. The platform supports automated hyperparameter tuning, distributed training, and managed serving that scales automatically based on demand. Integration with BigQuery for data analysis, Cloud Storage for dataset management, and Pub/Sub for real-time data streaming creates a cohesive ecosystem for end-to-end AI workflows.

The performance characteristics of each cloud provider’s GPU offerings reveal distinct optimization patterns that reflect their respective strategic priorities and technical capabilities. While raw computational power represents one dimension of comparison, factors such as memory bandwidth, networking performance, and integration with supporting services significantly impact real-world AI application performance and development velocity.

Pricing Strategy Analysis and Cost Optimization

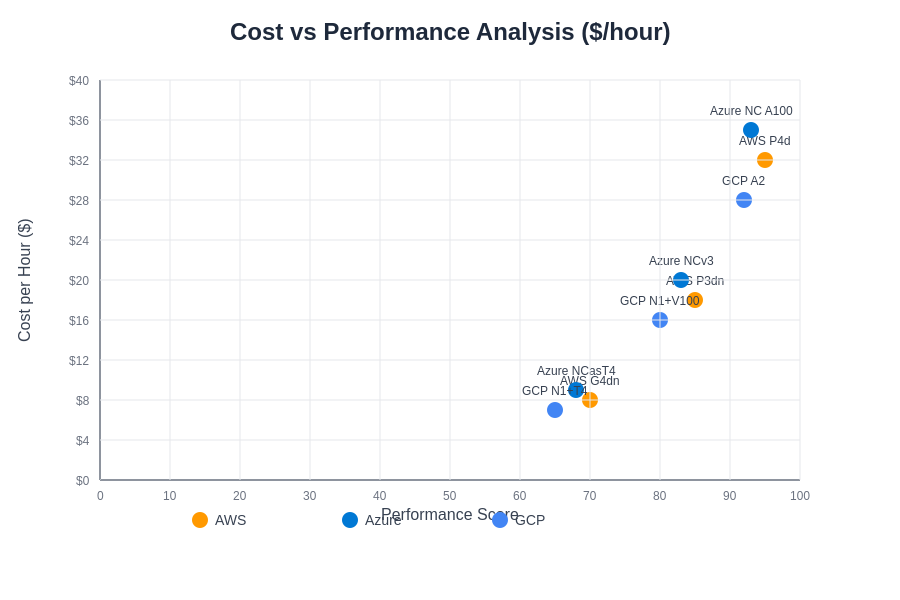

Understanding the pricing strategies and cost optimization opportunities across AWS, Azure, and GCP requires careful analysis of both obvious and hidden costs that impact total cost of ownership for AI infrastructure. Each provider employs different pricing philosophies that reflect their market positioning, target customer segments, and competitive differentiation strategies, creating complex decision matrices for organizations evaluating their options.

AWS pricing strategies emphasize flexibility and granular control through multiple purchasing options that accommodate different usage patterns and risk tolerances. On-Demand pricing provides maximum flexibility for variable workloads but carries the highest per-hour cost for sustained usage. Reserved Instances offer discounts of up to 72% compared with On-Demand pricing in exchange for a one- or three-year commitment, making them attractive for predictable AI training workloads or production inference systems with steady demand.
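The trade-off between On-Demand and reserved capacity comes down to utilization: a reservation is billed for every hour of its term whether or not the instance runs, while On-Demand is billed only for hours used. The minimal sketch below assumes a hypothetical $10/hour On-Demand rate and a 40% reservation discount, and it ignores upfront-payment options and Savings Plans. Under those assumptions, the reservation pays off once utilization exceeds one minus the discount.

```python
def monthly_costs(on_demand_rate: float, ri_discount: float,
                  utilization: float, hours_in_month: float = 730.0):
    """Return (on_demand_cost, reserved_cost) for one instance-month.

    on_demand_rate: hypothetical $/hour On-Demand price
    ri_discount: fractional reservation discount, e.g. 0.40 for 40%
    utilization: fraction of the month the instance is actually needed (0..1)
    """
    on_demand = on_demand_rate * hours_in_month * utilization
    # A reservation is billed for every hour of the term, used or not.
    reserved = on_demand_rate * (1 - ri_discount) * hours_in_month
    return on_demand, reserved


def break_even_utilization(ri_discount: float) -> float:
    """Utilization above which the reservation is cheaper than On-Demand."""
    return 1 - ri_discount


if __name__ == "__main__":
    rate = 10.0  # hypothetical $/hour, not a published AWS price
    for util in (0.4, 0.6, 0.9):
        od, ri = monthly_costs(rate, 0.40, util)
        print(f"utilization {util:.0%}: on-demand ${od:,.0f}, reserved ${ri:,.0f}")
    print(f"break-even utilization: {break_even_utilization(0.40):.0%}")
```

With a 40% discount, a workload busy less than about 60% of the time is cheaper On-Demand, which is why reservations fit steady inference fleets better than bursty experimentation.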

Spot Instances represent AWS’s most compelling cost optimization opportunity, offering discounts of up to 90% for fault-tolerant workloads that can handle interruptions. AI training workloads often prove ideal candidates for Spot pricing due to their ability to save progress through checkpoints and resume from interruption points. However, the complexity of managing Spot Instance interruptions requires sophisticated workload management and automation capabilities that may not be suitable for all organizations.
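The checkpoint-and-resume pattern that makes training a good Spot candidate can be sketched as below. This is a toy loop, not a real training job: the `interrupt_at` parameter is a hypothetical stand-in for a Spot reclamation, and checkpoints go to a local JSON file. A production job would instead react to the two-minute interruption notice AWS issues before reclaiming a Spot Instance and write checkpoints to durable storage such as S3.

```python
import json
import os
import tempfile
from typing import Optional


def train(total_steps: int, ckpt_path: str, checkpoint_every: int = 10,
          interrupt_at: Optional[int] = None) -> int:
    """Toy training loop that checkpoints progress and resumes after interruption.

    `interrupt_at` is a hypothetical hook that simulates the instance being
    reclaimed at a given step. Returns the last completed step.
    """
    state = {"step": 0, "loss": 1.0}
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)  # resume from the last saved checkpoint

    while state["step"] < total_steps:
        if interrupt_at is not None and state["step"] == interrupt_at:
            return state["step"]  # reclaimed: work since last checkpoint is lost
        state["step"] += 1
        state["loss"] *= 0.99  # stand-in for a real optimizer update
        if state["step"] % checkpoint_every == 0:
            # Write to a temp file, then rename atomically so an interruption
            # mid-write never leaves a corrupt checkpoint behind.
            tmp = ckpt_path + ".tmp"
            with open(tmp, "w") as f:
                json.dump(state, f)
            os.replace(tmp, ckpt_path)
    return state["step"]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "ckpt.json")
        print("interrupted after step", train(50, path, interrupt_at=25))
        with open(path) as f:
            print("checkpoint holds step", json.load(f)["step"])
        print("resumed and finished at step", train(50, path))
```

The interrupted run stops at step 25, but the checkpoint only holds step 20, so five steps are redone after resuming. The checkpoint interval therefore sets how much paid compute an interruption can waste.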

Azure’s pricing approach focuses on predictability and integration with existing Microsoft licensing agreements through programs such as Azure Hybrid Benefit, which lets organizations apply existing Windows Server and SQL Server licenses to reduce cloud computing costs. Azure Reserved Virtual Machine Instances provide discounts of up to 72% for committed usage, while Azure Spot Virtual Machines offer interruption-based savings comparable to AWS Spot Instances.

The Azure pricing model particularly benefits organizations with existing Microsoft Enterprise Agreements through consolidated billing, volume discounts, and unified support relationships that reduce administrative overhead. Azure Cost Management tools provide detailed usage analytics and spending alerts that help organizations optimize their AI infrastructure investments while maintaining performance and reliability requirements.

The dynamic nature of cloud pricing requires continuous monitoring and adjustment to maintain optimal cost-performance ratios as workload requirements evolve.

Google Cloud Platform pricing emphasizes simplicity through sustained use discounts, which apply automatically to eligible machine types that run for a large share of the month without any upfront commitment, and per-second billing (with a one-minute minimum) that eliminates charges for unused fractions of an hour. Committed use discounts provide savings of up to 57% for consistent workloads, while preemptible VMs, now offered as Spot VMs, provide discounts of roughly 60-91% for interruption-tolerant applications.
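Sustained use discounts are applied incrementally: each additional quarter of the month an instance runs is billed at a lower multiple of the base rate. The sketch below uses the tier multipliers Google has published for N1 machine types (100%, 80%, 60%, 40%). Other machine families use different tiers, and some are not eligible, so check current pricing documentation before relying on these numbers. It also models per-second billing with a one-minute minimum.

```python
# Incremental sustained-use tiers for N1 machine types:
# (share of the month covered by the tier, price multiplier in that tier).
# Assumption: other machine families differ or receive no such discount.
N1_SUD_TIERS = [
    (0.25, 1.00),  # first quarter of the month: full base rate
    (0.25, 0.80),  # second quarter: 80% of base rate
    (0.25, 0.60),  # third quarter: 60%
    (0.25, 0.40),  # final quarter: 40%
]


def billed_seconds(runtime_seconds: int) -> int:
    """Per-second billing with a one-minute minimum charge."""
    return max(60, runtime_seconds)


def sustained_use_multiplier(fraction_of_month: float) -> float:
    """Effective price multiplier for an instance running `fraction_of_month` (0, 1]."""
    if not 0 < fraction_of_month <= 1:
        raise ValueError("fraction_of_month must be in (0, 1]")
    remaining = fraction_of_month
    cost = 0.0
    for width, rate in N1_SUD_TIERS:
        used = min(width, remaining)  # portion of usage falling in this tier
        cost += used * rate
        remaining -= used
        if remaining <= 0:
            break
    return cost / fraction_of_month


if __name__ == "__main__":
    print(billed_seconds(10), billed_seconds(3_601))  # 60 3601
    for f in (0.25, 0.5, 1.0):
        print(f"running {f:.0%} of the month -> {sustained_use_multiplier(f):.2f} x base rate")
```

Under these tiers an instance that runs the whole month pays about 70% of the base rate (a 30% discount) without any commitment, while one that runs only a quarter of the month receives no discount.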

The GCP pricing model benefits from Google’s engineering culture that prioritizes efficiency and automation, resulting in competitive pricing for many AI workload scenarios. The integration with Google’s global infrastructure enables cost-effective data transfer and storage that can significantly impact total cost of ownership for data-intensive AI applications that require frequent data movement or backup operations.

Performance Benchmarking and Real-World Applications

Evaluating cloud AI infrastructure performance requires comprehensive benchmarking that extends beyond theoretical specifications to encompass real-world application scenarios, data transfer performance, and integration efficiency with supporting services. The performance characteristics of each platform reveal distinct strengths and limitations that impact different types of AI workloads in varying ways.

GPU computational performance represents the most obvious comparison dimension, with each provider offering similar hardware from NVIDIA but with different configuration optimizations, networking implementations, and supporting infrastructure that can significantly impact actual application performance. Training large language models, computer vision systems, and deep reinforcement learning applications each stress different aspects of the infrastructure stack, revealing platform-specific advantages and limitations.

AWS P4d instances are well suited to large-scale distributed training that requires high-bandwidth GPU-to-GPU communication. Within an instance, the NVLink and NVSwitch interconnects let applications use all eight GPUs efficiently, and across instances, 400 Gbps networking with Elastic Fabric Adapter (EFA) supports distributed training when single-instance resources prove insufficient.
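Why interconnect bandwidth matters can be seen from a standard estimate for ring all-reduce, the collective commonly used to synchronize gradients: each of N GPUs sends and receives about 2(N-1)/N times the gradient size per step. The sketch below is bandwidth-only (latency and compute overlap are ignored), and both link speeds in the usage example are hypothetical round numbers chosen for contrast, not measured figures for any instance type.

```python
def ring_allreduce_seconds(gradient_bytes: float, num_gpus: int,
                           link_bytes_per_s: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce (latency ignored)."""
    if num_gpus < 2:
        return 0.0  # nothing to synchronize on a single GPU
    # Each GPU sends (and receives) 2 * (N - 1) / N of the gradient buffer.
    traffic_per_gpu = 2 * (num_gpus - 1) / num_gpus * gradient_bytes
    return traffic_per_gpu / link_bytes_per_s


if __name__ == "__main__":
    grad_bytes = 2e9  # hypothetical: 1B parameters stored in 16-bit precision
    for label, bw in [("fast intra-node link", 300e9), ("slower network link", 50e9)]:
        t = ring_allreduce_seconds(grad_bytes, 8, bw)
        print(f"{label}: {t * 1000:.1f} ms per all-reduce")
```

Because this cost is paid on every training step, a several-fold difference in link bandwidth translates directly into a comparable difference in synchronization time.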

Azure’s strength lies in consistent performance and reliability that proves particularly valuable for production inference systems requiring predictable response times and high availability. The integration with Azure’s global network provides reliable performance for geographically distributed applications, while the hybrid connectivity options enable efficient integration with on-premises data sources and systems.

Google Cloud Platform excels in scenarios requiring integration with large-scale data analytics and processing pipelines through seamless connectivity with BigQuery, Cloud Dataflow, and other data platform services. The custom TPU offerings provide exceptional performance for TensorFlow-based applications while reducing complexity through fully managed infrastructure that handles scaling and optimization automatically.

Memory and storage performance significantly impact AI workload effectiveness, particularly for applications processing large datasets or requiring frequent data access during training. Each platform provides different storage options with varying performance characteristics, from local NVMe storage for maximum performance to network-attached storage for durability and shared access scenarios.
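A quick way to check whether a storage option can keep GPUs busy is to compare the read throughput training demands against what the storage delivers. The sketch below uses hypothetical figures (1,000 samples per second per GPU, 0.125 MB per sample, eight GPUs) and an assumed 20% safety margin; substitute measured values from your own pipeline.

```python
def required_read_mb_per_s(samples_per_s_per_gpu: float, num_gpus: int,
                           mb_per_sample: float) -> float:
    """Aggregate read throughput (MB/s) needed to keep every GPU fed."""
    return samples_per_s_per_gpu * num_gpus * mb_per_sample


def storage_keeps_up(required_mb_s: float, storage_mb_s: float,
                     headroom: float = 1.2) -> bool:
    """True if storage throughput covers demand plus a safety margin (assumed 20%)."""
    return storage_mb_s >= required_mb_s * headroom


if __name__ == "__main__":
    need = required_read_mb_per_s(1000, 8, 0.125)  # hypothetical workload
    print(f"need {need:.0f} MB/s")
    for label, supply in [("hypothetical network volume", 1100), ("hypothetical local NVMe", 1500)]:
        print(label, "keeps up:", storage_keeps_up(need, supply))
```

If storage falls short, the GPUs idle while waiting for data, and the effective cost per training step rises even though the hourly GPU price is unchanged.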

Network performance affects both data transfer costs and application responsiveness, particularly for applications requiring frequent communication between distributed components or integration with external data sources. The global network infrastructure of each provider creates different performance profiles that must be evaluated based on specific application requirements and user distribution patterns.

The relationship between cost and performance varies significantly across different workload types and usage patterns, creating complex optimization scenarios that require careful analysis of total cost of ownership rather than simple per-hour pricing comparisons. Factors such as data transfer costs, storage expenses, and supporting service fees can dramatically impact overall project economics.
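The point about total cost of ownership can be illustrated with a small model that adds storage, data egress, and fixed service fees to compute. Every price below is a hypothetical placeholder, not a quote from any provider; the example is constructed so that the option with the cheaper GPU hour ends up more expensive overall once egress is included.

```python
from dataclasses import dataclass


@dataclass
class MonthlyUsage:
    gpu_hours: float
    storage_gb: float
    egress_gb: float


@dataclass
class PriceSheet:
    """All figures are hypothetical placeholders, not published prices."""
    gpu_hour: float
    storage_gb_month: float
    egress_gb: float
    support_and_services: float = 0.0


def monthly_tco(u: MonthlyUsage, p: PriceSheet) -> dict:
    """Break a month's cost into components and a total."""
    parts = {
        "compute": u.gpu_hours * p.gpu_hour,
        "storage": u.storage_gb * p.storage_gb_month,
        "egress": u.egress_gb * p.egress_gb,
        "services": p.support_and_services,
    }
    parts["total"] = sum(parts.values())
    return parts


if __name__ == "__main__":
    usage = MonthlyUsage(gpu_hours=1000, storage_gb=50_000, egress_gb=20_000)
    option_a = monthly_tco(usage, PriceSheet(gpu_hour=3.0, storage_gb_month=0.02, egress_gb=0.09))
    option_b = monthly_tco(usage, PriceSheet(gpu_hour=2.7, storage_gb_month=0.02, egress_gb=0.12))
    for name, cost in [("A", option_a), ("B", option_b)]:
        print(name, {k: round(v) for k, v in cost.items()})
```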

AI Services Integration and Ecosystem Advantages

The integration capabilities and ecosystem services offered by each cloud provider create substantial differentiating factors that extend beyond basic GPU infrastructure to encompass comprehensive AI development and deployment platforms. These ecosystem advantages can significantly impact development velocity, operational efficiency, and long-term scalability for AI initiatives across diverse organizational contexts.

AWS provides the most comprehensive ecosystem of AI and machine learning services through Amazon SageMaker, which offers end-to-end machine learning workflows from data preparation and model training to deployment and monitoring. The platform includes automated model tuning, distributed training capabilities, and managed endpoints that scale automatically based on demand. Integration with other AWS services such as S3 for data storage, Lambda for serverless computing, and Kinesis for real-time data streaming creates seamless workflows for complex AI applications.

The AWS ecosystem benefits from extensive third-party integrations and marketplace offerings that provide specialized tools and services for specific AI applications. The AWS Partner Network includes numerous AI-focused companies that offer complementary services, pre-built models, and industry-specific solutions that accelerate development timelines and reduce implementation complexity.

Amazon’s AI services portfolio includes Rekognition for computer vision, Comprehend for natural language processing, and Transcribe for speech recognition, providing high-quality pre-built models that eliminate the need for custom development in many scenarios. These services integrate seamlessly with GPU infrastructure for custom model development while providing fallback options and baseline performance benchmarks.

Azure Machine Learning provides comprehensive MLOps capabilities that integrate with Azure DevOps for continuous integration and deployment of AI models. The platform supports automated machine learning, model interpretability, and responsible AI practices that address governance and compliance requirements increasingly important for enterprise AI deployments.

The Azure ecosystem excels in enterprise scenarios through deep integration with Microsoft’s productivity and business applications. Power BI enables sophisticated data visualization and business intelligence integration with AI models, while Microsoft Teams and Office 365 provide natural integration points for AI-powered business applications that enhance existing workflows rather than replacing them.

Azure Cognitive Services (since rebranded as Azure AI services) offer pre-built AI capabilities that integrate with existing Microsoft applications and third-party systems through standard APIs and SDKs. The services include capabilities such as Custom Vision for domain-specific image recognition, Language Understanding (LUIS) for conversational AI, and Form Recognizer (now Azure AI Document Intelligence) for document processing automation.

Vertex AI, which succeeded Google Cloud AI Platform, provides comprehensive machine learning operations with a strong emphasis on automation and scalability. The platform includes automated hyperparameter tuning, neural architecture search, and automated model deployment that reduce operational overhead while maintaining performance and reliability standards. Integration with Google’s research and development ecosystem provides early access to new AI techniques and algorithms.

The Google ecosystem benefits from close integration with TensorFlow and other Google-developed AI frameworks, creating optimized performance and simplified workflows for applications built on these platforms. Google’s expertise in large-scale distributed systems translates into robust infrastructure capabilities that handle scaling and optimization challenges automatically.

Google Cloud’s AI services are consolidated in Vertex AI, a unified machine learning platform that includes AutoML for automated model development and Vertex AI Workbench (the successor to AI Platform Notebooks) for collaborative development environments. The integration with Google’s search and advertising technologies provides unique opportunities for AI applications that require sophisticated information retrieval and recommendation capabilities.

Security, Compliance, and Governance Considerations

Security, compliance, and governance requirements represent critical evaluation criteria for cloud AI infrastructure that often receive insufficient attention during initial platform selection but become increasingly important as AI initiatives mature and expand throughout organizations. Each cloud provider offers different security models, compliance certifications, and governance tools that address varying organizational requirements and regulatory constraints.

AWS provides comprehensive security capabilities through Identity and Access Management (IAM), Virtual Private Cloud (VPC) networking, and encryption services that enable fine-grained control over access to AI infrastructure and data. The platform includes advanced security monitoring through CloudTrail audit logging, GuardDuty threat detection, and Macie data discovery services that provide visibility into AI workload security postures and potential vulnerabilities.
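As an example of the fine-grained control IAM allows, the sketch below builds a least-privilege policy that lets a training job read one dataset prefix in S3 and nothing else. The bucket name and prefix are hypothetical. The policy grammar (`Version` "2012-10-17", `s3:GetObject`, `s3:ListBucket`, and the `s3:prefix` condition key) follows the standard IAM JSON policy format, but any real policy should be checked with AWS's own policy validation tools before use.

```python
import json


def read_only_dataset_policy(bucket: str, prefix: str) -> dict:
    """IAM policy allowing reads from one S3 prefix (bucket/prefix are hypothetical)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Read objects under the dataset prefix only.
                "Sid": "ReadTrainingObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
            {
                # Allow listing, but only keys under the same prefix.
                "Sid": "ListTrainingPrefix",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
        ],
    }


if __name__ == "__main__":
    policy = read_only_dataset_policy("example-training-data", "datasets/v1")
    print(json.dumps(policy, indent=2))
```

Scoping each training role this narrowly limits what a compromised or misconfigured job can reach, which is the practical payoff of the fine-grained access control described above.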

The AWS compliance program covers numerous regulatory frameworks, including SOC 2 and PCI DSS attestations, HIPAA-eligible services, and various international standards, enabling AI deployments in regulated industries. The shared responsibility model clearly delineates security obligations between AWS and customers, providing clarity for compliance and risk management processes.

AWS offers specialized services for sensitive workloads through AWS GovCloud for government applications and AWS Outposts for hybrid deployments that require on-premises data processing while maintaining cloud management capabilities. These options address specific compliance requirements that may prohibit certain types of data from leaving organizational boundaries or require enhanced security controls.

Azure security capabilities emphasize integration with existing enterprise security infrastructure through Microsoft Entra ID (formerly Azure Active Directory), conditional access policies, and unified security management across hybrid environments. The platform provides threat protection and security monitoring through Microsoft Defender for Cloud (formerly Azure Security Center) and the Microsoft Sentinel SIEM, giving organizations comprehensive detection and response capabilities.

The Azure compliance portfolio includes extensive certifications and attestations covering global, regional, and industry-specific requirements. The Trust Center provides transparent information about Azure’s security practices, compliance status, and data handling procedures that support organizational due diligence and risk assessment processes.

Azure’s unique strength lies in hybrid security management through Azure Arc and consistent security policies across on-premises and cloud environments. This capability proves particularly valuable for organizations with complex compliance requirements that necessitate unified security management across diverse infrastructure deployments.

The Google Cloud Platform security model emphasizes defense-in-depth principles through multiple layers of protection, including hardware-level security, network isolation, and application-level controls. The platform benefits from Google’s extensive experience securing large-scale internet services and incorporates security technologies such as BeyondCorp zero-trust networking and Titan Security Keys for stronger authentication.

GCP compliance certifications address major international standards and industry-specific requirements, with particular strength in data privacy regulations such as GDPR through comprehensive data processing and residency controls. The platform provides detailed audit logging and security monitoring through Security Command Center and Cloud Asset Inventory.

Google’s approach to AI ethics and responsible AI practices includes built-in bias detection, fairness evaluation tools, and transparent reporting capabilities that address growing regulatory and organizational requirements for AI governance. These capabilities prove increasingly important as AI applications expand into sensitive domains such as healthcare, finance, and human resources.

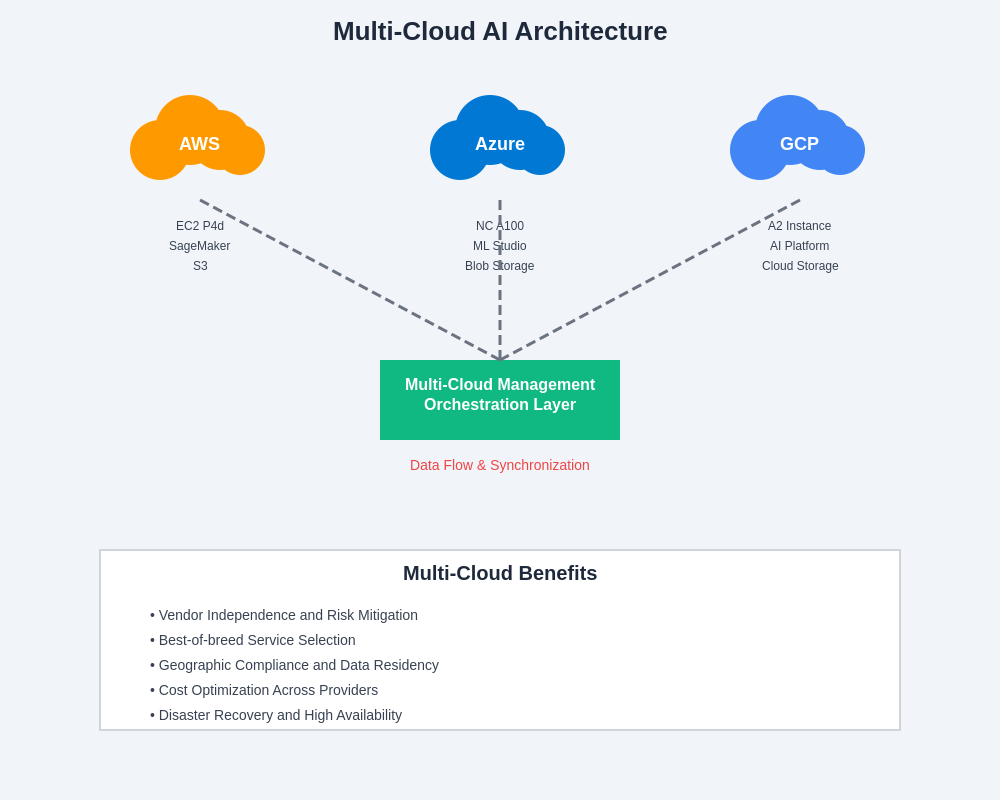

Migration Strategies and Multi-Cloud Considerations

Developing effective migration strategies and evaluating multi-cloud architectures requires careful consideration of technical compatibility, data transfer requirements, and operational complexity that impact both initial implementation timelines and long-term management overhead. The decision between single-cloud and multi-cloud deployments involves complex tradeoffs between simplicity, vendor independence, and risk mitigation that vary based on organizational requirements and strategic objectives.

Single-cloud strategies offer simplicity and deep integration advantages through unified management interfaces, consistent security models, and optimized service integration that reduces operational complexity and accelerates development velocity. Organizations choosing single-cloud approaches can leverage platform-specific optimizations, volume discounts, and specialized services that provide competitive advantages for specific use cases.

AWS migration strategies benefit from comprehensive tooling through AWS Migration Hub, Database Migration Service, and Application Discovery Service that automate assessment, planning, and execution phases of cloud migration projects. The platform’s maturity and extensive partner ecosystem provide proven methodologies and specialized expertise for complex migration scenarios across diverse application types and data sources.

Azure migration advantages include seamless integration with existing Microsoft infrastructure through Azure Migrate, hybrid connectivity options, and licensing portability that can significantly reduce migration complexity and costs for organizations with substantial Microsoft investments. The Azure ecosystem provides familiar management interfaces and operational procedures that reduce training requirements and implementation risks.

Google Cloud migration tools emphasize automation and efficiency through services such as Migrate for Compute Engine (now Migrate to Virtual Machines) and Storage Transfer Service, which handle infrastructure migration and data transfer with minimal manual intervention. The platform’s strength in data analytics and machine learning provides unique opportunities for organizations seeking to enhance their AI capabilities during the migration process.

Multi-cloud strategies offer vendor independence, risk mitigation, and access to best-of-breed services from multiple providers but require sophisticated management capabilities and additional operational complexity that may offset potential benefits. Organizations pursuing multi-cloud approaches must develop expertise across multiple platforms while managing data consistency, security policies, and integration complexity across diverse environments.

Multi-cloud AI workload management requires careful attention to data gravity effects where computational resources must be located near data sources to minimize transfer costs and latency. The distributed nature of multi-cloud architectures can create complex data synchronization requirements and regulatory compliance challenges that require specialized expertise and tooling.
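Data gravity can be framed as a simple comparison: moving a dataset to cheaper compute pays off only if the compute savings exceed the one-time egress bill. The sketch below uses hypothetical prices and dataset sizes, and it deliberately leaves out recurring synchronization, latency, and compliance costs, all of which push further toward keeping compute near the data.

```python
def compare_data_move(dataset_gb: float, egress_per_gb: float,
                      gpu_hours: float, local_gpu_hour: float,
                      remote_gpu_hour: float) -> dict:
    """Compare a one-time egress bill with compute savings from moving the data.

    All prices are hypothetical inputs; ongoing sync and latency costs are ignored.
    """
    transfer_cost = dataset_gb * egress_per_gb
    compute_savings = (local_gpu_hour - remote_gpu_hour) * gpu_hours
    return {
        "transfer_cost": transfer_cost,
        "compute_savings": compute_savings,
        "move_data": compute_savings > transfer_cost,
    }


if __name__ == "__main__":
    # Hypothetical: 100 TB dataset, $0.09/GB egress, GPUs $0.50/hour cheaper elsewhere.
    for hours in (10_000, 20_000):
        r = compare_data_move(100_000, 0.09, hours, 3.0, 2.5)
        print(f"{hours} GPU-hours: move data? {r['move_data']} "
              f"(transfer ${r['transfer_cost']:,.0f} vs savings ${r['compute_savings']:,.0f})")
```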

Container orchestration through Kubernetes provides platform abstraction capabilities that can simplify multi-cloud AI deployments by standardizing application packaging and deployment procedures across different cloud providers. However, the underlying infrastructure differences still require platform-specific optimization and management procedures that limit the abstraction benefits for performance-critical AI workloads.
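The portable part of a Kubernetes GPU workload is the resource request: with the NVIDIA device plugin installed, GPUs are requested as the extended resource `nvidia.com/gpu` on any of the three managed Kubernetes services. Node selection is where platform differences reappear. The sketch below builds a Pod manifest as a plain dictionary; the container image is hypothetical, and the only node label shown, GKE's `cloud.google.com/gke-accelerator`, is GKE-specific. EKS and AKS use their own labels.

```python
import json
from typing import Dict, Optional


def gpu_pod_manifest(name: str, image: str, gpus: int,
                     node_selector: Optional[Dict[str, str]] = None) -> dict:
    """Build a minimal Pod manifest requesting `gpus` NVIDIA GPUs."""
    spec = {
        "restartPolicy": "Never",
        "containers": [{
            "name": name,
            "image": image,
            # Portable across clouds: the NVIDIA device plugin's resource name.
            "resources": {"limits": {"nvidia.com/gpu": str(gpus)}},
        }],
    }
    if node_selector:
        # Platform-specific: label keys and values differ per provider.
        spec["nodeSelector"] = dict(node_selector)
    return {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": name}, "spec": spec}


if __name__ == "__main__":
    pod = gpu_pod_manifest(
        "trainer", "registry.example.com/trainer:latest", 1,  # hypothetical image
        {"cloud.google.com/gke-accelerator": "nvidia-tesla-t4"},  # GKE-only label
    )
    print(json.dumps(pod, indent=2))
```

The container definition stays the same everywhere, while the node selector (and in practice driver setup, instance types, and autoscaling settings) must be adapted per provider. This is the limit on abstraction that the paragraph above describes.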

The architectural considerations for multi-cloud AI deployments encompass data flow optimization, latency management, and cost control across distributed infrastructure, all of which require careful planning and ongoing optimization to achieve the desired performance and economic outcomes.

Future Trends and Strategic Recommendations

The evolution of cloud AI infrastructure continues to accelerate with emerging technologies, new computational paradigms, and changing market dynamics that will significantly impact strategic decisions and investment priorities over the coming years. Understanding these trends and their implications enables organizations to make infrastructure choices that remain viable and competitive as the technology landscape evolves.

Edge AI computing represents a significant trend that will reshape cloud AI infrastructure requirements by distributing computational resources closer to data sources and end users. Each cloud provider is developing edge computing capabilities that extend their AI services to edge locations, creating hybrid architectures that balance latency requirements, bandwidth constraints, and cost considerations for applications such as autonomous vehicles, industrial automation, and real-time analytics.

Quantum computing integration presents both opportunities and challenges for cloud AI infrastructure as quantum processors become available through cloud services. While current quantum computers remain limited in their practical AI applications, the potential for quantum advantage in specific optimization and machine learning algorithms suggests that organizations should consider quantum computing capabilities in their long-term infrastructure strategies.

Sustainable computing and environmental considerations are becoming increasingly important evaluation criteria as organizations seek to reduce their carbon footprint and meet environmental sustainability commitments. Each cloud provider is investing in renewable energy and energy-efficient infrastructure, but their progress and commitments vary significantly, creating differentiation opportunities for environmentally conscious organizations.

The democratization of AI through automated machine learning and no-code platforms will reduce the technical expertise requirements for AI development while increasing the demand for scalable, cost-effective infrastructure that can support diverse user populations and varying skill levels. This trend favors cloud providers that can offer simplified interfaces and automated optimization while maintaining the flexibility for advanced users.

Based on comprehensive analysis of current capabilities, market trends, and strategic positioning, organizations should consider the following recommendations for cloud AI infrastructure selection and implementation. AWS remains the optimal choice for organizations requiring maximum flexibility, extensive service ecosystem, and proven scalability for large-scale AI deployments. The platform’s maturity and comprehensive feature set make it particularly suitable for complex AI applications and organizations with diverse technical requirements.

Azure represents the best choice for organizations with significant Microsoft ecosystem investments, enterprise integration requirements, and hybrid cloud architectural needs. The platform’s strength in business application integration and enterprise security makes it particularly attractive for AI initiatives that enhance existing business processes and workflows.

Google Cloud Platform offers compelling advantages for organizations prioritizing innovation, advanced AI research capabilities, and deep integration with data analytics platforms. The platform’s strength in machine learning research and custom silicon development makes it particularly suitable for cutting-edge AI applications and organizations seeking to leverage the latest technological advances.

Multi-cloud strategies should be carefully evaluated against increased complexity and management overhead, with clear justification based on specific business requirements such as vendor independence, geographic presence, or regulatory compliance that cannot be addressed through single-cloud approaches.

Organizations should prioritize long-term strategic alignment over short-term cost optimization, considering factors such as ecosystem integration, development team expertise, and business continuity requirements that will impact total cost of ownership and operational efficiency over time.
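One way to turn these recommendations into a decision is a weighted scoring matrix: weight each criterion by its importance to your organization, score each candidate, and compare weighted averages. In the sketch below, the criteria, weights, provider labels, and 1-5 scores are all hypothetical placeholders for your own evaluation. They are not ratings of AWS, Azure, or Google Cloud.

```python
from typing import Dict, List, Tuple


def rank_providers(weights: Dict[str, float],
                   scores: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Rank candidates by weighted average score, highest first."""
    total_weight = sum(weights.values())
    ranked = []
    for provider, s in scores.items():
        value = sum(weights[c] * s[c] for c in weights) / total_weight
        ranked.append((provider, round(value, 2)))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical criteria weights reflecting one organization's priorities.
    weights = {"ecosystem_fit": 3, "team_expertise": 2, "cost": 2, "compliance": 1}
    # Hypothetical 1-5 scores; fill in from your own evaluation.
    scores = {
        "provider_a": {"ecosystem_fit": 4, "team_expertise": 5, "cost": 3, "compliance": 4},
        "provider_b": {"ecosystem_fit": 5, "team_expertise": 3, "cost": 3, "compliance": 5},
        "provider_c": {"ecosystem_fit": 3, "team_expertise": 3, "cost": 5, "compliance": 4},
    }
    for provider, value in rank_providers(weights, scores):
        print(provider, value)
```

Making the weights explicit keeps the long-term factors named above, such as ecosystem integration and team expertise, from being crowded out by a single headline price.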

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of cloud AI infrastructure offerings and market conditions, which are subject to rapid change. Readers should conduct their own research and consider their specific requirements when selecting cloud infrastructure providers. The effectiveness and cost of cloud AI infrastructure may vary significantly based on specific use cases, workload characteristics, and organizational requirements. Performance benchmarks and cost comparisons are based on publicly available information and may not reflect actual results in specific deployment scenarios.