The exponential growth of machine learning applications has generated unprecedented volumes of training data that organizations must manage throughout their lifecycle. As artificial intelligence systems mature and evolve, the challenge of preserving historical training datasets while optimizing storage costs has become a critical concern for enterprises investing heavily in AI infrastructure. Cold storage solutions represent a sophisticated approach to this challenge, offering cost-effective long-term preservation of valuable machine learning assets that may be required for model retraining, compliance audits, or future research initiatives.

The strategic implementation of cold storage architectures enables organizations to maintain comprehensive historical records while minimizing operational expenses and maximizing the long-term value of their data investments.

Understanding Cold Storage in the AI Context

Cold storage is a data management approach in which infrequently accessed information is moved to lower-cost storage systems that prioritize capacity and durability over immediate accessibility. In the context of artificial intelligence and machine learning, this approach addresses the unique challenges posed by massive training datasets that may contain millions or billions of data points and occupy terabytes or petabytes of storage. Unlike traditional enterprise data that often follows predictable access patterns, machine learning training data exhibits complex lifecycle characteristics that require sophisticated storage strategies.

The implementation of cold storage for AI applications involves careful consideration of data retention policies, compliance requirements, and the potential need for future model iterations or comparative analyses. Organizations must balance the immediate cost savings of cold storage against the possibility that archived datasets may become valuable again as new machine learning techniques emerge or business requirements evolve. This strategic approach to data lifecycle management has become essential for organizations operating at scale in the artificial intelligence domain.

The Economics of ML Data Archiving

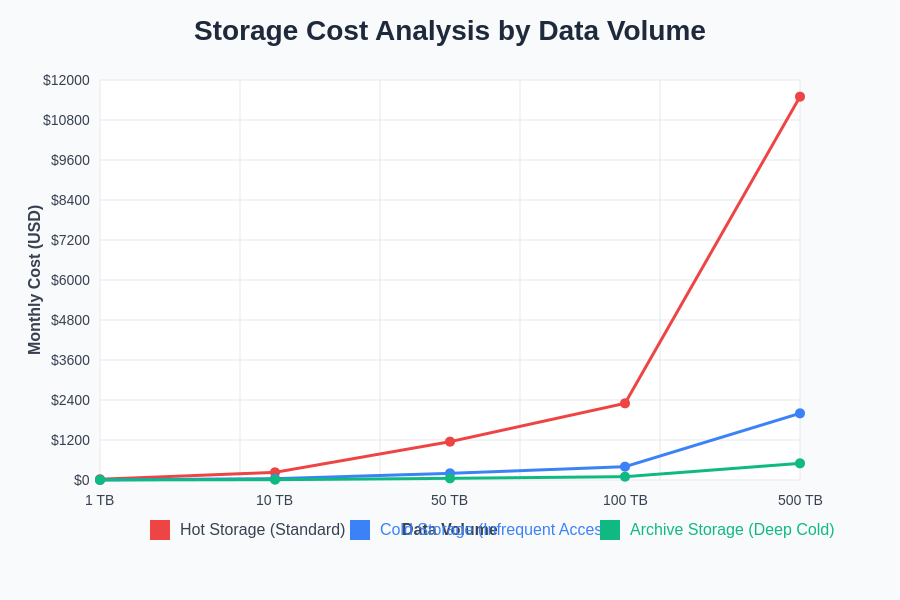

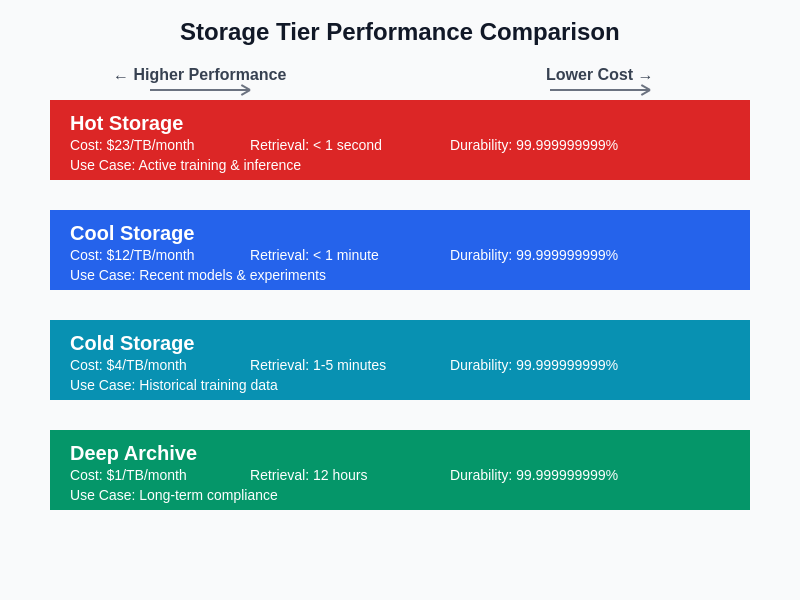

The financial implications of storing massive machine learning datasets extend far beyond per-gigabyte storage fees, encompassing network transfer fees, retrieval charges, minimum storage duration charges, and the overhead of managing the data. Hot storage provides immediate accessibility, but its monthly cost grows in direct proportion to data volume. Cold storage tiers typically reduce per-gigabyte storage fees by sixty to eighty percent or more compared to standard tiers, although retrieval and early-deletion charges can offset part of that saving for data that is read back often. This makes them attractive for organizations managing large-scale AI operations with extensive historical data requirements.
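As a rough illustration of the trade-off described above, the sketch below compares the monthly cost of a hot tier and a cold tier once retrieval fees are included, and computes how much of a dataset can be read back per month before the cold tier stops being cheaper. The prices are placeholders chosen for illustration, not quotes from any provider, and the names `TierPricing`, `monthly_cost`, and `break_even_retrieval_fraction` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TierPricing:
    """Hypothetical per-GB prices; replace with your provider's actual rates."""
    name: str
    storage_per_gb_month: float  # USD per GB stored per month
    retrieval_per_gb: float      # USD per GB read back


def monthly_cost(tier: TierPricing, stored_gb: float, retrieved_gb: float) -> float:
    """Storage fee plus retrieval fee for one month."""
    return stored_gb * tier.storage_per_gb_month + retrieved_gb * tier.retrieval_per_gb


def break_even_retrieval_fraction(hot: TierPricing, cold: TierPricing) -> float:
    """Fraction of the dataset read per month at which hot and cold cost the same."""
    storage_saving = hot.storage_per_gb_month - cold.storage_per_gb_month
    retrieval_penalty = cold.retrieval_per_gb - hot.retrieval_per_gb
    if retrieval_penalty <= 0:
        return float("inf")  # reading from cold is never more expensive
    return storage_saving / retrieval_penalty


# Placeholder prices for illustration only.
HOT = TierPricing("hot", storage_per_gb_month=0.020, retrieval_per_gb=0.0)
COLD = TierPricing("cold", storage_per_gb_month=0.004, retrieval_per_gb=0.02)

if __name__ == "__main__":
    size_gb = 100_000  # a 100 TB training archive
    for fraction in (0.0, 0.1, 1.0):
        hot = monthly_cost(HOT, size_gb, size_gb * fraction)
        cold = monthly_cost(COLD, size_gb, size_gb * fraction)
        print(f"retrieve {fraction:.0%} per month: hot=${hot:,.0f} cold=${cold:,.0f}")
```

With these placeholder prices, the cold tier stays cheaper as long as less than about 80 percent of the archive is read back each month. Real pricing also includes request fees, egress, and minimum storage durations, which this sketch deliberately leaves out.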

The economic benefits of cold storage become particularly compelling when organizations consider the total cost of ownership over multi-year periods, including factors such as data durability guarantees, geographic redundancy, and integration with existing data processing pipelines.

Strategic Data Lifecycle Management

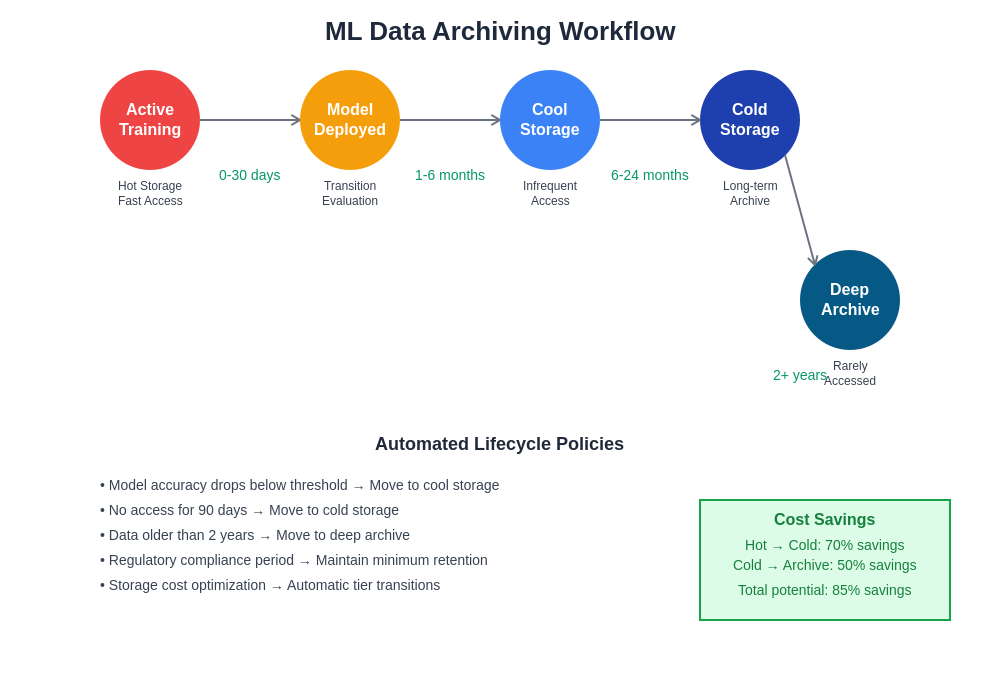

Effective cold storage implementation requires the development of comprehensive data lifecycle management strategies that define clear policies for data classification, retention periods, and archival triggers. Organizations must establish frameworks that automatically identify training datasets suitable for cold storage based on criteria such as age, usage frequency, model performance metrics, and regulatory requirements. These automated policies ensure that valuable data assets are preserved while minimizing manual intervention and reducing the risk of inadvertent data loss.
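One way such an archival policy could look in code is sketched below. It classifies a dataset by age, recent usage, and retention status. The `DatasetRecord` fields, the thresholds, and the action names are all assumptions made for illustration; a real policy would draw these from the organization's data catalog and retention rules.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class DatasetRecord:
    """Illustrative catalog entry; the fields are assumptions, not a standard schema."""
    name: str
    created: date
    reads_last_90_days: int
    backs_production_model: bool = False
    legal_hold: bool = False
    retain_until: Optional[date] = None  # None means no mandated end of retention


def choose_action(record: DatasetRecord, today: date,
                  min_age_days: int = 180, max_recent_reads: int = 2) -> str:
    """Return 'keep_hot', 'move_to_cold', or 'review_for_deletion'."""
    # Retention has lapsed and nothing forces us to keep the data:
    # flag it for human review rather than deleting automatically.
    if (record.retain_until is not None and today > record.retain_until
            and not record.legal_hold and not record.backs_production_model):
        return "review_for_deletion"
    # Data behind a deployed model stays hot so retraining is not delayed.
    if record.backs_production_model:
        return "keep_hot"
    age_days = (today - record.created).days
    if age_days >= min_age_days and record.reads_last_90_days <= max_recent_reads:
        return "move_to_cold"
    return "keep_hot"
```

Routing expired data to a review step instead of deleting it outright is a deliberate choice here, since it reduces the risk of inadvertent data loss that the policy is meant to prevent.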

The complexity of machine learning workflows introduces additional considerations for lifecycle management, including the need to maintain relationships between training data, model artifacts, experiment logs, and performance metrics. Cold storage strategies must account for these interdependencies while ensuring that archived datasets remain accessible for future analysis, regulatory compliance, or emergency model reconstruction scenarios. This holistic approach to data lifecycle management becomes increasingly important as organizations scale their AI operations and accumulate years of historical training data.

Cloud Provider Cold Storage Solutions

Major cloud computing platforms have developed specialized cold storage offerings designed to meet the unique requirements of large-scale data archiving. Amazon Web Services provides the Amazon S3 Glacier storage classes, including S3 Glacier Flexible Retrieval and S3 Glacier Deep Archive, which offer different levels of retrieval latency and cost optimization for long-term data preservation. Microsoft Azure offers similar capabilities through the archive access tier of Azure Blob Storage, while Google Cloud provides the Coldline and Archive storage classes in Cloud Storage, which can be used alongside machine learning workflows and data processing pipelines.

These cloud-native solutions typically include features such as automated data lifecycle transitions, intelligent tiering based on access patterns, and integration with data processing frameworks commonly used in machine learning environments. The choice of cloud provider and specific cold storage offering depends on factors such as existing infrastructure investments, compliance requirements, data sovereignty concerns, and integration capabilities with current machine learning platforms and tools.
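As one concrete example of an automated lifecycle transition, the sketch below builds an Amazon S3 lifecycle configuration that moves objects under a prefix to the `GLACIER` storage class and later to `DEEP_ARCHIVE`. The helper name, rule ID, prefix, bucket name, and day thresholds are assumptions for illustration. The dictionary follows the shape accepted by boto3's `put_bucket_lifecycle_configuration`; the call itself is shown only in a comment so the block runs without AWS credentials.

```python
def build_lifecycle_configuration(prefix: str,
                                  glacier_after_days: int = 90,
                                  deep_archive_after_days: int = 365) -> dict:
    """Build an S3 lifecycle configuration for objects under `prefix`.

    The thresholds are illustrative defaults, not recommendations.
    """
    if not 0 < glacier_after_days < deep_archive_after_days:
        raise ValueError("expected 0 < glacier_after_days < deep_archive_after_days")
    return {
        "Rules": [
            {
                "ID": "archive-training-data",  # hypothetical rule name
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                    {"Days": deep_archive_after_days, "StorageClass": "DEEP_ARCHIVE"},
                ],
            }
        ]
    }


config = build_lifecycle_configuration("training-data/")

# Applying it requires boto3 and credentials; the bucket name is a placeholder:
#
#   import boto3
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="example-ml-archive",
#       LifecycleConfiguration=config,
#   )
```

Other providers expose comparable lifecycle rules through their own APIs, so the same policy would need to be expressed separately for each platform in use.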

The financial analysis of different cold storage options reveals significant cost variations based on data volume, retention period, and expected retrieval frequency. Organizations implementing cold storage strategies must carefully evaluate these factors to optimize their total cost of ownership while maintaining appropriate access capabilities for future requirements.

Data Format Optimization for Long-Term Storage

The selection and optimization of data formats play a crucial role in maximizing the efficiency of cold storage implementations for machine learning applications. Columnar formats such as Parquet and ORC, which include built-in compression, and specialized machine learning formats like TFRecord, which supports optional compression, can significantly reduce storage requirements while maintaining data integrity and enabling efficient retrieval operations. Organizations must balance compression ratios against decompression overhead and compatibility with future processing systems that may need to access archived data.

Advanced compression techniques and data deduplication strategies can further optimize storage efficiency, particularly for datasets that contain redundant information or can benefit from delta compression approaches. The implementation of these optimization techniques requires careful consideration of data access patterns, processing requirements, and the potential impact on retrieval performance when archived data needs to be accessed for model retraining or analysis purposes.
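The combination of deduplication and compression can be sketched with the Python standard library alone. The example below stores each distinct payload once, keyed by its SHA-256 digest, and compresses it with gzip. It is a minimal in-memory illustration with hypothetical function names, not a substitute for a storage system's own deduplication or for format-level compression.

```python
import gzip
import hashlib
from typing import Dict, Tuple


def pack(files: Dict[str, bytes]) -> Tuple[Dict[str, bytes], Dict[str, str]]:
    """Deduplicate by content hash, then compress each unique payload.

    Returns (store, index): store maps digest -> compressed bytes,
    index maps original file name -> digest.
    """
    store: Dict[str, bytes] = {}
    index: Dict[str, str] = {}
    for name, payload in files.items():
        digest = hashlib.sha256(payload).hexdigest()
        if digest not in store:  # identical content is stored only once
            store[digest] = gzip.compress(payload)
        index[name] = digest
    return store, index


def unpack(store: Dict[str, bytes], index: Dict[str, str], name: str) -> bytes:
    """Recover the original bytes for one file name."""
    return gzip.decompress(store[index[name]])


if __name__ == "__main__":
    shard = b"label,text\n" + b"0,hello world\n" * 1000
    files = {"run1/train.csv": shard, "run2/train.csv": shard, "run2/val.csv": b"label,text\n"}
    store, index = pack(files)
    raw = sum(len(v) for v in files.values())
    packed = sum(len(v) for v in store.values())
    print(f"{len(files)} files, {len(store)} unique payloads, {raw} -> {packed} bytes")
```

The cost of this approach shows up at retrieval time: every read requires an index lookup and a decompression step, which is the retrieval overhead the paragraph above warns about.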

Metadata Management and Data Governance

Comprehensive metadata management becomes critical when implementing cold storage solutions for machine learning training data, as organizations must maintain detailed records of archived datasets to ensure future accessibility and compliance. Metadata should include information such as data provenance, processing history, model associations, quality metrics, and retention policies that enable efficient discovery and retrieval of archived information when needed. This metadata management capability becomes increasingly important as organizations accumulate extensive archives spanning multiple years and countless training experiments.
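A manifest record stored alongside each archived dataset is one simple way to capture this metadata. The sketch below uses a dataclass serialized to JSON. The field names and the example URI format are assumptions for illustration, not an established schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ArchiveManifest:
    """Illustrative manifest; field names are assumptions, not a standard."""
    dataset_id: str
    storage_uri: str                 # where the archived object lives
    sha256: str                      # checksum recorded at archive time
    source_systems: List[str] = field(default_factory=list)     # provenance
    processing_steps: List[str] = field(default_factory=list)   # processing history
    trained_model_ids: List[str] = field(default_factory=list)  # model associations
    record_count: int = 0
    retain_until: str = ""           # ISO 8601 date, kept as text for portability

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "ArchiveManifest":
        return cls(**json.loads(text))


if __name__ == "__main__":
    manifest = ArchiveManifest(
        dataset_id="reviews-2022-q3",
        storage_uri="s3://example-ml-archive/reviews-2022-q3.parquet",  # placeholder
        sha256="0" * 64,  # placeholder digest
        source_systems=["web-crawl"],
        processing_steps=["dedupe", "tokenize"],
        trained_model_ids=["sentiment-v4"],
        record_count=1_250_000,
        retain_until="2030-01-01",
    )
    print(manifest.to_json())
```

Keeping the manifest in a small, always-accessible store separate from the archived data means datasets can be discovered without paying for a cold storage retrieval.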

Effective governance frameworks must also address data privacy considerations, regulatory compliance requirements, and cross-jurisdictional data handling policies that may impact how archived training data can be stored, accessed, and processed in different geographic regions.

Retrieval Strategies and Performance Considerations

The design of efficient retrieval strategies represents a critical aspect of cold storage implementation, as organizations must balance cost optimization with the ability to access archived data when business requirements or technical needs arise. Depending on the provider and tier, retrieval from cold storage can take anywhere from milliseconds to many hours, and tiers that return data quickly usually charge higher retrieval fees or impose minimum storage durations. This requires careful planning for scenarios where archived training data may be needed for model retraining, comparative analysis, or regulatory compliance purposes. Organizations must develop retrieval workflows that account for these latencies while minimizing associated costs.
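A retrieval workflow often comes down to picking the cheapest retrieval option that still meets a deadline. The sketch below shows that selection. The option names, latencies, and prices are placeholders invented for illustration and do not describe any specific provider's offering.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class RetrievalOption:
    name: str
    max_latency_hours: float  # worst-case time until data is readable
    price_per_gb: float       # USD per GB retrieved


def cheapest_option_in_time(options: Iterable[RetrievalOption],
                            hours_until_needed: float) -> Optional[RetrievalOption]:
    """Return the lowest-priced option that finishes before the deadline, if any."""
    feasible = [o for o in options if o.max_latency_hours <= hours_until_needed]
    if not feasible:
        return None  # no option is fast enough; the deadline must move
    return min(feasible, key=lambda o: o.price_per_gb)


# Placeholder options for illustration only.
OPTIONS = [
    RetrievalOption("expedited", max_latency_hours=0.25, price_per_gb=0.03),
    RetrievalOption("standard", max_latency_hours=5.0, price_per_gb=0.01),
    RetrievalOption("bulk", max_latency_hours=12.0, price_per_gb=0.0025),
]

if __name__ == "__main__":
    for deadline in (24, 6, 1):
        choice = cheapest_option_in_time(OPTIONS, deadline)
        print(f"needed in {deadline}h -> {choice.name if choice else 'none'}")
```

Planning retraining jobs a day ahead lets the workflow use the cheapest option, while last-minute requests force the more expensive ones.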

Advanced retrieval strategies may include the implementation of tiered storage architectures where frequently accessed portions of archived datasets are maintained in faster storage tiers, while less critical data remains in deep cold storage. This approach enables organizations to optimize both cost and performance by maintaining strategic subsets of data in more accessible formats while preserving the complete historical record in cost-effective cold storage systems.

The systematic approach to machine learning data archiving encompasses multiple stages from active training data through long-term cold storage, with automated policies governing transitions between storage tiers based on access patterns, age, and business value assessments.

Compliance and Regulatory Considerations

Machine learning training data often contains sensitive information that must be managed in accordance with various regulatory requirements, including data protection laws, industry-specific compliance standards, and cross-border data transfer restrictions. Cold storage implementations must incorporate appropriate security controls, audit capabilities, and data handling procedures that ensure compliance throughout the extended retention periods typically associated with archived machine learning datasets.

The complexity of regulatory compliance in cold storage environments requires organizations to implement comprehensive audit trails, encryption standards, and access controls that can be maintained and verified over multi-year retention periods. These compliance frameworks must also account for evolving regulatory requirements and the potential need to modify data handling procedures or security controls for archived datasets without compromising data integrity or accessibility.

Security and Encryption Framework

The extended retention periods associated with cold storage implementations necessitate robust security frameworks that can protect sensitive training data throughout its archived lifecycle. Organizations must implement encryption strategies that balance security requirements with performance considerations, including encryption at rest, in transit, and during processing operations. The management of encryption keys over extended periods introduces additional complexity that must be addressed through comprehensive key lifecycle management policies and procedures.

Advanced security frameworks may include features such as immutable storage capabilities, cryptographic verification of data integrity, and multi-layered access controls that ensure archived training data remains protected against unauthorized access while maintaining the ability to verify data authenticity when retrieval becomes necessary. These security considerations become particularly important for organizations operating in regulated industries or handling personally identifiable information within their training datasets.
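Cryptographic verification of data integrity can be as simple as recording a SHA-256 digest when a file is archived and recomputing it after the file is restored. The sketch below does this with the Python standard library. The function names are hypothetical, and it assumes the expected digest was stored somewhere trustworthy at archive time, such as a dataset manifest.

```python
import hashlib
import hmac


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Stream the file in chunks so large archives do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restored_file(path: str, expected_sha256: str) -> bool:
    """Return True if the restored file matches the digest recorded at archive time."""
    actual = sha256_of_file(path)
    # compare_digest performs a constant-time comparison of the two strings.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A checksum detects corruption or tampering only relative to the recorded digest, so the digest itself needs the same protection as the data, for example by keeping it in write-once storage.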

Integration with ML Operations Pipelines

Successful cold storage implementation requires seamless integration with existing machine learning operations pipelines, ensuring that data archiving processes do not disrupt ongoing development and training activities. Organizations must develop integration strategies that enable automated archiving of training data following model deployment while maintaining the ability to retrieve historical datasets for model comparison, retraining, or research purposes. This integration requires careful attention to workflow dependencies and timing, so that a dataset is not archived while a training or evaluation job still depends on it.

Modern ML operations platforms increasingly include native support for cold storage integration, enabling organizations to implement archiving policies that automatically transition training data to cold storage based on predefined criteria while maintaining comprehensive tracking of data lineage and model associations. These integrated approaches reduce operational overhead while ensuring that archived data remains accessible through standard ML operations interfaces and tools.

Cost Optimization Strategies

The implementation of effective cost optimization strategies for cold storage requires ongoing analysis of data access patterns, storage utilization, and retrieval costs to identify opportunities for further efficiency improvements. Organizations should regularly review their archiving policies to ensure that data classification criteria remain aligned with business requirements while taking advantage of new storage offerings and pricing models that may provide additional cost savings opportunities.

Advanced cost optimization approaches may include the use of intelligent data placement strategies that automatically select the most cost-effective storage tier based on predicted access patterns, geographic considerations, and compliance requirements. These automated optimization systems can significantly reduce storage costs while maintaining appropriate access for business-critical operations.

The comparative analysis of different storage tiers demonstrates the trade-offs between cost efficiency and access performance, enabling organizations to make informed decisions about appropriate archiving strategies for different types of machine learning training data.

Disaster Recovery and Business Continuity

Cold storage implementations must incorporate comprehensive disaster recovery and business continuity planning to protect against data loss scenarios that could compromise years of accumulated training data investments. Organizations must implement geographic redundancy, backup verification procedures, and recovery testing protocols that ensure archived datasets can be restored in emergency situations while maintaining data integrity and compliance requirements.

The development of effective disaster recovery strategies for cold storage environments requires consideration of extended recovery time objectives and recovery point objectives that may differ significantly from traditional enterprise applications. Organizations must balance the cost of enhanced redundancy and backup capabilities against the potential business impact of losing access to historical training data that may be critical for regulatory compliance or future business operations.
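To see why recovery time objectives for cold storage differ from those of traditional applications, it helps to estimate restore time as retrieval latency plus transfer time. The sketch below does that arithmetic under simplifying assumptions: retrieval and transfer happen one after the other, bandwidth is sustained at the stated rate, and sizes use decimal terabytes. The function names and example figures are hypothetical.

```python
def estimated_restore_hours(size_tb: float,
                            retrieval_latency_hours: float,
                            bandwidth_gbps: float) -> float:
    """Retrieval latency plus time to transfer `size_tb` at `bandwidth_gbps`."""
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth_gbps must be positive")
    bits = size_tb * 8e12                        # decimal terabytes to bits
    transfer_seconds = bits / (bandwidth_gbps * 1e9)
    return retrieval_latency_hours + transfer_seconds / 3600


def meets_rto(size_tb: float, retrieval_latency_hours: float,
              bandwidth_gbps: float, rto_hours: float) -> bool:
    """True if the estimated restore time fits within the recovery time objective."""
    return estimated_restore_hours(size_tb, retrieval_latency_hours, bandwidth_gbps) <= rto_hours


if __name__ == "__main__":
    # Example: 100 TB archive, 12 h retrieval latency, 10 Gbps sustained link.
    hours = estimated_restore_hours(100, 12, 10)
    print(f"estimated restore time: {hours:.1f} hours")
```

For large archives the transfer term can exceed the retrieval latency, which is why recovery testing should measure end-to-end restore time rather than relying on a tier's advertised latency.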

Future Trends and Technological Evolution

The evolution of cold storage technologies continues to be driven by the increasing scale of machine learning operations and the growing recognition of training data as valuable intellectual property that requires long-term preservation. Emerging technologies such as DNA-based storage systems, advanced compression algorithms, and intelligent data placement systems promise to further reduce storage costs while improving accessibility and durability characteristics for archived machine learning datasets.

Organizations implementing cold storage strategies must consider the long-term evolution of storage technologies and data formats to ensure that archived training data remains accessible as technological standards evolve. This forward-looking approach requires the development of migration strategies and format standardization policies that can accommodate technological changes while preserving the integrity and accessibility of historical training data assets.

The continued advancement of artificial intelligence and machine learning technologies will likely increase the strategic value of historical training data, making effective cold storage implementation increasingly important for organizations operating in data-intensive industries. Organizations that implement comprehensive cold storage strategies today will be better positioned to leverage their historical data assets in future AI initiatives.

Disclaimer

This article provides general information about cold storage strategies for machine learning data and should not be considered as specific technical or financial advice. Organizations should conduct thorough assessments of their specific requirements, compliance obligations, and technical constraints when implementing cold storage solutions. The effectiveness and cost benefits of cold storage implementations may vary significantly based on data characteristics, access patterns, and organizational requirements. Readers should consult with qualified professionals and conduct pilot implementations before making significant investments in cold storage infrastructure.