The modern machine learning landscape demands sophisticated experiment management solutions that can handle the complexity of iterative model development, hyperparameter optimization, and collaborative research workflows. Among the leading platforms in this space, Comet and Neptune.ai have emerged as prominent solutions, each offering distinct approaches to experiment tracking, model management, and team collaboration. Understanding the nuances, strengths, and limitations of these platforms is crucial for data science teams seeking to optimize their machine learning operations and accelerate their path to production-ready models.

The choice between Comet and Neptune.ai represents more than a simple tool selection; it involves strategic decisions about how teams will structure their research processes, manage computational resources, and maintain reproducibility across complex machine learning projects.

The Evolution of ML Experiment Management

Machine learning experiment management has evolved from simple spreadsheet tracking to sophisticated platforms that provide comprehensive oversight of the entire model development lifecycle. Traditional approaches often resulted in scattered experiment data, difficulty in reproducing results, and challenges in collaboration among team members working on similar problems. Modern platforms like Comet and Neptune.ai address these fundamental challenges by providing centralized repositories for experiment metadata, automated tracking of model performance metrics, and collaborative features that enable seamless knowledge sharing across research teams.

The significance of robust experiment management becomes particularly apparent in enterprise environments where multiple data scientists work simultaneously on related problems, where model performance needs to be rigorously validated before deployment, and where compliance requirements demand detailed audit trails of model development processes. Both Comet and Neptune.ai have been designed to address these enterprise-grade requirements while maintaining accessibility for smaller teams and individual researchers.

Comet: Comprehensive ML Operations Platform

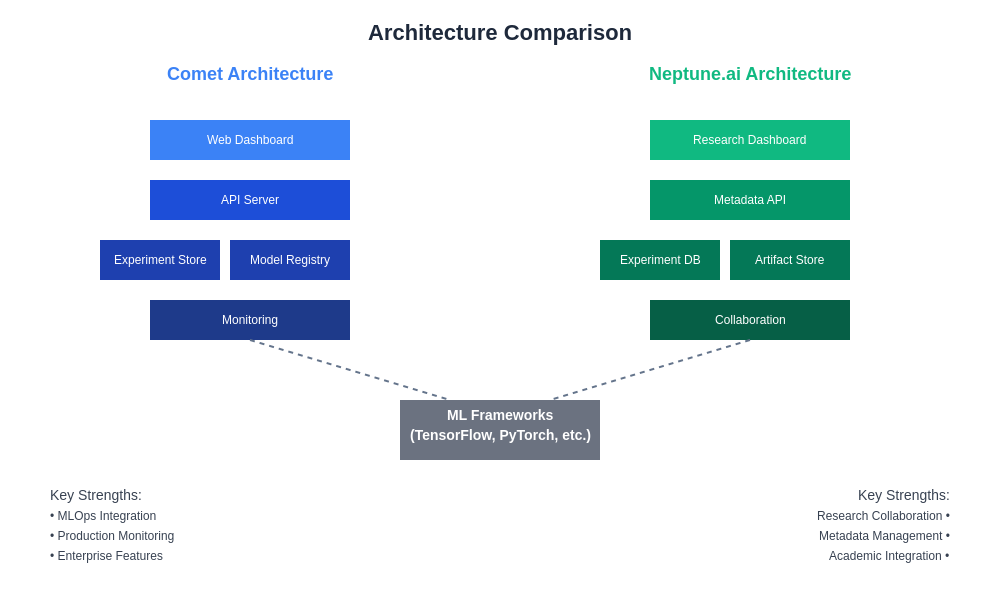

Comet positions itself as a comprehensive machine learning operations platform that provides end-to-end support for the entire machine learning lifecycle. The platform’s architecture emphasizes ease of integration with existing workflows, supporting popular machine learning frameworks including TensorFlow, PyTorch, scikit-learn, and XGBoost through minimal code modifications. This integration-first approach has made Comet particularly attractive to teams that want to enhance their existing workflows without significantly restructuring their development processes.

The platform’s experiment tracking capabilities extend beyond simple metric logging to include artifact management, code versioning, and dependency tracking. For supported frameworks, Comet can automatically capture environment information, hyperparameters, and model configurations, building a detailed record of each experiment that makes results far easier to reproduce. This level of detail proves invaluable during model debugging sessions and when trying to understand which factors drive performance differences across experimental configurations.
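
To make concrete what such a record contains, here is a minimal stdlib-only sketch. It is not Comet’s SDK: `RunRecord`, `capture_environment`, and `fingerprint` are hypothetical names, the environment capture is deliberately tiny, and the loss values are made up. The fingerprint illustrates one way captured configuration supports reproduction: two runs with identical parameters and environment hash to the same value.

```python
import hashlib
import json
import platform
import sys
from dataclasses import dataclass, field


def capture_environment() -> dict:
    # A tiny subset of what real trackers record (they also capture
    # installed packages, git commit, command line, hardware, and more).
    return {"python": sys.version.split()[0], "os": platform.system()}


@dataclass
class RunRecord:
    """Hypothetical minimal experiment record; not Comet's API."""
    params: dict
    environment: dict = field(default_factory=capture_environment)
    metrics: dict = field(default_factory=dict)

    def log_metric(self, name: str, value: float, step: int) -> None:
        # Keep every (step, value) pair so curves can be re-plotted later.
        self.metrics.setdefault(name, []).append((step, value))

    def fingerprint(self) -> str:
        # Stable hash of configuration + environment: runs that share a
        # fingerprint were launched with the same settings on the same stack.
        payload = json.dumps(
            {"params": self.params, "env": self.environment}, sort_keys=True
        )
        return hashlib.sha256(payload.encode()).hexdigest()[:12]


run = RunRecord(params={"lr": 3e-4, "batch_size": 64, "arch": "resnet18"})
for step, loss in enumerate([0.92, 0.71, 0.58]):
    run.log_metric("train/loss", loss, step)
```

Serializing with `sort_keys=True` makes the fingerprint independent of the order in which parameters were supplied.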

Comet’s visualization capabilities provide intuitive interfaces for exploring experiment results, comparing model performance across multiple runs, and identifying trends in hyperparameter optimization processes. The platform’s dashboard system allows researchers to create custom views that highlight the metrics most relevant to their specific use cases, facilitating rapid identification of promising experimental directions and optimization opportunities.

Neptune.ai: Research-Focused Experiment Management

Neptune.ai has established itself as a research-focused experiment management platform that prioritizes flexibility, scalability, and deep integration with the scientific computing ecosystem. The platform’s design philosophy emphasizes providing researchers with powerful tools for organizing, comparing, and analyzing experimental results while maintaining the flexibility necessary for diverse research workflows and methodologies.

The platform’s metadata management system represents one of its strongest features, allowing researchers to attach rich contextual information to experiments including research hypotheses, methodological notes, and detailed parameter explanations. This capability proves particularly valuable in academic and research environments where experimental context and methodology documentation are crucial for peer review processes and collaborative research efforts.
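
Neptune.ai’s Python client organizes run metadata into slash-separated namespaces (a field such as `train/loss`, for instance). The sketch below is a hypothetical stdlib stand-in, not Neptune’s client, showing how such paths can map onto nested structure so that hypotheses and methodological notes sit beside the parameters they explain. `MetadataStore` and the example field names are assumptions for illustration.

```python
from typing import Any


class MetadataStore:
    """Hypothetical nested metadata store addressed by slash-separated paths."""

    def __init__(self) -> None:
        self._root: dict = {}

    def __setitem__(self, path: str, value: Any) -> None:
        *parents, leaf = path.split("/")
        node = self._root
        for key in parents:
            # Create intermediate namespaces on demand.
            node = node.setdefault(key, {})
        node[leaf] = value

    def __getitem__(self, path: str) -> Any:
        node: Any = self._root
        for key in path.split("/"):
            node = node[key]
        return node


run = MetadataStore()
run["parameters/optimizer/lr"] = 1e-3
run["parameters/optimizer/name"] = "adamw"
run["notes/hypothesis"] = "Label smoothing reduces overconfidence on rare classes"
run["notes/method"] = "5-fold cross-validation, seed fixed at 0"
```

Reading a namespace such as `run["notes"]` returns everything filed under it, which is what makes grouped context easy to retrieve alongside results.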

Neptune.ai’s querying and filtering capabilities enable sophisticated analysis of experimental results across large collections of experiments. Researchers can construct complex queries that identify experiments based on combinations of hyperparameters, performance metrics, and metadata tags, enabling systematic analysis of factors that influence model performance. This analytical depth supports rigorous statistical analysis of experimental results and facilitates evidence-based decision-making in model development processes.
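
The kind of query described above can be sketched over plain Python records. This is an illustration only, not Neptune.ai’s query language or API; the run IDs, tags, and metric values are invented, and `query` and `mean_metric_by` are hypothetical helpers. The first filters by tag, a hyperparameter predicate, and a metric floor; the second groups runs by one hyperparameter to compare its effect.

```python
from collections import defaultdict
from statistics import mean

# Toy run records; IDs, tags, and values are invented for illustration.
runs = [
    {"id": "RUN-1", "tags": {"baseline"}, "params": {"lr": 1e-2, "dropout": 0.0}, "val_acc": 0.81},
    {"id": "RUN-2", "tags": {"sweep"}, "params": {"lr": 1e-3, "dropout": 0.1}, "val_acc": 0.86},
    {"id": "RUN-3", "tags": {"sweep"}, "params": {"lr": 1e-3, "dropout": 0.3}, "val_acc": 0.84},
    {"id": "RUN-4", "tags": {"sweep"}, "params": {"lr": 1e-4, "dropout": 0.1}, "val_acc": 0.79},
]


def query(runs, tag, where, min_acc):
    """IDs of runs with `tag` whose params satisfy `where`, best val_acc first."""
    hits = [r for r in runs
            if tag in r["tags"] and where(r["params"]) and r["val_acc"] >= min_acc]
    return [r["id"] for r in sorted(hits, key=lambda r: r["val_acc"], reverse=True)]


def mean_metric_by(runs, param, metric="val_acc"):
    """Average a metric over runs grouped by one hyperparameter's value."""
    groups = defaultdict(list)
    for r in runs:
        groups[r["params"][param]].append(r[metric])
    return {value: mean(scores) for value, scores in groups.items()}


best_sweeps = query(runs, tag="sweep", where=lambda p: p["lr"] <= 1e-3, min_acc=0.8)
by_lr = mean_metric_by(runs, "lr")
```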

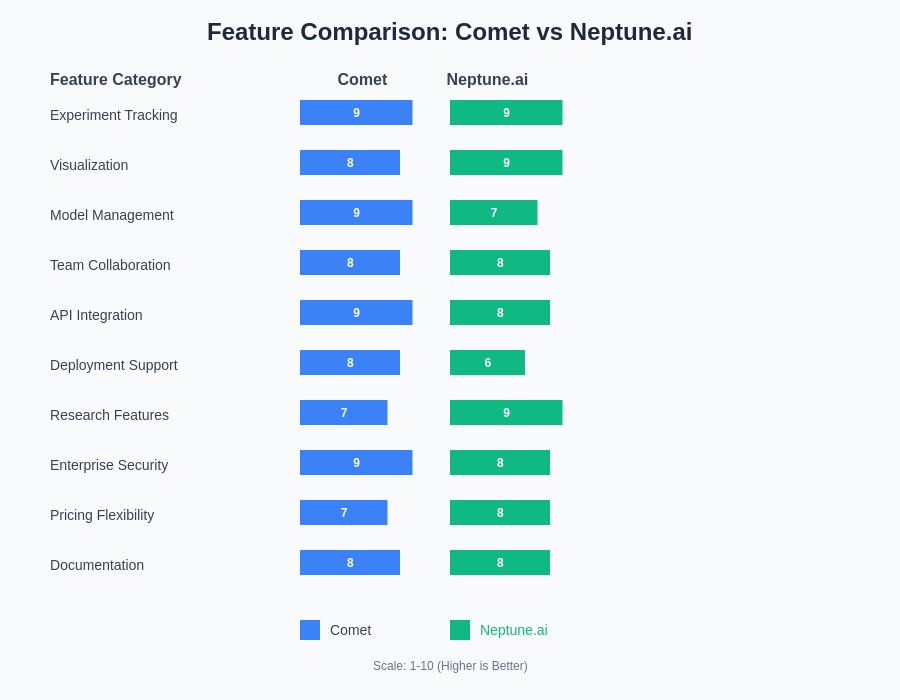

The comparison chart above highlights distinct strengths in each platform’s feature set, with Comet excelling in model management and deployment support while Neptune.ai demonstrates superior research-oriented capabilities and visualization tools.

Neptune.ai’s collaboration features are designed to support distributed research teams working on long-term projects where knowledge sharing and result reproducibility are paramount concerns. The platform provides granular access controls, comprehensive audit trails, and collaborative annotation features that enable effective teamwork while maintaining data security and experimental integrity.

Integration and Compatibility Analysis

Both platforms demonstrate strong integration capabilities with popular machine learning frameworks and development environments, but their approaches differ in significant ways that impact user experience and adoption requirements. Comet emphasizes seamless integration through minimal code changes, providing lightweight logging libraries that can be quickly incorporated into existing training scripts with minimal disruption to established workflows.
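
In Comet’s case, integration usually means creating an `Experiment` object near the top of a script and calling logging methods such as `log_metric` on it. The sketch below shows the same minimal-change idea without any SDK: a hypothetical `tracked` decorator records a training function’s keyword arguments and returned metrics into an in-memory `LOG` list standing in for a tracking server. The training loop is a placeholder.

```python
import functools
import time

LOG: list = []  # stand-in for a remote tracking backend


def tracked(fn):
    """Hypothetical decorator: record a training function's keyword
    arguments as parameters and its returned dict as metrics."""
    @functools.wraps(fn)
    def wrapper(**hparams):
        start = time.perf_counter()
        metrics = fn(**hparams)
        LOG.append({
            "function": fn.__name__,
            "params": dict(hparams),
            "metrics": dict(metrics),
            "seconds": time.perf_counter() - start,
        })
        return metrics
    return wrapper


@tracked  # the only change to the existing training code
def train(lr: float, epochs: int) -> dict:
    loss = 1.0
    for _ in range(epochs):
        loss *= 1 - lr  # placeholder for a real training loop
    return {"final_loss": loss}


train(lr=0.1, epochs=3)
```

The wrapper accepts keyword arguments only, which keeps every logged value named; a real integration would also need to handle positional arguments.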

Neptune.ai takes a more comprehensive approach to integration, offering deeper hooks into the machine learning development process that provide more granular control over experiment tracking and metadata management. This approach requires slightly more setup effort but provides greater flexibility for teams with complex experimental workflows or specialized tracking requirements.

The platforms differ significantly in their approach to cloud integration and deployment options. Comet offers both cloud-hosted and on-premises deployment options, with particular strength in hybrid cloud environments that require data to remain within specific geographic regions or security boundaries. Neptune.ai is used primarily as a cloud-hosted service (a self-hosted option has also been offered for enterprise deployments) and provides robust API access that enables integration with existing infrastructure and custom deployment scenarios.

Performance Tracking and Visualization

The visualization and performance tracking capabilities of both platforms represent critical differentiators that significantly impact user experience and analytical effectiveness. Comet provides comprehensive dashboards with real-time updating capabilities that enable researchers to monitor long-running experiments and quickly identify performance trends or potential issues. The platform’s visualization system supports custom chart types, interactive filtering, and collaborative annotation features that facilitate team discussions around experimental results.

Neptune.ai’s visualization approach emphasizes flexibility and customization, providing powerful tools for creating sophisticated analytical views that can accommodate diverse research methodologies and reporting requirements. The platform’s notebook integration capabilities enable seamless incorporation of experimental results into research publications and technical reports, supporting the academic and research community’s documentation requirements.

Model Management and Deployment

Model management capabilities represent another significant area of differentiation between these platforms, with implications for teams transitioning from research to production deployment. Comet provides comprehensive model registry functionality that supports versioning, staging, and deployment workflows, with integrations for deployment tooling such as Docker and Kubernetes and for the major cloud providers.
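
The versioning and staging workflow can be sketched with a small in-memory registry. Comet’s model registry has its own API and stage conventions; the class, the stage names (`staging`, `production`, `archived`), and the artifact paths below are hypothetical. The one rule encoded here, a single production version with the previous one archived on promotion, is a common convention rather than a documented Comet behavior.

```python
from dataclasses import dataclass

STAGES = ("none", "staging", "production", "archived")


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str
    stage: str = "none"


class ModelRegistry:
    """Hypothetical in-memory registry: numbered versions, one stage each."""

    def __init__(self) -> None:
        self._models: dict = {}

    def register(self, name: str, artifact_uri: str) -> int:
        versions = self._models.setdefault(name, [])
        versions.append(ModelVersion(len(versions) + 1, artifact_uri))
        return len(versions)

    def promote(self, name: str, version: int, stage: str) -> None:
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage!r}")
        if stage == "production":
            # Keep a single production version; archive the previous one.
            for mv in self._models[name]:
                if mv.stage == "production":
                    mv.stage = "archived"
        self._models[name][version - 1].stage = stage

    def versions_in(self, name: str, stage: str) -> list:
        return [mv.version for mv in self._models[name] if mv.stage == stage]


registry = ModelRegistry()
v1 = registry.register("churn-classifier", "artifacts/churn/v1.pkl")
v2 = registry.register("churn-classifier", "artifacts/churn/v2.pkl")
registry.promote("churn-classifier", v1, "production")
registry.promote("churn-classifier", v2, "staging")
registry.promote("churn-classifier", v2, "production")
```

Archiving rather than deleting the old production version keeps a rollback target available.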

The platform’s model monitoring capabilities extend into production environments, providing observability tools that can detect data drift, performance degradation, and other issues that commonly affect deployed machine learning models. This end-to-end approach makes Comet particularly attractive to teams that need to manage the complete lifecycle from experimentation through production deployment and ongoing monitoring.
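
Drift detection generally compares the distribution of a feature in production against a reference sample, typically the training data. The sketch below uses the Population Stability Index (PSI), one widely used drift statistic; this article does not say which statistics Comet computes, so treat PSI as a swapped-in example. The 0.1 and 0.25 thresholds are a common rule of thumb, not a standard, and the data is synthetic.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index of one numeric feature.

    Bins are equal-width over the reference range; values outside that range
    are clamped into the edge bins. eps avoids log(0) for empty bins.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference

    def proportions(xs):
        counts = [0] * bins
        for x in xs:
            i = int((x - lo) / width)
            counts[min(max(i, 0), bins - 1)] += 1
        return [max(c / len(xs), eps) for c in counts]

    p, q = proportions(reference), proportions(current)
    # PSI = sum over bins of (q_i - p_i) * ln(q_i / p_i)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


reference = [i / 100 for i in range(100)]   # e.g. training-time feature values
production = [x + 0.5 for x in reference]   # the same feature after a shift
score = psi(reference, production)
status = "significant drift" if score > 0.25 else "moderate drift" if score > 0.1 else "stable"
```

In practice the statistic is computed per feature on a schedule, and an alert fires when it crosses the chosen threshold.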

Neptune.ai focuses more heavily on the research and development phases of model creation, providing sophisticated tools for model comparison, hyperparameter optimization tracking, and collaborative model evaluation. While the platform supports model storage and versioning, its primary strength lies in supporting the iterative research process rather than production deployment workflows.

Pricing and Scalability Considerations

The pricing models and scalability characteristics of these platforms significantly impact their suitability for different types of organizations and use cases. Comet offers tiered pricing that scales from individual researchers to enterprise teams, with different feature sets available at each level. The platform’s pricing structure considers factors including the number of experiments tracked, storage requirements, and advanced feature access, providing flexibility for organizations with varying budget constraints and usage patterns.

Neptune.ai employs a usage-based pricing model that charges based on computational resources consumed and storage requirements, making it particularly attractive for research teams with variable workloads or seasonal usage patterns. This model can provide cost advantages for teams that conduct intensive experimental campaigns followed by analysis periods with lower platform utilization.

Both platforms provide enterprise-grade security features, compliance certifications, and support options, but their specific offerings differ in ways that may be significant for organizations with particular security or compliance requirements. Understanding these differences is crucial for organizations operating in regulated industries or handling sensitive data that requires specific security controls.

The architectural differences between these platforms reflect their distinct strategic focuses, with Comet emphasizing MLOps integration and production readiness while Neptune.ai prioritizes research collaboration and metadata management capabilities.

The platforms also differ in how they handle large-scale experimental workloads and distributed computing environments. Comet’s architecture emphasizes horizontal scaling and integration with cloud computing platforms, making it well suited to teams that run large hyperparameter searches or train models on distributed computing clusters.

Team Collaboration and Workflow Integration

Effective team collaboration represents a critical success factor for machine learning projects, and both platforms provide distinct approaches to supporting collaborative research workflows. Comet’s collaboration features emphasize real-time sharing of experimental results, collaborative annotation of experiments, and integration with popular development tools including Git repositories, Jupyter notebooks, and integrated development environments.

The platform’s project organization features enable teams to structure their work according to different research initiatives, business objectives, or organizational hierarchies. Access control mechanisms provide granular permissions that can accommodate complex organizational structures while maintaining appropriate data security and intellectual property protection.
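
Granular permissions of this kind are commonly modeled as role-based access control: roles bundle permissions, and users are assigned roles per project. This is a generic sketch, not Comet’s permission model; the role names, users, and projects are hypothetical.

```python
from enum import Flag, auto


class Perm(Flag):
    READ = auto()
    WRITE = auto()
    DELETE = auto()
    MANAGE_MEMBERS = auto()


# Roles bundle permissions; names are hypothetical.
ROLES = {
    "viewer": Perm.READ,
    "contributor": Perm.READ | Perm.WRITE,
    "owner": Perm.READ | Perm.WRITE | Perm.DELETE | Perm.MANAGE_MEMBERS,
}

# Role assignments are scoped per project: (user, project) -> role.
ASSIGNMENTS = {
    ("alice", "fraud-detection"): "owner",
    ("bob", "fraud-detection"): "viewer",
    ("bob", "churn"): "contributor",
}


def allowed(user: str, project: str, perm: Perm) -> bool:
    role = ASSIGNMENTS.get((user, project))
    # Deny by default: no assignment means no access.
    return role is not None and perm in ROLES[role]
```

Because assignments are keyed by project, the same user can be a contributor on one initiative and read-only on another, and access is denied when no assignment exists.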

Neptune.ai’s collaboration approach emphasizes scientific rigor and research reproducibility, providing tools for peer review of experimental results, collaborative hypothesis formulation, and systematic documentation of research methodologies. The platform’s integration with academic publishing workflows makes it particularly attractive to research institutions and teams that need to produce publications based on their experimental work.

Data Security and Compliance Features

Data security and regulatory compliance considerations play increasingly important roles in platform selection decisions, particularly for organizations handling sensitive data or operating in regulated industries. Both platforms provide enterprise-grade security features, but their specific implementations and certification levels differ in ways that may impact suitability for particular use cases.

Comet provides comprehensive security controls including encryption at rest and in transit, role-based access control, single sign-on integration, and audit logging capabilities. The platform supports compliance with major regulatory frameworks and standards including GDPR, HIPAA, and SOC 2, making it suitable for organizations in healthcare, financial services, and other regulated industries.

Neptune.ai emphasizes data privacy and research confidentiality, providing features specifically designed to support academic research requirements including embargo periods for sensitive research, anonymous collaboration features, and integration with institutional authentication systems. The platform’s security model is designed to support the open science movement while maintaining appropriate controls for proprietary research.

Performance and Reliability Characteristics

The performance and reliability characteristics of experiment management platforms directly impact research productivity and user satisfaction, making these factors crucial considerations in platform selection decisions. Comet’s infrastructure emphasizes high availability and performance optimization, with global content delivery networks and redundant data centers ensuring consistent access regardless of geographic location.

The platform’s API design prioritizes low latency and high throughput, enabling efficient logging of experimental data even during intensive training runs or hyperparameter optimization campaigns. Automatic retry mechanisms and offline synchronization capabilities ensure that experimental data is preserved even during network disruptions or system outages.
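
Retry and offline synchronization usually combine exponential backoff with a local spool file that is replayed later. The sketch below shows that pattern in isolation; it is not Comet’s client code, and `BufferedLogger`, the spool format (JSON Lines), and the fake backend are assumptions for illustration.

```python
import json
import random
import tempfile
import time
from pathlib import Path


class BufferedLogger:
    """Hypothetical client: retry with exponential backoff, then spool to disk."""

    def __init__(self, send, spool_path, max_retries=3, base_delay=0.1):
        self.send = send  # callable(dict) -> None; raises ConnectionError when offline
        self.spool = Path(spool_path)
        self.max_retries = max_retries
        self.base_delay = base_delay

    def log(self, record: dict) -> bool:
        for attempt in range(self.max_retries):
            try:
                self.send(record)
                return True
            except ConnectionError:
                # Wait base, 2*base, 4*base, ... with jitter so many clients
                # do not retry in lockstep.
                time.sleep(self.base_delay * 2 ** attempt * random.uniform(0.5, 1.0))
        # Still failing: keep the record locally instead of dropping it.
        with self.spool.open("a") as f:
            f.write(json.dumps(record) + "\n")
        return False

    def sync(self) -> int:
        """Replay spooled records once the network is back; return how many."""
        if not self.spool.exists():
            return 0
        records = [json.loads(line) for line in self.spool.read_text().splitlines()]
        for record in records:
            # If send raises, the spool file is kept (already-sent records
            # would be replayed again on the next sync).
            self.send(record)
        self.spool.unlink()
        return len(records)


# Usage with a fake backend that starts offline.
sent, online = [], False


def fake_send(record):
    if not online:
        raise ConnectionError("backend unreachable")
    sent.append(record)


logger = BufferedLogger(fake_send, Path(tempfile.mkdtemp()) / "spool.jsonl", base_delay=0.0)
logger.log({"step": 1, "loss": 0.5})
logger.log({"step": 2, "loss": 0.4})
online = True  # connection restored
synced = logger.sync()
```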

Neptune.ai’s performance characteristics emphasize consistency and reliability over raw performance, with infrastructure designed to support long-term data preservation and consistent access to historical experimental results. The platform’s data storage architecture prioritizes data integrity and long-term accessibility, making it particularly suitable for research projects that require multi-year data retention and reproducibility guarantees.
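
One standard way to verify the long-term integrity of stored artifacts is to record a cryptographic digest when each file is logged and recompute it later. This generic sketch uses SHA-256 from Python’s standard library; it does not describe how Neptune.ai stores data, and the manifest format and file names are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large artifacts need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(paths):
    """Record a digest for each artifact at logging time."""
    return {str(p): sha256_of(p) for p in paths}


def verify(manifest):
    """Return artifacts that are missing or whose contents have changed."""
    return [p for p, digest in manifest.items()
            if not Path(p).exists() or sha256_of(p) != digest]


# Usage: record a manifest, then detect a silently modified file.
workdir = Path(tempfile.mkdtemp())
weights = workdir / "model.bin"
weights.write_bytes(b"\x00\x01\x02")
manifest = build_manifest([weights])
ok_before = verify(manifest)
weights.write_bytes(b"\x00\x01\x03")
changed_after = verify(manifest)
```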

Integration with MLOps Ecosystems

The broader MLOps ecosystem integration capabilities of these platforms significantly impact their utility in modern machine learning development workflows. Comet provides extensive integration with popular MLOps tools including Kubeflow, MLflow, Apache Airflow, and major cloud provider ML services. These integrations enable seamless incorporation of experiment tracking into existing CI/CD pipelines and automated model training workflows.

The platform’s API-first architecture facilitates custom integrations and enables organizations to incorporate Comet into proprietary MLOps platforms and workflow management systems. This flexibility proves particularly valuable for organizations with complex existing infrastructure or specialized workflow requirements that cannot be accommodated by standard integrations.

Neptune.ai’s ecosystem integration approach emphasizes compatibility with research-oriented tools and academic computing environments. The platform provides strong integration with research computing infrastructure, including HPC job schedulers such as Slurm and PBS and academic cloud computing platforms, making it particularly attractive to research institutions and academic laboratories.
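
Independently of any native integration, a common pattern on shared clusters is to tag each run with the scheduler’s job identifiers so a logged run can be traced back to its batch job and log files. Slurm and PBS expose these through environment variables such as `SLURM_JOB_ID` and `PBS_JOBID`; the helper name and the returned tag layout are assumptions, and how the tags are attached to a run depends on the tracking client.

```python
import os

# Environment variables set by the schedulers for each batch job.
SCHEDULER_VARS = {
    "slurm": ("SLURM_JOB_ID", "SLURM_ARRAY_TASK_ID", "SLURM_JOB_NAME"),
    "pbs": ("PBS_JOBID", "PBS_JOBNAME"),
}


def scheduler_tags(env=None) -> dict:
    """Collect batch-scheduler identifiers to attach to a run as metadata."""
    env = os.environ if env is None else env
    for scheduler, names in SCHEDULER_VARS.items():
        found = {name: env[name] for name in names if name in env}
        if found:
            return {"scheduler": scheduler, **found}
    return {"scheduler": "none"}  # e.g. running on a laptop


tags = scheduler_tags()  # reads the real environment
```

Passing an explicit `env` mapping makes the helper easy to test without submitting a job.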

Future Development and Platform Evolution

Understanding the development roadmaps and strategic directions of these platforms provides insights into their long-term suitability and alignment with evolving machine learning practices. Comet’s development strategy emphasizes expanding MLOps capabilities, enhancing integration with emerging cloud technologies, and providing more sophisticated model monitoring and observability features.

The platform’s investment in automated machine learning capabilities and integration with AutoML platforms suggests a strategic focus on democratizing machine learning and reducing the technical barriers to effective model development. This direction aligns with broader industry trends toward more accessible machine learning tools and platforms.

Neptune.ai’s development roadmap emphasizes enhancing research productivity and collaboration features, with particular focus on supporting emerging research methodologies including federated learning, differential privacy, and explainable AI research. The platform’s commitment to supporting cutting-edge research practices makes it an attractive choice for organizations at the forefront of machine learning research.

The evolution of both platforms reflects broader trends in the machine learning industry toward more sophisticated experiment management, better integration with existing development workflows, and enhanced support for collaborative research and development processes. Understanding these trends helps organizations make informed decisions about platform selection that will remain relevant as their machine learning practices mature and evolve.

Disclaimer

This article provides a comparative analysis of Comet and Neptune.ai based on publicly available information and general industry knowledge. The specific features, pricing, and capabilities of these platforms may change over time, and readers should conduct their own evaluation and testing to determine the best fit for their particular use cases and requirements. The effectiveness of these platforms may vary depending on specific organizational needs, technical infrastructure, and team expertise levels.