The intersection of artificial intelligence and cybersecurity has created new challenges in protecting sensitive data and proprietary algorithms from unauthorized access and malicious exploitation. As AI systems process increasingly sensitive information and valuable intellectual property, traditional security measures, which focus on data in transit and data at rest, leave a gap: data must be decrypted while it is being processed. Confidential computing extends protection to data in use, creating hardware-based security enclaves that are designed to maintain confidentiality even in untrusted computing environments.

The evolution of confidential computing represents a fundamental shift in how organizations approach AI security, moving beyond perimeter-based defenses to implement protection mechanisms that operate at the hardware level, ensuring that sensitive computations remain secure regardless of the underlying infrastructure’s trustworthiness.

Understanding Confidential Computing Architecture

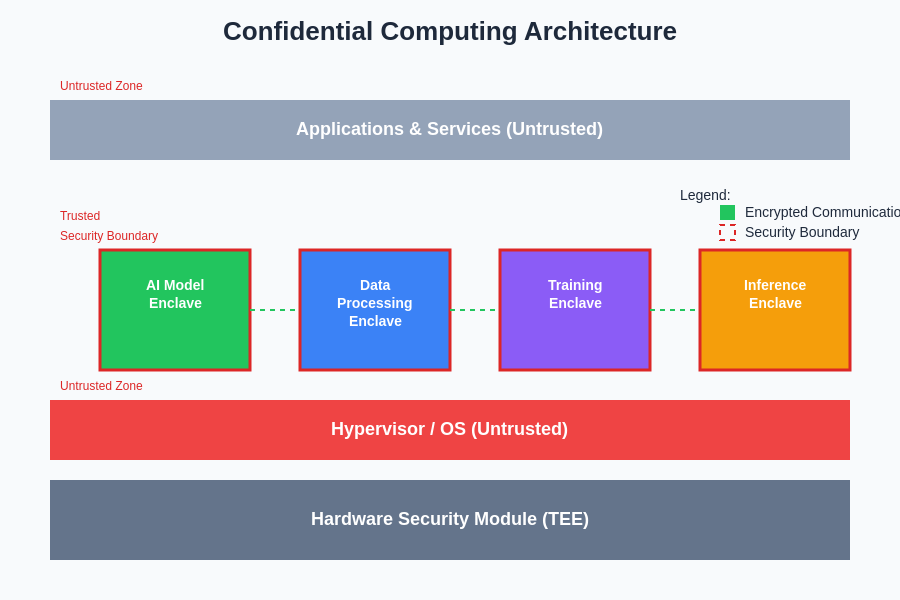

Confidential computing represents a paradigm shift in cybersecurity that addresses the fundamental challenge of protecting data during computation. Traditional security models focus primarily on securing data at rest through encryption and data in transit through secure communication protocols, but they leave a critical vulnerability during the processing phase when data must be decrypted and made available to applications. Confidential computing fills this gap by creating secure execution environments, known as Trusted Execution Environments (TEEs), that isolate sensitive computations from the underlying operating system, hypervisor, and even privileged system administrators.

The architecture of confidential computing relies on specialized hardware features that create cryptographically isolated compartments within processors. These secure enclaves use cryptographic techniques, hardware-based attestation mechanisms, and memory encryption technologies to protect code and data within the enclave from external observation or modification. The hardware itself becomes the root of trust, providing cryptographically verifiable assurances about the integrity and confidentiality of computations performed within these protected environments, subject to the correctness of the hardware and its firmware.

Intel Software Guard Extensions (SGX), AMD Secure Encrypted Virtualization (SEV), and ARM TrustZone are widely used hardware isolation technologies, each taking a different approach: SGX isolates individual application enclaves, SEV encrypts the memory of entire virtual machines, and TrustZone splits the processor into secure and non-secure worlds. These technologies enable the creation of secure compartments that can execute AI workloads while remaining isolated from potentially compromised system components, including malicious privileged software and compromised hypervisors, and they also raise the bar against some forms of physical attack.

AI-Specific Security Challenges

Artificial intelligence systems present unique security challenges that extend far beyond traditional data protection concerns. Machine learning models represent valuable intellectual property that can be worth millions of dollars in research and development investments, making them attractive targets for industrial espionage and competitive intelligence gathering. Model theft, where adversaries extract proprietary algorithms and training methodologies, represents a significant threat to organizations that have invested heavily in AI development.

The complexity of modern AI systems creates multiple attack surfaces that must be carefully protected, including training data, model parameters, inference results, and the computational processes that transform inputs into outputs.

Adversarial attacks pose another critical challenge, where maliciously crafted inputs are designed to manipulate AI system outputs in ways that benefit attackers while remaining undetectable to human observers. These attacks can compromise the integrity of AI decision-making processes in critical applications such as autonomous vehicles, medical diagnosis systems, and financial fraud detection platforms. Privacy violations through model inversion attacks allow adversaries to reconstruct sensitive training data by analyzing model outputs, potentially exposing personal information, trade secrets, or other confidential data used during the training process.

Data poisoning attacks target the training phase of machine learning systems by introducing malicious or corrupted data that degrades model performance or introduces specific vulnerabilities that can be exploited during deployment. The distributed nature of many AI training processes, which often involve multiple parties and cloud computing resources, creates additional opportunities for attackers to compromise the integrity of training datasets or inject malicious code into the training pipeline.

Hardware Security Foundations

The foundation of confidential computing rests upon specialized hardware security features that provide cryptographic isolation and attestation capabilities at the processor level. Modern processors incorporate dedicated security coprocessors, hardware random number generators, and encrypted memory controllers that work together to create tamper-resistant execution environments. These hardware components implement cryptographic protocols that are resistant to both software-based attacks and many forms of physical tampering.

Hardware-based attestation mechanisms enable remote parties to verify the integrity and authenticity of code running within secure enclaves. This process involves the generation of cryptographic proofs that demonstrate the enclave is running the expected software in an unmodified state, providing assurance that sensitive computations are being performed correctly and have not been compromised by malicious code injection or modification attempts. The attestation process creates a chain of trust that extends from the hardware manufacturer through the enclave software to the end users who rely on the computational results.
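The attestation flow described above can be sketched in a few lines. This is a simplified simulation rather than any vendor's protocol: `HARDWARE_KEY`, `measure`, `generate_quote`, and `verify_quote` are hypothetical names, and an HMAC stands in for the asymmetric, certificate-backed signature that real TEE hardware would produce.

```python
import hashlib
import hmac
import os

# Stand-in for a key fused into the processor. Real TEEs sign quotes with an
# asymmetric key whose certificate chains to the manufacturer; HMAC is used
# here only to keep the sketch dependency-free.
HARDWARE_KEY = os.urandom(32)


def measure(enclave_code: bytes) -> bytes:
    """Return the enclave 'measurement': a SHA-256 digest of the loaded code."""
    return hashlib.sha256(enclave_code).digest()


def generate_quote(enclave_code: bytes, nonce: bytes) -> tuple[bytes, bytes]:
    """Simulate the hardware signing (measurement, nonce) for a remote verifier."""
    measurement = measure(enclave_code)
    signature = hmac.new(HARDWARE_KEY, measurement + nonce, hashlib.sha256).digest()
    return measurement, signature


def verify_quote(measurement: bytes, signature: bytes, nonce: bytes,
                 expected_measurement: bytes) -> bool:
    """Accept only a fresh, correctly signed quote for the expected code."""
    expected_sig = hmac.new(HARDWARE_KEY, measurement + nonce, hashlib.sha256).digest()
    signature_ok = hmac.compare_digest(signature, expected_sig)
    measurement_ok = hmac.compare_digest(measurement, expected_measurement)
    return signature_ok and measurement_ok
```

The verifier supplies a fresh random nonce for each request so that an old quote cannot be replayed, and it compares the reported measurement against a value it computed independently from the software it expects to be running.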

Memory encryption technologies protect data confidentiality by automatically encrypting data as it is written from the processor to system memory and decrypting it when it is read back. Data inside the processor package, including cache contents, is typically held in plaintext and protected by hardware access controls rather than by encryption. Depending on the platform, protection also covers direct memory access by devices and protected memory pages that are evicted or swapped out, which remain encrypted. Advanced implementations include protection against memory replay attacks, where adversaries attempt to restore previous memory states to manipulate program execution or extract sensitive information from historical memory contents.
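To illustrate the replay protection idea, the following toy model binds each memory write to its address and a version counter that is kept in trusted state. It is a sketch under simplifying assumptions: `ReplayProtectedMemory` is a hypothetical class, encryption is omitted to focus on integrity, and real hardware uses more compact structures than one counter per address.

```python
import hashlib
import hmac
import os


class ReplayProtectedMemory:
    """Toy model: untrusted memory stores (data, tag); counters stay 'on chip'."""

    def __init__(self) -> None:
        self._key = os.urandom(32)            # never leaves the simulated processor
        self._versions: dict[int, int] = {}   # trusted per-address write counters
        self.untrusted: dict[int, tuple[bytes, bytes]] = {}  # attacker-visible

    def _tag(self, address: int, version: int, data: bytes) -> bytes:
        # The tag covers the address and version, so data cannot be moved
        # to another address or rolled back to an earlier version.
        message = address.to_bytes(8, "big") + version.to_bytes(8, "big") + data
        return hmac.new(self._key, message, hashlib.sha256).digest()

    def write(self, address: int, data: bytes) -> None:
        version = self._versions.get(address, 0) + 1
        self._versions[address] = version
        self.untrusted[address] = (data, self._tag(address, version, data))

    def read(self, address: int) -> bytes:
        data, tag = self.untrusted[address]
        expected = self._tag(address, self._versions[address], data)
        if not hmac.compare_digest(tag, expected):
            raise ValueError("integrity check failed: stale or modified memory")
        return data
```

If an attacker saves the contents of `untrusted` for an address and restores them after a later write, the stored tag no longer matches the current version counter and `read` raises an error instead of returning stale data.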

Trusted Execution Environments for AI

Trusted Execution Environments represent the practical implementation of confidential computing principles, providing isolated computational spaces where AI workloads can execute without exposure to external threats. These environments combine hardware-based security features with software frameworks that simplify the development and deployment of secure AI applications. TEEs ensure that sensitive AI computations remain confidential and tamper-proof, even when running on shared infrastructure or in public cloud environments where the underlying system may be controlled by untrusted parties.

The integration of AI workloads into TEEs requires careful consideration of performance trade-offs, memory constraints, and communication protocols that maintain security while enabling practical functionality. Modern TEE implementations support common AI frameworks and libraries while transparently encrypting and decrypting data as it moves between secure and non-secure memory regions. Depending on the TEE type, this can allow existing AI applications to benefit from hardware-based security with limited changes to existing codebases: VM-level protection typically requires fewer changes than enclave-level designs, which may require partitioning the application into trusted and untrusted parts.

The architectural design of confidential computing environments creates multiple layers of protection that work together to ensure comprehensive security coverage. Hardware security modules manage cryptographic operations, secure boot processes verify the integrity of system components during startup, and runtime protection mechanisms monitor for anomalous behavior that might indicate attempted attacks or system compromises.

Intel SGX and AI Workload Protection

Intel Software Guard Extensions represents one of the most mature implementations of confidential computing technology, providing developers with comprehensive tools and libraries for creating secure AI applications. SGX enables the creation of secure enclaves that can protect AI models, training data, and inference processes from unauthorized access, even in environments where the operating system or hypervisor may be compromised. The technology supports both development and production workloads, with extensive documentation and developer resources that facilitate the integration of existing AI frameworks.

SGX-based AI deployments benefit from hardware-enforced memory protection that prevents unauthorized access to model parameters, input data, and computational results. The enclave model ensures that sensitive AI operations remain isolated from other processes running on the same system, providing strong isolation guarantees that are particularly valuable in multi-tenant cloud environments where multiple organizations may share the same physical hardware.

The attestation capabilities of SGX enable remote verification of AI model integrity, allowing organizations to prove to clients or regulators that their AI systems are running unmodified code and producing trustworthy results. This capability is particularly important for AI applications in regulated industries such as healthcare, finance, and government services, where demonstrating the integrity of computational processes is essential for compliance and trust requirements.

Performance optimization for SGX-based AI workloads involves careful management of enclave memory usage, efficient data movement between secure and non-secure memory regions, and optimization of cryptographic operations that protect data during processing. AI frameworks have been adapted to run inside SGX enclaves, but the overhead depends heavily on how well the working set fits in enclave memory and how often execution crosses the enclave boundary, so measuring representative workloads is an important step before production deployment.

AMD SEV and Virtualized AI Security

Advanced Micro Devices Secure Encrypted Virtualization technology provides a different approach to confidential computing that focuses on protecting entire virtual machines rather than individual applications. This architecture is particularly well-suited for AI workloads that require multiple components, complex software stacks, or integration with existing virtualized infrastructure. SEV encrypts the contents of virtual machine memory using dedicated hardware encryption engines, ensuring that sensitive AI computations remain protected from unauthorized access by hypervisors, system administrators, or other virtual machines running on the same hardware.

The virtual machine-based protection model of SEV enables organizations to migrate existing AI workloads to confidential computing environments with minimal modification, as the entire operating system and application stack can be protected as a cohesive unit.

SEV-based AI deployments support complex machine learning pipelines that involve multiple stages of data processing, model training, and result analysis. The technology provides protection for distributed AI workloads that span multiple virtual machines, enabling secure collaboration and data sharing between different components of large-scale AI systems. This capability is particularly valuable for federated learning scenarios where multiple organizations contribute data and computational resources to train shared AI models while maintaining strict data privacy requirements.
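As an illustration of the federated learning scenario, the aggregation step that would run inside a protected virtual machine can be sketched as a weighted average of participant updates. This is a minimal sketch of federated averaging, assuming each participant submits a flat weight vector and its local sample count; `federated_average` is a hypothetical name and no SEV-specific API is involved.

```python
def federated_average(updates: list[tuple[list[float], int]]) -> list[float]:
    """Average weight vectors, weighting each by its participant's sample count.

    `updates` holds one (weights, sample_count) pair per participant.
    """
    if not updates:
        raise ValueError("at least one update is required")
    total_samples = sum(count for _, count in updates)
    if total_samples <= 0:
        raise ValueError("total sample count must be positive")

    dimension = len(updates[0][0])
    averaged = [0.0] * dimension
    for weights, count in updates:
        if len(weights) != dimension:
            raise ValueError("all updates must have the same dimension")
        for i, value in enumerate(weights):
            averaged[i] += value * count / total_samples
    return averaged
```

Running this aggregation inside a confidential virtual machine means that individual updates are visible only to the attested aggregation code, while participants receive just the combined model.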

The key management and attestation features of SEV enable secure provisioning of AI workloads in cloud environments, with cryptographic verification that virtual machines are running on genuine AMD hardware with properly configured security features. This remote attestation capability allows organizations to verify the security posture of their AI deployments without requiring physical access to the underlying hardware infrastructure.

ARM TrustZone for Edge AI Security

ARM TrustZone technology extends confidential computing capabilities to edge computing environments where AI workloads are deployed on mobile devices, Internet of Things sensors, and embedded systems with limited computational resources. The TrustZone architecture divides the processor into secure and non-secure worlds, enabling the creation of trusted applications that can process sensitive AI operations while maintaining isolation from potentially compromised normal world software.

Edge AI deployments benefit from TrustZone’s low-power security features that provide hardware-based protection without significantly impacting battery life or thermal characteristics of mobile devices. The technology supports AI applications that process biometric data, personal information, and other sensitive inputs that require strong privacy protections throughout the entire computational pipeline.

TrustZone-based AI security is particularly important for applications such as facial recognition, voice processing, and health monitoring systems that handle highly personal data on user devices. The hardware-based isolation ensures that sensitive AI models and personal data remain protected even if the device’s main operating system is compromised by malware or other security threats.

The scalability of TrustZone across ARM’s extensive processor ecosystem enables consistent security capabilities across diverse edge computing platforms, from high-performance mobile processors to low-power microcontrollers used in IoT applications. This consistency simplifies the development and deployment of secure AI applications across heterogeneous edge computing environments.

Secure Multi-Party Computation Integration

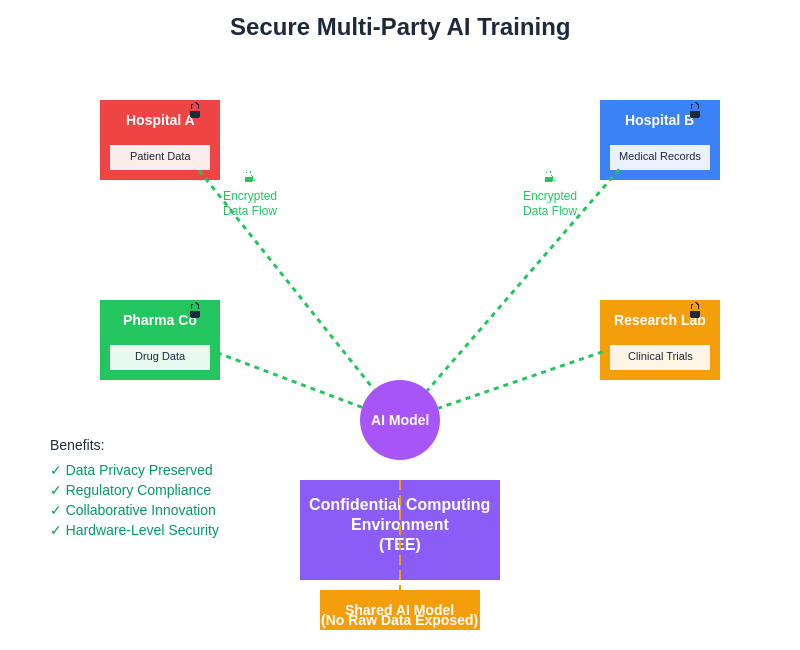

The combination of confidential computing with secure multi-party computation protocols creates powerful capabilities for collaborative AI development while maintaining strict data privacy requirements. These integrated approaches enable multiple organizations to jointly train AI models using their combined datasets without revealing sensitive information to other participants or even to the computing infrastructure that hosts the collaborative training process.

Confidential computing provides the secure execution environment necessary for implementing complex cryptographic protocols that enable privacy-preserving machine learning. The hardware-based isolation ensures that intermediate computational results, cryptographic keys, and participant data remain protected throughout the collaborative training process, even in scenarios where some participants or infrastructure providers may not be fully trusted.
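One of the simplest building blocks of secure multi-party computation is additive secret sharing, sketched below for computing a sum without revealing individual inputs. The function names and the choice of modulus are illustrative assumptions, and the sketch assumes participants follow the protocol honestly; it also simulates all parties in one process, whereas a real deployment would exchange shares over authenticated channels.

```python
import secrets

PRIME = 2**61 - 1  # field modulus; an illustrative choice


def share(secret: int, n_parties: int) -> list[int]:
    """Split `secret` into n random-looking shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    last = (secret - sum(shares)) % PRIME
    return shares + [last]


def reconstruct(shares: list[int]) -> int:
    """Recombine shares; any proper subset reveals nothing about the secret."""
    return sum(shares) % PRIME


def secure_sum(private_values: list[int]) -> int:
    """Compute the sum of all parties' values without exposing any single one."""
    n = len(private_values)
    # Each party splits its value; share j is sent to party j.
    all_shares = [share(value, n) for value in private_values]
    # Each party j adds up the shares it received, one from every party.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME
                    for j in range(n)]
    # Publishing only the partial sums reveals the total and nothing else.
    return reconstruct(partial_sums)
```

For example, `secure_sum([120, 75, 310])` returns `505`, while each simulated party only ever handles uniformly random shares of the other parties' inputs. Running such protocol code inside a TEE adds a second layer of protection for the shares and keys held in memory.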

The integration of these technologies enables new business models and research collaborations that were previously impossible due to data privacy concerns. Healthcare organizations can collaborate on medical AI research without sharing patient data, financial institutions can jointly develop fraud detection models without exposing transaction details, and technology companies can pool resources for AI development while protecting their proprietary datasets and algorithms.

Performance Optimization Strategies

Optimizing the performance of AI workloads running in confidential computing environments requires careful consideration of the unique constraints and capabilities of hardware-based security technologies. Memory management becomes particularly critical, as TEEs typically have limited secure memory available and frequent data movement between secure and non-secure memory regions can introduce significant performance overhead. Effective optimization strategies involve data structure reorganization, algorithmic modifications, and careful scheduling of cryptographic operations to minimize their impact on overall system performance.
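A rough way to reason about the memory constraint is to size batches so that each one fits within the secure memory budget and then estimate how many boundary crossings result. The sketch below is a back-of-the-envelope model; the function names are hypothetical, and the budget and per-transition cost are parameters you would need to measure on your own platform.

```python
def plan_batches(n_records: int, record_bytes: int,
                 enclave_budget_bytes: int) -> tuple[int, int]:
    """Return (records_per_batch, n_batches) so each batch fits in the budget."""
    if record_bytes <= 0:
        raise ValueError("record_bytes must be positive")
    if enclave_budget_bytes < record_bytes:
        raise ValueError("a single record does not fit in the enclave budget")
    records_per_batch = enclave_budget_bytes // record_bytes
    # Integer ceiling division: the last batch may be partially filled.
    n_batches = (n_records + records_per_batch - 1) // records_per_batch
    return records_per_batch, n_batches


def transition_overhead_seconds(n_batches: int,
                                cost_per_transition_s: float) -> float:
    """Estimate boundary-crossing cost: one entry and one exit per batch."""
    return 2 * n_batches * cost_per_transition_s
```

With 100,000 records of 4 KiB each and an assumed 64 MiB budget, `plan_batches` yields 16,384 records per batch across 7 batches. Larger batches reduce the number of transitions, but only up to the point where the working set still fits in secure memory.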

Cache optimization in confidential computing environments has to serve two goals at once: making good use of the processor cache for performance, and avoiding data-dependent access patterns that could leak information about computations through cache side channels. Some platforms provide cache partitioning features that can help separate workloads, and AI code that handles sensitive data may need specialized optimization approaches to balance performance against this kind of leakage.

Parallel processing optimization in confidential computing environments involves balancing the security benefits of enclave isolation with the performance advantages of parallel execution across multiple cores or processors. Advanced implementations support secure communication between multiple enclaves running on different cores, enabling the parallelization of AI computations while maintaining strong security guarantees.

Network communication optimization focuses on minimizing the overhead of secure communication protocols while maintaining end-to-end encryption and authentication for distributed AI workloads. Efficient implementations leverage hardware acceleration for cryptographic operations and optimize protocol overhead to enable practical deployment of confidential computing in distributed AI systems.

Compliance and Regulatory Considerations

The regulatory landscape surrounding AI deployment increasingly emphasizes data protection, algorithmic transparency, and security accountability, making confidential computing an essential component of compliance strategies for organizations operating in regulated industries. Privacy regulations such as the European Union’s General Data Protection Regulation (GDPR) and various national data protection laws require organizations to implement appropriate technical and organizational measures to protect personal data during processing, making hardware-based security technologies increasingly attractive for AI deployments that handle sensitive information.

Financial services regulations require strong controls over algorithmic decision-making processes, audit trails for AI model behavior, and protection of customer data throughout the entire machine learning lifecycle. Confidential computing technologies provide the technical foundation for meeting these regulatory requirements while enabling innovative AI applications that can improve customer services and operational efficiency.

Healthcare regulations such as the Health Insurance Portability and Accountability Act (HIPAA) in the United States require stringent protection of protected health information during all phases of processing, including AI model training and inference operations. Confidential computing enables healthcare organizations to leverage AI technologies while maintaining compliance with privacy regulations and professional ethical standards that govern the handling of patient data.

Government and defense applications of AI technology require security clearances, supply chain verification, and protection against advanced persistent threats that may have nation-state backing. Confidential computing provides the technical capabilities necessary to deploy AI systems in high-security environments while maintaining the flexibility and performance characteristics required for operational effectiveness.

Implementation Best Practices

Successful implementation of confidential computing for AI security requires careful planning, thorough testing, and ongoing monitoring to ensure that security objectives are met without compromising functionality or performance. The development process should begin with a comprehensive threat model that identifies specific risks to AI workloads and determines which components require hardware-based protection. This analysis guides the selection of appropriate confidential computing technologies and helps optimize the balance between security and performance requirements.

Security architecture design for confidential AI systems should implement defense-in-depth principles that combine hardware-based protection with complementary software security measures such as input validation, output sanitization, and anomaly detection. The integration of multiple security layers provides resilience against sophisticated attacks that may attempt to exploit vulnerabilities in individual security mechanisms.
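The software layers mentioned above can be as simple as strict checks around the inference call. The following sketch assumes a classifier that takes a fixed-length numeric feature vector and returns class probabilities; `validate_features` and `sanitize_output` are hypothetical helpers, and coarsening the reported confidence is one possible way to limit what an attacker can learn from outputs, not a complete defense.

```python
import math


def validate_features(features: list[float], expected_len: int,
                      lower: float, upper: float) -> list[float]:
    """Reject malformed or out-of-range inputs before they reach the model."""
    if len(features) != expected_len:
        raise ValueError(f"expected {expected_len} features, got {len(features)}")
    cleaned = []
    for value in features:
        value = float(value)
        if math.isnan(value) or not (lower <= value <= upper):
            raise ValueError("feature is NaN or outside the allowed range")
        cleaned.append(value)
    return cleaned


def sanitize_output(probabilities: list[float], decimals: int = 2) -> dict:
    """Return only the top label index and a coarsened confidence score."""
    top = max(range(len(probabilities)), key=probabilities.__getitem__)
    return {"label": top, "confidence": round(probabilities[top], decimals)}
```

Returning only the top label and a rounded score, rather than the full probability vector, reduces the detail available to model inversion or extraction attempts while keeping the result useful to legitimate callers.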

Testing and validation procedures for confidential computing deployments should include both functional testing to verify correct operation and security testing to validate protection mechanisms against various attack scenarios. Comprehensive testing should cover normal operation, error conditions, performance stress testing, and simulated attack scenarios that attempt to compromise the confidentiality or integrity of AI computations.

Monitoring and logging strategies must balance security visibility requirements with the privacy protection objectives of confidential computing. Effective monitoring systems provide sufficient information to detect anomalous behavior and potential security incidents while avoiding the collection of sensitive data that could compromise the privacy guarantees provided by confidential computing technologies.
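One way to strike this balance is to log operational metadata as-is and replace sensitive values with keyed digests before events leave the protected environment. The sketch below is an assumption-laden example: the field names in `SENSITIVE_FIELDS` and the `redact_event` helper are hypothetical, and the key must be kept secret for the digests to resist guessing.

```python
import hashlib
import hmac

# Hypothetical names of fields that must never appear in logs in the clear.
SENSITIVE_FIELDS = frozenset({"input", "output", "user_id"})


def redact_event(event: dict, key: bytes) -> dict:
    """Keep operational metadata; replace sensitive values with keyed digests."""
    redacted = {}
    for name, value in event.items():
        if name in SENSITIVE_FIELDS:
            digest = hmac.new(key, repr(value).encode("utf-8"),
                              hashlib.sha256).hexdigest()
            # A truncated digest still lets operators correlate events
            # for the same value without seeing the value itself.
            redacted[name] = "hmac:" + digest[:16]
        else:
            redacted[name] = value
    return redacted
```

Because the same value always maps to the same digest under a given key, operators can still spot repeated requests or unusual patterns, while the raw inputs and identities stay inside the protected environment.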

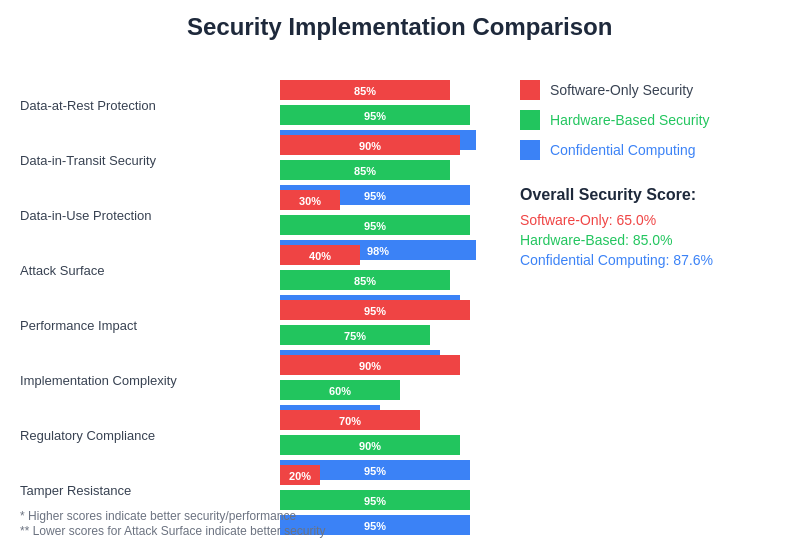

The comparative analysis of different security implementation approaches demonstrates the unique advantages of hardware-based confidential computing solutions compared to traditional software-only security measures. Organizations can evaluate these trade-offs to select the most appropriate security architecture for their specific AI deployment requirements and risk tolerance levels.

Future Developments and Industry Trends

The evolution of confidential computing technology continues to accelerate, driven by increasing demand for privacy-preserving AI applications and the growing sophistication of cyber threats targeting machine learning systems. Next-generation processor architectures are incorporating enhanced security features such as larger secure memory capacities, improved performance characteristics, and more flexible enclave management capabilities that will enable more complex AI workloads to benefit from hardware-based protection.

Industry collaboration initiatives are developing standardized approaches to confidential computing that will improve interoperability between different hardware platforms and simplify the development of portable secure AI applications. These standardization efforts include the definition of common attestation protocols, security certification processes, and development frameworks that abstract the complexities of different confidential computing implementations.

The integration of confidential computing with emerging technologies such as quantum-resistant cryptography, homomorphic encryption, and advanced secure multi-party computation protocols promises to create even more powerful privacy-preserving AI capabilities. These combined approaches will enable new applications that were previously impossible due to computational or security limitations, opening new possibilities for collaborative AI development and deployment.

Research and development efforts are focusing on addressing current limitations of confidential computing technology, including memory capacity constraints, performance overhead, and the complexity of application development and deployment. Ongoing improvements in hardware design, software frameworks, and development tools are making confidential computing more accessible to a broader range of organizations and use cases.

Economic Impact and Business Value

The business value proposition of confidential computing for AI applications extends beyond risk mitigation to enable new revenue opportunities and competitive advantages that were previously impossible due to security and privacy constraints. Organizations that implement robust hardware-based security for their AI systems can access previously unavailable datasets, participate in collaborative research initiatives, and offer services in regulated markets that require stringent data protection measures.

Cost-benefit analysis of confidential computing implementations should consider both direct costs such as hardware requirements and development overhead, as well as indirect benefits including reduced liability exposure, improved regulatory compliance, and enhanced customer trust. The total economic impact often justifies the initial investment through risk reduction, new business opportunities, and competitive differentiation in markets where security and privacy are critical success factors.

Market differentiation through superior security capabilities can enable organizations to command premium pricing for AI services and attract customers who prioritize data protection and privacy. The ability to present cryptographically verifiable evidence of how a system is configured, through hardware-based attestation, provides a significant competitive advantage in markets where trust and security are primary decision factors.

Return on investment calculations for confidential computing projects should include quantitative measures such as reduced security incident costs, compliance cost savings, and new revenue from previously inaccessible markets, as well as qualitative benefits such as enhanced brand reputation, improved customer relationships, and reduced regulatory risk exposure.
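The quantitative part of such a calculation can be sketched as a simple ratio of net benefit to total cost over a planning horizon. The `simple_roi` function and its category names are illustrative assumptions, it ignores discounting, and any figures passed to it would be an organization's own estimates.

```python
def simple_roi(annual_benefits: dict[str, float],
               annual_costs: dict[str, float],
               upfront_cost: float, years: int) -> float:
    """Return (total benefit - total cost) / total cost over `years`."""
    total_benefit = sum(annual_benefits.values()) * years
    total_cost = upfront_cost + sum(annual_costs.values()) * years
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return (total_benefit - total_cost) / total_cost
```

A result of 1.0 means the estimated benefits are twice the estimated costs over the period. Qualitative benefits such as brand reputation do not fit into this ratio and need to be weighed separately.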

The strategic importance of confidential computing technology is likely to increase as AI becomes more pervasive and the value of protecting AI-related intellectual property continues to grow. Organizations that establish early expertise in confidential computing will be better positioned to take advantage of future opportunities and navigate the evolving regulatory landscape surrounding AI deployment and data protection requirements.

Disclaimer

This article is for informational purposes only and does not constitute professional security advice. The views expressed are based on current understanding of confidential computing technologies and their applications in AI security. Readers should conduct their own research and consult with qualified security professionals when implementing confidential computing solutions. The effectiveness of security measures may vary depending on specific implementation details, threat models, and operational environments. Organizations should perform thorough risk assessments and security evaluations before deploying confidential computing technologies in production environments.