Continual learning aims to give artificial intelligence something closer to the way humans learn. Unlike traditional machine learning models, which learn from a fixed dataset and remain static after training, continual learning systems keep acquiring new knowledge and refining their understanding without losing what they have already learned. If the approach works at scale, it changes how AI systems are built and maintained: instead of being periodically retrained from scratch, they grow more capable over time, much like human intelligence develops throughout a lifetime.

The implications of this technological advancement extend far beyond academic research, potentially revolutionizing industries, enhancing user experiences, and creating AI systems that can truly adapt to the ever-changing demands of real-world environments.

Understanding the Foundations of Continual Learning

Continual learning, also known as lifelong learning or incremental learning, represents a fundamental departure from conventional machine learning paradigms. Traditional AI models follow a rigid training-deployment cycle where learning occurs exclusively during the training phase using a predetermined dataset, after which the model’s parameters remain fixed throughout its operational lifetime. This static approach creates significant limitations when AI systems encounter new data, changing environments, or evolving user requirements, often necessitating complete retraining or model replacement to maintain effectiveness.

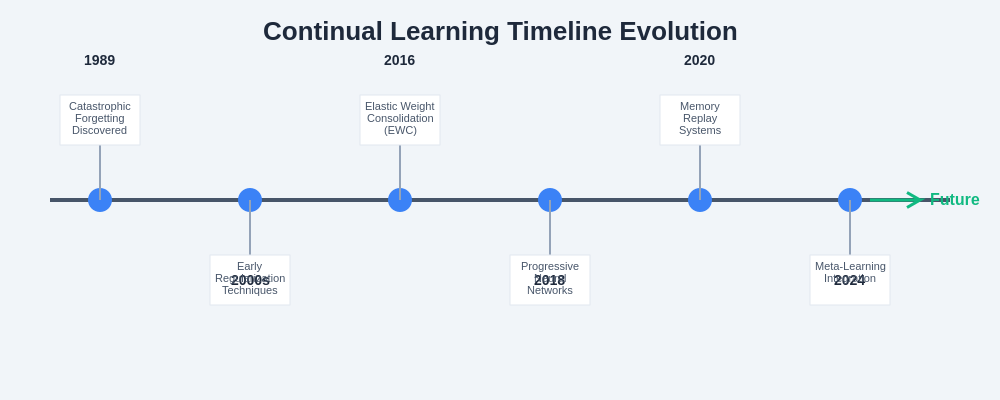

The core principle of continual learning is to let AI systems keep acquiring new knowledge while preserving what they have already learned, a goal that has proven surprisingly difficult to achieve in practice. The difficulty stems from catastrophic forgetting: neural networks tend to overwrite previously learned patterns when exposed to new data, effectively erasing knowledge that took considerable computational resources to acquire. Continual learning research focuses on mechanisms and architectures that overcome this limitation.

The theoretical foundations of continual learning draw inspiration from neuroscience and cognitive psychology, particularly the understanding of how human brains manage to learn new information throughout life while retaining childhood memories and fundamental skills. This biological inspiration has led to the development of various approaches including elastic weight consolidation, progressive neural networks, and memory-based systems that attempt to replicate the brain’s remarkable ability to balance stability with plasticity in learning processes.

The Challenge of Catastrophic Forgetting

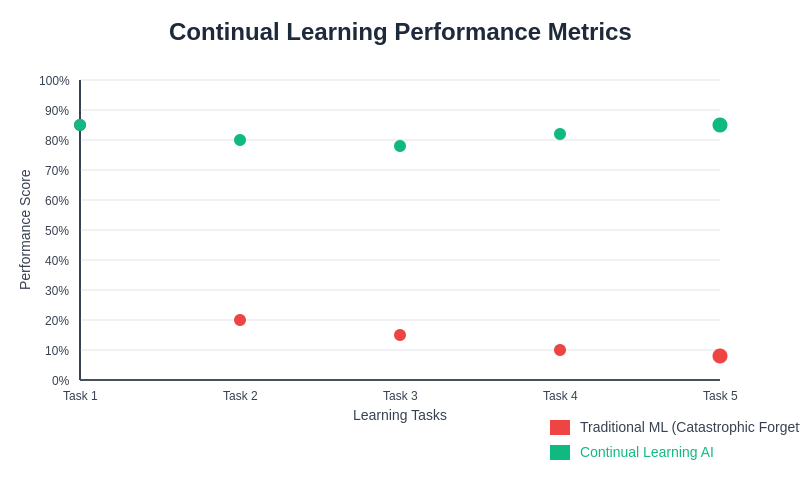

Catastrophic forgetting represents one of the most significant obstacles in developing effective continual learning systems. This phenomenon occurs when artificial neural networks, upon learning new tasks or incorporating new data, dramatically degrade their performance on previously learned tasks. The underlying cause relates to the distributed nature of knowledge representation in neural networks, where information is encoded across multiple parameters that can be disrupted when the network adapts to new learning objectives.

Traditional gradient-based learning algorithms optimize network parameters to minimize error on the current training data, without considering the impact on previously acquired knowledge. This optimization process can lead to significant parameter updates that, while beneficial for new tasks, inadvertently corrupt the representations that encoded previous learning. The severity of catastrophic forgetting varies depending on the similarity between old and new tasks, the learning rate employed, and the architecture of the neural network, but it remains a persistent challenge that must be addressed for successful continual learning implementation.
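The effect is easy to reproduce at toy scale. The following is a minimal sketch, not a benchmark: a two-parameter linear model is trained with plain SGD on one task and then on a conflicting one. The two tasks, the learning rate, and the helper names (`mse`, `sgd`) are illustrative assumptions rather than anything prescribed by a particular method.

```python
def mse(w, b, data):
    """Mean squared error of the line y = w * x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def sgd(w, b, data, lr=0.05, epochs=200):
    """Plain per-example SGD on squared error; it only sees the data it is given."""
    for _ in range(epochs):
        for x, y in data:
            err = w * x + b - y
            w -= lr * 2 * err * x
            b -= lr * 2 * err
    return w, b


xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
task_a = [(x, 2.0 * x) for x in xs]   # task A: y = 2x
task_b = [(x, -2.0 * x) for x in xs]  # task B: y = -2x (conflicts with A)

# Phase 1: learn task A.
w, b = sgd(0.0, 0.0, task_a)
loss_a_before = mse(w, b, task_a)

# Phase 2: keep training the same weights on task B only.
w, b = sgd(w, b, task_b)
loss_a_after = mse(w, b, task_a)

print(f"loss on A after learning A: {loss_a_before:.6f}")
print(f"loss on A after learning B: {loss_a_after:.6f}")
```

In this setup the loss on task A is close to zero after the first phase and rises to roughly 8 after the second, because nothing in the update rule protects the weights that task A relied on. Real networks forget less abruptly when tasks are related, but the mechanism is the same.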

The research community has developed numerous strategies to mitigate catastrophic forgetting, each with distinct advantages and limitations that must be carefully considered when designing continual learning systems for specific applications and domains.

Architectural Innovations in Continual Learning

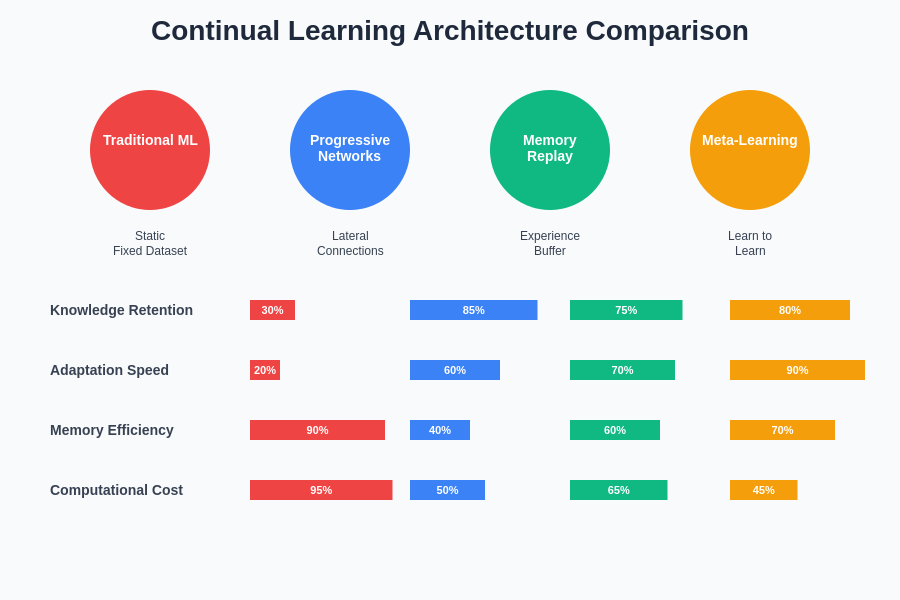

The development of continual learning capabilities has driven significant innovations in neural network architectures, leading to designs that can accommodate new knowledge while preserving existing capabilities. Progressive neural networks are one such innovation: each new task is learned in an additional network column, and lateral connections let that column reuse features from previously trained columns. This design prevents catastrophic forgetting by construction, because old knowledge stays in dedicated columns whose weights are frozen during new task learning; the cost is that the parameter count grows with every task.
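To make the column-and-lateral-connection idea concrete, here is a small sketch of the forward pass for two columns with one hidden layer each. It is a simplified illustration under stated assumptions: the `Column` class, the `trainable` flag, and the single-layer layout are hypothetical, and real progressive networks use deeper columns and learned adapters on the lateral paths.

```python
def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0.0, v) for v in vector]


class Column:
    """One task-specific column with a single hidden layer (illustrative)."""

    def __init__(self, w_in, w_lateral=None):
        self.w_in = w_in              # weights from the input to this column
        self.w_lateral = w_lateral    # weights from the previous column's hidden layer
        self.trainable = True         # flipped to False once the task is learned

    def hidden(self, x, prev_hidden=None):
        pre = matvec(self.w_in, x)
        if self.w_lateral is not None and prev_hidden is not None:
            lateral = matvec(self.w_lateral, prev_hidden)
            pre = [p + l for p, l in zip(pre, lateral)]
        return relu(pre)


def forward_two_columns(col1, col2, x):
    """Column 2 sees the input and the frozen column 1's hidden features."""
    h1 = col1.hidden(x)
    h2 = col2.hidden(x, prev_hidden=h1)
    return h1, h2


# Column 1 was trained on the first task and is now frozen.
col1 = Column(w_in=[[1.0, 0.0], [0.0, 1.0]])
col1.trainable = False

# Column 2 is new; only its own weights (w_in, w_lateral) would be updated.
col2 = Column(w_in=[[1.0, 1.0]], w_lateral=[[2.0, 3.0]])

h1, h2 = forward_two_columns(col1, col2, [1.0, -2.0])
print(h1, h2)
```

Because an optimizer would only ever touch columns whose `trainable` flag is still set, the output of column 1 for its own task cannot change, which is exactly the guarantee the architecture trades extra parameters for.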

Dynamic network expansion techniques have emerged as another promising architectural approach, automatically growing network capacity when new tasks or domains are encountered. These systems begin with a compact architecture and systematically add new parameters, layers, or modules as needed to accommodate new learning requirements. The challenge lies in determining when expansion is necessary and how to efficiently integrate new components without disrupting existing functionality or creating computational inefficiencies that could limit practical deployment.

Memory-augmented architectures have gained significant attention for their ability to explicitly store and retrieve important information from previous learning experiences. These systems incorporate external memory components that can store key examples, learned representations, or task-specific information that can be accessed during future learning episodes. The integration of attention mechanisms and sophisticated memory management strategies has enhanced the effectiveness of these approaches, enabling more selective and efficient use of stored information.

The evolution of continual learning architectures demonstrates the progression from simple parameter protection methods to sophisticated systems that can dynamically adapt their structure and memory utilization based on learning requirements. Each architectural approach offers unique advantages for different types of continual learning scenarios, from task-incremental learning to domain adaptation challenges.

Memory Replay and Experience Consolidation

Memory replay mechanisms have emerged as one of the most effective strategies for addressing catastrophic forgetting in continual learning systems. Inspired by the role of memory consolidation in biological learning, these approaches maintain a buffer of important examples or experiences from previous learning episodes that can be replayed during new task learning. This replay process helps maintain the network’s ability to perform well on old tasks while incorporating new knowledge, effectively creating a form of artificial memory consolidation.

The implementation of memory replay systems requires careful consideration of several critical factors, including the selection of examples to store, the size of the memory buffer, and the strategy for integrating replay with new learning. Sophisticated selection algorithms have been developed to identify the most informative or representative examples that should be preserved in limited memory storage, often employing uncertainty-based sampling, gradient-based importance measures, or diversity criteria to ensure optimal memory utilization.
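As a concrete baseline, the sketch below implements a fixed-size replay buffer filled by reservoir sampling, which keeps a uniform random sample of everything seen so far. This is a deliberately simple stand-in for the uncertainty-based, gradient-based, or diversity-based selection rules mentioned above; the names `ReservoirBuffer` and `mixed_batch` are illustrative assumptions.

```python
import random


class ReservoirBuffer:
    """Fixed-capacity replay memory filled by reservoir sampling (Algorithm R)."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0                      # total number of examples offered so far
        self.rng = random.Random(seed)

    def add(self, example):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(example)
        else:
            # Each example seen so far stays in memory with equal probability.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = example

    def sample(self, k):
        """Draw up to k stored examples without replacement."""
        return self.rng.sample(self.items, min(k, len(self.items)))


def mixed_batch(new_examples, buffer, replay_k):
    """Combine fresh examples with replayed ones for a single training step."""
    return list(new_examples) + buffer.sample(replay_k)


buffer = ReservoirBuffer(capacity=5)
for example in range(100):                 # stand-in for a stream of old-task data
    buffer.add(example)

batch = mixed_batch(["new-0", "new-1"], buffer, replay_k=3)
print(batch)
```

The ratio of new to replayed examples per batch (`replay_k` here) is one of the main tuning knobs: too little replay and old tasks drift, too much and new learning slows down.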

Advanced replay techniques have evolved beyond simple example storage to include generative replay mechanisms that can synthesize pseudo-examples from previous tasks without requiring explicit storage of original training data. These approaches use generative models to create artificial examples that capture the essential characteristics of previous learning experiences, providing a more memory-efficient solution for continual learning while addressing potential privacy concerns associated with storing actual training examples.

The effectiveness of memory replay approaches has been demonstrated across various continual learning scenarios, from image classification tasks to reinforcement learning environments. However, the computational overhead of replay mechanisms and the challenge of scaling to long sequences of learning tasks remain active areas of research that require continued innovation and optimization.

Regularization Techniques for Knowledge Preservation

Regularization-based approaches to continual learning constrain the learning process so that parameters and representations important to previous tasks are preserved. Elastic Weight Consolidation (EWC) is a seminal technique in this category: it uses a diagonal approximation of the Fisher information matrix to estimate which parameters matter most for previously learned tasks, then adds a quadratic penalty that discourages large changes to those parameters during new task learning.
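The penalty itself is short enough to write out. The sketch below assumes a diagonal Fisher estimate computed as the mean of squared per-example gradients of the log-likelihood (the empirical Fisher), and flat parameter lists; the function names and the strength parameter `lam` are illustrative, not a library API.

```python
def diagonal_fisher(per_example_grads):
    """Estimate the Fisher diagonal as the mean squared gradient per parameter.

    per_example_grads: list of gradient vectors of the log-likelihood,
    one per example from the OLD task, evaluated at the old-task optimum.
    """
    n = len(per_example_grads)
    dim = len(per_example_grads[0])
    return [sum(g[i] ** 2 for g in per_example_grads) / n for i in range(dim)]


def ewc_penalty(params, anchor, fisher_diag, lam):
    """Quadratic penalty: (lam / 2) * sum_i F_i * (theta_i - anchor_i)^2."""
    return 0.5 * lam * sum(
        f * (p - a) ** 2 for p, a, f in zip(params, anchor, fisher_diag)
    )


def ewc_penalty_grad(params, anchor, fisher_diag, lam):
    """Gradient of the penalty, to be added to the new-task loss gradient."""
    return [lam * f * (p - a) for p, a, f in zip(params, anchor, fisher_diag)]


# Toy numbers: parameter 0 mattered for the old task, parameter 1 did not.
fisher = diagonal_fisher([[1.0, 0.0], [3.0, 0.0]])
anchor = [0.0, 0.0]            # parameters after training on the old task
params = [1.0, 1.0]            # current parameters while learning the new task

print(fisher)
print(ewc_penalty(params, anchor, fisher, lam=2.0))
print(ewc_penalty_grad(params, anchor, fisher, lam=2.0))
```

In the toy numbers, moving parameter 1 is free while moving parameter 0 is penalized, which is the intended behaviour: plasticity where the old task does not care, stability where it does.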

The theoretical foundation of regularization techniques draws from Bayesian inference principles, treating the learning of new tasks as a posterior estimation problem where the prior is informed by previous learning experiences. This perspective has led to sophisticated approaches that can estimate parameter importance more accurately and apply appropriate constraints that balance knowledge retention with learning flexibility.
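Written out, and assuming the data for a new task B is conditionally independent of the data for an old task A given the parameters, the Bayesian view reads as follows. The second line is the Laplace-style approximation that EWC-like methods use for the old-task posterior; the symbols ($\theta^{*}_{A}$ for the old-task optimum, $F_i$ for the diagonal Fisher entries) are notation introduced here for illustration.

```latex
\begin{align}
\log p(\theta \mid \mathcal{D}_A, \mathcal{D}_B)
  &= \log p(\mathcal{D}_B \mid \theta)
   + \log p(\theta \mid \mathcal{D}_A)
   - \log p(\mathcal{D}_B \mid \mathcal{D}_A) \\
\log p(\theta \mid \mathcal{D}_A)
  &\approx -\tfrac{1}{2} \sum_i F_i \,\bigl(\theta_i - \theta^{*}_{A,i}\bigr)^2 + \text{const}
\end{align}
```

Maximizing the first line with the approximation substituted in is equivalent to minimizing the new-task loss plus a quadratic penalty around the old solution. In practice a scale factor on the penalty is treated as a tunable hyperparameter that sets the balance between retention and flexibility.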

Advanced regularization methods have extended beyond simple parameter-level constraints to incorporate representational and functional regularization strategies. These approaches focus on preserving important features, activations, or input-output relationships that are critical for maintaining performance on previous tasks. The development of adaptive regularization strengths and dynamic importance estimation has further enhanced the effectiveness of these techniques across diverse learning scenarios.

The ongoing research in this area continues to reveal new insights into the optimal balance between stability and plasticity in artificial learning systems.

Meta-Learning and Few-Shot Adaptation

The integration of meta-learning principles with continual learning has opened new possibilities for creating AI systems that can rapidly adapt to new tasks with minimal examples while retaining previous knowledge. Meta-learning, often described as “learning to learn,” provides mechanisms for acquiring learning strategies and representations that facilitate quick adaptation to new scenarios, making it a natural complement to continual learning objectives.

Model-Agnostic Meta-Learning (MAML) and its variants have been successfully adapted for continual learning scenarios, enabling models to learn initialization parameters that facilitate rapid adaptation to new tasks while minimizing interference with previously acquired knowledge. These approaches have demonstrated remarkable success in few-shot learning scenarios within continual learning frameworks, allowing models to acquire new capabilities with just a few examples while maintaining performance on previously learned tasks.
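The core MAML loop can be shown on a problem small enough to differentiate by hand. The sketch below is an assumption-laden toy: each task is a one-dimensional quadratic loss `(theta - target) ** 2`, the inner loop is a single gradient step, and the meta-gradient is computed analytically. It illustrates learning an initialization, not the additional machinery needed to limit interference between tasks in a continual setting.

```python
def inner_adapt(theta, target, alpha):
    """One inner-loop gradient step on the task loss (theta - target)^2."""
    grad = 2.0 * (theta - target)
    return theta - alpha * grad


def maml_meta_gradient(theta, target, alpha):
    """Gradient of the post-adaptation loss with respect to the initialization.

    Chain rule: dL(theta') / dtheta = dL/dtheta' * dtheta'/dtheta,
    where theta' = theta - alpha * 2 * (theta - target).
    """
    adapted = inner_adapt(theta, target, alpha)
    dloss_dadapted = 2.0 * (adapted - target)
    dadapted_dtheta = 1.0 - 2.0 * alpha      # second-order term of full MAML
    return dloss_dadapted * dadapted_dtheta


def meta_train(targets, theta=0.0, alpha=0.1, beta=0.1, steps=500):
    """Outer loop: average the meta-gradient over tasks and update the init."""
    for _ in range(steps):
        grad = sum(maml_meta_gradient(theta, t, alpha) for t in targets) / len(targets)
        theta -= beta * grad
    return theta


targets = [1.0, 3.0, 5.0]                    # three toy tasks
theta_init = meta_train(targets)
print(theta_init)
print([inner_adapt(theta_init, t, alpha=0.1) for t in targets])
```

For these symmetric quadratic tasks the learned initialization settles at the mean of the task targets, the point from which one adaptation step helps every task equally. With neural networks the meta-gradient is obtained by automatic differentiation through the inner step rather than by hand.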

The combination of meta-learning with continual learning has particular relevance for practical applications where AI systems must quickly adapt to new user preferences, changing environmental conditions, or emerging task requirements. This capability is especially valuable in personalization systems, robotics applications, and adaptive user interfaces where the ability to learn from limited feedback while preserving existing functionality is crucial for system effectiveness.

Advanced meta-learning techniques have incorporated sophisticated optimization algorithms, hierarchical learning strategies, and adaptive learning rate mechanisms that enhance the continual learning process. The development of meta-learning algorithms specifically designed for continual learning scenarios continues to be an active research area with significant potential for practical applications.

Quantitative evaluations of continual learning systems generally report better knowledge retention and faster adaptation than static models that are simply fine-tuned on new data, although the size of the gain depends heavily on the benchmark and the method.

Real-World Applications and Use Cases

Continual learning technologies have found numerous applications across industries and domains where AI systems must adapt to changing conditions, user preferences, or environmental factors. Recommendation systems represent one of the most successful commercial applications of continual learning, where models must continuously incorporate new user interactions, changing preferences, and emerging content to maintain relevance and effectiveness. The ability to adapt to new users, items, and interaction patterns without losing knowledge about existing user preferences has proven invaluable for maintaining engagement and satisfaction in dynamic online environments.

Autonomous systems and robotics applications have greatly benefited from continual learning capabilities, particularly in scenarios where robots must operate in changing environments or learn new tasks throughout their operational lifetime. These systems can adapt to new obstacles, learn new manipulation skills, or adjust to changes in their operating environment while retaining previously acquired capabilities. The integration of continual learning in robotics has enabled more versatile and adaptable systems that can function effectively in real-world scenarios where complete prior knowledge of all possible situations is impossible.

Natural language processing applications have embraced continual learning to address the challenge of evolving language use, emerging terminology, and changing communication patterns. Chatbots, translation systems, and language models that incorporate continual learning can adapt to new domains, languages, or communication styles while maintaining their foundational language understanding capabilities. This adaptability is particularly important for systems that serve diverse user populations or operate across different cultural and linguistic contexts.

Medical diagnosis and healthcare applications represent another promising domain for continual learning technologies, where AI systems must continuously incorporate new medical knowledge, treatment protocols, and patient data while maintaining expertise in previously learned diagnostic capabilities. The ability to adapt to new diseases, treatment options, or medical insights without forgetting established medical knowledge is crucial for maintaining the reliability and effectiveness of AI-assisted healthcare systems.

Challenges in Scalability and Computational Efficiency

The practical deployment of continual learning systems faces significant challenges related to scalability and computational efficiency, particularly as the number of learning tasks or the complexity of knowledge increases over time. Traditional continual learning approaches often exhibit linear or even super-linear growth in computational requirements as new tasks are added, making long-term deployment problematic for resource-constrained environments or applications with strict latency requirements.

Memory management represents a critical scalability challenge, as many continual learning techniques rely on storing examples, gradients, or model states from previous learning episodes. The memory requirements can grow substantially over time, potentially exceeding available storage capacity or creating prohibitive costs for large-scale deployments. Research into memory-efficient techniques, including compressed representations, selective forgetting mechanisms, and hierarchical memory structures, continues to address these practical limitations.

The computational overhead associated with continual learning mechanisms, such as replay, regularization calculations, or meta-learning updates, can significantly impact system performance and response times. Optimizing these processes for real-time applications requires careful consideration of algorithmic efficiency, hardware utilization, and the trade-offs between learning effectiveness and computational cost. The development of specialized hardware accelerators and optimized software implementations continues to improve the practical viability of continual learning systems.

Distributed and federated learning scenarios present additional scalability challenges for continual learning systems, where knowledge must be continuously acquired and shared across multiple devices or locations while maintaining privacy and communication efficiency. The coordination of continual learning processes across distributed systems requires sophisticated protocols and mechanisms that can handle network limitations, device heterogeneity, and privacy constraints while maintaining learning effectiveness.

Evaluation Metrics and Benchmarking

The evaluation of continual learning systems requires sophisticated metrics and benchmarking approaches that can capture the complex trade-offs between learning new knowledge and retaining previous information. Traditional machine learning evaluation metrics, which focus on performance for individual tasks, are insufficient for assessing the effectiveness of continual learning systems that must balance multiple competing objectives across sequences of learning experiences.

Backward transfer measurement quantifies how learning new tasks affects performance on previously learned tasks, providing insight into the system’s ability to avoid catastrophic forgetting. Forward transfer evaluation assesses whether knowledge from previous tasks facilitates learning of new tasks, indicating the system’s ability to leverage accumulated experience for improved learning efficiency. The combination of these metrics provides a comprehensive view of continual learning system performance across the full spectrum of learning scenarios.
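One commonly used way to compute these quantities starts from an accuracy matrix `R`, where `R[i][j]` is the test accuracy on task `j` after training on tasks `0..i` in sequence. The sketch below follows that formulation; the function names, the example numbers, and the `baseline` vector (accuracy of an untrained model on each task) are illustrative assumptions.

```python
def average_accuracy(R):
    """Mean accuracy over all tasks after training on the final task."""
    last = R[-1]
    return sum(last) / len(last)


def backward_transfer(R):
    """Mean change on each earlier task between just-learned and final state.

    Negative values indicate forgetting; positive values mean later tasks helped.
    """
    T = len(R)
    return sum(R[T - 1][j] - R[j][j] for j in range(T - 1)) / (T - 1)


def forward_transfer(R, baseline):
    """Mean gain on each task before training on it, relative to a baseline."""
    T = len(R)
    return sum(R[j - 1][j] - baseline[j] for j in range(1, T)) / (T - 1)


# Rows: after training task 0, 1, 2. Columns: accuracy on task 0, 1, 2.
R = [
    [0.9, 0.5, 0.4],
    [0.8, 0.9, 0.5],
    [0.7, 0.8, 0.9],
]
baseline = [0.3, 0.3, 0.3]   # hypothetical accuracy of a randomly initialized model

print(average_accuracy(R))
print(backward_transfer(R))
print(forward_transfer(R, baseline))
```

Reading the example: final average accuracy is 0.8, backward transfer is negative (some forgetting on tasks 0 and 1), and forward transfer is positive (earlier tasks gave a head start on later ones). Reporting all three avoids the situation where a high average hides severe forgetting on a single task.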

Average accuracy across all tasks represents a holistic measure of continual learning effectiveness, but this metric can mask important details about the distribution of performance across different tasks or learning episodes. More sophisticated evaluation approaches consider the stability-plasticity trade-off, measuring how well systems balance the retention of old knowledge with the acquisition of new capabilities. The development of standardized benchmarks and evaluation protocols continues to be crucial for advancing continual learning research and comparing different approaches.

Task-specific evaluation metrics have been developed to address the unique challenges of different continual learning scenarios, from class-incremental learning in image classification to domain adaptation in natural language processing. These specialized metrics provide more detailed insights into system performance and help identify the most appropriate continual learning techniques for specific applications and domains.

The field has developed quickly, from early work on catastrophic forgetting to modern systems designed to handle complex, long-term learning scenarios.

Future Directions and Emerging Trends

The future of continual learning research promises exciting developments across multiple fronts, from fundamental algorithmic innovations to novel application domains that leverage the unique capabilities of adaptive AI systems. Neuromorphic computing represents one of the most promising directions, with specialized hardware designs that can more efficiently implement continual learning mechanisms by mimicking the structural and functional properties of biological neural networks.

The integration of continual learning with large language models and foundation models presents both opportunities and challenges for creating more adaptive and capable AI systems. These massive models possess extensive knowledge bases that could benefit from continual learning mechanisms to stay current with evolving information, adapt to new domains, or personalize to specific user requirements without requiring complete retraining of billions of parameters.

Quantum computing applications in continual learning are a more speculative frontier, where quantum mechanical properties could potentially address some of the fundamental limitations of classical approaches. Quantum superposition and entanglement might enable new approaches to memory consolidation, parameter optimization, and knowledge representation, but these ideas remain largely theoretical and a practical advantage has yet to be demonstrated.

The development of continual learning systems for multi-modal AI applications, combining vision, language, audio, and other sensory modalities, presents complex challenges that require sophisticated coordination mechanisms and shared representation learning. These systems must balance learning across different modalities while maintaining coherent understanding and avoiding interference between different types of sensory information.

Ethical considerations and fairness in continual learning systems have gained increasing attention as these technologies are deployed in real-world applications that affect human lives and decisions. Ensuring that continual learning systems maintain fairness across different user groups, avoid bias amplification over time, and provide transparency in their adaptation processes remains an active area of research and development.

Privacy and Security Considerations

The deployment of continual learning systems raises important privacy and security considerations that must be carefully addressed to ensure responsible and safe implementation of these technologies. The continuous nature of learning in these systems means that they may encounter and potentially store sensitive information from users or environments over extended periods, creating unique privacy challenges that differ from traditional machine learning systems.

Data minimization techniques have become crucial for continual learning systems, focusing on methods that can learn effectively while storing minimal sensitive information. Differential privacy mechanisms have been adapted for continual learning scenarios, providing mathematical guarantees about information leakage while enabling continued adaptation and learning. The challenge lies in balancing privacy protection with learning effectiveness, as overly restrictive privacy measures can significantly impair the system’s ability to acquire and retain new knowledge.
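The basic building block behind differentially private training is to bound each example’s influence and then add calibrated noise. The sketch below shows only that aggregation step, in the style of DP-SGD: per-example gradients are clipped to a maximum L2 norm, summed, perturbed with Gaussian noise, and averaged. The function names and the `noise_multiplier` parameter are illustrative assumptions, and the code does not compute the resulting privacy budget, which requires a separate accounting method.

```python
import math
import random


def clip_by_l2_norm(vec, max_norm):
    """Scale a gradient vector down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in vec))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in vec]


def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each gradient, sum, add Gaussian noise, then average.

    The noise standard deviation is noise_multiplier * max_norm, i.e. it is
    calibrated to the largest possible contribution of a single example.
    """
    clipped = [clip_by_l2_norm(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(clipped) for v in noisy]


rng = random.Random(0)
grads = [[3.0, 4.0], [0.0, 0.5]]       # the first gradient has norm 5 and gets clipped
print(clip_by_l2_norm(grads[0], max_norm=1.0))
print(private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.0, rng=rng))
```

The trade-off described above is visible in the two parameters: a smaller `max_norm` or a larger `noise_multiplier` strengthens the privacy guarantee but makes each update less informative, and in a continual setting that cost is paid on every update for as long as the system keeps learning.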

Adversarial robustness in continual learning systems presents complex challenges, as attackers might exploit the adaptive nature of these systems to gradually introduce malicious behaviors or corrupt learned knowledge over time. The development of robust continual learning algorithms that can detect and resist adversarial manipulation while maintaining their adaptive capabilities requires sophisticated security mechanisms and monitoring systems.

Federated continual learning approaches have emerged as a promising solution for privacy-preserving continual learning, enabling multiple parties to collaboratively learn while keeping their data local and private. These systems must address the additional challenges of coordinating continual learning across distributed participants while maintaining privacy, handling device heterogeneity, and ensuring consistent learning outcomes across the federation.
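The server-side step in the simplest federated setups is a weighted average of the participants’ model parameters, as in federated averaging. The sketch below shows that step alone, with flat parameter lists and hypothetical client data; what each client does locally between rounds (replay, regularization, or another continual learning method) is outside its scope.

```python
def federated_average(client_weights, client_sizes):
    """Average client parameter vectors, weighted by local dataset size.

    client_weights: one flat parameter list per client (raw data never leaves
    the client; only these parameters are shared).
    client_sizes: number of local training examples per client.
    """
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Two hypothetical clients after a round of local training.
client_weights = [[1.0, 2.0], [3.0, 4.0]]
client_sizes = [1, 3]          # the second client holds three times more data

global_weights = federated_average(client_weights, client_sizes)
print(global_weights)
```

Averaging is also where the coordination problems mentioned above show up: if clients are learning different tasks at different times, a plain average can pull the global model away from what any one client has learned, which is why federated continual learning needs more than this aggregation rule.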

Industry Adoption and Commercial Applications

The commercial adoption of continual learning technologies has accelerated across various industries, driven by the clear business value of AI systems that can adapt and improve over time without requiring expensive retraining or replacement cycles. Technology companies have been early adopters, integrating continual learning into recommendation engines, search algorithms, and personalization systems that serve millions of users with constantly evolving preferences and behaviors.

Financial services organizations have embraced continual learning for fraud detection, risk assessment, and algorithmic trading systems that must adapt to changing market conditions, emerging fraud patterns, and evolving regulatory requirements. The ability to continuously learn from new transaction data while maintaining knowledge about historical fraud patterns has proven invaluable for maintaining security and compliance in dynamic financial environments.

Healthcare organizations are exploring continual learning applications for diagnostic systems, treatment recommendation engines, and patient monitoring platforms that must incorporate new medical research, treatment protocols, and patient data while maintaining expertise in established medical knowledge. The regulatory challenges associated with healthcare AI systems require careful validation and monitoring of continual learning mechanisms to ensure patient safety and treatment efficacy.

Manufacturing and industrial applications have found value in continual learning for predictive maintenance, quality control, and process optimization systems that must adapt to changing equipment conditions, new product lines, and evolving operational requirements. These applications demonstrate the practical benefits of AI systems that can evolve with changing industrial environments while maintaining operational reliability and efficiency.

The software industry has integrated continual learning into development tools, testing frameworks, and code analysis systems that must adapt to new programming languages, frameworks, and development practices while maintaining expertise in established technologies. These applications showcase the potential for continual learning to enhance developer productivity and software quality across diverse technological environments.

Conclusion and Future Outlook

Continual learning AI represents a fundamental paradigm shift toward more adaptive, flexible, and capable artificial intelligence systems that can truly embody the concept of lifelong learning. The journey from static, single-task models to dynamic systems that can continuously acquire new knowledge while preserving previous learning has opened unprecedented possibilities for AI applications that can evolve with changing requirements, user preferences, and environmental conditions.

The challenges that remain in continual learning research, from catastrophic forgetting and scalability limitations to privacy and security concerns, continue to drive innovation and discovery across multiple disciplines. The interdisciplinary nature of continual learning research, drawing from neuroscience, cognitive psychology, computer science, and engineering, has fostered a rich ecosystem of approaches and solutions that address different aspects of the lifelong learning challenge.

The practical impact of continual learning technologies extends far beyond academic research, with real-world applications demonstrating significant improvements in system adaptability, user satisfaction, and operational efficiency across diverse domains. As these technologies mature and become more widely adopted, we can expect to see even more sophisticated applications that leverage the unique capabilities of systems that never stop learning.

The future of artificial intelligence increasingly points toward systems that can learn, adapt, and grow throughout their operational lifetime, much like human intelligence develops over years of experience and learning. Continual learning AI represents a crucial step toward this vision, creating the foundation for truly intelligent systems that can navigate the complexity and uncertainty of real-world environments while continuously improving their capabilities and understanding.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of continual learning technologies and their applications. Readers should conduct their own research and consider their specific requirements when implementing continual learning systems. The effectiveness and suitability of continual learning approaches may vary depending on specific use cases, technical constraints, and application domains.