The exponential growth of artificial intelligence and machine learning applications has created an unprecedented demand for efficient data storage solutions, making data compression a critical component of modern AI infrastructure. As datasets continue to expand from gigabytes to terabytes and beyond, organizations face mounting challenges in storage costs, transfer speeds, and processing efficiency that directly impact their ability to develop and deploy sophisticated AI models effectively.

The strategic implementation of advanced compression techniques has become essential for organizations seeking to maintain competitive advantages in the rapidly evolving landscape of artificial intelligence and machine learning applications.

The Critical Need for Compression in AI Workflows

Modern AI applications generate and consume data at scales that were previously unimaginable, creating significant challenges for storage infrastructure, network bandwidth, and computational resources. Traditional storage approaches that worked effectively for smaller datasets become prohibitively expensive and inefficient when applied to the massive volumes of data required for training sophisticated neural networks, computer vision models, and natural language processing systems.

The impact of data compression extends far beyond simple storage savings, influencing every aspect of the AI development pipeline from initial data collection and preprocessing through model training, validation, and deployment. Effective compression strategies can dramatically reduce storage costs, accelerate data transfer operations, improve training pipeline efficiency, and enable organizations to work with larger datasets than would otherwise be practical given their infrastructure constraints.

Understanding Compression Fundamentals for AI Data

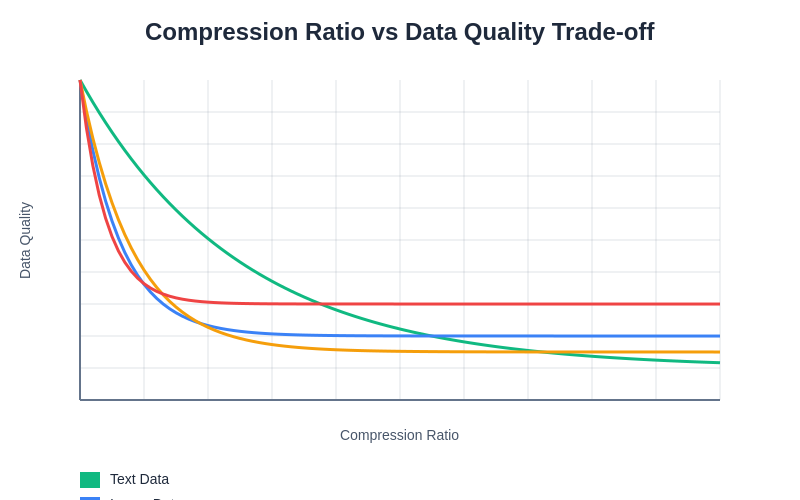

The selection of appropriate compression techniques for AI datasets requires a thorough understanding of the fundamental trade-offs between compression ratio, processing speed, and data fidelity that directly impact model training effectiveness and inference performance. Unlike traditional data compression scenarios where the primary goal is simply reducing file size, AI applications must carefully balance compression efficiency against factors such as random access patterns, parallel processing requirements, and the preservation of statistical properties that are crucial for model convergence and accuracy.

Lossless compression techniques preserve every bit of original information while achieving moderate compression ratios, making them ideal for scenarios where data integrity is paramount, such as training datasets that will be used repeatedly or critical reference data that must maintain perfect fidelity. These approaches typically achieve compression ratios ranging from 2:1 to 10:1 depending on data characteristics, with algorithms like LZ77, LZ78, and their derivatives providing excellent general-purpose compression with relatively low computational overhead.
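As a rough illustration of the lossless guarantee, the sketch below compresses a buffer with three codecs from the Python standard library, checks that the round trip is byte-for-byte identical, and reports the ratio each one reaches. The sample payload and the function name `compression_report` are made up for this example; real ratios depend entirely on the data.

```python
import bz2
import lzma
import zlib


def compression_report(data: bytes) -> dict:
    """Return the compression ratio (original size / compressed size) per codec."""
    codecs = {
        "zlib": (zlib.compress, zlib.decompress),
        "bz2": (bz2.compress, bz2.decompress),
        "lzma": (lzma.compress, lzma.decompress),
    }
    report = {}
    for name, (compress, decompress) in codecs.items():
        packed = compress(data)
        # Lossless means the round trip reproduces the input exactly.
        assert decompress(packed) == data
        report[name] = len(data) / len(packed)
    return report


# Hypothetical sample: a repetitive CSV-like payload.
sample = b"id,label,score\n" + b"".join(b"%d,cat,0.5\n" % i for i in range(2000))
for codec, ratio in compression_report(sample).items():
    print(f"{codec}: {ratio:.1f}:1")
```

Running the same function on a representative slice of a real dataset is usually a better guide than any published ratio range.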

The selection of appropriate compression algorithms requires careful evaluation of the trade-offs between compression ratio, processing speed, and data quality preservation, with different techniques offering varying advantages depending on the specific characteristics of the dataset and the requirements of the AI application.

Lossy compression techniques sacrifice some data fidelity in exchange for dramatically higher compression ratios, often achieving reductions of 20:1 to 100:1 or more while maintaining acceptable quality for specific AI applications. The key to successful lossy compression in AI contexts lies in understanding which aspects of the data are most critical for model performance and preserving those characteristics while aggressively compressing less important information.
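One simple form of lossy compression is uniform quantization: each value is snapped to one of 256 evenly spaced levels and stored in a single byte. The sketch below is a minimal illustration under the assumption that the data is a list of floats that would otherwise be stored as 32-bit values; the function names are invented for this example. It gives a fixed 4:1 reduction, far below the ratios that image or video codecs reach, but it makes the trade-off visible: the reconstruction error is bounded by half a quantization step.

```python
import math
import struct


def quantize(values):
    """Map floats onto 256 evenly spaced levels, one byte per value (lossy)."""
    lo, hi = min(values), max(values)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 when all values are equal
    codes = bytes(round((v - lo) / scale) for v in values)
    return codes, lo, scale


def dequantize(codes, lo, scale):
    """Reconstruct approximate floats from the byte codes."""
    return [lo + c * scale for c in codes]


values = [math.sin(i / 50) for i in range(1000)]
raw = struct.pack("<1000f", *values)  # 4 bytes per value as float32
codes, lo, scale = quantize(values)
restored = dequantize(codes, lo, scale)
worst = max(abs(a - b) for a, b in zip(values, restored))
print(f"{len(raw)} bytes -> {len(codes)} bytes, max error {worst:.5f}")
```

Whether an error of that size is acceptable is a question for the model, not the codec, so the bound should be checked against a validation metric.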

Specialized Formats for Different Data Types

Image data represents one of the most common and challenging data types in AI applications, requiring specialized compression approaches that balance file size reduction with the preservation of visual features that are critical for computer vision tasks. Traditional image formats like JPEG provide excellent compression for natural images but may introduce artifacts that negatively impact model training, while newer formats like WebP and AVIF offer improved compression efficiency with better quality preservation.

For computer vision applications, the choice of image compression format can significantly impact model accuracy, particularly in scenarios involving fine-grained classification, object detection, or image segmentation tasks where subtle visual details are crucial for correct predictions. Lossless formats like PNG maintain perfect image fidelity but result in significantly larger file sizes, while carefully tuned lossy compression can achieve substantial storage savings with minimal impact on model performance.

Video data presents even greater challenges due to its temporal nature and massive storage requirements, with uncompressed video files quickly consuming terabytes of storage space for even modest datasets. Modern video compression standards like H.264, H.265, and AV1 provide excellent compression ratios while maintaining visual quality, but the computational overhead of decompression can become a bottleneck during training if not properly managed through techniques like parallel processing and strategic caching.

Audio data compression for AI applications must carefully preserve the frequency domain characteristics that are crucial for tasks like speech recognition, music classification, and audio event detection. Lossless formats like FLAC maintain perfect audio fidelity while achieving moderate compression ratios, while perceptually based lossy formats like MP3 and AAC can provide substantial storage savings with carefully controlled quality loss.

Text and natural language data typically compress well because of the inherent redundancy in human language. General-purpose algorithms like gzip commonly reach around 3:1 on plain prose, and 5:1 to 10:1 on highly repetitive text such as logs or markup-heavy corpora. Because these algorithms are lossless, the decompressed text is identical to the original, so tokenization and linguistic patterns are unaffected. What AI applications do need to consider is consistent character encoding and the cost of decompressing data before it reaches the tokenizer.
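A quick way to see what a text corpus actually achieves is to measure it. The sketch below, which assumes UTF-8 text and uses an invented helper name `text_ratio`, compresses a string with gzip, confirms that the decoded result is identical to the input, and returns the ratio.

```python
import gzip


def text_ratio(corpus: str) -> float:
    """Compress UTF-8 text with gzip and return original/compressed size."""
    raw = corpus.encode("utf-8")
    packed = gzip.compress(raw)
    # Decode with the same encoding that was used to write the data.
    restored = gzip.decompress(packed).decode("utf-8")
    assert restored == corpus
    return len(raw) / len(packed)


# Hypothetical corpus: artificially repetitive, so the ratio is optimistic.
corpus = "the quick brown fox jumps over the lazy dog. " * 500
print(f"{text_ratio(corpus):.1f}:1")
```

Synthetic repetition like this compresses far better than real prose, which is why measuring on a genuine sample matters.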

Advanced Compression Algorithms for AI

Dictionary-based compression algorithms like LZ77 and LZ78 excel at identifying and exploiting repetitive patterns within datasets, making them particularly effective for AI applications that involve structured data, configuration files, or datasets with significant redundancy. LZ77 replaces repeated sequences with references into a sliding window of recently seen data, while LZ78 builds an explicit dictionary of phrases as it reads the input and emits references to dictionary entries. Both achieve good compression ratios on redundant data while keeping decompression fast, which is crucial for real-time AI applications.
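To make the dictionary idea concrete, here is a toy LZ78 encoder and decoder. It is a teaching sketch, not a production codec: the output is a list of `(dictionary_index, next_character)` pairs rather than a packed bitstream, and index 0 stands for the empty prefix.

```python
def lz78_compress(text: str):
    """Encode text as (dictionary_index, next_char) pairs."""
    dictionary = {}  # phrase -> index, starting at 1
    output = []
    phrase = ""
    for ch in text:
        candidate = phrase + ch
        if candidate in dictionary:
            # Keep extending the match while it is already known.
            phrase = candidate
        else:
            output.append((dictionary.get(phrase, 0), ch))
            dictionary[candidate] = len(dictionary) + 1
            phrase = ""
    if phrase:
        # Flush a trailing phrase that is already in the dictionary.
        output.append((dictionary[phrase], ""))
    return output


def lz78_decompress(pairs):
    """Rebuild the text by replaying the same dictionary construction."""
    phrases = [""]  # index 0 is the empty prefix
    out = []
    for index, ch in pairs:
        entry = phrases[index] + ch
        out.append(entry)
        phrases.append(entry)
    return "".join(out)


pairs = lz78_compress("ababab")
print(pairs)
print(lz78_decompress(pairs))
```

The decoder never receives the dictionary; it reconstructs it from the pairs, which is what keeps the format compact.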

Entropy-based compression techniques like Huffman coding and arithmetic coding optimize compression by assigning shorter codes to more frequently occurring symbols, making them highly effective for datasets with non-uniform distributions that are common in many AI applications. These techniques can be combined with other compression approaches to create hybrid algorithms that leverage multiple compression principles for optimal results.
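The following sketch builds a Huffman code table with a priority queue from the standard library. It only produces the table and an encoded bit string for illustration; a real codec would also store the table and pack the bits into bytes. The function names are chosen for this example.

```python
import heapq
from collections import Counter


def huffman_codes(data: bytes) -> dict:
    """Return a {byte_value: bit_string} table; frequent bytes get shorter codes."""
    freq = Counter(data)
    if not freq:
        return {}
    if len(freq) == 1:
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, tie_breaker, {symbol: code_so_far}).
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        # Merge the two lightest subtrees, prefixing their codes with 0 and 1.
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, tie, merged))
        tie += 1
    return heap[0][2]


def huffman_encode(data: bytes, codes: dict) -> str:
    return "".join(codes[b] for b in data)


message = b"aaaabbc"
table = huffman_codes(message)
bits = huffman_encode(message, table)
print(len(bits), "bits instead of", 8 * len(message))
```

The skewed distribution is what makes this work; on uniformly distributed bytes the table would offer no saving.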

Transform-based compression methods like discrete cosine transform (DCT) and wavelet compression work by converting data into frequency domain representations where redundant information can be more easily identified and removed. These approaches are particularly effective for signal processing applications in AI, including audio processing, image analysis, and time-series data where frequency domain characteristics are important for model performance.
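A small pure-Python sketch of the transform idea: compute an unnormalized DCT-II of a signal, keep only the largest coefficients, and invert. The direct O(n²) sums are for clarity only, and the helper `keep_largest` is an illustrative stand-in for the quantization step a real codec would use.

```python
import math


def dct(signal):
    """Unnormalized DCT-II."""
    n = len(signal)
    return [
        sum(x * math.cos(math.pi / n * (i + 0.5) * k) for i, x in enumerate(signal))
        for k in range(n)
    ]


def idct(coeffs):
    """Inverse of dct() above (a scaled DCT-III)."""
    n = len(coeffs)
    return [
        coeffs[0] / n
        + 2 / n * sum(
            c * math.cos(math.pi / n * (i + 0.5) * k)
            for k, c in enumerate(coeffs)
            if k > 0
        )
        for i in range(n)
    ]


def keep_largest(coeffs, m):
    """Zero all but the m largest-magnitude coefficients (the lossy step)."""
    order = sorted(range(len(coeffs)), key=lambda k: abs(coeffs[k]), reverse=True)
    keep = set(order[:m])
    return [c if k in keep else 0.0 for k, c in enumerate(coeffs)]


# A smooth hypothetical signal: most of its energy sits in a few coefficients.
signal = [math.sin(i / 10) + 0.3 * math.sin(i / 3) for i in range(64)]
approx = idct(keep_largest(dct(signal), 8))
error = max(abs(a - b) for a, b in zip(signal, approx))
print(f"kept 8 of 64 coefficients, max error {error:.3f}")
```

Smooth signals concentrate their energy in few coefficients, which is why discarding the rest costs little; noisy signals do not behave this way.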

Neural network-based compression represents a cutting-edge approach that uses machine learning techniques to optimize compression for specific types of data or applications. These methods can achieve superior compression ratios compared to traditional algorithms by learning optimal representations for particular data distributions, though they typically require significant computational resources for both compression and decompression operations.

Optimizing Storage Architecture

The choice of storage architecture has profound implications for the effectiveness of compression strategies in AI applications, with different storage systems offering varying levels of support for compressed data access patterns, parallel processing capabilities, and integration with machine learning frameworks. Traditional file systems may struggle with the random access patterns common in AI training pipelines, while specialized storage solutions designed for machine learning workloads can provide significant performance advantages.

Distributed storage systems enable the parallel processing of compressed data across multiple nodes, dramatically improving the throughput of data-intensive AI operations while maintaining the storage efficiency benefits of compression. These systems must carefully balance compression ratios against decompression overhead to optimize overall pipeline performance, often employing techniques like intelligent caching and predictive decompression to minimize latency.

Cloud storage solutions offer scalable storage capacity with integrated compression features, but organizations must carefully consider the trade-offs between storage costs, data transfer fees, and computational costs associated with compression and decompression operations. Hybrid approaches that combine local high-performance storage with cloud-based archival storage can provide optimal cost-performance characteristics for many AI applications.

Performance Considerations and Trade-offs

The computational overhead of compression and decompression operations can become a significant bottleneck in AI pipelines, particularly during intensive training phases where data must be continuously loaded, decompressed, and processed by GPU-accelerated neural networks. Organizations must carefully profile their specific workloads to identify optimal compression settings that balance storage efficiency against processing speed requirements.
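Profiling does not need to be elaborate to be useful. The sketch below times zlib at a few compression levels on a sample buffer and records the ratio alongside compression and decompression time. The choice of zlib, the levels, and the sample data are all assumptions for illustration; the same loop works for any codec with a compress and decompress call.

```python
import time
import zlib


def profile_levels(data: bytes, levels=(1, 6, 9)):
    """Measure ratio and wall-clock time for several zlib levels."""
    results = []
    for level in levels:
        start = time.perf_counter()
        packed = zlib.compress(data, level)
        compress_s = time.perf_counter() - start

        start = time.perf_counter()
        zlib.decompress(packed)
        decompress_s = time.perf_counter() - start

        results.append(
            {
                "level": level,
                "ratio": len(data) / len(packed),
                "compress_s": compress_s,
                "decompress_s": decompress_s,
            }
        )
    return results


# Hypothetical sample; substitute a representative slice of the real dataset.
sample = b"sensor=42;status=ok;value=3.14\n" * 50_000
for row in profile_levels(sample):
    print(row)
```

Single timings are noisy, so repeating each measurement and taking the median gives a more trustworthy picture.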

Memory utilization patterns during decompression can significantly impact overall system performance, with some compression algorithms requiring substantial temporary memory allocation that may compete with AI model memory requirements. Streaming decompression techniques and memory-efficient algorithms can help mitigate these issues while maintaining the benefits of compressed storage.
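Streaming decompression can be sketched with `zlib.decompressobj`, which accepts input in pieces and lets the caller cap how much output each call produces. The generator below is a minimal example under the assumption that the input is a zlib stream readable from a file-like object; the buffer sizes are arbitrary defaults, not tuned values.

```python
import io
import zlib


def iter_decompressed(stream, read_size=16 * 1024, max_out=64 * 1024):
    """Yield decompressed pieces without holding the whole payload in memory."""
    decomp = zlib.decompressobj()
    while True:
        chunk = stream.read(read_size)
        if not chunk:
            break
        buf = chunk
        while buf:
            # max_out caps the output of this call; leftover input is kept
            # in unconsumed_tail and fed back in on the next iteration.
            out = decomp.decompress(buf, max_out)
            if out:
                yield out
            buf = decomp.unconsumed_tail
    tail = decomp.flush()
    if tail:
        yield tail


payload = bytes(range(256)) * 4096  # 1 MiB of hypothetical data
compressed = zlib.compress(payload)
pieces = list(iter_decompressed(io.BytesIO(compressed)))
print(len(compressed), "compressed bytes ->", len(pieces), "pieces")
```

In a training pipeline each piece would be handed to the next stage as it arrives rather than collected into a list.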

Parallel processing capabilities vary significantly among different compression algorithms, with some techniques naturally supporting multi-threaded operation while others are inherently sequential. The selection of compression methods must consider the parallel processing capabilities of the target hardware platform to ensure optimal utilization of available computational resources.
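One common way to parallelize an otherwise sequential codec is to split the data into independent chunks and compress each one separately. The sketch below does this with a thread pool and zlib. It relies on CPython's zlib module releasing the global interpreter lock during compression, which is an implementation detail worth verifying on the target runtime, and the chunk size and worker count are arbitrary example values.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def compress_chunks(data: bytes, chunk_size=1 << 20, workers=4):
    """Compress fixed-size chunks independently; returns a list of blocks."""
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so blocks line up with chunks.
        return list(pool.map(zlib.compress, chunks))


def decompress_chunks(blocks, workers=4) -> bytes:
    """Decompress blocks in parallel and reassemble them in order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return b"".join(pool.map(zlib.decompress, blocks))


data = b"abcdefgh" * 500_000  # about 4 MB of hypothetical data
blocks = compress_chunks(data)
assert decompress_chunks(blocks) == data
print(len(blocks), "independent blocks")
```

Independent blocks cost a little compression ratio, because matches cannot cross chunk boundaries, but they also allow any single chunk to be read without decompressing the rest.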

Implementation Strategies and Best Practices

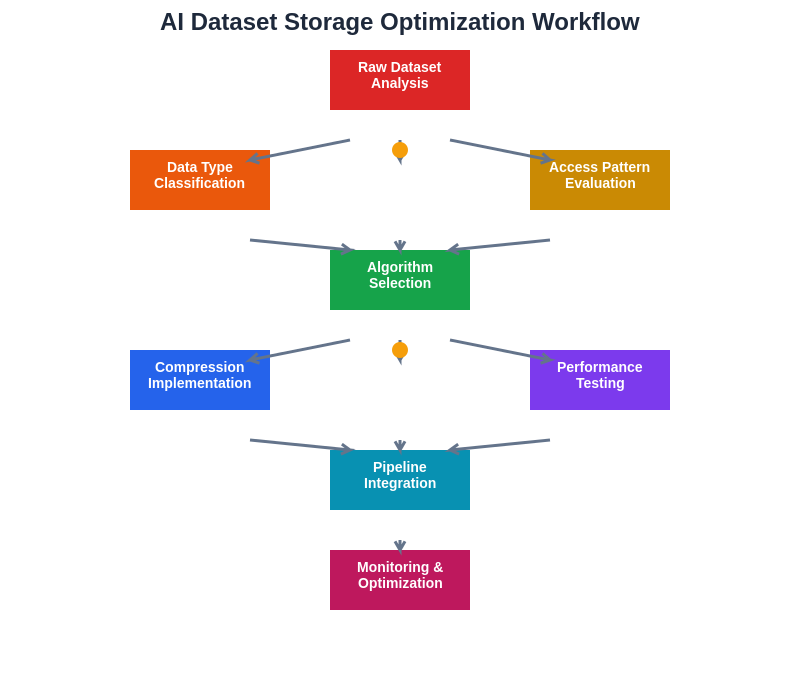

The successful implementation of compression strategies in AI workflows requires careful planning and consideration of multiple factors including data characteristics, access patterns, hardware capabilities, and performance requirements. Organizations should begin by conducting a thorough analysis of their existing datasets to understand compression potential and identify the most appropriate techniques for their specific use cases.

A systematic approach to storage optimization ensures that all critical factors are considered and that the selected compression strategy aligns with both technical requirements and business objectives, maximizing the benefits while minimizing potential risks or performance impacts.

Preprocessing optimization can significantly improve compression effectiveness by reorganizing data so that redundancy is easier for the compressor to find. Techniques such as sorting, grouping similar data elements, and removing unnecessary metadata can substantially improve compression ratios without changing the underlying information content that is crucial for AI model training.
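The effect of preprocessing is easy to demonstrate with sorted integers. In the sketch below, a hypothetical set of sample IDs is sorted and then delta-encoded, so each stored value is the small gap to its predecessor rather than a large absolute number. Both versions are packed as 32-bit integers and compressed with zlib for comparison. This only applies when the order of the values carries no information.

```python
import random
import struct
import zlib


def pack_u32(values):
    """Pack non-negative integers as little-endian 32-bit values."""
    return struct.pack(f"<{len(values)}I", *values)


def delta_encode(sorted_values):
    """Replace each value with its difference from the previous one."""
    deltas, prev = [], 0
    for v in sorted_values:
        deltas.append(v - prev)
        prev = v
    return deltas


def delta_decode(deltas):
    """Invert delta_encode with a running sum."""
    values, total = [], 0
    for d in deltas:
        total += d
        values.append(total)
    return values


rng = random.Random(0)  # fixed seed so the example is repeatable
ids = sorted(rng.sample(range(10_000_000), 50_000))
plain = len(zlib.compress(pack_u32(ids)))
delta = len(zlib.compress(pack_u32(delta_encode(ids))))
print(f"sorted only: {plain} bytes, sorted + delta: {delta} bytes")
```

The information content is unchanged, since `delta_decode` restores the exact IDs; only the representation handed to the compressor is friendlier.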

Pipeline integration requires careful coordination between compression operations and other data processing steps to minimize latency and maximize throughput, with techniques like background compression, intelligent prefetching, and parallel processing helping to maintain smooth data flow throughout the AI development lifecycle.

Testing and validation procedures must verify that compression strategies maintain acceptable data quality and model performance while achieving desired storage and performance improvements, with comprehensive benchmarking helping organizations make informed decisions about compression trade-offs and optimization strategies.
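For lossless pipelines, the data-quality half of validation can be automated with a checksum recorded at compression time and checked after decompression. The sketch below uses SHA-256 and zlib as example choices; the function names are illustrative. Lossy pipelines need a different check, typically a comparison of model metrics on original versus compressed data, which this example does not cover.

```python
import hashlib
import zlib


def compress_with_digest(data: bytes):
    """Return the compressed payload and a SHA-256 digest of the original."""
    return zlib.compress(data), hashlib.sha256(data).hexdigest()


def verify(packed: bytes, expected_digest: str) -> bool:
    """Decompress and confirm the content matches the recorded digest."""
    restored = zlib.decompress(packed)
    return hashlib.sha256(restored).hexdigest() == expected_digest


packed, digest = compress_with_digest(b"training shard 0001" * 1000)
print("intact:", verify(packed, digest))
```

Storing the digest next to each compressed shard makes it possible to detect silent corruption long after the original has been deleted.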

Emerging Technologies and Future Trends

Machine learning-enhanced compression represents a rapidly evolving field where AI techniques are being applied to optimize compression algorithms themselves, creating adaptive systems that can learn optimal compression strategies for specific data types and applications. These approaches promise to deliver superior compression performance compared to traditional methods while maintaining or improving processing efficiency.

Hardware acceleration for compression operations is becoming increasingly available through specialized processors, GPU-based implementations, and dedicated compression accelerators that can dramatically reduce the computational overhead associated with compression and decompression operations in AI workflows.

Quantum-inspired compression algorithms leverage principles from quantum computing to develop novel compression techniques that may offer advantages for certain types of data or applications, though these approaches are still in early research phases and require further development before practical implementation.

Edge computing optimization requires specialized compression techniques that can operate effectively within the resource constraints of edge devices while maintaining the data quality necessary for accurate AI inference, driving the development of lightweight compression algorithms optimized for mobile and embedded applications.

Cost-Benefit Analysis and ROI

The financial impact of compression strategies extends beyond simple storage cost savings to include reduced bandwidth costs, improved system performance, and enhanced scalability that can provide substantial return on investment for AI-intensive organizations. Comprehensive cost-benefit analysis must consider both direct costs such as storage and bandwidth fees and indirect benefits like improved development velocity and enhanced system reliability.

Storage cost reduction can be substantial, with effective compression strategies often reducing storage requirements by 50% to 90% depending on data types and compression techniques employed, translating directly into reduced cloud storage fees and decreased on-premises storage infrastructure requirements.
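The link between a compression ratio and a percentage saving is simple arithmetic: a ratio of r:1 leaves 1/r of the data, so the saving is 1 - 1/r. A 2:1 ratio therefore saves 50% and a 10:1 ratio saves 90%. The sketch below applies that to a monthly bill; the price per terabyte is a hypothetical placeholder, not a quote from any provider.

```python
def storage_saving(ratio: float) -> float:
    """Fraction of storage saved at a given compression ratio (r:1)."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 1.0 - 1.0 / ratio


def monthly_storage_cost(raw_tb: float, ratio: float, price_per_tb: float) -> float:
    """Monthly cost of storing raw_tb terabytes after compression."""
    return raw_tb / ratio * price_per_tb


PRICE_PER_TB = 20.0  # hypothetical price per TB per month
for ratio in (2, 5, 10):
    saving = storage_saving(ratio)
    cost = monthly_storage_cost(100, ratio, PRICE_PER_TB)
    print(f"{ratio}:1 saves {saving:.0%}, 100 TB costs {cost:.2f} per month")
```

The curve flattens quickly: going from 2:1 to 5:1 saves another 30 percentage points, while going from 5:1 to 10:1 adds only 10, so chasing very high ratios rarely pays for the extra compute.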

Understanding the relationship between compression efficiency and data quality preservation is crucial for making informed decisions about compression strategies, with different data types exhibiting varying sensitivity to compression artifacts and quality degradation that can impact AI model performance.

Performance improvements from optimized compression can reduce training times, improve inference latency, and enable the processing of larger datasets within existing hardware constraints, providing significant productivity benefits that may exceed the direct storage cost savings in many scenarios.

Scalability enhancements enable organizations to work with larger datasets and more sophisticated models than would otherwise be practical, potentially unlocking new AI capabilities and business opportunities that provide substantial competitive advantages in rapidly evolving markets.

Security and Compliance Considerations

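Data security implications of compression must be carefully evaluated, as compressed data may require different encryption strategies and access controls compared to uncompressed information. Encryption should be applied after compression, because well-encrypted data looks random and no longer compresses. Compression can also introduce new vulnerabilities: when attacker-influenced input is compressed together with secrets before encryption, the length of the ciphertext can leak information about those secrets, as the CRIME and BREACH attacks on compressed web traffic demonstrated.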

Compliance requirements in regulated industries may impose specific constraints on compression techniques, particularly regarding data integrity, auditability, and the ability to demonstrate that compressed and decompressed data maintains required characteristics for regulatory compliance and validation purposes.

Privacy preservation in compressed datasets requires careful consideration of whether compression techniques might inadvertently expose sensitive information or affect the effectiveness of privacy-preserving techniques like differential privacy or federated learning approaches.

Conclusion and Future Outlook

The strategic implementation of advanced data compression techniques has become essential for organizations seeking to optimize their AI infrastructure and maintain competitive advantages in an increasingly data-intensive landscape. As AI models continue to grow in complexity and datasets expand to unprecedented scales, the importance of effective compression strategies will only continue to increase, driving ongoing innovation in compression algorithms, storage architectures, and implementation methodologies.

The future of data compression for AI applications lies in the intelligent integration of multiple compression techniques, adaptive algorithms that can optimize themselves for specific workloads, and hardware acceleration that makes advanced compression techniques practical for real-time applications. Organizations that invest in developing comprehensive compression strategies today will be well-positioned to leverage the continued evolution of AI technologies while maintaining optimal cost-performance characteristics.

The continued advancement of compression technologies promises to unlock new possibilities for AI applications by enabling the processing of even larger datasets, supporting more sophisticated models, and facilitating the deployment of AI capabilities in resource-constrained environments where traditional approaches would be impractical.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of data compression technologies and their applications in artificial intelligence and machine learning. Readers should conduct their own research and consider their specific requirements when implementing compression strategies. The effectiveness of compression techniques may vary depending on specific use cases, data characteristics, and infrastructure requirements.