The rapid growth of artificial intelligence applications has increased demand for flexible, scalable cloud infrastructure that can adapt to changing business requirements and new technology. As organizations mature their AI capabilities and seek to optimize cost, performance, and strategic positioning, migrating AI workloads between cloud platforms has become increasingly important. The undertaking requires careful planning, technical expertise, and an understanding of the challenges that distinguish AI workloads from traditional application migrations.

The landscape of cloud-based AI services continues to evolve at breakneck speed, with each major provider introducing specialized tools, services, and optimizations designed to capture market share in this lucrative and strategically important sector.

Understanding the Complexity of AI Workload Migration

AI workload migration represents one of the most challenging types of cloud migration due to the intricate interdependencies between data, models, training pipelines, inference systems, and specialized hardware requirements. Unlike traditional applications that primarily focus on compute and storage resources, AI workloads demand sophisticated orchestration of GPU clusters, massive data pipelines, real-time inference endpoints, and complex model versioning systems. The migration process must account for these unique characteristics while maintaining business continuity and preserving the integrity of machine learning models that may have taken months or years to develop and train.

The complexity is further amplified by the fact that AI workloads often involve petabytes of training data, pre-trained models with specific framework dependencies, and inference systems that require millisecond response times. Each component must be carefully evaluated, tested, and optimized for the target cloud environment to ensure that performance characteristics remain consistent or improve after migration. Additionally, the interconnected nature of AI pipelines means that any disruption during migration can have cascading effects across multiple dependent systems and processes.

Strategic Drivers for AI Workload Migration

Organizations undertake AI workload migrations for various strategic and operational reasons that extend beyond simple cost considerations. Cost optimization remains a primary driver, as different cloud platforms offer varying pricing models for GPU compute, data storage, and specialized AI services that can result in significant savings depending on usage patterns and workload characteristics. Some organizations discover that their current cloud provider’s pricing structure becomes prohibitively expensive as their AI workloads scale, particularly for long-running training jobs or high-throughput inference scenarios.

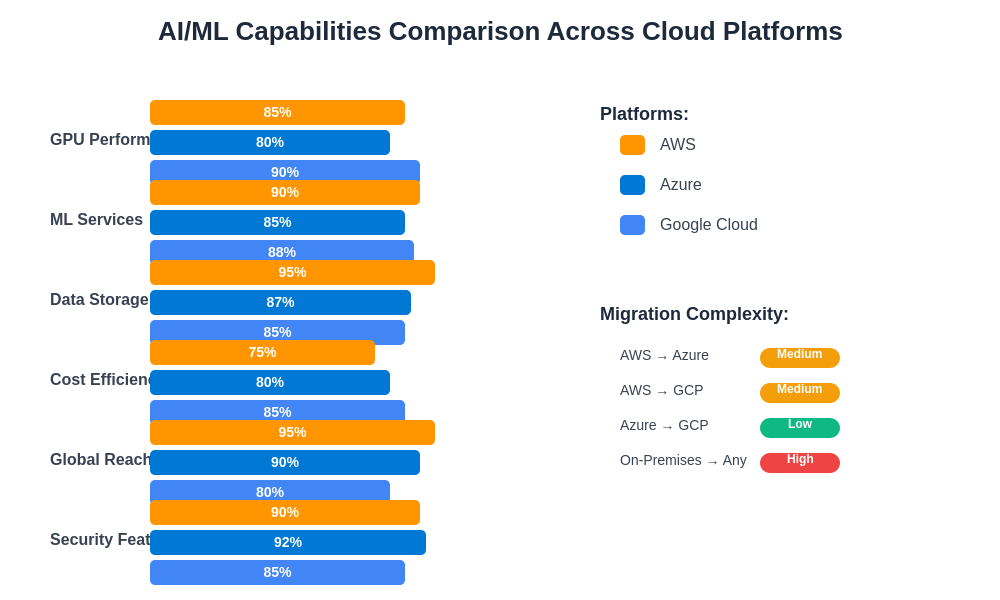

Performance optimization represents another crucial migration driver, as different cloud platforms excel in specific areas of AI workload support. Some providers offer superior GPU performance for training deep learning models, while others provide more efficient inference optimization or better support for specific machine learning frameworks. Organizations may also migrate to access cutting-edge hardware accelerators, such as specialized AI chips or the latest GPU architectures, that are exclusively available on certain cloud platforms.

Regulatory compliance and data sovereignty requirements increasingly influence migration decisions, particularly for organizations operating in highly regulated industries or serving customers in regions with strict data residency requirements.

Pre-Migration Assessment and Planning

Successful AI workload migration begins with comprehensive assessment and planning phases that thoroughly analyze existing infrastructure, dependencies, performance requirements, and business constraints. The assessment phase must catalog all components of the AI ecosystem, including training datasets, model artifacts, preprocessing pipelines, feature stores, model registries, monitoring systems, and deployment infrastructure. This inventory process requires close collaboration between data science teams, MLOps engineers, and cloud architects to ensure that no critical components are overlooked.

Performance baseline establishment represents a critical component of the pre-migration assessment, requiring detailed measurement of current system performance across various metrics including training times, inference latency, throughput, resource utilization, and cost per prediction. These baselines serve as benchmarks for evaluating migration success and identifying potential performance regressions in the target environment. The assessment must also identify potential migration blockers, such as proprietary services, custom hardware dependencies, or legacy system integrations that may require special handling or alternative solutions.
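The baseline comparison described above could be sketched roughly as follows. This is a minimal illustration, not a prescribed method: the metric names, the 10% tolerance, and the sample numbers are all assumptions made for the example.

```python
from dataclasses import dataclass

# Metrics where a larger value is worse (hypothetical names for illustration).
LOWER_IS_BETTER = {"training_hours", "p99_latency_ms", "cost_per_1k_predictions"}


@dataclass
class Regression:
    metric: str
    baseline: float
    observed: float
    change_pct: float


def find_regressions(baseline, observed, tolerance_pct=10.0):
    """Return metrics that got worse than the baseline by more than tolerance_pct."""
    regressions = []
    for name, base in baseline.items():
        if name not in observed or base == 0:
            continue  # nothing meaningful to compare against
        change_pct = (observed[name] - base) / base * 100.0
        # Flip the sign for metrics where higher is better (e.g. throughput).
        worsening = change_pct if name in LOWER_IS_BETTER else -change_pct
        if worsening > tolerance_pct:
            regressions.append(Regression(name, base, observed[name], change_pct))
    return regressions


# Illustrative numbers only; real baselines come from measurement.
baseline = {"p99_latency_ms": 40.0, "throughput_rps": 1200.0, "training_hours": 10.0}
target = {"p99_latency_ms": 52.0, "throughput_rps": 1250.0, "training_hours": 9.0}
for r in find_regressions(baseline, target):
    print(f"{r.metric}: {r.baseline} -> {r.observed} ({r.change_pct:+.1f}%)")
```

Keeping the direction of each metric explicit avoids flagging an improvement, such as higher throughput, as a regression.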

Data Migration Strategies and Approaches

Data migration forms the foundation of successful AI workload migration, requiring sophisticated strategies to handle the massive volumes of structured and unstructured data that fuel machine learning systems. The choice of migration strategy depends heavily on factors such as data volume, acceptable downtime, network bandwidth, compliance requirements, and ongoing data synchronization needs. Organizations must carefully evaluate whether to pursue big bang migrations that move all data simultaneously, or phased approaches that gradually migrate data components while maintaining hybrid operations.

For organizations dealing with petabyte-scale datasets common in AI applications, physical data transfer methods often provide the most practical solution for initial bulk migration. Cloud providers offer specialized data transfer appliances that can securely transport massive datasets without consuming internet bandwidth or creating extended migration windows. However, these physical transfer methods must be combined with online synchronization mechanisms to handle ongoing data updates and ensure consistency between source and target environments during the migration process.
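A back-of-the-envelope calculation helps show why network transfer can be impractical at this scale. The sketch below assumes decimal units (1 TB = 10^12 bytes) and an assumed 70% usable share of the link; both figures are illustrative, not measurements.

```python
def transfer_days(dataset_tb, link_gbps, utilization=0.7):
    """Estimate days needed to move dataset_tb terabytes over a network link.

    utilization is the assumed fraction of the link usable for the transfer.
    """
    bits = dataset_tb * 1e12 * 8          # decimal terabytes to bits
    effective_bps = link_gbps * 1e9 * utilization
    return bits / effective_bps / 86_400  # seconds to days


for gbps in (1, 10, 100):
    print(f"1 PB over {gbps} Gbps: {transfer_days(1000, gbps):.1f} days")
```

Under these assumptions, one petabyte takes roughly 132 days at 1 Gbps and about 13 days at 10 Gbps, which is why an appliance-based transfer is often considered for the initial bulk copy.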

Real-time data synchronization becomes particularly challenging for AI workloads that continuously ingest streaming data for model training or feature engineering. Organizations must implement robust data replication mechanisms that can handle high-velocity data streams while maintaining data consistency and minimizing impact on existing AI pipelines. This often requires sophisticated change data capture systems, message queuing architectures, and careful orchestration of cutover procedures to prevent data loss or corruption during the transition.
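One piece of the cutover procedure, verifying that source and target hold the same objects before switching over, might look like the sketch below. It is not a change data capture implementation. It assumes each environment can produce a manifest mapping object keys to checksums; the keys and contents shown are hypothetical.

```python
import hashlib


def checksum(data: bytes) -> str:
    """SHA-256 hex digest used as the content fingerprint."""
    return hashlib.sha256(data).hexdigest()


def diff_manifests(source, target):
    """Compare {object_key: checksum} manifests from the two environments."""
    missing = sorted(k for k in source if k not in target)
    mismatched = sorted(k for k in source if k in target and source[k] != target[k])
    unexpected = sorted(k for k in target if k not in source)
    return {"missing": missing, "mismatched": mismatched, "unexpected": unexpected}


def safe_to_cut_over(report):
    """Cutover is only considered safe when no object is missing or different."""
    return not report["missing"] and not report["mismatched"]


# Hypothetical manifests built from in-memory data for illustration.
source = {"train/part-0": checksum(b"rows 0-999"), "train/part-1": checksum(b"rows 1000-1999")}
target = {"train/part-0": checksum(b"rows 0-999"), "train/part-1": checksum(b"rows 1000-1998")}
report = diff_manifests(source, target)
print(report, safe_to_cut_over(report))
```

For continuously updated data, a check like this would need to run against a consistent snapshot or after writes to the source are paused.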

The systematic approach to AI workload migration involves careful coordination across multiple phases, each with specific objectives and deliverables that build upon previous phases while preparing the foundation for subsequent migration activities. This structured methodology helps organizations manage the complexity inherent in large-scale AI system migrations.

Model and Pipeline Migration Considerations

Machine learning models present unique migration challenges due to their dependencies on specific framework versions, custom libraries, and training environments that may not directly translate between cloud platforms. Model migration requires careful attention to framework compatibility, ensuring that models trained on one platform can be successfully loaded and executed on the target platform without performance degradation or accuracy loss. This process often involves extensive testing and validation to verify that model behavior remains consistent across different environments.
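The validation step can be approximated by running the same inputs through the model in both environments and comparing the outputs numerically. The sketch below assumes predictions are available as plain lists of floats; the tolerances are illustrative and would need to be chosen per model.

```python
import math


def outputs_match(source_preds, target_preds, rel_tol=1e-5, abs_tol=1e-6):
    """Return (ok, worst_abs_diff) for two equally ordered lists of model outputs."""
    if len(source_preds) != len(target_preds):
        raise ValueError("prediction lists must have the same length")
    worst = 0.0
    ok = True
    for s, t in zip(source_preds, target_preds):
        worst = max(worst, abs(s - t))
        if not math.isclose(s, t, rel_tol=rel_tol, abs_tol=abs_tol):
            ok = False
    return ok, worst


# Hypothetical predictions from the same inputs on each platform.
ok, worst = outputs_match([0.12, 0.87, 0.50], [0.12, 0.8700001, 0.50])
print(ok, worst)
```

Small differences are expected when hardware, drivers, or library versions change, so an exact equality check would usually be too strict.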

Training pipeline migration involves reconstructing complex workflows that orchestrate data preprocessing, feature engineering, model training, validation, and deployment processes. These pipelines often integrate with multiple cloud-native services for job scheduling, resource management, experiment tracking, and artifact storage that may not have direct equivalents on the target platform. Organizations must either adapt pipelines to use analogous services on the target platform or implement platform-agnostic solutions that can operate consistently across different cloud environments.
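A platform-agnostic approach often comes down to having pipeline code depend on a small interface rather than on a provider SDK. The following is a minimal sketch of that idea; `ArtifactStore`, `LocalArtifactStore`, and `save_model` are hypothetical names, and a real deployment would add a provider-specific implementation behind the same interface.

```python
from abc import ABC, abstractmethod
from pathlib import Path
import tempfile


class ArtifactStore(ABC):
    """Minimal storage interface that pipeline steps depend on."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class LocalArtifactStore(ArtifactStore):
    """Filesystem-backed store; a cloud-specific class would wrap that provider's SDK."""

    def __init__(self, root: str):
        self.root = Path(root)

    def put(self, key, data):
        path = self.root / key
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_bytes(data)

    def get(self, key):
        return (self.root / key).read_bytes()


def save_model(store: ArtifactStore, name: str, version: int, weights: bytes) -> str:
    """Pipeline code only sees the interface, never a provider SDK."""
    key = f"models/{name}/v{version}/weights.bin"
    store.put(key, weights)
    return key


with tempfile.TemporaryDirectory() as tmp:
    store = LocalArtifactStore(tmp)
    key = save_model(store, "churn", 3, b"\x00\x01")
    print(key, len(store.get(key)))
```

With this structure, moving to another platform means writing one new `ArtifactStore` subclass rather than editing every pipeline step.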

Model versioning and experiment tracking systems require special attention during migration, as these systems often contain years of valuable historical data about model performance, hyperparameter experiments, and training metrics that inform ongoing research and development efforts.

Infrastructure and Orchestration Migration

AI workloads typically require sophisticated infrastructure orchestration that manages GPU clusters, distributed training jobs, auto-scaling inference endpoints, and complex networking configurations. Migrating this infrastructure involves more than simply provisioning equivalent resources on the target platform; it requires careful redesign and optimization to leverage platform-specific capabilities and best practices. Organizations must evaluate whether to recreate existing infrastructure patterns or redesign their architecture to take advantage of unique features available on the target platform.

Container orchestration systems play a crucial role in AI workload portability, as containerized applications can more easily transition between different cloud platforms while maintaining consistent runtime environments. However, GPU support, networking configurations, storage integrations, and platform-specific optimizations often require significant modifications even for containerized workloads. Organizations must invest in comprehensive testing and validation to ensure that containerized AI applications perform optimally in the target environment.

Infrastructure as Code practices become particularly valuable during AI workload migration, enabling organizations to version control their infrastructure configurations and automate the provisioning of complex AI environments. This approach facilitates consistent deployment across different platforms and reduces the risk of configuration drift that could impact AI workload performance or reliability.
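Configuration drift can be detected by comparing the declared configuration with what is actually running. The sketch below assumes both are available as nested dictionaries; the keys and values are hypothetical and not tied to any particular Infrastructure as Code tool.

```python
def find_drift(declared, actual, prefix=""):
    """Return a list of dotted paths where actual differs from declared."""
    drift = []
    for key in sorted(set(declared) | set(actual)):
        path = f"{prefix}{key}"
        if key not in actual:
            drift.append(f"{path}: missing in actual")
        elif key not in declared:
            drift.append(f"{path}: not declared")
        elif isinstance(declared[key], dict) and isinstance(actual[key], dict):
            # Recurse into nested sections, extending the dotted path.
            drift.extend(find_drift(declared[key], actual[key], prefix=f"{path}."))
        elif declared[key] != actual[key]:
            drift.append(f"{path}: declared {declared[key]!r}, actual {actual[key]!r}")
    return drift


# Hypothetical configuration for a GPU node pool.
declared = {"gpu_pool": {"node_count": 4, "gpu_type": "a100"}, "region": "eu-west"}
actual = {"gpu_pool": {"node_count": 6, "gpu_type": "a100"}, "region": "eu-west", "debug": True}
for line in find_drift(declared, actual):
    print(line)
```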

Security and Compliance Migration

AI workload migration must address complex security and compliance requirements that extend beyond traditional application security concerns. Machine learning models themselves can be considered valuable intellectual property that requires protection during transit and storage, necessitating sophisticated encryption and access control mechanisms. Additionally, training data often contains sensitive or personally identifiable information that must be protected according to various regulatory frameworks such as GDPR, HIPAA, or industry-specific compliance requirements.

Identity and access management systems require careful migration planning to ensure that data scientists, ML engineers, and automated systems maintain appropriate access to AI resources while adhering to the principle of least privilege. This often involves mapping existing roles and permissions to equivalent constructs on the target platform while implementing any additional security controls required by organizational policies or regulations.
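The role mapping step could be sketched as a lookup table with an explicit review queue for anything that has no equivalent. All role and principal names below are invented for illustration; real platforms have their own role catalogs.

```python
# Hypothetical role names; real platforms have their own role catalogs.
ROLE_MAP = {
    "source:ml-admin": "target:ml-platform-admin",
    "source:data-reader": "target:dataset-viewer",
    "source:model-deployer": "target:endpoint-operator",
}


def map_access(assignments, role_map=ROLE_MAP):
    """Translate {principal: [source roles]} to target roles.

    Roles without an explicit mapping are never guessed; they are returned
    separately so a human can review them (least privilege by default).
    """
    mapped, unmapped = {}, {}
    for principal, roles in assignments.items():
        for role in roles:
            if role in role_map:
                mapped.setdefault(principal, []).append(role_map[role])
            else:
                unmapped.setdefault(principal, []).append(role)
    return mapped, unmapped


assignments = {
    "alice": ["source:ml-admin"],
    "ci-bot": ["source:model-deployer", "source:legacy-superuser"],
}
mapped, unmapped = map_access(assignments)
print(mapped)
print(unmapped)
```

Granting nothing for an unmapped role is the conservative default; the alternative of mapping to the nearest broader role would tend to widen access during migration.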

Audit trails and compliance reporting systems must be maintained throughout the migration process to demonstrate continuous compliance with applicable regulations and internal governance policies. This requires careful coordination between security teams, compliance officers, and technical migration teams to ensure that all activities are properly documented and monitored.

Performance Optimization Post-Migration

Post-migration performance optimization represents a critical phase that can determine the long-term success of AI workload migration initiatives. Organizations must systematically evaluate the performance characteristics of migrated workloads against established baselines and implement targeted optimizations to address any performance gaps or inefficiencies. This process often reveals opportunities to leverage platform-specific optimizations that can actually improve performance compared to the original environment.

GPU utilization optimization requires particular attention, as different cloud platforms offer varying GPU architectures, driver versions, and optimization frameworks that can significantly impact training and inference performance. Organizations must experiment with different instance types, batch sizes, and optimization settings to achieve optimal performance for their specific workloads. Additionally, the availability of newer GPU architectures on the target platform may enable performance improvements that justify the migration effort.
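The experimentation described above usually ends in a comparison of measured throughput against price. The sketch below assumes benchmark results are already collected; the instance names, prices, and throughput figures are made up for the example.

```python
def cost_per_million_samples(samples_per_second, hourly_price):
    """Cost to process one million samples at a measured throughput."""
    seconds = 1_000_000 / samples_per_second
    return hourly_price * seconds / 3600


def best_configuration(measurements):
    """Pick the cheapest configuration from benchmark results.

    measurements: list of dicts with keys instance, batch_size,
    samples_per_second and hourly_price (all values are illustrative).
    """
    return min(
        measurements,
        key=lambda m: cost_per_million_samples(m["samples_per_second"], m["hourly_price"]),
    )


# Hypothetical benchmark results.
measurements = [
    {"instance": "gpu-small", "batch_size": 32, "samples_per_second": 500, "hourly_price": 1.0},
    {"instance": "gpu-small", "batch_size": 128, "samples_per_second": 650, "hourly_price": 1.0},
    {"instance": "gpu-large", "batch_size": 256, "samples_per_second": 2000, "hourly_price": 3.0},
]
best = best_configuration(measurements)
print(best["instance"], best["batch_size"])
```

A faster, more expensive instance can still be the cheaper choice per sample, which is why the comparison is done on cost per unit of work rather than hourly price.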

Cost optimization continues beyond the initial migration as organizations gain experience with the new platform’s pricing models and resource utilization patterns. This ongoing optimization process involves rightsizing instances, implementing more efficient scheduling policies, and leveraging platform-specific cost optimization features such as spot instances or reserved capacity pricing models.
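For reserved capacity, the decision largely depends on utilization. As a simplified sketch, assume reserved capacity is billed at a fixed effective hourly rate for every hour of the term, while on-demand capacity is billed only for hours used; the prices below are hypothetical.

```python
def break_even_utilization(on_demand_hourly, reserved_hourly):
    """Fraction of hours an instance must be busy for reserved pricing to win.

    reserved_hourly is the effective hourly rate paid for every hour of the
    term, whether or not the instance is used.
    """
    if on_demand_hourly <= 0:
        raise ValueError("on_demand_hourly must be positive")
    return reserved_hourly / on_demand_hourly


def cheaper_option(utilization, on_demand_hourly, reserved_hourly):
    """Compare cost per calendar hour at a given utilization (0.0 to 1.0)."""
    on_demand_cost = utilization * on_demand_hourly
    return "reserved" if reserved_hourly < on_demand_cost else "on_demand"


# Hypothetical prices: 4.00 per hour on demand, 2.50 per hour reserved.
print(break_even_utilization(4.0, 2.5))
print(cheaper_option(0.8, 4.0, 2.5), cheaper_option(0.5, 4.0, 2.5))
```

This ignores upfront payments, discounts for longer terms, and interruption risk for spot capacity, all of which would change the comparison in practice.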

Monitoring and Observability Migration

Comprehensive monitoring and observability systems are essential for maintaining AI workload reliability and performance after migration. These systems must track not only traditional infrastructure metrics but also AI-specific metrics such as model accuracy, training convergence, inference latency distribution, and data drift detection. Migrating these monitoring systems requires careful planning to ensure continuity of visibility into system behavior during and after the migration process.

Model performance monitoring presents unique challenges as it requires integration with both infrastructure monitoring systems and machine learning platforms to provide comprehensive visibility into model behavior in production environments. Organizations must establish monitoring frameworks that can detect model degradation, data quality issues, and performance anomalies that could impact business outcomes.
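One widely used signal for data drift is the Population Stability Index (PSI), which compares the binned distribution of a feature in a baseline sample with a newer sample. The sketch below is a plain-Python illustration; the bin count, the smoothing constant `eps`, and the sample data are assumptions.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant baselines

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic feature values: the second sample is shifted upward.
base_sample = [i / 100 for i in range(100)]
shifted_sample = [v + 0.3 for v in base_sample]
print(psi(base_sample, base_sample), psi(base_sample, shifted_sample))
```

A commonly cited rule of thumb treats values above roughly 0.2 as a notable shift, though a suitable threshold depends on the feature and the model.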

Log aggregation and analysis systems must be designed to handle the high-volume, high-velocity log streams generated by distributed AI workloads while providing actionable insights into system behavior and performance trends. This often requires specialized log processing pipelines and analysis tools designed specifically for machine learning workloads.

Multi-Cloud and Hybrid Strategies

Many organizations adopt multi-cloud or hybrid strategies that distribute AI workloads across multiple cloud platforms to optimize performance, cost, and risk management. These approaches enable organizations to leverage the best capabilities of each platform while avoiding vendor lock-in and creating redundancy for critical workloads. However, multi-cloud strategies introduce additional complexity in terms of data synchronization, identity management, and operational overhead.

Hybrid approaches that maintain some AI workloads on-premises while migrating others to cloud platforms require sophisticated networking and integration solutions to ensure seamless operation across different environments. These hybrid architectures often provide benefits such as reduced data transfer costs, improved compliance with data residency requirements, and better control over sensitive workloads while still enabling access to cloud-based AI services and scalability.

Understanding the relative strengths and capabilities of different cloud platforms enables informed decision-making about migration targets and helps organizations align their specific AI workload requirements with the most suitable platform characteristics. Migration complexity varies significantly depending on the source and target platform combinations, with some transitions requiring more extensive adaptation than others.

Automation and Tooling for Migration

Successful AI workload migration increasingly relies on automation and specialized tooling to manage the complexity and scale of modern machine learning environments. Organizations are developing and adopting migration frameworks that can automatically discover dependencies, assess compatibility, and orchestrate complex migration procedures with minimal manual intervention. These tools help reduce migration timelines, minimize human error, and ensure consistency across different migration projects.
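Once dependencies are discovered, a migration order follows from a topological sort of the dependency graph. The sketch below uses Python's standard `graphlib` module (Python 3.9 or later); the component names and edges are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical component graph: each key depends on the components in its set.
dependencies = {
    "inference_endpoint": {"model_registry", "feature_store"},
    "training_pipeline": {"feature_store", "raw_data"},
    "model_registry": {"training_pipeline"},
    "feature_store": {"raw_data"},
    "raw_data": set(),
}


def migration_order(deps):
    """Order components so each is migrated after everything it depends on.

    Raises graphlib.CycleError if the dependencies contain a cycle.
    """
    return list(TopologicalSorter(deps).static_order())


print(migration_order(dependencies))
```

A cycle in the graph is worth surfacing early, since it indicates components that have to be migrated together or decoupled first.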

Infrastructure automation tools play a crucial role in enabling repeatable, reliable migration processes that can be tested and validated before execution against production workloads. These tools often integrate with CI/CD pipelines to enable continuous deployment of migrated workloads and facilitate rapid rollback procedures if issues are discovered during the migration process.

Risk Management and Contingency Planning

AI workload migration involves significant risks that must be carefully managed through comprehensive contingency planning and risk mitigation strategies. These risks include data loss or corruption, model performance degradation, extended downtime periods, and potential business impact from migration-related issues. Organizations must develop detailed rollback procedures that can quickly restore operations to the original environment if critical issues are discovered during or after migration.
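A rollback procedure is easier to reason about when traffic moves to the target in stages with a health gate at each step. The sketch below illustrates that control flow only; `check_health` is a caller-supplied, hypothetical function, and the stage percentages are arbitrary.

```python
def staged_cutover(check_health, stages=(5, 25, 50, 100)):
    """Shift traffic to the target in stages; fall back to 0% on the first failure.

    check_health(percent) is a caller-supplied function (hypothetical here) that
    returns True when the target is healthy at that traffic share.
    Returns (final_percent, history).
    """
    history = []
    for percent in stages:
        healthy = check_health(percent)
        history.append((percent, healthy))
        if not healthy:
            return 0, history  # roll back: all traffic returns to the source
    return stages[-1], history


# Simulated health check that starts failing at 50% of traffic.
final, history = staged_cutover(lambda percent: percent < 50)
print(final, history)
```

In a real system the health check would combine error rates, latency, and model quality metrics, and the source environment would remain fully provisioned until the final stage has been stable for some time.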

Testing and validation procedures must be comprehensive and include not only functional testing but also performance testing, security testing, and business continuity testing to ensure that all aspects of the migrated workloads function correctly. This testing often requires significant time and resources but is essential for ensuring migration success and maintaining business confidence in the process.

Future Considerations and Industry Evolution

The landscape of AI workload migration continues to evolve rapidly as cloud providers introduce new services, tools, and capabilities designed to simplify migration processes and improve workload portability. Emerging standards and frameworks are beginning to address some of the historical challenges associated with AI workload migration, including better model format standardization, improved container orchestration for AI workloads, and enhanced interoperability between different cloud platforms.

Organizations must stay informed about these developments and consider how emerging technologies and standards might impact their migration strategies and long-term cloud architecture decisions. The investment in migration capabilities and expertise often provides long-term benefits by enabling organizations to adapt more quickly to changing business requirements and technological opportunities.

The continued evolution of AI technologies and cloud platforms suggests that workload migration will become an ongoing capability rather than a one-time project, requiring organizations to build sustainable migration expertise and maintain flexible, portable AI architectures that can adapt to future requirements and opportunities.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of cloud migration practices and AI workload management. Organizations should conduct thorough assessments of their specific requirements and consult with qualified professionals before undertaking complex migration projects. The effectiveness of migration strategies may vary depending on specific use cases, regulatory requirements, and organizational constraints.