The containerization landscape for artificial intelligence applications has evolved dramatically, with organizations facing increasingly complex decisions about which container runtime to adopt for their machine learning workloads. Docker has long dominated the containerization space, but Podman has emerged as a compelling alternative that addresses many of the architectural and security concerns associated with traditional container deployments. This comprehensive analysis examines both platforms through the lens of AI application requirements, exploring how each solution handles the unique challenges of machine learning containerization including GPU access, large model storage, distributed computing, and security considerations.

The choice between Docker and Podman is more than a simple technology decision; it shapes how AI teams develop, deploy, and scale machine learning systems in production environments.

Understanding Containerization in AI Context

Artificial intelligence applications present unique containerization challenges that extend far beyond traditional web application deployment scenarios. Machine learning workloads require specialized hardware access, particularly for GPU acceleration, extensive memory allocation for large language models, and sophisticated networking configurations for distributed training scenarios. The container runtime must seamlessly handle these requirements while maintaining the isolation and portability benefits that make containerization attractive for AI development workflows.

Modern AI applications often involve complex dependency chains including specific versions of machine learning frameworks, CUDA drivers, mathematical libraries, and custom research code that must work together harmoniously. Containerization provides a solution by encapsulating these dependencies in reproducible environments, but the choice of container runtime significantly impacts performance, security, and operational complexity. Both Docker and Podman offer solutions for AI containerization, but their architectural differences create distinct advantages and limitations for machine learning use cases.

The rapid evolution of AI hardware, from specialized inference chips to high-memory systems designed for large language models, requires container runtimes that can adapt to changing hardware landscapes while maintaining consistency across development and production environments. This adaptability becomes crucial as organizations scale from experimental research environments to production AI services serving millions of users.

Docker: The Established Leader

Docker revolutionized software deployment by popularizing containerization technology and establishing many of the standards that define modern container ecosystems. For AI applications, Docker provides mature tooling, extensive community support, and battle-tested integration with machine learning frameworks and cloud platforms. The Docker ecosystem includes specialized images optimized for AI workloads, pre-configured with popular frameworks like TensorFlow, PyTorch, and CUDA runtime environments that significantly reduce setup complexity for machine learning teams.

The Docker Hub registry hosts thousands of AI-specific container images maintained by framework developers, hardware manufacturers, and the broader community. These images provide starting points for AI development that include optimized configurations for specific hardware architectures, pre-installed development tools, and carefully tuned runtime parameters that maximize performance for machine learning workloads. This ecosystem maturity translates into faster development cycles and reduced operational overhead for organizations adopting containerized AI workflows.

Because Docker-built images run unchanged on orchestration platforms like Kubernetes, Docker has enabled sophisticated AI deployment patterns including auto-scaling inference services, distributed training clusters, and multi-tenant research environments. The extensive documentation and community knowledge base around Docker deployment patterns provides AI teams with proven solutions for complex scenarios like A/B testing machine learning models, implementing canary deployments for AI services, and managing resource allocation in mixed CPU/GPU clusters.

Podman: The Secure Alternative

Podman emerged as a response to architectural and security concerns inherent in Docker’s design, offering a daemon-free approach to container management that addresses many enterprise security requirements. For AI applications, Podman’s rootless execution model provides significant security advantages, particularly important when dealing with sensitive training data or proprietary machine learning models that require strict access controls and audit trails.

The daemon-free architecture eliminates the single point of failure and potential security vulnerability represented by Docker’s persistent daemon process. This design choice proves particularly valuable in AI environments where long-running training jobs and inference services require maximum reliability and security isolation. Podman’s ability to run containers without elevated privileges reduces the attack surface for AI workloads, and because Podman uses standard OCI images and builds Dockerfiles with `podman build` (backed by Buildah), existing Docker images and most Dockerfiles work without changes.

Podman’s integration with systemd enables sophisticated process management capabilities that benefit AI workloads requiring precise resource control and monitoring. Since Podman 4.4, Quadlet turns declarative `.container` files into systemd services (the older `podman generate systemd` command still works but is deprecated). This facilitates integration with existing enterprise infrastructure management tools and provides granular control over resource allocation, logging, and restart policies that are crucial for production AI services.
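A minimal sketch of the Quadlet approach, assuming Podman 4.4 or later: the Python function below renders a `.container` unit for a hypothetical inference service. The image name, model directory, port, and service name are placeholders, and the `AddDevice=` line assumes an NVIDIA CDI specification has already been generated on the host.

```python
from typing import Optional


def render_quadlet(image: str, name: str, model_dir: str, port: int,
                   gpu_device: Optional[str] = None) -> str:
    """Render a Quadlet .container unit (Podman >= 4.4) for a long-running service.

    All arguments are illustrative placeholders, not values from a real deployment.
    """
    lines = [
        "[Unit]",
        f"Description={name} inference service",
        "",
        "[Container]",
        f"Image={image}",
        f"ContainerName={name}",
        # Mount model weights read-only so the service cannot modify them.
        f"Volume={model_dir}:/models:ro",
        f"PublishPort={port}:{port}",
    ]
    if gpu_device:
        # Assumed CDI device name such as "nvidia.com/gpu=all", passed through as --device.
        lines.append(f"AddDevice={gpu_device}")
    lines += [
        "",
        "[Service]",
        # Let systemd restart the service if the inference process crashes.
        "Restart=always",
        "",
        "[Install]",
        "WantedBy=default.target",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_quadlet("registry.example.com/llm-server:1.0", "llm-server",
                         "/srv/models", 8080, gpu_device="nvidia.com/gpu=all"))
```

For a rootless setup, saving the output as `~/.config/containers/systemd/llm-server.container` and running `systemctl --user daemon-reload` should make an `llm-server.service` unit available to `systemctl --user start`.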

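Podman’s compatibility with Docker CLI commands and container formats ensures that existing AI development workflows can migrate with minimal disruption: most `docker` commands work unchanged when `podman` is substituted, and Podman can expose a Docker-compatible API socket (`podman system service`) for tools that talk to the Docker API directly. This compatibility extends to container registries, allowing teams to leverage existing investments in Docker-based CI/CD pipelines while gaining the security and architectural benefits of Podman’s design approach.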
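Because the command-line interfaces overlap so heavily, tooling can often treat the engine name as a parameter. The sketch below assumes only that `podman` or `docker` is on `PATH` and uses `run --rm -e`, flags both CLIs accept; the helper names are hypothetical, not part of either project.

```python
import shutil
from typing import Dict, List, Optional


def pick_engine(preferred: str = "podman") -> Optional[str]:
    """Return the first container CLI found on PATH, trying `preferred` first."""
    fallback = "docker" if preferred == "podman" else "podman"
    for candidate in (preferred, fallback):
        if shutil.which(candidate):
            return candidate
    return None


def run_argv(engine: str, image: str, command: List[str],
             env: Optional[Dict[str, str]] = None) -> List[str]:
    """Build a `run` command line that is valid for both Docker and Podman."""
    argv = [engine, "run", "--rm"]
    # Sort environment variables so the generated command is deterministic.
    for key, value in sorted((env or {}).items()):
        argv += ["-e", f"{key}={value}"]
    return argv + [image] + list(command)


if __name__ == "__main__":
    engine = pick_engine()
    if engine:
        print(" ".join(run_argv(engine, "docker.io/library/python:3.11-slim",
                                ["python", "-c", "import sys; print(sys.version)"])))
```

Only the first element of the generated command differs between engines, which is the property that lets CI scripts switch runtimes with a single variable.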

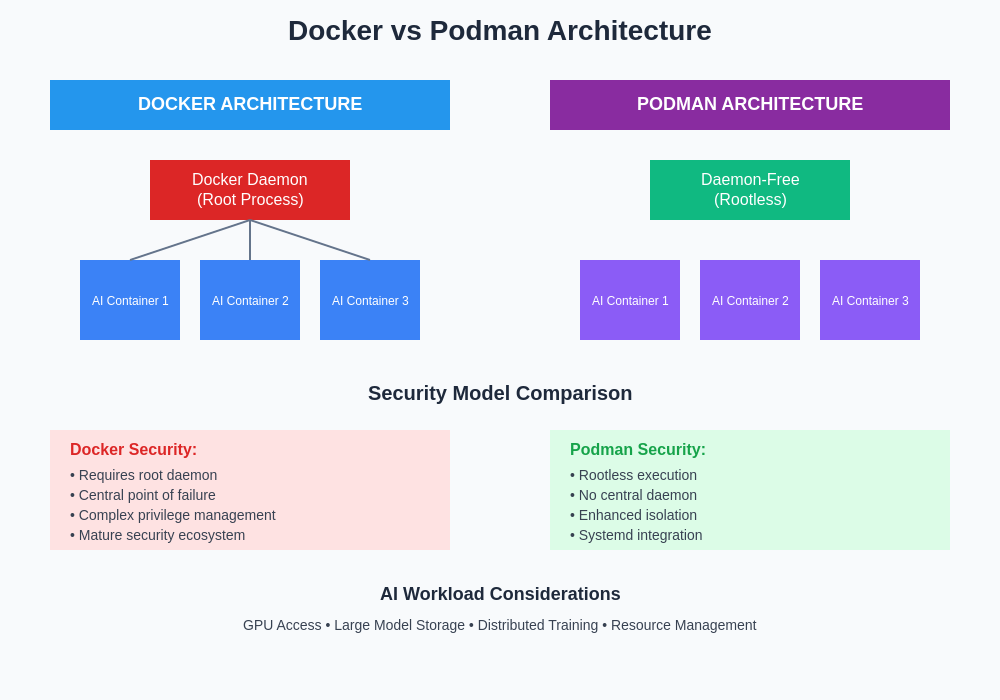

The architectural differences between Docker and Podman create distinct implications for AI workload management, security models, and operational characteristics. Understanding these fundamental design differences helps organizations make informed decisions based on their specific AI infrastructure requirements and security priorities.

Architecture and Security Comparison

At the core of Docker’s design is a persistent daemon (`dockerd`) that centralizes container management and, in a default installation, runs as root. Any user who can reach its socket effectively holds root privileges on the host, so the daemon is a potential security bottleneck that must be carefully managed in AI environments handling sensitive data or proprietary algorithms. Docker has supported a rootless mode since version 20.10, but it has to be set up explicitly.
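One way to check which mode a host actually uses is to ask each engine. The sketch below assumes that `docker info --format '{{json .SecurityOptions}}'` reports a `name=rootless` entry when the daemon runs rootless, and that `podman info --format '{{.Host.Security.Rootless}}'` prints `true` or `false`. The parsing functions are kept separate from the subprocess call so they can be tested without either engine installed.

```python
import json
import subprocess


def docker_is_rootless(security_options_json: str) -> bool:
    """Parse `docker info --format '{{json .SecurityOptions}}'` output."""
    options = json.loads(security_options_json) or []
    # Entries look like "name=seccomp,profile=builtin"; rootless mode adds "name=rootless".
    return any("name=rootless" in entry.split(",") for entry in options)


def podman_is_rootless(rootless_field: str) -> bool:
    """Parse `podman info --format '{{.Host.Security.Rootless}}'` output."""
    return rootless_field.strip().lower() == "true"


def probe(engine: str) -> bool:
    """Query the local engine; raises if the CLI is missing or the call fails."""
    if engine == "docker":
        fmt, parse = "{{json .SecurityOptions}}", docker_is_rootless
    else:
        fmt, parse = "{{.Host.Security.Rootless}}", podman_is_rootless
    out = subprocess.run([engine, "info", "--format", fmt],
                         capture_output=True, text=True, check=True).stdout
    return parse(out)
```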

Podman’s daemon-free approach instead uses a fork-exec model: each container runs as a child of the Podman command that started it, supervised by a small `conmon` monitor process, so there is no central service holding privileges on behalf of every user. This enables fine-grained security controls that align better with enterprise security policies. The architecture proves particularly valuable for multi-tenant AI research environments where different teams or projects require isolated container execution with distinct security boundaries and resource allocations.

The rootless execution capabilities of Podman address critical security concerns in AI environments where containers may process confidential training data or execute proprietary machine learning algorithms. Rootless Podman maps the container’s root user onto an unprivileged host user through user namespaces, so a container breakout lands in an unprivileged account rather than in root. GPU access works in this mode, although rootless networking relies on a user-mode network stack (slirp4netns, or pasta by default since Podman 5) and cannot bind ports below 1024 without extra host configuration.
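Rootless Podman draws the IDs it maps into containers from the subordinate ranges assigned to the user in `/etc/subuid` (and `/etc/subgid` for groups). The sketch below parses that `user:first_id:count` format and checks for 65536 IDs, a common allocation default that we assume is sufficient for typical images; it matches entries by username only, although the files may also list numeric UIDs. On a live system, `podman unshare cat /proc/self/uid_map` shows the mapping Podman actually applies.

```python
from typing import List, Tuple


def subordinate_ranges(subuid_text: str, user: str) -> List[Tuple[int, int]]:
    """Return (first_id, count) ranges granted to `user` in /etc/subuid-style text."""
    ranges = []
    for raw in subuid_text.splitlines():
        line = raw.strip()
        # Skip blank lines and, defensively, anything that looks like a comment.
        if not line or line.startswith("#"):
            continue
        name, first, count = line.split(":")
        if name == user:
            ranges.append((int(first), int(count)))
    return ranges


def has_enough_ids(ranges: List[Tuple[int, int]], needed: int = 65536) -> bool:
    """Check whether the user owns at least `needed` subordinate IDs in total."""
    return sum(count for _, count in ranges) >= needed
```

Typical use would be `subordinate_ranges(open("/etc/subuid").read(), getpass.getuser())` on the host where rootless containers will run.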

The security model differences extend to image management and registry interactions. Podman enforces per-registry signature policies configured in `/etc/containers/policy.json`, supporting both GPG (simple signing) and sigstore signatures, while Docker offers Docker Content Trust, enabled with `DOCKER_CONTENT_TRUST=1`. Signature enforcement proves crucial when deploying AI models in regulated industries or environments with strict compliance requirements, and becomes more important as AI applications increasingly handle sensitive personal data or operate in critical infrastructure contexts.
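As an illustration, the sketch below builds a `policy.json` document that requires sigstore signatures for one hypothetical model-serving repository while leaving other registries on a permissive default. The registry scope and public-key path are placeholders, and the structure follows our reading of the `containers-policy.json` format; a stricter deployment would set the default to `reject`.

```python
import json


def signature_policy(scope: str, public_key_path: str) -> dict:
    """Build a containers-policy.json document requiring sigstore signatures for one scope.

    Images from other registries fall back to the permissive default entry.
    """
    return {
        "default": [{"type": "insecureAcceptAnything"}],
        "transports": {
            "docker": {
                # The scope may be a registry host, a namespace, or a single repository.
                scope: [{"type": "sigstoreSigned", "keyPath": public_key_path}],
            }
        },
    }


if __name__ == "__main__":
    policy = signature_policy("registry.example.com/ml-models",
                              "/etc/pki/containers/ml-models.pub")
    print(json.dumps(policy, indent=2))
```

As we understand the containers-registries.d documentation, Podman also needs `use-sigstore-attachments: true` configured for that registry before it looks for sigstore signatures.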

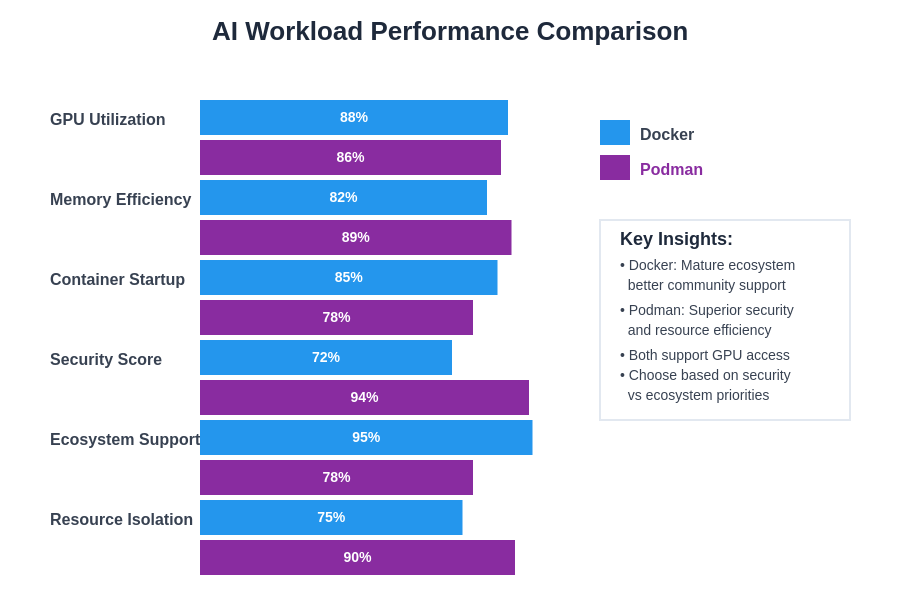

Performance Characteristics for AI Workloads

Performance considerations for AI containerization extend beyond simple CPU and memory metrics to encompass GPU utilization efficiency, I/O throughput for large dataset access, and network performance for distributed training scenarios. Docker’s mature ecosystem includes extensive optimization work specifically targeting AI workloads, with specialized runtime configurations and image optimizations that maximize performance for machine learning frameworks and GPU acceleration libraries.

The overhead characteristics of each platform impact AI workload performance differently depending on the specific use case and deployment pattern. Docker’s daemon introduces minimal runtime overhead for most AI applications, and its local layer cache and BuildKit build cache shorten rebuilds and startup for frequently used machine learning container images; Podman and Buildah maintain a comparable local layer cache. These caching mechanisms prove particularly valuable in development environments where data scientists frequently iterate on container configurations and dependencies.

Podman’s daemon-free architecture eliminates certain sources of performance overhead while introducing different characteristics around container startup and resource management. For AI workloads involving long-running training processes, these differences typically prove negligible, but they can impact scenarios involving frequent container creation and destruction such as hyperparameter optimization workflows or dynamic scaling of inference services.
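Whether startup differences matter for a given hyperparameter sweep is easy to measure locally. The harness below is a sketch that times repeated short-lived `run --rm <image> true` invocations; the `runner` parameter exists only so the function can be tested without an engine. Pulling the image beforehand (for example `podman pull docker.io/library/alpine:3.20`) keeps registry download time out of the numbers.

```python
import statistics
import subprocess
import time
from typing import Callable, Dict, List, Sequence


def time_startups(argv: Sequence[str], runs: int = 10,
                  runner: Callable[..., object] = subprocess.run) -> List[float]:
    """Run a short-lived container `runs` times and return wall-clock durations in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        # check=True makes a failed run raise instead of producing a misleadingly fast timing.
        runner(list(argv), check=True, capture_output=True)
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations: List[float]) -> Dict[str, float]:
    """The median is less sensitive than the mean to a single slow cold start."""
    return {"median_s": statistics.median(durations), "max_s": max(durations)}
```

Calling `summarize(time_startups(["podman", "run", "--rm", "docker.io/library/alpine:3.20", "true"]))` and the same with `docker` gives a like-for-like comparison on one host.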

GPU Support and Hardware Integration

Graphics processing unit support represents one of the most critical considerations for AI containerization, as modern machine learning workloads rely heavily on GPU acceleration for both training and inference operations. Docker has established comprehensive GPU support through the NVIDIA Container Toolkit and, for AMD GPUs, ROCm device passthrough, providing mature tooling for GPU resource allocation, monitoring, and optimization within containerized AI applications.

The NVIDIA Container Toolkit lets Docker expose all GPUs, or a chosen subset, to each container and injects the host’s driver libraries when the container starts, so machine learning workloads sharing the same physical hardware can be pinned to separate devices. Multi-GPU systems are supported directly, while libraries such as cuDNN and TensorRT, essential for high-performance AI applications, are typically shipped inside the container image, for example in NVIDIA’s NGC framework images. The maturity of this ecosystem translates into reliable GPU support for production AI deployments.
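The two Docker GPU paths translate into different `docker run` flags. The sketch below assumes the NVIDIA Container Toolkit is installed for the `--gpus` path and that ROCm containers receive `/dev/kfd` and `/dev/dri` as plain devices plus the `video` group; the CUDA image tag in the example is illustrative, so check the registry for current tags.

```python
from typing import List


def docker_gpu_argv(image: str, command: List[str], vendor: str = "nvidia",
                    gpus: str = "all") -> List[str]:
    """Build a `docker run` command that exposes GPUs to one container."""
    if vendor == "nvidia":
        # Requires the NVIDIA Container Toolkit. `gpus` may be "all", a count such as "2",
        # or a device list written as '"device=0,1"' (the inner quotes are part of the value).
        flags = ["--gpus", gpus]
    elif vendor == "amd":
        # ROCm containers get the kernel driver interfaces as device nodes.
        flags = ["--device=/dev/kfd", "--device=/dev/dri", "--group-add", "video"]
    else:
        raise ValueError(f"unsupported GPU vendor: {vendor}")
    return ["docker", "run", "--rm", *flags, image, *command]


if __name__ == "__main__":
    # Example tag only; any CUDA base image that ships nvidia-smi should behave the same.
    print(docker_gpu_argv("nvidia/cuda:12.4.1-base-ubuntu22.04",
                          ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv"]))
```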

Podman’s GPU support has evolved significantly and now covers most AI use cases while preserving its security and architectural advantages. For NVIDIA GPUs the recommended path is the Container Device Interface (CDI): the `nvidia-ctk` tool from the NVIDIA Container Toolkit generates a CDI specification, and Podman then requests devices by name (for example `--device nvidia.com/gpu=all`). This works within Podman’s rootless execution model, providing secure GPU acceleration for AI workloads without compromising the benefits of daemon-free container management.
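The CDI workflow amounts to a one-time host step and a per-container flag. This sketch builds both command lines; the spec path `/etc/cdi/nvidia.yaml` and the `label=disable` option follow NVIDIA’s Podman instructions as we understand them, and `nvidia-ctk cdi list` shows the device names actually available on a given host (for example `nvidia.com/gpu=0`).

```python
from typing import List


def cdi_generate_argv(output: str = "/etc/cdi/nvidia.yaml") -> List[str]:
    """One-time host setup: write a CDI spec describing the NVIDIA GPUs (usually run as root)."""
    return ["nvidia-ctk", "cdi", "generate", f"--output={output}"]


def podman_gpu_argv(image: str, command: List[str],
                    device: str = "nvidia.com/gpu=all") -> List[str]:
    """Build a `podman run` command that requests GPUs by CDI device name."""
    return ["podman", "run", "--rm",
            "--device", device,
            # On SELinux hosts, disabling label separation lets the container reach the devices.
            "--security-opt=label=disable",
            image, *command]
```

Compared with Docker’s `--gpus`, the CDI name is just another `--device` value, which is why it composes with rootless mode and with Quadlet’s `AddDevice=` key.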

The hardware integration capabilities extend beyond GPU support to encompass specialized AI accelerators, high-bandwidth memory systems, and custom networking hardware that modern AI infrastructure increasingly requires. Both platforms provide mechanisms for exposing these specialized resources to containerized applications, but their approaches differ in terms of security models and administrative overhead.

Container Orchestration and Scaling

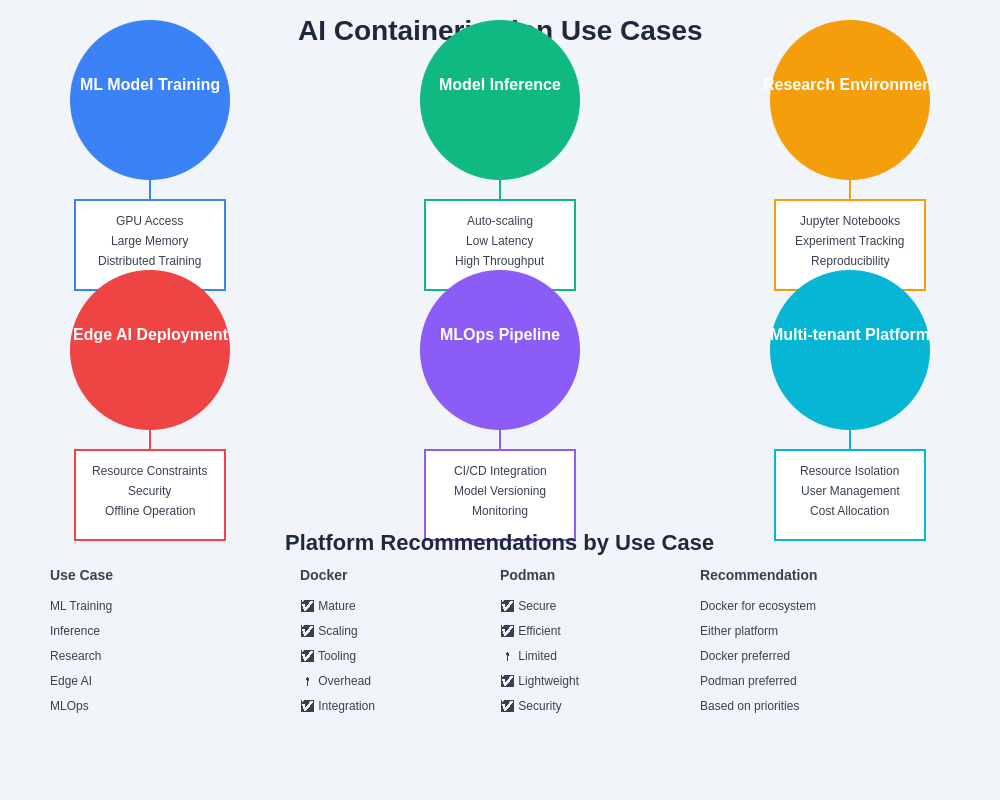

Container orchestration becomes crucial for AI applications that require dynamic scaling, distributed training, or sophisticated resource management across heterogeneous hardware environments. Docker-built images have been the standard input to Kubernetes since its early days (Kubernetes now runs them through CRI runtimes such as containerd and CRI-O rather than Docker Engine itself), and this pairing has established proven patterns for AI workload orchestration including job scheduling for training pipelines, auto-scaling inference services, and resource quota management for multi-tenant AI platforms.
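Job scheduling and quota management for GPUs look roughly like the following on Kubernetes. This sketch assumes the NVIDIA device plugin is installed so that `nvidia.com/gpu` is a schedulable extended resource; the namespace, image, and job names are hypothetical.

```python
import json
from typing import Any, Dict, List


def training_job(name: str, image: str, command: List[str], gpus: int = 1,
                 namespace: str = "ml-research") -> Dict[str, Any]:
    """Kubernetes batch/v1 Job that requests whole GPUs through the device plugin."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "backoffLimit": 2,  # retry a failed training pod at most twice
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [{
                        "name": "trainer",
                        "image": image,
                        "command": command,
                        # Extended resources such as nvidia.com/gpu are requested via limits.
                        "resources": {"limits": {"nvidia.com/gpu": gpus}},
                    }],
                }
            },
        },
    }


def gpu_quota(namespace: str, max_gpus: int) -> Dict[str, Any]:
    """ResourceQuota capping the GPUs that all pods in one team's namespace may request."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "gpu-quota", "namespace": namespace},
        "spec": {"hard": {"requests.nvidia.com/gpu": str(max_gpus)}},
    }


if __name__ == "__main__":
    # kubectl accepts JSON as well as YAML, e.g. `python manifests.py | kubectl apply -f -`.
    items = [gpu_quota("ml-research", 8),
             training_job("resnet-train", "registry.example.com/train:1.0",
                          ["python", "train.py"], gpus=2)]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```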

The Kubernetes ecosystem includes specialized operators and custom resource definitions designed specifically for machine learning workloads, such as Kubeflow for ML pipeline management and various training operators for distributed deep learning frameworks. These tools consume standard OCI container images, including those built with Docker, to provide sophisticated AI workflow management capabilities that scale from experimental research to production deployment scenarios.

Podman is not itself a Kubernetes node runtime, but its images are OCI-compliant and run unchanged on Kubernetes, and CRI-O, a widely used Kubernetes runtime, shares Podman’s underlying container libraries. Podman can also export local containers and pods as Kubernetes YAML (`podman kube generate`) and run Kubernetes manifests locally (`podman kube play`), which eases moving AI services between a workstation and a cluster while keeping rootless, daemon-free execution on the development side. This compatibility extends to existing Kubernetes-based AI platforms and tooling.
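A round trip between a local Podman setup and Kubernetes YAML can be scripted with two commands. The sketch assumes a Podman release that uses the `podman kube ...` spelling (older releases used `podman generate kube` and `podman play kube`); the container and file names are hypothetical.

```python
from typing import List


def kube_generate_argv(container_or_pod: str, output_path: str) -> List[str]:
    """Export an existing local container or pod as Kubernetes YAML."""
    return ["podman", "kube", "generate", "--filename", output_path, container_or_pod]


def kube_play_argv(manifest_path: str, down: bool = False) -> List[str]:
    """Create (or, with down=True, tear down) the pods described in a manifest."""
    argv = ["podman", "kube", "play"]
    if down:
        argv.append("--down")
    return argv + [manifest_path]
```

The exported file can then be reviewed and applied to a cluster with `kubectl apply -f`, though cluster-specific settings such as GPU limits usually need to be added by hand.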

The orchestration capabilities extend to specialized AI scenarios including federated learning deployments, edge AI inference, and hybrid cloud training scenarios where containers must move seamlessly between different infrastructure environments while maintaining consistent behavior and performance characteristics.

Development Workflow Integration

The integration of containerization platforms with AI development workflows significantly impacts productivity and iteration speed for machine learning teams. Docker’s extensive integration with development tools, IDEs, and CI/CD platforms provides mature support for containerized AI development including integrated debugging, live code reloading, and seamless transitions from development to production environments.

Popular AI development environments like Jupyter notebooks, VS Code with AI extensions, and specialized ML development platforms provide native Docker integration that enables data scientists and ML engineers to work within containerized environments without sacrificing development experience or debugging capabilities. This integration reduces the friction between local development and production deployment while ensuring consistency across different development team members.

Podman’s development integration has matured significantly, providing equivalent functionality for most AI development scenarios while enabling teams to benefit from improved security and resource isolation during development phases. The Docker CLI compatibility ensures that existing development workflows and tooling integrate seamlessly with Podman, reducing migration overhead for teams considering a platform transition.
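A common containerized notebook setup shows where the two engines differ in day-to-day development. The sketch assumes a hypothetical image with JupyterLab installed; `:Z` relabels the mount on SELinux-enforcing hosts, and `--userns=keep-id` is a Podman-only flag that keeps files written to the project directory owned by the host user.

```python
from typing import List


def notebook_argv(engine: str, image: str, workdir: str, port: int = 8888,
                  selinux: bool = False) -> List[str]:
    """Run JupyterLab in a container with the project directory mounted at /workspace."""
    mount = f"{workdir}:/workspace"
    if selinux:
        # ":Z" relabels the directory so an SELinux-enforcing host allows access.
        mount += ":Z"
    argv = [engine, "run", "--rm", "-it",
            # Publish on localhost only so the notebook is not exposed to the network.
            "-p", f"127.0.0.1:{port}:8888",
            "-v", mount, "-w", "/workspace"]
    if engine == "podman":
        # Podman-only: map the host user to the same UID inside the container.
        argv.append("--userns=keep-id")
    return argv + [image, "jupyter", "lab", "--ip=0.0.0.0", "--no-browser"]
```

Everything except the user-namespace flag is shared, so the same helper serves teams that have not yet standardized on one engine.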

The development workflow considerations extend to collaborative aspects of AI development including shared model registries, experiment tracking integration, and version control for both code and trained models. Both platforms provide mechanisms for integrating with popular MLOps tools and platforms that are essential for modern AI development practices.

Deployment and Operations Considerations

Production deployment of containerized AI applications requires sophisticated operational capabilities including monitoring, logging, security scanning, and lifecycle management that extend beyond traditional application deployment scenarios. Docker’s mature operational ecosystem, much of it third-party tooling built around Docker images, covers AI-specific operational requirements including model versioning, A/B testing infrastructure, and performance monitoring for inference services.

The operational complexity of AI applications often involves managing large container images containing multiple gigabytes of machine learning frameworks and model data, requiring efficient image distribution and caching strategies that minimize deployment times and resource utilization. Docker’s layer caching and distribution mechanisms provide proven solutions for these challenges while integrating with container registries and content delivery networks.
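Before optimizing distribution, it helps to know how large each image is and how many layers it carries. Both `docker image inspect` and `podman image inspect` print a JSON array whose objects include `RepoTags`, `Size` (in bytes), and `RootFS.Layers`; the sketch below assumes those field names and summarizes them.

```python
import json
from typing import Any, Dict, List


def summarize_images(inspect_json: str) -> List[Dict[str, Any]]:
    """Summarize `docker image inspect` / `podman image inspect` JSON output."""
    summary = []
    for image in json.loads(inspect_json):
        summary.append({
            "tags": image.get("RepoTags") or [],
            # "Size" is reported in bytes; convert to decimal gigabytes for readability.
            "size_gb": round(image["Size"] / 1e9, 2),
            # Each layer is stored and transferred separately, so shared base layers are reused.
            "layers": len(image.get("RootFS", {}).get("Layers", [])),
        })
    return summary
```

Feeding it the output of `podman image inspect <image>` for a training image and its slimmer inference counterpart makes the payoff of multi-stage builds concrete.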

Podman’s operational characteristics offer distinct advantages for certain deployment scenarios, particularly those requiring enhanced security isolation or compliance with strict regulatory requirements. The ability to run production workloads without daemon processes or root privileges simplifies security auditing and reduces the attack surface for production AI services handling sensitive data.

The operational considerations encompass backup and disaster recovery strategies for AI applications, where container state management and persistent storage integration become crucial for maintaining business continuity. Both platforms provide mechanisms for managing persistent storage and backup procedures, but their approaches differ in terms of complexity and security models.
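For named volumes holding checkpoints or datasets, one engine-neutral backup pattern is a throwaway helper container that archives the volume into a host directory. The sketch below assumes a small helper image with `tar` (Alpine is used as an example); Podman additionally provides `podman volume export` and `podman volume import` for the same purpose.

```python
from typing import List

HELPER_IMAGE = "docker.io/library/alpine:3.20"  # example; any small image with tar works


def volume_backup_argv(engine: str, volume: str, backup_dir: str,
                       archive: str = "volume-backup.tar.gz") -> List[str]:
    """Archive a named volume into backup_dir using a throwaway helper container."""
    return [engine, "run", "--rm",
            "-v", f"{volume}:/data:ro",       # source volume, mounted read-only
            "-v", f"{backup_dir}:/backup",    # host directory that receives the archive
            HELPER_IMAGE, "tar", "czf", f"/backup/{archive}", "-C", "/data", "."]


def volume_restore_argv(engine: str, volume: str, backup_dir: str,
                        archive: str = "volume-backup.tar.gz") -> List[str]:
    """Unpack a previously created archive into a (new or existing) named volume."""
    return [engine, "run", "--rm",
            "-v", f"{volume}:/data",
            "-v", f"{backup_dir}:/backup:ro",
            HELPER_IMAGE, "tar", "xzf", f"/backup/{archive}", "-C", "/data"]
```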

Security Implications for AI Applications

Security considerations for AI containerization extend beyond traditional application security to encompass model protection, training data confidentiality, and intellectual property safeguarding that are crucial for commercial AI applications. The security model differences between Docker and Podman create distinct implications for these AI-specific security requirements.

Docker’s security model requires careful configuration and monitoring to ensure appropriate isolation between containers and protection of sensitive AI workloads. The daemon-based architecture necessitates robust access controls and audit logging to maintain security boundaries; in particular, membership in the `docker` group grants access to the daemon socket and is effectively root-equivalent, which matters when multiple AI projects or teams share the same container infrastructure.

Podman’s rootless execution model provides inherent security advantages for AI workloads by eliminating many potential privilege escalation vectors and reducing the impact of container security vulnerabilities. This approach proves particularly valuable for AI research environments where experimental code and untested algorithms require execution with minimal risk to the broader system.
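Rootless execution can be combined with per-container restrictions for experimental code. The flags in this sketch are accepted by both Docker and Podman; the process limit of 512 and the read-only code mount are illustrative choices rather than recommended values.

```python
from typing import List


def sandboxed_argv(engine: str, image: str, command: List[str], code_dir: str) -> List[str]:
    """Run untrusted experimental code with a reduced attack surface."""
    return [engine, "run", "--rm",
            "--cap-drop=ALL",                     # drop every Linux capability
            "--security-opt=no-new-privileges",   # block privilege gains via setuid binaries
            "--read-only",                        # immutable root filesystem...
            "--tmpfs", "/tmp",                    # ...with an in-memory scratch directory
            "--network=none",                     # no network access at all
            "--pids-limit=512",                   # contain runaway process creation
            "-v", f"{code_dir}:/work:ro",         # experiment code, mounted read-only
            "-w", "/work",
            image, *command]
```

Under rootless Podman these restrictions stack on top of the user-namespace mapping, so a compromised process has very little to work with.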

The security implications encompass compliance requirements for AI applications operating in regulated industries, where container security, audit trails, and data protection mechanisms must meet specific regulatory standards. Both platforms provide capabilities for meeting these requirements, but their approaches differ in terms of implementation complexity and inherent security characteristics.

Cost and Resource Efficiency

The resource efficiency characteristics of containerization platforms directly impact the operational costs of AI infrastructure, particularly for organizations running large-scale machine learning workloads or maintaining extensive AI development environments. Docker’s resource management builds on Linux cgroups, providing per-container CPU and memory limits, and lets each container claim specific GPUs; partitioning a single GPU’s memory, however, relies on NVIDIA features such as Multi-Instance GPU (MIG) rather than on the container engine.

Container density and resource utilization efficiency become crucial factors for organizations seeking to maximize the return on investment in expensive AI hardware including high-end GPUs and specialized AI accelerators. The overhead characteristics of each platform impact the number of concurrent AI workloads that can efficiently share hardware resources while maintaining acceptable performance levels.
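Packing several workloads onto one host starts with explicit limits. The sketch below uses flags common to Docker and Podman; the 8 GB shared-memory default is an assumption sized for PyTorch `DataLoader` workers, which pass batches through `/dev/shm` and often outgrow the 64 MB default. Rootless Podman can only enforce these limits on cgroup v2 hosts.

```python
from typing import List


def limited_run_argv(engine: str, image: str, command: List[str],
                     cpus: float, memory: str, shm_size: str = "8g") -> List[str]:
    """Cap CPU and memory for one workload so several can share a host predictably."""
    return [engine, "run", "--rm",
            f"--cpus={cpus}",            # at most this many CPUs' worth of time
            f"--memory={memory}",        # hard memory limit; exceeding it triggers the OOM killer
            f"--memory-swap={memory}",   # equal to --memory, so no additional swap is allowed
            f"--shm-size={shm_size}",    # larger /dev/shm for data-loader worker processes
            image, *command]
```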

Podman’s resource efficiency benefits from reduced system overhead due to its daemon-free architecture, potentially enabling higher container density for certain AI workload patterns. The elimination of the central daemon reduces memory overhead and simplifies resource accounting, which can translate into cost savings for large-scale AI deployments.

The cost considerations extend to operational overhead including system administration, security management, and troubleshooting complexity that impact the total cost of ownership for containerized AI infrastructure. These operational costs can rival or even exceed the direct hardware and software costs for large AI deployments.

The performance characteristics of Docker and Podman vary significantly across different AI-specific metrics, with each platform demonstrating strengths in particular areas that align with different organizational priorities and use case requirements. This comprehensive comparison helps organizations understand the trade-offs between ecosystem maturity and security-focused architecture.

Integration with AI/ML Ecosystem

The broader AI and machine learning ecosystem integration capabilities significantly influence the practical utility of containerization platforms for modern AI development and deployment workflows. Docker’s extensive integration with popular ML frameworks, cloud AI services, and specialized AI tooling provides comprehensive support for end-to-end AI development pipelines.

Major cloud platforms including AWS SageMaker, Google Cloud Vertex AI (the successor to AI Platform), and Azure Machine Learning accept custom container images alongside pre-configured environments, automated scaling, and managed services that reduce operational complexity for AI teams. This ecosystem integration enables sophisticated deployment patterns including multi-cloud AI services and hybrid on-premises/cloud training scenarios.

The integration extends to specialized AI development tools including experiment tracking platforms like MLflow and Weights & Biases, model serving frameworks such as TensorFlow Serving and Seldon, and automated machine learning platforms that leverage containerization for scalable model training and deployment.

Podman’s ecosystem integration continues to mature, with increasing support from cloud platforms and AI tool providers recognizing the security and architectural advantages of daemon-free container management. The OCI compliance ensures compatibility with existing tooling while enabling organizations to benefit from Podman’s security model.

Future Considerations and Recommendations

The evolution of both containerization platforms continues to address emerging requirements from the rapidly advancing AI field, including support for new hardware architectures, integration with edge computing scenarios, and enhanced security models for sensitive AI workloads. Organizations planning long-term AI infrastructure investments must consider the development trajectories and community momentum behind each platform.

The increasing importance of AI governance, model explainability, and regulatory compliance creates new requirements for container platforms including enhanced audit capabilities, immutable deployment records, and sophisticated access control mechanisms that may influence platform selection decisions.

For organizations beginning their containerization journey for AI applications, Docker’s mature ecosystem and extensive community support provide lower-risk adoption paths with proven solutions for common AI deployment scenarios. The extensive documentation and community knowledge base reduce implementation risks and provide established best practices for AI containerization.

Organizations with specific security requirements, regulatory compliance needs, or architectural preferences that align with Podman’s design philosophy should consider the daemon-free approach, particularly for new AI projects where migration costs are minimal and security benefits are paramount.

The choice between Docker and Podman for AI containerization ultimately depends on organizational priorities including security requirements, operational complexity tolerance, ecosystem integration needs, and long-term strategic technology alignment. Both platforms provide viable solutions for AI containerization, with distinct advantages that align with different organizational contexts and deployment scenarios.

Different AI use cases present unique requirements that may favor one containerization platform over another. This comprehensive analysis of common AI deployment patterns provides guidance for selecting the most appropriate platform based on specific technical requirements, security considerations, and operational constraints.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The comparison between Docker and Podman is based on publicly available information and general industry knowledge. Organizations should conduct their own evaluation and testing to determine the most appropriate containerization platform for their specific AI applications and requirements. Performance characteristics, security implications, and feature availability may vary based on specific use cases, deployment configurations, and organizational contexts.