The artificial intelligence revolution has fundamentally transformed how organizations approach data storage and retrieval, creating unprecedented demands for database technologies that can efficiently handle the diverse, unstructured datasets that power modern machine learning applications. Document databases have emerged as essential infrastructure components for AI workloads, offering the flexibility and scalability required to manage everything from training datasets to real-time inference results. Among the leading contenders in this space, MongoDB and Elasticsearch stand out as two distinctly different yet equally powerful solutions, each bringing unique strengths to the complex landscape of AI data management.

The choice between document database platforms has become increasingly critical as AI applications scale from experimental prototypes to production systems handling millions of documents, complex queries, and real-time analytics workloads that demand both performance and reliability.

Understanding Document Databases in AI Context

Document databases represent a paradigm shift from traditional relational database management systems, offering schema-flexible storage that naturally accommodates the varied and evolving data structures common in artificial intelligence applications. Unlike rigid table-based approaches, document databases store information as semi-structured documents, typically in JSON or BSON (binary JSON) format. These dynamic schemas can adapt to changing AI model requirements with fewer costly migrations or structural modifications.

The inherent flexibility of document databases makes them particularly well-suited for AI applications that must process heterogeneous data sources, including unstructured text, metadata annotations, feature vectors, model outputs, and experimental results. This adaptability becomes crucial when dealing with machine learning pipelines that may require rapid iteration on data structures, addition of new fields for model versioning, or accommodation of different data types as models evolve from research phases to production deployment.

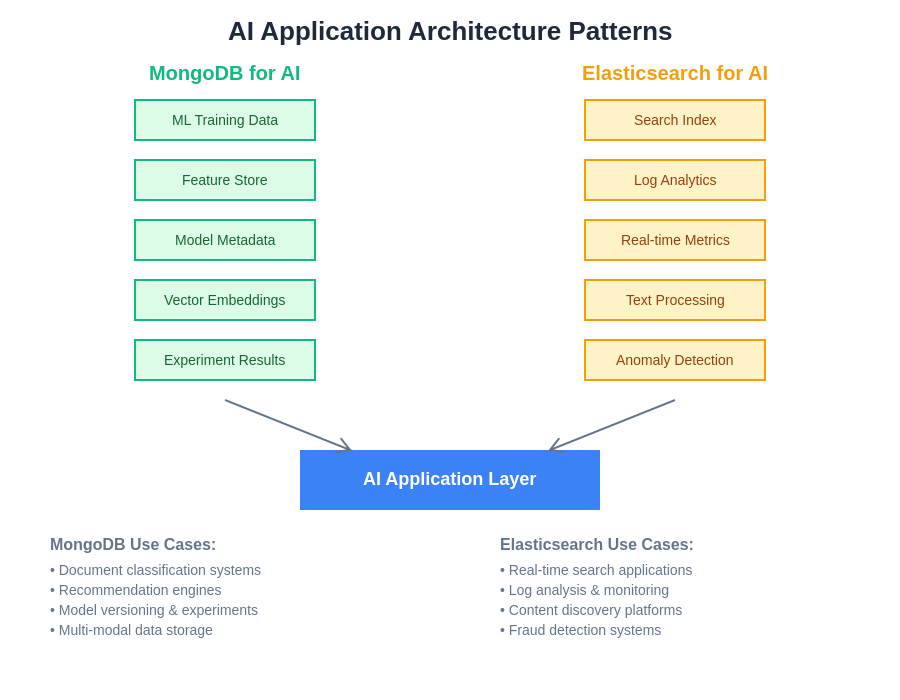

Modern AI applications leverage distinct architectural patterns depending on their primary use cases, with MongoDB excelling in flexible document storage and complex data relationships, while Elasticsearch dominates in search-centric and real-time analytics scenarios.

The scalability characteristics of document databases align well with the rapid growth typical of AI data workloads. As machine learning models become more sophisticated and training datasets grow larger, the ability to scale storage and processing horizontally becomes essential for maintaining performance and cost efficiency across diverse AI use cases.

MongoDB: The General-Purpose Document Champion

MongoDB is widely regarded as the most popular document database. It offers a comprehensive platform that balances ease of use with enterprise-grade functionality for AI applications. Its flexible document model lets developers store complex nested structures, arrays, and varying field types within the same collection. That makes it a good fit for AI applications that must manage diverse data formats, including training examples, model configurations, experimental metadata, and result datasets.

The MongoDB ecosystem provides extensive tooling and integrations that streamline AI development workflows. These include official drivers for languages widely used in machine learning such as Python, aggregation pipelines for data preprocessing, and indexing strategies that optimize query performance across large datasets. The platform’s mature replication (replica sets) and sharding capabilities provide high availability and horizontal scalability. Both are critical for production AI systems that cannot tolerate downtime or performance degradation as data volumes increase.
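The Python function below is a minimal sketch of preprocessing inside the database. It builds an aggregation pipeline that summarizes labeled training examples per label. The collection layout (text, label, and split fields) is a hypothetical assumption. The stage and operator names ($match, $project, $split, $size, $group, $avg, $sort) are standard MongoDB aggregation syntax.

```python
def label_stats_pipeline(split: str) -> list:
    """Aggregation pipeline: count examples and average token length per label.

    Assumes hypothetical documents shaped like {"text": str, "label": str, "split": str}.
    """
    return [
        # Keep only labeled examples from the requested split ($ne: None also excludes missing labels).
        {"$match": {"split": split, "label": {"$ne": None}}},
        # Derive a rough whitespace token count for each example.
        {"$project": {
            "label": 1,
            "tokens": {"$size": {"$split": ["$text", " "]}},
        }},
        # Group by label: number of examples and mean token count.
        {"$group": {
            "_id": "$label",
            "count": {"$sum": 1},
            "avg_tokens": {"$avg": "$tokens"},
        }},
        # Most frequent labels first.
        {"$sort": {"count": -1}},
    ]
```

With PyMongo, the list could be passed straight to an aggregate call, for example `db.training_examples.aggregate(label_stats_pipeline("train"))`. Here `training_examples` is a hypothetical collection name.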

MongoDB’s extensive documentation, community support, and professional services ecosystem provide comprehensive resources for teams implementing AI applications at scale.

MongoDB’s Atlas cloud service offers managed database capabilities specifically designed for modern application development, including AI workloads that require global distribution, automated scaling, and integrated analytics. The platform’s built-in security features, compliance certifications, and enterprise-grade monitoring tools address the stringent requirements of AI applications handling sensitive data or operating in regulated industries.

Elasticsearch: The Search and Analytics Powerhouse

Elasticsearch approaches document storage from a fundamentally different perspective, prioritizing search performance, real-time analytics, and distributed computing capabilities that make it exceptionally well-suited for AI applications requiring fast text search, complex aggregations, and near-real-time data processing. Built on Apache Lucene, Elasticsearch excels at full-text search, faceted navigation, and analytical queries that are common in AI applications dealing with large volumes of textual data, log analysis, and content recommendation systems.

In the platform’s distributed architecture, each index is divided into primary and replica shards, which Elasticsearch allocates and rebalances across nodes automatically. This design performs well for the read-heavy workloads typical of AI inference, where applications must quickly retrieve relevant documents, perform similarity searches, or run complex analytical queries across massive datasets. Elasticsearch’s inverted index maps each term to the documents that contain it. As a result, well-designed queries can often return in under a second even across millions of documents, making it a strong fit for real-time AI applications.
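Here is a deliberately simplified Python sketch of the inverted index idea. It maps each term to the set of document IDs containing it, then answers an AND query by intersecting those sets. Lucene’s real implementation adds text analysis, compressed postings lists, and relevance scoring (BM25 by default). None of that is modeled here.

```python
from collections import defaultdict


def build_inverted_index(docs: dict) -> dict:
    """Map each lowercase whitespace-separated term to the set of doc IDs containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return dict(index)


def search_all_terms(index: dict, query: str) -> set:
    """AND query: return IDs of documents that contain every query term."""
    postings = [index.get(term, set()) for term in query.lower().split()]
    if not postings:
        return set()
    # Intersecting postings sets is the core of boolean retrieval.
    return set.intersection(*postings)


docs = {"d1": "GPU training logs", "d2": "training data drift", "d3": "GPU inference latency"}
idx = build_inverted_index(docs)
print(search_all_terms(idx, "gpu training"))  # {'d1'}
```

The lookup cost depends on the size of the postings for the query terms, not on the total number of documents. That is why this structure scales well for text search.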

Elasticsearch’s machine learning capabilities have evolved significantly. They now include built-in anomaly detection, forecasting, and data frame analytics (outlier detection, regression, and classification) that complement its core search functionality; most of these features require a paid Elastic subscription. Running this analysis directly within the data platform can reduce the complexity of AI architectures. It can also improve performance by avoiding data movement to a separate system.
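The sketch below builds the JSON body for Elasticsearch’s create anomaly detection job API (`PUT _ml/anomaly_detectors/<job_id>`). It assumes a hypothetical metrics index with a `latency_ms` field and an `@timestamp` time field. A real job also needs a datafeed to supply data, and the feature requires an appropriate Elastic subscription.

```python
import json


def anomaly_job_body(field: str, bucket_span: str = "15m",
                     time_field: str = "@timestamp") -> dict:
    """Body for PUT _ml/anomaly_detectors/<job_id>: model the mean of `field` per time bucket."""
    return {
        "description": f"Mean {field} per {bucket_span} bucket",
        "analysis_config": {
            "bucket_span": bucket_span,  # width of each time bucket the model analyzes
            "detectors": [{"function": "mean", "field_name": field}],
        },
        # Tells the job which field holds each record's timestamp.
        "data_description": {"time_field": time_field},
    }


# Print the request body, for example to paste into Kibana Dev Tools.
print(json.dumps(anomaly_job_body("latency_ms"), indent=2))
```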

The Elastic Stack ecosystem, including Logstash for data ingestion, Kibana for visualization, and Beats for data collection, provides a comprehensive platform for AI applications that require end-to-end data processing pipelines, real-time monitoring, and interactive analytics dashboards that support both technical teams and business stakeholders.

Vector Search Capabilities and Semantic Understanding

The emergence of vector embeddings and semantic search has created new requirements for document databases serving AI applications, particularly those involving natural language processing, computer vision, and recommendation systems. Both MongoDB and Elasticsearch have evolved to address these needs, but with different approaches that reflect their core architectural philosophies and target use cases.
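At its core, vector search ranks stored embeddings by their similarity to a query embedding. The brute-force Python sketch below computes exact k-nearest neighbours by cosine similarity over a tiny, made-up corpus of two-dimensional vectors. At scale, both MongoDB Atlas and Elasticsearch rely on approximate indexes such as HNSW graphs rather than scanning every vector like this.

```python
import math


def cosine(a: list, b: list) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def knn(query: list, corpus: dict, k: int) -> list:
    """Exact k-nearest neighbours: score every vector, keep the k most similar IDs."""
    scored = sorted(((cosine(query, vec), doc_id) for doc_id, vec in corpus.items()),
                    reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Illustrative embeddings only; real embeddings have hundreds of dimensions.
corpus = {"cat": [1.0, 0.0], "dog": [0.9, 0.1], "car": [0.0, 1.0]}
print(knn([1.0, 0.0], corpus, k=2))  # ['cat', 'dog']
```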

MongoDB’s vector search capabilities, introduced through Atlas Vector Search, integrate with existing document structures. Organizations can store embeddings alongside traditional document fields and run hybrid searches that combine semantic similarity with conventional filtering criteria through the $vectorSearch aggregation stage. For many workloads, this can simplify AI application architectures by removing the need for a separate vector database while keeping the familiar MongoDB development experience.
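The following Python sketch builds an Atlas Vector Search aggregation pipeline for a hybrid query of this kind. The index name (`embedding_index`), the `embedding`, `category`, and `title` fields, and the default candidate count are hypothetical assumptions. `$vectorSearch` must be the first stage of the pipeline, and any field used in its `filter` must be declared as a filter field in the vector index definition.

```python
from typing import Optional


def vector_search_pipeline(query_vector, category: Optional[str] = None,
                           k: int = 10, num_candidates: int = 100) -> list:
    """Atlas Vector Search pipeline: top-k similar documents, optionally pre-filtered."""
    stage = {
        "index": "embedding_index",        # hypothetical vector index name
        "path": "embedding",               # hypothetical field holding stored vectors
        "queryVector": list(query_vector),
        "numCandidates": num_candidates,   # nearest-neighbour candidates to consider
        "limit": k,                        # documents returned after ranking
    }
    if category is not None:
        # Conventional filter applied during the vector search itself.
        stage["filter"] = {"category": {"$eq": category}}
    return [
        {"$vectorSearch": stage},
        # Keep the title and expose the similarity score computed by the search.
        {"$project": {"_id": 0, "title": 1,
                      "score": {"$meta": "vectorSearchScore"}}},
    ]
```

The pipeline would run like any other aggregation, for example `collection.aggregate(vector_search_pipeline(embedding, category="faq"))` with PyMongo.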

Elasticsearch has incorporated dense vector support through its dense_vector field type and kNN search capabilities, enabling efficient similarity searches across large collections of embedded vectors. The platform’s ability to combine vector similarity with traditional text search and filtering creates powerful hybrid search experiences that are particularly valuable for AI applications requiring nuanced relevance scoring and multi-modal search capabilities.
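In Elasticsearch 8.x, such a setup typically involves two request bodies, sketched below in Python. The first is an index mapping with a `dense_vector` field. The second is a `_search` body that combines a `knn` clause with a full-text `match` query. Field names, the vector dimension, and the candidate multiplier are illustrative assumptions. When both `knn` and `query` are present, Elasticsearch returns documents matched by either and sums their scores.

```python
def embedding_index_mapping(dims: int) -> dict:
    """Body for PUT /<index>: a text field, a keyword filter field, and an indexed vector."""
    return {"mappings": {"properties": {
        "body": {"type": "text"},
        "category": {"type": "keyword"},
        "embedding": {"type": "dense_vector", "dims": dims,
                      "index": True, "similarity": "cosine"},
    }}}


def hybrid_search_body(text: str, query_vector, category: str, k: int = 10) -> dict:
    """Body for POST /<index>/_search combining approximate kNN with a match query."""
    category_filter = {"term": {"category": category}}
    return {
        "knn": {
            "field": "embedding",
            "query_vector": list(query_vector),
            "k": k,
            "num_candidates": 10 * k,   # per-shard candidates: higher improves recall, costs latency
            "filter": category_filter,  # applied during the vector search itself
        },
        "query": {"bool": {
            "must": {"match": {"body": text}},
            "filter": category_filter,  # keep the lexical side consistent with the kNN side
        }},
        "size": k,
        "_source": ["body", "category"],
    }
```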

The integration of vector search capabilities represents a significant evolution in document database functionality, enabling new categories of AI applications.

Performance Characteristics and Optimization Strategies

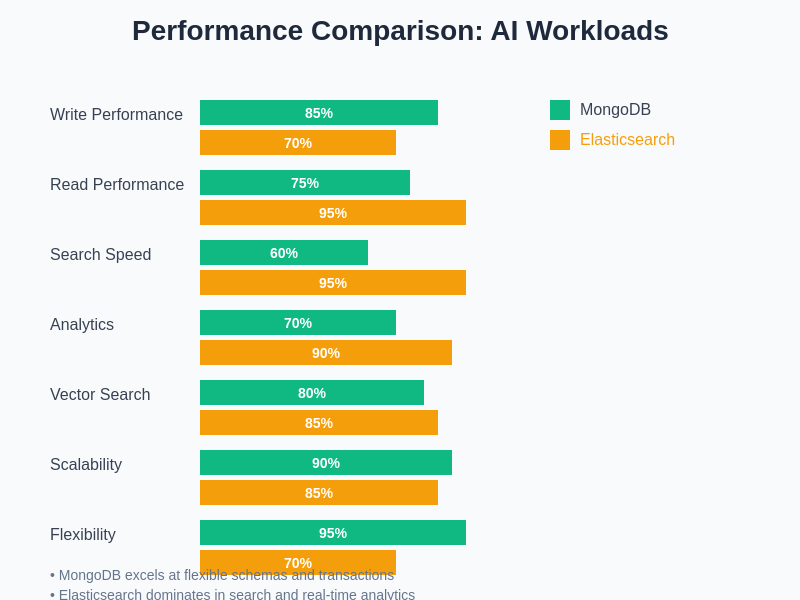

Understanding the performance characteristics of MongoDB and Elasticsearch in AI contexts requires careful consideration of workload patterns, data access requirements, and scaling strategies that differ significantly from traditional web applications. AI workloads often exhibit unique characteristics including large batch processing operations, complex analytical queries, high-volume writes during training data ingestion, and varying read patterns during different phases of model development and deployment.

MongoDB’s performance optimization strategies focus on efficient indexing, query planning, and read preference configuration. Read preferences can, for example, direct analytical reads to secondary replica set members so they do not compete with writes on the primary. Together these measures can substantially improve performance for AI applications. The platform’s aggregation pipeline provides powerful data transformation capabilities, so complex preprocessing can run directly within the database, reducing network overhead and improving overall application performance.
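As an example of index design, the sketch below builds a `createIndexes` command for a hypothetical `predictions` collection. The collection is queried by model version, sorted by time, and filtered by a confidence threshold. The field order follows MongoDB’s commonly cited equality, sort, range (ESR) guideline for compound indexes. The collection and field names are assumptions.

```python
def compound_index_command(collection: str) -> dict:
    """createIndexes command supporting a query such as:
    find({"model_version": "v3", "confidence": {"$gte": 0.9}}).sort("created_at", -1)
    """
    return {
        "createIndexes": collection,  # the command name must be the first key
        "indexes": [{
            # Equality field first, then the sort field, then the range field (ESR).
            "key": {"model_version": 1, "created_at": -1, "confidence": 1},
            "name": "model_version_1_created_at_-1_confidence_1",
        }],
    }
```

With PyMongo, the command could be run via `db.command(compound_index_command("predictions"))`. Running `explain` on the target query would then confirm whether the planner uses the index.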

Elasticsearch’s performance advantages become particularly apparent in scenarios requiring complex search operations, real-time analytics, and high-throughput read operations. The platform’s distributed query execution, sophisticated caching mechanisms, and optimized data structures deliver exceptional performance for AI applications that must search through large volumes of text, perform complex aggregations, or support real-time user interactions.
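On the analytical side, the Python sketch below builds a `_search` body that reports 95th-percentile inference latency per model version over the last hour. The field names (`@timestamp`, `model_version`, `latency_ms`) are hypothetical. Placing the time range in a `bool` filter means it is not scored and is eligible for caching. Rounding the range to the minute (`now-1h/m`) helps keep cached results reusable across requests.

```python
def latency_by_model_body(window: str = "now-1h/m") -> dict:
    """Body for POST /<index>/_search: p95 latency per model version, aggregations only."""
    return {
        "size": 0,  # skip returning individual hits
        "query": {"bool": {"filter": [
            {"range": {"@timestamp": {"gte": window}}},  # filter context: no scoring
        ]}},
        "aggs": {
            "by_model": {
                "terms": {"field": "model_version", "size": 10},  # top 10 versions by doc count
                "aggs": {
                    "p95_latency": {"percentiles": {"field": "latency_ms",
                                                    "percents": [95]}},
                },
            },
        },
    }
```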

Both platforms benefit from careful capacity planning, appropriate hardware selection, and workload-specific configuration optimization. AI applications often require specialized tuning approaches that differ from general-purpose database optimization, including considerations for memory allocation, disk I/O patterns, and network bandwidth requirements that vary significantly across different phases of AI workflows.

Performance characteristics vary significantly between MongoDB and Elasticsearch across different AI workload patterns, with each platform demonstrating distinct advantages in specific use cases and operational scenarios.

Data Modeling and Schema Design for AI Workloads

Effective data modeling for AI applications requires understanding how different document database platforms handle schema evolution, nested structures, and relationship management. The flexibility that makes document databases attractive for AI use cases also creates opportunities for suboptimal designs that can impact performance, maintainability, and system reliability as applications scale.

MongoDB’s document model naturally accommodates the hierarchical and varied data structures common in AI applications, including nested feature representations, model metadata, training configurations, and experimental results. The platform’s schema validation capabilities provide optional structure enforcement that can help maintain data quality while preserving the flexibility required for evolving AI models and datasets.
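Here is a minimal sketch of that optional enforcement, assuming a hypothetical `training_examples` collection. The `create` command below attaches a `$jsonSchema` validator that requires `text` and `label` strings and constrains `embedding` to an array of doubles. Every other field remains free to vary.

```python
def create_validated_collection(name: str) -> dict:
    """create command with a $jsonSchema validator for training examples."""
    return {
        "create": name,
        "validator": {"$jsonSchema": {
            "bsonType": "object",
            "required": ["text", "label"],
            "properties": {
                "text": {"bsonType": "string"},
                "label": {"bsonType": "string"},
                "embedding": {"bsonType": "array", "items": {"bsonType": "double"}},
            },
            # additionalProperties is left unset, so new fields remain allowed.
        }},
        "validationLevel": "moderate",  # updates to already-invalid documents are not checked
        "validationAction": "error",    # reject writes that fail validation
    }
```

With PyMongo, this could be issued as `db.command(create_validated_collection("training_examples"))`.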

Elasticsearch’s mapping system favors more explicit schema definition: once a field’s type has been mapped in an index, changing it generally requires reindexing into a new index. In return, it provides powerful capabilities for handling diverse data types, including nested objects, arrays, and specialized field types optimized for specific use cases. The platform’s dynamic mapping can automatically infer field types, but production AI applications typically benefit from explicit mapping definitions that optimize storage efficiency and query performance.
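An explicit mapping for a hypothetical training-data index might look like the sketch below. Setting `dynamic` to `strict` makes Elasticsearch reject documents with unmapped fields instead of guessing their types. The `nested` type keeps each annotation’s fields together, so queries do not match values drawn from different annotations. All field names are assumptions.

```python
def training_index_body() -> dict:
    """Body for PUT /<index> with an explicit, strict mapping."""
    return {
        "settings": {"number_of_shards": 1},
        "mappings": {
            "dynamic": "strict",  # reject documents containing unmapped fields
            "properties": {
                "text": {"type": "text"},         # analyzed for full-text search
                "label": {"type": "keyword"},     # exact values for filters and aggregations
                "created_at": {"type": "date"},
                "annotations": {
                    "type": "nested",  # each annotation is indexed as its own hidden document
                    "properties": {
                        "annotator": {"type": "keyword"},
                        "score": {"type": "float"},
                    },
                },
            },
        },
    }
```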

The choice of data modeling approach significantly impacts application performance, especially for AI workloads that may require frequent schema evolution, complex nested queries, or efficient aggregation operations across large datasets. Understanding the trade-offs between flexibility and performance optimization becomes crucial for successful AI application development.

Integration with Machine Learning Frameworks and Tools

The ecosystem compatibility and integration capabilities of document databases play a crucial role in AI application development, influencing everything from development velocity to operational complexity. Modern machine learning workflows involve multiple tools, frameworks, and services that must efficiently interact with underlying data storage systems, making seamless integration a critical selection criterion.

MongoDB’s extensive driver ecosystem simplifies AI application development in the languages data teams already use. MongoDB maintains official drivers for Python (PyMongo), Scala, and many other languages, and community-maintained libraries extend support to languages such as R. MongoDB’s aggregation pipeline and built-in data transformation operators reduce the need for external data preprocessing tools in many AI workflows.

Elasticsearch’s RESTful API and official client libraries enable integration with virtually any programming language or framework. For data science workflows in Python, Elastic’s Eland library adds a pandas-like DataFrame interface over Elasticsearch indices. The platform integrates with Apache Spark (through the Elasticsearch for Apache Hadoop connector), Logstash, and other big data processing tools, which supports sophisticated data pipeline architectures for complex AI workflows.
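Much of this pipeline integration ultimately goes through the REST `_bulk` endpoint. Its body is newline-delimited JSON: for each write, an action line followed by the document source. The dependency-free sketch below serializes documents into that format. In practice, the official Python client’s `elasticsearch.helpers.bulk` function handles this, along with chunking and error reporting. The index name and documents here are illustrative.

```python
import json


def to_bulk_ndjson(index: str, docs) -> str:
    """Serialize (doc_id, source) pairs into an NDJSON body for POST /_bulk."""
    lines = []
    for doc_id, source in docs:
        # Action/metadata line, then the document itself on the next line.
        lines.append(json.dumps({"index": {"_index": index, "_id": doc_id}}))
        lines.append(json.dumps(source))
    return "\n".join(lines) + "\n"  # the bulk body must end with a newline


body = to_bulk_ndjson("docs", [("1", {"text": "hello"}), ("2", {"text": "world"})])
print(body)
```

The body would be sent with the `Content-Type: application/x-ndjson` header.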

The choice between platforms often depends on existing technology stacks, team expertise, and integration requirements with other systems in the AI infrastructure. Organizations with significant investments in specific machine learning platforms or cloud services may find one option more naturally compatible with their existing architecture and operational processes.

Scalability and Performance at Enterprise Scale

Enterprise AI applications demand database platforms capable of handling massive data volumes, high throughput requirements, and global distribution patterns that exceed the scale of most traditional applications. The scalability characteristics of MongoDB and Elasticsearch reflect their different architectural approaches and optimization priorities, creating distinct advantages for different types of AI workloads.

MongoDB’s sharding architecture provides transparent horizontal scaling that can accommodate the growth patterns typical in AI applications, where data volumes may increase exponentially as models are trained on larger datasets or as applications capture more user interaction data. The platform’s automatic balancing capabilities and configurable shard key strategies enable fine-tuned control over data distribution and query performance optimization.
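Choosing the shard key is the central decision here. The function below is a sketch that builds the `shardCollection` admin command for a hypothetical `ml.events` namespace. A hashed key spreads monotonically increasing values, such as timestamps or ObjectIds, evenly across shards. A ranged key instead keeps nearby values together, so range queries can target fewer shards.

```python
def shard_collection_command(namespace: str, key_field: str, hashed: bool = True) -> dict:
    """shardCollection admin command with a single-field hashed or ranged shard key."""
    return {
        "shardCollection": namespace,  # "<database>.<collection>"
        # "hashed" distributes writes evenly; 1 keeps ranges of values co-located.
        "key": {key_field: "hashed" if hashed else 1},
    }
```

Against a `mongos` router, this could be run with PyMongo as `client.admin.command(shard_collection_command("ml.events", "user_id"))`, where `user_id` is a hypothetical field.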

Elasticsearch’s distributed computing model excels at handling read-heavy workloads and complex analytical queries across multiple nodes, making it particularly well-suited for AI applications that require real-time search capabilities, dashboard analytics, or interactive data exploration. The platform’s ability to automatically distribute query execution across cluster nodes provides excellent performance scaling for analytical workloads.
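The coordinating step can be sketched in a few lines of Python. In this simplified model of the query phase, each shard returns its own top hits as `(doc_id, score)` pairs, and the coordinating node merges them into the global top results. Any document in the global top `size` must also be in its own shard’s top `size`, so each shard only needs to send that many hits. Real Elasticsearch also handles pagination, tie-breaking, and a separate fetch phase that retrieves the winning documents.

```python
import heapq


def merge_shard_hits(shard_results: list, size: int) -> list:
    """Merge per-shard top hits into the global top `size` hits by descending score."""
    all_hits = (hit for hits in shard_results for hit in hits)
    return heapq.nlargest(size, all_hits, key=lambda hit: hit[1])


# Each inner list is one shard's local top hits (illustrative scores).
shards = [[("a", 3.0), ("b", 1.0)], [("c", 2.5), ("d", 0.5)]]
print(merge_shard_hits(shards, size=3))  # [('a', 3.0), ('c', 2.5), ('b', 1.0)]
```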

Both platforms offer cloud-managed services that simplify scaling operations and provide enterprise-grade reliability, but the optimal choice depends on specific workload characteristics, performance requirements, and operational preferences that vary significantly across different AI use cases and organizational contexts.

Security, Compliance, and Data Governance

AI applications often handle sensitive data including personal information, proprietary algorithms, and confidential business intelligence that requires robust security controls and compliance with regulatory requirements. The security capabilities and governance features of document databases become critical factors in platform selection, particularly for organizations operating in regulated industries or handling sensitive data.

MongoDB provides authentication, role-based access control, encryption in transit (TLS) and at rest, client-side field-level encryption, and audit logging. Native storage-engine encryption at rest and auditing are MongoDB Enterprise and Atlas features rather than part of the Community edition. These controls can support compliance programs for regulations such as GDPR, HIPAA, and SOX. Custom roles scoped to specific databases and collections, combined with read-only views that expose only selected fields, enable fine-grained data protection. This accommodates the complex permission requirements common in AI applications, where different teams may require access to different subsets of data.
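For instance, a team that should only read a feature collection could be given a custom role like the one sketched below. The role, database, and collection names are hypothetical. The command is MongoDB’s `createRole`, and the resulting role would then be granted to users with `grantRolesToUser`.

```python
def read_only_role_command(role: str, db: str, collection: str) -> dict:
    """createRole command granting read-only (find) access to a single collection."""
    return {
        "createRole": role,
        "privileges": [{
            "resource": {"db": db, "collection": collection},
            "actions": ["find"],  # no insert, update, or remove
        }],
        "roles": [],  # inherit no other roles
    }
```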

Elasticsearch’s core security features, including user authentication, role-based access control, and TLS encryption for communications, have been included in the free Basic license since versions 6.8 and 7.1. Field- and document-level security, audit logging, and integration with enterprise identity providers such as LDAP, Active Directory, and SAML require a paid subscription. Together, these capabilities address the stringent security requirements of enterprise AI deployments.
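The body below is a sketch of field- and document-level security for Elasticsearch’s create role API (`PUT /_security/role/<name>`). It grants read access to a hypothetical `training-*` index pattern, exposes only three fields, and restricts visible documents to one team. These controls require a paid Elastic subscription.

```python
def restricted_reader_role(index_pattern: str, team: str) -> dict:
    """Role body: read-only access with field-level and document-level restrictions."""
    return {
        "indices": [{
            "names": [index_pattern],
            "privileges": ["read"],
            "field_security": {"grant": ["text", "label", "team"]},  # only these fields are visible
            "query": {"term": {"team": team}},  # only documents belonging to this team
        }],
    }
```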

The governance and compliance capabilities of both platforms continue to evolve in response to increasing regulatory requirements and security concerns surrounding AI applications. Organizations must carefully evaluate current and future compliance requirements when selecting database platforms that will support AI applications handling sensitive or regulated data.

Cost Considerations and Total Cost of Ownership

Understanding the total cost of ownership for document database platforms in AI contexts requires analysis of multiple factors including licensing costs, infrastructure requirements, operational overhead, and the hidden costs associated with performance optimization, data migration, and specialized expertise requirements. AI workloads often exhibit unique cost patterns that differ significantly from traditional application workloads.

MongoDB’s licensing includes the free Community Server, distributed under the source-available Server Side Public License (SSPL) since 2018, and commercial options such as MongoDB Enterprise Advanced. MongoDB Atlas provides managed cloud services that can significantly reduce operational overhead, with pricing based on cluster tier and feature requirements. The platform’s efficient storage utilization and query optimization capabilities can help control costs in AI applications dealing with large data volumes.

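Elasticsearch is available free of charge alongside commercial subscriptions for advanced features, although its licensing has changed over time. It moved from Apache 2.0 to the SSPL and Elastic License in 2021 and added an AGPLv3 option in 2024. Elastic Cloud provides managed services that simplify deployment and operations. Compression options can help contain storage costs for AI applications that primarily run search and analytical operations rather than frequent updates. Inverted indexes and replica shards do add storage overhead, however, and that overhead belongs in any cost estimate.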

The choice between self-managed and cloud-managed deployments significantly impacts total cost of ownership, with cloud services typically providing better cost predictability and reduced operational overhead at the expense of some control and customization flexibility. Organizations must carefully model their expected usage patterns, growth projections, and operational capabilities when evaluating cost implications.
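One way to start that modeling is a deliberately simple monthly cost function like the Python sketch below, which adds compute, storage, and operations labor. Every input, including the 730-hour month, is a placeholder assumption rather than a real MongoDB Atlas or Elastic Cloud price. The point is to make explicit the trade-off between higher managed-service rates and lower operational hours.

```python
HOURS_PER_MONTH = 730  # approximate hours in a month, a common billing convention


def monthly_cost(nodes: int, node_hourly_usd: float, storage_gb: float,
                 storage_gb_month_usd: float, ops_hours: float,
                 ops_hourly_usd: float) -> float:
    """Rough monthly total: compute + storage + staff time spent operating the cluster."""
    compute = nodes * node_hourly_usd * HOURS_PER_MONTH
    storage = storage_gb * storage_gb_month_usd
    operations = ops_hours * ops_hourly_usd
    return compute + storage + operations


# Placeholder scenarios only: managed (pricier nodes, little ops time) vs self-managed.
managed = monthly_cost(3, 0.50, 1000, 0.10, 5, 100)
self_managed = monthly_cost(3, 0.30, 1000, 0.05, 40, 100)
print(f"managed={managed:.2f} self_managed={self_managed:.2f}")
```

Which option comes out cheaper depends entirely on the inputs, so the same function should be rerun with quoted prices and realistic staffing estimates.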

Future Evolution and Technology Roadmaps

The rapid pace of innovation in AI technologies creates ongoing pressure for database platforms to evolve their capabilities, performance characteristics, and integration options. Understanding the strategic direction and technology roadmaps of MongoDB and Elasticsearch provides insight into their long-term viability for AI applications and helps inform architectural decisions that must support multi-year development and deployment cycles.

MongoDB’s investment in vector search capabilities, real-time analytics, and machine learning integration demonstrates commitment to AI use cases, while ongoing improvements in performance, scalability, and developer experience continue to enhance the platform’s suitability for diverse AI applications. The company’s focus on simplifying complex data operations and providing unified interfaces for diverse workloads aligns well with AI development requirements.

Elasticsearch’s evolution toward a complete search and analytics platform, including enhanced machine learning capabilities, improved performance optimization, and expanded ecosystem integrations, positions it well for AI applications that prioritize search, analytics, and real-time data processing. The platform’s continued investment in distributed computing and performance optimization addresses key requirements for large-scale AI deployments.

Both platforms benefit from active open-source communities and commercial development that drive continuous improvement and feature evolution. The choice between platforms should consider not only current capabilities but also alignment with anticipated future requirements and the likelihood of continued investment in AI-relevant features and optimizations.

Making the Strategic Choice for AI Applications

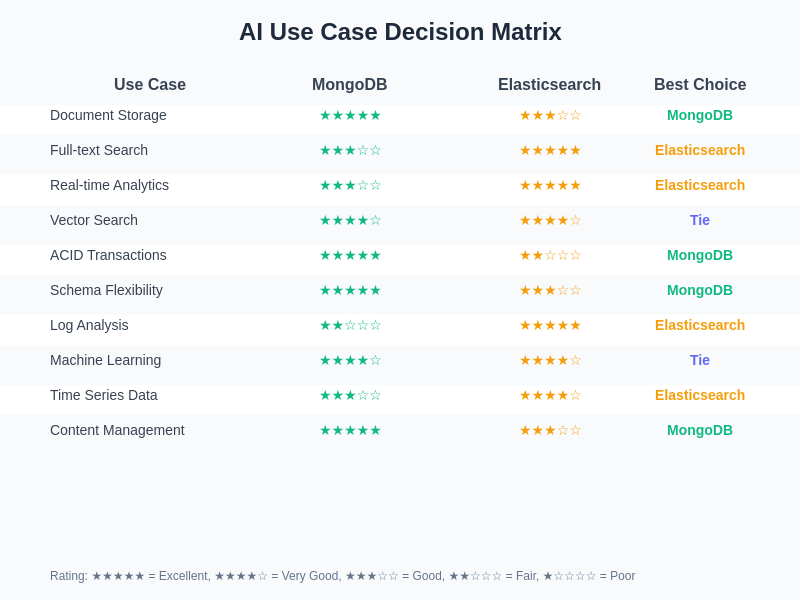

Selecting between MongoDB and Elasticsearch for AI applications requires careful consideration of multiple factors including current requirements, anticipated growth patterns, team expertise, integration needs, and long-term strategic objectives. The decision often involves trade-offs between different strengths and capabilities that may impact both immediate development velocity and long-term system evolution.

MongoDB represents an excellent choice for AI applications that require flexible document storage, complex data relationships, tunable consistency (including multi-document ACID transactions), and integration with diverse machine learning workflows. The platform’s general-purpose nature and comprehensive ecosystem make it particularly suitable for organizations building diverse AI applications or those requiring a single database platform to support multiple use cases.

The selection between MongoDB and Elasticsearch should be based on specific use case requirements, with each platform demonstrating clear advantages in particular AI application scenarios and operational contexts.

Elasticsearch excels for AI applications that prioritize search capabilities, real-time analytics, high read performance, and integration with logging and monitoring workflows. The platform’s specialized optimization for search and analytical workloads makes it ideal for AI applications in domains like content recommendation, log analysis, fraud detection, and real-time monitoring.

The optimal choice often involves hybrid approaches where organizations leverage both platforms for different aspects of their AI infrastructure, using each system’s strengths to address specific requirements while managing integration complexity through well-designed architectural patterns and data synchronization strategies.
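A common synchronization pattern keeps MongoDB as the system of record and mirrors selected collections into Elasticsearch for search. The sketch below translates one MongoDB change-stream event into Elasticsearch bulk action lines. It assumes the stream is opened with `full_document="updateLookup"` (for example via PyMongo’s `collection.watch`), so that update events carry the whole document. It ignores ordering guarantees, retries, and resume tokens, all of which a production pipeline would need.

```python
def change_event_to_bulk(event: dict, index: str) -> list:
    """Translate one MongoDB change-stream event into Elasticsearch bulk action lines."""
    op = event["operationType"]
    if op in ("insert", "replace", "update"):
        full = event.get("fullDocument")
        if full is None:
            # updateLookup found nothing: the document was deleted, and a later delete event covers it.
            return []
        # _id is an Elasticsearch metadata field, so it must not appear in the source.
        source = {k: v for k, v in full.items() if k != "_id"}
        doc_id = str(event["documentKey"]["_id"])
        return [{"index": {"_index": index, "_id": doc_id}}, source]
    if op == "delete":
        doc_id = str(event["documentKey"]["_id"])
        return [{"delete": {"_index": index, "_id": doc_id}}]
    return []  # other events (drop, rename, invalidate, ...) need separate handling
```

The returned lines could be serialized as NDJSON and sent to the `_bulk` endpoint, or accumulated and passed to the Python client’s bulk helpers.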

Conclusion and Recommendations

The landscape of document databases for AI applications continues to evolve rapidly, driven by advancing machine learning techniques, increasing data volumes, and growing demand for real-time intelligent applications. Both MongoDB and Elasticsearch offer compelling capabilities for AI workloads, but their different architectural approaches and optimization priorities create distinct advantages for different use cases and organizational contexts.

Organizations should evaluate their specific requirements, considering factors like data access patterns, scalability needs, team expertise, integration requirements, and long-term strategic objectives when choosing between these platforms. The decision should be based on thorough analysis of current and anticipated workloads rather than generic recommendations or platform popularity metrics.

The future success of AI applications increasingly depends on making informed architectural decisions that balance immediate development needs with long-term scalability, maintainability, and evolution requirements. Understanding the strengths and limitations of different database platforms enables organizations to build robust, efficient, and adaptable AI systems that can evolve with changing business requirements and advancing technology capabilities.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The views expressed are based on current understanding of database technologies and their applications in AI contexts. Readers should conduct their own research and consider their specific requirements when selecting database platforms. Technology capabilities, pricing, and features may change over time, and organizations should verify current information before making architectural decisions.