The convergence of artificial intelligence and unmanned aerial vehicle technology has made autonomous flight practical in ways that were recently out of reach. Modern drone AI navigation systems combine computer vision, machine learning, sensor fusion, and real-time decision-making so that unmanned aircraft can navigate complex environments with precision and safety. These systems have turned drones from remote-controlled devices into autonomous agents that can carry out complex missions in challenging environments while relying on object detection and avoidance to stay safe.

The integration of AI into drone navigation represents a fundamental paradigm shift that extends far beyond simple autopilot functionality, encompassing dynamic path planning, environmental awareness, and adaptive behavior that mirrors the cognitive processes of experienced human pilots.

Foundations of AI-Powered Drone Navigation

The evolution of drone navigation from basic GPS-guided systems to sophisticated AI-driven platforms represents one of the most significant technological leaps in unmanned aviation. Traditional navigation systems relied heavily on pre-programmed flight paths and external positioning systems, which severely limited operational flexibility and environmental adaptability. Modern AI navigation systems leverage multiple layers of artificial intelligence to create dynamic, responsive flight control mechanisms that can adapt to changing conditions in real-time while maintaining mission objectives and safety parameters.

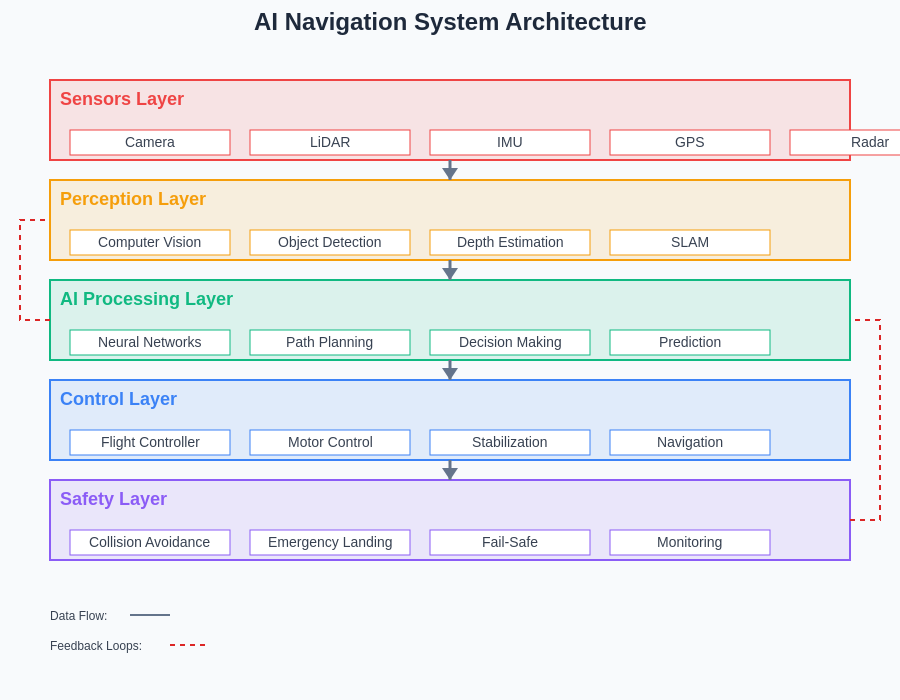

The foundation of these systems rests on advanced sensor fusion technologies that combine data from multiple sources including high-resolution cameras, LiDAR sensors, inertial measurement units, barometric pressure sensors, and GPS receivers. This multi-modal sensor approach provides comprehensive environmental awareness that enables AI algorithms to build detailed three-dimensional models of the surrounding environment while continuously updating flight parameters based on detected obstacles, weather conditions, and mission requirements.

The processing power required for real-time AI navigation has been made possible through advances in edge computing and specialized AI hardware that can perform complex calculations aboard the aircraft itself. Onboard processing avoids the round-trip latency of ground-based processing and keeps the aircraft operating even where communication links are compromised or unavailable.

The layered architecture of modern AI navigation systems demonstrates the sophisticated integration of multiple processing stages, from raw sensor data collection through high-level decision making and flight control execution. Each layer provides specialized functionality while maintaining seamless data flow and feedback loops that enable real-time adaptation to changing environmental conditions.

Computer Vision and Environmental Perception

Computer vision systems represent the sensory foundation of autonomous drone navigation, providing the critical visual information necessary for real-time decision-making and obstacle avoidance. These sophisticated systems employ multiple high-resolution cameras positioned strategically around the aircraft to create comprehensive visual coverage of the surrounding environment. Advanced image processing algorithms analyze these visual inputs to identify objects, estimate distances, detect motion, and classify potential threats or obstacles in the flight path.

Stereo vision systems enable drones to perceive depth by comparing the views from two cameras separated by a known baseline, producing three-dimensional maps of the environment that inform navigation decisions. Accuracy is best at short range and falls off with distance, since the depth error of a stereo pair grows roughly with the square of the range. Depth perception can be further improved by adding structured light projection systems and time-of-flight cameras, which measure distance directly and help in low-light conditions or scenes with limited visual contrast.
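The relationship between disparity and depth for a rectified stereo pair can be sketched as follows. This is a minimal illustration, not a production pipeline: the function names, the 700 px focal length, the 0.12 m baseline, and the 0.25 px matching error are all assumed values chosen for the example.

```python
def stereo_depth_m(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def depth_error_m(depth_m: float, focal_length_px: float, baseline_m: float,
                  disparity_error_px: float = 0.25) -> float:
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_length_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    f_px, b_m = 700.0, 0.12  # assumed camera parameters
    print(stereo_depth_m(8.4, f_px, b_m))    # about 10 m
    print(depth_error_m(10.0, f_px, b_m))    # uncertainty at 10 m
    print(depth_error_m(40.0, f_px, b_m))    # 16x larger at 40 m
```

The quadratic growth of the error term is why stereo is usually treated as a short-range sensor and combined with other range sources for distant obstacles.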

The synergy between advanced AI reasoning and computer vision technologies creates unprecedented opportunities for developing intelligent navigation systems that can operate safely in complex environments.

Machine learning algorithms trained on large datasets of aerial imagery enable drones to recognize and classify objects with high accuracy while distinguishing between static obstacles like buildings and trees and dynamic threats such as other aircraft, birds, or moving vehicles. This classification matters because the two groups call for different avoidance strategies: static obstacles stay where they are, whereas dynamic objects move in ways that must be predicted and may change without warning.

Real-Time Object Detection and Classification

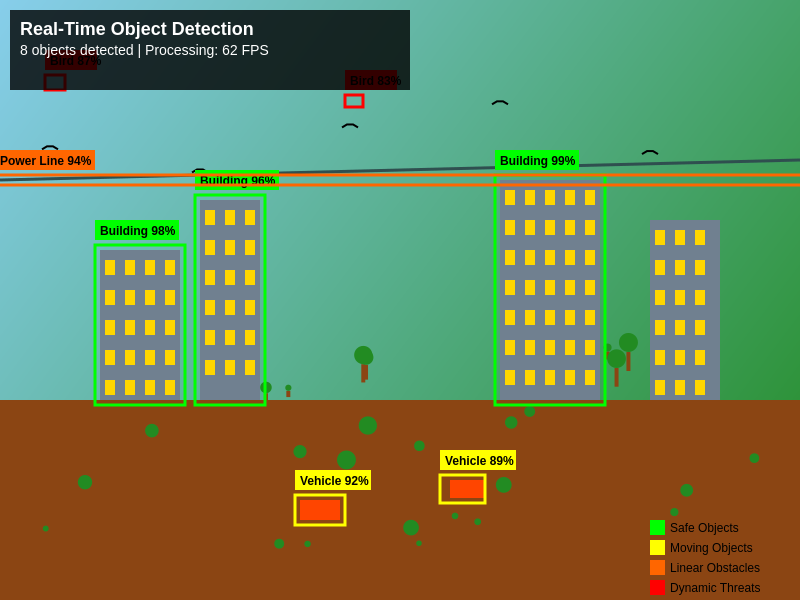

The effectiveness of autonomous drone navigation depends critically on the ability to detect, classify, and track objects in real time while maintaining stable flight. Object detection systems for this purpose use deep neural networks trained on aerial perspectives and on the challenges of high-speed flight and varying environmental conditions. For fast flight, such systems are typically expected to process visual information at high frame rates, on the order of 60 frames per second or more, so that emerging obstacles are detected in time to respond.
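A quick calculation shows why frame rate matters. The sketch below estimates how far the aircraft travels between frames and before a detection is confirmed; the 15 m/s speed, the three-frame confirmation rule, and the 50 ms processing latency are illustrative assumptions, not figures from any particular system.

```python
def distance_per_frame_m(speed_m_s: float, frame_rate_hz: float) -> float:
    """Distance flown between two consecutive camera frames."""
    return speed_m_s / frame_rate_hz


def reaction_distance_m(speed_m_s: float, frame_rate_hz: float,
                        frames_to_confirm: int, processing_latency_s: float) -> float:
    """Distance flown before a detection is confirmed and available to the planner."""
    detection_time_s = frames_to_confirm / frame_rate_hz + processing_latency_s
    return speed_m_s * detection_time_s


if __name__ == "__main__":
    # Assumed scenario: 15 m/s cruise, 60 fps camera.
    print(distance_per_frame_m(15.0, 60.0))            # 0.25 m per frame
    print(reaction_distance_m(15.0, 60.0, 3, 0.05))    # about 1.5 m before reacting
    print(reaction_distance_m(15.0, 15.0, 3, 0.05))    # about 3.75 m at 15 fps
```

Under these assumptions, dropping from 60 to 15 frames per second more than doubles the distance flown before the avoidance logic can act.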

Contemporary object detection algorithms utilize convolutional neural networks and transformer architectures that have been optimized for edge computing environments where power consumption and processing latency are critical constraints. These systems can simultaneously detect multiple object types including other aircraft, buildings, power lines, trees, vehicles, and wildlife while maintaining accurate position tracking and trajectory prediction for moving objects.

The classification accuracy of these systems has improved substantially through multi-scale detection networks that can identify objects across a wide range of sizes and distances. Thin objects such as power lines or guy wires, which pose significant collision risks, are detected through specialized algorithms that enhance edge detection and line recognition, while larger obstacles like buildings and terrain features are identified through region-based detection methods that analyze shape, texture, and contextual information.

The aerial view illustrates the object detection capabilities of modern drone AI systems: buildings, vehicles, power lines, and dynamic threats such as birds are identified and classified simultaneously. Real-time processing enables immediate threat assessment and an appropriate avoidance response.

Advanced Obstacle Avoidance Algorithms

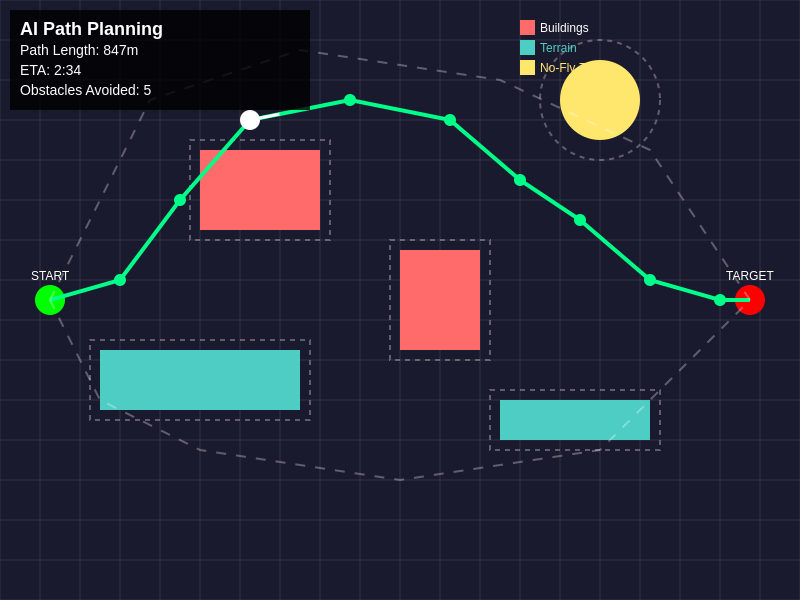

Obstacle avoidance represents one of the most complex challenges in autonomous drone navigation, requiring real-time analysis of multiple potential flight paths while optimizing for mission objectives, safety margins, and energy efficiency. Modern avoidance algorithms employ sophisticated path planning techniques that consider not only immediate obstacles but also predicted future positions of moving objects and the dynamic constraints of aircraft performance characteristics.
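One simple way to reason about the predicted future position of a moving object is the closest point of approach (CPA). The sketch below assumes both the drone and the obstacle hold constant velocity, which is a simplification; the function name and the example numbers are hypothetical.

```python
import math


def closest_point_of_approach(rel_pos, rel_vel):
    """Return (time_s, distance_m) of closest approach.

    rel_pos: obstacle position minus drone position (m).
    rel_vel: obstacle velocity minus drone velocity (m/s).
    Assumes constant velocities; times in the past are clamped to zero.
    """
    speed_sq = sum(v * v for v in rel_vel)
    if speed_sq == 0.0:
        t = 0.0  # no relative motion, separation never changes
    else:
        t = max(0.0, -sum(p * v for p, v in zip(rel_pos, rel_vel)) / speed_sq)
    closest = [p + v * t for p, v in zip(rel_pos, rel_vel)]
    return t, math.sqrt(sum(c * c for c in closest))


if __name__ == "__main__":
    # Obstacle 100 m ahead, offset 5 m sideways, closing at 20 m/s.
    t_cpa, d_cpa = closest_point_of_approach((100.0, 5.0, 0.0), (-20.0, 0.0, 0.0))
    print(t_cpa, d_cpa)  # 5 s until closest approach, 5 m miss distance
```

A planner could compare the miss distance against a safety margin and trigger re-planning when the margin is violated within some time horizon.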

The implementation of potential field methods creates virtual forces around detected obstacles that guide the aircraft away from collision risks while maintaining smooth flight trajectories. These algorithms generate repulsive forces that grow as the aircraft gets closer to an obstacle and attractive forces that pull it toward the mission objective, so the resulting flight path balances safety and efficiency. Advanced implementations incorporate dynamic field adjustments that account for object movement predictions and changing environmental conditions.
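A two-dimensional version of the potential field idea can be sketched in a few lines. The gains `k_att` and `k_rep` and the 5 m influence radius are assumed tuning values, and the repulsive term follows the commonly used form that vanishes at the edge of the influence radius; a real controller would also need to handle the local minima that this method is known to suffer from.

```python
import math


def potential_field_force(pos, goal, obstacles, k_att=1.0, k_rep=50.0, influence_m=5.0):
    """Sum of an attractive force toward the goal and repulsive forces from obstacles.

    pos, goal: (x, y) tuples in metres. obstacles: list of (x, y) points.
    """
    # Attractive term: linear pull toward the goal.
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0.0 < d < influence_m:
            # Repulsive term: grows sharply as d shrinks, zero at the influence radius.
            magnitude = k_rep * (1.0 / d - 1.0 / influence_m) / d ** 2
            fx += magnitude * dx / d
            fy += magnitude * dy / d
    return fx, fy


if __name__ == "__main__":
    print(potential_field_force((0.0, 0.0), (10.0, 0.0), []))              # pure attraction
    print(potential_field_force((0.0, 0.0), (10.0, 0.0), [(0.0, 1.0)]))    # pushed away in -y
```

The returned vector would typically be converted into a velocity or acceleration command and recomputed every control cycle as obstacle positions update.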

Rapidly-exploring Random Tree (RRT) algorithms provide an alternative path planning approach that works well in complex environments with multiple obstacles or confined spaces. These algorithms grow a tree of collision-free trajectory segments by sampling random points in the flight space and extending the tree toward them until a branch reaches the goal. Basic RRT returns a feasible route rather than an optimal one; variants such as RRT* refine the tree toward shorter paths, and candidate routes can then be ranked on safety, efficiency, and mission requirements. Because the search is fast, it can be rerun when new obstacles are detected or when environmental conditions change.

The sophisticated path planning algorithms demonstrate real-time route optimization that considers multiple obstacles, safety buffers, and alternative pathways. The primary route selection balances efficiency with safety while maintaining the capability to dynamically adjust to changing environmental conditions or newly detected obstacles.

Sensor Fusion and Multi-Modal Perception

The reliability and accuracy of autonomous navigation systems depend heavily on the effective integration of multiple sensor modalities that provide complementary information about the flight environment. Sensor fusion algorithms combine data from visual cameras, infrared sensors, LiDAR systems, radar units, and inertial measurement devices to create comprehensive environmental models that exceed the capabilities of any individual sensor type.
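The claim that a fused estimate can beat any single sensor has a simple statistical illustration. The sketch below combines independent range measurements by inverse-variance weighting, which is the static special case of a Kalman update; it assumes unbiased sensors with independent Gaussian noise, and the variances shown are made-up example values.

```python
def fuse_measurements(measurements):
    """Fuse independent measurements of the same quantity.

    measurements: list of (value, variance) pairs.
    Returns (fused_value, fused_variance) using inverse-variance weights.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, measurements)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    # Assumed example: LiDAR reads 10.0 m (variance 0.01), stereo reads 10.6 m (variance 0.09).
    value, variance = fuse_measurements([(10.0, 0.01), (10.6, 0.09)])
    print(value, variance)  # estimate sits close to the LiDAR value, variance below 0.01
```

The fused variance is always smaller than the smallest input variance, which is the sense in which fusion exceeds the capability of any individual sensor under these assumptions.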

LiDAR systems provide precise distance measurements and three-dimensional mapping that work in low-light conditions and darkness, where visual systems may be compromised. These laser-based sensors generate detailed point clouds that reveal obstacle shapes, sizes, and positions, with centimeter-level range accuracy for many sensors. Because they are optical, their performance can degrade in heavy fog, rain, or snow, which is one reason they are usually paired with other sensors.

Radar systems complement visual and LiDAR sensors by providing long-range detection capabilities and superior performance in challenging weather conditions such as fog, rain, or snow. Modern radar implementations designed specifically for drone applications offer high-resolution imaging capabilities that can detect small objects at significant distances while providing velocity information that aids in tracking moving obstacles.

The integration of diverse sensor technologies creates robust navigation systems that maintain operational effectiveness across a wide range of environmental conditions and mission scenarios.

Machine Learning and Adaptive Flight Control

Machine learning algorithms enable drone navigation systems to improve performance through experience and to adapt to new environments and conditions. Reinforcement learning techniques allow aircraft to optimize flight patterns by rewarding successful navigation and penalizing strategies that lead to inefficient or unsafe outcomes. These adaptive capabilities let drones develop navigation behaviors that can surpass the performance of rule-based systems.
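The reward-driven learning loop can be shown on a deliberately tiny problem. The sketch below uses tabular Q-learning on a one-dimensional corridor of five cells, where the agent earns a reward for reaching the far end and pays a small cost per step. It is a toy stand-in for the idea, not a flight controller: the environment, rewards, and hyperparameters are all assumptions made for illustration.

```python
import random


def train_corridor_agent(n_states=5, episodes=300, alpha=0.5, gamma=0.9,
                         epsilon=0.2, seed=0):
    """Learn Q-values for a corridor; action 0 moves back, action 1 moves forward."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # step cap per episode
            if rng.random() < epsilon:
                a = rng.randrange(2)                  # explore
            else:
                a = 0 if q[s][0] > q[s][1] else 1     # exploit, ties go forward
            s_next = max(0, s - 1) if a == 0 else s + 1
            done = s_next == goal
            reward = 1.0 if done else -0.01           # small cost per wasted step
            target = reward if done else reward + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])     # Q-learning update
            s = s_next
            if done:
                break
    return q


if __name__ == "__main__":
    q_table = train_corridor_agent()
    policy = ["forward" if fwd > back else "back" for back, fwd in q_table[:-1]]
    print(policy)
```

Deep reinforcement learning replaces the table with a neural network so the same update idea can scale to continuous states such as position, velocity, and sensor readings.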

Deep reinforcement learning implementations create neural network controllers that can manage complex flight dynamics while simultaneously coordinating obstacle avoidance, path planning, and mission execution tasks. These systems learn optimal control strategies through simulation training and real-world experience, developing intuitive responses to challenging navigation scenarios that mirror the decision-making processes of experienced human pilots.

Transfer learning techniques enable navigation systems trained in simulated environments to rapidly adapt to real-world conditions while leveraging knowledge gained from extensive virtual training scenarios. This approach significantly reduces the time and resources required for system deployment while ensuring robust performance across diverse operational environments and mission types.

Edge Computing and Onboard Processing

The demanding computational requirements of real-time AI navigation necessitate sophisticated onboard processing systems that can execute complex algorithms while maintaining strict power and weight constraints typical of drone applications. Modern edge computing platforms specifically designed for autonomous vehicles integrate high-performance GPU architectures, specialized AI accelerators, and efficient cooling systems within compact form factors suitable for aerial deployment.

These processing systems must handle multiple concurrent AI workloads including computer vision processing, sensor fusion calculations, path planning algorithms, and flight control operations while maintaining deterministic response times critical for safety-critical applications. The implementation of parallel processing architectures enables simultaneous execution of multiple AI tasks while optimizing resource allocation based on dynamic priority assignments and changing computational demands.
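Priority-based ordering of concurrent workloads can be sketched with a heap. The task names, priority levels, and deadlines below are hypothetical, and a real onboard system would rely on a real-time operating system scheduler instead of application-level Python; the sketch only shows the ordering logic.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Task:
    priority: int        # lower number runs first
    deadline_ms: float   # tie-breaker: earlier deadline runs first
    name: str = field(compare=False)


def schedule(tasks):
    """Return task names in execution order: by priority, then by deadline."""
    heap = list(tasks)
    heapq.heapify(heap)
    order = []
    while heap:
        order.append(heapq.heappop(heap).name)
    return order


if __name__ == "__main__":
    pending = [
        Task(2, 50.0, "path_planning"),
        Task(1, 16.7, "obstacle_detection"),
        Task(0, 2.5, "flight_control"),
        Task(1, 10.0, "sensor_fusion"),
    ]
    print(schedule(pending))
```

Dynamic priority assignment would correspond to changing a task's `priority` value before it is pushed again, for example raising obstacle detection when an object is close.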

The integration of specialized AI hardware such as tensor processing units and neural processing units provides optimized performance for deep learning inference tasks while minimizing power consumption and heat generation. These specialized processors enable complex neural network models to operate efficiently aboard aircraft where power budgets and thermal management represent significant design constraints.

Safety Systems and Fail-Safe Mechanisms

Autonomous drone navigation systems incorporate multiple layers of safety mechanisms designed to ensure safe operation even when primary navigation systems experience failures or encounter unexpected conditions. These fail-safe systems monitor navigation performance continuously and automatically implement predetermined recovery procedures when anomalies are detected or when operating parameters exceed acceptable limits.

Redundant sensor systems provide backup capabilities that maintain navigation functionality when individual sensors fail or become compromised by environmental conditions. Automatic failover between primary and backup sensors keeps the system operating without interruption and preserves navigation accuracy. These redundancy measures ensure operational continuity during critical mission phases and provide graceful degradation of capabilities rather than catastrophic system failures.
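One common way to use redundant sensors is to vote among them and discard outliers. The sketch below applies median voting to altitude readings; the sensor names, the 2 m deviation threshold, and the convention that a failed sensor reports `None` are assumptions for this example.

```python
import statistics


def vote_altitude(readings, max_deviation_m=2.0):
    """Combine redundant altitude readings and report which sources were rejected.

    readings: dict of source name -> altitude in metres, or None if the source failed.
    Returns (altitude_m, rejected_sources).
    """
    valid = {name: value for name, value in readings.items() if value is not None}
    if not valid:
        raise RuntimeError("no altitude source available")
    median = statistics.median(valid.values())
    # Keep only sources that agree with the median within the threshold.
    healthy = {n: v for n, v in valid.items() if abs(v - median) <= max_deviation_m}
    if not healthy:
        raise RuntimeError("altitude sources disagree beyond the threshold")
    rejected = sorted(set(readings) - set(healthy))
    return statistics.fmean(healthy.values()), rejected


if __name__ == "__main__":
    print(vote_altitude({"baro": 50.2, "gps": 49.8, "lidar": 12.0}))  # lidar rejected
    print(vote_altitude({"baro": 50.0, "gps": None}))                 # gps rejected
```

Graceful degradation shows up here as a shrinking set of healthy sources: the estimate keeps flowing until no source, or no agreeing set of sources, remains.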

Emergency landing protocols automatically engage when navigation systems detect conditions that exceed safe operating parameters or when mission objectives cannot be achieved safely. These protocols identify suitable landing areas using terrain analysis algorithms while coordinating controlled descent procedures that minimize risks to ground personnel and property. Advanced implementations incorporate communication systems that alert operators and relevant authorities when emergency procedures are activated.
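A very simplified form of the terrain analysis step is to scan an elevation grid for the flattest patch. The sketch below assumes the terrain is already available as a regular grid of heights in metres and uses elevation range within a window as the flatness measure; the window size and the 0.3 m relief limit are hypothetical thresholds.

```python
def pick_landing_cell(height_m, window=3, max_relief_m=0.3):
    """Return the (row, col) centre of the flattest window, or None if none qualifies.

    height_m: rectangular list of lists of terrain heights in metres.
    Relief is the difference between the highest and lowest cell in a window.
    """
    rows, cols = len(height_m), len(height_m[0])
    best = None
    for r in range(rows - window + 1):
        for c in range(cols - window + 1):
            patch = [height_m[r + i][c + j] for i in range(window) for j in range(window)]
            relief = max(patch) - min(patch)
            if relief <= max_relief_m and (best is None or relief < best[0]):
                best = (relief, (r + window // 2, c + window // 2))
    return None if best is None else best[1]


if __name__ == "__main__":
    terrain = [
        [0.0, 0.1, 2.0, 2.1, 2.0],
        [0.1, 0.0, 2.0, 2.0, 2.0],
        [0.0, 0.1, 2.1, 2.0, 2.0],
        [3.0, 0.0, 2.0, 2.0, 2.1],
    ]
    print(pick_landing_cell(terrain))
```

A fuller implementation would also weigh distance to the site, remaining battery, and whether people or property are detected nearby.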

Real-World Applications and Industry Impact

The practical applications of AI-powered drone navigation span numerous industries and use cases that benefit from autonomous flight capabilities and sophisticated obstacle avoidance systems. Search and rescue operations leverage these technologies to navigate challenging terrain and hazardous environments while locating missing persons or assessing disaster areas without endangering human operators. The ability to operate autonomously in GPS-denied environments or areas with compromised communication infrastructure makes these systems invaluable for emergency response applications.

Agricultural applications utilize autonomous navigation for precision farming operations including crop monitoring, pesticide application, and livestock management. AI navigation systems enable drones to follow precise flight patterns that optimize coverage while avoiding obstacles such as power lines, trees, and structures. The integration of specialized agricultural sensors with navigation systems provides comprehensive crop analysis capabilities that inform farming decisions and optimize resource utilization.
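The precise flight patterns used for field coverage are often back-and-forth "lawnmower" sweeps. The sketch below generates such waypoints for a rectangular field, assuming a fixed sensor or spray swath width and no obstacles; the function name and the field dimensions are illustrative.

```python
def lawnmower_waypoints(x_min, x_max, y_min, y_max, swath_m):
    """Generate back-and-forth waypoints covering a rectangle.

    Passes run along x and are spaced one swath width apart in y,
    with the first pass offset by half a swath from the field edge.
    """
    if swath_m <= 0:
        raise ValueError("swath width must be positive")
    waypoints = []
    y = y_min + swath_m / 2
    left_to_right = True
    while y < y_max:
        start_x, end_x = (x_min, x_max) if left_to_right else (x_max, x_min)
        waypoints.append((start_x, y))
        waypoints.append((end_x, y))
        left_to_right = not left_to_right  # reverse direction for the next pass
        y += swath_m
    return waypoints


if __name__ == "__main__":
    for wp in lawnmower_waypoints(0.0, 100.0, 0.0, 30.0, 10.0):
        print(wp)
```

In practice the obstacle avoidance layer would deviate from these waypoints around power lines, trees, and structures, then rejoin the pattern.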

Infrastructure inspection applications rely on autonomous navigation to safely examine power lines, bridges, pipelines, and communication towers while maintaining safe distances from energized equipment and navigating complex structural environments. These systems can identify potential maintenance issues and structural anomalies while generating detailed inspection reports that support preventive maintenance programs and infrastructure management decisions.

Future Developments and Technological Trends

The continued evolution of AI navigation technologies promises even more sophisticated capabilities that will expand the operational envelope and application possibilities for autonomous drones. Advances in neuromorphic computing architectures offer the potential for ultra-low-power AI processing that mimics biological neural systems while providing superior performance for perception and decision-making tasks. These technologies could enable smaller, more efficient drones with extended operational endurance and enhanced autonomous capabilities.

Swarm intelligence algorithms represent an emerging frontier that enables coordinated navigation and mission execution among multiple autonomous drones operating as a collective system. These distributed intelligence approaches allow drone swarms to share sensory information, coordinate obstacle avoidance maneuvers, and optimize mission execution through collaborative decision-making processes that exceed the capabilities of individual aircraft.

The integration of quantum sensing technologies promises unprecedented accuracy in navigation and environmental perception while enabling operation in challenging environments where traditional sensors may be compromised. Quantum-enhanced inertial navigation systems could provide GPS-independent positioning accuracy that rivals satellite-based systems while offering superior performance in contested or denied environments.

Advanced materials and manufacturing techniques will enable the development of more sophisticated sensor integration approaches that reduce weight and power consumption while improving performance and reliability. Smart materials that can adapt their properties based on environmental conditions could provide dynamic sensor optimization and improved durability in challenging operational environments.

Conclusion

The advancement of AI-powered drone navigation has changed what is possible for unmanned flight operations across many applications and industries. The integration of computer vision, machine learning, sensor fusion, and real-time processing has produced navigation systems that can outperform human pilots in some scenarios while aiming for the safety and reliability required for critical operations.

The continued development of these technologies promises to unlock new application possibilities while reducing operational costs and improving safety across the entire spectrum of drone operations. From emergency response and agricultural monitoring to infrastructure inspection and environmental research, AI navigation systems are enabling new approaches to challenges that were previously difficult or impossible to address through conventional means.

As these technologies continue to mature and evolve, we can expect to see even more sophisticated autonomous capabilities that further expand the operational possibilities for unmanned aircraft while maintaining the highest standards of safety and reliability. The future of drone navigation lies in the continued advancement of AI technologies that enhance human capabilities while providing autonomous solutions for an ever-expanding range of applications and use cases.

Disclaimer

This article is for informational purposes only and does not constitute professional advice regarding drone operations, aviation safety, or autonomous vehicle deployment. The views expressed are based on current understanding of AI navigation technologies and their applications in unmanned aircraft systems. Readers should consult with qualified professionals and comply with all applicable regulations when implementing or operating autonomous drone systems. The effectiveness and safety of AI navigation systems may vary depending on specific applications, environmental conditions, and regulatory requirements.