The proliferation of Internet of Things devices and the exponential growth of machine learning applications have converged to create an unprecedented demand for edge AI deployment solutions. At the heart of this technological revolution lies a fundamental architectural decision that can dramatically impact the success of edge AI implementations: the choice between ARM Cortex and x86 processors for IoT machine learning workloads. This critical decision influences everything from power consumption and computational performance to development complexity and long-term scalability, making it essential for engineers and decision-makers to understand the nuanced trade-offs between these competing architectures.

The convergence of sophisticated machine learning algorithms with resource-constrained edge devices represents one of the most significant technological challenges and opportunities of our time, demanding careful consideration of architectural choices that can make or break project success.

Understanding Edge AI Architecture Requirements

Edge AI deployment fundamentally differs from traditional cloud-based machine learning in its emphasis on local processing capabilities, reduced latency requirements, and stringent power consumption constraints. The architectural foundation for edge AI systems must balance computational performance with energy efficiency while maintaining the flexibility to adapt to evolving machine learning workloads and application requirements. This balancing act becomes particularly challenging when considering the diverse range of IoT applications, from simple sensor data processing to complex computer vision and natural language processing tasks.

The choice between ARM Cortex and x86 architectures represents more than a simple hardware decision; it encompasses considerations of software ecosystem compatibility, development tool availability, long-term support, and integration complexity with existing IoT infrastructure. Each architecture brings distinct advantages and limitations that must be carefully evaluated against specific application requirements, deployment constraints, and performance expectations to ensure optimal edge AI implementation success.

ARM Cortex Architecture for Edge AI

ARM Cortex processors have emerged as the dominant force in mobile and embedded computing, offering a combination of power efficiency and computational capability that makes them well-suited for edge AI deployment. The ARM architecture’s RISC heritage, with a load/store design and regular instruction encodings, keeps instruction decode comparatively simple, and ARM cores have historically been designed around tight power budgets. Together these factors contribute to reduced power consumption and extended battery life for IoT devices operating in remote or power-constrained environments.

The ARM Cortex-A series processors, including the high-performance Cortex-A78 and Cortex-A710 cores, provide substantial computational resources for machine learning workloads while maintaining the power efficiency characteristics that have made ARM processors ubiquitous in mobile devices. The cores themselves provide SIMD vector instructions (NEON) that inference libraries exploit, and the systems-on-chip built around them frequently add a separate neural processing unit, an optimized memory hierarchy, and sophisticated power management. Together these enable complex AI workloads to execute efficiently on battery-powered edge devices.

The ARM Cortex-M series, designed specifically for microcontroller applications, offers even greater power efficiency for simpler edge AI tasks such as sensor data preprocessing, anomaly detection, and basic pattern recognition. These processors can operate on extremely low power budgets while still providing sufficient computational resources for lightweight machine learning algorithms, making them ideal for applications where power consumption is the primary constraint and computational requirements are modest.

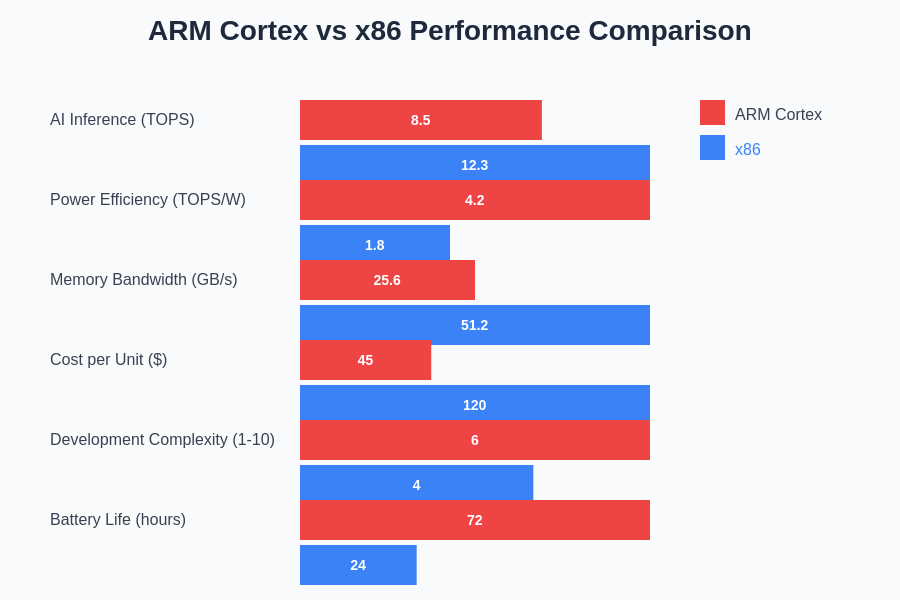

The performance characteristics of ARM Cortex processors in edge AI applications demonstrate their ability to deliver competitive computational throughput while maintaining superior power efficiency compared to traditional x86 alternatives. This performance profile makes ARM Cortex processors particularly attractive for deployment scenarios where battery life, thermal management, and form factor constraints are critical considerations.

x86 Architecture Advantages in Edge AI

Despite the growing dominance of ARM processors in mobile and embedded applications, x86 architecture continues to offer compelling advantages for certain categories of edge AI deployment, particularly those requiring maximum computational performance or compatibility with existing x86-based software ecosystems. These advantages stem less from the complex instruction set itself than from how x86 processors are typically built: wide out-of-order cores, large caches, high clock speeds, and wide SIMD vector units. That combination makes x86 well-suited for applications requiring real-time processing of high-resolution video streams, complex neural network inference, or sophisticated data analytics.

The x86 ecosystem’s mature development tools, extensive software libraries, and comprehensive debugging capabilities provide significant advantages for teams working on complex edge AI projects that require rapid development cycles and sophisticated optimization strategies.

Modern x86 processors designed for edge computing applications, such as Intel’s Atom and Core M series, have made significant strides in power efficiency while maintaining the computational advantages that have traditionally favored x86 architecture. These processors incorporate advanced power management features, low thermal design power ratings, and, on parts that support them, vector instructions aimed at neural network inference. This makes them viable options for edge AI deployment scenarios where maximum performance is prioritized over absolute power efficiency.

The x86 architecture’s compatibility with standard desktop and server development environments significantly reduces the complexity of cross-platform development and testing workflows. Development teams can leverage familiar tools, libraries, and debugging environments throughout the entire development lifecycle, from initial algorithm development on high-performance workstations to final deployment on edge devices, streamlining the transition from prototype to production.

Power Efficiency Analysis

Power consumption represents perhaps the most critical differentiator between ARM Cortex and x86 architectures in edge AI deployment scenarios, with implications that extend far beyond simple battery life considerations to encompass thermal management, system reliability, and overall operational costs. The fundamental architectural differences between RISC and CISC design philosophies manifest most clearly in their respective approaches to power management and energy-efficient computation.

ARM Cortex processors achieve superior power efficiency through a combination of design choices: comparatively simple cores that spend less energy on instruction decode and control logic, clock gating that minimizes power consumption during idle periods, and dynamic voltage and frequency scaling that adjusts power consumption to the computational workload. In microcontroller-class designs that sleep between short bursts of activity, these features can allow ARM-based edge AI systems to run for months or years on battery power while still providing sufficient computational resources for meaningful machine learning tasks.
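To make the battery-life claim concrete, the following is a rough back-of-the-envelope sketch rather than a measurement. It assumes a device that alternates between an active state and a sleep state. All figures (battery capacity, currents, duty cycle) are hypothetical placeholders, and the estimate ignores battery self-discharge, regulator losses, and radio activity.

```python
def average_current_ma(active_ma, sleep_ma, duty_cycle):
    """Time-weighted average current for a device that is active
    for a fraction `duty_cycle` of the time and asleep otherwise."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return active_ma * duty_cycle + sleep_ma * (1.0 - duty_cycle)


def battery_life_days(capacity_mah, active_ma, sleep_ma, duty_cycle):
    """Idealized battery life in days (no self-discharge or conversion losses)."""
    avg_ma = average_current_ma(active_ma, sleep_ma, duty_cycle)
    if avg_ma <= 0:
        raise ValueError("average current must be positive")
    return capacity_mah / avg_ma / 24.0


# Hypothetical microcontroller node: 20 mA while running inference,
# 5 uA asleep, awake 1% of the time, powered by a 2400 mAh cell.
days = battery_life_days(capacity_mah=2400, active_ma=20.0,
                         sleep_ma=0.005, duty_cycle=0.01)
print(f"Estimated battery life: {days:.0f} days")
```

The estimate is dominated by the duty cycle: halving the time spent awake roughly doubles the result, which is why sleep-state current and wake-up frequency usually matter more than peak efficiency for this class of device.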

The power efficiency advantages of ARM Cortex architecture become particularly pronounced in deployment scenarios involving large numbers of distributed IoT devices, where aggregate power consumption can significantly impact operational costs and environmental sustainability. The ability to deploy thousands of ARM-based edge AI nodes with minimal power infrastructure requirements creates opportunities for comprehensive IoT coverage in environments where traditional power distribution would be impractical or cost-prohibitive.

x86 architecture, while historically less power-efficient than ARM alternatives, has made significant improvements in recent generations through the incorporation of advanced manufacturing processes, intelligent power management systems, and hardware-accelerated machine learning instructions that reduce the computational overhead of AI workloads. Modern x86 processors designed for edge computing applications can achieve respectable power efficiency levels while maintaining the computational advantages that justify their selection for performance-critical applications.

Performance Benchmarking for Machine Learning Workloads

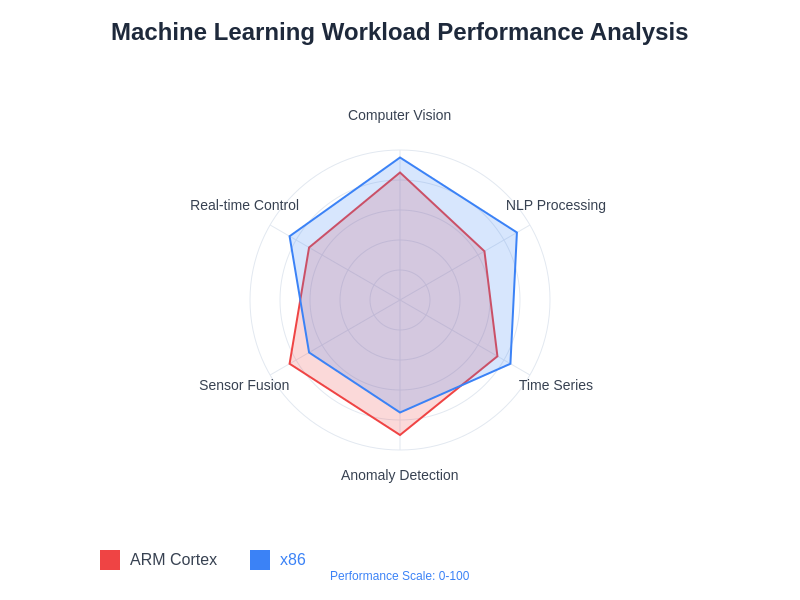

The performance characteristics of ARM Cortex and x86 processors in machine learning applications vary significantly depending on the specific types of algorithms, data processing requirements, and optimization strategies employed in edge AI implementations. Comprehensive benchmarking reveals that the optimal architectural choice depends heavily on the particular characteristics of the machine learning workload, including computational complexity, memory access patterns, and parallelization opportunities.
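A minimal latency harness along the following lines can be run unchanged on both architectures so that results are comparable. This is a sketch under stated assumptions: `dummy_inference` is a hypothetical stand-in that should be replaced with the actual model invocation of whichever framework is in use, and the warm-up and run counts are arbitrary defaults.

```python
import platform
import statistics
import time


def benchmark(fn, warmup=5, runs=30):
    """Time `fn` repeatedly and report median and 95th-percentile latency."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        fn()  # warm caches and any lazy initialization before timing
    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples_ms.append((time.perf_counter() - start) * 1000.0)
    samples_ms.sort()
    p95_index = min(len(samples_ms) - 1, int(0.95 * len(samples_ms)))
    return {
        "arch": platform.machine(),  # e.g. "x86_64" or "aarch64"
        "median_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[p95_index],
        "runs": runs,
    }


def dummy_inference():
    # Hypothetical placeholder workload; swap in the real model call.
    return sum(i * i for i in range(10_000))


result = benchmark(dummy_inference)
print(result)
```

Reporting a tail percentile alongside the median is a deliberate choice: edge workloads with real-time constraints are often limited by worst-case rather than typical latency.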

Convolutional neural networks, commonly used for computer vision applications in edge AI scenarios, demonstrate different performance profiles on ARM Cortex versus x86 architectures depending on factors such as input resolution, network depth, and optimization framework selection. ARM-based systems that pair Cortex cores with a dedicated neural processing unit can achieve exceptional performance per watt for optimized, typically quantized, CNN workloads, while x86 processors may provide superior absolute performance for complex networks requiring extensive floating-point computation.
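The distinction between performance per watt and absolute performance can be illustrated with simple arithmetic. The sketch below assumes average power and per-inference latency have already been measured. The two devices and their figures are hypothetical and chosen only to show that the faster device is not necessarily the more energy-efficient one.

```python
def energy_per_inference_mj(avg_power_w, latency_ms):
    """Energy per inference in millijoules (watts x milliseconds = millijoules)."""
    return avg_power_w * latency_ms


def inferences_per_joule(avg_power_w, latency_ms):
    """Throughput normalized by energy: higher means more efficient."""
    return 1000.0 / energy_per_inference_mj(avg_power_w, latency_ms)


# Hypothetical measurements, not vendor data.
devices = {
    "low-power node": {"avg_power_w": 2.0, "latency_ms": 40.0},
    "high-performance gateway": {"avg_power_w": 15.0, "latency_ms": 8.0},
}

for name, m in devices.items():
    mj = energy_per_inference_mj(**m)
    ipj = inferences_per_joule(**m)
    print(f"{name}: {m['latency_ms']} ms, {mj:.0f} mJ/inference, {ipj:.2f} inferences/J")
```

In this made-up example the gateway is five times faster per inference yet spends more energy on each one, which is the kind of trade-off the two metrics are meant to expose.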

Natural language processing and time series analysis workloads present different architectural trade-offs, with x86 processors often demonstrating advantages in applications requiring complex memory access patterns or extensive use of transcendental mathematical functions. The sophisticated branch prediction and out-of-order execution capabilities of modern x86 processors can significantly accelerate certain categories of machine learning algorithms that exhibit irregular computational patterns.

The selection of appropriate benchmarking methodologies and performance metrics becomes crucial for making informed architectural decisions that align with real-world deployment requirements.

Development Ecosystem and Tool Support

The software development ecosystem surrounding ARM Cortex and x86 architectures represents a critical factor in edge AI deployment decisions, influencing everything from initial development velocity and debugging capabilities to long-term maintenance and optimization opportunities. The maturity and comprehensiveness of development tools, machine learning frameworks, and optimization libraries can significantly impact project success and long-term viability.

ARM development ecosystems have evolved rapidly to support the growing demand for edge AI applications, with comprehensive toolchains including Arm Development Studio and Keil MDK, and extensive support from popular machine learning frameworks such as TensorFlow Lite, PyTorch Mobile, and ONNX Runtime. The ARM ecosystem’s focus on power-efficient computing has resulted in specialized optimization tools and profiling capabilities that enable developers to fine-tune machine learning workloads for optimal power consumption and performance characteristics.

The x86 development ecosystem benefits from decades of investment in sophisticated development tools, debugging capabilities, and optimization frameworks that provide unparalleled visibility into system behavior and performance characteristics. Intel’s comprehensive suite of development tools, including VTune Profiler, Intel oneAPI, and extensive machine learning optimization libraries, enables sophisticated optimization strategies that can significantly improve the performance of complex machine learning workloads on x86 hardware.

Cross-compilation complexity represents a significant consideration for teams working with ARM Cortex targets, particularly when integrating with existing x86-based development workflows and continuous integration systems. The need to maintain separate toolchains, manage cross-compilation dependencies, and validate functionality across different architectural targets can introduce development overhead that must be weighed against the benefits of ARM deployment.

Real-World Implementation Strategies

Successful edge AI deployment requires careful consideration of implementation strategies that align architectural choices with specific application requirements, operational constraints, and long-term scalability objectives. The selection between ARM Cortex and x86 architectures must be informed by comprehensive analysis of factors including computational requirements, power constraints, environmental conditions, and integration complexity with existing systems.

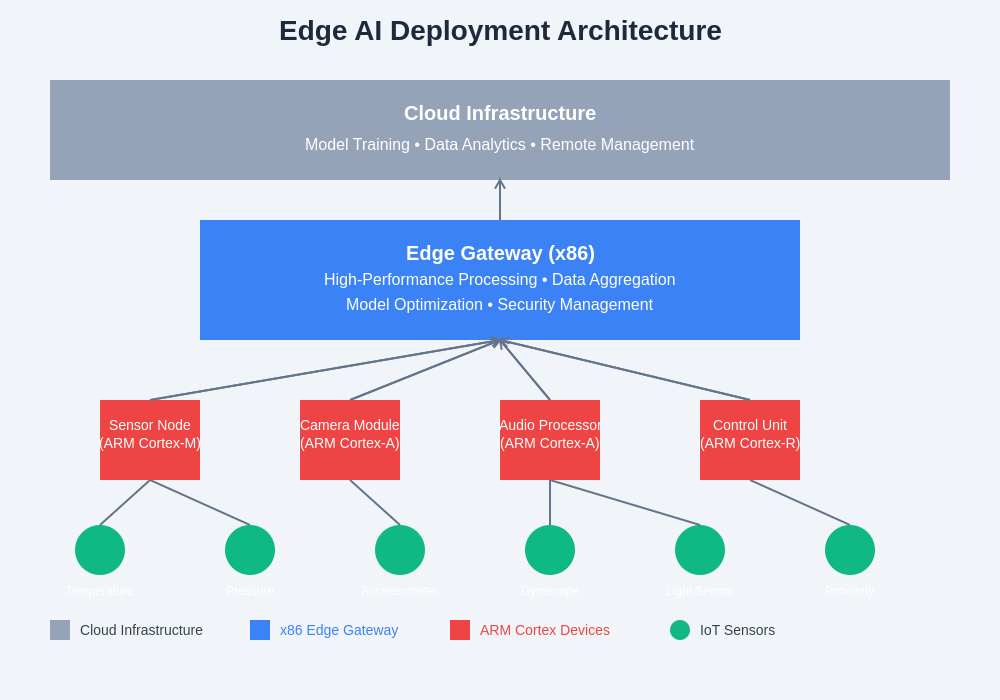

Hybrid deployment strategies that leverage the complementary strengths of both ARM Cortex and x86 architectures can provide optimal solutions for complex edge AI applications requiring diverse computational capabilities. High-performance x86 processors can serve as edge gateways handling computationally intensive preprocessing and coordination tasks, while distributed ARM Cortex devices manage local sensing, basic inference, and power-efficient operation in remote locations.
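One way to picture the division of labor in such a hybrid deployment is a simple placement rule. The sketch below is illustrative only: the `InferenceTask` fields, the memory limit, and the round-trip time are hypothetical, and the rule deliberately ignores gateway compute time, bandwidth, and privacy constraints that a real scheduler would need to consider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceTask:
    name: str
    model_size_kb: int        # memory the model needs on the device
    latency_budget_ms: float  # maximum acceptable response time


def place_task(task, node_memory_kb, gateway_round_trip_ms):
    """Prefer the low-power node; fall back to the gateway when the model
    does not fit and the network round trip still meets the latency budget."""
    if task.model_size_kb <= node_memory_kb:
        return "node"
    if gateway_round_trip_ms < task.latency_budget_ms:
        return "gateway"
    return "unplaceable"


tasks = [
    InferenceTask("vibration-anomaly", model_size_kb=48, latency_budget_ms=20.0),
    InferenceTask("object-detection", model_size_kb=6000, latency_budget_ms=200.0),
    InferenceTask("hard-realtime-vision", model_size_kb=6000, latency_budget_ms=10.0),
]

placements = {
    t.name: place_task(t, node_memory_kb=256, gateway_round_trip_ms=40.0)
    for t in tasks
}
print(placements)
```

A task that lands in the "unplaceable" bucket signals that the hardware mix needs revisiting, for example a more capable node or a smaller model.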

The implementation of effective model optimization and quantization strategies becomes crucial for maximizing performance on resource-constrained edge devices regardless of architectural choice. Techniques such as neural network pruning, weight quantization, and knowledge distillation can significantly reduce computational requirements and memory footprint, enabling deployment of sophisticated machine learning models on both ARM Cortex and x86 edge platforms.
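As an illustration of weight quantization, the sketch below applies affine (asymmetric) per-tensor quantization to 8-bit integers in plain Python. It shows the general idea rather than the implementation used by any particular framework; production toolchains typically add calibration data, per-channel scales, and quantized activations.

```python
def quantize_int8(weights):
    """Map floats to int8 using a single scale and zero point for the tensor."""
    lo = min(min(weights), 0.0)  # include 0.0 so zero is exactly representable
    hi = max(max(weights), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 for an all-zero tensor
    zero_point = round(-128 - lo / scale)
    quantized = [
        max(-128, min(127, round(w / scale) + zero_point)) for w in weights
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float values from the int8 representation."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)

max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.5f}", f"max_error={max_error:.5f}")
```

Each weight shrinks from 32 bits to 8, a fourfold reduction in storage, at the cost of a reconstruction error bounded by roughly one quantization step.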

Container-based deployment strategies using technologies such as Docker and Kubernetes can simplify the management and orchestration of edge AI applications across heterogeneous hardware platforms, enabling consistent deployment experiences regardless of underlying architectural differences. This approach can facilitate the development of portable edge AI solutions that can be adapted to different hardware platforms based on specific deployment requirements and constraints.
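In practice, portability across architectures depends on each device pulling an image built for its own platform. The small helper below sketches how a deployment script might translate the machine type reported by Python into the platform strings used for multi-architecture container images. The helper name and the mapping table are assumptions made for illustration and cover only a few common cases.

```python
import platform

# Common machine names mapped to OCI architecture identifiers.
_OCI_ARCH = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
    "armv7l": "arm/v7",
}


def oci_platform(machine=None):
    """Return a 'linux/<arch>' platform string for the given machine type,
    defaulting to the machine this script is running on."""
    machine = (machine or platform.machine()).lower()
    try:
        return "linux/" + _OCI_ARCH[machine]
    except KeyError:
        raise ValueError(f"unmapped machine type: {machine!r}") from None


for name in ("x86_64", "aarch64", "armv7l"):
    print(name, "->", oci_platform(name))
```

Raising on an unknown machine type, rather than guessing, keeps a misconfigured node from silently pulling an image it cannot run.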

Cost Analysis and ROI Considerations

The total cost of ownership for edge AI deployments encompasses far more than initial hardware acquisition costs, extending to include development expenses, operational costs, maintenance requirements, and long-term scalability considerations that can significantly impact the overall return on investment for edge AI initiatives. Comprehensive cost analysis must account for the complex interactions between hardware costs, software development expenses, power consumption, and operational complexity.

ARM Cortex processors typically offer lower per-unit hardware costs compared to x86 alternatives, particularly for high-volume IoT deployments where cost optimization is critical for commercial viability. The power efficiency advantages of ARM architecture translate directly into reduced operational costs through lower power consumption requirements, simplified thermal management, and extended battery life that reduces maintenance frequency and operational complexity.

x86 processors may justify higher hardware and operational costs through superior computational performance that enables more sophisticated machine learning capabilities, reduced processing latency, and the ability to consolidate multiple functions on a single platform. The development cost advantages of x86 ecosystems, including reduced cross-compilation complexity and mature debugging tools, can offset higher hardware costs through accelerated development timelines and reduced engineering expenses.

The scalability characteristics of ARM Cortex versus x86 architectures present different cost implications for large-scale edge AI deployments. ARM’s lower per-unit costs and power requirements can provide significant advantages for deployments involving thousands or millions of edge devices, while x86’s superior performance per device may be more cost-effective for applications requiring fewer but more capable edge nodes.
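The cost factors discussed in this section can be combined into a simple total-cost-of-ownership estimate. The sketch below is a simplified model with hypothetical inputs: unit prices, power draw, energy price, and development cost are placeholders rather than market data, and maintenance, connectivity, and replacement costs are left out.

```python
def fleet_tco(units, unit_cost, avg_power_w, years,
              energy_price_per_kwh, development_cost):
    """Hardware + lifetime energy + one-time development cost for a fleet."""
    hours = years * 365 * 24
    energy_kwh = units * avg_power_w * hours / 1000.0
    hardware = units * unit_cost
    energy = energy_kwh * energy_price_per_kwh
    return hardware + energy + development_cost


# Hypothetical scenario A: many low-power nodes, higher development cost
# to account for cross-compilation and embedded tooling.
many_small = fleet_tco(units=10_000, unit_cost=15.0, avg_power_w=0.5, years=5,
                       energy_price_per_kwh=0.15, development_cost=400_000.0)

# Hypothetical scenario B: fewer, more capable gateways.
few_large = fleet_tco(units=500, unit_cost=300.0, avg_power_w=15.0, years=5,
                      energy_price_per_kwh=0.15, development_cost=250_000.0)

print(f"many small nodes: {many_small:,.0f}")
print(f"few large nodes:  {few_large:,.0f}")
```

With these particular placeholder inputs the development cost outweighs both hardware and energy, which suggests that engineering effort deserves as much scrutiny as per-unit price when comparing the two architectures.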

Security and Reliability Considerations

Security and reliability requirements for edge AI deployments present unique challenges that must be addressed through appropriate architectural choices and implementation strategies. The distributed nature of edge computing creates expanded attack surfaces and increased vulnerability to physical tampering, making security considerations paramount in architectural decision-making processes.

ARM Cortex processors incorporate comprehensive security features including TrustZone technology, secure boot capabilities, and hardware-based cryptographic acceleration that provide robust foundations for secure edge AI deployment. The ARM ecosystem’s focus on low-power operation often translates into simplified system architectures that can reduce the complexity of security implementation and validation compared to more complex x86 systems.

x86 architecture offers extensive security features including Intel’s Software Guard Extensions (on processors that support it), hardware-based attestation capabilities, and comprehensive memory protection mechanisms that provide sophisticated security capabilities for edge AI applications handling sensitive data or operating in high-threat environments. The maturity of x86 security ecosystems and the availability of comprehensive security validation tools can provide advantages for applications with stringent security requirements.

Reliability considerations encompass both hardware reliability characteristics and the ability to implement effective fault tolerance and recovery mechanisms in edge AI systems. The simpler architecture and lower power consumption of ARM Cortex systems can contribute to improved reliability through reduced thermal stress and fewer potential failure modes, while x86 systems may offer superior error detection and correction capabilities for applications requiring maximum reliability.

Future Technology Trends and Evolution

The rapid evolution of both ARM Cortex and x86 architectures continues to reshape the landscape of edge AI deployment, with new technological developments promising to address current limitations and expand the possibilities for sophisticated machine learning at the edge. Understanding these evolutionary trends is crucial for making architectural decisions that will remain viable and competitive over the multi-year lifecycles typical of IoT deployments.

ARM’s roadmap includes continued improvements in neural processing capabilities, with dedicated AI acceleration units becoming standard features across the Cortex processor family. The development of more sophisticated power management capabilities and the integration of advanced manufacturing processes promise to further extend ARM’s advantages in power-constrained edge AI applications while simultaneously improving computational performance.

x86 evolution focuses on improving power efficiency while maintaining computational advantages, with Intel’s hybrid architecture approaches combining high-performance and efficiency cores to optimize both peak performance and power consumption characteristics. The integration of dedicated AI acceleration units and improved manufacturing processes promises to reduce the power consumption gap between x86 and ARM architectures while maintaining x86’s computational advantages.

The emergence of specialized AI processors and neuromorphic computing architectures represents a potential disruption to traditional ARM versus x86 comparisons, offering dedicated solutions optimized specifically for machine learning workloads that may provide superior performance and efficiency compared to general-purpose processor architectures. These specialized solutions may complement rather than replace traditional processors, creating hybrid architectures that combine the best characteristics of different processing approaches.

The continued development of edge AI software frameworks and optimization tools promises to reduce the significance of architectural differences by providing abstraction layers that enable portable and optimized deployment across diverse hardware platforms. As these layers mature, the specific architectural choice may matter less, while the performance and capabilities of edge AI systems improve regardless of the underlying hardware.

Conclusion and Architectural Recommendations

The choice between ARM Cortex and x86 architectures for edge AI deployment represents a complex decision that must balance multiple competing priorities including computational performance, power efficiency, development complexity, cost considerations, and long-term scalability requirements. Neither architecture provides universally superior solutions; instead, the optimal choice depends heavily on specific application requirements, deployment constraints, and organizational capabilities.

ARM Cortex processors excel in scenarios prioritizing power efficiency, cost optimization, and large-scale deployment, making them ideal for battery-powered IoT devices, remote sensing applications, and deployments requiring thousands of distributed edge nodes. The ARM ecosystem’s focus on power-efficient computing and the availability of specialized neural processing capabilities provide compelling advantages for applications where power consumption is the primary constraint.

x86 processors remain the preferred choice for applications requiring maximum computational performance, compatibility with existing software ecosystems, or sophisticated debugging and optimization capabilities. The mature development environment and superior computational performance make x86 architecture particularly suitable for edge gateway applications, high-performance inference tasks, and scenarios where development velocity is prioritized over power efficiency.

Hybrid deployment strategies leveraging both ARM Cortex and x86 architectures can provide optimal solutions for complex edge AI applications, enabling organizations to optimize different aspects of their edge computing infrastructure based on specific requirements and constraints while maintaining overall system coherence and manageability.

Disclaimer

This article is for informational purposes only and does not constitute professional advice. The performance characteristics, cost implications, and suitability assessments presented are based on general architectural principles and may vary significantly depending on specific hardware implementations, software optimizations, and deployment conditions. Readers should conduct thorough testing and evaluation with their specific use cases and requirements before making architectural decisions for production deployments. The rapidly evolving nature of both ARM and x86 architectures means that current assessments may not reflect future capabilities or market conditions.